Test page for MediaWiki


test333
test456
test789

[[Image:Web_security2.svg]]

last last last

[[Image:20150422_514701.jpg]]

{|
! [[File:media/image1.jpeg|299x82px]]
!
! '''Document-131949'''
|-
| '''Design Note TRI-DN-16-22 ALPHA-g Data Acquisition Notes'''
|-
|
|
|-
| '''Document Type:'''
| '''Design Note'''
|-
| '''Release:'''
| '''2'''
| '''Release Date:'''
|-
| '''Author(s):'''
| '''Pierre Amaudruz'''
|-
|
|
|
|-
|
| '''Name:'''
| '''Signature:'''
|-
| '''Author:'''
| '''Konstantin Olchanski'''
|
|-
|
| '''Pierre Amaudruz'''
|
|-
| '''Reviewed By:'''
| '''Thomas Lindner'''
|
|-
| '''Approved By:'''
| '''Makoto Fujiwara'''
|
|}

History of Changes

{|
! Release Number
! Date
! Description of Changes
! Author(s)
|-
| 1
| 2016-02-28
| Initial Version
| Pierre Amaudruz
|-
| 2
| 2017-04-10
|
| Pierre Amaudruz
|-
| 3
| 2017-08-08
| Revised version without Grif-C
| PAA, KO
|}

'''Keywords:'''

'''Distribution List:'''

= Table of Figures =

[[#_Toc493687873|''Figure 1 - Overall dataflow for scheme from the detector to the data storage area.'']]

[[#_Toc493687874|''Figure 2 - Frontend local fragment assembly, similar data for the event builder. Each grey box refers to a Thread collecting and filtering locally its fragment before making it available to the fragment builder'']]

= Introduction =

The ALPHA-g experiment is the next step in the line of successful anti-hydrogen experiments at CERN: ATHENA, ALPHA and ALPHA-2. These experiments have been designed, built and operated by largely the same group of people, so it is important to keep the new ALPHA-g data acquisition system as similar to, and as compatible with, the existing ALPHA-2 system as possible. The ALPHA-2 and ALPHA-g machines are expected to run concurrently, operated by the same people, for several years.

Looking back, the ATHENA experiment introduced the concept of using an advanced Si-strip particle detector to image anti-hydrogen annihilations. The ALPHA experiment brought in the highly reliable MIDAS data acquisition software and the modular VME-based electronics (built at/for TRIUMF). For ALPHA-2, the data acquisition system was expanded by 50% without any architectural changes.

ALPHA-g continues to build on this foundation, but because a 2-meter-long Si-strip detector is not practical, a TPC gas detector will be used instead.

= Scope and Assumptions =

As in ALPHA-2, ALPHA-g will include several computer control systems in addition to the data acquisition for the annihilation imaging detector.

There will be Labview and CompactPCI-based control systems for the trap vacuum, the magnets, the positron source, the trap, and the microwave and laser systems.

All these systems are outside the scope of this document, except where, as in ALPHA-2, they log data into the MIDAS history system via network socket connections.

The data acquisition system for the particle detector includes a number of hardware components: digitizers for analog signals, firmware-based signal processing, trigger decision making, etc.

All these components are outside the scope of this document, except where they require network communications for receiving experiment data, for configuration management and for monitoring. For details on the electronics, see TRI-DN-16-15.

Thus the scope of this document is limited to the software (and the hardware required to run it) that receives experiment data across the network and communicates with other hardware and software systems over the network.

= Definitions and Abbreviations =

General acronyms and terms used for the ALPHA-g experiment:

{|
| rTPC
| Radial Time Projection Chamber
|-
| AD
| Antiproton Decelerator (CERN building where ALPHA-g is installed)
|-
| Trap
| Device within the cryostat where the anti-hydrogen annihilations take place
|-
| AW
| TPC Anode Wires
|-
| BSC
| Barrel Scintillator Detector
|-
| GRIFFIN
| Major experimental facility at TRIUMF
|-
| ALPHA-16, ALPHA-T, FMC-32
| Electronics designed and built at TRIUMF, similar to those for GRIFFIN
|-
| MIDAS
| Data acquisition software package from PSI & TRIUMF
|-
| MIDAS ODB
| Shared-memory tree-structured database
|-
| SiPM
| Solid-state light sensors used in the BSC detector
|-
| TPC AFTER ASIC
| Custom chip from Saclay for recording TPC analog signals
|-
| TPC pad board (FEAM)
| TPC electronics designed and built at TRIUMF for ALPHA-g
|}

''Table 1 – ALPHA-g Abbreviations''

= References and Related Documents =

[http://midas.triumf.ca Midas documentation]

[https://daq.triumf.ca/elog-alphag/alphag/ Experiment Elog (restricted)]

[https://daq.triumf.ca/DaqWiki/index.php/Components Alpha-g Component Wiki]

[https://edev-group.triumf.ca/fw/exp/alphag Edev-gitlab repository (restricted)]

TRI-DN-16-15: ALPHA-G Detector Electronics

= Specifications =

The data acquisition system oversees all detector parameter settings and manages the collection, analysis, recording and monitoring of incoming detector data. These tasks are described below.

== Handling of experiment data ==

Experiment data will arrive over multiple 1GigE network links into the main DAQ computer, where they will be processed and then transmitted, also across a 1GigE link, to permanent storage at the main CERN data center.

The existing network link from CERN, shared by ALPHA-2 and ALPHA-g, runs at 1GigE speed, and the collaboration does not plan to ask CERN to upgrade this link to 10GigE or faster.

This limits the overall data throughput to 100 Mbytes/sec, shared with ALPHA-2 (which itself is limited to 20-30 Mbytes/sec).
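As a rough worked example of this budget, the sketch below uses only the figures quoted above (100 Mbytes/sec usable, 20-30 Mbytes/sec for ALPHA-2). Converting the raw 1 Gbit/s line rate to Mbytes/sec by dividing by 8 is a simplification that ignores protocol overhead; the function names are illustrative.

```python
# Rough bandwidth budget for the shared 1GigE link to the CERN data center.
# Figures taken from the text: 100 Mbytes/sec usable in total,
# of which ALPHA-2 uses 20-30 Mbytes/sec.

USABLE_MBYTES_PER_SEC = 100.0


def raw_line_rate_mbytes(gbit_per_sec: float) -> float:
    """Convert a line rate in Gbit/s to Mbytes/s (10**6 bytes), ignoring protocol overhead."""
    return gbit_per_sec * 1000.0 / 8.0


def alpha_g_share(alpha2_mbytes: float, usable: float = USABLE_MBYTES_PER_SEC) -> float:
    """Bandwidth left for ALPHA-g after ALPHA-2 has taken its share."""
    if not 0.0 <= alpha2_mbytes <= usable:
        raise ValueError("ALPHA-2 rate must lie between 0 and the usable link rate")
    return usable - alpha2_mbytes


if __name__ == "__main__":
    print(f"raw 1GigE line rate: {raw_line_rate_mbytes(1.0):.0f} Mbytes/s")  # 125
    for alpha2 in (20.0, 30.0):
        print(f"ALPHA-2 at {alpha2:.0f} Mbytes/s leaves {alpha_g_share(alpha2):.0f} Mbytes/s for ALPHA-g")
```

On these numbers ALPHA-g can count on roughly 70-80 Mbytes/sec before any data reduction or compression. Treating the gap between the 125 Mbytes/sec raw line rate and the 100 Mbytes/sec usable figure as protocol overhead and margin is an assumption of this sketch.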

A local network switch (96 x 1GigE) gathers the data sources, including:

* 16 AW and BSC digitizers (FMC-32, ALPHA-16)
** The 512 anode wire channels (256 wires read out at both ends) are digitized by the 16 FMC-32 mezzanines of the ALPHA-16 boards. Each ALPHA-16 has an Ethernet data link to the main LAN switch.
** The 128 channels of the BSC are collected through the same ALPHA-16 boards.
* 64 FEAMs for the cathode pad readout
** The 18432 cathode pads are digitized by 64 FEAMs, each of which has an optical Ethernet link to the main LAN switch.
* The ALPHA-T trigger board, which also has a link to the main LAN switch
* The Barrel Scintillator TDC (see the electronics document, TRI-DN-16-15)

In total, 82 of the 96 1GigE switch ports are allocated to the main detector operation.
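The counts in this list can be cross-checked with a little arithmetic. The sketch below assumes the 128 BSC channels are spread evenly over the 16 ALPHA-16 boards (the text only says they go through the same boards). It also shows that the explicitly itemized links add up to 81, so the text does not say what the 82nd allocated port is used for; the Barrel Scintillator TDC, whose link count is not given, is one possibility.

```python
# Channel and switch-port arithmetic for the main detector LAN,
# using the counts listed above.

AW_CHANNELS = 512      # 256 anode wires, read out at both ends
BSC_CHANNELS = 128
ALPHA16_BOARDS = 16
PADS = 18432
FEAMS = 64
SWITCH_PORTS = 96
ALLOCATED_PORTS = 82   # total quoted in the text


def per_board(channels: int, boards: int) -> int:
    """Channels handled by each board, assuming an even split."""
    per, rest = divmod(channels, boards)
    if rest:
        raise ValueError(f"{channels} channels do not divide evenly over {boards} boards")
    return per


# Links itemized explicitly in the list: one per ALPHA-16, one per FEAM, one for ALPHA-T.
ITEMIZED_LINKS = ALPHA16_BOARDS + FEAMS + 1

if __name__ == "__main__":
    print("AW channels per ALPHA-16:", per_board(AW_CHANNELS, ALPHA16_BOARDS))    # 32
    print("BSC channels per ALPHA-16:", per_board(BSC_CHANNELS, ALPHA16_BOARDS))  # 8
    print("pads per FEAM:", per_board(PADS, FEAMS))                               # 288
    print("itemized links:", ITEMIZED_LINKS, "of", ALLOCATED_PORTS, "allocated")  # 81 of 82
    print("unallocated ports:", SWITCH_PORTS - ALLOCATED_PORTS)                   # 14
```

The 32 anode wire channels per board match the 32-channel FMC-32 mezzanine, which is a useful consistency check on the list.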


== Handling of Labview and Trap sequencer data ==

As in ALPHA-2, there will be a special MIDAS frontend to receive data from the Labview-based control systems (vacuum, magnets, trap sequencer, etc.). The data communication protocol and data format will be the same as in ALPHA-2.

== Configuration data ==

All configuration data will be stored in the MIDAS ODB database.

Major configuration items:

* Trigger configuration (multiplicity settings, channel mapping, dead channel blanking, etc.)
* AW and BSC digitizer settings
* TPC pad board settings and AFTER ASIC settings (FEAMs)
* UPS (uninterruptible power supply with battery backup and "double conversion" power conditioning)

== Slow Controls data ==

All slow controls settings will be stored in the MIDAS ODB database, and slow controls data will be logged through the MIDAS history system. Integration with the ALPHA-2 DAQ system will require an additional transfer of ALPHA-g control data to it; this will be done in MySQL format.

Major slow controls items:

* Low voltage power supply controls
* High voltage power supply controls
* Environment monitoring (temperature probes, etc.)
* SiPM bias voltage controls
* TPC gas system control and monitoring
* TPC calibration system controls
* Cooling system control and monitoring
* UPS monitoring

Some slow controls components will have software interlocks for non-safety-critical protection of equipment, such as automatic shutdown of electronics on overheating, or automatic shutdown of detectors on UPS alarms (e.g. loss of external AC power).

(Safety-critical interlocks are assumed to be implemented in hardware; they are outside the scope of this document.)
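A minimal sketch of the kind of software interlock described above, mapping slow controls readings to shutdown actions. The reading fields, the 45 °C limit and the action strings are hypothetical and chosen for illustration only; in a real MIDAS setup the limits would presumably live in the ODB, and hardware interlocks remain responsible for safety.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical limit for illustration; real values would be kept in the MIDAS ODB.
MAX_CRATE_TEMPERATURE_C = 45.0


@dataclass
class Readings:
    crate_temperature_c: float  # hypothetical electronics-crate temperature probe
    ups_on_battery: bool        # True when the UPS reports loss of external AC power


def interlock_actions(r: Readings) -> List[str]:
    """Return the non-safety-critical shutdown actions implied by the readings."""
    actions = []
    if r.crate_temperature_c > MAX_CRATE_TEMPERATURE_C:
        actions.append("shut down electronics: overheating")
    if r.ups_on_battery:
        actions.append("shut down detectors: UPS alarm")
    return actions


if __name__ == "__main__":
    print(interlock_actions(Readings(crate_temperature_c=30.0, ups_on_battery=False)))  # []
    print(interlock_actions(Readings(crate_temperature_c=50.0, ups_on_battery=True)))
```

Keeping the decision logic as a pure function of the readings makes it easy to test separately from the code that talks to the power supplies.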

= Trigger considerations =

The ALPHA-g experiment cycle goes through several particle trapping stages: positron accumulation, antiproton accumulation, a mixing stage in which anti-hydrogen is formed in the trap, and an annihilation stage for the actual measurement. The whole cycle takes several minutes and must be monitored continuously with the detector, to ensure that external background events (cosmics, noise) are properly identified and that all annihilation events from the trap are recorded for further analysis. This is done mainly with the barrel scintillator, which records both externally incoming events and internal events from trapped particles (annihilations). Timing analysis will distinguish between these two main sources of events.

The barrel scintillator is therefore the main contributor to the trigger decision. Additional information from the anode wires is beneficial, even though its timing is very loose because of the inherent drift-time range of the detector.

The trigger decision is initially based on a simple multiplicity of the barrel scintillator detector, which tags the events to record. This multiplicity is computed in each of the 16 ALPHA-16 boards and transmitted to the Master Trigger Module (ALPHA-T). There the individual fragments are matched in time and the final trigger decision is taken, which in turn is redistributed to all the acquisition modules.

If the anode wire information is needed as well, the same fragment collection scheme is applied to the wires, and the wire fragments are sent through the same physical link, since the ALPHA-16 modules are also populated with the 32-channel, 62.5 Msps FMC-32 mezzanines.
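The time matching of multiplicity fragments can be illustrated with a small software model. This is a sketch only: the real decision is made in ALPHA-T hardware, and the clock units, coincidence window and threshold below are hypothetical. Fragments whose timestamps fall within one window of the first fragment of a group are summed, and the group is accepted if the summed multiplicity reaches the threshold.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Fragment:
    board: int         # ALPHA-16 board number (0..15)
    timestamp: int     # hypothetical trigger-clock ticks
    multiplicity: int  # BSC hits seen by this board


def trigger_decisions(fragments: Iterable[Fragment], window: int, threshold: int) -> List[int]:
    """Group time-ordered fragments that lie within `window` ticks of the first
    fragment of the group, sum their multiplicities, and return the start
    timestamps of the groups that reach `threshold`."""
    accepted: List[int] = []
    group_start = None
    total = 0
    for frag in sorted(fragments, key=lambda f: f.timestamp):
        if group_start is None or frag.timestamp - group_start > window:
            # Close the previous group (if any) before opening a new one.
            if group_start is not None and total >= threshold:
                accepted.append(group_start)
            group_start, total = frag.timestamp, 0
        total += frag.multiplicity
    if group_start is not None and total >= threshold:
        accepted.append(group_start)
    return accepted


if __name__ == "__main__":
    frags = [Fragment(0, 100, 1), Fragment(3, 102, 1), Fragment(7, 500, 1)]
    print(trigger_decisions(frags, window=5, threshold=2))  # [100]
```

The two fragments near tick 100 are combined into one accepted trigger, while the isolated fragment at tick 500 falls below the threshold.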

The data acquisition system (MIDAS) will not take part in the trigger decision and therefore will have no interaction with the trigger hardware. The data stream will be read out continuously over Ethernet from the acquisition modules, and an “event builder” application will use the timestamp attached to each received packet to reassemble the fragments. The hardware trigger module (ALPHA-T) will keep track of the trigger decisions and produce trigger data, which are merged into the main data acquisition stream.
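Timestamp-based reassembly can be sketched as follows, under stated assumptions: each fragment is a hypothetical `(timestamp, payload)` pair, the source names are made up, and a fixed tolerance absorbs small clock offsets between modules. For every trigger timestamp the sketch looks for one matching fragment per source and reports triggers that lack a fragment from some source. It is not the ALPHA-g event builder.

```python
from typing import Dict, List, Tuple

# A fragment is (timestamp, payload); timestamps are hypothetical clock ticks.
Fragment = Tuple[int, str]


def build_events(trigger_times: List[int],
                 fragments_by_source: Dict[str, List[Fragment]],
                 tolerance: int):
    """For each trigger timestamp, take from every source the first fragment whose
    timestamp is within `tolerance`. Triggers missing a fragment from any source
    are reported as incomplete instead of being built."""
    events, incomplete = [], []
    for t in trigger_times:
        event = {}
        for source, fragments in fragments_by_source.items():
            match = next((f for f in fragments if abs(f[0] - t) <= tolerance), None)
            if match is not None:
                event[source] = match[1]
        if len(event) == len(fragments_by_source):
            events.append((t, event))
        else:
            incomplete.append(t)
    return events, incomplete


if __name__ == "__main__":
    sources = {
        "alpha16": [(1001, "aw-1"), (2003, "aw-2")],
        "feam": [(999, "pad-1")],
    }
    events, incomplete = build_events([1000, 2000], sources, tolerance=5)
    print(events)      # [(1000, {'alpha16': 'aw-1', 'feam': 'pad-1'})]
    print(incomplete)  # [2000]
```

The linear search keeps the example short; a production builder would more likely keep per-source, time-ordered queues and discard fragments once they are too old to match.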

= MIDAS Software configuration =

For maximum familiarity, similarity and compatibility with the existing ALPHA-2 system, ALPHA-g will also use the MIDAS data acquisition software package.

The main features of MIDAS are a modern web-based user interface, good robustness, high reliability and high scalability. The system is easy for non-experts to operate.

Outside of ALPHA-2 at CERN, other major users of MIDAS are TIGRESS and GRIFFIN at TRIUMF, MEG at PSI, DEAP at SNOLAB, and T2K/ND280 at J-PARC (Japan).

As in ALPHA-2, the MIDAS software will run on a single high-end computer with an Intel socket 1151 CPU and 32/64 GB RAM. This is adequate for the 100 Mbytes/sec data rates expected for ALPHA-g: during tests of 10GigE networking for the GRIFFIN experiment at TRIUMF, this type of hardware recorded 1000 Mbytes/sec of data from network to disk. ALPHA-g will not need this level of disk I/O capability, since the intention is to stream the data directly to the CASTOR data storage provided by CERN.

This computer will have two 1GigE network interfaces, one for connecting to the CERN network and the other for connecting to the ALPHA-g private network.

Data storage will be dual mirrored (RAID1) SSDs for the operating system and home directories, and dual mirrored (RAID1) 6TB HDDs for local data storage.
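A quick worked estimate puts these numbers in proportion. The sketch assumes decimal terabytes, uncompressed data written continuously at the full 100 Mbytes/sec, and nothing drained by the lazylogger in the meantime (for example during an outage of the CERN link, a hypothetical scenario). It shows how long the mirrored 6TB data disks would last and the headroom relative to the 1000 Mbytes/sec GRIFFIN test.

```python
TB = 10**12  # decimal terabyte, as used for disk sizes
MB = 10**6


def raid1_usable_bytes(disk_bytes: int, n_disks: int = 2) -> int:
    """RAID1 keeps a full copy on every disk, so usable space equals one disk."""
    if n_disks < 2:
        raise ValueError("RAID1 needs at least two disks")
    return disk_bytes


def hours_to_fill(capacity_bytes: int, rate_mbytes_per_sec: float) -> float:
    """Time to fill `capacity_bytes` when writing continuously at the given rate."""
    return capacity_bytes / (rate_mbytes_per_sec * MB) / 3600.0


if __name__ == "__main__":
    usable = raid1_usable_bytes(6 * TB)
    print(f"{hours_to_fill(usable, 100):.1f} h to fill at 100 Mbytes/s")  # 16.7
    print(f"{100 * MB * 86400 / TB:.2f} TB per day at 100 Mbytes/s")      # 8.64
    print(f"headroom vs. GRIFFIN test: {1000 / 100:.0f}x")                # 10x
```

Under these worst-case assumptions the local disks buffer well over half a day of data, which gives some margin if the transfer to CERN storage is interrupted.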

The MIDAS software running on this computer will have the following components:

* mhttpd - the MIDAS web server, providing the main user interface for running the system
* mlogger - the MIDAS data logger, for writing experiment data to local storage and for recording MIDAS slow controls history data
* lazylogger - for moving experiment data from local storage to permanent storage at the CERN data center (EOS and CASTOR)
* MIDAS frontend programs, custom written to receive experiment data
* The event builder - for assembling experiment data from different frontends into events to simplify further analysis. The event builder will have code for initial data quality tests (correct data formatting, correct event timestamps, etc.).
* The online analyzer - for initial data analysis, visualization and additional data quality checks.

The MIDAS frontend programs are written using a common template, with each frontend program specialized to receive data in the format of the attached equipment:

* Frontend for the AW and BSC digitizers - receives data from the ALPHA-16 digitizers using TCP and UDP network socket connections (a modification of the GRIFFIN frontend will be used) and provides configuration and monitoring (slow controls) of the digitizers.
* Frontend for the TPC pad boards - receives data from the TPC using TCP and UDP network socket connections. This frontend will also provide configuration and monitoring (slow controls) of the TPC pad boards and of the TPC AFTER ASICs.
* Frontend for the clock board - configuration and control of the clock board using UDP and TCP network connections.
* Frontends for slow controls, according to equipment (see the list in the "Specifications" section). Most of these frontend programs will be reused from previous experiments (e.g. UPS monitoring frontends from DEAP and ALPHA-2).
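The core of a UDP-based data frontend is a receive loop on a datagram socket. The sketch below shows only that pattern, using the standard `socket` module: it does not use the MIDAS frontend framework, the ALPHA-16 or FEAM packet formats are not modeled, and the loopback self-test uses an ephemeral port in place of a real digitizer data port (hypothetical).

```python
import socket


def receive_datagrams(sock: socket.socket, max_packets: int, timeout_s: float = 1.0):
    """Read up to `max_packets` UDP datagrams, stopping early on timeout.
    Returns a list of (sender_address, payload) tuples."""
    sock.settimeout(timeout_s)
    packets = []
    while len(packets) < max_packets:
        try:
            payload, sender = sock.recvfrom(65535)  # largest possible UDP datagram
        except socket.timeout:
            break
        packets.append((sender, payload))
    return packets


if __name__ == "__main__":
    # Loopback self-test: an ephemeral port stands in for a digitizer data port.
    rx = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    rx.bind(("127.0.0.1", 0))
    tx = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    for i in range(3):
        tx.sendto(f"packet {i}".encode(), rx.getsockname())
    for sender, payload in receive_datagrams(rx, max_packets=3):
        print(sender, payload)
    tx.close()
    rx.close()
```

The timeout lets the loop return control periodically, which a frontend needs in order to service run control and slow controls between data packets.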

Data from all the hardware components arrive through the MIDAS frontend programs asynchronously, which makes further analysis and data quality tests rather difficult. To rearrange the data into complete events, MIDAS provides an event builder component.

The event builder program is usually written specifically for each experiment (also using a MIDAS template). The ALPHA-g event builder will check incoming data for basic correctness, assemble event fragments from different frontends into coherent events, check timestamps and event numbers, and possibly apply additional data reduction and data compression algorithms. We expect to reuse the multi-threaded event builder developed for DEAP as the basis of the ALPHA-g event builder.
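The timestamp and event-number checks mentioned above can be sketched as a function over already-assembled events. The data layout is an assumption made for illustration (each event maps a hypothetical source name to the `(event_number, timestamp)` of its fragment): all fragments of one event must carry the same event number, and event timestamps must increase strictly from one event to the next.

```python
from typing import Dict, List, Tuple

# Each event maps a source name to (event_number, timestamp) of its fragment.
Event = Dict[str, Tuple[int, int]]


def check_events(events: List[Event]) -> List[str]:
    """Return human-readable problems; an empty list means all checks passed."""
    problems = []
    last_ts = None
    for i, event in enumerate(events):
        if not event:
            problems.append(f"event {i}: no fragments")
            continue
        # All fragments of one event must agree on the event number.
        numbers = {num for num, _ in event.values()}
        if len(numbers) != 1:
            problems.append(f"event {i}: mismatched event numbers {sorted(numbers)}")
        # Use the earliest fragment timestamp as the event time.
        ts = min(t for _, t in event.values())
        if last_ts is not None and ts <= last_ts:
            problems.append(f"event {i}: timestamp {ts} not after {last_ts}")
        last_ts = ts
    return problems


if __name__ == "__main__":
    bad = [{"a": (1, 100), "b": (2, 100)}, {"a": (3, 50)}]
    for problem in check_events(bad):
        print(problem)
```

Returning a list of problems, rather than raising on the first one, lets the builder count and report all inconsistencies in a run.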

The online analyzer will look at a sample of the events produced by the event builder and produce histograms and other plots of the most important quantities (pulse heights, timing, etc.) to confirm the correct function of the detector components (e.g. each TPC pad produces hits) and of the detector as a whole (e.g. hit multiplicity in the TPC pads matches hit multiplicity in the TPC AW and in the BSC). We do not expect to do full tracking and vertex reconstruction in the online analyzer; this will be done in the "near offline" analysis using CERN data center resources (similar to ALPHA-2 procedures).
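The "each TPC pad produces hits" check can be sketched as follows, assuming each sampled event is simply a list of hit channel numbers and the sample is taken by keeping every n-th event (both hypothetical simplifications). Channels that never fire in the sample are flagged as silent.

```python
from collections import Counter
from typing import List


def sample(events: List[List[int]], every_n: int) -> List[List[int]]:
    """Keep every n-th event; the online analyzer only looks at a sample."""
    if every_n < 1:
        raise ValueError("every_n must be >= 1")
    return events[::every_n]


def silent_channels(events: List[List[int]], n_channels: int) -> List[int]:
    """Return channel numbers that produced no hits in `events`.
    Each event is a list of hit channel numbers."""
    hits = Counter(ch for event in events for ch in event)
    return [ch for ch in range(n_channels) if hits[ch] == 0]


if __name__ == "__main__":
    events = [[0, 1], [1, 2], [0], [2]]
    print(silent_channels(events, 4))             # [3]
    print(silent_channels(sample(events, 2), 4))  # [2, 3]
```

The example also shows the caveat of sampling: a rarely hit channel (channel 2 here) can look silent in a small sample, so such a check needs enough events before flagging a channel as dead.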

The mlogger component writes MIDAS data to local disk (or to remote disk using NFS or UNIX pipe methods) and provides standard data compression (gzip-1 by default, bzip2 for maximum compression and LZ4 for maximum speed). Optionally, CRC32c and SHA-256/SHA-512 checksums can be computed to detect data corruption.
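To illustrate what these options do (not how mlogger implements them), the sketch below compresses a data block with gzip level 1 and computes checksums using the Python standard library. One substitution is named explicitly: Python's `zlib.crc32` computes the standard CRC-32, not the CRC32c (Castagnoli) variant that mlogger offers, so the values will differ from mlogger's CRC32c output.

```python
import gzip
import hashlib
import zlib


def compress_and_checksum(data: bytes) -> dict:
    """gzip level-1 compression plus checksums of the uncompressed data.

    zlib.crc32 is the standard CRC-32 polynomial, not CRC32c (Castagnoli);
    the Python standard library has no CRC32c, so it stands in here."""
    return {
        "compressed": gzip.compress(data, compresslevel=1),
        "crc32": zlib.crc32(data),
        "sha256": hashlib.sha256(data).hexdigest(),
    }


if __name__ == "__main__":
    block = b"ALPHA-g test data " * 1000
    result = compress_and_checksum(block)
    print(len(block), "->", len(result["compressed"]), "bytes")
    print(f"crc32={result['crc32']:08x} sha256={result['sha256'][:16]}...")
```

Computing the checksums on the uncompressed data means they can still verify the payload after it has been decompressed on the analysis side.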

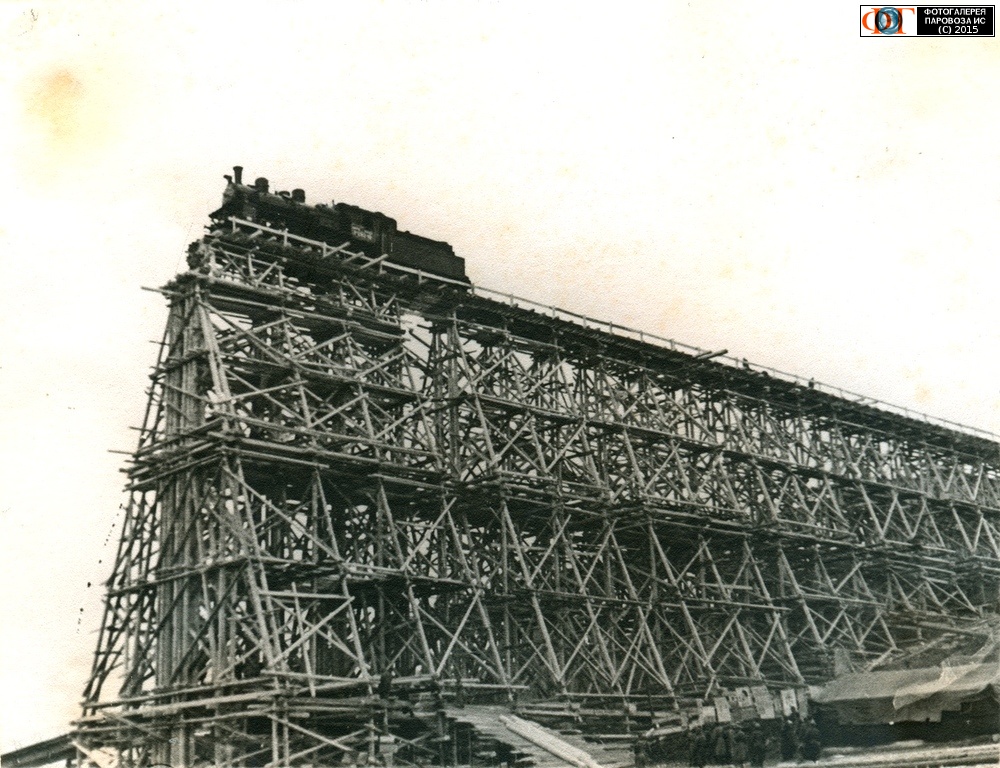

''Figure 1'' and ''Figure 2'' below illustrate the data flow described in this section:

[[File:media/image2.png|576x386px]]

<span id="_Toc493687873" class="anchor"></span>''Figure 1 - Overall dataflow for scheme from the detector to the data storage area.''

[[File:media/image3.png|536x343px]]

<span id="_Ref489943269" class="anchor"><span id="_Toc493687874" class="anchor"></span></span>''Figure 2 - Frontend local fragment assembly, similar data for the event builder. Each grey box refers to a Thread collecting and filtering locally its fragment before making it available to the fragment builder''

= Computer Network configuration =

Operation of the ALPHA-g experiment will involve two computer networks: the ALPHA-g private network and the CERN network.

The private network is required to isolate experiment data traffic from CERN network traffic (this was not required for ALPHA-2, which has no private network) and to restrict access to network-attached slow controls devices (power supplies, gas system controllers, etc.).

This private network (192.168.x.y) will use two 1GigE network switches, with 96 and 24 ports. The first will connect all the FEAMs (64) and the ALPHA-16 boards (16). The other networked equipment, such as the slow controls and monitoring devices, will be connected to the second (24-port) switch.

The CERN-side 1GigE network port of the main data acquisition computer will connect to the CERN network, for transmitting experiment data to the CERN data center and for receiving experiment data from the Labview-based control systems (which are outside the scope of the particle detector and of this document).

The CERN network currently consists of a single-fiber 1GigE network link feeding a small (24-port) 1GigE network switch in the experiment counting room. CERN is not expected to upgrade this link to higher speeds (10GigE, etc.) within the lifetime of ALPHA-g, and ALPHA-g is not expected to ever require these higher speeds, because an excessive amount of data recorded to CERN storage would create significant problems for data analysis. If the detector produces too much data, this will be handled by stronger data reduction and data compression algorithms.

The ALPHA-g data acquisition computer will provide a one-way network gateway from the private network to the CERN network using Linux-based NAT. This is required to give devices attached to the private network access to DNS, NTP and other network services, e.g. for installing software and firmware updates.

This configuration is very similar to the one used by the DEAP experiment at SNOLAB. The main difference is that ALPHA-g will run the main data acquisition and the NAT/gateway services on the same computer, as opposed to two separate computers at DEAP.
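The "one-way" property of the NAT gateway comes from connection tracking: connections may be opened from the private network outward, and only replies to them are let back in. The toy model below illustrates that policy only. It does not perform address translation, it is not a Linux configuration (in practice the kernel's netfilter connection tracking does this work), and the addresses in the example are hypothetical (203.0.113.0/24 is a documentation range).

```python
import ipaddress

PRIVATE_NET = ipaddress.ip_network("192.168.0.0/16")  # "192.168.x.y" in the text


class OneWayGateway:
    """Toy model of connection tracking on a one-way NAT gateway."""

    def __init__(self):
        # Tracked connections: (private_addr, private_port, remote_addr, remote_port)
        self._open = set()

    def outbound(self, src: str, sport: int, dst: str, dport: int) -> bool:
        """Allow and track connections that originate on the private network."""
        if ipaddress.ip_address(src) not in PRIVATE_NET:
            return False
        self._open.add((src, sport, dst, dport))
        return True

    def inbound(self, src: str, sport: int, dst: str, dport: int) -> bool:
        """Accept a packet from the CERN side only as a reply to a tracked connection."""
        return (dst, dport, src, sport) in self._open


if __name__ == "__main__":
    gw = OneWayGateway()
    gw.outbound("192.168.1.20", 40000, "203.0.113.53", 53)      # e.g. a DNS query
    print(gw.inbound("203.0.113.53", 53, "192.168.1.20", 40000))  # True: reply
    print(gw.inbound("203.0.113.99", 22, "192.168.1.20", 22))     # False: unsolicited
```

Because unsolicited inbound traffic never matches a tracked connection, devices on the private network can fetch updates without being reachable from the CERN network.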

= Computer Security considerations =

In the ALPHA-2 and ALPHA-g experiments, p-bar beam time is very precious and any disturbances during beam data taking must be minimized.

On the computer network side, the ALPHA-g data acquisition system needs to be protected from:

* Unauthorized access to proprietary information
* Unauthorized access to network-controlled devices (power supplies, etc.)
* Sundry network disturbances (unwanted network port scans, unwanted web scans, accidental or malicious denial-of-service attacks, etc.)

It is important to note that the CERN network is isolated from the main Internet by the CERN firewall. Generally, the CERN firewall allows all outgoing traffic from CERN to the Internet (which can be selectively blocked by special request to CERN IT), but blocks all incoming traffic except where specifically permitted. A typical machine connected to the CERN network can talk to anywhere on the Internet, but from the Internet only "ping" is permitted: "ssh" access is disabled (users are expected to go through the ssh gateways at lxplus.cern.ch) and "http" access is allowed only by special permission from CERN IT.

Thus we do not need to worry about protecting the ALPHA-g data acquisition system against "global Internet" threats (the CERN firewall does this job). Protection is required only against access from other machines attached to the CERN network, which can generally be considered non-malicious. Still, over the years of operating ALPHA and ALPHA-2, we have seen unwanted network scans (which required special hardening of MIDAS) and other strange activity.

The security model of ALPHA-g combines the security features of ALPHA-2 and those of DEAP (at SNOLAB).

Access to the MIDAS web server will be exclusively through the Apache httpd proxy with password-protected HTTPS connections (as in ALPHA, ALPHA-2 and DEAP).

Access to machines in the private network will generally be blocked (by Linux firewall rules and NAT). Exceptions, such as access to UPS controls, webcams, etc., will go through the http proxy with password-protected HTTPS connections, as in DEAP.

Access to MIDAS ports on the data acquisition computer will be blocked by Linux firewall rules. Exceptions will be made for specific connections, such as the Labview controls sending data to MIDAS.

In ALPHA, ALPHA-2 and DEAP the Apache httpd proxy server runs on a separate machine. In ALPHA-g we may run it on the same machine as the main data acquisition, subject to approval by CERN (CERN would have to open HTTPS access in the CERN firewall, from the Internet to the HTTPS port on the ALPHA-g DAQ computer).

Over the many years of operating ALPHA, ALPHA-2, DEAP and other similarly configured experiments, this security model has worked well for us, with no complaints from experiment users and no security incidents.

= Safety and Hazard considerations =

There are no safety or hazard concerns about any software or equipment inside the scope of this document.

All hardware consists of commodity computer components (computers, network switches, etc.) that carry appropriate government safety certifications (e.g. CSA in Canada).

All software and software-controlled equipment will be backed by hardware-enforced limits and interlocks to ensure safe operation even in case of software failure. (This is outside the scope of this document; for more information, please refer to the documents for each relevant hardware component.)

Latest revision as of 18:05, 22 September 2017

MediaWiki has been successfully installed.

Consult the User's Guide for information on using the wiki software.

Getting started

Test

test123 test333 test456 test789

last last last

| File:Media/image1.jpeg | Document-131949 | |

|---|---|---|

| Design Note TRI-DN-16-22 ALPHA-g Data Acquisition Notes | ||

| Document Type: | Design Note | |

| Release: | 2 | Release Date: |

| Author(s): | Pierre Amaudruz | |

| Name: | Signature: | |

| Author: | Konstantin Olchanski | |

| Pierre Amaudruz | ||

| Reviewed By: | Thomas Lindner | |

| Approved By: | Makoto Fujiwara |

History of Changes

| Release Number | Date | Description of Changes | Author(s) |

|---|---|---|---|

| 1 | 2016-02-28 | Initial Version | Pierre Amaudruz |

| 2 | 2017-04-10 | Pierre Amaudruz | |

| 3 | 2017-08-08 | Revise version without Grif-C | PAA, KO |

Keywords:

Distribution List:

Table of Figures

Figure 1 - Overall dataflow for scheme from the detector to the data storage area. 10

Introduction

The ALPHA-g experiment is the next step in the line of successful anti-hydrogen experiments at CERN - ATHENA, ALPHA, ALPHA-2. These experiments have been designed, built and operated by mostly the same group of people, making it important to maximally maintain similarity and compatibility between the existing ALPHA-2 data acquisition system and the new ALPHA-g system. The ALPHA-2 and ALPHA-g machines are expected to operate together, at the same time, by the same set of people, for several years.

Looking back, the ATHENA experiment introduced the concept of using an advanced Si-strip particle detector to image annihilations of anti-hydrogen. The ALPHA experiment brought in the highly reliable MIDAS data acquisition software and the modular VME-based electronics (built at/for TRIUMF). The ALPHA-2 experiment saw the data acquisition system expanded by 50% without having to make any architectural changes.

ALPHA-g continues to build on the foundation, but because a 2 meter long Si strip detector is not practical, a TPC gas detector will be used instead.

Scope and Assumptions

Similar to ALPHA-2, ALPHA-g will consist of several computer controls systems in addition to data acquisition for the annihilation imaging detector.

There will be Labview and CompactPCI-based control systems for trap vacuum, for magnet controls, for positron source controls, for trap controls, for microwave and laser system controls.

All these systems are outside the scope of this document, except where, same as in ALPHA-2, they will log data into the MIDAS history system via network socket connections.

The data acquisition system for the particle detector includes a number of hardware components - digitizers for analog signals, firmware-based signal processing, trigger decision making, etc.

All these components are outside of the scope of this document, except where they require network communications for receiving experiment data, for configuration management and for monitoring. For details on the electronics see TRI-DN-16-15.

Thus the scope of this document is limited to the software (and to hardware required to run it) that receives experiment data across the network, communicates with other hardware and software systems over the network.

Definitions and Abbreviations

General acronyms or terms used for the ALPHA-g experiment

| rTPC | Radial Time projection Chamber |

| AD | Antiproton Decelerator (CERN building where ALPHA-g is installed) |

| Trap | Device where the Antihydrogen annihilation take place within the cryostat. |

| AW | TPC Anode Wires |

| BSC | Barrel Scintillator Detector |

| GRIFFIN | Major Experiment facility at TRIUMF |

| ALPHA-16, ALPHA-T, FMC-32 | Similar electronics designed and built at Triumf for Griffin |

| MIDAS | Data Acquisition software package from PSI & TRIUMF |

| MIDAS ODB | Shared memory tree-structured database |

| SiPM | Solid-state light sensors used in the BSC detector |

| TPC AFTER ASIC | Custom chip from Saclay for recording TPC analog signals |

| TPC pad board (FEAM) | TPC Electronics signed and build at TRIUMF for ALPHA-g |

Table 1 – ALPHA-g Abbreviations

Edev-gitalb repository (restricted)

TRI-DN-16-15: ALPHA-G Detector Electronics

Specifications

The Data Acquisition system is overlooking all the detector parameters settings and manage the collection, analysis, recording and monitoring of the detector incoming data. These tasks can be described as follow.

Handling of experiment data

Experiment data will arrive over multiple 1GigE network links into the main DAQ computer, will be processed and transmitted to permanent storage at the main CERN data center, also across a 1GigE link.

The existing network link from CERN shared by ALPHA-2 and ALPHA-g runs at 1GigE speed and the collaboration does not plan to request that CERN upgrade this link to 10GigE or faster speed.

This limits the overall data throughput to 100 Mbytes/sec shared with ALPHA-2 (which in turn is limited to 20-30 Mbytes/sec).

A Local Network switch (96x1GigE) gather all the different data sources such as:

- 16 AW and BSC digitizers (FMC-32, ALPHA-16)

- The 512 Anode wires channels (256 wires readout both ends) are digitized with 16 FMC-32 mezzanines of the ALPHA-16). Each ALPHA-16 has an Ethernet data link to the main LAN switch.

- The 128 channels of the BSB are collected through the same ALPHA-16 boards.

- 64 FEAMs cathode pad readout

- The 18432 cathode pads are digitized with 64 FEAMs which each has a optical Ethernet link to the main LAN switch.

- The ALPHA-T trigger board has a link to the main LAN switch as well.

- Barrel Scintillator TDC (see Electronics document)

A total of 82 x 1GigE ports out of the 96 from the switch are allocated to the main detector operation.

Handling of Labview and Trap sequencer data

Same as in ALPHA-2, there will be a special MIDAS frontend to receive data from labview-based control systems (vacuum, magnets, trap sequencer, etc.). Data communication protocol and data format will be the same in ALPHA-2.

Configuration data

All configuration data will be stored in the MIDAS ODB database.

Major configuration items:

Trigger configuration (multiplicity settings, channel mapping, dead channel blanking, etc.)

AW and BSC digitizer settings

TPC pad board settings and AFTER ASIC settings (FEAMs)

UPS uninterruptible power supply with battery backup and "double conversion" power conditioning

- == Slow Controls data ==

All slow controls settings will be stored in the MIDAS ODB database. Slow controls data will be logged through the MIDAS history system. Integration with the ALPHA-II DAQ system will require additional transfer of ALPHA-g control data to it. This being done in MySQL format.

Major slow controls items:

- Low voltage power supply controls

- High voltage power supply controls

- Environment monitoring (temperature probes, etc.)

- SiPM bias voltage controls

- TPC gas system control and monitoring

- TPC calibration system controls

- cooling system control and monitoring

- UPS monitoring

Some slow controls components will have software interlocks for non-safety critical protection of equipment, such as automatic shutdown of electronics on overheating, automatic shutdown of detectors on UPS alarms (i.e. loss of external AC power).

(It is assumed that safety-critical interlocks will be implemented in hardware, this is outside the scope of this particular document).

Trigger consideration

The Alpha-g physics experiment follows different particle trapping stages such as: Positron accumulation, Antiproton accumulation, mixing stage for formation of anti-hydrogen in the trap and annihilation stage for the actual measurement. The whole cycle takes several minutes and needs to be constantly monitored with the detector to ensure that external background events (cosmics, noise) are properly identified by the detector and that annihilation events from the trap are all recorded for further analysis. This is mainly done using the barrel scintillator which will record external incoming events and internal trapped events (annihilation). Timing analysis will resolve the distinction of the two main sources of events.

Therefore the barrel scintillator is the main contributor to the trigger decision. Additional information from the anode wires is beneficial even though its timing is extremely loose due to the implicit drift time range of the detector.

The Trigger decision is based initially on simple multiplicity of the barrel scintillator detector which tags the event to record. This multiplicity is computed in each of the 16 Alpha-16 boards and transmitted to the Mater Trigger Module (Alpha-T). There the individual fragments are matched in time and final trigger decision is taken, which in turn will be redistributed to all the acquisition modules.

If the anode wire information is needed as well, the same fragment collection scheme is applied to the wires and the result is sent through the same physical link, since the ALPHA-16 modules are also populated with the 32-channel, 62.5 Msps mezzanine (FMC-32).
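As an illustration of the multiplicity trigger described above, the sketch below groups per-board multiplicity fragments that fall within a coincidence window and issues a trigger when the summed multiplicity reaches a threshold. It is a software model only: the real decision is made in the ALPHA-T hardware, and the fragment layout, timestamp units, window width and threshold are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Fragment:
    board: int         # ALPHA-16 board index (0..15)
    timestamp: int     # board timestamp in clock ticks (hypothetical units)
    multiplicity: int  # BSC channels above threshold on this board

def trigger_decisions(fragments: List[Fragment], window: int, threshold: int) -> List[int]:
    """Return the start timestamps of coincidence groups whose summed
    multiplicity reaches `threshold`. Groups do not overlap."""
    if window < 0:
        raise ValueError("window must be non-negative")
    frags = sorted(fragments, key=lambda f: f.timestamp)
    triggers: List[int] = []
    i = 0
    while i < len(frags):
        start = frags[i].timestamp
        total = 0
        j = i
        # Sum every fragment within `window` ticks of the first one in the group.
        while j < len(frags) and frags[j].timestamp - start <= window:
            total += frags[j].multiplicity
            j += 1
        if total >= threshold:
            triggers.append(start)
        i = j  # continue with the first fragment outside this group
    return triggers
```

For example, fragments from boards 0 and 3 at ticks 100 and 102 (multiplicities 1 and 2) plus a lone fragment at tick 500 give a single trigger at tick 100 with `window=4, threshold=2`.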

The data acquisition system (MIDAS) will not take part in the trigger decision and therefore will not interact with the trigger hardware. The data stream will be continuously read over Ethernet from the acquisition modules, and an “event builder” application will use the timestamp information attached to each received packet to reassemble the fragments. The hardware trigger module (ALPHA-T) will keep track of the trigger decisions and produce trigger data, which will be merged into the main data acquisition stream.
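The timestamp-based fragment reassembly can be sketched as a k-way merge of the per-frontend streams followed by grouping on timestamp proximity. This assumes that each frontend delivers its fragments already sorted by timestamp, that all timestamps share a common time base (e.g. distributed by the clock board), and that fragments from the same trigger lie within a small tolerance of each other; the tuple layout and tolerance are hypothetical, and this is not the ALPHA-g event builder itself.

```python
import heapq
from typing import Iterable, List, Tuple

# Hypothetical fragment layout: (timestamp, source_name, payload)
Frag = Tuple[int, str, bytes]

def build_events(streams: List[Iterable[Frag]], tolerance: int) -> List[List[Frag]]:
    """Merge per-frontend fragment streams (each sorted by timestamp) and
    group fragments whose timestamps lie within `tolerance` of the first
    fragment of the current event."""
    events: List[List[Frag]] = []
    current: List[Frag] = []
    # heapq.merge lazily performs a k-way merge of already-sorted inputs.
    for frag in heapq.merge(*streams, key=lambda f: f[0]):
        if current and frag[0] - current[0][0] > tolerance:
            events.append(current)  # close the event; start a new one
            current = []
        current.append(frag)
    if current:
        events.append(current)
    return events
```

Grouping relative to the first fragment keeps an event from "drifting" when fragments arrive in a steady chain of small time differences; a real builder would also need a timeout for fragments that never arrive.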

MIDAS Software configuration

For maximum familiarity, similarity and compatibility with the existing ALPHA-2 system, ALPHA-g will also use the MIDAS data acquisition software package.

The main features of MIDAS are a modern web-based user interface, good robustness, high reliability and high scalability. The system is easy for non-experts to operate.

Besides ALPHA-2 at CERN, major users of MIDAS include TIGRESS and GRIFFIN at TRIUMF, MEG at PSI, DEAP at SNOLAB and T2K/ND280 at J-PARC (Japan).

As in ALPHA-2, the MIDAS software will run on a single high-end computer with an Intel socket 1151 CPU and 32 or 64 GB of RAM. This is adequate for the 100 Mbytes/sec data rate expected for ALPHA-g: during tests of 10GigE networking for the GRIFFIN experiment at TRIUMF, this type of hardware was capable of recording 1000 Mbytes/sec of data from network to disk. The disk I/O capability required for such rates will not be present in ALPHA-g; the intention is to stream the data directly to the CASTOR data storage provided by CERN.

This computer will have two 1GigE network interfaces: one for connecting to the CERN network, the other for connecting to the ALPHA-g private network.

Data storage will be dual mirrored (RAID1) SSDs for the operating system and home directories and dual mirrored (RAID1) 6TB HDDs for local data storage.
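A quick back-of-the-envelope check of the rates and capacities quoted above is sketched below. It assumes a sustained 100 Mbytes/sec, the nominal 1 Gbit/s line rate of a 1GigE link, and the usable capacity of one mirrored 6 TB pair; it ignores Ethernet/TCP protocol overhead and the experiment's duty cycle, so the real link margin is somewhat smaller and the real fill time somewhat longer.

```python
# Back-of-the-envelope check (assumed sustained rate, nominal link speed).
DATA_RATE_BYTES = 100e6   # expected ALPHA-g data rate, bytes per second
LINK_BITS = 1e9           # nominal 1GigE line rate, bits per second
LOCAL_DISK_BYTES = 6e12   # one RAID1 pair of 6 TB HDDs -> 6 TB usable

link_utilization = DATA_RATE_BYTES * 8 / LINK_BITS          # fraction of one 1GigE link
hours_to_fill = LOCAL_DISK_BYTES / DATA_RATE_BYTES / 3600   # local buffer at full rate
tb_per_day = DATA_RATE_BYTES * 86400 / 1e12                 # daily volume at full rate

print(f"1GigE utilization: {link_utilization:.0%}")          # 80%
print(f"hours to fill local disk: {hours_to_fill:.1f}")      # 16.7
print(f"TB per day: {tb_per_day:.2f}")                       # 8.64
```

The numbers show why data reduction matters here: at the full expected rate a single 1GigE link is about 80% busy, and the local disks would hold well under a day of data if the transfer to CERN storage stalled.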

The MIDAS software running on this computer will have the following components:

- mhttpd - the MIDAS web server providing the main user interface for running the system

- mlogger - the MIDAS data logger for writing experiment data to local storage and for recording MIDAS slow controls history data

- lazylogger - for moving experiment data from local storage to permanent storage at the CERN data center (EOS and CASTOR).

- Custom-written MIDAS frontend programs to receive experiment data

- The event builder - for assembling experiment data from different frontends into events to simplify further analysis. The event builder will have code for initial data quality tests (correct data formatting, correct event timestamps, etc.).

- The online analyzer - for initial data analysis, visualization and additional data quality checks.

The MIDAS frontend programs are written using a common template, with each frontend program specialized to receive data in the format of the attached equipment:

- Frontend for AW and BSC digitizers - receive data from ALPHA-16 digitizers using TCP and UDP network socket connections (will use a modification of the frontend from GRIFFIN), provide configuration and monitoring (slow controls) of the digitizers.

- Frontend for the TPC pad boards - receive data from the TPC using TCP and UDP network socket connections. This frontend will also provide configuration and monitoring (slow controls) of the TPC pad boards and of the TPC AFTER ASICs.

- Frontend for the clock board - configuration and control of the clock board using UDP and TCP network connections.

- Frontends for slow controls, according to equipment (see the list in the "specification" section). Most of these frontend programs will be reused from previous experiments (e.g. the UPS monitoring frontends from DEAP and ALPHA-2).
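The "common template, specialized per equipment" arrangement can be illustrated with a small class hierarchy: the shared part owns run control and the polling loop, while each subclass supplies only configuration and data readout. This Python sketch shows the design idea only; MIDAS frontends are typically C/C++ programs built on the MIDAS frontend framework, and every class and method name below is hypothetical.

```python
from abc import ABC, abstractmethod
from typing import List, Optional

class Frontend(ABC):
    """Hypothetical common template: shared run control and polling loop."""

    def __init__(self, name: str):
        self.name = name
        self.running = False
        self.events_sent = 0

    def begin_run(self) -> None:
        self.configure()        # equipment-specific setup
        self.running = True

    def end_run(self) -> None:
        self.running = False

    def poll(self) -> List[bytes]:
        """Shared loop body: drain whatever the equipment has and count it."""
        if not self.running:
            return []
        out: List[bytes] = []
        while (data := self.read_fragment()) is not None:
            out.append(data)
        self.events_sent += len(out)
        return out

    @abstractmethod
    def configure(self) -> None: ...

    @abstractmethod
    def read_fragment(self) -> Optional[bytes]: ...

class FakeDigitizerFrontend(Frontend):
    """Stand-in for an equipment-specific frontend (e.g. for the digitizers)."""

    def __init__(self, name: str, pending: List[bytes]):
        super().__init__(name)
        self.pending = list(pending)
        self.configured = False

    def configure(self) -> None:
        self.configured = True  # real code would program the hardware here

    def read_fragment(self) -> Optional[bytes]:
        return self.pending.pop(0) if self.pending else None
```

Keeping the loop in the base class means bookkeeping such as event counters and run-state checks is written once and behaves identically in every frontend.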

Data from all the hardware components arrives asynchronously through the MIDAS frontend programs, which makes further analysis and data quality tests rather difficult. To rearrange the data into coherent events, MIDAS provides an event builder component.

The event builder program is usually written specifically for each experiment (also using a MIDAS template). The ALPHA-g event builder will check incoming data for basic correctness, assemble event fragments from different frontends into coherent events, check timestamps and event numbers, and possibly apply additional data reduction and data compression algorithms. We expect to reuse the multi-threaded event builder developed for DEAP as the basis of the ALPHA-g event builder.
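The basic event checks listed above (fragment completeness, event numbers, timestamps) can be sketched as a validation function applied to each assembled event. The event representation (a mapping from source name to event number and timestamp), the expected source set and the timestamp tolerance are hypothetical; the DEAP-derived event builder may organize these checks differently.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical assembled event: source name -> (event_number, timestamp)
Event = Dict[str, Tuple[int, int]]

def check_event(event: Event, expected: Set[str],
                prev_serial: int, tolerance: int) -> List[str]:
    """Return a list of problems found; an empty list means the event passed."""
    problems: List[str] = []
    # Completeness: every expected frontend contributed a fragment.
    missing = expected - set(event)
    if missing:
        problems.append(f"missing fragments: {sorted(missing)}")
    # Event numbers: all fragments agree and follow the previous event.
    serials = {serial for serial, _ in event.values()}
    if len(serials) > 1:
        problems.append(f"event number mismatch: {sorted(serials)}")
    elif serials and next(iter(serials)) != prev_serial + 1:
        problems.append("event number not consecutive")
    # Timestamps: all fragments fall within the allowed spread.
    stamps = [ts for _, ts in event.values()]
    if stamps and max(stamps) - min(stamps) > tolerance:
        problems.append("timestamp spread exceeds tolerance")
    return problems
```

Returning a list of problems rather than a single pass/fail flag lets the builder count each failure type separately, which is usually more useful for diagnosing a misbehaving frontend.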

The online analyzer will look at a sample of the events produced by the event builder and produce histograms and other plots of the most important quantities (pulse heights, timing, etc.) to confirm correct functioning of the individual detector components (e.g. each TPC pad produces hits) and of the detector as a whole (e.g. hit multiplicity in the TPC pads matches hit multiplicity in the TPC AW and in the BSC). We do not expect to do full tracking and vertex reconstruction in the online analyzer; this will be done in the "near offline" analysis using the CERN data center resources (similar to ALPHA-2 procedures).
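One of the checks mentioned above, that each TPC pad produces hits, can be sketched as an occupancy count over a sample of events. The event format (a list of hit channel indices per event) and the channel count are hypothetical; in practice the counts would be filled into histograms rather than returned as a list.

```python
from collections import Counter
from typing import Iterable, List

def dead_channels(events: Iterable[List[int]], n_channels: int,
                  min_hits: int = 1) -> List[int]:
    """Count hits per channel over a sample of events and return the
    channels with fewer than `min_hits` hits (candidate dead channels)."""
    occupancy: Counter = Counter()
    for hits in events:
        occupancy.update(hits)
    return [ch for ch in range(n_channels) if occupancy[ch] < min_hits]
```

With a small event sample a working pad with a low hit rate can show zero hits, so the sample has to be large enough before a pad is flagged as dead.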

The mlogger component writes MIDAS data to local disk (or remote disk using NFS or UNIX pipe methods) and provides standard data compression (gzip-1 by default, bzip2 for maximum compression and LZ4 for maximum speed). As an option CRC32c and SHA-256/SHA-512 checksums can be computed to detect data corruption.
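To illustrate the checksum option, the sketch below computes CRC-32C and SHA-256 over a data file delivered in chunks, as one might do when verifying a file after transfer to CERN storage. It is an independent, deliberately simple bitwise implementation, not mlogger's code. Note that Python's `zlib.crc32` computes the IEEE CRC-32, not CRC-32C (Castagnoli), which is why CRC-32C is written out here.

```python
import hashlib
from typing import Iterable, Tuple

def crc32c(data: bytes, crc: int = 0) -> int:
    """Bitwise CRC-32C (Castagnoli, reflected polynomial 0x82F63B78).
    Pass the previous return value as `crc` to checksum data incrementally."""
    crc ^= 0xFFFFFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            crc = (crc >> 1) ^ 0x82F63B78 if crc & 1 else crc >> 1
    return crc ^ 0xFFFFFFFF

def checksum_chunks(chunks: Iterable[bytes]) -> Tuple[int, str]:
    """Compute CRC-32C and SHA-256 over data delivered in chunks."""
    crc = 0
    sha = hashlib.sha256()
    for chunk in chunks:
        crc = crc32c(chunk, crc)
        sha.update(chunk)
    return crc, sha.hexdigest()
```

`crc32c(b"123456789")` returns the standard CRC-32C check value `0xE3069283`. The bitwise loop is slow in pure Python; a table-driven or hardware-accelerated implementation would be used for real data volumes.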

Figures 1 and 2 and the diagram below illustrate the data flow described in this section:

Figure 1 - Overall data flow scheme from the detector to the data storage area.

Figure 2 - Frontend local fragment assembly, similar data for the event builder. Each grey box represents a thread that collects and filters its fragment locally before making it available to the fragment builder.
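The per-source threads of Figure 2 can be sketched as a producer/consumer arrangement: each thread collects and filters the fragments of one source and puts them on a shared queue, from which a single builder drains them. The local filter (dropping empty fragments), the queue size and the sentinel convention are illustrative assumptions; timestamp matching of the collected fragments is left out.

```python
import queue
import threading
from typing import Dict, List

_DONE = object()  # sentinel each collector puts on the queue when finished

def collector(source: str, raw: List[bytes], out: queue.Queue) -> None:
    """One grey box: read this source's fragments, filter locally, hand over."""
    for frag in raw:
        if frag:                 # illustrative local filter: drop empty fragments
            out.put((source, frag))
    out.put(_DONE)

def run_builder(sources: Dict[str, List[bytes]]) -> Dict[str, List[bytes]]:
    """Start one collector thread per source and gather their output."""
    q: queue.Queue = queue.Queue(maxsize=1000)  # bounded: back-pressure on collectors
    threads = [threading.Thread(target=collector, args=(name, raw, q))
               for name, raw in sources.items()]
    for t in threads:
        t.start()
    collected: Dict[str, List[bytes]] = {name: [] for name in sources}
    finished = 0
    while finished < len(threads):
        item = q.get()
        if item is _DONE:
            finished += 1
        else:
            name, frag = item
            collected[name].append(frag)
    for t in threads:
        t.join()
    return collected
```

Because each source has a single producer and the queue is FIFO, fragments from one source keep their original order, which a timestamp-based builder relies on.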

Computer Network configuration

Operation of the ALPHA-g experiment will involve 2 computer networks: the ALPHA-g private network and the CERN network.

The private network is required to isolate experiment data traffic from the CERN network data traffic (this was not required for ALPHA-2, where there is no private network) and to restrict access to network-attached slow controls devices (power supplies, gas system controllers, etc.).

This private network (192.168.x.y) will be served by two 1GigE network switches (96-port and 24-port). The first switch will connect all 64 FEAMs and the 16 ALPHA-16 boards. Other networked equipment, such as slow controls and monitoring devices, will be connected to the second (24-port) switch.

The CERN-side 1GigE network port of the main data acquisition computer will be used to transmit experiment data to the CERN data center and to receive experiment data from the LabVIEW-based control systems (which are outside the scope of the particle detector and of this document).

The CERN network connection currently consists of a single 1GigE fiber link feeding a small (24-port) 1GigE network switch in the experiment counting room. CERN is not expected to upgrade this link to higher speeds (10GigE, etc.) within the lifetime of ALPHA-g, and ALPHA-g is not expected to ever require such speeds, because recording an excessive amount of data to CERN storage would create significant problems for data analysis. If the detector produces too much data, this will be handled by stronger data reduction and data compression algorithms.
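The trade-off between compression strength and speed can be explored offline with standard libraries. The sketch compares zlib at levels 1 and 9 (the deflate algorithm also used by gzip) with bzip2 on a synthetic, mostly flat waveform-like byte stream. The data generator is a made-up stand-in for real digitizer data, so the printed ratios and timings are only indicative; LZ4 is not in the Python standard library and is omitted.

```python
import bz2
import random
import struct
import time
import zlib

def synthetic_waveforms(n_samples: int, seed: int = 1) -> bytes:
    """Hypothetical stand-in data: 16-bit baseline with small noise and rare pulses."""
    rng = random.Random(seed)
    samples = []
    for i in range(n_samples):
        value = 2000 + rng.randint(-3, 3)   # baseline plus noise
        if i % 500 < 20:                     # a short pulse every 500 samples
            value += 800
        samples.append(value)
    return struct.pack(f"<{n_samples}h", *samples)

def compare(data: bytes) -> dict:
    """Return (compression ratio, seconds) for each method."""
    methods = {
        "zlib-1": (lambda d: zlib.compress(d, 1), zlib.decompress),
        "zlib-9": (lambda d: zlib.compress(d, 9), zlib.decompress),
        "bzip2": (bz2.compress, bz2.decompress),
    }
    results = {}
    for name, (comp, decomp) in methods.items():
        t0 = time.perf_counter()
        packed = comp(data)
        elapsed = time.perf_counter() - t0
        assert decomp(packed) == data     # lossless round trip
        results[name] = (len(data) / len(packed), elapsed)
    return results

for name, (ratio, secs) in compare(synthetic_waveforms(200_000)).items():
    print(f"{name}: ratio {ratio:.2f}, {secs * 1000:.1f} ms")
```

Running the same comparison on a recorded sample of real ALPHA-g data would be the meaningful test, since compression ratios depend strongly on noise levels and pulse occupancy.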

The ALPHA-g data acquisition computer will provide a one-way network gateway from the private network to the CERN network using Linux-based NAT. This is required to give devices attached to the private network access to DNS, NTP and other network services, e.g. for installing software and firmware updates.

This configuration is very similar to the one used by the DEAP experiment at SNOLAB. The main difference is that ALPHA-g will run the main data acquisition and NAT/gateway services on the same computer, whereas DEAP uses two separate computers.

Computer Security considerations

In the ALPHA-2 and ALPHA-g experiments, antiproton (p-bar) beam time is very precious, and any disturbance during beam data taking must be minimized.

On the computer network side, the ALPHA-g data acquisition system needs to be protected from:

- Unauthorized access to proprietary information

- Unauthorized access to network-controlled devices (power supplies, etc.)

- Sundry network disturbances (unwanted network port scans, unwanted web scans, accidental or malicious denial-of-service attacks, etc.).

It is important to note that the CERN network is isolated from the Internet by the CERN firewall. Generally, the CERN firewall allows all outgoing traffic from CERN to the Internet (this can be selectively blocked by special request to CERN IT) but blocks all incoming traffic except where specifically permitted. A typical machine connected to the CERN network can reach anywhere on the Internet, but from the Internet only "ping" is permitted: "ssh" access is disabled (users are expected to go through the ssh gateways at lxplus.cern.ch), and "http" access is allowed only by special permission from CERN IT.

Thus we do not need to protect the ALPHA-g data acquisition system against "global Internet" threats (the CERN firewall does this job). Protection is required only against access from other machines attached to the CERN network, which can generally be considered non-malicious. Still, over the years of operating ALPHA and ALPHA-2, we have seen unwanted network scans (which required special hardening of MIDAS) and other strange activity.

The security model of ALPHA-g combines the security features of ALPHA-2 and the security features of DEAP (at SNOLAB).

Access to the MIDAS web server will be exclusively through the Apache httpd proxy, with password-protected HTTPS connections (same as in ALPHA, ALPHA-2 and DEAP).

Access to machines in the private network will be generally blocked (by Linux firewall rules and NAT). Exceptions like access to UPS controls, webcams, etc. will be via the http proxy, with password-protected HTTPS connections, same as in DEAP.

Access to MIDAS ports on the data acquisition computer will be blocked by Linux firewall rules. Exceptions will be made for specific connections, such as the LabVIEW controls sending data to MIDAS.

In ALPHA, ALPHA-2 and DEAP the Apache httpd proxy server runs on a separate machine. In ALPHA-g we may run it on the same machine as the main data acquisition, subject to approval by CERN (CERN would have to open HTTPS access in the CERN firewall from the Internet to the HTTPS port on the ALPHA-g DAQ computer).

Over the many years of operating ALPHA, ALPHA-2, DEAP and other similarly configured experiments, this security model has worked well for us, with no complaints from experiment users and no security incidents.

Safety and Hazard considerations

There are no safety and hazard concerns about any software or equipment inside the scope of this document.

All hardware consists of commodity computer components (computers, network switches, etc.) that carry the appropriate government safety certifications (e.g. CSA in Canada).

All software and software-controlled equipment will have hardware-enforced limits and interlocks to ensure safe operation even in case of software failure. (This is outside the scope of this document; for more information, please refer to the documents for each relevant hardware component.)