| ID | Date | Author | Topic | Subject |

|

3056

|

10 Jun 2025 |

Stefan Ritt | Info | History configuration changed | Today the way the history system is configured has changed. Whenever one adds a new equipment

variable, the logger has to add that variable to the history system. Previously, this happened during

startup of the logger and at run start. We now have a case in the Mu3e experiment where we have many

variables and the history configuration takes about 15 seconds, which delays data taking considerably.

After discussion with KO we decided to remove the history re-configuration at run start. This speeds up

the run start considerably, also for other experiments with many history variables. It means however that

once you add/remove/rename any equipment variable going into the history system, you have to restart the

logger for this to become active.

https://bitbucket.org/tmidas/midas/commits/c0a14c2d0166feb6b38c645947f2c5e0bef013d5

Stefan |

|

473

|

23 Mar 2008 |

Konstantin Olchanski | Info | History SQL database poll: MySQL, PgSQL, ODBC? | I would like to hear from potential users on which SQL database would be

preferable for storage of MIDAS history data.

My current preference is to use the ODBC interface, leaving the choice of

database engine to the user. While ODBC is not pretty, it appears to be adequate

for the job, permits "funny" databases (i.e. flat files) and I already have

prototype implementations for reading (mhttpd) and writing (mhdump/mlogger)

history data using ODBC.

In practice, MySQL and PgSQL are the two main viable choices for use with the

MIDAS history system. We tested both (no change in code - just tell ODBC which

driver to use) and both provide comparable performance and disk space use. We

were glad to see that the disk space use by both SQL databases is very

efficient, only slightly worse than uncompressed MIDAS history files.

At TRIUMF, for T2K/ND280, we now decided to use MySQL - it provides a better

match to MIDAS data types (has 1-byte and 2-byte integers, etc) and appears to

have working database replication (required for our use).

With mlogger already including support for MySQL, and MySQL being a better match

for MIDAS data, MySQL has a slight edge and I think it would be a reasonable

choice to only implement support for MySQL.

So I see 3 alternatives:

1) use ODBC (my preference)

2) use MySQL exclusively

3) implement a "midas odbc layer" supporting either MySQL or PgSQL.

Before jumping either way, I would like to hear from you folks.

K.O. |

|

Draft

|

20 Jun 2017 |

Richard Longland | Forum | High Rate | |

|

2595

|

08 Sep 2023 |

Nick Hastings | Forum | Hide start and stop buttons | The wiki documents an ODB variable to enable the hiding of the Start and Stop buttons on the mhttpd status page:

https://daq00.triumf.ca/MidasWiki/index.php//Experiment_ODB_tree#Start-Stop_Buttons

However mhttpd states this option is obsolete. See commit:

https://bitbucket.org/tmidas/midas/commits/2366eefc6a216dc45154bc4594e329420500dcf7

I note that the same commit also made mhttpd report the "Pause-Resume Buttons" variable as obsolete; however, that code seems to have since been removed.

Is there now some other mechanism to hide the start and stop buttons?

Note that this is for a pure slow control system that does not take runs. |

|

2596

|

08 Sep 2023 |

Nick Hastings | Forum | Hide start and stop buttons | > Is there now some other mechanism to hide the start and stop buttons?

> Note that this is for a pure slow control system that does not take runs.

Just wanted to add that I realize that this can be done by copying

status.html and/or midas.css to the experiment directory and then modifying

them, but I wonder if there is some other preferred way. |

|

2599

|

13 Sep 2023 |

Stefan Ritt | Forum | Hide start and stop buttons | Indeed the ODB settings are obsolete. Now that the status page is fully dynamic

(JavaScript), it's much more powerful to modify the status.html page directly. You

can not only hide the buttons, but also remove the run numbers, the running time,

and so on. This is much more flexible than steering things through the ODB.

If there is a general need for that, I can draft a "non-run" based status page, but

it's a bit hard to make a one-size-fits-all version. Some might even remove the logging

channels and the clients, but add certain things such as whether their slow control

front-end is running, etc.

Best,

Stefan |

|

2600

|

13 Sep 2023 |

Nick Hastings | Forum | Hide start and stop buttons | Hi Stefan,

> Indeed the ODB settings are obsolete.

I just applied for an account for the wiki.

I'll try to add a note regarding this change.

> Now that the status page is fully dynamic

> (JavaScript), it's much more powerful to modify the status.html page directly. You

> can not only hide the buttons, but also remove the run numbers, the running time,

> and so on. This is much more flexible than steering things through the ODB.

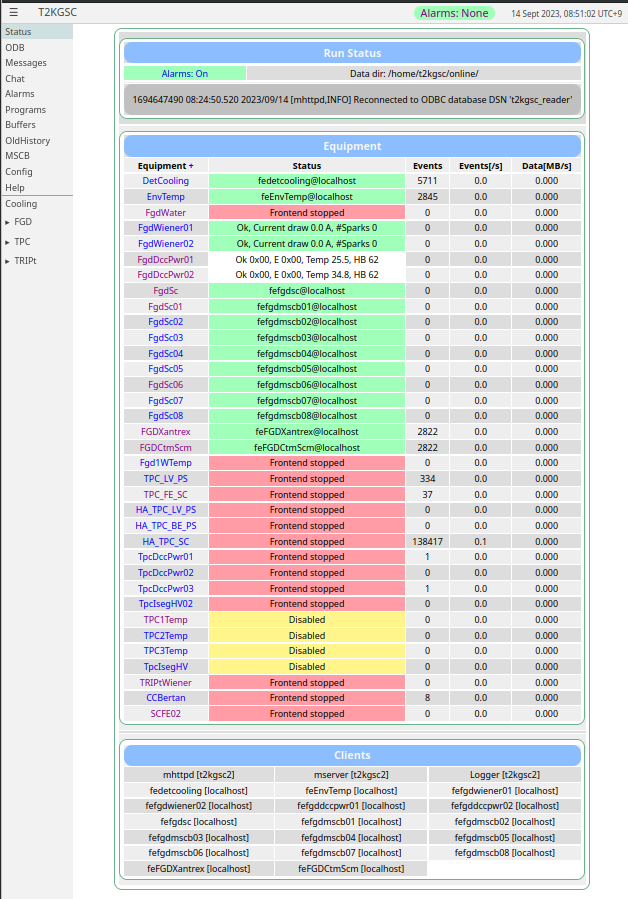

Very true. Currently I copied the resources/midas.css into the experiment directory and appended:

#runNumberCell { display: none;}

#runStatusStartTime { display: none;}

#runStatusStopTime { display: none;}

#runStatusSequencer { display: none;}

#logChannel { display: none;}

See screenshot attached. :-)

But it feels a little clunky to copy the whole file just to add five lines.

It might be more elegant if status.html looked for a user css file in addition

to the default ones.

> If there is a general need for that, I can draft a "non-run" based status page, but

> it's a bit hard to make a one-fits-all. Like some might even remove the logging

> channels and the clients, but add certain things like if their slow control

> front-end is running etc.

The logging channels are easily removed with the css (see attachment), but it might be

nice if the string "Run Status" table title was also configurable by css. For this

slow control system I'd probably change it to something like "GSC Status". Again

this is a minor thing, I could trivially do this by copying the resources/status.html

to the experiment directory and editing it.

Lots of fun new stuff migrating from circa 2012 midas to midas-2022-05-c :-)

Cheers,

Nick. |

| Attachment 1: screenshot-20230914-085054.png

|

|

|

2601

|

13 Sep 2023 |

Stefan Ritt | Forum | Hide start and stop buttons | > Hi Stefan,

>

> > Indeed the ODB settings are obsolete.

>

> I just applied for an account for the wiki.

> I'll try to add a note regarding this change.

Please coordinate with Ben Smith at TRIUMF <bsmith@triumf.ca>, who coordinates the documentation.

> Very true. Currently I copied the resources/midas.css into the experiment directory and appended:

>

> #runNumberCell { display: none;}

> #runStatusStartTime { display: none;}

> #runStatusStopTime { display: none;}

> #runStatusSequencer { display: none;}

> #logChannel { display: none;}

>

> See screenshot attached. :-)

>

> But it feels a little clunky to copy the whole file just to add five lines.

> It might be more elegant if status.html looked for a user css file in addition

> to the default ones.

I would not change the CSS file. With CSS you can only hide some tables, but before long I'm sure you

will want to ADD new things, which you can only do by editing the status.html file. You don't have to

change midas/resources/status.html; you can make your own "custom status" page, name it differently, and

link /Custom/Default in the ODB to it. This way it does not get overwritten when you pull midas.

> The logging channels are easily removed with the css (see attachment), but it might be

> nice if the string "Run Status" table title was also configurable by css. For this

> slow control system I'd probably change it to something like "GSC Status". Again

> this is a minor thing, I could trivially do this by copying the resources/status.html

> to the experiment directory and editing it.

See above. I agree that the status.html file is a bit complicated and not as easy to understand

as the CSS file, but you can do much more by editing it.

> Lots of fun new stuff migrating from circa 2012 midas to midas-2022-05-c :-)

I always advise people to pull frequently: they benefit from the newest features and avoid the

huge amount of work needed to migrate from a 10-year-old version.

Best,

Stefan |

|

2602

|

14 Sep 2023 |

Konstantin Olchanski | Forum | Hide start and stop buttons | I believe the original "hide run start / stop" was added specifically for ND280 GSC MIDAS. I do not know

why it was removed. "hide pause / resume" is still there. I will restore them. Hiding the logger channel

section should probably be automatic if there is no /logger/channels. I can check if it works and what

happens if there is more than one logger channel. K.O. |

|

2603

|

14 Sep 2023 |

Nick Hastings | Forum | Hide start and stop buttons | Hi

> > > Indeed the ODB settings are obsolete.

> >

> > I just applied for an account for the wiki.

> > I'll try to add a note regarding this change.

>

> Please coordinate with Ben Smith at TRIUMF <bsmith@triumf.ca>, who coordinates the documentation.

I will tread lightly.

> I would not go to change the CSS file. You only can hide some tables. But in a while I'm sure you

> want to ADD new things, which you only can do by editing the status.html file. You don't have to

> change midas/resources/status.html, but can make your own "custom status", name it differently, and

> link /Custom/Default in the ODB to it. This way it does not get overwritten if you pull midas.

We have *many* custom pages. The submenus on the status page:

▸ FGD

▸ TPC

▸ TRIPt

hide custom pages with all sorts of good stuff.

> > The logging channels are easily removed with the css (see attachment), but it might be

> > nice if the string "Run Status" table title was also configurable by css. For this

> > slow control system I'd probably change it to something like "GSC Status". Again

> > this is a minor thing, I could trivially do this by copying the resources/status.html

> > to the experiment directory and editing it.

>

> See above. I agree that the status.html file is a bit complicated and not so easy to understand

> as the CSS file, but you can do much more by editing it.

I may end up doing this since the events and data columns do not provide particularly

useful information in this instance. But for now, the css route seems like a quick and

fairly clean way to remove irrelevant stuff from a prominent place at the top of the page.

> > Lots of fun new stuff migrating from circa 2012 midas to midas-2022-05-c :-)

>

> I always advise people to frequently pull, they benefit from the newest features and avoid the

> huge amount of work to migrate from a 10 year old version.

The long delay was not my choice. The group responsible for the system departed in 2018

and was not replaced by the experiment management. Lack of personnel/expertise resulted in

an "if it's not broken then don't fix it" situation. Eventually, the need to update the PCs/OSs

and the imminent introduction of new sub-detectors resulted in people agreeing to the update.

Cheers,

Nick. |

|

2604

|

14 Sep 2023 |

Stefan Ritt | Forum | Hide start and stop buttons | > I believe the original "hide run start / stop" was added specifically for ND280 GSC MIDAS. I do not know

> why it was removed. "hide pause / resume" is still there. I will restore them. Hiding logger channel

> section should probably be automatic if there is no /logger/channels, I can check if it works and what

> happens if there is more than one logger channel. K.O.

Very likely it was "forgotten" when the status page was converted to a dynamic page by Shouyi Ma. Since he is

not around any more, it's up to us to adapt status.html if needed.

Stefan |

|

2605

|

15 Sep 2023 |

Stefan Ritt | Forum | Hide start and stop buttons | > I believe the original "hide run start / stop" was added specifically for ND280 GSC MIDAS. I do not know

> why it was removed. "hide pause / resume" is still there. I will restore them. Hiding logger channel

> section should probably be automatic if there is no /logger/channels, I can check if it works and what

> happens if there is more than one logger channel. K.O.

Actually, one thing is the functionality of the /Experiment/Start-Stop button in status.html, but the other is

the warning we get from mhttpd:

[mhttpd,ERROR] [mhttpd.cxx:1957:init_mhttpd_odb,ERROR] ODB "/Experiment/Start-Stop Buttons" is obsolete, please delete it.

This was added by KO on Nov. 29, 2019 (commit 2366eefc). So we have to decide whether to re-enable this feature (and

remove the warning above), or keep it dropped and work on changes to status.html.

Stefan |

|

2811

|

25 Aug 2024 |

Adrian Fisher | Info | Help parsing scdms_v1 data? | Hi! I'm working on creating a ksy file to help with parsing some data, but I'm having trouble finding some information. Right now, my setup is very rudimentary: it grabs the event header and then uses the data bank size to grab the data, but I then need additional padding after the data bank to reach the next event.

However, there's some irregularity in the "padding" between data banks that I haven't been able to find any documentation for. For some reason, after the data banks, there are sections of data of either 168 or 192 bytes, and it's seemingly arbitrary which size is used.

I'm just wondering if anyone has any information about this so that I'd be able to make some more progress in parsing the data.

The data I'm working with can be found at https://github.com/det-lab/dataReaderWriter/blob/master/data/07180808_1735_F0001.mid.gz

And the ksy file that I've created so far is at https://github.com/det-lab/dataReaderWriter/blob/master/kaitai/ksy/scdms_v1.ksy

There's also a 384-byte block of data after the ODB whose purpose I'm unsure of. Could anyone point me to some information about it?

Thank you! |

|

2812

|

26 Aug 2024 |

Stefan Ritt | Info | Help parsing scdms_v1 data? | The MIDAS event format is described here:

https://daq00.triumf.ca/MidasWiki/index.php/Event_Structure

All banks are aligned on an 8-byte boundary, so that 64-bit CPUs can access them efficiently.

If you have sections of 168 or 192 bytes, this must be something else, like another bank (scaler event, slow control event, ...).

The easiest way for you is to check how these events were created using the bk_create() function.
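
To illustrate the alignment rule, here is a minimal Python sketch that rounds a bank payload size up to the next 8-byte boundary. The helper names `padded_size` and `padding_after` are made up for this example and are not part of MIDAS; whether a given file pads its banks this way depends on the bank format it was written with.

```python
def padded_size(data_size: int, alignment: int = 8) -> int:
    """Round data_size up to the next multiple of alignment (a power of two)."""
    if data_size < 0:
        raise ValueError("data_size must be non-negative")
    return (data_size + alignment - 1) & ~(alignment - 1)


def padding_after(data_size: int, alignment: int = 8) -> int:
    """Number of filler bytes between the end of the payload and the next boundary."""
    return padded_size(data_size, alignment) - data_size
```

Note that 168 and 192 are both already multiples of 8, so sections of those sizes need no padding themselves, which fits with them being complete structures rather than filler.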

Best,

Stefan |

|

2813

|

26 Aug 2024 |

Adrian Fisher | Info | Help parsing scdms_v1 data? |

| Stefan Ritt wrote: | The MIDAS event format is described here:

https://daq00.triumf.ca/MidasWiki/index.php/Event_Structure

All banks are aligned on an 8-byte boundary, so that 64-bit CPUs can access them efficiently.

If you have sections of 168 or 192 bytes, this must be something else, like another bank (scaler event, slow control event, ...).

The easiest way for you is to check how these events were created using the bk_create() function.

Best,

Stefan |

Upon further investigation, the sections I'm looking at appear to be clusters of headers for empty banks.

Thank you! |

|

2836

|

11 Sep 2024 |

Konstantin Olchanski | Info | Help parsing scdms_v1 data? | Look at the C++ implementation of the MIDAS data file reader, the code is very

simple to follow.

Depending on how old your data files are, you may run into a problem with

misaligned 32-bit data banks. The latest MIDAS creates BANK32A events where all

banks are aligned to 64 bits. The old BANK32 format had banks alternating between

aligned and misaligned. Hopefully you do not have data in the old 16-bit BANK format.
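
As a rough sketch of how a reader might tell these formats apart, the Python below unpacks an event header and classifies the bank format from the flags word of the bank header. The field order and the flag bit values are assumptions written from memory of the Event_Structure wiki page and midas.h, and the function names are invented for this example, so please verify them against the MIDAS sources before relying on them.

```python
import struct
from typing import NamedTuple

# Assumed little-endian layouts, to be verified against midas.h:
#   EVENT_HEADER: event_id (u16), trigger_mask (u16), serial_number (u32),
#                 time_stamp (u32), data_size (u32)  -> 16 bytes
#   BANK_HEADER:  data_size (u32), flags (u32)       -> 8 bytes
EVENT_HEADER = struct.Struct("<HHIII")
BANK_HEADER = struct.Struct("<II")

# Assumed flag bits in BANK_HEADER.flags
FLAG_32BIT = 1 << 4          # BANK32: 32-bit type and size fields
FLAG_64BIT_ALIGNED = 1 << 5  # BANK32A: all banks aligned to 64 bits


class EventHeader(NamedTuple):
    event_id: int
    trigger_mask: int
    serial_number: int
    time_stamp: int
    data_size: int


def parse_event_header(buf: bytes, offset: int = 0) -> EventHeader:
    """Unpack the 16-byte event header found at the given offset."""
    return EventHeader(*EVENT_HEADER.unpack_from(buf, offset))


def bank_format(flags: int) -> str:
    """Classify the bank format from the flags word of the bank header."""
    if flags & FLAG_64BIT_ALIGNED:
        return "BANK32A"
    if flags & FLAG_32BIT:
        return "BANK32"
    return "BANK"
```

A parser would read the event header first, then the 8-byte bank header that follows it, and use `bank_format` to decide how large each individual bank header is and how much padding to expect after each bank.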

If you successfully make a data format description file for MIDAS, please post

it here for the next user.

K.O.

[quote="Adrian Fisher"]Hi! I'm working on creating a ksy file to help with

parsing some data, but I'm having trouble finding some information. Right now, I

have it set up very rudimentary - it grabs the event header and then uses the

data bank size to grab the size of the data, but then I'm needing additional

padding after the data bank to reach the next event.

However, there's some irregularity in the "padding" between data banks that I

haven't been able to find any documentation for. For some reason, after the data

banks, there's sections of data of either 168 or 192 bytes, and it's seemingly

arbitrary which size is used.

I'm just wondering if anyone has any information about this so that I'd be able

to make some more progress in parsing the data.

The data I'm working with can be found at

https://github.com/det-lab/dataReaderWriter/blob/master/data/07180808_1735_F0001.mid.gz

And the ksy file that I've created so far is at

https://github.com/det-lab/dataReaderWriter/blob/master/kaitai/ksy/scdms_v1.ksy

There's also a block of data after the odb that runs for 384 bytes that I'm

unsure the purpose of, if anyone could point me to some information about that.

Thank you![/quote] |

|

1591

|

05 Jul 2019 |

Hassan | Bug Report | Header files missing when trying to compile rootana, roody and analyzer | First of all, thank you for all the assistance provided so far, especially for making

changes to the code in the CMakeList file previously for our configuration. I am not

sure whether this is an appropriate Elog for this matter, but we are getting the

following errors when trying to make rootana, roody and analyzer on our 64-bit

DAQ machine.

At the bottom of this Elog entry I have provided information about the specifics

of our DAQ machine

Below are the 2 errors we are encountering:

=============================================================================

[hh19285@it038146 ~]$ cd packages/rootana/

[hh19285@it038146 rootana]$ ls

bitbucket-pipelines.yml Dockerfile Doxygen.cxx include libAnalyzer

libMidasInterface libNetDirectory libXmlServer Makefile.old obj

README.md thisrootana.sh

doc Doxyfile examples lib libAnalyzerDisplay

libMidasServer libUnpack Makefile manalyzer old_analyzer

thisrootana.csh

[hh19285@it038146 rootana]$ cd examples/

[hh19285@it038146 examples]$ make

g++ -o TV792Histogram.o -g -O2 -Wall -Wuninitialized -DHAVE_LIBZ

-I/home/hh19285/packages/rootana/include -DHAVE_ROOT -pthread -std=c++11 -m64

-I/usr/include/root -DHAVE_ROOT_XML -DHAVE_ROOT_HTTP -DHAVE_THTTP_SERVER

-DHAVE_MIDAS -DOS_LINUX -Dextname -I/home/hh19285/packages/midas/include -c

TV792Histogram.cxx

In file included from

/home/hh19285/packages/rootana/include/TRootanaDisplay.hxx:5:0,

from

/home/hh19285/packages/rootana/include/TCanvasHandleBase.hxx:13,

from

/home/hh19285/packages/rootana/include/THistogramArrayBase.h:9,

from TV792Histogram.h:5,

from TV792Histogram.cxx:1:

/home/hh19285/packages/rootana/include/TRootanaEventLoop.hxx:24:25: fatal error:

THttpServer.h: No such file or directory

#include "THttpServer.h"

^

compilation terminated.

make: *** [TV792Histogram.o] Error 1

[hh19285@it038146 examples]$

===============================================================================

[hh19285@it038146 analyzer]$ ls

ana.cxx midas2root.cxx TAgilentHistogram.h

TCamacADCHistogram.h TL2249Histogram.h TV1190Histogram.h

TV1720Waveform.h TV1730RawWaveform.h

anaDisplay.cxx README.txt TAnaManager.cxx

TDT724Waveform.cxx TTRB3Histogram.cxx TV1720Correlations.cxx

TV1730DppWaveform.cxx TV792Histogram.cxx

Makefile root_server.cxx TAnaManager.hxx TDT724Waveform.h

TTRB3Histogram.hxx TV1720Correlations.h TV1730DppWaveform.h

TV792Histogram.h

Makefile.old TAgilentHistogram.cxx TCamacADCHistogram.cxx

TL2249Histogram.cxx TV1190Histogram.cxx TV1720Waveform.cxx

TV1730RawWaveform.cxx

[hh19285@it038146 analyzer]$ make

g++ -o TV792Histogram.o -g -O2 -Wall -Wuninitialized -DHAVE_LIBZ -I../include

-DHAVE_ROOT -pthread -std=c++11 -m64 -I/usr/include/root -DHAVE_ROOT_XML

-DHAVE_ROOT_HTTP -DHAVE_THTTP_SERVER -DHAVE_MIDAS -DOS_LINUX -Dextname

-I/home/hh19285/packages/midas/include -c TV792Histogram.cxx

In file included from TV792Histogram.cxx:1:0:

TV792Histogram.h:5:33: fatal error: THistogramArrayBase.h: No such file or directory

#include "THistogramArrayBase.h"

^

compilation terminated.

make: *** [TV792Histogram.o] Error 1

===============================================================================

[hh19285@it038146 ~]$ cd $HOME/packages

[hh19285@it038146 packages]$ git clone https://bitbucket.org/tmidas/roody

Cloning into 'roody'...

remote: Counting objects: 1115, done.

remote: Compressing objects: 100% (470/470), done.

remote: Total 1115 (delta 662), reused 1063 (delta 631)

Receiving objects: 100% (1115/1115), 1.01 MiB | 2.12 MiB/s, done.

Resolving deltas: 100% (662/662), done.

[hh19285@it038146 packages]$ cd roody

[hh19285@it038146 roody]$ make

g++ -O2 -g -Wall -Wuninitialized -fPIC -pthread -std=c++11 -m64

-I/usr/include/root -DNEED_STRLCPY -I. -Iinclude -DHAVE_NETDIRECTORY

-I/home/hh19285/packages/rootana/include -c -MM src/*.cxx > Makefile.depends1

In file included from src/Roody.cxx:42:0:

include/TPeakFindPanel.h:46:23: fatal error: TSpectrum.h: No such file or directory

#include "TSpectrum.h"

^

compilation terminated.

In file included from src/TPeakFindPanel.cxx:12:0:

include/TPeakFindPanel.h:46:23: fatal error: TSpectrum.h: No such file or directory

#include "TSpectrum.h"

^

compilation terminated.

make: [depend] Error 1 (ignored)

sed 's#^#obj/#' Makefile.depends1 > Makefile.depends2

sed 's#^obj/ # #' Makefile.depends2 > Makefile.depends

rm -f Makefile.depends1 Makefile.depends2

mkdir -p bin

mkdir -p obj

mkdir -p lib

cd doxfiles; doxygen roodydox.cfg

Warning: Tag `USE_WINDOWS_ENCODING' at line 11 of file roodydox.cfg has become

obsolete.

To avoid this warning please remove this line from your configuration file or

upgrade it using "doxygen -u"

Warning: Tag `DETAILS_AT_TOP' at line 23 of file roodydox.cfg has become obsolete.

To avoid this warning please remove this line from your configuration file or

upgrade it using "doxygen -u"

Warning: Tag `SHOW_DIRECTORIES' at line 58 of file roodydox.cfg has become obsolete.

To avoid this warning please remove this line from your configuration file or

upgrade it using "doxygen -u"

Warning: Tag `HTML_ALIGN_MEMBERS' at line 122 of file roodydox.cfg has become

obsolete.

To avoid this warning please remove this line from your configuration file or

upgrade it using "doxygen -u"

Warning: Tag `MAX_DOT_GRAPH_WIDTH' at line 220 of file roodydox.cfg has become

obsolete.

To avoid this warning please remove this line from your configuration file or

upgrade it using "doxygen -u"

Warning: Tag `MAX_DOT_GRAPH_HEIGHT' at line 221 of file roodydox.cfg has become

obsolete.

To avoid this warning please remove this line from your configuration file or

upgrade it using "doxygen -u"

Searching for include files...

Searching for example files...

Searching for images...

Searching for dot files...

Searching for msc files...

Searching for files to exclude

Searching for files to process...

Searching for files in directory /home/hh19285/packages/roody/doxfiles

Reading and parsing tag files

Parsing files

Preprocessing /home/hh19285/packages/roody/doxfiles/features.dox...

Parsing file /home/hh19285/packages/roody/doxfiles/features.dox...

Preprocessing /home/hh19285/packages/roody/doxfiles/quickstart.dox...

Parsing file /home/hh19285/packages/roody/doxfiles/quickstart.dox...

Preprocessing /home/hh19285/packages/roody/doxfiles/roody.dox...

Parsing file /home/hh19285/packages/roody/doxfiles/roody.dox...

Preprocessing /home/hh19285/packages/roody/include/MTGListTree.h...

Parsing file /home/hh19285/packages/roody/include/MTGListTree.h...

Preprocessing /home/hh19285/packages/roody/include/Roody.h...

Parsing file /home/hh19285/packages/roody/include/Roody.h...

Preprocessing /home/hh19285/packages/roody/include/RoodyXML.h...

Parsing file /home/hh19285/packages/roody/include/RoodyXML.h...

Preprocessing /home/hh19285/packages/roody/include/TGTextDialog.h...

Parsing file /home/hh19285/packages/roody/include/TGTextDialog.h...

Preprocessing /home/hh19285/packages/roody/include/TPeakFindPanel.h...

Parsing file /home/hh19285/packages/roody/include/TPeakFindPanel.h...

Preprocessing /home/hh19285/packages/roody/src/MTGListTree.cxx...

Parsing file /home/hh19285/packages/roody/src/MTGListTree.cxx...

Preprocessing /home/hh19285/packages/roody/src/Roody.cxx...

Parsing file /home/hh19285/packages/roody/src/Roody.cxx...

Preprocessing /home/hh19285/packages/roody/src/RoodyXML.cxx...

Parsing file /home/hh19285/packages/roody/src/RoodyXML.cxx...

Preprocessing /home/hh19285/packages/roody/src/TGTextDialog.cxx...

Parsing file /home/hh19285/packages/roody/src/TGTextDialog.cxx...

Building group list...

Building directory list...

Building namespace list...

Building file list...

Building class list...

Associating documentation with classes...

Computing nesting relations for classes...

Building example list...

Searching for enumerations...

Searching for documented typedefs...

Searching for members imported via using declarations...

Searching for included using directives...

Searching for documented variables...

Building interface member list...

Building member list...

Searching for friends...

Searching for documented defines...

Computing class inheritance relations...

Computing class usage relations...

Flushing cached template relations that have become invalid...

Creating members for template instances...

Computing class relations...

Add enum values to enums...

Searching for member function documentation...

Building page list...

Search for main page...

Computing page relations...

Determining the scope of groups...

Sorting lists...

Freeing entry tree

Determining which enums are documented

Computing member relations...

Building full member lists recursively...

Adding members to member groups.

Computing member references...

Inheriting documentation...

Generating disk names...

Adding source references...

Adding xrefitems...

Sorting member lists...

Computing dependencies between directories...

Generating citations page...

Counting data structures...

Resolving user defined references...

Finding anchors and sections in the documentation...

Transferring function references...

Combining using relations...

Adding members to index pages...

Generating style sheet...

Generating search indices...

Generating example documentation...

Generating file sources...

Parsing code for file features.dox...

Generating code for file MTGListTree.cxx...

Generating code for file MTGListTree.h...

Parsing code for file quickstart.dox...

Generating code for file Roody.cxx...

Parsing code for file roody.dox...

Generating code for file Roody.h...

Generating code for file RoodyXML.cxx...

Generating code for file RoodyXML.h...

Generating code for file TGTextDialog.cxx...

Generating code for file TGTextDialog.h...

Generating code for file TPeakFindPanel.h...

Generating file documentation...

Generating docs for file features.dox...

Generating docs for file MTGListTree.cxx...

Generating docs for file MTGListTree.h...

Generating docs for file quickstart.dox...

Generating docs for file Roody.cxx...

Generating docs for file roody.dox...

Generating docs for file Roody.h...

Generating docs for file RoodyXML.cxx...

Generating docs for file RoodyXML.h...

Generating docs for file TGTextDialog.cxx...

Generating docs for file TGTextDialog.h...

Generating docs for file TPeakFindPanel.h...

Generating page documentation...

Generating docs for page features...

Generating docs for page quickstart...

Generating group documentation...

Generating class documentation...

Generating docs for compound MemDebug...

Generating docs for compound MTGListTree...

Generating docs for compound OptStatMenu...

Generating docs for compound PadObject...

Generating docs for compound PadObjectVec...

Generating docs for compound Roody...

Generating docs for compound RoodyXML...

Generating docs for compound TGTextDialog...

Generating docs for compound TPeakFindPanel...

Generating namespace index...

Generating graph info page...

Generating directory documentation...

Generating index page...

Generating page index...

Generating module index...

Generating namespace index...

Generating namespace member index...

Generating annotated compound index...

Generating alphabetical compound index...

Generating hierarchical class index...

Generating member index...

Generating file index...

Generating file member index...

Generating example index...

finalizing index lists...

lookup cache used 433/65536 hits=3757 misses=436

finished...

g++ -O2 -g -Wall -Wuninitialized -fPIC -pthread -std=c++11 -m64

-I/usr/include/root -DNEED_STRLCPY -I. -Iinclude -DHAVE_NETDIRECTORY

-I/home/hh19285/packages/rootana/include -c -o obj/main.o src/main.cxx

g++ -O2 -g -Wall -Wuninitialized -fPIC -pthread -std=c++11 -m64

-I/usr/include/root -DNEED_STRLCPY -I. -Iinclude -DHAVE_NETDIRECTORY

-I/home/hh19285/packages/rootana/include -c -o obj/DataSourceTDirectory.o

src/DataSourceTDirectory.cxx

g++ -O2 -g -Wall -Wuninitialized -fPIC -pthread -std=c++11 -m64

-I/usr/include/root -DNEED_STRLCPY -I. -Iinclude -DHAVE_NETDIRECTORY

-I/home/hh19285/packages/rootana/include -c -o obj/Roody.o src/Roody.cxx

In file included from src/Roody.cxx:42:0:

include/TPeakFindPanel.h:46:23: fatal error: TSpectrum.h: No such file or directory

#include "TSpectrum.h"

^

compilation terminated.

make: *** [obj/Roody.o] Error 1

================================================================================

For your reference here is the info about our DAQ machine

[hh19285@it038146 bin]$ uname -a

Linux it038146.users.bris.ac.uk 3.10.0-957.21.2.el7.x86_64 #1 SMP Wed Jun 5

14:26:44 UTC 2019 x86_64 x86_64

x86_64 GNU/Linux

[hh19285@it038146 bin]$ gcc -v

Using built-in specs.

COLLECT_GCC=gcc

COLLECT_LTO_WRAPPER=/usr/libexec/gcc/x86_64-redhat-linux/4.8.5/lto-wrapper

Target: x86_64-redhat-linux

Configured with: ../configure --prefix=/usr --mandir=/usr/share/man

--infodir=/usr/share/info

--with-bugurl=http://bugzilla.redhat.com/bugzilla --enable-bootstrap

--enable-shared --enable-threads=posix

--enable-checking=release --with-system-zlib --enable-__cxa_atexit

--disable-libunwind-exceptions

--enable-gnu-unique-object --enable-linker-build-id --with-linker-hash-style=gnu

--enable-languages=c,c++,objc,obj-c++,java,fortran,ada,go,lto --enable-plugin

--enable-initfini-array

--disable-libgcj

--with-isl=/builddir/build/BUILD/gcc-4.8.5-20150702/obj-x86_64-redhat-linux/isl-install

--with-cloog=/builddir/build/BUILD/gcc-4.8.5-20150702/obj-x86_64-redhat-linux/cloog-install

--enable-gnu-indirect-function --with-tune=generic --with-arch_32=x86-64

--build=x86_64-redhat-linux

Thread model: posix

gcc version 4.8.5 20150623 (Red Hat 4.8.5-36) (GCC)

[hh19285@it038146 bin]$ lsb_release -a

LSB Version: :core-4.1-amd64:core-4.1-noarch

Distributor ID: CentOS

Description: CentOS Linux release 7.6.1810 (Core)

Release: 7.6.1810

Codename: Core |

|

1592

|

05 Jul 2019 |

Konstantin Olchanski | Bug Report | Header files missing when trying to compile rootana, roody and analyzer | > /home/hh19285/packages/rootana/include/TRootanaEventLoop.hxx:24:25: fatal error:

> THttpServer.h: No such file or directory

> #include "THttpServer.h"

>

> include/TPeakFindPanel.h:46:23: fatal error: TSpectrum.h: No such file or directory

> #include "TSpectrum.h"

>

Your ROOT is strange: it is missing some standard features and is installed in an unusual place, /usr/include/root.

Did you install ROOT from the EPEL RPM packages? In the past I have seen this ROOT built very strangely, with some standard features disabled for no obvious

reason.

For this reason, I recommend that you install ROOT from the binary distribution at root.cern.ch or build it from source.

For more debugging, please post the output of:

which root-config

root-config --version

root-config --features

root-config --cflags

For reference, here is my output for a typical CentOS7 machine:

daq16:~$ which root-config

/daq/daqshare/olchansk/root/root_v6.12.04_el74_64/bin/root-config

daq16:~$ root-config --version

6.12/04

daq16:~$ root-config --features

asimage astiff builtin_afterimage builtin_ftgl builtin_gl2ps builtin_glew builtin_llvm builtin_lz4 builtin_unuran cling cxx11 exceptions explicitlink fftw3 gdmlgenvector

http imt mathmore minuit2 opengl pch pgsql python roofit shared sqlite ssl thread tmva x11 xft xml

daq16:~$ root-config --cflags

-pthread -std=c++11 -m64 -I/daq/daqshare/olchansk/root/root_v6.12.04_el74_64/include

The important one is --features: check that "http" and "xml" are enabled. "spectrum" used to be an optional feature; I do not think it can be disabled these

days, so your missing "TSpectrum.h" is strange. (But I suspect the EPEL ROOT RPMs are just built wrong.)
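As a rough illustration of the feature check described above, here is a minimal Python sketch. It only parses the text printed by "root-config --features" and reports which of the features named in this thread ("http" and "xml") are absent. The function names and the REQUIRED_FEATURES set are made up for this example and are not part of ROOT or rootana.

```python
import subprocess

# Features this thread names as needed; adjust for your own build (assumption).
REQUIRED_FEATURES = ("http", "xml")


def missing_features(features_output, required=REQUIRED_FEATURES):
    """Return the required features absent from `root-config --features` output."""
    present = set(features_output.split())
    return sorted(set(required) - present)


def query_root_features():
    """Run the root-config found in PATH and return its feature string."""
    result = subprocess.run(
        ["root-config", "--features"],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout
```

On a machine with ROOT in the PATH, missing_features(query_root_features()) should return an empty list when both features are enabled.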

K.O. |

|

1607

|

10 Jul 2019 |

Hassan | Bug Report | Header files missing when trying to compile rootana, roody and analyzer | Hi, we have now done a clean install of ROOT and, after some dynamic linking, we have been able to build rootana and the analyzer. However, we get an error when we try to run the analyzer.

--------------------------------------------------------------------------------------------------------------------------------------------------

First of all, here's the information requested:

[hh19285@it038146 ~]$ which root-config

/software/root/v6.06.08/bin/root-config

[hh19285@it038146 ~]$ root-config --version

6.06/08

[hh19285@it038146 ~]$ root-config --features

asimage astiff builtin_afterimage builtin_fftw3 builtin_ftgl builtin_freetype builtin_glew builtin_pcre builtin_lzma builtin_davix builtin_gsl builtin_cfitsio builtin_xrootd

builtin_llvm cxx11 cling davix exceptions explicitlink fftw3 fitsio fortran gdml genvector http krb5 mathmore memstat minuit2 opengl pch python roofit shadowpw shared ssl

table thread tmva unuran vc vdt xft xml x11 xrootd

[hh19285@it038146 ~]$ root-config --cflags

-pthread -std=c++11 -Wno-deprecated-declarations -m64 -I/software/root/v6.06.08/include

------------------------------------------------------------------------------------------------------------------------------------------------------

[hh19285@it038146 ~]$ cd ~/online/build/

[hh19285@it038146 build]$ ls

analyzer CMakeCache.txt CMakeFiles cmake_install.cmake data.txt d.txt experimentaldata frontend ft232h.py f.txt iptable_state_2july19.txt Makefile midas.log

[hh19285@it038146 build]$ make

[ 71%] Built target analyzer

[100%] Built target frontend

[hh19285@it038146 build]$ ./analyzer

Warning in <TClassTable::Add>: class TApplication already in TClassTable

Warning in <TClassTable::Add>: class TApplicationImp already in TClassTable

Warning in <TClassTable::Add>: class TAttFill already in TClassTable

Warning in <TClassTable::Add>: class TAttLine already in TClassTable

Warning in <TClassTable::Add>: class TAttMarker already in TClassTable

Warning in <TClassTable::Add>: class TAttPad already in TClassTable

Warning in <TClassTable::Add>: class TAttAxis already in TClassTable

Warning in <TClassTable::Add>: class TAttText already in TClassTable

Warning in <TClassTable::Add>: class TAtt3D already in TClassTable

Warning in <TClassTable::Add>: class TAttBBox already in TClassTable

Warning in <TClassTable::Add>: class TAttBBox2D already in TClassTable

Warning in <TClassTable::Add>: class TBenchmark already in TClassTable

Warning in <TClassTable::Add>: class TBrowser already in TClassTable

Warning in <TClassTable::Add>: class TBrowserImp already in TClassTable

Warning in <TClassTable::Add>: class TBuffer already in TClassTable

Warning in <TClassTable::Add>: class TRootIOCtor already in TClassTable

Warning in <TClassTable::Add>: class TCanvasImp already in TClassTable

Warning in <TClassTable::Add>: class TColor already in TClassTable

Warning in <TClassTable::Add>: class TColorGradient already in TClassTable

Warning in <TClassTable::Add>: class TLinearGradient already in TClassTable

Warning in <TClassTable::Add>: class TRadialGradient already in TClassTable

Warning in <TClassTable::Add>: class TContextMenu already in TClassTable

Warning in <TClassTable::Add>: class TContextMenuImp already in TClassTable

Warning in <TClassTable::Add>: class TControlBarImp already in TClassTable

Warning in <TClassTable::Add>: class TInspectorImp already in TClassTable

Warning in <TClassTable::Add>: class TDatime already in TClassTable

Warning in <TClassTable::Add>: class TDirectory already in TClassTable

Warning in <TClassTable::Add>: class TEnv already in TClassTable

Warning in <TClassTable::Add>: class TEnvRec already in TClassTable

Warning in <TClassTable::Add>: class TFileHandler already in TClassTable

Warning in <TClassTable::Add>: class TGuiFactory already in TClassTable

Warning in <TClassTable::Add>: class TStyle already in TClassTable

Warning in <TClassTable::Add>: class TVirtualX already in TClassTable

Warning in <TClassTable::Add>: class TVirtualPad already in TClassTable

Warning in <TClassTable::Add>: class TVirtualViewer3D already in TClassTable

Warning in <TClassTable::Add>: class TBuffer3D already in TClassTable

Warning in <TClassTable::Add>: class TGLManager already in TClassTable

Warning in <TClassTable::Add>: class TVirtualGLPainter already in TClassTable

Warning in <TClassTable::Add>: class TVirtualGLManip already in TClassTable

Warning in <TClassTable::Add>: class TVirtualPS already in TClassTable

Warning in <TClassTable::Add>: class TGLPaintDevice already in TClassTable

Warning in <TClassTable::Add>: class TVirtualPadPainter already in TClassTable

Warning in <TClassTable::Add>: class TVirtualPadEditor already in TClassTable

Warning in <TClassTable::Add>: class TVirtualFFT already in TClassTable

Warning in <TClassTable::Add>: class __gnu_cxx::__normal_iterator<char*,string> already in TClassTable

Warning in <TClassTable::Add>: class __gnu_cxx::__normal_iterator<const char*,string> already in TClassTable

Warning in <TClassTable::Add>: class __gnu_cxx::__normal_iterator<string*,vector<string> > already in TClassTable

Warning in <TClassTable::Add>: class __gnu_cxx::__normal_iterator<const string*,vector<string> > already in TClassTable

Warning in <TClassTable::Add>: class reverse_iterator<__gnu_cxx::__normal_iterator<string*,vector<string> > > already in TClassTable

Warning in <TClassTable::Add>: class __gnu_cxx::__normal_iterator<TString*,vector<TString> > already in TClassTable

Warning in <TClassTable::Add>: class __gnu_cxx::__normal_iterator<const TString*,vector<TString> > already in TClassTable

Warning in <TClassTable::Add>: class reverse_iterator<__gnu_cxx::__normal_iterator<TString*,vector<TString> > > already in TClassTable

Warning in <TClassTable::Add>: class FileStat_t already in TClassTable

Warning in <TClassTable::Add>: class UserGroup_t already in TClassTable

Warning in <TClassTable::Add>: class SysInfo_t already in TClassTable

Warning in <TClassTable::Add>: class CpuInfo_t already in TClassTable

Warning in <TClassTable::Add>: class MemInfo_t already in TClassTable

Warning in <TClassTable::Add>: class ProcInfo_t already in TClassTable

Warning in <TClassTable::Add>: class RedirectHandle_t already in TClassTable

Warning in <TClassTable::Add>: class TExec already in TClassTable

Warning in <TClassTable::Add>: class TFolder already in TClassTable

Warning in <TClassTable::Add>: class TMacro already in TClassTable

Warning in <TClassTable::Add>: class TMD5 already in TClassTable

Warning in <TClassTable::Add>: class TMemberInspector already in TClassTable

Warning in <TClassTable::Add>: class TMessageHandler already in TClassTable

Warning in <TClassTable::Add>: class TNamed already in TClassTable

Warning in <TClassTable::Add>: class TObjString already in TClassTable

Warning in <TClassTable::Add>: class TObject already in TClassTable

Warning in <TClassTable::Add>: class TRemoteObject already in TClassTable

Warning in <TClassTable::Add>: class TPoint already in TClassTable

Warning in <TClassTable::Add>: class TProcessID already in TClassTable

Warning in <TClassTable::Add>: class TProcessUUID already in TClassTable

Warning in <TClassTable::Add>: class TProcessEventTimer already in TClassTable

Warning in <TClassTable::Add>: class TRef already in TClassTable

Warning in <TClassTable::Add>: class TROOT already in TClassTable

Warning in <TClassTable::Add>: class TRegexp already in TClassTable

Warning in <TClassTable::Add>: class TPRegexp already in TClassTable

Warning in <TClassTable::Add>: class TPMERegexp already in TClassTable

Warning in <TClassTable::Add>: class TRefCnt already in TClassTable

Warning in <TClassTable::Add>: class TSignalHandler already in TClassTable

Warning in <TClassTable::Add>: class TStdExceptionHandler already in TClassTable

Warning in <TClassTable::Add>: class TStopwatch already in TClassTable

Warning in <TClassTable::Add>: class TStorage already in TClassTable

Warning in <TClassTable::Add>: class TString already in TClassTable

Warning in <TClassTable::Add>: class TStringLong already in TClassTable

Warning in <TClassTable::Add>: class TStringToken already in TClassTable

Warning in <TClassTable::Add>: class TSubString already in TClassTable

Warning in <TClassTable::Add>: class TSysEvtHandler already in TClassTable

Warning in <TClassTable::Add>: class TSystem already in TClassTable

Warning in <TClassTable::Add>: class TSystemFile already in TClassTable

Warning in <TClassTable::Add>: class TSystemDirectory already in TClassTable

Warning in <TClassTable::Add>: class TTask already in TClassTable

Warning in <TClassTable::Add>: class TTime already in TClassTable

Warning in <TClassTable::Add>: class TTimer already in TClassTable

Warning in <TClassTable::Add>: class TQObject already in TClassTable

Warning in <TClassTable::Add>: class TQObjSender already in TClassTable

Warning in <TClassTable::Add>: class TQClass already in TClassTable

Warning in <TClassTable::Add>: class TQConnection already in TClassTable

Warning in <TClassTable::Add>: class TQCommand already in TClassTable

Warning in <TClassTable::Add>: class TQUndoManager already in TClassTable

Warning in <TClassTable::Add>: class TUUID already in TClassTable

Warning in <TClassTable::Add>: class TPluginHandler already in TClassTable

Warning in <TClassTable::Add>: class TPluginManager already in TClassTable

Warning in <TClassTable::Add>: class Event_t already in TClassTable

Warning in <TClassTable::Add>: class SetWindowAttributes_t already in TClassTable

Warning in <TClassTable::Add>: class WindowAttributes_t already in TClassTable

Warning in <TClassTable::Add>: class GCValues_t already in TClassTable

Warning in <TClassTable::Add>: class ColorStruct_t already in TClassTable

Warning in <TClassTable::Add>: class PictureAttributes_t already in TClassTable

Warning in <TClassTable::Add>: class Segment_t already in TClassTable

Warning in <TClassTable::Add>: class Point_t already in TClassTable

Warning in <TClassTable::Add>: class Rectangle_t already in TClassTable

Warning in <TClassTable::Add>: class timespec already in TClassTable

Warning in <TClassTable::Add>: class TTimeStamp already in TClassTable

Warning in <TClassTable::Add>: class TFileInfo already in TClassTable

Warning in <TClassTable::Add>: class TFileInfoMeta already in TClassTable

Warning in <TClassTable::Add>: class TFileCollection already in TClassTable

Warning in <TClassTable::Add>: class TVirtualAuth already in TClassTable

Warning in <TClassTable::Add>: class TVirtualMutex already in TClassTable

Warning in <TClassTable::Add>: class TLockGuard already in TClassTable

Warning in <TClassTable::Add>: class TRedirectOutputGuard already in TClassTable

Warning in <TClassTable::Add>: class TVirtualPerfStats already in TClassTable

Warning in <TClassTable::Add>: class TVirtualMonitoringWriter already in TClassTable

Warning in <TClassTable::Add>: class TVirtualMonitoringReader already in TClassTable

Warning in <TClassTable::Add>: class TObjectSpy already in TClassTable

Warning in <TClassTable::Add>: class TObjectRefSpy already in TClassTable

Warning in <TClassTable::Add>: class TUri already in TClassTable

Warning in <TClassTable::Add>: class TUrl already in TClassTable

Warning in <TClassTable::Add>: class TInetAddress already in TClassTable

Warning in <TClassTable::Add>: class TVirtualTableInterface already in TClassTable

Warning in <TClassTable::Add>: class TBase64 already in TClassTable

Warning in <TClassTable::Add>: class TParameter<bool> already in TClassTable

Warning in <TClassTable::Add>: class TParameter<float> already in TClassTable

Warning in <TClassTable::Add>: class TParameter<double> already in TClassTable

Warning in <TClassTable::Add>: class TParameter<int> already in TClassTable

Warning in <TClassTable::Add>: class TParameter<long> already in TClassTable

Warning in <TClassTable::Add>: class TParameter<Long64_t> already in TClassTable

Warning in <TClassTable::Add>: class TArray already in TClassTable

Warning in <TClassTable::Add>: class TArrayC already in TClassTable

Warning in <TClassTable::Add>: class TArrayD already in TClassTable

Warning in <TClassTable::Add>: class TArrayF already in TClassTable

Warning in <TClassTable::Add>: class TArrayI already in TClassTable

Warning in <TClassTable::Add>: class TArrayL already in TClassTable

Warning in <TClassTable::Add>: class TArrayL64 already in TClassTable

Warning in <TClassTable::Add>: class TArrayS already in TClassTable

Warning in <TClassTable::Add>: class TBits already in TClassTable

Warning in <TClassTable::Add>: class TCollection already in TClassTable

Warning in <TClassTable::Add>: class TBtree already in TClassTable

Warning in <TClassTable::Add>: class TBtreeIter already in TClassTable

Warning in <TClassTable::Add>: class TClassTable already in TClassTable

Warning in <TClassTable::Add>: class TClonesArray already in TClassTable

Warning in <TClassTable::Add>: class THashTable already in TClassTable

Warning in <TClassTable::Add>: class THashTableIter already in TClassTable

Warning in <TClassTable::Add>: class TIter already in TClassTable

Warning in <TClassTable::Add>: class TIterator already in TClassTable

Warning in <TClassTable::Add>: class TList already in TClassTable

Warning in <TClassTable::Add>: class TListIter already in TClassTable

Warning in <TClassTable::Add>: class THashList already in TClassTable

Warning in <TClassTable::Add>: class TMap already in TClassTable

Warning in <TClassTable::Add>: class TMapIter already in TClassTable

Warning in <TClassTable::Add>: class TPair already in TClassTable

Warning in <TClassTable::Add>: class TObjArray already in TClassTable

Warning in <TClassTable::Add>: class TObjArrayIter already in TClassTable

Warning in <TClassTable::Add>: class TObjectTable already in TClassTable

Warning in <TClassTable::Add>: class TOrdCollection already in TClassTable

Warning in <TClassTable::Add>: class TOrdCollectionIter already in TClassTable

Warning in <TClassTable::Add>: class TSeqCollection already in TClassTable

Warning in <TClassTable::Add>: class TSortedList already in TClassTable

Warning in <TClassTable::Add>: class TExMap already in TClassTable

Warning in <TClassTable::Add>: class TExMapIter already in TClassTable

Warning in <TClassTable::Add>: class TRefArray already in TClassTable

Warning in <TClassTable::Add>: class TRefArrayIter already in TClassTable

Warning in <TClassTable::Add>: class TRefTable already in TClassTable

Warning in <TClassTable::Add>: class TVirtualCollectionProxy already in TClassTable

Warning in <TClassTable::Add>: class __gnu_cxx::__normal_iterator<int*,vector<int> > already in TClassTable

Warning in <TClassTable::Add>: class __gnu_cxx::__normal_iterator<const int*,vector<int> > already in TClassTable

Warning in <TClassTable::Add>: class reverse_iterator<__gnu_cxx::__normal_iterator<int*,vector<int> > > already in TClassTable

Warning in <TClassTable::Add>: class TBits::TReference already in TClassTable

Warning in <TClassTable::Add>: class TBaseClass already in TClassTable

Warning in <TClassTable::Add>: class TClass already in TClassTable

Warning in <TClassTable::Add>: class TClassStreamer already in TClassTable

Warning in <TClassTable::Add>: class TMemberStreamer already in TClassTable

Warning in <TClassTable::Add>: class TDictAttributeMap already in TClassTable

Warning in <TClassTable::Add>: class TClassRef already in TClassTable

Warning in <TClassTable::Add>: class TClassGenerator already in TClassTable

Warning in <TClassTable::Add>: class TDataMember already in TClassTable

Warning in <TClassTable::Add>: class TOptionListItem already in TClassTable

Warning in <TClassTable::Add>: class TDataType already in TClassTable

Warning in <TClassTable::Add>: class TDictionary already in TClassTable

Warning in <TClassTable::Add>: class TEnumConstant already in TClassTable

Warning in <TClassTable::Add>: class TEnum already in TClassTable

Warning in <TClassTable::Add>: class TFunction already in TClassTable

Warning in <TClassTable::Add>: class TFunctionTemplate already in TClassTable

Warning in <TClassTable::Add>: class ROOT::TSchemaRule already in TClassTable

Warning in <TClassTable::Add>: class ROOT::TSchemaRule::TSources already in TClassTable

Warning in <TClassTable::Add>: class ROOT::Detail::TSchemaRuleSet already in TClassTable

Warning in <TClassTable::Add>: class TGlobal already in TClassTable

Warning in <TClassTable::Add>: class TMethod already in TClassTable

Warning in <TClassTable::Add>: class TMethodArg already in TClassTable

Warning in <TClassTable::Add>: class TMethodCall already in TClassTable

Warning in <TClassTable::Add>: class TInterpreter already in TClassTable

Warning in <TClassTable::Add>: class TClassMenuItem already in TClassTable

Warning in <TClassTable::Add>: class TVirtualIsAProxy already in TClassTable

Warning in <TClassTable::Add>: class TVirtualStreamerInfo already in TClassTable

Warning in <TClassTable::Add>: class TIsAProxy already in TClassTable

Warning in <TClassTable::Add>: class TProtoClass already in TClassTable

Warning in <TClassTable::Add>: class TProtoClass::TProtoRealData already in TClassTable

Warning in <TClassTable::Add>: class TRealData already in TClassTable

Warning in <TClassTable::Add>: class TStreamerArtificial already in TClassTable

Warning in <TClassTable::Add>: class TStreamerBase already in TClassTable

Warning in <TClassTable::Add>: class TStreamerBasicPointer already in TClassTable

Warning in <TClassTable::Add>: class TStreamerLoop already in TClassTable

Warning in <TClassTable::Add>: class TStreamerBasicType already in TClassTable

Warning in <TClassTable::Add>: class TStreamerObject already in TClassTable

Warning in <TClassTable::Add>: class TStreamerObjectAny already in TClassTable

Warning in <TClassTable::Add>: class TStreamerObjectPointer already in TClassTable

Warning in <TClassTable::Add>: class TStreamerObjectAnyPointer already in TClassTable

Warning in <TClassTable::Add>: class TStreamerString already in TClassTable

Warning in <TClassTable::Add>: class TStreamerSTL already in TClassTable

Warning in <TClassTable::Add>: class TStreamerSTLstring already in TClassTable

Warning in <TClassTable::Add>: class TStreamerElement already in TClassTable

Warning in <TClassTable::Add>: class TToggle already in TClassTable

Warning in <TClassTable::Add>: class TToggleGroup already in TClassTable

Warning in <TClassTable::Add>: class TFileMergeInfo already in TClassTable

Warning in <TClassTable::Add>: class TListOfFunctions already in TClassTable

Warning in <TClassTable::Add>: class TListOfFunctionsIter already in TClassTable

Warning in <TClassTable::Add>: class TListOfFunctionTemplates already in TClassTable

Warning in <TClassTable::Add>: class TListOfDataMembers already in TClassTable

Warning in <TClassTable::Add>: class TListOfEnums already in TClassTable

Warning in <TClassTable::Add>: class TListOfEnumsWithLock already in TClassTable

Warning in <TClassTable::Add>: class TListOfEnumsWithLockIter already in TClassTable

Warning in <TClassTable::Add>: class TUnixSystem already in TClassTable

Warning in <TClassTable::Add>: class TThread already in TClassTable

Warning in <TClassTable::Add>: class TConditionImp already in TClassTable

Warning in <TClassTable::Add>: class TCondition already in TClassTable

Warning in <TClassTable::Add>: class TMutex already in TClassTable

Warning in <TClassTable::Add>: class TMutexImp already in TClassTable

Warning in <TClassTable::Add>: class TPosixCondition already in TClassTable

Warning in <TClassTable::Add>: class TPosixMutex already in TClassTable

Warning in <TClassTable::Add>: class TPosixThread already in TClassTable

Warning in <TClassTable::Add>: class TPosixThreadFactory already in TClassTable

Warning in <TClassTable::Add>: class TSemaphore already in TClassTable

Warning in <TClassTable::Add>: class TThreadFactory already in TClassTable

Warning in <TClassTable::Add>: class TThreadImp already in TClassTable

Warning in <TClassTable::Add>: class TRWLock already in TClassTable

Warning in <TClassTable::Add>: class TAtomicCount already in TClassTable

Warning in <TClassTable::Add>: class TBufferFile already in TClassTable

Warning in <TClassTable::Add>: class TDirectoryFile already in TClassTable

Warning in <TClassTable::Add>: class TFile already in TClassTable

Warning in <TClassTable::Add>: class TFileCacheRead already in TClassTable

Warning in <TClassTable::Add>: class TFileCacheWrite already in TClassTable

Warning in <TClassTable::Add>: class TFileMerger already in TClassTable

Warning in <TClassTable::Add>: class TFree already in TClassTable

Warning in <TClassTable::Add>: class TKey already in TClassTable

Warning in <TClassTable::Add>: class TKeyMapFile already in TClassTable

Warning in <TClassTable::Add>: class TMapFile already in TClassTable

Warning in <TClassTable::Add>: class TMapRec already in TClassTable

Warning in <TClassTable::Add>: class TMemFile already in TClassTable

Warning in <TClassTable::Add>: class TArchiveFile already in TClassTable

Warning in <TClassTable::Add>: class TArchiveMember already in TClassTable

Warning in <TClassTable::Add>: class TZIPFile already in TClassTable

Warning in <TClassTable::Add>: class TZIPMember already in TClassTable

Warning in <TClassTable::Add>: class TLockFile already in TClassTable

Warning in <TClassTable::Add>: class TStreamerInfo already in TClassTable

Warning in <TClassTable::Add>: class TCollectionProxyFactory already in TClassTable

Warning in <TClassTable::Add>: class TEmulatedCollectionProxy already in TClassTable

Warning in <TClassTable::Add>: class TEmulatedMapProxy already in TClassTable

Warning in <TClassTable::Add>: class TGenCollectionProxy already in TClassTable

Warning in <TClassTable::Add>: class TGenCollectionProxy::Value already in TClassTable

Warning in <TClassTable::Add>: class TGenCollectionProxy::Method already in TClassTable

Warning in <TClassTable::Add>: class TCollectionStreamer already in TClassTable

Warning in <TClassTable::Add>: class TCollectionClassStreamer already in TClassTable

Warning in <TClassTable::Add>: class TCollectionMemberStreamer already in TClassTable

Warning in <TClassTable::Add>: class TVirtualObject already in TClassTable

Warning in <TClassTable::Add>: class TVirtualArray already in TClassTable

Warning in <TClassTable::Add>: class TFPBlock already in TClassTable

Warning in <TClassTable::Add>: class TFilePrefetch already in TClassTable

Warning in <TClassTable::Add>: class TStreamerInfoActions::TConfiguredAction already in TClassTable

Warning in <TClassTable::Add>: class TStreamerInfoActions::TActionSequence already in TClassTable

Warning in <TClassTable::Add>: class TStreamerInfoActions::TConfiguration already in TClassTable

*** Break *** segmentation violation

===========================================================

There was a crash.

This is the entire stack trace of all threads:

===========================================================

#0 0x00007f7e8c322bbc in waitpid () from /lib64/libc.so.6

#1 0x00007f7e8c2a0ea2 in do_system () from /lib64/libc.so.6

#2 0x00007f7e911b21a4 in TUnixSystem::StackTrace() () from /usr/lib64/root/libCore.so.6.16

#3 0x00007f7e911b3fec in TUnixSystem::DispatchSignals(ESignals) () from /usr/lib64/root/libCore.so.6.16

#4 <signal handler called>

#5 0x00007f7e8c3cca81 in __strlen_sse2_pminub () from /lib64/libc.so.6

#6 0x00007f7e853e0c27 in TCling::TCling(char const*, char const*) () from /software/root/v6.06.08/lib/libCling.so

#7 0x00007f7e853e179e in CreateInterpreter () from /software/root/v6.06.08/lib/libCling.so

#8 0x00007f7e90feb1fc in TROOT::InitInterpreter() () from /usr/lib64/root/libCore.so.6.16

#9 0x00007f7e90feb806 in ROOT::Internal::GetROOT2() () from /usr/lib64/root/libCore.so.6.16

#10 0x00007f7e9107b32d in TApplication::TApplication(char const*, int*, char**, void*, int) () from /usr/lib64/root/libCore.so.6.16

#11 0x00007f7e8e71bdf4 in TRint::TRint(char const*, int*, char**, void*, int, bool) () from /usr/lib64/root/libRint.so.6.16

#12 0x000000000040d362 in main (argc=1, argv=0x7fff4d1d53b8) at /home/hh19285/packages/midas1/src/mana.cxx:5349

===========================================================

The lines below might hint at the cause of the crash.

You may get help by asking at the ROOT forum http://root.cern.ch/forum

Only if you are really convinced it is a bug in ROOT then please submit a

report at http://root.cern.ch/bugs Please post the ENTIRE stack trace

from above as an attachment in addition to anything else

that might help us fixing this issue.

===========================================================

#5 0x00007f7e8c3cca81 in __strlen_sse2_pminub () from /lib64/libc.so.6

#6 0x00007f7e853e0c27 in TCling::TCling(char const*, char const*) () from /software/root/v6.06.08/lib/libCling.so

#7 0x00007f7e853e179e in CreateInterpreter () from /software/root/v6.06.08/lib/libCling.so

#8 0x00007f7e90feb1fc in TROOT::InitInterpreter() () from /usr/lib64/root/libCore.so.6.16

#9 0x00007f7e90feb806 in ROOT::Internal::GetROOT2() () from /usr/lib64/root/libCore.so.6.16

#10 0x00007f7e9107b32d in TApplication::TApplication(char const*, int*, char**, void*, int) () from /usr/lib64/root/libCore.so.6.16

#11 0x00007f7e8e71bdf4 in TRint::TRint(char const*, int*, char**, void*, int, bool) () from /usr/lib64/root/libRint.so.6.16

#12 0x000000000040d362 in main (argc=1, argv=0x7fff4d1d53b8) at /home/hh19285/packages/midas1/src/mana.cxx:5349

=========================================================== |

|

1611

|

10 Jul 2019 |

Konstantin Olchanski | Bug Report | Header files missing when trying to compile rootana, roody and analyzer | > [hh19285@it038146 ~]$ which root-config

> /software/root/v6.06.08/bin/root-config

> [hh19285@it038146 ~]$ root-config --cflags

> -pthread -std=c++11 -Wno-deprecated-declarations -m64 -I/software/root/v6.06.08/include

>

> [hh19285@it038146 build]$ ./analyzer

> Warning in <TClassTable::Add>: class TApplication already in TClassTable

> ...

> ...

> #2 0x00007f7e911b21a4 in TUnixSystem::StackTrace() () from /usr/lib64/root/libCore.so.6.16

You have a mismatch. Your root-config thinks ROOT is installed in /software/..., but the crash

dump says your ROOT libraries are in /usr/lib64/root (not in /software/...).

You can confirm that you are linking against the correct ROOT by running make with VERBOSE=1 (in the cmake build directory)

and examining the linker command line to see what library link path is specified for ROOT.

You can confirm which ROOT library is actually used when you run the analyzer

by running "ldd ./analyzer". You should see the same library paths as specified

to the linker (/software/.../lib*.so). A mismatch can be caused by the setting of LD_LIBRARY_PATH

and by 100 other reasons.
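The ldd comparison above can be sketched in Python as follows. This is only an illustration under stated assumptions: it takes the text printed by "ldd ./analyzer" and the directory printed by "root-config --libdir", and lists ROOT libraries that were resolved from some other directory. The function name and the ROOT_LIB_PREFIXES tuple (taken from the libraries visible in the stack trace) are hypothetical and would need extending for a real check.

```python
import os

# Library name prefixes treated as "ROOT libraries" (assumption, taken from
# the stack trace in this thread; extend as needed).
ROOT_LIB_PREFIXES = ("libCore.so", "libRint.so", "libCling.so")


def stray_root_libs(ldd_output, expected_libdir, prefixes=ROOT_LIB_PREFIXES):
    """Return (name, path) pairs for ROOT libraries resolved outside expected_libdir."""
    expected = os.path.normpath(expected_libdir)
    stray = []
    for line in ldd_output.splitlines():
        # Typical ldd line: "libCore.so.6.16 => /usr/lib64/root/libCore.so.6.16 (0x...)"
        name, arrow, rest = line.partition("=>")
        fields = rest.split()
        if not arrow or not fields or not fields[0].startswith("/"):
            continue  # skips entries such as linux-vdso or "not found"
        name = name.strip()
        path = os.path.normpath(fields[0])
        if name.startswith(prefixes) and os.path.dirname(path) != expected:
            stray.append((name, path))
    return stray
```

Feeding it the output of "ldd ./analyzer" together with the "root-config --libdir" directory should give an empty list when the analyzer picks up the intended ROOT. Any entry in the result points at a library loaded from a different installation.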

I suggest that you remove the "wrong" ROOT before you continue debugging this.

K.O. |

|