| ID | Date | Author | Topic | Subject |

| 2507 | 10 May 2023 |

Lukas Gerritzen | Suggestion | Desktop notifications for messages | It would be nice to have MIDAS notifications pop up outside of the browser window.

To enable this myself, I hijacked the speech synthesis and added the following to mhttpd_speak_now(text) inside mhttpd.js:

```javascript
let notification = new Notification('MIDAS Message', {
  body: text,
});
```

I couldn't request permission for notifications here, because Firefox threw the error "The Notification permission may only be requested from inside a short running user-generated event handler". Therefore, I added a button to config.html:

```html
<button class="mbutton" onclick="Notification.requestPermission()">Request notification permission</button>
```

There might be a more elegant solution to request the permission. |

| 2510 | 10 May 2023 |

Stefan Ritt | Suggestion | Desktop notifications for messages |

Lukas Gerritzen wrote:

> It would be nice to have MIDAS notifications pop up outside of the browser window.

There are certainly dozens of people who say "I don't like pop-up windows all the time". So this has to come with a switch on the config page to turn it off. If there is an "allow pop-up windows" switch, then the other faction of people using Edge/Chrome/Safari/Opera will say "it's not working on my specific browser, version x.y.z". So I'm only willing to add this feature if we are sure it's a standard thing that works in most environments.

Best,

Stefan |

| 2512 | 10 May 2023 |

Lukas Gerritzen | Suggestion | Desktop notifications for messages |

Stefan Ritt wrote:

> people using Edge/Chrome/Safari/Opera saying "it's not working on my specific browser on version x.y.z". So I'm only willing to add that feature if we are sure it's a standard things working in most environments.

The API looks pretty standard to me. Firefox, Chrome, and Opera have supported it for about 9 years, Safari for almost 6. I couldn't find out when Edge 14 was released, but Edge is at version 112 now.

Since browsers don't want to annoy their users, many don't allow websites to ask for permissions without user interaction. So the workflow would be something like this: the user presses a button "please ask for permission", then the browser opens a dialog "do you want to grant this website permission to show notifications?", and only then does it work. So I don't think it will become an annoying pop-up mess, especially since system notifications don't capture the focus and typically vanish after a few seconds. If the feature is hidden behind a button on the config page, it shouldn't lead to surprises, and users can always revoke the permission. |

| 2513 | 11 May 2023 |

Stefan Ritt | Suggestion | Desktop notifications for messages | Ok, I implemented desktop notifications. On the MIDAS config page, you can now enable browser notifications for the different types of messages. I'm not sure this works perfectly, but it's a starting point, so please let me know if there are any issues.

Stefan |

| 1385 | 28 Aug 2018 |

Lukas Gerritzen | Bug Report | Deleting Links in ODB via mhttpd | Assume you have a variable foo and a link bar -> foo. When you go to the ODB in mhttpd, click "Delete" and select bar, it actually deletes foo. bar stays, showing "<cannot resolve link>". Trying the same in odbedit with rm gives the expected result (bar is gone, foo is still there).
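To make the two behaviours concrete, here is a toy model in C++. It is a hypothetical sketch, not the MIDAS ODB API: keys live in a std::map, a link simply stores its target path, and the names ToyKey, resolve() and the two delete functions are made up for illustration. The point is that a delete must act on the key the user selected, without resolving the link first.

```cpp
#include <cassert>
#include <map>
#include <string>

// Hypothetical toy key store (not the MIDAS ODB API).
struct ToyKey {
    bool is_link;        // true if this key is a symbolic link
    std::string target;  // path the link points to (only used if is_link)
    int value;           // payload for ordinary keys
};

using ToyOdb = std::map<std::string, ToyKey>;

ToyKey value_key(int v) { return ToyKey{false, "", v}; }
ToyKey link_key(const std::string& target) { return ToyKey{true, target, 0}; }

// Follow links starting at `path` and return the final path.
// A hop limit protects against link cycles.
std::string resolve(const ToyOdb& odb, std::string path) {
    for (int hops = 0; hops < 16; ++hops) {
        auto it = odb.find(path);
        if (it == odb.end() || !it->second.is_link)
            break;
        path = it->second.target;
    }
    return path;
}

// Reported (wrong) behaviour: the link is resolved first, so the target goes away.
void delete_key_following_links(ToyOdb& odb, const std::string& path) {
    odb.erase(resolve(odb, path));
}

// Expected behaviour (what odbedit "rm" does): remove the selected entry itself.
void delete_key_no_follow(ToyOdb& odb, const std::string& path) {
    odb.erase(path);
}
```

With foo and bar -> foo, the first function removes foo and leaves a dangling bar (the "<cannot resolve link>" symptom), while the second removes only bar.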

I'm on the develop branch. |

| 1387 | 28 Aug 2018 |

Konstantin Olchanski | Bug Report | Deleting Links in ODB via mhttpd | > Assume you have a variable foo and a link bar -> foo. When you go to the ODB in

> mhttpd, click "Delete" and select bar, it actually deletes foo. bar stays,

> stating "<cannot resolve link>". Trying the same in odbedit with rm gives the

> expected result (bar is gone, foo is still there).

>

> I'm on the develop branch.

I think I can confirm this. Created a bug report on bitbucket:

https://bitbucket.org/tmidas/midas/issues/148/mhttpd-odb-editor-deletes-wrong-symlink

K.O. |

| 1392 | 29 Aug 2018 |

Stefan Ritt | Bug Report | Deleting Links in ODB via mhttpd | > > Assume you have a variable foo and a link bar -> foo. When you go to the ODB in

> > mhttpd, click "Delete" and select bar, it actually deletes foo. bar stays,

> > stating "<cannot resolve link>". Trying the same in odbedit with rm gives the

> > expected result (bar is gone, foo is still there).

> >

> > I'm on the develop branch.

>

> I think I can confirm this. Created a bug report on bitbucket:

>

> https://bitbucket.org/tmidas/midas/issues/148/mhttpd-odb-editor-deletes-wrong-symlink

>

> K.O.

I fixed this and committed the change. Took me a while since it was in KO's code.

Stefan |

| 633 | 06 Sep 2009 |

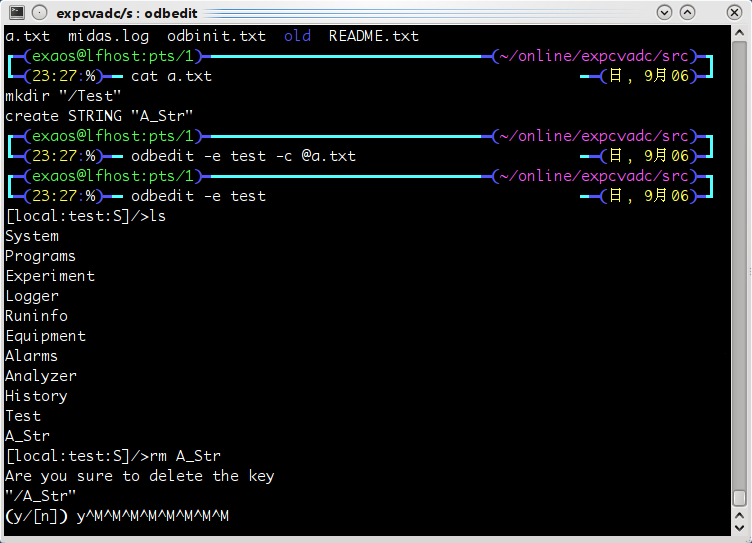

Exaos Lee | Bug Report | Delete key "/A_Str" problem | Another problem while using odbedit.

I tried the batch mode of "odbedit". I created a key named "/A_Str" by mistake and wanted to delete it. Then "odbedit" failed to accept the "Return" key. Please see the attached screenshot. :-( |

| Attachment 1: odbedit.png |

| 639 | 06 Sep 2009 |

Exaos Lee | Bug Report | Delete key "/A_Str" problem | > Another problem while using odbedit.

> I tried the batch mode of "odbedit". I created a key as "/A_Str" by mistake and

> wanted to delete it. Then "odbedit" failed to accept the "Return" key. Please see

> the screen-shot attached. :-(

This bug has been fixed in the latest repository.

I encountered it in svn-r4488. |

| 2943 | 19 Feb 2025 |

Lukas Gerritzen | Bug Report | Default write cache size for new equipments breaks compatibility with older equipments | We have a frontend for slow control with a lot of legacy code. I wanted to add a new equipment using the mdev_mscb class. It seems like the default write cache size is now 10000000 B, which produces error messages like this:

12:51:20.154 2025/02/19 [SC Frontend,ERROR] [mfe.cxx:620:register_equipment,ERROR] Write cache size mismatch for buffer "SYSTEM": equipment "Environment" asked for 0, while eqiupment "LED" asked for 10000000

12:51:20.154 2025/02/19 [SC Frontend,ERROR] [mfe.cxx:620:register_equipment,ERROR] Write cache size mismatch for buffer "SYSTEM": equipment "LED" asked for 10000000, while eqiupment "Xenon" asked for 0

I can manually change the write cache size in /Equipment/LED/Common/Write cache size to 0. However, if I delete the LED tree in the ODB, I get the same problems again. It would be nice if I could either set the size to 0 in the frontend code, or if the defaults were compatible with our legacy code.
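A per-buffer consistency check that would produce messages of this shape can be sketched as follows. This is an assumption about the logic, not the actual mfe.cxx code: the EqRequest struct and check_cache_sizes() are hypothetical, and each equipment is compared with the previous equipment writing to the same buffer, which matches the pairs in the two log lines above (Environment vs LED, then LED vs Xenon).

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

// Hypothetical, simplified equipment request (not the mfe.cxx EQUIPMENT struct).
struct EqRequest {
    std::string name;         // equipment name, e.g. "LED"
    std::string buffer;       // event buffer, e.g. "SYSTEM"
    std::size_t write_cache;  // requested write cache size in bytes
};

// Returns one message for every equipment whose request differs from the
// previous equipment writing into the same buffer.
std::vector<std::string> check_cache_sizes(const std::vector<EqRequest>& eqs) {
    std::map<std::string, const EqRequest*> last;  // last request seen per buffer
    std::vector<std::string> errors;
    for (const auto& eq : eqs) {
        auto it = last.find(eq.buffer);
        if (it != last.end() && it->second->write_cache != eq.write_cache) {
            errors.push_back("Write cache size mismatch for buffer \"" + eq.buffer +
                             "\": equipment \"" + it->second->name + "\" asked for " +
                             std::to_string(it->second->write_cache) +
                             ", while equipment \"" + eq.name + "\" asked for " +
                             std::to_string(eq.write_cache));
        }
        last[eq.buffer] = &eq;
    }
    return errors;
}
```

Under this model, the only ways to silence the error are to give all equipments on a buffer the same size or to move them to different buffers.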

The commit that made the write cache size configurable seems to be from 2019: https://bitbucket.org/tmidas/midas/commits/3619ecc6ba1d29d74c16aa6571e40920018184c0 |

| 2944 | 24 Feb 2025 |

Stefan Ritt | Bug Report | Default write cache size for new equipments breaks compatibility with older equipments | The commit that introduced the write cache size check is https://bitbucket.org/tmidas/midas/commits/3619ecc6ba1d29d74c16aa6571e40920018184c0

Unfortunately K.O. added the write cache size to the equipment list, but there is currently no way to change it programmatically from the user frontend code. The options I see are:

1) Re-arrange the equipment settings so that the write cache size comes at the end of the list which the user initializes, like

```c
{"Trigger",                /* equipment name */
   {1, 0,                  /* event ID, trigger mask */
    "SYSTEM",              /* event buffer */
    EQ_POLLED,             /* equipment type */
    0,                     /* event source */
    "MIDAS",               /* format */
    TRUE,                  /* enabled */
    RO_RUNNING |           /* read only when running */
    RO_ODB,                /* and update ODB */
    100,                   /* poll for 100ms */
    0,                     /* stop run after this event limit */
    0,                     /* number of sub events */
    0,                     /* don't log history */
    "", "", "", "", "", 0, 0},
   read_trigger_event,     /* readout routine */
   10000000,               /* write cache size */
},
```

2) Add a function fe_set_write_cache(int size) which goes through the local equipment list and sets the cache size of all equipments to the same value.
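Option 2 could look roughly like the sketch below. The Equipment struct is a hypothetical stand-in for the real mfe equipment table (it only mimics the convention of ending the table with an entry whose name is empty), so this illustrates the idea rather than being a drop-in implementation.

```cpp
#include <cassert>

// Hypothetical stand-in for an mfe-style equipment table entry;
// the real EQUIPMENT struct has many more fields.
struct Equipment {
    char name[32];         // equipment name; an empty name ends the table
    int write_cache_size;  // requested write cache size in bytes
};

// Example table, terminated by an entry with an empty name.
Equipment equipment[] = {
    {"Environment", 0},
    {"LED", 10000000},
    {"Xenon", 0},
    {""},
};

// Sketch of the proposed fe_set_write_cache(): give every equipment in the
// local table the same write cache size, so no per-buffer mismatch can occur.
void fe_set_write_cache(int size) {
    for (int i = 0; equipment[i].name[0]; i++)
        equipment[i].write_cache_size = size;
}
```

A frontend would call fe_set_write_cache(0) once, before the equipments register their buffers, instead of editing every table entry.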

I would appreciate some guidance from K.O. who introduced that code above.

/Stefan |

| 2972 | 20 Mar 2025 |

Konstantin Olchanski | Bug Report | Default write cache size for new equipments breaks compatibility with older equipments | I think I added the cache size correctly:

```c
{"Trigger",                /* equipment name */
   {1, 0,                  /* event ID, trigger mask */
    "SYSTEM",              /* event buffer */
    EQ_POLLED,             /* equipment type */
    0,                     /* event source */
    "MIDAS",               /* format */
    TRUE,                  /* enabled */
    RO_RUNNING |           /* read only when running */
    RO_ODB,                /* and update ODB */
    100,                   /* poll for 100ms */
    0,                     /* stop run after this event limit */
    0,                     /* number of sub events */
    0,                     /* don't log history */
    "", "", "", "", "",    // frontend_host, name, file_name, status, status_color
    0,                     // hidden
    0                      // write_cache_size <<--------------------- set this to zero -----------
   },
}
```

K.O. |

| 2973 | 20 Mar 2025 |

Konstantin Olchanski | Bug Report | Default write cache size for new equipments breaks compatibility with older equipments | the main purpose of the event buffer write cache is to prevent high contention for the event buffer shared memory semaphore in the pathological case of a very high rate of very small events.

there is a computation for this, I have posted it here several times, please search for it.

in a nutshell, you want the semaphore locking rate to be around 10/sec, 100/sec maximum. coupled with the smallest event size and the maximum practical rate (1 MHz), this yields the cache size.

for slow control events generated at 1 Hz, the write cache is not needed, and a write_cache_size value of 0 is the correct setting.

for "typical" physics events generated at 1 kHz, the write cache size should be set to fit 10 events (100 Hz semaphore locking rate) to 100 events (10 Hz semaphore locking rate).
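The rule of thumb above is simple arithmetic: if the cache is flushed once it holds N events, the semaphore is taken event_rate / N times per second, so the cache size is roughly event size × event rate / locking rate. The C++ sketch below encodes just that; the function names are hypothetical, and the 100-byte "smallest event" in the usage note is an assumed value, not one given in the thread.

```cpp
#include <cassert>
#include <cstdint>

// Number of events the cache must hold so that the buffer semaphore is
// taken only lock_rate_hz times per second.
std::uint64_t events_per_flush(std::uint64_t event_rate_hz, std::uint64_t lock_rate_hz) {
    assert(lock_rate_hz > 0);
    return event_rate_hz / lock_rate_hz;
}

// Corresponding cache size in bytes: one flush writes one cache full of events,
// so cache = event_size * event_rate / lock_rate.
std::uint64_t write_cache_bytes(std::uint64_t event_size_bytes,
                                std::uint64_t event_rate_hz,
                                std::uint64_t lock_rate_hz) {
    assert(lock_rate_hz > 0);
    return event_size_bytes * event_rate_hz / lock_rate_hz;
}
```

For 1 kHz events, events_per_flush(1000, 100) gives 10 and events_per_flush(1000, 10) gives 100, matching the numbers above. With an assumed 100-byte smallest event at the 1 MHz maximum rate and a 10 Hz locking rate, write_cache_bytes(100, 1000000, 10) gives 10,000,000 bytes.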

unfortunately, one cannot have two cache sizes for one event buffer, so typical frontends that generate physics data at 1 kHz and scalers and counters at 1 Hz must have a non-zero write cache size (or the semaphore locking rate will be too high).

the other consideration: we do not want data to sit in the cache "too long", so the cache is flushed every 1 second or so.

all this cache stuff could be completely removed, deleted. the result would be a MIDAS that works ok for small data sizes and rates, but completely falls down at 10 GigE speeds and rates.

P.S. why is a high semaphore locking rate bad? it turns out that UNIX and Linux semaphores are not "fair": they do not give an equal share to all users, and (for example) an event buffer writer can "capture" the semaphore so that the buffer reader (mlogger) never gets it, a pathological situation (to help with this, there is also a "read cache"). Read this discussion: https://stackoverflow.com/questions/17825508/fairness-setting-in-semaphore-class

K.O. |

| 2974 | 20 Mar 2025 |

Konstantin Olchanski | Bug Report | Default write cache size for new equipments breaks compatibility with older equipments | > the main purpose of the event buffer write cache

how to control the write cache size:

1) in a frontend, all equipments should ask for the same write cache size; both mfe.c and tmfe frontends will complain about a mismatch.

2) tmfe c++ frontend: per tmfe.md, set fEqConfWriteCacheSize in the equipment constructor, in EqPreInitHandler() or EqInitHandler(), or set it in ODB. The default value is 10 Mbytes (the value of the MIN_WRITE_CACHE_SIZE define). The periodic cache flush period is 0.5 sec, set in fFeFlushWriteCachePeriodSec.

3) mfe.cxx frontend: set it in the equipment definition (the number after "hidden"), set it in ODB, or change equipment[i].write_cache_size. Value 0 sets the cache size to MIN_WRITE_CACHE_SIZE, 10 Mbytes.

4) in bm_set_cache_size(), acceptable values are 0 (disable the cache), MIN_WRITE_CACHE_SIZE (10 Mbytes) or anything bigger. An attempt to set the cache smaller than 10 Mbytes will set it to 10 Mbytes and print an error message.
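The acceptance rule in item 4 can be written down as a small function. This sketches the described behaviour only, not the body of bm_set_cache_size(): effective_write_cache_size() is a hypothetical name, and kMinWriteCacheSize = 10,000,000 bytes is an assumption based on the "10 Mbytes" above and the 10000000 values in the error messages earlier in the thread.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdio>

// Assumed value of MIN_WRITE_CACHE_SIZE ("10 Mbytes").
constexpr std::size_t kMinWriteCacheSize = 10000000;

// 0 disables the cache, sizes >= the minimum are accepted as-is, and smaller
// non-zero requests are raised to the minimum with an error message.
std::size_t effective_write_cache_size(std::size_t requested) {
    if (requested == 0)
        return 0;  // cache disabled
    if (requested < kMinWriteCacheSize) {
        std::fprintf(stderr, "write cache size %zu is too small, using %zu\n",
                     requested, kMinWriteCacheSize);
        return kMinWriteCacheSize;
    }
    return requested;
}
```

This is why, in practice, only two settings matter: 0, or something at least as large as the minimum.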

All this is kind of reasonable, as only two settings of write cache size are useful: 0 to disable it, and 10 Mbytes to limit the semaphore locking rate to a reasonable value for all event rates and sizes practical on current computers.

In mfe.cxx it looks to be impossible to set the write cache size to 0 (disable it), but actually all you need is to call "bm_set_cache_size(equipment[0].buffer_handle, 0, 0);" in frontend_init() (or is it in begin_of_run()?).

K.O. |

| 2975 | 20 Mar 2025 |

Konstantin Olchanski | Bug Report | Default write cache size for new equipments breaks compatibility with older equipments | > > the main purpose of the event buffer write cache

> how to control the write cache size:

OP provided insufficient information to say what went wrong for them, but do try this:

1) in ODB, for all equipments, set write_cache_size to 0

2) in the frontend equipment table, set write_cache_size to 0

That is how it is done in the example frontend: examples/experiment/frontend.cxx

If this configuration still produces an error, we may have a bug somewhere, so please let us know how it shakes out.

K.O. |

| 2979 | 21 Mar 2025 |

Stefan Ritt | Bug Report | Default write cache size for new equipments breaks compatibility with older equipments | > All this is kind of reasonable, as only two settings of write cache size are useful: 0 to

> disable it, and 10 Mbytes to limit semaphore locking rate to reasonable value for all event

> rate and size values practical on current computers.

Indeed KO is correct that only 0 and 10MB make sense, and we cannot mix them. Having the cache setting in the equipment table is cumbersome. If you have 10 slow control equipments (cache size zero), you need to add many zeros at the end of 10 equipment definitions in the frontend.

I would rather implement a function or variable similar to fEqConfWriteCacheSize from the tmfe framework in the mfe.cxx framework as well; then we only need to add one line like

gEqConfWriteCacheSize = 0;

in the frontend.cxx file, and this will be used for all equipments of that frontend. If nobody complains, I will do that in April when I'm back from Japan.

Stefan |

| 3004 | 25 Mar 2025 |

Konstantin Olchanski | Bug Report | Default write cache size for new equipments breaks compatibility with older equipments | > > All this is kind of reasonable, as only two settings of write cache size are useful: 0 to

> > disable it, and 10 Mbytes to limit semaphore locking rate to reasonable value for all event

> > rate and size values practical on current computers.

>

> Indeed KO is correct that only 0 and 10MB make sense, and we cannot mix it. Having the cache setting in the equipment table is

> cumbersome. If you have 10 slow control equipment (cache size zero), you need to add many zeros at the end of 10 equipment

> definitions in the frontend.

>

> I would rather implement a function or variable similar to fEqConfWriteCacheSize in the tmfe framework also in the mfe.cxx

> framework, then we need only to add one line like

>

> gEqConfWriteCacheSize = 0;

>

> in the frontend.cxx file and this will be used for all equipments of that frontend. If nobody complains, I will do that in April when I'm

> back from Japan.

Cache size is per-buffer. If different equipments write into different event buffers, it should be possible to set different cache sizes.

Perhaps have:

set_write_cache_size("SYSTEM", 0);

set_write_cache_size("BUF1", bigsize);

with an internal std::map<std::string,size_t> holding the write cache size for each named buffer
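The proposal above could be sketched like this. The names, the function-local static map, and the fallback to a 10 Mbyte default for buffers that were never configured are all assumptions for illustration; the version later implemented in mfed.cxx may differ.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>

namespace sketch {

// Assumed default for buffers without an explicit setting.
constexpr std::size_t kDefaultWriteCacheSize = 10000000;

// Buffer name -> write cache size in bytes.
std::map<std::string, std::size_t>& cache_sizes() {
    static std::map<std::string, std::size_t> sizes;
    return sizes;
}

// Record the write cache size for one named event buffer.
void set_write_cache_size(const std::string& buffer, std::size_t size) {
    cache_sizes()[buffer] = size;
}

// Size that every equipment writing into `buffer` should request, so all
// equipments sharing a buffer agree and no mismatch is reported.
std::size_t get_write_cache_size(const std::string& buffer) {
    auto it = cache_sizes().find(buffer);
    return it != cache_sizes().end() ? it->second : kDefaultWriteCacheSize;
}

}  // namespace sketch
```

Keying on the buffer name rather than the equipment is what lets slow control equipments on "SYSTEM" run without a cache while a physics buffer keeps a large one.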

K.O. |

| 3064 | 21 Jul 2025 |

Stefan Ritt | Bug Report | Default write cache size for new equipments breaks compatibility with older equipments | > Perhaps have:

>

> set_write_cache_size("SYSTEM", 0);

> set_write_cache_size("BUF1", bigsize);

>

> with an internal std::map<std::string,size_t>; for write cache size for each named buffer

Ok, this is implemented now in mfed.cxx and called from examples/experiment/frontend.cxx

Stefan |

| 2451 | 13 Jan 2023 |

Denis Calvet | Suggestion | Debug printf remaining in mhttpd.cxx | Hi everyone,

It has been a long time since my last message. While porting Midas front-end programs developed for the T2K experiment in 2008 to a modern version of Midas, I noticed that some debug printf calls remain in mhttpd.cxx.

A number of debug messages are printed on stdout each time a graph is displayed in the OldHistory window (which is the style of history plots that will continue to be used in the upgraded T2K experiment for some technical reasons).

Here is an example of such debug messages:

```
Load from ODB History/Display/HA_EP_0/V33: hist plot: 8 variables
timescale: 1h, minimum: 0.000000, maximum: 0.000000, zero_ylow: 0, log_axis: 0, show_run_markers: 1, show_values: 1, show_fill: 0, show_factor 0, enable_factor: 1
var[0] event [HA_TPC_SC][E0M00FEM_V33] formula [], colour [#00AAFF] label [Mod_0] show_raw_value 0 factor 1.000000 offset 0.000000 voffset 0.000000 order 10
var[1] event [HA_TPC_SC][E0M01FEM_V33] formula [], colour [#FF9000] label [Mod_1] show_raw_value 0 factor 1.000000 offset 0.000000 voffset 0.000000 order 20
var[2] event [HA_TPC_SC][E0M02FEM_V33] formula [], colour [#FF00A0] label [Mod_2] show_raw_value 0 factor 1.000000 offset 0.000000 voffset 0.000000 order 30
var[3] event [HA_TPC_SC][E0M03FEM_V33] formula [], colour [#00C030] label [Mod_3] show_raw_value 0 factor 1.000000 offset 0.000000 voffset 0.000000 order 40
var[4] event [HA_TPC_SC][E0M04FEM_V33] formula [], colour [#A0C0D0] label [Mod_4] show_raw_value 0 factor 1.000000 offset 0.000000 voffset 0.000000 order 50
var[5] event [HA_TPC_SC][E0M05FEM_V33] formula [], colour [#D0A060] label [Mod_5] show_raw_value 0 factor 1.000000 offset 0.000000 voffset 0.000000 order 60
var[6] event [HA_TPC_SC][E0M06FEM_V33] formula [], colour [#C04010] label [Mod_6] show_raw_value 0 factor 1.000000 offset 0.000000 voffset 0.000000 order 70
var[7] event [HA_TPC_SC][E0M07FEM_V33] formula [], colour [#807060] label [Mod_7] show_raw_value 0 factor 1.000000 offset 0.000000 voffset 0.000000 order 80
read_history: nvars 10, hs_read() status 1
read_history: 0: event [HA_TPC_SC], var [E0M00FEM_V33], index 0, odb index 0, status 1, num_entries 475
read_history: 1: event [HA_TPC_SC], var [E0M01FEM_V33], index 0, odb index 1, status 1, num_entries 475
read_history: 2: event [HA_TPC_SC], var [E0M02FEM_V33], index 0, odb index 2, status 1, num_entries 475
read_history: 3: event [HA_TPC_SC], var [E0M03FEM_V33], index 0, odb index 3, status 1, num_entries 475
read_history: 4: event [HA_TPC_SC], var [E0M04FEM_V33], index 0, odb index 4, status 1, num_entries 475
read_history: 5: event [HA_TPC_SC], var [E0M05FEM_V33], index 0, odb index 5, status 1, num_entries 475
read_history: 6: event [HA_TPC_SC], var [E0M06FEM_V33], index 0, odb index 6, status 1, num_entries 475
read_history: 7: event [HA_TPC_SC], var [E0M07FEM_V33], index 0, odb index 7, status 1, num_entries 475
read_history: 8: event [Run transitions], var [State], index 0, odb index -1, status 1, num_entries 0
read_history: 9: event [Run transitions], var [Run number], index 0, odb index -2, status 1, num_entries 0
```

Looking at the source code of mhttpd, these messages originate from:

```
[mhttpd.cxx line 10279] printf("Load from ODB %s: ", path.c_str());
[mhttpd.cxx line 10280] PrintHistPlot(*hp);
...
[mhttpd.cxx line 8336] int read_history(...
...
[mhttpd.cxx line 8343] int debug = 1;
...
```

Although seeing many debug messages from mhttpd does not hurt, they can hide important error messages. I would suggest turning off these debug messages by commenting out the relevant lines of code and setting the debug variable to 0 in read_history().
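An alternative to commenting the lines out is to keep the diagnostics but gate them behind a flag that defaults to off. A minimal C++ sketch of that pattern; report_read_history() is a hypothetical helper, not mhttpd code, and it writes to a caller-supplied stream so the output is easy to check.

```cpp
#include <cassert>
#include <ostream>
#include <sstream>
#include <string>

// Debug output is off by default, so normal runs stay quiet and real
// error messages are not buried.
static int debug = 0;

// Print one diagnostic line, but only when debugging is enabled.
void report_read_history(std::ostream& out, int nvars, int status) {
    if (debug)
        out << "read_history: nvars " << nvars << ", hs_read() status " << status << "\n";
}
```

Setting debug = 1 (or wiring it to a command-line option) brings the messages back when they are actually needed.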

That is a minor detail and it is always a pleasure to use Midas.

Best regards,

Denis.

|

| 2452 | 13 Jan 2023 |

Stefan Ritt | Suggestion | Debug printf remaining in mhttpd.cxx | These debug statements were added by K.O. on June 24, 2021. He should remove them.

https://bitbucket.org/tmidas/midas/commits/21f7ba89a745cfb0b9d38c66b4297e1aa843cffd

Best,

Stefan |

|