| ID | Date | Author | Topic | Subject |

|

3205

|

12 Feb 2026 |

Stefan Ritt | Bug Report | omnibus bugs from running DarkLight | I have now had a similar case where the browser froze when showing 24h of data. It turned out that 80k points are a bit much. I changed the code so that it starts binning when showing 8h or more. This is not a perfect solution. The code should check at which interval data is written, then automatically start binning when approaching 4000 points or more. That would however require more complicated code, so I leave it as it is for now. Feedback welcome.
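A minimal sketch of the automatic approach described above, assuming the interval at which data is written is known in seconds. The function name `bin_size` and its arguments are hypothetical and not part of the MIDAS history code; the sketch only illustrates how a bin size could be derived from the displayed time span and a target of about 4000 points.

```sh
# Hypothetical helper, not taken from mhttpd: compute how many raw samples
# to merge into one bin so that a plot stays at or below max_points.
#   $1 = displayed time span in seconds
#   $2 = interval at which data is written, in seconds
#   $3 = maximum number of points to draw (default 4000)
bin_size() {
    span=$1
    interval=$2
    max_points=${3:-4000}

    points=$(( span / interval ))
    if [ "$points" -le "$max_points" ]; then
        echo 1      # few enough points, no binning needed
    else
        # round up so the binned plot never exceeds max_points
        echo $(( (points + max_points - 1) / max_points ))
    fi
}

bin_size 86400 1    # 24 h at one sample per second: prints 22
bin_size 3600 1     # 1 h at one sample per second: prints 1
```

With one sample per second, a bin size of 22 keeps a 24 h plot just below 4000 points per variable.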

Stefan |

|

3204

|

06 Feb 2026 |

Stefan Ritt | Bug Report | omnibus bugs from running DarkLight | > 5) ODB editor "create link" link target name is limited to 32 bytes, links cannot be created (dl-server-2), ok

> on daq17 with current MIDAS.

Works for me with the current version.

> 6) MIDAS on dl-server-2 is "installed" in such a way that there is no connection to the git repository, no way

> to tell what git checkout it corresponds to. Help page just says "branch master", git-revision.h is empty. We

> should discourage such use of MIDAS and promote our "normal way" where for all MIDAS binary programs we know

> what source code and what git commit was used to build them.

Not sure if you have seen it, but I made an "install" script to clone, compile and install midas. Some people use this already. Maybe give it a shot. It might need adjustment for different systems; I certainly haven't covered all corner cases. But on a RaspberryPi it's then just one command to install midas, modify the environment, install mhttpd as a service and load the ODB defaults. I know that some people want it "their way" and that's ok, but for the novice user that might be a good starting point. It's documented here: https://daq00.triumf.ca/MidasWiki/index.php/Install_Script

The install script is plain shell, so it should be easy to understand.

> 6a) MIDAS on dl-server-2 had a pretty much non-functional history display, I reported it here, Stefan provided

> a fix, I manually retrofitted it into dl-server-2 MIDAS and we were able to run the experiment. (good)

>

> 6b) bug (5) suggests that there is more bugs being introduced and fixed without any notice to other midas

> users (via this forum or via the bitbucket bug tracker).

If I would notify everybody about a new bug I introduced, I would know that it's a bug and I would not introduce it ;-)

For all the fixes I encourage people to check the commit log. Doing an elog entry for every bug fix would be considered spam by many people because

that can be many emails per week. The commit log is here: https://bitbucket.org/tmidas/midas/commits/branch/develop

If somebody volunteers to consolidate all commits and make a monthly digest to be posted here, I'm all in favor, but I'm not that individual.

Stefan |

|

3203

|

06 Feb 2026 |

Stefan Ritt | Bug Report | omnibus bugs from running DarkLight | > 4) ODB editor "right click" to "delete" or "rename" key does not work, the right-click menu disappears

> immediately before I can use it (dl-server-2), click on item (it is now blue), right-click menu disappears

> before I can use it (daq17). it looks like a timing or race condition.

Confirmed and fixed: https://bitbucket.org/tmidas/midas/commits/4ba30761683ac9aa558471d2d2d35ce05e72096a

/Stefan |

|

3202

|

06 Feb 2026 |

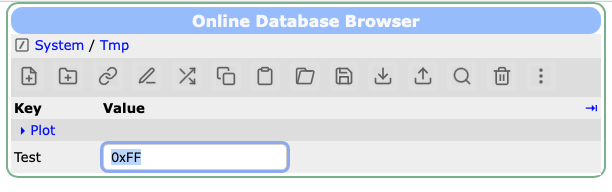

Stefan Ritt | Bug Report | omnibus bugs from running DarkLight | > 3) ODB editor clicking on hex number versus decimal number no longer allows editing in hex, Stefan implemented

> this useful feature and it worked for a while, but now seems broken.

I cannot confirm this; see below. There was an issue some time ago, but it has been fixed for a while. Please pull on develop and try again.

Here is the change: https://bitbucket.org/tmidas/midas/commits/882974260876529c43811c63a16b4a32395d416a

Stefan |

| Attachment 1: Screenshot_2026-02-06_at_12.44.16.png

|

|

|

3201

|

06 Feb 2026 |

Stefan Ritt | Bug Report | omnibus bugs from running DarkLight | Thanks for the detailed report. Let me reply one-by-one.

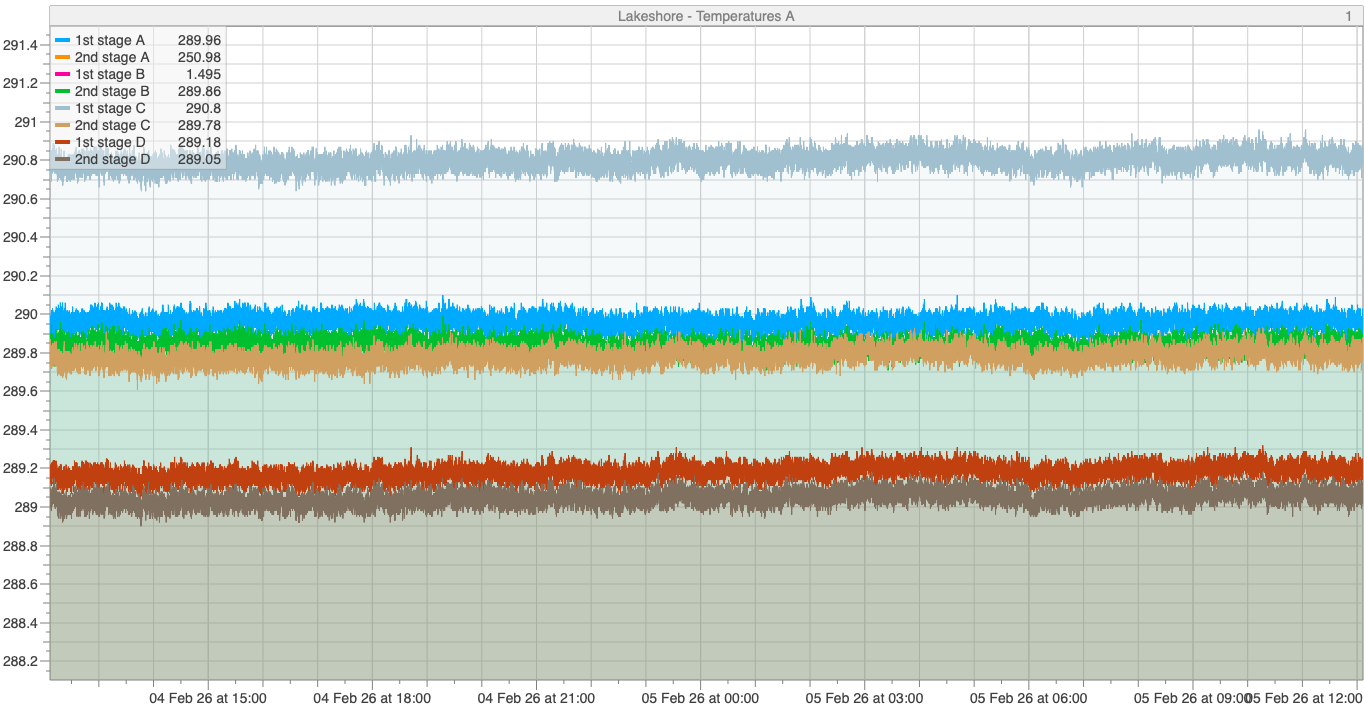

> 1) history plots on 12 hrs, 24 hrs tend to hang with "page not responsive". most plots have 16-20 variables,

> which are recorded at 1/sec interval. (yes, we must see all the variables at the same time and yes, we want to

> record them with fine granularity).

Attached is a similar plot: 8 values recorded every second, displayed for 24h. The backend is actually a Raspberry Pi! I see no issues there. Do you have the current history version which does the re-binning? Actually the plot below is still without rebinning (see the "1" at the top right), and it contains ~72000 points x 8. The browser does not have any issue with it.

Stefan |

| Attachment 1: Lakeshore-Temperatures_A-20260204-120628-20260205-120628.png

|

|

|

3200

|

05 Feb 2026 |

Konstantin Olchanski | Bug Report | omnibus bugs from running DarkLight | We finished running the DarkLight experiment and I am reporting accumulated bugs that we have run into.

1) history plots on 12 hrs, 24 hrs tend to hang with "page not responsive". most plots have 16-20 variables,

which are recorded at 1/sec interval. (yes, we must see all the variables at the same time and yes, we want to

record them with fine granularity).

2) starting runs gets into a funny mode if a GEM frontend aborts (hardware problems), transition page reports

"wwrrr, timeout 0", and stays stuck forever, "cancel transition" does nothing. observe it goes from "w"

(waiting) to "r" (RPC running) without a "c" (connecting...) and timeout should never be zero (120 sec in

ODB).

3) ODB editor clicking on hex number versus decimal number no longer allows editing in hex, Stefan implemented

this useful feature and it worked for a while, but now seems broken.

4) ODB editor "right click" to "delete" or "rename" key does not work, the right-click menu disappears

immediately before I can use it (dl-server-2), click on item (it is now blue), right-click menu disappears

before I can use it (daq17). it looks like a timing or race condition.

5) ODB editor "create link" link target name is limited to 32 bytes, links cannot be created (dl-server-2), ok

on daq17 with current MIDAS.

6) MIDAS on dl-server-2 is "installed" in such a way that there is no connection to the git repository, no way

to tell what git checkout it corresponds to. Help page just says "branch master", git-revision.h is empty. We

should discourage such use of MIDAS and promote our "normal way" where for all MIDAS binary programs we know

what source code and what git commit was used to build them.

6a) MIDAS on dl-server-2 had a pretty much non-functional history display, I reported it here, Stefan provided

a fix, I manually retrofitted it into dl-server-2 MIDAS and we were able to run the experiment. (good)

6b) bug (5) suggests that there is more bugs being introduced and fixed without any notice to other midas

users (via this forum or via the bitbucket bug tracker).

K.O. |

|

3199

|

26 Jan 2026 |

Stefan Ritt | Info | Homebrew support for midas | > Actually, these two approaches are slightly different I guess:

> - the installation script you are linking manages the

> installation and the subsequent steps, but doesn't manage the dependencies: for instance on my machine, it didn't find root and so manalyzer

> is built without root support.

> Maybe this is just something to adapt?

Yes indeed. From your perspective, you probably always want ROOT with MIDAS. But here at PSI we have several installations where we do not need ROOT. These are mainly beamline control PCs which just connect to EPICS or pump station controls, replacing LabVIEW installations. All graphics there are handled with the new mplot graphs, which are better in some cases.

I therefore added a check into install.sh which tells you explicitly if ROOT is found and included or not. Then it's up to the user to choose to

install ROOT or not.

> Brew on the other hand manages root and so knows how to link these two

> together.

If you really need it, yes.

> - The nice thing I like about brew is that one can "ship bottles" aka compiled version of the code; it is great and fast for

> deployment and avoid compilation issues.

> - I like that your setup does deploy and launch all the necessary executables ! I know brew can do

> this too via brew services (see an example here: https://github.com/Homebrew/homebrew-core/blob/HEAD/Formula/r/rabbitmq.rb#L83 ), maybe worth

> investigating...?

Indeed this is an advantage of brew, and I wholeheartedly support it therefore. If you decide to support this for the midas

community, I would like you to document it at

https://daq00.triumf.ca/MidasWiki/index.php/Installation

Please talk to Ben <bsmith@triumf.ca> who manages the documentation and can give you write access there. The downside is that you will

then become the supporter for the brew and all user requests will be forwarded to you as long as you are willing to maintain the package ;-)

> - Brew relies on code tagging to better manage the bottles, so that it uses the tag to get a well-defined version of the

> code and give a name to the version.

> I had to implement my own tags e.g. midas-mod-2025-12-a to get a release.

> I am not sure how to do in the

> case of midas where the tags are not that frequent...

Yes we always struggle with the tagging (what is a "release", when should we release, ...). Maybe it's the simplest if we tag once per month

blindly with midas-2026-02a or so. In the past KO took care of the tagging, he should reply here with his thoughts.

> Thank you for the feedback, I will make the modifications (aka naming my formula

> ``midas-mod'') so that it doesn't collide with a future official midas one.

Nope. The idea is that YOU do the future official midas release from now on ;-)

> Concerning the MidasConfig.cmake issue, this is what I need ...

Let's take this offline not to spam others.

Best,

Stefan |

|

3198

|

23 Jan 2026 |

Mathieu Guigue | Info | Homebrew support for midas | Thanks Stefan!

Actually, these two approaches are slightly different I guess:

- the installation script you are linking manages the installation and the subsequent steps, but doesn't manage the dependencies: for instance on my machine, it didn't find root and so manalyzer is built without root support. Maybe this is just something to adapt? Brew on the other hand manages root and so knows how to link these two together.

- The nice thing I like about brew is that one can "ship bottles" aka compiled version of the code; it is great and fast for deployment and avoid compilation issues.

- I like that your setup does deploy and launch all the necessary executables ! I know brew can do this too via brew services (see an example here: https://github.com/Homebrew/homebrew-core/blob/HEAD/Formula/r/rabbitmq.rb#L83 ), maybe worth investigating...?

- Brew relies on code tagging to better manage the bottles, so that it uses the tag to get a well-defined version of the code and give a name to the version. I had to implement my own tags e.g. midas-mod-2025-12-a to get a release. I am not sure how to do in the case of midas where the tags are not that frequent...

Thank you for the feedback, I will make the modifications (aka naming my formula ``midas-mod'') so that it doesn't collide with a future official midas one.

Concerning the MidasConfig.cmake issue, this is what I need (note that the INTERFACE_INCLUDE_DIRECTORIES is pointing to /opt/homebrew/Cellar/midas/midas-mod-2025-12-a/)

set_target_properties(midas::midas PROPERTIES

  INTERFACE_COMPILE_DEFINITIONS "HAVE_CURL;HAVE_MYSQL;HAVE_SQLITE;HAVE_FTPLIB"

  INTERFACE_COMPILE_OPTIONS "-I/opt/homebrew/Cellar/mariadb/12.1.2/include/mysql;-I/opt/homebrew/Cellar/mariadb/12.1.2/include/mysql/mysql"

  INTERFACE_INCLUDE_DIRECTORIES "/opt/homebrew/Cellar/midas/midas-mod-2025-12-a/;${_IMPORT_PREFIX}/include"

  INTERFACE_LINK_LIBRARIES "/opt/homebrew/opt/zlib/lib/libz.dylib;-lcurl;-L/opt/homebrew/Cellar/mariadb/12.1.2/lib/ -lmariadb;/opt/homebrew/opt/sqlite/lib/libsqlite3.dylib"

)

whereas by default INTERFACE_INCLUDE_DIRECTORIES points to the source code location (in the case of brew, something like /private/<some-hash> ).

Brew deletes the source code at the end of the installation, whereas midas seems to rely on the fact that the source code is still present...

Does it help?

A way to fix is to search for this ``/private'' path and replace it, but this isn't ideal I guess...

This is what I did in the midas formula:

--------

# Fix broken CMake export paths if they exist

cmake_files = Dir["#{lib}/**/*manalyzer*.cmake"]

cmake_files.each do |file|

  if File.read(file).match?(%r{/private/tmp/midas-[^/"]+})

    inreplace file, %r{/private/tmp/midas-[^/"]+}, prefix.to_s

  end

  inreplace file, %r{/tmp/midas-[^/"]+}, prefix.to_s if File.read(file).match?(%r{/tmp/midas-[^/"]+})

end

cmake_files = Dir["#{lib}/**/*midas*.cmake"]

cmake_files.each do |file|

  if File.read(file).match?(%r{/private/tmp/midas-[^/"]+})

    inreplace file, %r{/private/tmp/midas-[^/"]+}, prefix.to_s

  end

  inreplace file, %r{/tmp/midas-[^/"]+}, prefix.to_s if File.read(file).match?(%r{/tmp/midas-[^/"]+})

end

-----

I guess this code could be changed into some bash commands and

added to your script?
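A rough shell equivalent of the Ruby snippet above could look like the sketch below. The function name `fix_cmake_paths` and its two arguments are made up for illustration, and the sketch assumes the install prefix contains no `|`, `&` or backslash characters, since it is pasted into a sed replacement. It has not been tried inside install.sh.

```sh
# Hypothetical helper: replace leftover build-directory paths
# (/private/tmp/midas-* or /tmp/midas-*) in exported CMake files
# with the final installation prefix.
#   $1 = directory holding the installed *.cmake files
#   $2 = installation prefix to write instead
fix_cmake_paths() {
    libdir=$1
    prefix=$2

    find "$libdir" -type f \( -name '*midas*.cmake' -o -name '*manalyzer*.cmake' \) |
    while IFS= read -r file; do
        # -i.bak is accepted by both GNU sed and BSD (macOS) sed
        sed -i.bak \
            -e "s|/private/tmp/midas-[^/\"][^/\"]*|$prefix|g" \
            -e "s|/tmp/midas-[^/\"][^/\"]*|$prefix|g" \
            "$file" && rm -f "$file.bak"
    done
}

# Example call (paths are placeholders):
# fix_cmake_paths "$MIDASSYS/lib" "$MIDASSYS"
```

As in the Ruby version, the /private/tmp pattern is handled first so that the shorter /tmp pattern does not leave a stray "/private" prefix behind.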

Thank you very much again!

Mathieu

> Hi Mathieu,

>

> thanks for your contribution. Have you looked at the install.sh script I developed last week:

>

> https://daq00.triumf.ca/MidasWiki/index.php/Install_Script

>

> which basically does the same, plus it modifies the environment and installs mhttpd as a service.

>

> Actually I modeled the installation after the way Homebrew is installed in the first place (using curl).

>

> I wonder if the two things can kind of be integrated. Would be great to get with brew always the newest midas version, and it would also check and modify the environment.

>

> If you tell me exactly what is wrong MidasConfig.cmake.in I'm happy to fix it.

>

> Best,

> Stefan |

|

3197

|

23 Jan 2026 |

Stefan Ritt | Info | Homebrew support for midas | Hi Mathieu,

thanks for your contribution. Have you looked at the install.sh script I developed last week:

https://daq00.triumf.ca/MidasWiki/index.php/Install_Script

which basically does the same, plus it modifies the environment and installs mhttpd as a service.

Actually I modeled the installation after the way Homebrew is installed in the first place (using curl).

I wonder if the two things can kind of be integrated. Would be great to get with brew always the newest midas version, and it would also

check and modify the environment.

If you tell me exactly what is wrong with MidasConfig.cmake.in I'm happy to fix it.

Best,

Stefan |

|

3196

|

23 Jan 2026 |

Mathieu Guigue | Info | Homebrew support for midas | Dear all,

For my personal convenience, I started to add an homebrew formula for midas (*):

https://github.com/guiguem/homebrew-tap/blob/main/Formula/midas.rb

It is convenient in particular to deploy as it automatically gets all the right dependencies; for MacOS (**), there are bottles already available.

The installation would then be

brew tap guiguem/tap

brew install midas

I thought I would share it here, if this is helpful to someone else (***).

This was tested rather extensively, including the development of manalyzer modules using this bottled version as backend.

A possible upgrade (if people are interested) would be to develop/deploy a "mainstream" midas version (and I would rename mine "midas-mod").

Cheers

Mathieu

-----

Notes:

(*) The version installed by this formula is a very slightly modified version of midas, designed to support more than 100 front-ends (needed for HK).

See commits here:

https://gitlab.in2p3.fr/hk/clocks/midas/-/commit/060b77afb38e38f9a3155d2606860f12d680f4de

https://gitlab.in2p3.fr/hk/clocks/midas/-/commit/1da438ad1946de7ba697e849de6a6675ac45ebb8

I have the recollection this version might not be compatible with the main midas one.

(**) I also have some stuff for Ubuntu, but Ubuntu seems to do additional linkage to curl which needs to be handled (easy).

That being said the installation from sources works fine!

(***) Some oddities were unraveled such as the fact that the build_interface pointing to the source include directory are still appearing in the midasConfig.cmake files (leading to issues in brew). This was fixed by replacing the faulty path to the final installation location. Maybe this should be fixed ? |

|

3195

|

20 Jan 2026 |

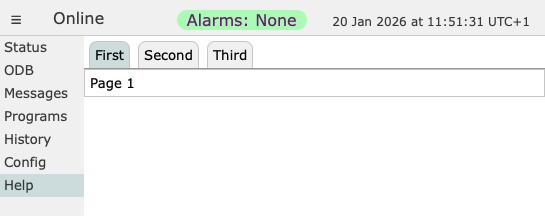

Stefan Ritt | Info | New tabbed custom pages | Tabbed custom pages have been implemented in MIDAS. Below you see an example. The documentation is here:

https://daq00.triumf.ca/MidasWiki/index.php/Custom_Page#Tabbed_Pages

Stefan |

| Attachment 1: tabbed_page.png

|

|

|

3194

|

14 Jan 2026 |

Derek Fujimoto | Bug Report | DEBUG messages not showing and related | Ok thanks for the quick and clear response!

Derek

> MT_DEBUG messages are there for debugging, not logging. They only go into the SYSMSG buffer and NOT to the log file. If you want anything logged, just use MT_INFO.

>

> Not sure if that's missing in the documentation. Anyhow, here are my original ideas (from 1995 ;-) )

>

> MT_ERROR

> Error message, to be displayed in red

>

> MT_INFO

> Info or status message

>

> MT_DEBUG

> Only sent to SYSMSG buffer, not to midas.log file. Handy if you produce lots of messages and don't want to flood the message file. Plus it does not change the timing of your app, since the SYSMSG buffer is much faster than writing to a file.

>

> MT_USER

> Message generated interactively by a user, like in the chat window or via the odbedit "msg" command

>

> MT_LOG

> Messages which are only logged but not put into the SYSMSG buffer

>

> MT_TALK

> Messages which should go through the speech synthesis in the browser and are "spoken"

>

> MT_CALL

> Message which would be forwarded to the user via a messaging app (historically this was an actual analog telephone call via a modem ;-) )

>

> If that is missing in the documentation, please feel free to copy/paste it to the appropriate place.

>

>

> Stefan |

|

3193

|

14 Jan 2026 |

Stefan Ritt | Bug Report | DEBUG messages not showing and related | MT_DEBUG messages are there for debugging, not logging. They only go into the SYSMSG buffer and NOT to the log file. If you want anything logged, just use MT_INFO.

Not sure if that's missing in the documentation. Anyhow, here are my original ideas (from 1995 ;-) )

MT_ERROR

Error message, to be displayed in red

MT_INFO

Info or status message

MT_DEBUG

Only sent to SYSMSG buffer, not to midas.log file. Handy if you produce lots of messages and don't want to flood the message file. Plus it does not change the timing of your app, since the SYSMSG buffer is much faster than writing to a file.

MT_USER

Message generated interactively by a user, like in the chat window or via the odbedit "msg" command

MT_LOG

Messages which are only logged but not put into the SYSMSG buffer

MT_TALK

Messages which should go through the speech synthesis in the browser and are "spoken"

MT_CALL

Message which would be forwarded to the user via a messaging app (historically this was an actual analog telephone call via a modem ;-) )

If that is missing in the documentation, please feel free to copy/paste it to the appropriate place.

Stefan |

|

3192

|

14 Jan 2026 |

Derek Fujimoto | Bug Report | DEBUG messages not showing and related | I have an application where I want to (optionally) send my own debugging messages to the midas.log file, but am having some problems with this:

* Messages with type MT_DEBUG don't show up in midas.log or on the messages page (calling cm_msg from python)

* messages.html is missing the DEBUG filter option

* Messages sent to other log files (not midas.log) don't get message banners on the web page. Is this intentional?

So I think either there is a bug, or I need to start MIDAS with some flag to enable debugging. Looking at the source, I don't see why these messages wouldn't get logged.

Any insight would be appreciated! |

|

3191

|

13 Jan 2026 |

Stefan Ritt | Forum | MIDAS installation | I put the documentation under

https://daq00.triumf.ca/MidasWiki/index.php/Install_Script

Would be good if anybody could check that.

Stefan |

|

3190

|

13 Jan 2026 |

Stefan Ritt | Forum | MIDAS installation | Thanks for your feedback. I reworked the installation script and now call it "install.sh", since it also includes the git clone. I modeled it a bit after Homebrew (https://brew.sh). This means you can now run the script on a pristine Linux system with:

/bin/bash -c "$(curl -sS https://bitbucket.org/tmidas/midas/raw/HEAD/install.sh)"

It contains three defaults for MIDASSYS, MIDAS_DIR and MIDAS_EXPT_NAME, but when you run it, you can overwrite these defaults interactively. The script creates all directories, clones midas, compiles and installs it, installs and runs mhttpd as a system service, then starts the logger and the example frontend. I also added your PYTHONPATH variable. The RC file is now automatically detected.

Yes one could add more config files, but I want to have this basic install as simple as possible. If more things are needed, they

should be added as separate scripts or .ODB files.

Please have a look and let me know what you think. I tested it on a RaspberryPi, but not yet on other systems.

Stefan |

| Attachment 1: install.sh

|

#!/bin/sh

#

# This is a MIDAS install script which installs MIDAS and sets up

# a proper environment for a simple experiment

#

# On a new system you can execute it with

#

# /bin/bash -c "$(curl -sS https://bitbucket.org/tmidas/midas/raw/HEAD/install.sh)"

#

#

# Default directories

#

DEFAULT_MIDASSYS="$HOME/midas"

DEFAULT_MIDAS_DIR="$HOME/online"

DEFAULT_MIDAS_EXPT_NAME="Online"

#

# Query directories

#

# ---- MIDASSYS ----

printf "Clone MIDAS into directory [%s]: " "$DEFAULT_MIDASSYS"

read USER_MIDASSYS

# If user entered something, it must start with a /

if [ -n "$USER_MIDASSYS" ] && [ "${USER_MIDASSYS#/}" = "$USER_MIDASSYS" ]; then

echo "Error: directory must be an absolute path starting with '/'"

exit 1

fi

# Use default if user input is empty

MIDASSYS=${USER_MIDASSYS:-$DEFAULT_MIDASSYS}

# ---- MIDAS_DIR ----

printf "MIDAS experiment directory [%s]: " "$DEFAULT_MIDAS_DIR"

read USER_MIDAS_DIR

# If user entered something, it must start with a /

if [ -n "$USER_MIDAS_DIR" ] && [ "${USER_MIDAS_DIR#/}" = "$USER_MIDAS_DIR" ]; then

echo "Error: directory must be an absolute path starting with '/'"

exit 1

fi

# Use default if user input is empty

MIDAS_DIR=${USER_MIDAS_DIR:-$DEFAULT_MIDAS_DIR}

# ---- MIDAS_EXPT_NAME ----

printf "MIDAS experiment name [%s]: " "$DEFAULT_MIDAS_EXPT_NAME"

read USER_MIDAS_EXPT_NAME

# Use default if user input is empty

MIDAS_EXPT_NAME=${USER_MIDAS_EXPT_NAME:-$DEFAULT_MIDAS_EXPT_NAME}

# printf instead of echo: bash's echo does not expand "\n" by default

printf '\n%s\n' "---------------------------------------------------------------"

printf '\033[1;33m*\033[0m %s\n' "MIDAS system directory : $MIDASSYS"

printf '\033[1;33m*\033[0m %s\n' "MIDAS experiment directory : $MIDAS_DIR"

printf '\033[1;33m*\033[0m %s\n' "MIDAS experiment name : $MIDAS_EXPT_NAME"

#

# Change environment

#

detect_rc_file() {

# $SHELL is usually reliable

case "$(basename "$SHELL")" in

bash)

[ -f "$HOME/.bashrc" ] && echo "$HOME/.bashrc" || echo "$HOME/.bash_profile"

;;

zsh)

# zsh always reads .zshenv

echo "$HOME/.zshenv"

;;

fish)

# fish is not POSIX, but handle gracefully

echo "$HOME/.config/fish/config.fish"

;;

*)

# fallback for sh, dash, etc.

echo "$HOME/.profile"

;;

esac

}

RC_FILE=$(detect_rc_file)

grep -q '>>> MIDAS >>>' "$RC_FILE" 2>/dev/null || cat >>"$RC_FILE" <<'EOF'

# >>> MIDAS >>>

export PATH="__MIDASSYS__/bin:$PATH"

export MIDASSYS="__MIDASSYS__"

export MIDAS_DIR="__MIDAS_DIR__"

export MIDAS_EXPT_NAME="__MIDAS_EXPT_NAME__"

export PYTHONPATH="$PYTHONPATH:__MIDASSYS__/python"

# <<< MIDAS <<<

EOF

# substitute placeholders

sed -i.bak \

-e "s|__MIDASSYS__|$MIDASSYS|g" \

-e "s|__MIDAS_DIR__|$MIDAS_DIR|g" \

-e "s|__MIDAS_EXPT_NAME__|$MIDAS_EXPT_NAME|g" \

"$RC_FILE"

# source environment file

. "$RC_FILE"

printf '\033[1;33m*\033[0m %s\n' "Environment variables written to : $RC_FILE"

printf '%s\n\n' "---------------------------------------------------------------"

printf '\033[1;33m*\033[0m %s\n\n' "Cloning and compiling MIDAS..."

# create experiment directory

mkdir -p "$MIDAS_DIR"

# clone MIDAS

git clone https://bitbucket.org/tmidas/midas.git "$MIDASSYS" --recurse-submodules

# compile MIDAS

mkdir -p "$MIDASSYS/build"

cd "$MIDASSYS/build"

cmake .. -DCMAKE_BUILD_TYPE=RelWithDebInfo

make install

#

# Load initial ODB, opens port 8081 for mhttpd

#

printf '\n\033[1;33m*\033[0m %s\n' "Loading initial ODB"

cd "$MIDASSYS"

odbedit -c "load install.odb" > /dev/null

# start example frontend and logger

printf '\033[1;33m*\033[0m %s\n' "Starting frontend and logger"

$MIDASSYS/build/examples/experiment/frontend -D 1>/dev/null

$MIDASSYS/bin/mlogger -D 1>/dev/null

#

# Installing mhttpd service

#

printf '\033[1;33m*\033[0m %s\n' "Installing mhttpd service"

sudo cp mhttpd.service /etc/systemd/system/

sudo systemctl daemon-reload

sudo systemctl enable mhttpd

printf '\033[1;33m*\033[0m %s\n' "Starting mhttpd service"

sudo systemctl start mhttpd

printf '\033[1;33m*\033[0m %s\n' "Finished MIDAS setup"
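The `${VAR#/}` test used for both directory prompts in the script is easy to mistype, so it could be wrapped in a small helper. This is only a sketch; `is_abs_path` is a hypothetical name and not part of install.sh.

```sh
# Hypothetical helper: succeed if the argument starts with '/'.
# "${1#/}" strips one leading slash; if nothing was stripped,
# the path was relative (or empty).
is_abs_path() {
    [ -n "$1" ] && [ "${1#/}" != "$1" ]
}

# Possible use in the prompts (variable name as in the script):
# if [ -n "$USER_MIDAS_DIR" ] && ! is_abs_path "$USER_MIDAS_DIR"; then
#     echo "Error: directory must be an absolute path starting with '/'"
#     exit 1
# fi
```

Keeping the comparison in one place means each prompt only names its variable once.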

|

|

3189

|

10 Jan 2026 |

Marius Koeppel | Forum | MIDAS installation | Dear Stefan,

That’s a great idea. For a private home automation project using a Raspberry Pi Zero, I used the following setup:

https://github.com/makoeppel/midasHome/

This server has been running for about a year now and reports the temperature in my home. Looking at your script, I think we are conceptually doing the same thing.

I see three parts I would do slightly differently:

1. I would create an .env file to hold the variables:

export PATH="$HOME/midas/bin:$PATH"

export MIDASSYS="$HOME/midas"

export MIDAS_DIR="$HOME/online"

export MIDAS_EXPT_NAME="Online"

2. For odbedit -c "load midas_setup.odb" > /dev/null I would consider making this a bit more explicit (using odbedit) so users can change the configuration if needed, possibly by introducing a .conf file.

3. In my project, I used the MIDAS Python bindings, which are currently missing in your script:

export PYTHONPATH=$PYTHONPATH:$MIDASSYS/python

I also have one additional comment regarding Docker. I think it would make sense to support a Docker image for MIDAS. This would give non-expert users an even simpler setup. I created a related project some time ago:

https://github.com/makoeppel/midasDocker

I'd be happy to help with this part as well.

Best regards,

Marius |

|

3188

|

09 Jan 2026 |

Stefan Ritt | Forum | MIDAS installation | Since we now have many RaspberryPi based control systems running at our lab with midas, I want to streamline the midas installation, such that a non-expert can install it on these devices.

First, midas has to be cloned under "midas" in the user's home directory with

git clone https://bitbucket.org/tmidas/midas.git --recurse-submodules

For simplicity, this puts midas right into /home/<user>/midas, and not into any "packages" subdirectory

which I believe is not necessary.

Then I wrote a setup script midas/midas_setup.sh which does the following:

- Add midas environment variables to .bashrc / .zshenv depending on the shell being used

- Compile and install midas to midas/bin

- Load an initial ODB which allows insecure http access to port 8081

- Install mhttpd as a system service and start it via systemctl

Since I'm not a linux system expert, the current file might be a bit clumsy. I know that automatic shell detection can be made much more elaborate, but I wanted a script which can easily be understood even by non-experts and adapted slightly if needed.

If you know about shell scripts and linux administration, please have a quick look at the attached script

and give me any feedback.

Stefan |

| Attachment 1: midas_setup.sh

|

#!/bin/sh

#

# Change environment

#

f="$HOME/.bashrc"

[ -f "$HOME/.zshenv" ] && f="$HOME/.zshenv"

grep -q '>>> MIDAS >>>' "$f" 2>/dev/null || cat >>"$f" <<'EOF'

# >>> MIDAS >>>

export PATH="$HOME/midas/bin:$PATH"

export MIDASSYS="$HOME/midas"

export MIDAS_DIR="$HOME/online"

export MIDAS_EXPT_NAME="Online"

# <<< MIDAS <<<

EOF

. "$f"

printf '\033[1;33m★\033[0m %s\n' "Environment variables written to $f"

#

# Compile MIDAS

#

printf '\033[1;33m★\033[0m %s\n' "Compiling and installing MIDAS"

mkdir -p "$HOME/midas/build"

cd "$HOME/midas/build"

cmake ..

make install

cd "$HOME/midas"

#

# Load initial ODB, opens port 8081 for mhttpd

#

printf '\033[1;33m★\033[0m %s\n' "Loading initial ODB"

mkdir -p "$HOME/online"

odbedit -c "load midas_setup.odb" > /dev/null

#

# Installing mhttpd service

#

printf '\033[1;33m★\033[0m %s\n' "Installing mhttpd service"

sudo cp mhttpd.service /etc/systemd/system/

sudo systemctl daemon-reload

sudo systemctl enable mhttpd

sudo systemctl start mhttpd

printf '\033[1;33m★\033[0m %s\n' "Finished MIDAS setup"

|

|

3187

|

04 Jan 2026 |

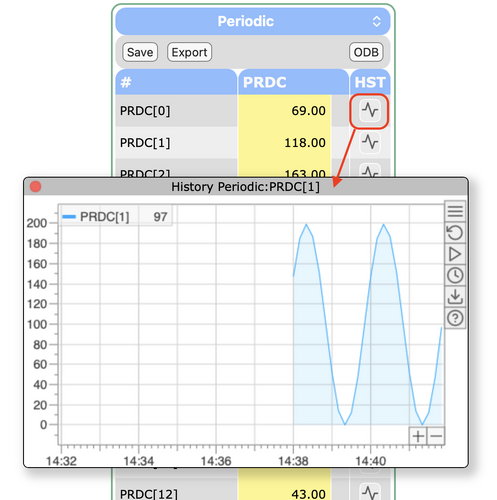

Stefan Ritt | Info | Ad-hoc history plots of slow control equipment | After popular demand and during some quiet holidays I implemented ad-hoc history plots. To enable this for a certain equipment, put

/Equipment/<name>/Settings/History buttons = true

into the ODB. You will then see a graph button after each variable. Pressing this button reveals the history

for this variable, see attachment.

Stefan |

| Attachment 1: Screenshot_2026-01-04_at_14.41.55.png

|

|

|

3186

|

17 Dec 2025 |

Derek Fujimoto | Info | mplot updates | Hello everyone,

Stefan and I have made a few updates to mplot to clean up some of the code and make it more usable directly from Javascript. With one exception this does not change the html interface. The changes described below are up to commit cd9f85c.

Breaking Changes:

- The idea is to have a "graph" be the overarching figure object, whereas the "plot" is the line or points associated with a single dataset.

- Some internal variable names have been changed to reflect this while minimizing breaking changes

- defaultParam renamed defaultGraphParam.

- There is no longer an initialized defaultParam.plot[0], these defaults are now defaultPlotParam which is a separate global variable

- MPlotGraph constructor signature MPlotGraph(divElement, param) changed to MPlotGraph(divElement, graphParam)

- HTML key data-bgcolor changed to data-zero-color as the former was misleading

New Features

- New addPlot() function.

- While the functionality of setData is preserved you can now use addPlot(plotParam) to add a new plot to the graph with minimal copying of the old defaultParam.plot[0]

- Minimal example, from plot_example.html: given some div container with id "P6":

let d = document.getElementById("P6"); // get div

d.mpg = new MPlotGraph(d, { title: { text: "Generated" }}); // make graph

d.mpg.addPlot( { xData: [0, 1, 2, 3, 4], yData: [10, 12, 12, 14, 11] } ); // add plot to the graph

- modifyPlot() and deletePlot() still to come

- New line styles: none, solid, dashed, dotted

- Barplot-style category plots

|

|