| ID |

Date |

Author |

Topic |

Subject |

|

3144

|

26 Nov 2025 |

Stefan Ritt | Info | switch midas to c++17 |

I switched from

target_compile_features(<target> PUBLIC cxx_std_17)

to

set(CMAKE_CXX_STANDARD 17)

set(CMAKE_CXX_STANDARD_REQUIRED ON)

set(CMAKE_CXX_EXTENSIONS OFF) # optional: disables GNU extensions

This is now global in the CMakeLists.txt, so we only have to deal with one location if we want to change it. It also turns off the GNU extensions. On my Mac I now get a clean

-std=c++17

Please everybody test on your side. Change is committed.

Stefan |
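For anyone testing on their side, a minimal sketch of a check that can be compiled with the same flags as MIDAS to see which mode the compiler actually ended up in. It assumes GCC or Clang, which to my knowledge define `__STRICT_ANSI__` for `-std=c++17` but not for `-std=gnu++17`; the function names are made up for this example and are not part of MIDAS.

```cpp
#include <string>

// Value of __cplusplus as seen by this translation unit (201703L for C++17).
constexpr long cxx_standard_value() { return __cplusplus; }

// Assumption: GCC and Clang define __STRICT_ANSI__ only when a strict
// -std=c++NN mode is selected, i.e. when GNU extensions are disabled.
constexpr bool gnu_extensions_enabled() {
#ifdef __STRICT_ANSI__
    return false;
#else
    return true;
#endif
}

// Builds a label that resembles the corresponding -std= option.
inline std::string describe_mode() {
    const long v = cxx_standard_value();
    const char* year = v >= 202002L ? "20 or newer"
                     : v >= 201703L ? "17"
                     : v >= 201402L ? "14"
                     : v >= 201103L ? "11"
                                    : "98";
    return std::string(gnu_extensions_enabled() ? "gnu++" : "c++") + year;
}
```

Printing `describe_mode()` from a small test program built through the MIDAS CMake files should show whether the global setting reached that target.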

|

3143

|

25 Nov 2025 |

Konstantin Olchanski | Suggestion | Improve process for adding new variables that can be shown in history plots |

> One aspect of the MIDAS history plotting I find frustrating is the sequence for adding a new history

> variable and then plotting them. ...

this has been a problem in MIDAS for a very long time, we have tried to fix/streamline/improve it many times

and obviously failed. many times.

this is what must happen when adding a new history variable:

1) new /eq/xxx/variables/vvv entry must show up in ODB

1a) add the code for the new data to the frontend

1b) start the frontend

1c) if new variable is added in the frontend init() method, it will be created in ODB, done.

1d) if new variable is added by the event readout code (i.e. via MIDAS event data bank automatically

written to ODB by RO_ODB flags), then we need to start a run.

1e) if this is not periodic event, but beam event or laser event or some other triggered event, we must

also turn on the beam, turn on the laser, etc.

1z) observe that ODB entry exists

3) mlogger must discover this new ODB entry:

3a) mlogger used to rescan ODB each time something in ODB changes, this code was removed

3b) mlogger used to rescan ODB each time a new run is started, this code was removed

3c) mlogger rescans ODB each time it is restarted, this still works.

so the sequence is like this: modify, restart frontend, start a run, stop the run, observe odb entry is

created, restart mlogger, observe new mhf files are created in the history directory.

4) mhttpd must discover that a new mhf file now exists, read its header to discover history event and

variable names and make them available to the history panel editor.

it is not clear to me that this part currently works:

4a) mhttpd caches the history event list and will not see new variables unless this cache is updated.

4b) when web history panel editor is opened, it is supposed to tell mhttpd to update the cache. I am

pretty sure it worked when I wrote this code...

4c) but obviously it does not work now.

restarting mhttpd obviously makes it load the history data anew, but there is no button to make it happen

on the MIDAS web pages.

so it sounds like I have to sit down and at least retest this whole scheme to see that it works at least

in some way.

then try to improve it:

a) the frontend dance in (1) is unavoidable

b) mlogger must be restarted, I think Stefan and myself agree on this. In theory we could add a web page

button to call an mlogger RPC and have it reload the history. but this button already exists, it's called

"restart mlogger".

c) newly created history event should automatically show up in the history panel editor without any

additional user action

d) document the two intermediate debugging steps:

d1) check that the new variable was created in ODB

d2) check that mlogger created (and writes to) the new history file

this is how I see it and I am open to suggestion, changes, improvements, etc.

K.O. |

|

3142

|

25 Nov 2025 |

Konstantin Olchanski | Suggestion | manalyzer root output file with custom filename including run number |

Hi, Jonas, thank you for reminding me about this. I hope to work on manalyzer in the next few weeks and I will review the ROOT output file name scheme.

K.O.

> Hi all,

>

> Could you please get back to me about whether something like my earlier suggestion might be considered, or if I should set up some workaround to rename files at EOR for our experiments?

>

> https://daq00.triumf.ca/elog-midas/Midas/3042 :

> -----------------------------------------------

> > Hi all,

> >

> > Would it be possible to extend manalyzer to support custom .root file names that include the run number?

> >

> > As far as I understand, the current behavior is as follows:

> > The default filename is ./root_output_files/output%05d.root , which can be customized by the following two command line arguments.

> >

> > -Doutputdirectory: Specify output root file directory

> > -Ooutputfile.root: Specify output root file filename

> >

> > If an output file name is specified with -O, -D is ignored, so the full path should be provided to -O.

> >

> > I am aiming to write files where the filename contains sufficient information to be unique (e.g., experiment, year, and run number). However, if I specify it with -O, this would require restarting manalyzer after every run; a scenario that I would like to avoid if possible.

> >

> > Please find a suggestion of how manalyzer could be extended to introduce this functionality through an additional command line argument at

> > https://bitbucket.org/krieger_j/manalyzer/commits/24f25bc8fe3f066ac1dc576349eabf04d174deec

> >

> > Above code would allow the following call syntax: ' ./manalyzer.exe -O/data/experiment1_%06d.root --OutputNumbered '

> > But note that as is, it would fail if a user specifies an incompatible format such as -Ooutput%s.root .

> >

> > So a safer, but less flexible option might be to instead have the user provide only a prefix, and then attach %05d.root in the code.

> >

> > Thank you for considering these suggestions! |
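The quoted concern about incompatible formats such as -Ooutput%s.root could be addressed by validating the user-supplied pattern before it reaches a printf-style call. A minimal sketch, assuming the pattern may contain exactly one `%d` conversion with an optional numeric width; the function names are hypothetical and this is not the code from the linked commit or from manalyzer.

```cpp
#include <cstddef>
#include <cstdio>
#include <string>

// True if fmt contains exactly one conversion, of the form %d or %<digits>d
// (for example %05d). "%%" is accepted as a literal percent sign.
bool is_single_int_format(const std::string& fmt) {
    int conversions = 0;
    for (std::size_t i = 0; i < fmt.size(); ++i) {
        if (fmt[i] != '%') continue;
        ++i;
        if (i < fmt.size() && fmt[i] == '%') continue;  // literal "%%"
        while (i < fmt.size() && fmt[i] >= '0' && fmt[i] <= '9') ++i;  // width / zero padding
        if (i >= fmt.size() || fmt[i] != 'd') return false;  // anything but %d is rejected
        ++conversions;
    }
    return conversions == 1;
}

// Expands the run number into fmt; returns an empty string if fmt is
// rejected or the result does not fit into the buffer.
std::string make_output_name(const std::string& fmt, int run) {
    if (!is_single_int_format(fmt)) return std::string();
    char buf[1024];
    const int n = std::snprintf(buf, sizeof(buf), fmt.c_str(), run);
    if (n < 0 || static_cast<std::size_t>(n) >= sizeof(buf)) return std::string();
    return std::string(buf);
}
```

With this check, `/data/experiment1_%06d.root` is accepted while `output%s.root` is rejected instead of being handed to `snprintf()`.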

|

3141

|

25 Nov 2025 |

Konstantin Olchanski | Info | switch midas to c++17 |

> target_compile_features(<target> PUBLIC cxx_std_17)

> which correctly causes a

> c++ -std=gnu++17 ...

I think this is set in a couple of places, yet I do not see any -std=xxx flag passed to the compiler.

(and I am not keen on spending a full day fighting cmake)

(btw, -std=c++17 and -std=gnu++17 are not the same thing, I am not sure how well GNU extensions are supported on

macos)

K.O. |

|

3140

|

25 Nov 2025 |

Konstantin Olchanski | Info | switch midas to c++17 |

>

> > I notice the cmake does not actually pass "-std=c++17" to the c++ compiler, and on U-20, it is likely

> > the default c++14 is used. cmake always does the wrong thing and this will need to be fixed later.

>

> set(CMAKE_CXX_STANDARD 17)

> set(CMAKE_CXX_STANDARD_REQUIRED ON)

>

We used to have this, it is not there now.

>

> Get it to do the right thing?

>

Unlikely, as Stefan reported, asking for C++17 yields -std=gnu++17 which is close enough, but not the same

thing.

For now, it does not matter, U-22 and U-24 are c++17 by default, if somebody accidentally commits c++20

code, builds will fail and somebody will catch it and complain, plus the weekly bitbucket build will bomb out.

On U-20, default is c++14 and builds will start bombing out as soon as we commit some c++17 code.

el7 builds have not worked for some time now (a bizarre mysterious error)

el8, el9, el10 likely same situation as Ubuntu.

macos, not sure.

K.O. |

|

3139

|

25 Nov 2025 |

Konstantin Olchanski | Bug Report | Cannot edit values in a subtree containing only a single array of BOOLs using the ODB web interface |

> Thanks --- it looks like this commit resolves the issue for us ...

> Thanks to Ben Smith for pointing us at exactly the right commit

I would like to take the opportunity to encourage all to report bug fixes like this one to this mailing list.

This looks like a serious bug, many midas users would like to know when it was introduced, when found, when fixed

and who takes the credit.

K.O. |

|

3138

|

25 Nov 2025 |

Konstantin Olchanski | Bug Fix | ODB update, branch feature/db_delete_key merged into develop |

> Thanks for the fixes, which I all approve.

>

> There is still a "follow_links" in midas_c_compat.h line 70 for Python. Probably Ben has to look into that. Also

> client.py has it.

Correct, Ben will look at this on the python side.

And I will be updating mvodb soon and fix it there.

K.O. |

|

3137

|

25 Nov 2025 |

Konstantin Olchanski | Bug Fix | fixed db_find_keys() |

Function db_find_keys() added by person unnamed in April 2020 never worked correctly, it is now fixed,

repaired, also unsafe strcpy() replaced by mstrlcpy().

This function is used by msequencer ODBSet function and by odbedit "set" command.

In most cases it returned DB_NO_KEY; only two of the four use cases below actually worked:

set runinfo/state 1 <--- no match pattern - works

set run*/state 1 <--- match multiple subdirectories - works

set runinfo/stat* 1 <--- bombs out with DB_NO_KEY

set run*/stat* 1 <--- bombs out with DB_NO_KEY

All four use cases now work.

commit b5b151c9bc174ca5fd71561f61b4288c40924a1a

K.O. |
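To make the four use cases concrete, here is a small stand-alone sketch of per-level wildcard matching on slash-separated paths. It is not the db_find_keys() implementation: it works on a plain list of strings rather than the ODB, matching is case-sensitive, `*` is the only wildcard, and all names are hypothetical.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Matches one path element against a pattern in which '*' stands for any
// run of characters (including none).
bool segment_matches(const std::string& pat, const std::string& text) {
    std::size_t p = 0, t = 0, star = std::string::npos, mark = 0;
    while (t < text.size()) {
        if (p < pat.size() && pat[p] == '*') { star = p++; mark = t; }
        else if (p < pat.size() && pat[p] == text[t]) { ++p; ++t; }
        else if (star != std::string::npos) { p = star + 1; t = ++mark; }  // let '*' absorb one more char
        else return false;
    }
    while (p < pat.size() && pat[p] == '*') ++p;
    return p == pat.size();
}

// Splits "a/b/c" into {"a", "b", "c"}, ignoring empty elements.
std::vector<std::string> split_path(const std::string& path) {
    std::vector<std::string> parts;
    std::string cur;
    for (char c : path) {
        if (c == '/') { if (!cur.empty()) parts.push_back(cur); cur.clear(); }
        else cur += c;
    }
    if (!cur.empty()) parts.push_back(cur);
    return parts;
}

// Returns every path whose elements match the pattern level by level;
// a '*' never matches across a '/'.
std::vector<std::string> find_matching(const std::vector<std::string>& paths,
                                       const std::string& pattern) {
    const std::vector<std::string> pp = split_path(pattern);
    std::vector<std::string> hits;
    for (const auto& path : paths) {
        const std::vector<std::string> tp = split_path(path);
        if (tp.size() != pp.size()) continue;
        bool ok = true;
        for (std::size_t i = 0; ok && i < pp.size(); ++i) ok = segment_matches(pp[i], tp[i]);
        if (ok) hits.push_back(path);
    }
    return hits;
}
```

In this sketch a wildcard in the last path element is handled the same way as one in a directory name, which is the case that used to fail.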

|

3136

|

25 Nov 2025 |

Stefan Ritt | Bug Fix | ODB update, branch feature/db_delete_key merged into develop |

Thanks for the fixes, which I all approve.

There is still a "follow_links" in midas_c_compat.h line 70 for Python. Probably Ben has to look into that. Also

client.py has it.

Stefan |

|

3135

|

24 Nov 2025 |

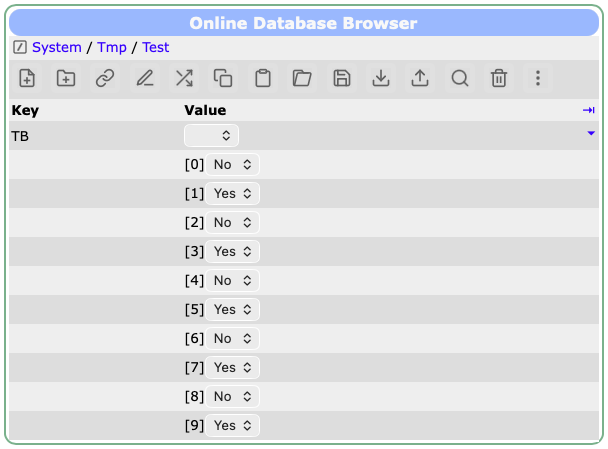

Scott Oser | Bug Report | Cannot edit values in a subtree containing only a single array of BOOLs using the ODB web interface |

| Stefan Ritt wrote: |

|

Can you please update to the latest develop version of midas, and clear your browser cache so that the updated JavaScript midas library is loaded. Should be fixed by now. See attached screen shot where I changed every second value via the ODB editor.

Stefan

|

Thanks --- it looks like this commit (which we just missed by four days when we last updated MIDAS) resolves the issue for us:

https://bitbucket.org/tmidas/midas/commits/6af72c1d218798064a7762bae6e65ad3407de9d1

Thanks to Ben Smith for pointing us at exactly the right commit. |

|

3134

|

24 Nov 2025 |

Stefan Ritt | Bug Report | Cannot edit values in a subtree containing only a single array of BOOLs using the ODB web interface |

Can you please update to the latest develop version of midas, and clear your browser cache so that the updated JavaScript midas library is loaded. Should be fixed by now. See attached screen shot where I changed every second value via the ODB editor.

Stefan

|

| Attachment 1: Screenshot_2025-11-24_at_15.32.12.png

|

|

|

3133

|

24 Nov 2025 |

Stefan Ritt | Info | switch midas to c++17 |

> > > Following discussions at the MIDAS workshop, we propose to move MIDAS from c++11 to c++17.

> > We shall move forward with this change.

>

> It is done. Last c++11 MIDAS is midas-2025-11-a (plus the db_delete_key merge).

>

> I notice the cmake does not actually pass "-std=c++17" to the c++ compiler, and on U-20, it is likely

> the default c++14 is used. cmake always does the wrong thing and this will need to be fixed later.

>

> K.O.

We should either use

set(CMAKE_CXX_STANDARD 17)

or

target_compile_features(<target> PUBLIC cxx_std_17)

but not mix both. We have already the second one for the midas library, like

target_compile_features(objlib PUBLIC cxx_std_17)

which correctly causes a

c++ -std=gnu++17 ...

(at least in my case).

If the compiler flag is missing for a target, we should add the target_compile_features line above for that target.

Stefan |

|

3132

|

24 Nov 2025 |

Stefan Ritt | Forum | Control external process from inside MIDAS |

Dear all,

Stefan wants to run an external EPICS driver process as a detached process and somehow "glue" it to midas to control it. Actually a similar requirement led to the development of MIDAS in the '90s. We had too many configuration files lying around, too many processes to control and to make interact with each other, and so on. With the development of MIDAS I wanted to integrate all that. There is one ODB to control and parametrize everything, one central process handling to see if processes are alive, raise an alarm if they die, automatically restart them if necessary and so on. Doing this externally again is orthogonal to the original design concept of MIDAS and will cause many problems. I therefore strongly recommend not to juggle around with systemctl and syslog, but to make everything a MIDAS process. It's simply a "cm_connect_experiment()" and "cm_disconnect_experiment()" in the end. Then you set

/programs/required = y

and

/programs/start command = <cmd>

You can set the "alarm class" to raise an alarm if the program crashes, and you will see all messages if you use "cm_msg()" inside the program rather than "printf()". Injecting a separate .log file into the system will show things on the message page, but these messages do not go through the SYSMSG buffer, and cannot be received by other programs. Maybe you noticed that mhttpd on the status page always shows the last message it received, which can be very helpful. To see if a program is running, you only need a cm_exist() call, which also exists for custom web pages.

Rather than investing time to re-invent the wheel here, better try to modify your EPICS driver process to become a midas process.

If you have an external process which you absolutely cannot modify, I would rather write a wrapper midas program to start the external process, intercept its output via a pipe, and put its output properly into the midas message system with cm_msg(). In the main loop of your wrapper program you check the external process via whatever you want, and if it dies, trigger an alarm or restart it from your wrapper program. You can then set an alarm on your wrapper program to make sure this one is always running.

Best regards,

StefanR |
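The pipe part of such a wrapper can be sketched without any MIDAS dependency. The example below assumes a POSIX system with `popen()`; `run_and_forward` and the `sink` callback are hypothetical names. In a real wrapper the sink would hand each line to cm_msg(), and the program would also connect to the experiment with cm_connect_experiment(), which is left out here.

```cpp
#include <cstdio>
#include <functional>
#include <string>

// Starts `command` through the shell, passes every line of its standard
// output to `sink`, and returns the raw status from pclose()
// (-1 if the process could not be started).
// Note: only stdout is captured; append "2>&1" to the command to include
// stderr. Lines longer than the buffer are delivered in several pieces.
int run_and_forward(const std::string& command,
                    const std::function<void(const std::string&)>& sink) {
    FILE* pipe = popen(command.c_str(), "r");
    if (!pipe) return -1;
    char buf[4096];
    while (std::fgets(buf, sizeof(buf), pipe)) {
        std::string line(buf);
        // Strip the trailing line ending before forwarding.
        while (!line.empty() && (line.back() == '\n' || line.back() == '\r')) line.pop_back();
        sink(line);  // in a MIDAS wrapper: forward the line via cm_msg()
    }
    return pclose(pipe);
}
```

The call returns when the external process exits, which is the point where the wrapper would decide whether to trigger an alarm or start the process again.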

|

3131

|

21 Nov 2025 |

Konstantin Olchanski | Info | cppcheck |

> (rules for running cppcheck have gone missing, I hope I find them).

found them. I built cppcheck from sources.

~/git/cppcheck/build/bin/cppcheck src/midas.cxx

~/git/cppcheck/build/bin/cppcheck manalyzer/manalyzer.cxx manalyzer/mjsroot.cxx

~/git/cppcheck/build/bin/cppcheck src/tmfe.cxx

~/git/cppcheck/build/bin/cppcheck midasio/*.cxx

~/git/cppcheck/build/bin/cppcheck mjson/*.cxx

K.O. |

|

3130

|

21 Nov 2025 |

Scott Oser | Bug Report | Cannot edit values in a subtree containing only a single array of BOOLs using the ODB web interface |

I think I've found a bug in MIDAS ...

Description: If you have an ODB subtree that contains only an array of BOOLs, you cannot edit them from the ODB webpage, although you can change them using odbedit (and probably from code as well).

(If you use the dropdown menu to change any value from No to Yes, it just flips back to No immediately.)

But if you create a new key in that directory (doesn't seem to matter what), then you can edit the BOOLs from webpage. Delete that key, and once again you can't edit the BOOLs. |

|

3129

|

20 Nov 2025 |

Stefan Mathis | Forum | Control external process from inside MIDAS |

Hi,

unfortunately I don't have a documentation link to the feature, I just know that it works on my machine ;-) The general idea is that you place a custom whatever.log file in Logger/Data Dir (where midas.log is stored). Then, in the Messages page, there will be a "midas" tab and a "whatever" tab - the latter showing the content of whatever.log. One problem here is that timestamping does not work automatically - you have to prepend every line with the same Hours:Minutes:Seconds.Milliseconds Year/Month/Day format that midas.log is using.

So you have a custom Programs page which does systemctl status on your systemd? Does the status then transfer over automatically to the Status page? Is there an example how to write such a custom page?

Best regards

Stefan

> Hi,

>

> > Nick. Regarding the messages: Zaher showed me that it is possible to simply place

> > a custom log file generated by the systemd next to midas.log - then it shows up

> > next to the "midas" tab in "Messages".

>

> Interesting. I'm not familiar with that feature. Do you have link to documentation?

>

> > One follow-up question: Is it possible to use the systemctl status for the

> > "Running on host" column? Or does this even happen automatically?

>

> On the programs page that column is populated by the odb key /System/Clients/<PID>/Host

> so no. However, there is nothing stopping you from writing your own version of

> programs.html to show whatever you want. For example I have a custom programs

> page that includes columns to enable/disable and to reset watchdog alarms.

>

> Cheers,

>

> Nick. |
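The timestamp prefix described above (Hours:Minutes:Seconds.Milliseconds Year/Month/Day) could be produced by a small helper like the following. This is a sketch that assumes zero-padded fields and a single space between time and date, based only on the description in this post; `log_prefix` is a hypothetical name, not a MIDAS function.

```cpp
#include <cstdio>
#include <ctime>
#include <string>

// Formats a broken-down time plus milliseconds as "HH:MM:SS.mmm YYYY/MM/DD".
// Assumption: all fields are zero-padded to a fixed width.
std::string log_prefix(const std::tm& t, int milliseconds) {
    char buf[64];
    std::snprintf(buf, sizeof(buf), "%02d:%02d:%02d.%03d %04d/%02d/%02d",
                  t.tm_hour, t.tm_min, t.tm_sec, milliseconds,
                  t.tm_year + 1900,  // tm_year counts years since 1900
                  t.tm_mon + 1,      // tm_mon is zero-based
                  t.tm_mday);
    return std::string(buf);
}
```

A process writing whatever.log would prepend this prefix, followed by a space, to every line it emits.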

|

3128

|

20 Nov 2025 |

Nick Hastings | Info | switch midas to c++17 |

> I notice the cmake does not actually pass "-std=c++17" to the c++ compiler, and on U-20, it is likely

> the default c++14 is used. cmake always does the wrong thing and this will need to be fixed later.

Does adding

set(CMAKE_CXX_STANDARD 17)

set(CMAKE_CXX_STANDARD_REQUIRED ON)

Get it to do the right thing?

Cheers,

Nick. |

|

3127

|

20 Nov 2025 |

Nick Hastings | Forum | Control external process from inside MIDAS |

Hi,

> Nick. Regarding the messages: Zaher showed me that it is possible to simply place

> a custom log file generated by the systemd next to midas.log - then it shows up

> next to the "midas" tab in "Messages".

Interesting. I'm not familiar with that feature. Do you have link to documentation?

> One follow-up question: Is it possible to use the systemctl status for the

> "Running on host" column? Or does this even happen automatically?

On the programs page that column is populated by the odb key /System/Clients/<PID>/Host

so no. However, there is nothing stopping you from writing your own version of

programs.html to show whatever you want. For example I have a custom programs

page that includes columns to enable/disable and to reset watchdog alarms.

Cheers,

Nick. |

|

3126

|

20 Nov 2025 |

Konstantin Olchanski | Info | removal of ROOT support in mlogger |

> > we should finally bite the bullet and remove ROOT support from MIDAS ...

as discussed, HAVE_ROOT and OBSOLETE were removed from mlogger. rmana and ROOT support in manalyzer remain,

untouched.

last rmlogger is in MIDAS tagged midas-2025-11-a.

K.O. |

|

3125

|

20 Nov 2025 |

Konstantin Olchanski | Info | switch midas to c++17 |

> > Following discussions at the MIDAS workshop, we propose to move MIDAS from c++11 to c++17.

> We shall move forward with this change.

It is done. Last c++11 MIDAS is midas-2025-11-a (plus the db_delete_key merge).

I notice the cmake does not actually pass "-std=c++17" to the c++ compiler, and on U-20, it is likely

the default c++14 is used. cmake always does the wrong thing and this will need to be fixed later.

K.O. |