| ID |

Date |

Author |

Topic |

Subject |

|

1864

|

24 Mar 2020 |

Konstantin Olchanski | Forum | Save data to FTP | >

> Since ILL only provides access via SFTP and everything else is not existent or blocked (not even ssh is possible),

> this is the only thing we can work with by now.

>

Oops. SFTP != FTP.

SFTP uses SSH for data transport, so we cannot do it directly from C++ code in MIDAS. (we could use libssh, etc, but...)

I suggest you use lazylogger with the lazy_dcache script: replace "dccp" with "sftp" and replace "nsls" with an sftp "ls" command.

If you get it working, please consider contributing your lazylogger script to midas. (It does not have to be written in Perl; Python should work equally well.)
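
A minimal Python sketch of that substitution, assuming an OpenSSH `sftp` client with key-based login. The host, directory and file names are placeholders, and the way lazylogger passes arguments to its script is not shown. `sftp -b -` reads its batch commands from standard input, so no interactive shell is needed:

```python
import os
import subprocess

def sftp_batch(local_path, remote_dir):
    """Return sftp batch commands that upload one file and then list it remotely."""
    name = os.path.basename(local_path)
    lines = [
        f"put {local_path} {remote_dir}/{name}",  # takes the place of the dccp copy
        f"ls -l {remote_dir}/{name}",             # takes the place of the nsls check
    ]
    return "\n".join(lines) + "\n"

def sftp_command(user_host):
    """Build the argv for a non-interactive sftp session fed from stdin."""
    return ["sftp", "-b", "-", user_host]

def upload(local_path, remote_dir, user_host):
    """Run the transfer; raises CalledProcessError if sftp reports a failure."""
    subprocess.run(sftp_command(user_host), input=sftp_batch(local_path, remote_dir),
                   text=True, check=True)
```

Paths containing spaces would need quoting inside the batch text; the sketch leaves that out.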

For setting up lazylogger with the script method, I am pretty sure I posted the instructions to the forum (ages ago),

let me know if you cannot find them.

Good luck.

K.O. |

|

1751

|

06 Jan 2020 |

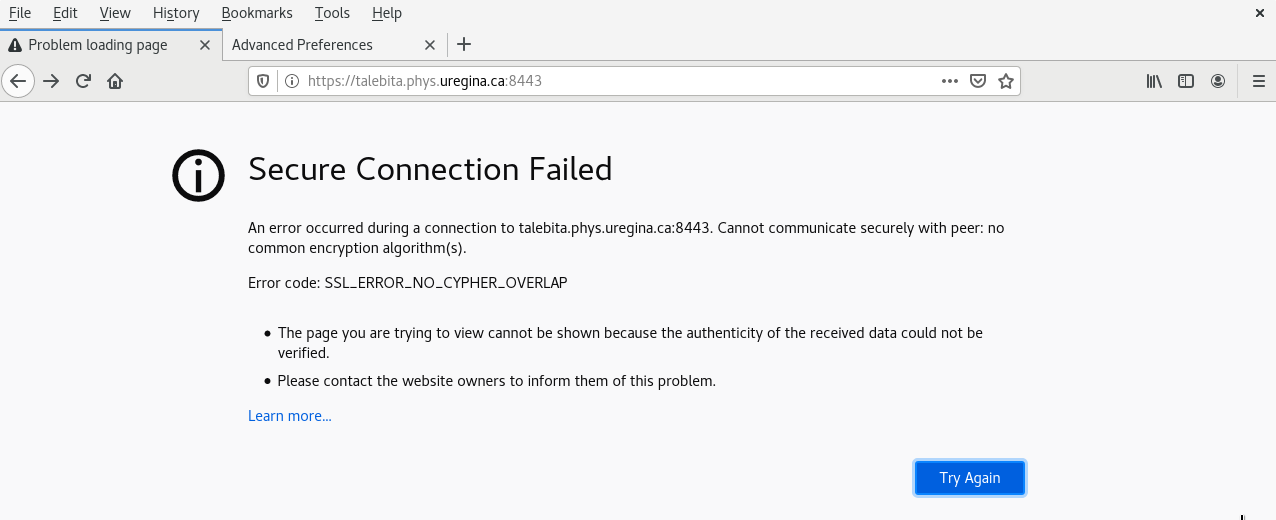

Alireza Talebitaher | Forum | SSL_ERROR_NO_CYPHER_OVERLAP | Hello,

I am quite new to both Linux and MIDAS.

I installed MIDAS on my desktop by following this guide:

https://midas.triumf.ca/MidasWiki/index.php/Quickstart_Linux

In the last step, when I run the "mhttpd" command and try to open the link

https://localhost:8443 (of course, replacing localhost with my host name), it

fails to connect and shows this error: SSL_ERROR_NO_CYPHER_OVERLAP (please see

the attached file, which is a screenshot of the error).

I have tried several ways to solve this problem. In Firefox, I went to Options / Privacy

& Security / Security and unchecked the option "Block dangerous and deceptive

content", but it does not help.

I look forward to your help.

Thanks

Mehran |

| Attachment 1: MIDAS_SSL_ERROR.png

|

|

|

1752

|

06 Jan 2020 |

Konstantin Olchanski | Forum | SSL_ERROR_NO_CYPHER_OVERLAP | > I am quite new in both Linux and MIDAS.

> I have install MIDAS on my desktop by going through this link:

> https://midas.triumf.ca/MidasWiki/index.php/Quickstart_Linux

>

> in the last step when I send "mhttpd" command and try to open the link

> https://localhost:8443 (of course, changing the localhost with my host name), it

> failed to connect and shows this error: SSL_ERROR_NO_CYPHER_OVERLAP (please see

> attached file includes a screenshot of the error).

What Linux? (on most linuxes, run "lsb_release -a")

What version of midas? (run odbedit "ver" command)

What version of firefox? (from the "about firefox" menu)

> I have tried many ways to solve this problem: In Firefox: going to option/privacy

> and security/ security and uncheck the option "Block dangerous and deceptive

> content". but it does not help.

No, you cannot fix it from inside Firefox. The issue is that the overlap of encryption methods

supported by your Firefox and by your OpenSSL library (used by mhttpd) is an empty set.

There is no common language, so to speak, so communication is impossible.

So either you have a very old openssl but very new firefox, or a very new openssl but very old

firefox. Both very old or both very new can talk to each other, difficulties start with greater

difference in age, as new (better) encryption methods are added and old (no-longer-secure)

methods are banished.
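
The empty-overlap condition can be illustrated with a short Python sketch. The two example lists are made up for illustration and are not what Firefox 71 or any particular OpenSSL build actually offers. `local_openssl_ciphers()` only reports what the OpenSSL linked into the Python `ssl` module enables by default, which may differ from the library mhttpd uses:

```python
import ssl

def common_ciphers(server_ciphers, client_ciphers):
    """Return the cipher names both sides support; empty means no handshake is possible."""
    return sorted(set(server_ciphers) & set(client_ciphers))

def local_openssl_ciphers():
    """Names of the ciphers enabled by default in this Python's OpenSSL."""
    ctx = ssl.create_default_context()
    return [c["name"] for c in ctx.get_ciphers()]

# Illustrative lists only: an "old" side and a "new" side with nothing in common.
old_side = ["DES-CBC3-SHA", "RC4-SHA"]
new_side = ["TLS_AES_128_GCM_SHA256", "ECDHE-RSA-AES128-GCM-SHA256"]

if not common_ciphers(old_side, new_side):
    print("no cipher overlap: the TLS handshake cannot succeed")
```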

BTW, for good security we recommend using apache httpd as the https proxy (instead of built-in

https support in mhttpd). (I am not sure what it says in the current documentation). (But apache

httpd will use the same openssl library, so this may not solve your problem. Let's see what

versions of software you are using, per questions above, first).

K.O. |

|

1753

|

07 Jan 2020 |

Alireza Talebitaher | Forum | SSL_ERROR_NO_CYPHER_OVERLAP | Hi Konstantin,

Thanks for your reply,

> What Linux? (on most linuxes, run "lsb_release -a")

> What version of midas? (run odbedit "ver" command)

I am using CentOS 8

> What version of firefox? (from the "about firefox" menu)

Firefox 71.0

Thanks

Mehran

> No you cannot fix it from inside firefox. The issue is that the overlap of encryption methods

> supported by your firefox and by your openssl library (used by mhttpd) is an empty set.

> No common language, so to say, communication is impossible.

>

> So either you have a very old openssl but very new firefox, or a very new openssl but very old

> firefox. Both very old or both very new can talk to each other, difficulties start with greater

> difference in age, as new (better) encryption methods are added and old (no-longer-secure)

> methods are banished.

>

> BTW, for good security we recommend using apache httpd as the https proxy (instead of built-in

> https support in mhttpd). (I am not sure what it says in the current documentation). (But apache

> httpd will use the same openssl library, so this may not solve your problem. Let's see what

> versions of software you are using, per questions above, first).

>

> K.O. |

|

1754

|

07 Jan 2020 |

Konstantin Olchanski | Forum | SSL_ERROR_NO_CYPHER_OVERLAP |

Hi, I have not run midas on Centos-8 yet. Maybe there is a problem with the openssl library there. The Centos-7

instructions for setting up apache httpd proxy are here, with luck they work on centos-8:

https://daq.triumf.ca/DaqWiki/index.php/SLinstall#Configure_HTTPS_server_.28CentOS7.29

K.O.

> Hi Konstantin,

> Thanks for your reply,

>

> > What Linux? (on most linuxes, run "lsb_release -a")

> > What version of midas? (run odbedit "ver" command)

> I am using CentOS 8

>

> > What version of firefox? (from the "about firefox" menu)

> Firefox 71.0

>

> Thanks

> Mehran

>

> > No you cannot fix it from inside firefox. The issue is that the overlap of encryption methods

> > supported by your firefox and by your openssl library (used by mhttpd) is an empty set.

> > No common language, so to say, communication is impossible.

> >

> > So either you have a very old openssl but very new firefox, or a very new openssl but very old

> > firefox. Both very old or both very new can talk to each other, difficulties start with greater

> > difference in age, as new (better) encryption methods are added and old (no-longer-secure)

> > methods are banished.

> >

> > BTW, for good security we recommend using apache httpd as the https proxy (instead of built-in

> > https support in mhttpd). (I am not sure what it says in the current documentation). (But apache

> > httpd will use the same openssl library, so this may not solve your problem. Let's see what

> > versions of software you are using, per questions above, first).

> >

> > K.O. |

|

1755

|

08 Jan 2020 |

Alireza Talebitaher | Forum | SSL_ERROR_NO_CYPHER_OVERLAP | Hi,

As the link suggests, I ran "yum install -y mod_ssl certwatch crypto-utils", but it complains:

No match for argument: certwatch

No match for argument: crypto-utils

You may want to have a look at this link: https://blog.cloudware.bg/en/whats-new-in-centos-linux-8/

What's gone?

In with the new, out with the old. CentOS 8 also says goodbye to some features. The OS removes several security functionalities. Among them is the Clevis HTTP pin, Coolkey and crypto-utils.

Cent OS 8 comes with securetty disabled by default. The configuration file is no longer included. You can add it back, but you will have to do it yourself. Another change is that shadow-utils no longer allow all-numeric user and group names.

Thanks

Mehran

> Hi, I have not run midas on Centos-8 yet. Maybe there is a problem with the openssl library there. The Centos-7

> instructions for setting up apache httpd proxy are here, with luck they work on centos-8:

> https://daq.triumf.ca/DaqWiki/index.php/SLinstall#Configure_HTTPS_server_.28CentOS7.29

>

> K.O.

> |

|

1756

|

12 Jan 2020 |

Konstantin Olchanski | Forum | SSL_ERROR_NO_CYPHER_OVERLAP | > I am using CentOS 8 [and]

> Firefox 71.0

I now have a centos-8 machine, I successfully built midas and I confirm that there is a problem.

But I get different errors from you:

- google chrome - does not connect at all (without any useful error message: "This site can't be reached. The

connection was reset.")

- firefox complains about the self-signed certificate, but connects ok, I see the midas status page and it works. "page

info" reports connection is TLS 1.3, encryption TLS_AES_128_GCM_SHA256. However, the function "view certificate"

does not work (without any useful error message).

I tried to run the SSLlabs tool to get some more information from mhttpd, but it does not want to run against mhttpd on

port 8443... I do have a port redirect program somewhere... need to find it...

K.O. |

|

1757

|

12 Jan 2020 |

Konstantin Olchanski | Forum | SSL_ERROR_NO_CYPHER_OVERLAP | > > The Centos-7 instructions for setting up apache httpd proxy are here, with luck they work on centos-8:

> > https://daq.triumf.ca/DaqWiki/index.php/SLinstall#Configure_HTTPS_server_.28CentOS7.29

I now have a centos-8 computer, I followed my instructions and they generally worked.

There are a number of problems with the certbot package that prevent me from writing coherent, production-quality instructions for centos-8.

But at the end I was successful, httpd runs, gets "A+" rating from SSLlabs, forwards the requests to mhttpd, I can access the midas status page etc.

With luck the certbot packages for centos-8 will be sorted out soon (the apache plugin seems to be missing, this causes the automatic

certificate renewal to not work) and I will update my instructions to include centos-8.

Until then, I recommend that people continue to use centos-7 or the current Ubuntu LTS release.

K.O. |

|

574

|

07 May 2009 |

Konstantin Olchanski | Info | SQL history documentation | Writing midas history data to SQL (mysql) is now documented in the midas doxygen files

(make dox; firefox doxfiles/html/index.html). The corresponding logger and mhttpd code has been

committed for some time now and it is used in production environment by the t2k/nd280 slow controls

daq system at TRIUMF.

svn rev 4487

K.O. |

|

662

|

11 Oct 2009 |

Konstantin Olchanski | Info | SQL history documentation | > Documentation for writing midas history data to SQL (mysql) is now documented in midas doxygen files

> (make dox; firefox doxfiles/html/index.html). The corresponding logger and mhttpd code has been

> committed for some time now and it is used in production environment by the t2k/nd280 slow controls

> daq system at TRIUMF.

> svn rev 4487

An updated version of the SQL history code is now committed to midas svn.

The new code is in history_sql.cxx. It implements a C++ interface to the MIDAS history (history.h),

and improves on the old code history_odbc.cxx by adding:

- an index table for remembering MIDAS names of SQL tables and columns (our midas users like to use funny characters in history

names that are not permitted in SQL table and column names),

- caching of database schema (event names, etc) with a noticeable speedup of mhttpd (there is a new button on the history panel editor,

"clear history cache", to make mhttpd reload the database schema).

The updated documentation for using SQL history is committed to midas svn doxfiles/internal.dox (svn up; make dox; firefox

doxfiles/html/index.html), or see my copy on the web at

http://ladd00.triumf.ca/~olchansk/midas/Internal.html#History_sql_internal

svn rev 4595

K.O. |

|

364

|

02 Apr 2007 |

Exaos Lee | Bug Fix | SIGABT of "mlogger" and possible fix | Version: svn 3658

Code: mlogger.c

Problem: After execution of "mlogger", a "SIGABRT" appears.

Compiler: GCC 4.1.2, under Ubuntu Linux 7.04 AMD64

Possible fix:

Change the code in "mlogger.c" from

/* append argument "-b" for batch mode without graphics */

rargv[rargc] = (char *) malloc(3);

rargv[rargc++] = "-b";

TApplication theApp("mlogger", &rargc, rargv);

/* free argument memory */

free(rargv[0]);

free(rargv[1]);

free(rargv);

to

/* append argument "-b" for batch mode without graphics */

rargv[rargc] = (char *) malloc(3);

rargv[rargc++] = "-b";

TApplication theApp("mlogger", &rargc, rargv);

/* free argument memory */

free(rargv[0]);

/*free(rargv[1]);*/

free(rargv);

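I think the problem might be 'rargv[rargc++]="-b"': it overwrites the pointer returned by malloc() with the address of a string literal, so the later free(rargv[1]) is called on memory that was never allocated. You may try the following test program:

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

int main(int argc, char** argv)

{

    char* pp;

    pp = (char *)malloc(sizeof(char)*3);

    /* pp = "-b"; */   /* this variant makes free(pp) below abort */

    strcpy(pp,"-b");   /* copies the text into the allocated buffer instead */

    printf("PP=%s\n",pp);

    free(pp);

    return 0;

}

If "pp = \"-b\"" is used instead of strcpy(), a SIGABRT appears. |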

|

366

|

03 Apr 2007 |

Stefan Ritt | Bug Fix | SIGABT of "mlogger" and possible fix |

| Exaos Lee wrote: | Version: svn 3658

Code: mlogger.c

Problem: After executation of "mlogger", a "SIGABT" appears.

Compiler: GCC 4.1.2, under Ubuntu Linux 7.04 AMD64

Possible fix:

Change the code in "mlogger.c" from

/* append argument "-b" for batch mode without graphics */

rargv[rargc] = (char *) malloc(3);

rargv[rargc++] = "-b";

TApplication theApp("mlogger", &rargc, rargv);

/* free argument memory */

free(rargv[0]);

free(rargv[1]);

free(rargv);

to

/* append argument "-b" for batch mode without graphics */

rargv[rargc] = (char *) malloc(3);

rargv[rargc++] = "-b";

TApplication theApp("mlogger", &rargc, rargv);

/* free argument memory */

free(rargv[0]);

/*free(rargv[1]);*/

free(rargv);

I think, it might be the problem of 'rargv[rargc++]="-b"'. |

Actually the line

rargv[rargc] = (char *) malloc(3);

also needs to be removed, since rargv[1] points to "-b", which is static memory and does not need any allocation. I committed the change. |

|

2387

|

30 Apr 2022 |

Giovanni Mazzitelli | Forum | S3 Object Storage | Dear all,

We are storing raw MIDAS files in S3 object storage, but MIDAS files are not

optimised for readout from this kind of storage. Is there any workaround, such as an

evolution of the midas raw output format or, beyond a simulated POSIX filesystem, a midas

python library optimised to stream data from S3? (It is not really clear to me whether this

is possible.) |

|

2388

|

30 Apr 2022 |

Konstantin Olchanski | Forum | S3 Object Storage | > We are storing raw MIDAS files to S3 Object Storage, but MIDAS file are not

> optimised for readout from such kind of storage. There is any work around on

> evolution of midas raw output or, beyond simulated posix fs, to develop midas

> python library optimised to stream data from S3 (is not really clear to me if this

> is possible).

We have plans for adding S3 object storage support to lazylogger, but have not gotten

around to it yet.

We do not plan to add this in mlogger. mlogger works well for writing data to locally-

attached storage (local ext4, XFS, ZFS) but always runs into problems with timeouts and

delays when writing to anything network-attached (even writing to NFS).

I envision that each midas raw data file (mid.gz or mid.lz4 or mid.bz2) will

be stored as an S3 object and there will be some kind of directory object

to map object ids to run and subrun numbers.
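
One way such a directory could look, as a Python sketch only. The key layout (`run#####sub#####.mid.<ext>` under a prefix) is a hypothetical naming scheme chosen for illustration; it is not an existing lazylogger feature:

```python
import re

# Hypothetical key layout: <prefix>/run00060sub00001.mid.lz4
KEY_RE = re.compile(r"run(\d{5})sub(\d{5})\.mid\.(gz|lz4|bz2)$")

def object_key(prefix, run, subrun, ext="lz4"):
    """Build the object key for one subrun file."""
    return f"{prefix}/run{run:05d}sub{subrun:05d}.mid.{ext}"

def build_index(keys):
    """Map (run, subrun) to object key, skipping keys that do not match the layout."""
    index = {}
    for key in keys:
        match = KEY_RE.search(key)
        if match:
            index[(int(match.group(1)), int(match.group(2)))] = key
    return index
```

The resulting dictionary could itself be stored as a small JSON object next to the data, so a reader can find all subruns of a run without listing the whole bucket.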

Choice of best file size is open; normally we use subruns to limit file size to 1-2

Gbytes. If cloud storage prefers some other object size, we can easily go up to 10

Gbytes and down to "a few megabytes" (ODB dumps will have to be turned off for this).

Other than that, in your view, what else is needed to optimize midas files for storage

in the Amazon S3 cloud?

P.S. For reading files from the cloud, code needs to be written and added to

midasio/midasio.cxx, for example, see the code that is already there for reading ssh-

attached files and dcache/dccp-attached files. (CERN EOS files can be read directly

from POSIX mount point /eos).

K.O. |

|

2393

|

01 May 2022 |

Giovanni Mazzitelli | Forum | S3 Object Storage | > > We are storing raw MIDAS files to S3 Object Storage, but MIDAS file are not

> > optimised for readout from such kind of storage. There is any work around on

> > evolution of midas raw output or, beyond simulated posix fs, to develop midas

> > python library optimised to stream data from S3 (is not really clear to me if this

> > is possible).

>

> We have plans for adding S3 object storage support to lazylogger, but have not gotten

> around to it yet.

>

> We do not plan to add this in mlogger. mlogger works well for writing data to locally-

> attached storage (local ext4, XFS, ZFS) but always runs into problems with timeouts and

> delays when writing to anything network-attached (even writing to NFS).

>

> I envision that each midas raw data file (mid.gz or mid.lz4 or mid.bz2) will

> be stored as an S3 object and there will be some kind of directory object

> to map object ids to run and subrun numbers.

>

> Choice of best file size is open, normally we use subruns to limit file size to 1-2

> Gbytes. If cloud storage prefers some other object size, we can easily to up to 10

> Gbytes and down to "a few megabytes" (ODB dumps will have to be turned off for this).

>

> Other than that, in your view, what else is needed to optimize midas files for storage

> in the Amazon S3 could?

>

> P.S. For reading files from the cloud, code needs to be written and added to

> midasio/midasio.cxx, for example, see the code that is already there for reading ssh-

> attached files and dcache/dccp-attached files. (CERN EOS files can be read directly

> from POSIX mount point /eos).

>

> K.O.

Thanks,

actually I made a small workaround with the python boto3 library that works with files of any size (with

the obvious limits on practicality and waiting time), e.g.:

import gzip

from io import BytesIO

import boto3

# "creds" and "MidasSream" are the poster's own helpers and are not shown here

key = 'TMP/run00060.mid.gz'

aws_session = creds.assumed_session("infncloud-iam")

s3 = aws_session.client('s3', endpoint_url="https://minio.cloud.infn.it/",

                        config=boto3.session.Config(signature_version='s3v4'), verify=True)

s3_obj = s3.get_object(Bucket='cygno-data', Key=key)

# the whole object is pulled into memory here, before decompression starts

buf = BytesIO(s3_obj["Body"]._raw_stream.data)

for event in MidasSream(gzip.GzipFile(fileobj=buf)):

    if event.header.is_midas_internal_event():

        print("Saw a special event")

        continue

    bank_names = ", ".join(b.name for b in event.banks.values())

    print("Event # %s of type ID %s contains banks %s" % (event.header.serial_number,

                                                           event.header.event_id, bank_names))

....

where in MidasSream I just bypass the open, and the code works. Obviously, this way I

need to hold the whole buffer in memory, and it takes time to fetch it. I am interested to

know whether someone has already developed event-by-event streaming (preferably in python, but

not mandatory). I'll look at the code you pointed to.
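
For reference, event-by-event reading from any file-like object can be sketched as follows. This is a sketch under two assumptions: the file is a plain sequence of events, each with the 16-byte little-endian MIDAS event header (event id, trigger mask, serial number, timestamp, data size), and the boto3 `Body` object can be handed to `gzip.GzipFile` directly. Bank decoding is not included:

```python
import gzip
import io
import struct

# Assumed event header layout: event_id, trigger_mask, serial_number, timestamp, data_size
HEADER = struct.Struct("<HHIII")

def read_exact(stream, n):
    """Read n bytes, looping over short reads; returns fewer only at end of stream."""
    chunks = []
    while n > 0:
        chunk = stream.read(n)
        if not chunk:
            break
        chunks.append(chunk)
        n -= len(chunk)
    return b"".join(chunks)

def iter_events(stream):
    """Yield (event_id, serial_number, timestamp, payload) one event at a time."""
    while True:
        raw = read_exact(stream, HEADER.size)
        if len(raw) < HEADER.size:
            return  # clean end of stream, or a truncated header
        event_id, _mask, serial, timestamp, size = HEADER.unpack(raw)
        payload = read_exact(stream, size)
        if len(payload) < size:
            return  # truncated event at the end of the stream
        yield event_id, serial, timestamp, payload

# Possible use with S3 (untested assumption, names as in the post above):
#   body = s3.get_object(Bucket='cygno-data', Key=key)["Body"]
#   for event_id, serial, ts, payload in iter_events(gzip.GzipFile(fileobj=body)):
#       ...
```

Only one event plus the decompressor's internal buffer is held in memory at a time, so processing can start before the download has finished.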

Thanks, G.

|

|

997

|

26 May 2014 |

Clemens Sauerzopf | Forum | Running a frontend on Arduino Yun | Hello,

I'm trying to get a frontend running on an Arduino Yun single-board computer

(the CPU is an Atheros AR9331 and the OS is a Linux derivative,

http://arduino.cc/en/Main/ArduinoBoardYun )

The idea is to use this device for some slow control for our experiment (ASACUSA

Antihydrogen). We are using midas as our main DAQ system and we would like to

integrate the slow control with these small boards. My question is: how can I

compile the midas library with the openwrt crosscompiler? The system disk space

is very limited (6 MB), therefore I don't want to have mysql, zlib and so on.

Other software can be stored on an SD card.

In the end what I would need is only creating hotlinks to the odb on our server

to get and report the current and desired values.

Do you have any suggestions on how to realize something like that?

Thanks! |

|

998

|

26 May 2014 |

Konstantin Olchanski | Forum | Running a frontend on Arduino Yun | > I'm trying to get a frontend running on an arduino yun single board computer

> (cpu is Atheros AR9331 and OS is a linux derivate

> http://arduino.cc/en/Main/ArduinoBoardYun )

What you want to do should be possible.

Here, the smallest machine we used to run a MIDAS frontend was a 300MHz PowerPC processor inside a

Virtex4 FPGA with 256 Mbytes of RAM. Looks like your machine is a 400MHz MIPS with 64 Mbytes of RAM

so there should be enough hardware available to run a MIDAS frontend under Linux.

One source of trouble could be if your MIPS CPU is running in big-endian mode (MIPS can do either big-

endian or little-endian). MIDAS supports big-endian frontends connecting to little-endian x86 PC hosts,

but with big-endian machines getting less common, this code does not get much testing. If you run into

trouble with this, please let us know and we will fix it for you.
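
To see what the byte-order difference means in practice, here is a small Python illustration (not MIDAS code): the same 32-bit value has a different byte layout on each kind of machine, and reading it with the wrong order gives a different number.

```python
import struct
import sys

def pack_u32(value, byteorder):
    """Pack an unsigned 32-bit integer in the given byte order ("little" or "big")."""
    fmt = {"little": "<I", "big": ">I"}[byteorder]
    return struct.pack(fmt, value)

def misread_as_little(value):
    """Value a little-endian host sees if big-endian data is not byte-swapped."""
    return struct.unpack("<I", pack_u32(value, "big"))[0]

print("this machine is", sys.byteorder, "endian")
print(hex(misread_as_little(0x12345678)))
```

Running `python3 -c "import sys; print(sys.byteorder)"` on the target board is one quick way to check which mode its CPU uses, provided Python is installed there.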

> The idea is to use this device for some slow control for our experiment (ASACUSA

> Antihydrogen) we are using midas as main DAQ system and we would like to

> integrate the slow control with this small boards.

> My question is: How can I compile the midas library with the openwrt crosscompiler?

In the MIDAS Makefile, look for the "crosscompile" target which we use to cross-build MIDAS for our

PowerPC target using the regular GCC cross compiler chain. If you have very new MIDAS, you will also see

some make targets for ARM Linux machines, also using GCC cross compilers.

> the system discspace is very limited (6 MB) therefore I don't want to have mysql, zlib an so on.

The "crosscompile" target in the MIDAS Makefile builds a very minimalistic version of MIDAS - no mysql, no sqlite, etc

requirements for the MIDAS libraries and frontend. zlib may be required but it is not used by frontend

code, so you may try to disable it.

If that is still too big, there is a possibility for building a super-minimal version of MIDAS just for running

cross-compiled frontends. We use this function to build MIDAS for VxWorks. If you want to try that, I

think it is not in the main Makefile, but in the VxWorks Makefile. Let me know if you want this and I can

probably restore this function into the main Makefile fairly quickly.

> Do you have any suggestions on how to realize something like that?

1) cross compile MIDAS (see the Makefile "make crosscompile" target)

2) cross compile your frontend

3) run it, with luck, it will fit into your 64 Mbytes of RAM

If you run into problems, please post them here (so other people can see the problems and the solutions)

K.O. |

|

1002

|

27 May 2014 |

Clemens Sauerzopf | Forum | Running a frontend on Arduino Yun | Ok, I'm currently trying to get things running. Setting up a crosscompiler toolchain for the Arduino Yun is fairly

easy, just follow the tutorial on the OpenWrt webpage.

The main problem is that openwrt uses the uClibc library instead of glibc, which produces lots of difficulties. The first

one is that building the shared library fails with complaints about symbol name mismatches. I guess this can be

fixed somehow, but I won't use the midas shared library, so I just disabled it in the Makefile.

The next problem is the backtrace functions that are used within system.c: the functions backtrace and

backtrace_symbols are only available in glibc. As a quick fix, I just changed the #ifdef directive so that this

code is not built.

There is a more tricky problem: the compiler complains about mismatched function definitions:

In file included from include/midasinc.h:17:0,

from include/msystem.h:35,

from src/sequencer.cxx:13:

/home/clemens/arduino/openwrt-yun/build_dir/toolchain-mips_r2_gcc-4.6-linaro_uClibc-0.9.33.2/uClibc-0.9.33.2/include/string.h:495:41:

error: declaration of 'size_t strlcat(char*, const char*, size_t) throw ()' has a different exception specifier

include/midas.h:1955:17: error: from previous declaration 'size_t strlcat(char*, const char*, size_t)'

/home/clemens/arduino/openwrt-yun/build_dir/toolchain-mips_r2_gcc-4.6-linaro_uClibc-0.9.33.2/uClibc-0.9.33.2/include/string.h:498:41:

error: declaration of 'size_t strlcpy(char*, const char*, size_t) throw ()' has a different exception specifier

include/midas.h:1954:17: error: from previous declaration 'size_t strlcpy(char*, const char*, size_t)'

This can be solved by editing the midas.h file:

size_t EXPRT strlcpy(char *dst, const char *src, size_t size); -> size_t EXPRT strlcpy(char *dst, const char *src,

size_t size) __THROW __nonnull ((1, 2));

and

size_t EXPRT strlcat(char *dst, const char *src, size_t size); -> size_t EXPRT strlcat(char *dst, const char *src,

size_t size) __THROW __nonnull ((1, 2));

the same trick has to be done in ../mxml/strlcpy.h

After changing this midas compiles with the crosscompiler and the resulting programs are executable on the Arduino

Yun. I'll report back if I got my frontend to run and connect to the midas server. |

|

1003

|

27 May 2014 |

Konstantin Olchanski | Forum | Running a frontend on Arduino Yun | > Ok, I'm currently trying to get things running, setting up a crosscompiler toolchain for the Arduino Yun is fairly

> easy, just follow the tutorial on the OpenWrt webpage.

>

> The main problem is that openwrt uses the uClibc library instead of glibc this produces lots of difficulties

>

Okay, I see. I do not think we have used uClibc with MIDAS yet.

>

> one is that building of the shared library is complaining about symbol name mismatches, but I guess this can be

> fixed somehow, I wont use the midas-shared library, therefore I just disabled it in the Makefile.

>

The shared library is generally not used. The Makefile builds it as a convenience for things like pymidas, etc.

>

> The next problem is the backtrace functions tjhat are used within system.c, the functions backtrace and

> backtrace_symbols are only available in glibc for a quick fix I just changed the #ifdef directive in a way that this

> code is not built.

>

Yes. They should probably be behind an #ifdef GLIBC (whatever the GLIBC identifier is)

>

> There is a more tricky problem, the compiler complains about mismatched function defintions:

>

> error: declaration of 'size_t strlcat(char*, const char*, size_t) throw ()' has a different exception specifier

> error: declaration of 'size_t strlcpy(char*, const char*, size_t) throw ()' has a different exception specifier

>

> This can be solved by editing the midas.h file:

> size_t EXPRT strlcpy(char *dst, const char *src, size_t size); -> size_t EXPRT strlcpy(char *dst, const char *src,

> size_t size) __THROW __nonnull ((1, 2));

>

No need to edit anything, this is controlled by NEED_STRLCPY in the Makefile - to enable our own strlcpy on systems that do not provide it (hello, GLIBC!)

>

> After changing this midas compiles with the crosscompiler and the resulting programs are executable on the Arduino

> Yun. I'll report back if I got my frontend to run and connect to the midas server.

Congratulations!

K.O. |

|

1005

|

28 May 2014 |

Clemens Sauerzopf | Forum | Running a frontend on Arduino Yun | Thank you very much for your input, it finally works. I succeeded in crosscompiling the frontend and running it on the ArduinoYun. The 64 MB RAM is more than

enough to run the mserver and a frontend and connect to a remote midas server over ethernet or wifi.

Just for reference, if someone tries something similar: to directly access the serial interface between the processor running Linux and the Atmel processor, it

is required to comment out a line in /etc/inittab: #ttyATH0::askfirst:/bin/ash --login

This line starts a shell on the serial connection. With it disabled, it is possible to run more or less unmodified code (the serial interface needs to be

Serial1) on the Atmel side and use the Linux processor as the slow control PC.

Thanks again for your help! |

|