| ID | Date | Author | Topic | Subject |

| 251 | 27 Mar 2006 | Sergio Ballestrero | Info | svn@savannah.psi.ch down ? |

> I just tried now and it seemed to work fine. Do you still have the problem?

>

> - Stefan

The problem was still there this morning, shortly after I saw your mail, but it seems to be fixed now.

BTW, what is the best way to submit patches? I have a version of khyt1331 for Linux kernel 2.6 (we are running Scientific Linux 4.1), and a few smaller things, mostly in the examples.

Thanks, Sergio |

| 343 | 11 Feb 2007 | Konstantin Olchanski | Info | svn and "make indent" trashed my svn checkout tree... |

Fuming, fuming, fuming.

The combination of "make indent" and "svn update" completely trashed my working copy of midas. Half of the files now show as status "M", half as status "C" ("in conflict"), even those I never edited myself (e.g. mscb firmware files).

I think what happened is that once I ran "make indent", the indent program did things to the source files (changed indentation, added spaces: "foo(a,b,c);" --> "foo(a, b, c);", etc.), so now svn thinks that I edited the files and they are in conflict with later modifications.

I suggest that nobody ever ever ever should use "make indent", and if they do, they had better commit their "changes" made by indent very quickly, before their midas tree is trashed by the next "svn update".

And if they do commit the changes made by "make indent", beware that "make indent" is not idempotent: if you run it multiple times, it keeps changing files (it keeps moving some dox comments around).

Also beware of entering a tug-of-war with Stefan: at least on my machines, my "make indent" seems to produce different output from his.

Still fuming, even after some venting...

K.O. |

| 1793 | 26 Jan 2020 | Konstantin Olchanski | Bug Report | support for mbedtls - get an open ssl error while trying to compile Midas |

> >

> > .../c++ ... /opt/local/lib/libssl.dylib

> >

>

> I get the same, libssl from /opt/local, so we are not using openssl shipped with mac os.

>

I note that the latest mongoose 6.16 finally has a virtual ssl layer and appears to support mbedtls (polarssl) in addition to openssl.

I now think I should see if it works, as it gives us a way to support https without relying on the user having pre-installed working openssl packages. We consistently run into problems with openssl on macOS, and even on Linux there was trouble with preinstalled openssl packages and libraries.

With mbedtls, one will have to "git pull" and "make" it, but historically this causes much less trouble.

Also, with luck, mbedtls has better support for certificate expiration (I would really love to have openssl report an error, or a warning, or at least some hint if I feed it an expired certificate) and (gasp!) certbot integration.

K.O. |

| 1794 | 26 Jan 2020 | Konstantin Olchanski | Bug Report | support for mbedtls - get an open ssl error while trying to compile Midas |

> ... support for certbot

The tool to use instead of certbot is this: https://github.com/ndilieto/uacme

K.O. |

| 1795 | 28 Jan 2020 | Berta Beltran | Bug Report | support for mbedtls - get an open ssl error while trying to compile Midas |

> > ... support for certbot

>

> The certbot tool to use instead of certbot is this: https://github.com/ndilieto/uacme

>

> K.O.

Hi Stefan and Konstantin,

Thanks a lot for your messages. Sorry for my late reply; I only work on this project from Tuesday to Thursday. I have run "make cmake" instead of "cd build; cmake", and this is the output regarding mhttpd:

/Library/Developer/CommandLineTools/usr/bin/c++ -O2 -g -DNDEBUG -Wl,-search_paths_first -Wl,-headerpad_max_install_names CMakeFiles/mhttpd.dir/mhttpd.cxx.o CMakeFiles/mhttpd.dir/mongoose6.cxx.o CMakeFiles/mhttpd.dir/mgd.cxx.o CMakeFiles/mhttpd.dir/__/mscb/src/mscb.cxx.o -o mhttpd -lsqlite3 ../libmidas.a /usr/lib/libssl.dylib /usr/lib/libcrypto.dylib -lz -lsqlite3 /usr/lib/libssl.dylib /usr/lib/libcrypto.dylib -lz

Undefined symbols for architecture x86_64:

"_OPENSSL_init_ssl", referenced from:

_mg_mgr_init in mongoose6.cxx.o

"_SSL_CTX_set_options", referenced from:

_mg_set_ssl in mongoose6.cxx.o

ld: symbol(s) not found for architecture x86_64

clang: error: linker command failed with exit code 1 (use -v to see invocation)

I see that in your outputs the openssl libs are in /opt/local/lib/ while mine are in /usr/lib/, and that is the only difference.

I have checked that the libraries libssl.dylib and libcrypto.dylib are in my /usr/lib/, and indeed they are, so I don't understand the reason for the error. I will continue investigating.

Thanks

Berta |

| 1804 | 02 Feb 2020 | Konstantin Olchanski | Bug Report | support for mbedtls - get an open ssl error while trying to compile Midas |

> I only work on this project from Tuesday to Thursdays.

No problem. No hurry.

> I have run " make cmake" instead of "cd build; cmake" and this is the output regarding mhttpd: ...

There should also be a line where mhttpd.cxx is compiled into mhttpd.o; we need to see which compiler flags are used. I suspect the compiler uses header files from /usr/local/include while the linker is using libraries from /usr/lib, a mismatch.

To save time, please attach the full output of "make cmake". There may be something else I want to see there.

If you do not use the mhttpd built-in https support (for best security I recommend using https from an apache httpd password-protected https proxy), then it is perfectly fine to build midas with NO_SSL=1.

K.O. |

| 3067 | 24 Jul 2025 | Konstantin Olchanski | Bug Fix | support for large history files |

FILE history code (mhf_*.dat files) did not support reading history files bigger than about 2GB; this is now fixed on branch "feature/history_off64_t" (in final testing, to be merged ASAP).
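A limit of "about 2GB" is the classic symptom of file offsets held in a 32-bit signed type (2^31 bytes, about 2.1 GB), and the branch name suggests the fix moves offsets to a 64-bit type. The following is a minimal sketch under that assumption, not the actual MIDAS change: the "fixed-size header followed by fixed-size records" layout and the helper names `record_offset()` and `read_record()` are hypothetical.

```c
#include <assert.h>
#include <stdint.h>
#include <sys/types.h>
#include <unistd.h>

/* Fail the build if off_t is only 32 bits; on 32-bit platforms compile
   with -D_FILE_OFFSET_BITS=64 so that off_t, lseek() and pread() are 64-bit. */
_Static_assert(sizeof(off_t) >= 8, "need a 64-bit off_t for files larger than 2 GB");

/* Byte offset of record 'index' in a file made of a fixed-size header
   followed by fixed-size records (hypothetical layout). The multiplication
   is done in int64_t, so it does not wrap at 2^31 the way int math would. */
static int64_t record_offset(int64_t header_size, int64_t index, int64_t rec_size)
{
    assert(header_size >= 0 && index >= 0 && rec_size > 0);
    return header_size + index * rec_size;
}

/* Read one record with a single positioned read; returns bytes read or -1. */
static ssize_t read_record(int fd, int64_t header_size, int64_t index,
                           void *buf, size_t rec_size)
{
    off_t pos = (off_t)record_offset(header_size, index, (int64_t)rec_size);
    return pread(fd, buf, rec_size, pos);
}
```

For example, record 3000000 of 1024-byte records starts at byte 3072000000, which is already past what a signed 32-bit offset can hold.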

History files were never meant to get bigger than about 100 MBytes, but it turns out large files can still happen:

1) files are rotated only when history is closed and reopened

2) we removed history close and open on run start

3) so files are rotated only when mlogger is restarted

In the old code, large files would still happen if some equipment writes a lot of data (I have a file from Stefan with a history record size of about 64 kbytes, written at 1/second; MIDAS handles this just fine) or if there are no runs started and stopped for a long time.

There are reasons for keeping file size smaller:

a) I would like to use mmap() to read history files, and mmap() of a 100 Gbyte file on a 64 Gbyte RAM machine would not work very well.

b) I would like to implement compressed history files, and decompression of a 100 Gbyte file takes much longer than decompression of a 100 Mbyte file. It is better if data is in smaller chunks. (It is easy to write a utility program to break up large history files into smaller chunks.)

Why use mmap()? I note that the current code does 1 read() syscall per history record (it is much better to read data in bigger chunks) and does multiple seek()/read() syscalls to find the right place in the history file (this plays silly buggers with the OS read-ahead and data caching). mmap() eliminates these syscalls and has the potential to speed things up quite a bit.
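To illustrate the mmap() idea: once the file is mapped, finding the first record at or after a given time becomes a binary search over memory instead of repeated seek()/read() calls. This is a minimal sketch, assuming a hypothetical layout of fixed-size records with no file header, sorted by time, each starting with a 32-bit timestamp; the real mhf_*.dat format differs, and the function names are made up for this example. It also assumes a 64-bit build, so that a large file fits in the address space.

```c
#include <assert.h>
#include <fcntl.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>
#include <sys/mman.h>
#include <sys/stat.h>
#include <unistd.h>

/* Index of the first record whose leading uint32_t timestamp is >= t,
   or nrec if there is none. Records must be sorted by timestamp. */
static size_t find_first_at_or_after(const unsigned char *base, size_t nrec,
                                     size_t rec_size, uint32_t t)
{
    assert(rec_size >= sizeof(uint32_t));
    size_t lo = 0, hi = nrec;
    while (lo < hi) {
        size_t mid = lo + (hi - lo) / 2;
        uint32_t ts;
        memcpy(&ts, base + mid * rec_size, sizeof ts); /* safe for unaligned records */
        if (ts < t)
            lo = mid + 1;
        else
            hi = mid;
    }
    return lo;
}

/* Map the whole file read-only and search it: a few setup syscalls instead
   of one read() per record. Returns the record index, or -1 on error. */
static long mmap_find_time(const char *path, size_t rec_size, uint32_t t)
{
    if (rec_size < sizeof(uint32_t))
        return -1;
    int fd = open(path, O_RDONLY);
    if (fd < 0)
        return -1;
    struct stat st;
    if (fstat(fd, &st) != 0 || st.st_size <= 0) {
        close(fd);
        return -1;
    }
    size_t len = (size_t)st.st_size;
    void *p = mmap(NULL, len, PROT_READ, MAP_PRIVATE, fd, 0);
    close(fd); /* the mapping stays valid after the descriptor is closed */
    if (p == MAP_FAILED)
        return -1;
    size_t idx = find_first_at_or_after(p, len / rec_size, rec_size, t);
    munmap(p, len);
    return (long)idx;
}
```

The OS still faults pages in on demand, but the read-ahead now sees a simple access pattern instead of many small seek()/read() pairs.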

K.O. |

| 675 | 25 Nov 2009 | Konstantin Olchanski | Bug Fix | subrun file size |

Please be aware of mlogger.c update rev 4566 on Sept 23rd 2009, when Stefan fixed a buglet in the subrun file size computations. Before this fix, the first subrun could come out shorter than intended. If you use subruns, please update your mlogger to at least rev 4566 (or newer; Stefan added the run and subrun time limits just recently).

K.O. |

| 1999 | 24 Sep 2020 | Gennaro Tortone | Forum | subrun |

Hi,

I was wondering if there is a "mechanism" to run an executable file after each subrun is closed...

I need to convert .mid.lz4 subrun files to ROOT (TTree) files.

Thanks,

Gennaro |

| 2046 | 01 Dec 2020 | Stefan Ritt | Forum | subrun |

There is no "mechanism" foreseen to be executed after each subrun. But you could run a shell script after each run which loops over all subruns and converts them one after the other.

Stefan
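The end-of-run loop suggested above could look like the following if written as a small C helper instead of a shell script, called once per run. Assumptions, all hypothetical: the subrun files of a run live in one directory and are named like `run00042_000.mid.lz4` (check your logger channel's filename setting), the converter is some program that takes the input file as its only argument, and `subrun_pattern()` / `convert_run_subruns()` are names made up for this sketch.

```c
#include <assert.h>
#include <glob.h>
#include <stdio.h>
#include <string.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Build a glob pattern for all subrun files of one run (assumed naming). */
static int subrun_pattern(char *buf, size_t size, const char *dir, int run)
{
    return snprintf(buf, size, "%s/run%05d_*.mid.lz4", dir, run);
}

/* Run "converter <file>" for each subrun file of 'run', one after the other.
   glob() returns the matches sorted, so subruns are processed in order.
   Returns the number of files converted successfully, or -1 on error. */
static int convert_run_subruns(const char *dir, int run, const char *converter)
{
    char pattern[4096];
    int n = subrun_pattern(pattern, sizeof pattern, dir, run);
    if (n < 0 || (size_t)n >= sizeof pattern)
        return -1;

    glob_t g;
    int rc = glob(pattern, 0, NULL, &g);
    if (rc == GLOB_NOMATCH)
        return 0; /* no subrun files for this run */
    if (rc != 0)
        return -1;

    int ok = 0;
    for (size_t i = 0; i < (size_t)g.gl_pathc; i++) {
        pid_t pid = fork();
        if (pid < 0)
            break;
        if (pid == 0) {
            /* child: exec the converter directly, so no shell quoting issues */
            execlp(converter, converter, g.gl_pathv[i], (char *)NULL);
            _exit(127);
        }
        int status;
        if (waitpid(pid, &status, 0) == pid && WIFEXITED(status) && WEXITSTATUS(status) == 0)
            ok++;
        else
            fprintf(stderr, "conversion failed: %s\n", g.gl_pathv[i]);
    }
    globfree(&g);
    return ok;
}
```

Converting sequentially keeps the load predictable; if conversion is slow, the loop body could submit batch jobs instead, as suggested in the lazylogger reply.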

> Hi,

>

> I was wondering if there is a "mechanism" to run an executable

> file after each subrun is closed...

>

> I need to convert .mid.lz4 subrun files to ROOT (TTree) files;

>

> Thanks,

> Gennaro |

| 2047 | 01 Dec 2020 | Ben Smith | Forum | subrun |

We use the lazylogger for something similar to this. You can specify the path to a custom script, and it will be run for each midas file that gets written:

https://midas.triumf.ca/MidasWiki/index.php/Lazylogger#Using_a_script

This means that you don't have to wait until the end of the run to start processing.

If the ROOT conversion is going to be slow, but you have a batch system available, you could use the lazylogger script to submit a job to the batch system for each file.

>

> > Hi,

> >

> > I was wondering if there is a "mechanism" to run an executable

> > file after each subrun is closed...

> >

> > I need to convert .mid.lz4 subrun files to ROOT (TTree) files;

> >

> > Thanks,

> > Gennaro |

|

3044

|

25 May 2025 |

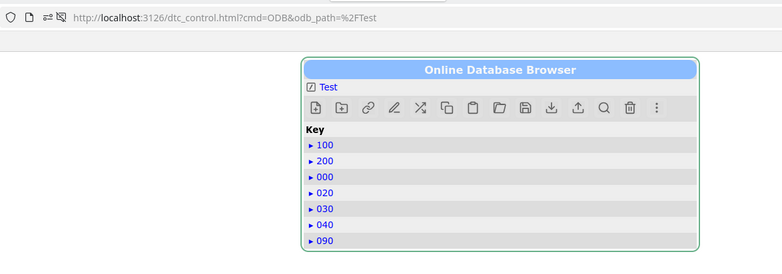

Pavel Murat | Bug Report | subdirectory ordering in ODB browser ? |

Dear MIDAS experts,

I'm running into a minor but annoying issue with the subdirectory name ordering in the ODB browser.

I have a straw-man hash map which includes ODB subdirectories named "000", "010", ... "300", and I have yet to succeed in getting them displayed in a "natural" order: the subdirectories with names starting with "0" always show up at the bottom of the list (see the attached .png file).

Neither interactive re-ordering nor manual ordering of the items in the input .json file helps.

I have also attached a .json file which can be loaded with odbedit to reproduce the issue.

Although I'm using a relatively recent commit (about 20 days old), 'db1819ac', is it possible that this issue has already been sorted out?

-- many thanks, regards, Pasha |

| Attachment 1: panel_map.json

|

{

"/MIDAS version" : "2.1",

"/MIDAS git revision" : "Sat May 3 17:22:36 2025 +0200 - midas-2025-01-a-192-gdb1819ac-dirty on branch HEAD",

"/filename" : "PanelMap.json",

"/ODB path" : "/Test/PanelMap",

"000" : {

},

"020" : {

},

"030" : {

},

"040" : {

},

"090" : {

},

"100" : {

},

"200" : {

}

}

|

| Attachment 2: panel_map.png

|

|

| 938 | 15 Nov 2013 | Konstantin Olchanski | Bug Report | stuck data buffers |

We have seen several times a problem with stuck data buffers. The symptoms are very confusing: frontends cannot start and instead hang forever in a state that is very hard to kill. Also "mdump -s -d -z BUF03" for the affected data buffers is stuck.

We have identified the source of this problem: the semaphore for the buffer is locked and nobody will ever unlock it. MIDAS relies on a feature of SYSV semaphores where they are automatically unlocked by the OS when the process holding them dies, so they can never be stuck (see man semop, SEM_UNDO function).

I think this SEM_UNDO function is broken in recent Linux kernels and sometimes the semaphore remains locked after the process that locked it has died. MIDAS is not programmed to deal with this situation, and the stuck semaphore has to be cleared manually.

Here, "BUF03" is used as an example, but we have seen "SYSTEM" and ODB with stuck semaphores, too.

Steps:

a) confirm that we are using SYSV semaphores: "ipcs" should show many semaphores

b) identify the stuck semaphore: "strace mdump -s -d -z BUF03".

c) there will be a large printout, but ultimately you will see repeated entries of

"semtimedop(9633800, {{0, -1, SEM_UNDO}}, 1, {1, 0}^C <unfinished ...>"

d) erase the stuck semaphore: "ipcrm -s 9633800", where the number comes from semtimedop() in the strace output.

e) try again: "mdump -s -d -z BUF03" should work now.

Ultimately, I think we should switch to POSIX semaphores: they are easier to manage (the strace and ipcrm dance becomes "rm /dev/shm/deap_BUF03.sem"), but they do not have the SEM_UNDO function, so detection of locked and stuck semaphores will have to be done by MIDAS (unless we can find some library of semaphore functions that already provides such advanced functionality).
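The detection that MIDAS would have to do itself can be sketched with plain SYSV calls: try the lock with a timeout instead of blocking forever, and when it times out, ask the kernel which process last operated on the semaphore (semctl() with GETPID) and whether that process still exists (kill() with signal 0). This is a heuristic sketch, not MIDAS code: the function names are hypothetical, it polls with IPC_NOWAIT rather than using the Linux-specific semtimedop() seen in the strace output, and a dead last user only suggests, but does not prove, a stuck lock.

```c
#include <assert.h>
#include <errno.h>
#include <signal.h>
#include <sys/types.h>
#include <sys/ipc.h>
#include <sys/sem.h>
#include <time.h>
#include <unistd.h>

#if defined(__linux__)
/* glibc leaves the definition of union semun to the caller (see semctl(2)). */
union semun { int val; struct semid_ds *buf; unsigned short *array; };
#endif

static int sem_set_value(int semid, int val)
{
    union semun arg;
    arg.val = val;
    return semctl(semid, 0, SETVAL, arg);
}

/* Try to lock (decrement) semaphore 0, polling until timeout_sec expires.
   SEM_UNDO asks the kernel to revert the decrement if this process exits.
   Returns 0 if locked, 1 on timeout, -1 on any other error. */
static int sem_lock_timeout(int semid, int timeout_sec)
{
    struct sembuf op;
    op.sem_num = 0;
    op.sem_op = -1;
    op.sem_flg = SEM_UNDO | IPC_NOWAIT;
    time_t deadline = time(NULL) + timeout_sec;
    for (;;) {
        if (semop(semid, &op, 1) == 0)
            return 0;
        if (errno != EAGAIN && errno != EINTR)
            return -1;
        if (time(NULL) >= deadline)
            return 1;
        usleep(10000); /* wait 10 ms before the next attempt */
    }
}

static int sem_unlock(int semid)
{
    struct sembuf op;
    op.sem_num = 0;
    op.sem_op = 1;
    op.sem_flg = SEM_UNDO;
    return semop(semid, &op, 1);
}

/* Heuristic: the semaphore is held (value 0) and the last process that
   operated on it no longer exists. Returns 1 if it looks stuck, else 0. */
static int sem_looks_stuck(int semid)
{
    if (semctl(semid, 0, GETVAL) != 0)
        return 0;
    int pid = semctl(semid, 0, GETPID);
    if (pid <= 0)
        return 0;
    return kill((pid_t)pid, 0) == -1 && errno == ESRCH;
}
```

When sem_looks_stuck() reports a stuck semaphore, the manual "ipcrm -s <semid>" step corresponds to semctl(semid, 0, IPC_RMID).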

K.O. |

| 3101 | 01 Oct 2025 | Frederik Wauters | Forum | struct size mismatch of alarms |

So I started our DAQ with an updated midas, after ca. 6 months+.

No issues except all FEs complaining about the Alarm ODB structure.

* I adapted to the new structure (trigger count & trigger count required)

* restarted FEs

* recompiled

18:17:40.015 2025/09/30 [EPICS Frontend,INFO] Fixing ODB "/Alarms/Alarms/logger" struct size mismatch (expected 452, odb size 460)

18:17:40.009 2025/09/30 [SC Frontend,INFO] Fixing ODB "/Alarms/Alarms/logger" struct size mismatch (expected 460, odb size 452)

How do I get the FEs + ODB back in line here?

thanks |

| 3102 | 01 Oct 2025 | Nick Hastings | Forum | struct size mismatch of alarms |

> So I started our DAQ with an updated midas, after ca. 6 months+.

It would be worthwhile mentioning the git commit hash or tag you are using.

> No issues except all FEs complaining about the Alarm ODB structure.

> * I adapted to the new structure ( trigger count & trigger count required )

> * restarted fe's

> * recompiled

>

> 18:17:40.015 2025/09/30 [EPICS Frontend,INFO] Fixing ODB "/Alarms/Alarms/logger"

> struct size mismatch (expected 452, odb size 460)

>

> 18:17:40.009 2025/09/30 [SC Frontend,INFO] Fixing ODB "/Alarms/Alarms/logger"

> struct size mismatch (expected 460, odb size 452)

This seems to be https://daq00.triumf.ca/elog-midas/Midas/2980

> how do I get the FEs + ODB back in line here?

Recompile all frontends against the new midas.

Nick. |

| 3103 | 01 Oct 2025 | Nick Hastings | Forum | struct size mismatch of alarms |

Just to be clear, it seems that your "EPICS Frontend" was either not recompiled against the new midas yet or the old binary is being run, but "SC Frontend" is using the new midas.
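A toy illustration of why one frontend reports "expected 452, odb size 460" and the other the reverse: each binary has `sizeof()` of the record struct baked in at compile time, so a frontend built against old headers keeps expecting the old size. The structs below are hypothetical stand-ins, not the real MIDAS alarm record; they only show that adding two 32-bit fields (like the new trigger count fields) grows the struct by 8 bytes, which is consistent with 460 - 452 = 8.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical record as compiled into an OLD frontend binary. */
typedef struct {
    int32_t active;
    int32_t triggered;
    char    condition[256];
} toy_alarm_old;

/* Hypothetical record after the update: two INT32 fields added. */
typedef struct {
    int32_t active;
    int32_t triggered;
    int32_t trigger_count;
    int32_t trigger_count_required;
    char    condition[256];
} toy_alarm_new;

/* Positive: the ODB record is larger than this binary's struct (the binary
   was probably built against older headers); negative: the reverse. */
static long record_size_delta(size_t compiled_size, size_t odb_size)
{
    return (long)odb_size - (long)compiled_size;
}
```

Read this way, the two log lines are consistent with the new "SC Frontend" and the stale "EPICS Frontend" rewriting the record back and forth to their own layouts.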

> > So I started our DAQ with an updated midas, after ca. 6 months+.

>

> Would be worthwhile mentioning the git commit hash or tag you are using.

>

> > No issues except all FEs complaining about the Alarm ODB structure.

> > * I adapted to the new structure ( trigger count & trigger count required )

> > * restarted fe's

> > * recompiled

> >

> > 18:17:40.015 2025/09/30 [EPICS Frontend,INFO] Fixing ODB "/Alarms/Alarms/logger"

> > struct size mismatch (expected 452, odb size 460)

> >

> > 18:17:40.009 2025/09/30 [SC Frontend,INFO] Fixing ODB "/Alarms/Alarms/logger"

> > struct size mismatch (expected 460, odb size 452)

>

> This seems to be https://daq00.triumf.ca/elog-midas/Midas/2980

>

> > how do I get the FEs + ODB back in line here?

>

> Recompile all frontends against new midas.

>

> Nick. |

| 3104 | 02 Oct 2025 | Stefan Ritt | Forum | struct size mismatch of alarms |

Sorry to intervene here, but the FEs are usually compiled against libmidas.a. Therefore you have to compile midas, usually do a "make install" to update libmidas.a/.so, and then recompile the FEs. You probably forgot the "make install".

Stefan |

| 3105 | 02 Oct 2025 | Frederik Wauters | Forum | struct size mismatch of alarms |

> Sorry to intervene there, but the FEs are usually compiled against libmidas.a . Therefore you have to compile midas, usually do a "make install" to update the libmidas.a/so, then recompile the FEs. You probably forgot the "make install".

>

> Stefan

OK, solved, closed. Turned out I messed up rebuilding some of the FEs.

More generally, and with the "Documentation" discussion of the workshop in mind, ODB mismatch error messages of all kinds are a recurring phenomenon confusing users. And MIDASGPT gave completely wrong suggestions. |

|

1038

|

12 Nov 2014 |

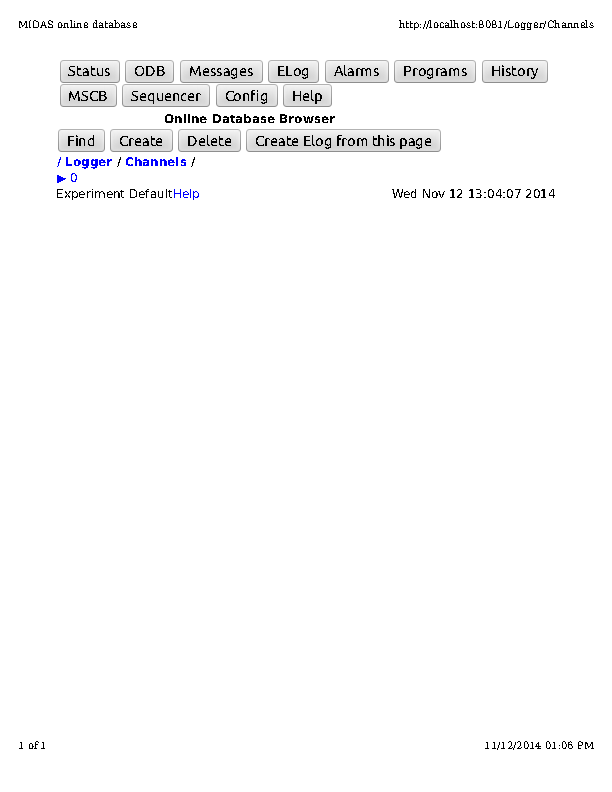

Robert Pattie | Forum | struct mismatch |

Hi all,

I've started receiving the following error that I can't track down. Does anyone have a suggestion for where to start looking for the cause of this?

[Analyzer,ERROR] [odb.c:9460:db_open_record,ERROR] struct size mismatch for "/" (expected size: 576, size in ODB: 0)

This error prevents me from running two runs in a row. I have to close the DAQ and restart it to take multiple runs. It also prevents me from running the analyzer in offline mode.

I also noticed that several of the ODB directories no longer have the same HTML format when viewed through the browser. I've attached a screen print of the "/Logger/Channels" page.

Thanks,

Robert |

| Attachment 1: logger_channels.pdf

|

|

| 210 | 02 May 2005 | Stefan Ritt | Info | strlcpy/strlcat moved into separate file |

I had to move strlcpy & strlcat into a separate file "strlcpy.c". A header file "strlcpy.h" was added as well. This way one can omit the old HAVE_STRLCPY, which made life hard. The Windows and Linux makefiles were adjusted accordingly, but for Mac OS X some fixes might be necessary, which I could not test. |
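For readers unfamiliar with these functions: strlcpy()/strlcat() are the BSD bounded string functions, which always NUL-terminate (for a non-zero buffer size) and return the length of the string they tried to create, so truncation is easy to detect. The following is a minimal portable sketch of that contract, not the contents of MIDAS's strlcpy.c; it uses the hypothetical names my_strlcpy/my_strlcat to avoid clashing with the system versions that macOS and glibc 2.38+ already provide.

```c
#include <assert.h>
#include <string.h>

/* Copy src into dst (total buffer size 'size'), NUL-terminating if size > 0.
   Returns strlen(src); a return value >= size means the copy was truncated. */
static size_t my_strlcpy(char *dst, const char *src, size_t size)
{
    size_t len = strlen(src);
    if (size > 0) {
        size_t n = (len >= size) ? size - 1 : len;
        memcpy(dst, src, n);
        dst[n] = '\0';
    }
    return len;
}

/* Append src to the string in dst (total buffer size 'size').
   Returns strlen(initial dst) + strlen(src); >= size means truncation. */
static size_t my_strlcat(char *dst, const char *src, size_t size)
{
    size_t dlen = strnlen(dst, size);
    if (dlen == size) /* no NUL within size bytes: nothing can be appended */
        return size + strlen(src);
    return dlen + my_strlcpy(dst + dlen, src, size - dlen);
}
```

Typical use is `if (my_strlcpy(buf, name, sizeof buf) >= sizeof buf) { /* handle truncation */ }`, which is harder to get right with strncpy().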