| ID | Date | Author | Topic | Subject |

| 2556 | 18 Jul 2023 | Konstantin Olchanski | Forum | pull request for PostgreSQL support |

> is there any news regarding this pull request ?

> (https://bitbucket.org/tmidas/midas/pull-requests/30)

apologies for taking a very long time to review the proposed changes.

the main problem with this pull request remains: it tangles together too many changes to the code, and I cannot simply say "this is okay", merge and commit it.

an example of an unrelated change is the diff of mlogger.cxx, a change of function in: "db_get_value(hDB, 0, "/Logger/Multithread transitions" ... )". there are also unrelated whitespace changes sprinkled around.

can you review your diffs again and try to remove as many unrelated and unnecessary changes as you can?

I could do this for you and merge my version, but the next time you merge base midas, you will have a collision.

another unrelated change of function is the introduction of something called "downsampling". what is the purpose of this? How is it different from requesting binned data? Is it just a kludge to reduce the data size? Before we merge it, can you post a description/discussion to this forum? (as a separate topic, separate from the discussion of the PostgreSQL merge).
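The question of how "downsampling" differs from binned data is easier to discuss with the two techniques side by side. A minimal sketch, assuming downsampling means plain decimation (keep every Nth sample) and binning means averaging all samples that fall into fixed time bins. What the proposed feature actually does is not described in this thread, and the names Sample, Decimate() and BinAverage() are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical history sample: timestamp in seconds and value.
struct Sample {
  double t;
  double v;
};

// Decimation (assumed meaning of "downsampling"): keep every n-th sample, drop the rest.
std::vector<Sample> Decimate(const std::vector<Sample>& in, std::size_t n) {
  std::vector<Sample> out;
  if (n == 0) return out;
  for (std::size_t i = 0; i < in.size(); i += n) out.push_back(in[i]);
  return out;
}

// One time bin: mean of all samples in it and how many there were (mean is 0 if empty).
struct Bin {
  double mean;
  std::size_t count;
};

// Binning: split [t0, t1) into nbins equal bins and average every sample inside each bin.
std::vector<Bin> BinAverage(const std::vector<Sample>& in, double t0, double t1, std::size_t nbins) {
  std::vector<double> sum(nbins, 0.0);
  std::vector<Bin> out(nbins, Bin{0.0, 0});
  if (nbins == 0 || t1 <= t0) return out;
  const double width = (t1 - t0) / nbins;
  for (const Sample& s : in) {
    if (s.t < t0 || s.t >= t1) continue;  // outside the requested time range
    std::size_t b = static_cast<std::size_t>((s.t - t0) / width);
    if (b >= nbins) b = nbins - 1;  // guard against rounding at the upper edge
    sum[b] += s.v;
    out[b].count++;
  }
  for (std::size_t b = 0; b < nbins; ++b)
    if (out[b].count > 0) out[b].mean = sum[b] / out[b].count;
  return out;
}
```

One practical difference: plain decimation can skip a short spike entirely, while a per-bin average (or a per-bin min/max) is computed from every stored point in the bin.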

the changes to add PostgreSQL so far look reasonable:

- CMakeLists: always painful, but if you do the same as for MySQL, it should be okay; we always end up rejigging this several times before it works everywhere.

- history.h, ok, minus changes for adding the "downsample" feature

- mlogger.cxx, changes are too tangled with "downsample" feature, cannot review

- SetDownsample() API is defective, should have separate Get() and Set() functions

- history_common.cxx, please do not add downsampling code to history providers that do not/will not support it.

- history_odbc.cxx, please do not change it. it does not support downsampling and never will.

- history.cxx, ditto

- mjsonrpc.cxx, the history API is changed, we must know: is the new JS compatible with the old mhttpd? is the old JS compatible with the new mhttpd? (mixed versions are very common in practice). if there is an incompatibility, can you recode it to be compatible?

- history_schema.cxx: the bitbucket diff is a dog's breakfast, cannot review. I will have to check out your branch and diff by hand.
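The point about separate Get() and Set() functions can be illustrated with a small interface sketch. The class MidasHistorySketch and its methods are hypothetical and not the actual history.h API; treating 0 as "no downsampling" is also an assumption.

```cpp
#include <cassert>

// Hypothetical sketch: one setter and one const getter, instead of a single
// SetDownsample() call that is also used to read the value back.
class MidasHistorySketch {
 public:
  // Returns 0 on success, -1 if the requested factor is invalid (negative).
  int SetDownsample(int factor) {
    if (factor < 0) return -1;
    fDownsample = factor;
    return 0;
  }

  // Read-only access; calling it never changes the object.
  int GetDownsample() const { return fDownsample; }

 private:
  int fDownsample = 0;  // assumption: 0 means "no downsampling"
};
```

Keeping the getter const lets callers inspect the setting through a const reference without any risk of modifying it.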

changes to mhistory.js appear to be extensive, and some explanation is needed of what is changed, what bugs/problems are fixed, and what new features are added.

to move forward, can you generate a pull request that only adds pgsql to history_schema.cxx, history_common.cxx and mlogger.cxx, and does not add any other functions or features and does not change any whitespace?

K.O.

>

> If you agree to merge I can resolve conflicts that now

> (after two months) are listed...

>

> Regards,

> Gennaro

>

> >

> > Hi,

> > I have updated the PR with a new one that includes TimescaleDB support and some

> > changes to mhistory.js to support downsampling queries...

> >

> > Cheers,

> > Gennaro

> >

> > > > some minutes ago I published a PR for PostgreSQL support I developed

> > > > at INFN-Napoli for Darkside experiment...

> > > >

> > > > I don't know if you receive a notification about this PR and in doubt

> > > > I wrote this message...

> > >

> > > Hi, Gennaro, thank you for the very useful contribution. I saw the previous version

> > > of your pull request and everything looked quite good. But that pull request was

> > > for an older version of midas and it would not have applied cleanly to the current

> > > version. I will take a look at your updated pull request. In theory it should only

> > > add the Postgres class and modify a few other places in history_schema.cxx and have

> > > no changes to anything else. (if you need those changes, it should be a separate

> > > pull request).

> > >

> > > Also I am curious what benefits and drawbacks of Postgres vs mysql/mariadb you have

> > > observed for storing and using midas history data.

> > >

> > > K.O. |

| 2559 | 21 Jul 2023 | Konstantin Olchanski | Forum | pull request for PostgreSQL support |

> > is there any news regarding this pull request ?

> > (https://bitbucket.org/tmidas/midas/pull-requests/30)

>

> apologies for taking a very long time to review the proposed changes.

>

I merged the PgSql bits by hand - the automatic tools make a dog's breakfast from the history_schema.cxx diffs. Ouch.

history_schema.cxx merged pretty much cleanly, but I have one question about CreateSqlColumn() with sql_strict set to "true". Can you say more about why this is needed? Should this also be made the default for MySQL? As best I can tell, the default values are only needed if we write to SQL but forget to provide values for columns that should not be NULL? But our code never does this? Or is this for reading from SQL, where NULL values are replaced with the default values? I do not have time to look into this right now; I hope you can clarify it for me.
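To make the two readings in the question concrete: in SQL, a column DEFAULT is applied when an INSERT omits the column (or writes DEFAULT explicitly); it does not rewrite NULLs that are already stored, and a SELECT returns stored values as they are. A minimal sketch of what a "strict" column definition could look like, assuming sql_strict adds NOT NULL plus a DEFAULT clause. The helper MakeColumnDef() is hypothetical, and the merged CreateSqlColumn() may do something different.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper: builds one column definition for CREATE TABLE / ALTER TABLE ADD COLUMN.
// With strict == true the column is declared NOT NULL with an explicit default, so an INSERT
// that omits the column stores the default instead of NULL. This is an assumption about
// what sql_strict is for, not the merged CreateSqlColumn() code.
std::string MakeColumnDef(const std::string& name, const std::string& sqlType,
                          bool strict, const std::string& defaultValue) {
  // Double-quoted identifier as in PostgreSQL; MySQL uses backticks unless ANSI_QUOTES is set.
  std::string def = "\"" + name + "\" " + sqlType;
  if (strict) def += " NOT NULL DEFAULT " + defaultValue;
  return def;
}
```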

Also note that fDownsample is set to zero and cannot be changed. I recommend we set it through the MakeMidasHistoryPgsql() factory method.
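A minimal sketch of the factory suggestion, assuming the factory simply forwards a downsample factor to the provider's constructor. The class PgsqlHistorySketch, the function MakePgsqlHistorySketch() and its parameter are hypothetical; this is not the actual MakeMidasHistoryPgsql() declaration.

```cpp
#include <cassert>
#include <memory>

// Hypothetical stand-in for the PostgreSQL history provider.
class PgsqlHistorySketch {
 public:
  explicit PgsqlHistorySketch(int downsample) : fDownsample(downsample) {}
  int GetDownsample() const { return fDownsample; }

 private:
  const int fDownsample;  // fixed at construction, so no setter is needed
};

// Hypothetical factory: the caller chooses the downsample factor once, when the provider
// is created. The default of 0 keeps the current behaviour (fDownsample == 0).
std::unique_ptr<PgsqlHistorySketch> MakePgsqlHistorySketch(int downsample = 0) {
  return std::unique_ptr<PgsqlHistorySketch>(new PgsqlHistorySketch(downsample));
}
```

Existing callers that pass no argument would keep today's behaviour, while new callers can opt in.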

Please pull, merge, retest, update the pull request, and check that there are no unrelated changes (changes in mlogger.cxx are a direct red flag!), and we should be able to merge the rest of your stuff pronto.

K.O.

commit e85bb6d37c85f02fc4895cae340ba71ab36de906 (HEAD -> develop, origin/develop, origin/HEAD)

Author: Konstantin Olchanski <olchansk@triumf.ca>

Date: Fri Jul 21 09:45:08 2023 -0700

merge PQSQL history in history_schema.cxx

commit f254ebd60a23c6ee2d4870f3b6b5e8e95a8f1f09

Author: Konstantin Olchanski <olchansk@triumf.ca>

Date: Fri Jul 21 09:19:07 2023 -0700

add PGSQL Makefile bits

commit aa5a35ba221c6f87ae7a811236881499e3d8dcf7

Author: Konstantin Olchanski <olchansk@triumf.ca>

Date: Fri Jul 21 08:51:23 2023 -0700

merge PGSQL support from https://bitbucket.org/gtortone/midas/branch/feature/timescaledb_support except for history_schema.cxx |

| 2560 | 21 Jul 2023 | Gennaro Tortone | Forum | pull request for PostgreSQL support |

Hi Konstantin,

thanks a lot for your work on PostgreSQL and TimescaleDB integration...

and sorry for the unrelated changes to the source code!

I will return to this task at the end of this year (maybe October or November) because I'm working on different tasks... but I will keep your suggestions in mind in order to provide good source code.

Thanks,

Gennaro

>

> I merged the PgSql bits by hand - the automatic tools make a dog's breakfast from the history_schema.cxx diffs. Ouch.

>

> history_schema.cxx merged pretty much cleanly, but I have one question about CreateSqlColumn() with sql_strict set to "true". Can you say

> more why this is needed? Should this also be made the default for MySQL? The best I can tell the default values are only needed if we write

> to SQL but forget to provide values that should not be NULL? But our code never does this? Or this is for reading from SQL, where NULL values

> are replaced with the default values? I do not have time to look into this right now, I hope you can clarify it for me?

>

> Also notice the fDownsample is set to zero and cannot be changed. I recommend we set it through the MakeMidasHistoryPgsql() factory method.

>

> Please pull, merge, retest, update the pull request, check that there is no unrelated changes (changes in mlogger.cxx is a direct red flag!)

> and we should be able to merge the rest of your stuff pronto.

>

> K.O.

>

> commit e85bb6d37c85f02fc4895cae340ba71ab36de906 (HEAD -> develop, origin/develop, origin/HEAD)

> Author: Konstantin Olchanski <olchansk@triumf.ca>

> Date: Fri Jul 21 09:45:08 2023 -0700

>

> merge PQSQL history in history_schema.cxx

>

> commit f254ebd60a23c6ee2d4870f3b6b5e8e95a8f1f09

> Author: Konstantin Olchanski <olchansk@triumf.ca>

> Date: Fri Jul 21 09:19:07 2023 -0700

>

> add PGSQL Makefile bits

>

> commit aa5a35ba221c6f87ae7a811236881499e3d8dcf7

> Author: Konstantin Olchanski <olchansk@triumf.ca>

> Date: Fri Jul 21 08:51:23 2023 -0700

>

> merge PGSQL support from https://bitbucket.org/gtortone/midas/branch/feature/timescaledb_support except for history_schema.cxx |

| 2563 | 28 Jul 2023 | Stefan Ritt | Forum | pull request for PostgreSQL support |

The compilation of midas was broken by the last modification. The reason is that

Pgsql *fPgsql = NULL;

was not protected by

#ifdef HAVE_PGSQL

So I put all the PGSQL code under a big #ifdef, and now it compiles again. You might want to double-check my modification at

https://bitbucket.org/tmidas/midas/commits/e3c7e73459265e0d7d7a236669d1d1f2d9292a74
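The pattern behind the fix is to compile the member declaration only when the build defines HAVE_PGSQL, so a build without PostgreSQL never sees the Pgsql type. A minimal self-contained sketch; only the HAVE_PGSQL macro and the fPgsql member come from the thread, and SchemaHistorySketch is a hypothetical placeholder class.

```cpp
#include <cassert>

#ifdef HAVE_PGSQL
class Pgsql;  // forward declaration; in the real code the class comes from the PostgreSQL support code
#endif

// Hypothetical placeholder for a history class that optionally talks to PostgreSQL.
class SchemaHistorySketch {
 public:
  // Reports whether PostgreSQL support was compiled in.
  bool HasPgsql() const {
#ifdef HAVE_PGSQL
    return true;
#else
    return false;
#endif
  }

 private:
#ifdef HAVE_PGSQL
  Pgsql* fPgsql = nullptr;  // only declared when PostgreSQL support is compiled in
#endif
};
```

Building the same file once with and once without -DHAVE_PGSQL catches this kind of breakage before it reaches other users.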

Best,

Stefan |

| 2566 | 02 Aug 2023 | Caleb Marshall | Forum | Issues with Universe II Driver |

Hello,

At our lab we are currently in the process of migrating more of our systems over to Midas. However, all of our working systems depend on SBCs with Tsi-148 chips, of which we only have a handful. In order to have some backups and spares for testing, we have been attempting to get Midas working with some borrowed SBCs (Concurrent Technologies VX 40x/04x) with Universe-II chips. The SBC is running CentOS 7. I have tried to follow the instructions posted here (https://daq00.triumf.ca/DaqWiki/index.php/VME-CPU#V7648_and_V7750_BIOS_Settings). The universe-II kernel module appears to load correctly; dmesg gives:

[ 32.384826] VME: Board is system controller

[ 32.384875] VME: Driver compiled for SMP system

[ 32.384877] VME: Installed VME Universe module version: 3.6.KO6

However, running vmescan.exe fails with a segfault. Running with gdb shows:

vmic_mmap: Mapped VME AM 0x0d addr 0x00000000 size 0x00ffffff at address 0x80a01000

mvme_open:

Bus handle = 0x7

DMA handle = 0x6045d0

DMA area size = 1048576 bytes

DMA physical address = 0x7ffff7eea000

vmic_mmap: Mapped VME AM 0x2d addr 0x00000000 size 0x0000ffff at address 0x86ff0000

Program received signal SIGSEGV, Segmentation fault.

mvme_read_value (mvme=0x604010, vme_addr=<optimized out>)

at /home/jam/midas/packages/midas/drivers/vme/vmic/vmicvme.c:352

352 dst = *((WORD *)addr);

The pointer addr originates from a call to vmic_mapcheck() within the mvme_read_value() function in vmicvme.c. Any help with where to go from here would be appreciated.

-Caleb

|

| 2567 | 02 Aug 2023 | Konstantin Olchanski | Forum | Issues with Universe II Driver |

I maintain the tsi148 and the universe-II drivers. I confirm -KO6 is my latest version, last updated for 32-bit Debian-11, and we still use it at TRIUMF.

It is good news that the vme_universe kernel module built, loaded and reported correct stuff to dmesg.

It is not clear why mvme_read_value() crashed. We need to know the values of vme_addr and addr; can you add printf()s for them using format "%08x" and try again?
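One caveat when printing pointers: "%08x" expects an unsigned int, so on a 64-bit build it shows at most the low 32 bits of a pointer (and passing a pointer where %x expects an int is formally undefined). A minimal sketch of formatting the full value by casting through uintptr_t and using the PRIxPTR macro; FormatAddrs() is a hypothetical helper, and treating vme_addr as a 32-bit value is an assumption.

```cpp
#include <cassert>
#include <cinttypes>
#include <cstdint>
#include <cstdio>
#include <string>

// Hypothetical debug helper: formats a VME bus address and a mapped host pointer without truncation.
// vme_addr is assumed to fit in 32 bits; addr is a host pointer, which may be 64 bits wide.
std::string FormatAddrs(std::uint32_t vme_addr, const void* addr) {
  char buf[80];
  std::snprintf(buf, sizeof(buf), "vme_addr: %08" PRIx32 " addr: 0x%" PRIxPTR,
                vme_addr, reinterpret_cast<std::uintptr_t>(addr));
  return buf;
}
```

In the driver this could be used as `printf("%s\n", FormatAddrs(vme_addr, addr).c_str());`, or simply with `%p` for the pointer.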

K.O. |

| 2570 | 03 Aug 2023 | Caleb Marshall | Forum | Issues with Universe II Driver |

Here is the output:

vmic_mmap: Mapped VME AM 0x0d addr 0x00000000 size 0x00ffffff at address 0x80a01000

mvme_open:

Bus handle = 0x3

DMA handle = 0x158f5d0

DMA area size = 1048576 bytes

DMA physical address = 0x7f91db553000

vmic_mmap: Mapped VME AM 0x2d addr 0x00000000 size 0x0000ffff at address 0x86ff0000

vme addr: 00000000

addr: db543000 |

| 2571 | 03 Aug 2023 | Konstantin Olchanski | Forum | Issues with Universe II Driver |

> Here is the output:

>

> vmic_mmap: Mapped VME AM 0x0d addr 0x00000000 size 0x00ffffff at address 0x80a01000

> mvme_open:

> Bus handle = 0x3

> DMA handle = 0x158f5d0

> DMA area size = 1048576 bytes

> DMA physical address = 0x7f91db553000

> vmic_mmap: Mapped VME AM 0x2d addr 0x00000000 size 0x0000ffff at address 0x86ff0000

> vme addr: 00000000

> addr: db543000

I see the problem. A24 is mapped at 0x80xxxxxx and A16 is mapped at 0x86ffxxxx, but mvme_read computed address 0xdb543000, which is outside both mapped VME windows. ouch.
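The diagnosis above is a range check: a computed pointer is only valid if it falls inside one of the windows that vmic_mmap reported. A minimal sketch using the base addresses and sizes from the log, treating each printed size as the window length in bytes; MappedWindow and InWindow() are hypothetical names, not part of vmicvme.c.

```cpp
#include <cassert>
#include <cstdint>

// One mapped VME window as reported by vmic_mmap: host base address and length in bytes.
struct MappedWindow {
  std::uint64_t base;
  std::uint64_t size;
};

// True if host address a lies inside the window [base, base + size).
bool InWindow(const MappedWindow& w, std::uint64_t a) {
  return a >= w.base && a - w.base < w.size;
}
```

With the A24 window at 0x80a01000 (size 0x00ffffff) and the A16 window at 0x86ff0000 (size 0x0000ffff), the address 0xdb543000 fails both checks, which matches the crash. A check like this before a dereference would turn a segfault into a readable error.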

One more thing to check: AFAIK, this universe-II code was never used on a 64-bit CPU before; we only have 32-bit Pentium-3 and Pentium-4 machines with these chips. The tsi148 code used to work in both 32-bit and 64-bit; we used to have both flavours of CPUs, but now only have 64-bit.

What is your output for "uname -a"? Does it report a 32-bit or 64-bit kernel?

If you feel adventurous, you can build 32-bit midas (cd .../midas; make linux32), compile vmescan.o with "-m32", link vmescan.exe against .../midas/linux-m32/lib, and see if that works. Meanwhile, I can check if vmicvme.c is 64-bit clean. Checking if the kernel module is 64-bit clean would be more difficult...
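One common way code fails to be "64-bit clean" is storing a pointer in a 32-bit integer: on a 32-bit build nothing is lost, while on a 64-bit build the upper half is silently dropped. A minimal sketch of the effect; it does not claim that vmicvme.c does this, and SurvivesTruncation() is a hypothetical name.

```cpp
#include <cassert>
#include <cstdint>

// Round-trips an address through a 32-bit integer, as 32-bit-era code often did
// (for example via a cast to unsigned int or DWORD). Returns true if nothing was lost.
bool SurvivesTruncation(std::uintptr_t addr) {
  const std::uint32_t narrowed = static_cast<std::uint32_t>(addr);  // drops bits 32..63 on 64-bit hosts
  return static_cast<std::uintptr_t>(narrowed) == addr;
}
```

On Linux, sizeof(void*) is 4 in a -m32 build and 8 in a default x86_64 build, which is why the same source can work on the old Pentium machines and fail on a 64-bit kernel.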

K.O. |

| 2572 | 03 Aug 2023 | Caleb Marshall | Forum | Issues with Universe II Driver |

I am looking into compiling 32-bit midas.

In the meantime, here is the kernel info:

3.10.0-1160.11.1.el7.x86_64

Thank you for the help.

-Caleb |

| 2574 | 04 Aug 2023 | Caleb Marshall | Forum | Issues with Universe II Driver |

I can compile 32-bit midas. Unless I am misinterpreting the linking error, I don't think I can use the driver as built.

While trying to compile vme_scan, most of the programs fail with:

/usr/bin/ld: skipping incompatible /usr/lib/gcc/x86_64-redhat-linux/4.8.5/../../../../lib/libvme.so when searching for -lvme

/usr/bin/ld: skipping incompatible /lib/../lib/libvme.so when searching for -lvme

/usr/bin/ld: skipping incompatible /usr/lib/../lib/libvme.so when searching for -lvme

/usr/bin/ld: skipping incompatible /usr/lib/gcc/x86_64-redhat-linux/4.8.5/../../../libvme.so when searching for -lvme

/usr/bin/ld: skipping incompatible //lib/libvme.so when searching for -lvme

/usr/bin/ld: skipping incompatible //usr/lib/libvme.so when searching for -lvme

with libvme.so being built by the universe-II driver. I am not sure I can get around this without messing with the driver. Is it possible to build a 32-bit version of that shared library without having to touch the actual kernel module?

-Caleb |

| 2575 | 04 Aug 2023 | Konstantin Olchanski | Forum | Issues with Universe II Driver |

> I can compile 32 bit midas. Unless I am interpreting the linking error, I don't

> think I can use the driver as built.

I think you are right: the Makefile from the Universe package does not build a -m32 version of libvme.so. I think I can fix that...
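The "skipping incompatible" messages mean ld found a libvme.so whose format does not match the link target, most likely a 64-bit library offered to a -m32 link. The ELF class byte in the file header records this (1 for 32-bit, 2 for 64-bit), and the `file` command reports the same information. A minimal sketch that reads it; ElfClassOfBytes() and ElfClassOf() are hypothetical helpers.

```cpp
#include <cassert>
#include <cstddef>
#include <fstream>
#include <string>

// Returns 32 or 64 for an ELF identification block, or 0 if it is not ELF.
// Byte 4 of the ELF identification (EI_CLASS) is 1 for ELFCLASS32 and 2 for ELFCLASS64.
int ElfClassOfBytes(const unsigned char* ident, std::size_t n) {
  if (n < 5) return 0;
  if (ident[0] != 0x7f || ident[1] != 'E' || ident[2] != 'L' || ident[3] != 'F') return 0;
  if (ident[4] == 1) return 32;
  if (ident[4] == 2) return 64;
  return 0;
}

// Reads the first bytes of a file; returns 0 if it cannot be read or is not ELF.
int ElfClassOf(const std::string& path) {
  std::ifstream f(path, std::ios::binary);
  unsigned char ident[5] = {0, 0, 0, 0, 0};
  if (!f.read(reinterpret_cast<char*>(ident), sizeof(ident))) return 0;
  return ElfClassOfBytes(ident, sizeof(ident));
}
```

For a -m32 link to succeed, every libvme.so that ld considers must report 32.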

K.O. |

| 2576 | 09 Aug 2023 | Konstantin Olchanski | Forum | pull request for PostgreSQL support |

> The compilation of midas was broken by the last modification. The reason is that

> Pgsql *fPgsql = NULL;

> was not protected by #ifdef HAVE_PGSQL

confirmed, my mistake, I forgot to test with "make cmake NO_PGSQL". your fix is correct, thanks.

K.O. |

| 2595 | 08 Sep 2023 | Nick Hastings | Forum | Hide start and stop buttons |

The wiki documents an ODB variable for hiding the Start and Stop buttons on the mhttpd status page:

https://daq00.triumf.ca/MidasWiki/index.php//Experiment_ODB_tree#Start-Stop_Buttons

However, mhttpd reports that this option is obsolete. See commit:

https://bitbucket.org/tmidas/midas/commits/2366eefc6a216dc45154bc4594e329420500dcf7

I note that the same commit also made mhttpd report the "Pause-Resume Buttons" variable as obsolete; however, that code seems to have since been removed.

Is there now some other mechanism to hide the start and stop buttons?

Note that this is for a pure slow control system that does not take runs. |

| 2596 | 08 Sep 2023 | Nick Hastings | Forum | Hide start and stop buttons |

> Is there now some other mechanism to hide the start and stop buttons?

> Note that this is for a pure slow control system that does not take runs.

Just wanted to add that I realize this can be done by copying status.html and/or midas.css to the experiment directory and then modifying them, but I wonder if there is some other preferred way.

|

2599

|

13 Sep 2023 |

Stefan Ritt | Forum | Hide start and stop buttons | Indeed the ODB settings are obsolete. Now that the status page is fully dynamic

(JavaScript), it's much more powerful to modify the status.html page directly. You

can not only hide the buttons, but also remove the run numbers, the running time,

and so on. This is much more flexible than steering things through the ODB.

If there is a general need for that, I can draft a "non-run" based status page, but it's a bit hard to make a one-size-fits-all page. For example, some might even remove the logging channels and the clients, but add certain things such as whether their slow control frontend is running, etc.

Best,

Stefan |

|

2600

|

13 Sep 2023 |

Nick Hastings | Forum | Hide start and stop buttons | Hi Stefan,

> Indeed the ODB settings are obsolete.

I just applied for an account for the wiki. I'll try to add a note regarding this change.

> Now that the status page is fully dynamic

> (JavaScript), it's much more powerful to modify the status.html page directly. You

> can not only hide the buttons, but also remove the run numbers, the running time,

> and so on. This is much more flexible than steering things through the ODB.

Very true. Currently I copied the resources/midas.css into the experiment directory and appended:

#runNumberCell { display: none;}

#runStatusStartTime { display: none;}

#runStatusStopTime { display: none;}

#runStatusSequencer { display: none;}

#logChannel { display: none;}

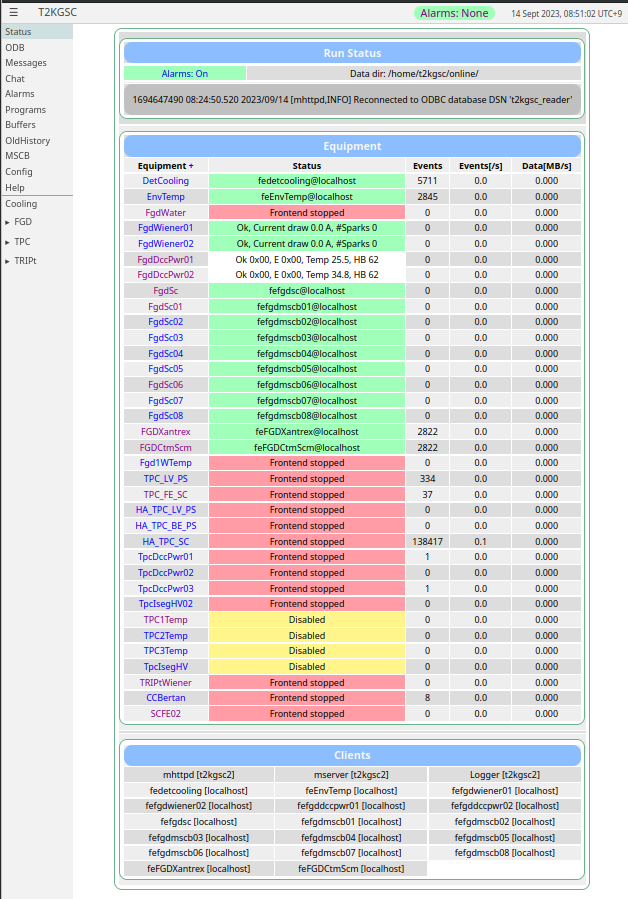

See screenshot attached. :-)

But it feels a little clunky to copy the whole file just to add five lines.

It might be more elegant if status.html looked for a user CSS file in addition to the default ones.

> If there is a general need for that, I can draft a "non-run" based status page, but

> it's a bit hard to make a one-fits-all. Like some might even remove the logging

> channels and the clients, but add certain things like if their slow control front-

> end is running etc.

The logging channels are easily removed with the CSS (see attachment), but it might be nice if the "Run Status" table title string were also configurable by CSS. For this slow control system I'd probably change it to something like "GSC Status". Again, this is a minor thing; I could trivially do this by copying resources/status.html to the experiment directory and editing it.

Lots of fun new stuff migrating from circa 2012 midas to midas-2022-05-c :-)

Cheers,

Nick. |

| Attachment 1: screenshot-20230914-085054.png |

| 2601 | 13 Sep 2023 | Stefan Ritt | Forum | Hide start and stop buttons |

> Hi Stefan,

>

> > Indeed the ODB settings are obsolete.

>

> I just applied for an account for the wiki.

> I'll try add a note regarding this change.

Please coordinate with Ben Smith at TRIUMF <bsmith@triumf.ca>, who coordinates the documentation.

> Very true. Currently I copied the resources/midas.css into the experiment directory and appended:

>

> #runNumberCell { display: none;}

> #runStatusStartTime { display: none;}

> #runStatusStopTime { display: none;}

> #runStatusSequencer { display: none;}

> #logChannel { display: none;}

>

> See screenshot attached. :-)

>

> But if feels a little clunky to copy the whole file just to add five lines.

> It might be more elegant if status.html looked for a user css file in addition

> to the default ones.

I would not change the CSS file. You can only hide some tables. But after a while I'm sure you will want to ADD new things, which you can only do by editing the status.html file. You don't have to change midas/resources/status.html; you can make your own "custom status" page, name it differently, and link /Custom/Default in the ODB to it. This way it does not get overwritten when you pull midas.

> The logging channels are easily removed with the css (see attachment), but it might be

> nice if the string "Run Status" table title was also configurable by css. For this

> slow control system I'd probably change it to something like "GSC Status". Again

> this is a minor thing, I could trivially do this by copying the resources/status.html

> to the experiment directory and editing it.

See above. I agree that the status.html file is a bit complicated and not as easy to understand as the CSS file, but you can do much more by editing it.

> Lots of fun new stuff migrating from circa 2012 midas to midas-2022-05-c :-)

I always advise people to pull frequently; they benefit from the newest features and avoid the huge amount of work of migrating from a 10-year-old version.

Best,

Stefan |

| 2602 | 14 Sep 2023 | Konstantin Olchanski | Forum | Hide start and stop buttons |

I believe the original "hide run start / stop" was added specifically for ND280 GSC MIDAS. I do not know why it was removed. "hide pause / resume" is still there. I will restore them. Hiding the logger channel section should probably be automatic if there is no /logger/channels; I can check if it works and what happens if there is more than one logger channel. K.O. |

| 2603 | 14 Sep 2023 | Nick Hastings | Forum | Hide start and stop buttons |

Hi

> > > Indeed the ODB settings are obsolete.

> >

> > I just applied for an account for the wiki.

> > I'll try add a note regarding this change.

>

> Please coordinate with Ben Smith at TRIUMF <bsmith@triumf.ca>, who coordinates the documentation.

I will tread lightly.

> I would not go to change the CSS file. You only can hide some tables. But in a while I'm sure you

> want to ADD new things, which you only can do by editing the status.html file. You don't have to

> change midas/resources/status.html, but can make your own "custom status", name it differently, and

> link /Custom/Default in the ODB to it. This way it does not get overwritten if you pull midas.

We have *many* custom pages. The submenus on the status page:

▸ FGD

▸ TPC

▸ TRIPt

hide custom pages with all sorts of good stuff.

> > The logging channels are easily removed with the css (see attachment), but it might be

> > nice if the string "Run Status" table title was also configurable by css. For this

> > slow control system I'd probably change it to something like "GSC Status". Again

> > this is a minor thing, I could trivially do this by copying the resources/status.html

> > to the experiment directory and editing it.

>

> See above. I agree that the status.html file is a bit complicated and not so easy to understand

> as the CSS file, but you can do much more by editing it.

I may end up doing this, since the events and data columns do not provide particularly useful information in this instance. But for now, the CSS route seems like a quick and fairly clean way to remove irrelevant stuff from a prominent place at the top of the page.

> > Lots of fun new stuff migrating from circa 2012 midas to midas-2022-05-c :-)

>

> I always advise people to frequently pull, they benefit from the newest features and avoid the

> huge amount of work to migrate from a 10 year old version.

The long delay was not my choice. The group responsible for the system departed in 2018 and was not replaced by the experiment management. Lack of personnel/expertise resulted in an "if it's not broken, don't fix it" situation. Eventually, the need to update the PCs/OSs and the imminent introduction of new sub-detectors led people to agree to the update.

Cheers,

Nick. |

| 2604 | 14 Sep 2023 | Stefan Ritt | Forum | Hide start and stop buttons |

> I believe the original "hide run start / stop" was added specifically for ND280 GSC MIDAS. I do not know

> why it was removed. "hide pause / resume" is still there. I will restore them. Hiding logger channel

> section should probably be automatic of there is no /logger/channels, I can check if it works and what

> happens if there is more than one logger channel. K.O.

Very likely it was "forgotten" when the status page was converted to a dynamic page by Shouyi Ma. Since he is no longer around, it's up to us to adapt status.html if needed.

Stefan |

|