| ID | Date | Author | Topic | Subject |

|

2185

|

28 May 2021 |

Konstantin Olchanski | Info | MidasConfig.cmake usage | How does "find_package (Midas REQUIRED)" find the location of MIDAS?

The best I can tell from the current code, the package config files are installed

inside $MIDASSYS somewhere and I see "find_package MIDAS" never find them (indeed,

find_package() does not know about $MIDASSYS, so it has to use telepathy or something).

Does anybody actually use "find_package(midas)", does it actually work for anybody?

Also it appears that "the cmake way" of importing packages is to use

the install(EXPORT) method.

In this scheme, the user package does this:

include(${MIDASSYS}/lib/midas-targets.cmake)

target_link_libraries(myprogram PUBLIC midas)

this causes all the midas include directories (including mxml, etc)

and dependency libraries (-lutil, -lpthread, etc) to be automatically

added to "myprogram" compilation and linking.

of course MIDAS has to generate a sensible targets export file,

working on it now.
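
For illustration, a minimal sketch of what the MIDAS side of install(EXPORT) could look like. This is an assumption, not the actual MIDAS CMakeLists.txt: the stub source, the include path, the HAVE_ZLIB definition and the list of system libraries are placeholders taken from the flags mentioned in this thread, and the Linux library names (util, rt) are assumed.

```cmake
cmake_minimum_required(VERSION 3.10)
project(export_sketch CXX)

# Hypothetical stand-in for the real library sources, generated so that
# the sketch configures and builds on its own.
file(WRITE ${CMAKE_CURRENT_BINARY_DIR}/stub.cxx
  "int midas_stub() { return 0; }\n")
add_library(midas STATIC ${CMAKE_CURRENT_BINARY_DIR}/stub.cxx)

# Usage requirements attached to the target are written into the export file.
# The include directory differs between the build tree and the install tree.
target_include_directories(midas PUBLIC
  $<BUILD_INTERFACE:${CMAKE_CURRENT_SOURCE_DIR}/include>
  $<INSTALL_INTERFACE:include>)
target_compile_definitions(midas PUBLIC HAVE_ZLIB)      # assumed flag
target_link_libraries(midas PUBLIC z util rt pthread)   # assumed Linux deps

# Install the library and generate <prefix>/lib/midas-targets.cmake.
install(TARGETS midas EXPORT midas-targets ARCHIVE DESTINATION lib)
install(EXPORT midas-targets DESTINATION lib)
```

With CMAKE_INSTALL_PREFIX pointing at $MIDASSYS, the install step would write lib/midas-targets.cmake, which is the file the user package includes.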

K.O. |

|

2186

|

28 May 2021 |

Marius Koeppel | Info | MidasConfig.cmake usage | > Does anybody actually use "find_package(midas)", does it actually work for anybody?

What we do is to include midas as a submodule and then we call find_package:

add_subdirectory(midas)

list(APPEND CMAKE_PREFIX_PATH ${CMAKE_CURRENT_SOURCE_DIR}/midas)

find_package(Midas REQUIRED)

For us it works fine like this but we kind of always compile Midas fresh and don't use a version on our system (keeping the newest version).

Without the find_package the build does not work for us. |

|

2187

|

28 May 2021 |

Konstantin Olchanski | Info | MidasConfig.cmake usage | > > Does anybody actually use "find_package(midas)", does it actually work for anybody?

>

> What we do is to include midas as a submodule and than we call find_package:

>

> add_subdirectory(midas)

> list(APPEND CMAKE_PREFIX_PATH ${CMAKE_CURRENT_SOURCE_DIR}/midas)

> find_package(Midas REQUIRED)

>

> For us it works fine like this but we kind of always compile Midas fresh and don't use a version on our system (keeping the newest version).

>

> Without the find_package the build does not work for us.

Ok, I see. I now think that for us, this "find_package" business is an unnecessary complication:

since one has to know where midas is in order to add it to CMAKE_PREFIX_PATH,

one might as well import the midas targets directly by include(.../midas/lib/midas-targets.cmake).

From what I see now, the cmake file is much simplified by converting

it from "find_package(midas)" style MIDAS_INCLUDES & co to more cmake-ish

target_link_libraries(myexe midas) - all the compiler switches, include paths,

dependent libraries and gunk are handled by cmake automatically.

I am not touching the "find_package(midas)" business, so it should continue to work, then.

K.O. |

|

2190

|

31 May 2021 |

Stefan Ritt | Info | MidasConfig.cmake usage | MidasConfig.cmake might at some point get included in the standard Cmake installation (or some add-on). It will then reside in the Cmake system path

and you don't have to explicitly know where this is. Just the find_package(Midas) will then be enough.

Even if it's not there, the find_package() is the "traditional" way CMake discovers external packages and users are used to that (like ROOT does the

same). In comparison, your "midas-targets.cmake" way of doing things, although this works certainly fine, is not the "standard" way, but a midas-

specific solution, other people have to learn extra.

Stefan |

|

2192

|

02 Jun 2021 |

Konstantin Olchanski | Info | MidasConfig.cmake usage | > MidasConfig.cmake might at some point get included in the standard Cmake installation (or some add-on). It will then reside in the Cmake system path

> and you don't have to explicitly know where this is. Just the find_package(Midas) will then be enough.

Hi, Stefan, can you say more about this? If MidasConfig.cmake is part of the cmake distribution,

(did I understand you right here?) and is installed into a system-wide directory,

how can it know to use midas from /home/agmini/packages/midas or from /home/olchansk/git/midas?

Certainly we do not do system wide install of midas (into /usr/local/bin or whatever) because

typically different experiments running on the same computer use different versions of midas.

For ROOT, it looks as if for find_package(ROOT) to work, one has to add $ROOTSYS to the Cmake package

search path. This is what we do in our cmake build.

As for find_package() vs install(EXPORT), we may have the same situation as with my "make cmake",

where my one line solution is no good for people who prefer to type 3 lines of commands.

Specifically, the install(EXPORT) method defines the "midas" target which brings with it

all its dependent include paths, libraries and compile flags. So to link midas you need

two lines:

include(.../midas/lib/midas-targets.cmake)

target_link_libraries(myexe midas)

target_link_libraries(myfrontend mfe)

whereas find_package() defines a bunch of variables (the best I can tell) and one has

to add them to the include paths and library paths and compile flags "by hand".

I do not know how find_package() handles the separate libmidas, libmfe and librmana. (and

the separate libmanalyzer and libmanalyzer_main).
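
To make the comparison concrete, here is a sketch of the two styles side by side in one user CMakeLists.txt. It assumes MIDASSYS is set in the environment, that myexe.cxx is the user's own source file, and that MidasConfig.cmake sets MIDAS_INCLUDE_DIRS and MIDAS_LIBRARY_DIRS as discussed in this thread. USE_MIDAS_TARGETS is a hypothetical switch added only for the comparison, and the explicit system libraries in the second branch are a guess that depends on the platform.

```cmake
cmake_minimum_required(VERSION 3.13)
project(consumer_sketch CXX)

# Assumption: MIDASSYS is set in the environment and points at a midas tree.
set(MIDASSYS "$ENV{MIDASSYS}")

# Hypothetical switch, only here to show the two styles as alternatives.
option(USE_MIDAS_TARGETS "Use the install(EXPORT) targets file" ON)

add_executable(myexe myexe.cxx)

if(USE_MIDAS_TARGETS)
  # install(EXPORT) style: the imported target carries include paths,
  # compile definitions and dependency libraries.
  include(${MIDASSYS}/lib/midas-targets.cmake)
  target_link_libraries(myexe PUBLIC midas)
else()
  # find_package() style: variables are applied by hand.
  list(APPEND CMAKE_PREFIX_PATH ${MIDASSYS})
  find_package(Midas REQUIRED)
  target_include_directories(myexe PRIVATE ${MIDAS_INCLUDE_DIRS})
  target_link_directories(myexe PRIVATE ${MIDAS_LIBRARY_DIRS})
  # Dependency libraries have to be listed explicitly (assumed set).
  target_link_libraries(myexe PRIVATE midas util rt pthread)
endif()
```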

K.O. |

|

2205

|

04 Jun 2021 |

Konstantin Olchanski | Info | MidasConfig.cmake usage | > find_package(Midas)

I am testing find_package(Midas). There are a number of problems:

1) ${MIDAS_LIBRARIES} is set to "midas;midas-shared;midas-c-compat;mfe".

This seems to be an incomplete list of all libraries built by midas (rmana is missing).

This means ${MIDAS_LIBRARIES} should not be used for linking midas programs (unlike ${ROOT_LIBRARIES}, etc):

- we discourage use of midas shared library because it always leads to problems with shared library version mismatch (static linking is preferred)

- midas-c-compat is for building python interfaces, not for linking midas programs

- mfe contains a main() function, it will collide with the user main() function

So I think this should be changed to just "midas" and midas linking dependency

libraries (-lutil, -lrt, -lpthread) should also be added to this list.

Of course the "install(EXPORT)" method does all this automatically. (so my fixing find_package(Midas) is a waste of time)

2) ${MIDAS_INCLUDE_DIRS} is missing the mxml, mjson, mvodb, midasio submodule directories

Again, install(EXPORT) handles all this automatically, in find_package(Midas) it has to be done by hand.

Anyhow, this is easy to add, but it does me no good in the rootana cmake if I want to build against old versions

of midas. So in the rootana cmake, I still have to add $MIDASSYS/mvodb & co by hand. Messy.

I do not know the history of cmake and why they have two ways of doing things (find_package and install(EXPORT)),

this second method seems to be much simpler, everything is exported automatically into one file,

and it is much easier to use (include the export file and say target_link_libraries(rootana PUBLIC midas)).

So how much time should I spend in fixing find_package(Midas) to make it generally usable?

- include path is incomplete

- library list is nonsense

- compiler flags are not exported (we do not need -DOS_LINUX, but we do need -DHAVE_ZLIB, etc)

- dependency libraries are not exported (-lz, -lutil, -lrt, -lpthread, etc)
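
As a rough idea of what fixing these four items by hand would involve, a sketch of a config-file fragment follows. It is hypothetical and not the MidasConfig.cmake shipped with MIDAS: the directory layout under MIDASSYS, the MIDAS_COMPILE_DEFINITIONS variable name and the dependency list are assumptions based on the names mentioned above.

```cmake
# Hypothetical MidasConfig.cmake fragment; not the file shipped with MIDAS.
# Assumption: MIDASSYS is set in the environment and points at the midas tree.
if(NOT DEFINED ENV{MIDASSYS})
  message(FATAL_ERROR "MIDASSYS is not set in the environment")
endif()
set(MIDASSYS "$ENV{MIDASSYS}")

# Include path: main headers plus the submodule directories (assumed layout).
set(MIDAS_INCLUDE_DIRS
  ${MIDASSYS}/include
  ${MIDASSYS}/mxml
  ${MIDASSYS}/mjson
  ${MIDASSYS}/mvodb
  ${MIDASSYS}/midasio)

# Library list: only the static midas library plus its system dependencies.
set(MIDAS_LIBRARY_DIRS ${MIDASSYS}/lib)
set(MIDAS_LIBRARIES midas z util rt pthread)

# Compiler flags needed by the midas headers (hypothetical variable name).
set(MIDAS_COMPILE_DEFINITIONS HAVE_ZLIB)
```

Every one of these lists has to be kept in sync with the midas build by hand, which is the maintenance cost that the auto-generated export file avoids.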

K.O. |

|

2207

|

04 Jun 2021 |

Konstantin Olchanski | Info | MidasConfig.cmake usage | > > find_package(Midas)

>

> So how much time should I spend in fixing find_package(Midas) to make it generally usable?

>

> - include path is incomplete

> - library list is nonsense

> - compiler flags are not exported (we do not need -DOS_LINUX, but we do need -DHAVE_ZLIB, etc)

> - dependency libraries are not exported (-lz, -lutil, -lrt, -lpthread, etc)

>

I think I give up on find_package(Midas). It seems like a lot of work to straighten

all this out, when install(EXPORT) does it all automatically and is easier to use

for building user frontends and analyzers.

K.O. |

|

2225

|

20 Jun 2021 |

Lukas Gerritzen | Suggestion | MidasConfig.cmake usage | I agree that those two things are problems, but I don't see why it is preferable to leave the MidasConfig.cmake in this "broken" state. For us

problem 1 is less of an issue, because we run "link_directories(${MIDAS_LIBRARY_DIRS})" in the top CMakeLists.txt and then just link against "midas",

not "${MIDAS_LIBRARIES}". However, number 2 would be nice, to not manually hack in target_include_directories(target ${MIDASSYS}/mscb/include),

especially because ${MIDASSYS} is not set in cmake.

I see two solutions for problem 2: Treat mscb as a submodule and compile and install it together with midas, or add the include directory to

${MIDAS_INCLUDE_DIRS} (same applies to the other submodules, mscb is the one that made me open this elog just now)

Cheers

Lukas

> > find_package(Midas)

>

> I am testing find_package(Midas). There is a number of problems:

>

> 1) ${MIDAS_LIBRARIES} is set to "midas;midas-shared;midas-c-compat;mfe".

>

> This seem to be an incomplete list of all libraries build by midas (rmana is missing).

>

> This means ${MIDAS_LIBRARIES} should not be used for linking midas programs (unlike ${ROOT_LIBRARIES}, etc):

>

> - we discourage use of midas shared library because it always leads to problems with shared library version mismatch (static linking is preferred)

> - midas-c-compat is for building python interfaces, not for linking midas programs

> - mfe contains a main() function, it will collide with the user main() function

>

> So I think this should be changed to just "midas" and midas linking dependancy

> libraries (-lutil, -lrt, -lpthread) should also be added to this list.

>

> Of course the "install(EXPORT)" method does all this automatically. (so my fixing find_package(Midas) is a waste of time)

>

> 2) ${MIDAS_INCLUDE_DIRS} is missing the mxml, mjson, mvodb, midasio submodule directories

>

> Again, install(EXPORT) handles all this automatically, in find_package(Midas) it has to be done by hand.

>

> Anyhow, this is easy to add, but it does me no good in the rootana cmake if I want to build against old versions

> of midas. So in the rootana cmake, I still have to add $MIDASSYS/mvodb & co by hand. Messy.

>

> I do not know the history of cmake and why they have two ways of doing things (find_package and install(EXPORT)),

> this second method seems to be much simpler, everything is exported automatically into one file,

> and it is much easier to use (include the export file and say target_link_libraries(rootana PUBLIC midas)).

>

> So how much time should I spend in fixing find_package(Midas) to make it generally usable?

>

> - include path is incomplete

> - library list is nonsense

> - compiler flags are not exported (we do not need -DOS_LINUX, but we do need -DHAVE_ZLIB, etc)

> - dependency libraries are not exported (-lz, -lutil, -lrt, -lpthread, etc)

>

> K.O. |

|

2226

|

20 Jun 2021 |

Konstantin Olchanski | Suggestion | MidasConfig.cmake usage | > I agree that those two things are problems, but I don't see why it is preferable to leave the MidasConfig.cmake in this "broken" state. For us

> problem 1 is less of an issue, becaues we run "link_directories(${MIDAS_LIBRARY_DIRS})" in the top CMakeLists.txt and then just link against "midas",

> not "${MIDAS_LIBRARIES}". However, number 2 would be nice, to not manually hack in target_include_directories(target ${MIDASSYS}/mscb/include),

> especially because ${MIDASSYS} is not set in cmake.

So you say "nuke ${MIDAS_LIBRARIES}" and "fix ${MIDAS_INCLUDE}". Ok.

Problem still remains with required auxiliary libraries for linking "-lmidas". Sometimes you

need "-lutil" and "-lrt" and "-lpthread", sometimes not. Some way to pass this information

automatically would be nice.

Problem still remains that I cannot do these changes because I have no test harness

for any of this. Would be great if you could contribute this and post the documentation

blurb that we can paste into the midas wiki documentation.

And I still do not understand why we have to do all this work when cmake "install(EXPORT)"

already does all of this automatically. What am I missing?

K.O.

>

> I see two solutions for problem 2: Treat mscb as a submodule and compile and install it together with midas, or add the include directory to

> ${MIDAS_INCLUDE_DIRS} (same applies to the other submodules, mscb is the one that made me open this elog just now)

>

> Cheers

> Lukas

>

> > > find_package(Midas)

> >

> > I am testing find_package(Midas). There is a number of problems:

> >

> > 1) ${MIDAS_LIBRARIES} is set to "midas;midas-shared;midas-c-compat;mfe".

> >

> > This seem to be an incomplete list of all libraries build by midas (rmana is missing).

> >

> > This means ${MIDAS_LIBRARIES} should not be used for linking midas programs (unlike ${ROOT_LIBRARIES}, etc):

> >

> > - we discourage use of midas shared library because it always leads to problems with shared library version mismatch (static linking is preferred)

> > - midas-c-compat is for building python interfaces, not for linking midas programs

> > - mfe contains a main() function, it will collide with the user main() function

> >

> > So I think this should be changed to just "midas" and midas linking dependancy

> > libraries (-lutil, -lrt, -lpthread) should also be added to this list.

> >

> > Of course the "install(EXPORT)" method does all this automatically. (so my fixing find_package(Midas) is a waste of time)

> >

> > 2) ${MIDAS_INCLUDE_DIRS} is missing the mxml, mjson, mvodb, midasio submodule directories

> >

> > Again, install(EXPORT) handles all this automatically, in find_package(Midas) it has to be done by hand.

> >

> > Anyhow, this is easy to add, but it does me no good in the rootana cmake if I want to build against old versions

> > of midas. So in the rootana cmake, I still have to add $MIDASSYS/mvodb & co by hand. Messy.

> >

> > I do not know the history of cmake and why they have two ways of doing things (find_package and install(EXPORT)),

> > this second method seems to be much simpler, everything is exported automatically into one file,

> > and it is much easier to use (include the export file and say target_link_libraries(rootana PUBLIC midas)).

> >

> > So how much time should I spend in fixing find_package(Midas) to make it generally usable?

> >

> > - include path is incomplete

> > - library list is nonsense

> > - compiler flags are not exported (we do not need -DOS_LINUX, but we do need -DHAVE_ZLIB, etc)

> > - dependency libraries are not exported (-lz, -lutil, -lrt, -lpthread, etc)

> >

> > K.O. |

|

2228

|

22 Jun 2021 |

Lukas Gerritzen | Suggestion | MidasConfig.cmake usage | > So you say "nuke ${MIDAS_LIBRARIES}" and "fix ${MIDAS_INCLUDE}". Ok.

A more moderate option would be to remove mfe from ${MIDAS_LIBRARIES}, but as far as I understand mfe is not the only problem, so nuking might be the

better option after all. In addition, setting ${MIDASSYS} in MidasConfig.cmake would probably improve compatibility.

>Sometimes you need "-lutil" and "-lrt" and "-lpthread", sometimes not.

>Some way to pass this information automatically would be nice.

I do not properly understand when you need this and when not, but can't this be communicated with the PUBLIC keyword of target_link_libraries()? Once I

understand whether we can use PUBLIC for -lutil, -lrt and -lpthread, I can write something, test it here and create a pull request.
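
A small self-contained sketch of the PUBLIC idea, using a stand-in library instead of midas. The names fakemidas and myexe and the generated stub sources are hypothetical, and the rule for when util and rt are needed (Linux only here) is an assumption made for the example.

```cmake
cmake_minimum_required(VERSION 3.10)
project(public_link_sketch CXX)

# Generate tiny stand-in sources so the sketch builds on its own.
file(WRITE ${CMAKE_CURRENT_BINARY_DIR}/fakemidas.cxx
  "int fake_midas_call() { return 0; }\n")
file(WRITE ${CMAKE_CURRENT_BINARY_DIR}/main.cxx
  "int fake_midas_call();\nint main() { return fake_midas_call(); }\n")

add_library(fakemidas STATIC ${CMAKE_CURRENT_BINARY_DIR}/fakemidas.cxx)

# PUBLIC puts these libraries into the INTERFACE_LINK_LIBRARIES property of
# fakemidas, so every target linking fakemidas also links them.
target_link_libraries(fakemidas PUBLIC pthread)
if(CMAKE_SYSTEM_NAME STREQUAL "Linux")
  # Assumed condition for the "sometimes needed" libraries.
  target_link_libraries(fakemidas PUBLIC util rt)
endif()

# The user program names only fakemidas; the rest is propagated.
add_executable(myexe ${CMAKE_CURRENT_BINARY_DIR}/main.cxx)
target_link_libraries(myexe PRIVATE fakemidas)

# Show what would be propagated to users of the library.
get_target_property(deps fakemidas INTERFACE_LINK_LIBRARIES)
message(STATUS "fakemidas INTERFACE_LINK_LIBRARIES: ${deps}")
```

The platform decision is made once, on the library side, and the user's CMakeLists.txt stays the same either way.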

> And I still do not understand why we have to do all this work when cmake "import(EXPORT)"

> already does all of this automatically. What am I missing?

Does this not require midas to be built every time you import it? I know, it's a bit the "billions of flies can't be wrong" argument, but I've never seen

any package that uses install(EXPORT) over find_package().

> > I agree that those two things are problems, but I don't see why it is preferable to leave the MidasConfig.cmake in this "broken" state. For us

> > problem 1 is less of an issue, becaues we run "link_directories(${MIDAS_LIBRARY_DIRS})" in the top CMakeLists.txt and then just link against "midas",

> > not "${MIDAS_LIBRARIES}". However, number 2 would be nice, to not manually hack in target_include_directories(target ${MIDASSYS}/mscb/include),

> > especially because ${MIDASSYS} is not set in cmake.

>

> So you say "nuke ${MIDAS_LIBRARIES}" and "fix ${MIDAS_INCLUDE}". Ok.

>

> Problem still remains with required auxiliary libraries for linking "-lmidas". Sometimes you

> need "-lutil" and "-lrt" and "-lpthread", sometimes not. Some way to pass this information

> automatically would be nice.

>

> Problem still remains that I cannot do these changes because I have no test harness

> for any of this. Would be great if you could contribute this and post the documentation

> blurb that we can paste into the midas wiki documentation.

>

> And I still do not understand why we have to do all this work when cmake "import(EXPORT)"

> already does all of this automatically. What am I missing?

>

> K.O.

>

> >

> > I see two solutions for problem 2: Treat mscb as a submodule and compile and install it together with midas, or add the include directory to

> > ${MIDAS_INCLUDE_DIRS} (same applies to the other submodules, mscb is the one that made me open this elog just now)

> >

> > Cheers

> > Lukas

> >

> > > > find_package(Midas)

> > >

> > > I am testing find_package(Midas). There is a number of problems:

> > >

> > > 1) ${MIDAS_LIBRARIES} is set to "midas;midas-shared;midas-c-compat;mfe".

> > >

> > > This seem to be an incomplete list of all libraries build by midas (rmana is missing).

> > >

> > > This means ${MIDAS_LIBRARIES} should not be used for linking midas programs (unlike ${ROOT_LIBRARIES}, etc):

> > >

> > > - we discourage use of midas shared library because it always leads to problems with shared library version mismatch (static linking is preferred)

> > > - midas-c-compat is for building python interfaces, not for linking midas programs

> > > - mfe contains a main() function, it will collide with the user main() function

> > >

> > > So I think this should be changed to just "midas" and midas linking dependancy

> > > libraries (-lutil, -lrt, -lpthread) should also be added to this list.

> > >

> > > Of course the "install(EXPORT)" method does all this automatically. (so my fixing find_package(Midas) is a waste of time)

> > >

> > > 2) ${MIDAS_INCLUDE_DIRS} is missing the mxml, mjson, mvodb, midasio submodule directories

> > >

> > > Again, install(EXPORT) handles all this automatically, in find_package(Midas) it has to be done by hand.

> > >

> > > Anyhow, this is easy to add, but it does me no good in the rootana cmake if I want to build against old versions

> > > of midas. So in the rootana cmake, I still have to add $MIDASSYS/mvodb & co by hand. Messy.

> > >

> > > I do not know the history of cmake and why they have two ways of doing things (find_package and install(EXPORT)),

> > > this second method seems to be much simpler, everything is exported automatically into one file,

> > > and it is much easier to use (include the export file and say target_link_libraries(rootana PUBLIC midas)).

> > >

> > > So how much time should I spend in fixing find_package(Midas) to make it generally usable?

> > >

> > > - include path is incomplete

> > > - library list is nonsense

> > > - compiler flags are not exported (we do not need -DOS_LINUX, but we do need -DHAVE_ZLIB, etc)

> > > - dependency libraries are not exported (-lz, -lutil, -lrt, -lpthread, etc)

> > >

> > > K.O. |

|

2230

|

24 Jun 2021 |

Konstantin Olchanski | Suggestion | MidasConfig.cmake usage | > > So you say "nuke ${MIDAS_LIBRARIES}" and "fix ${MIDAS_INCLUDE}". Ok.

> A more moderate option ...

For the record, I did not disappear. I have a very short time window

to complete commissioning the alpha-g daq (now that the network

and the event builder are cooperating). To add to the fun, our high voltage

power supply turned into a pumpkin, so plotting voltages and currents

on the same history plot at the same time (like we used to be able to do)

went up in priority.

K.O. |

|

2259

|

11 Jul 2021 |

Konstantin Olchanski | Suggestion | MidasConfig.cmake usage | > > > So you say "nuke ${MIDAS_LIBRARIES}" and "fix ${MIDAS_INCLUDE}". Ok.

> > A more moderate option ...

>

> For the record, I did not disappear. I have a very short time window

> to complete commissioning the alpha-g daq (now that the network

> and the event builder are cooperating). To add to the fun, our high voltage

> power supply turned into a pumpkin, so plotting voltages and currents

> on the same history plot at the same time (like we used to be able to do)

> went up in priority.

>

in the latest update, find_package(midas) should work correctly, the include path is right,

the library list is right.

please test.

I find that the cmake install(export) method is simpler on the user side (just one line of

code) and is easier to support on the midas side (config file is auto-generated).

I request that proponents of the find_package(midas) method contribute the documentation and

example on how to use it. (see my other message).

K.O. |

|

2261

|

13 Jul 2021 |

Stefan Ritt | Info | MidasConfig.cmake usage | Thanks for the contribution of MidasConfig.cmake. May I kindly ask for one extension:

Many of our frontends require inclusion of some midas-supplied drivers and libraries

residing under

$MIDASSYS/drivers/class/

$MIDASSYS/drivers/device

$MIDASSYS/mscb/src/

$MIDASSYS/src/mfe.cxx

I guess this can be easily added by defining a MIDAS_SOURCES in MidasConfig.cmake, so

that I can do things like:

add_executable(my_fe

myfe.cxx

${MIDAS_SOURCES}/src/mfe.cxx

${MIDAS_SOURCES}/drivers/class/hv.cxx

...)

Does this make sense or is there a more elegant way for that?

Stefan |

|

2262

|

13 Jul 2021 |

Konstantin Olchanski | Info | MidasConfig.cmake usage | > $MIDASSYS/drivers/class/

> $MIDASSYS/drivers/device

> $MIDASSYS/mscb/src/

> $MIDASSYS/src/mfe.cxx

>

> I guess this can be easily added by defining a MIDAS_SOURCES in MidasConfig.cmake, so

> that I can do things like:

>

> add_executable(my_fe

> myfe.cxx

> $(MIDAS_SOURCES}/src/mfe.cxx

> ${MIDAS_SOURCES}/drivers/class/hv.cxx

> ...)

1) remove ${MIDAS_SOURCES}/src/mfe.cxx from "add_executable", add "mfe" to

target_link_libraries() as in examples/experiment/frontend:

add_executable(frontend frontend.cxx)

target_link_libraries(frontend mfe midas)

2) ${MIDAS_SOURCES}/drivers/class/hv.cxx surely is ${MIDASSYS}/drivers/...

If MIDAS is built with non-default CMAKE_INSTALL_PREFIX, "drivers" and co are not

available, as we do not "install" them. Where MIDASSYS should point in this case is

anybody's guess. To run MIDAS, $MIDASSYS/resources is needed, but we do not install

them either, so they are not available under CMAKE_INSTALL_PREFIX and setting

MIDASSYS to same place as CMAKE_INSTALL_PREFIX would not work.
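
Putting 1) and 2) together, a frontend CMakeLists.txt could look roughly like the sketch below. It assumes MIDASSYS is set in the environment and points at the midas source tree (so that drivers/ is present), that the midas-targets.cmake export file defines both the mfe and midas targets, and that myfe.cxx is the user's own file. The extra include directory is an assumption for user code that includes the driver header.

```cmake
cmake_minimum_required(VERSION 3.10)
project(my_fe_sketch CXX)

# Assumption: MIDASSYS points at the midas source tree, not an install prefix.
set(MIDASSYS "$ENV{MIDASSYS}")
include(${MIDASSYS}/lib/midas-targets.cmake)

# The class driver is compiled from the midas tree; mfe.cxx is not listed,
# it comes in through the mfe library target instead.
add_executable(my_fe
  myfe.cxx
  ${MIDASSYS}/drivers/class/hv.cxx)

# Assumed: lets myfe.cxx find the class driver header.
target_include_directories(my_fe PRIVATE ${MIDASSYS}/drivers/class)

target_link_libraries(my_fe PRIVATE mfe midas)
```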

I still think this whole business of installing into non-default CMAKE_INSTALL_PREFIX

location has not been thought through well enough. Too much thinking about how cmake works

and not enough thinking about how MIDAS works and how MIDAS is used. Good example

of "my tool is a hammer, everything else must have the shape of a nail".

K.O. |

|

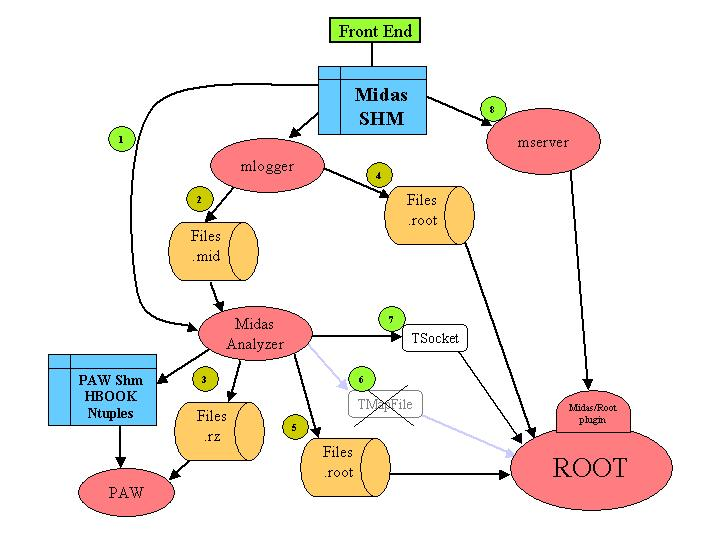

37

|

02 Jul 2003 |

Pierre-André Amaudruz | | Midas/ROOT Analyser situation | The current and future situation of the Midas analyzer is summarized in the

attachment below.

Box explanation:

================

Front end:

---------

Midas code for accessing/gathering the hardware information into the Midas

format.

Midas SHM:

---------

Midas back end shared memory where the front end data are sent to.

mlogger:

-------

Data logger collecting the midas events and storing them on a physical

logging device (Disk, Tape)

Midas Analyzer:

--------------

Midas client for event-by-event analysis. Incoming data can be either online

or offline.

mserver:

-------

Subprocess interfacing external (remote) midas client to the centralized

data collection and database system.

PAW:

---

Standalone physics data analyzer (CERN).

ROOT:

----

Standalone Physics data analyser (CERN).

This diagram represents the data path from the Frontend to the analyzer in

online and offline mode. Each data path is annotated with a circled number

discussed below. In all cases, the data will flow from the front end

application to the midas back end data buffers which reside in a specific

shared memory for a given experiment.

Path:

(1): From the shared memory, the midas analyzer can request events directly

and process them for output to various destinations.

(2): The data logger is a specific application which stores all the data to

a storage media such as a disk or tape. This path is specific to the

creation of the file.mid file format. The actual storage file in this .mid

format can be read out later on by the midas analyzer.

(3): The Midas analyzer has been developed originally for interfacing to the

PAW analyzer which uses its own shared memory segment for online display.

The analyzer can also save the data into a specific data format consistent

with PAW (HBOOK and Ntuples, extension .rz).

(4): Presently the data logger supports creation of the ROOT file format.

This file contains the midas event-by-event data in the form of a Tree. This

file is fully compatible with ROOT and therefore can be read out by the

standard ROOT application.

(5): Like the data logger, the analyzer, whether receiving data from the data

buffer or reading it from a .mid file, can apply an event-by-event analysis

and on request produce a compliant ROOT file for further analysis. This

.root file can be composed of Trees as well as histograms.

(6): The possibility of ONLINE ROOT analysis has been implemented in a first

stage through the TMapFile (ROOT shared memory). While this configuration is

still in use in an experiment, the intention is to deprecate it and replace it

with the data path (7).

(7): This path uses the network socket channel to transfer data out of the

analyzer to the ROOT environment. The current analyzer has limited support

for ROOT analysis, publishing only on request the Midas analysis built-in

histograms. No means of passing Trees is implemented yet.

(8): This path has not yet been investigated, but ROOT does provide

accessibility to external function calls which makes this option possible.

The ROOT framework will then perform dedicated event call to the main midas

data buffer using the standard midas communication scheme. The data format

translation from Midas banks to ROOT format will have to be taken care of at

the user level in the ROOT environment.

Discussion:

==========

Presently the Socket communication between Midas and ROOT (7) is under

revision by Stefan Ritt and René Brun. This revision will simplify the

remote access of an object such as a histogram. For the Tree itself, the

requirement would be to implement a "ring buffer" mechanism for remote tree

request. This is currently under discussion.

The path (8) has been suggested by Triumf to address small experiment setups

where only a single analyzer is required. This path minimizes the DAQ

requirements by moving all the data analysis handling to the user.

The same ROOT analysis code would be applicable to ONLINE as well as

OFFLINE analysis.

Cons:

- Necessity of publishing raw data through the network for every instance of

the remote analyzer.

- Result sharing of the analysis cannot be done yet in real time.

Pros:

- No need of extra task for data translation (midas/root).

- Unique data unpacking code part of the user code.

- Less CPU requirement.

Other issues:

============

- The current necessity of the Midas shared memory for the midas analyzer to

run is a concern in particular for offline analysis where a priori no midas

is available.

- The handling of the run/analyzer parameters. Possible parameter extraction

from file.odb. |

| Attachment 1: midas-root.jpg

|

|

|

1064

|

09 Jun 2015 |

Michael McEvoy | Forum | Midas-MSCB SCS2000 integration | I am using the MSCB SCS2000 to monitor slow control variables (temperatures, voltages, etc). I am trying to

get it set up at Fermilab as a test stand in the MC1 building and was wondering if anyone has integrated

Midas with an MSCB SCS2000 before. We have two systems at Fermilab, one system that is currently running

in the g-2 experimental hall, but running an out of date version of midas. The second test stand I am

setting up is working with the current version of midas. I believe we will easily be able to figure out the

external probes for temperatures and voltages just fine. But the MSCB SCS2000 box itself has 1

temperature value, 1 current value, and 5 voltages internally that we also need to monitor. If I use the msc

command I can read back the external values through the daughter cards I have installed on the SCS2000

box but have no way of reading back the internal values that I need. I also have been looking through the

MIDAS files trying to find a possible way to read these out to no avail.

If anyone has any ideas or has had previous work with the SCS2000 and knows how to read back the

internal values please let me know.

Thanks,

Michael McEvoy

NIU Graduate Student |

|

1065

|

10 Jun 2015 |

Stefan Ritt | Forum | Midas-MSCB SCS2000 integration | > If anyone has any ideas or has had previous work with the SCS2000 and knows how to read back the

> internal values please let me know.

The current MIDAS distribution contains a file /midas/examples/slowcont/mscb_fe.c which contains example code of how to read some MSCB devices.

/Stefan |

|

293

|

12 Aug 2006 |

Pierre-André Amaudruz | Release | Midas updates | Midas development:

Over the last 2 weeks (Jul26-Aug09), Stefan Ritt has been at Triumf for the "becoming" traditional Midas development 'brainstorming/hackathon' (every second year).

A list with action items has been setup combining the known problems and the wish list from several Midas users.

The online documentation has been updated to reflect the modifications.

Not all the points have been covered, as more points were added daily, but the main issues that have been dealt with or at least discussed are:

- ODB over Frontend precedence.

When starting a FE client, the equipment settings are taken from the ODB if this equipment already existed. This means the ODB has precedence over the EQUIPMENT structure, and whatever change you apply to the C structure will NOT be taken into consideration until you clean (remove) the equipment tree in the ODB.

- Revived 64 bit support. This was required as more OS are already supporting such architecture. Originally Midas did support Alpha/OSF/1 which operated on 64 bit machine. This new code has been tested on SL4.2 with Dual-Core 64-bit AMD Opterons.

- Multi-threading in Slow Control equipments.

Check entry 289 in Midas Elog from Stefan.

- mhttpd using external Elog.

The standalone ELOG package can be coupled to an existing experiment and therefore supersede the internal elog functionality from mhttpd.

This requires a particular configuration which is described in the documentation.

- MySQL test in mlogger

A reminder that mlogger can generate entries in a MySQL database as long as the pre-compilation flag -HAVE_MYSQL is enabled during the system build. The access and form filling is then defined from the ODB under Logger/SQL once the logger is running, see documentation.

- Directory destination for midas.log and odb dump files

It is now possible to specify an individual directory to the default midas.log file as well as to the "ODB Dump file" destination. If either of these fields contains a preceding directory, it will take the string as an absolute path to the file.

- User defined "event Data buffer size" (ODB)

Until now, the event buffer size was defined at the system level in midas.h. It is now possible to optimize the memory allocation specific to the event buffer with an entry in the ODB under /experiment, see documentation.

- History group display

It is now possible to display an individual group of history plots. There is no documentation on this topic, as it should be self-explanatory.

- History export option

From the History web page, it is possible to export the history content to an ASCII .csv file. This file can later be imported into Excel, for example. There is no documentation on this topic, as it should be self-explanatory.

- Multiple "minor" corrections:

- Alarm reset for multiple experiments (returns directly to the experiment).

- mdump -b option bug fixed.

- Alarm evaluation function fixed.

- mlogger/SQL boolean handling fixed.

- bm_get_buffer_level() was returning a wrong value which has been fixed now.

- Event buffer bug traced and exterminated (Thanks to Konstantin).

|

|

2348

|

23 Feb 2022 |

Stefan Ritt | Info | Midas slow control event generation switched to 32-bit banks | The midas slow control system class drivers automatically read their equipment and generate events containing midas banks. So far these have been 16-bit banks using bk_init(). But now more and more experiments use a large number of channels, so the 16-bit address space is exceeded. Until last week, there was not even a check for this, leading to unpredictable crashes.

Therefore I switched the bank generation in the drivers generic.cxx, hv.cxx and multi.cxx to 32-bit banks via bk_init32(). This should in principle be transparent, since the midas bank functions automatically detect the bank type during reading. But I thought I would let everybody know, just in case.
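To make the size limit concrete, here is a minimal standalone sketch that does not include midas.h. The two structs are modelled on the 16-bit and 32-bit bank headers; their exact field layout, the helper names `payload_fits` and `stored_size16`, and the channel counts are assumptions chosen for illustration only.

```cpp
#include <cstddef>
#include <cstdint>
#include <limits>

// Assumed layout of a 16-bit bank header (illustration only).
struct Bank16Header {
    char          name[4];
    std::uint16_t type;
    std::uint16_t data_size;  // payload size in bytes, at most 65535
};

// Assumed layout of a 32-bit bank header (illustration only).
struct Bank32Header {
    char          name[4];
    std::uint32_t type;
    std::uint32_t data_size;  // payload size in bytes, up to 2^32 - 1
};

// Hypothetical helper: does a payload of n_channels values, each
// value_size bytes wide, fit into a size field of type SizeField?
template <typename SizeField>
constexpr bool payload_fits(std::size_t n_channels, std::size_t value_size) {
    return n_channels * value_size <=
           static_cast<std::size_t>(std::numeric_limits<SizeField>::max());
}

// Hypothetical helper: the value a 16-bit size field would end up
// holding if the payload size were stored without any check.
constexpr std::uint16_t stored_size16(std::size_t payload_bytes) {
    return static_cast<std::uint16_t>(payload_bytes);
}

// 16000 four-byte values (64000 bytes) still fit a 16-bit size field,
// 20000 values (80000 bytes) do not, but they fit a 32-bit field.
static_assert(payload_fits<std::uint16_t>(16000, 4), "fits 16-bit");
static_assert(!payload_fits<std::uint16_t>(20000, 4), "exceeds 16-bit");
static_assert(payload_fits<std::uint32_t>(20000, 4), "fits 32-bit");
```

Without a check, an 80000-byte payload written into a 16-bit size field silently wraps around, which is consistent with the kind of unpredictable behaviour described above.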

Stefan |

|

1050

|

05 May 2015 |

Pierre-Andre Amaudruz | Forum | Midas seminar | Dear Midas users,

As part of our commitment to Midas improvements, this year Dr. Stefan Ritt is coming to Vancouver

BC, CANADA for his biennial visit from the end of June to mid-July 2015.

A data acquisition system nowadays is expected to do more than just collect data; it has become an

integrated process with various types of data sources for monitoring, control, storage and analysis,

as well as data visualization using modern techniques.

MIDAS stands for "Maximum Integration Data Acquisition System". It is interesting to think that this

name was given 20 years ago, when none of today's level of interconnectivity was available.

So in order to keep MIDAS current with new technology and provide a better DAQ tool, we plan to

discuss topics that would address integration in a larger format, the goal being to provide to the

users a more robust and "simple" way of doing their work. We will also be working on improvements

and the addition of new features.

Towards the end of Stefan's visit, we will have a "Midas seminar" with a few presentations related

to specific experiments managed by Triumf. Each talk will bring a different aspect of the DAQ that

Midas had to deal with. This will potentially be a good starting point for further discussions.

We will broadcast this seminar. Webcast information will be provided in a later message, preliminary

date: 13 or 14 July. I would encourage you to participate in this event, if not in person, at least

virtually. It is a good time for you to send to Stefan (midas@psi.ch) or myself (midas@triumf.ca),

questions, requests, wishes, issues that you experience, or general comments that have been on the back

of your mind but you didn't manage to submit to us. This would help us to better understand how

Midas is used, where it is used, and what can be addressed to better serve your needs as a user.

Let us know how Midas is helping you, we would really appreciate it. Let us also know if you are

thinking of attending this virtual seminar.

If you happen to be in the Vancouver vicinity around the end of June, you are most welcome to join

us at Triumf. The Midas team will take the time to chat about Data Acquisition and perhaps the

benefit of our west coast weather!

Best Regards, Pierre-André Amaudruz |

|