| ID |

Date |

Author |

Topic |

Subject |

|

2202

|

02 Jun 2021 |

Konstantin Olchanski | Info | bitbucket build truncated | I truncated the bitbucket build to only build on ubuntu LTS 20.04.

Somehow all the other build targets - centos-7, centos-8, ubuntu-18 - have

an obsolete version of cmake. I do not know where the bitbucket os images

get these obsolete versions of cmake - my centos-7 and centos-8 have much

more recent versions of cmake.

If somebody has time to figure it out, please go at it, I would like very

much to have centos-7 and centos-8 builds restored (with ROOT), also

to have a ubuntu LTS 20.04 build with ROOT. (For me, debugging bitbucket

builds is extremely time consuming).

Right now many midas cmake files require cmake 3.12 (released in mid-2018).

I do not know why that particular version of cmake was chosen (I took the number

from the tutorials I used).

I do not know what is the actual version of cmake that MIDAS (and ROOTANA)

require/depend on.

I wish there were a tool that would look at a cmake file, examine all the

features it uses and report the lowest version of cmake that supports them.

K.O. |

|

2203

|

04 Jun 2021 |

Andreas Suter | Bug Report | cmake with CMAKE_INSTALL_PREFIX fails | Hi,

if I check out midas and try to configure it with

cmake ../ -DCMAKE_INSTALL_PREFIX=/usr/local/midas

I do get the error messages:

Target "midas" INTERFACE_INCLUDE_DIRECTORIES property contains path:

"<path>/tmidas/midas/include"

which is prefixed in the source directory.

Is the cmake setup not relocatable? This is new and was working until recently:

MIDAS version: 2.1

GIT revision: Thu May 27 12:56:06 2021 +0000 - midas-2020-08-a-295-gfd314ca8-dirty on branch HEAD

ODB version: 3 |

|

2204

|

04 Jun 2021 |

Konstantin Olchanski | Bug Report | cmake with CMAKE_INSTALL_PREFIX fails | > cmake ../ -DCMAKE_INSTALL_PREFIX=/usr/local/midas

good timing, I am working on cmake for manalyzer and rootana and I have not tested

the install prefix business.

now I know to test it for all 3 packages.

I will also change find_package(Midas) slightly, (see my other message here),

I hope you can confirm that I do not break it for you.

K.O. |

|

2205

|

04 Jun 2021 |

Konstantin Olchanski | Info | MidasConfig.cmake usage | > find_package(Midas)

I am testing find_package(Midas). There are a number of problems:

1) ${MIDAS_LIBRARIES} is set to "midas;midas-shared;midas-c-compat;mfe".

This seems to be an incomplete list of the libraries built by midas (rmana is missing).

This means ${MIDAS_LIBRARIES} should not be used for linking midas programs (unlike ${ROOT_LIBRARIES}, etc):

- we discourage use of midas shared library because it always leads to problems with shared library version mismatch (static linking is preferred)

- midas-c-compat is for building python interfaces, not for linking midas programs

- mfe contains a main() function, it will collide with the user main() function

So I think this should be changed to just "midas", and the midas link dependency

libraries (-lutil, -lrt, -lpthread) should also be added to this list.

Of course the "install(EXPORT)" method does all this automatically. (so my fixing find_package(Midas) is a waste of time)

2) ${MIDAS_INCLUDE_DIRS} is missing the mxml, mjson, mvodb, midasio submodule directories

Again, install(EXPORT) handles all this automatically, in find_package(Midas) it has to be done by hand.

Anyhow, this is easy to add, but it does me no good in the rootana cmake if I want to build against old versions

of midas. So in the rootana cmake, I still have to add $MIDASSYS/mvodb & co by hand. Messy.

I do not know the history of cmake and why they have two ways of doing things (find_package and install(EXPORT)),

this second method seems to be much simpler, everything is exported automatically into one file,

and it is much easier to use (include the export file and say target_link_libraries(rootana PUBLIC midas)).

So how much time should I spend in fixing find_package(Midas) to make it generally usable?

- include path is incomplete

- library list is nonsense

- compiler flags are not exported (we do not need -DOS_LINUX, but we do need -DHAVE_ZLIB, etc)

- dependency libraries are not exported (-lz, -lutil, -lrt, -lpthread, etc)

K.O. |

|

2206

|

04 Jun 2021 |

Konstantin Olchanski | Bug Report | cmake with CMAKE_INSTALL_PREFIX fails | > cmake ../ -DCMAKE_INSTALL_PREFIX=/usr/local/midas

> Is the cmake setup not relocatable? This is new and was working until recently:

Indeed. Not relocatable. This is because we do not install the header files.

When you use the CMAKE_INSTALL_PREFIX, you get MIDAS "installed" in:

prefix/lib

prefix/bin

$MIDASSYS/include <-- this is the source tree and so not "relocatable"!

Before, this was kludged and cmake did not complain about it.

Now I changed cmake to handle the include path "the cmake way", and now it knows to complain about it.

I am not sure how to fix this: we have a conflict between:

- our normal way of using midas (include $MIDASSYS/include, link $MIDASSYS/lib, run $MIDASSYS/bin)

- the cmake way (packages *must be installed* or else! but I do like install(EXPORT)!)

- and your way (midas include files are in $MIDASSYS/include, everything else is in your special location)

I think your case is strange. I am curious why you want midas libraries to be in prefix/lib instead of in

$MIDASSYS/lib (in the source tree), but are happy with header files remaining in the source tree.

K.O. |

|

2207

|

04 Jun 2021 |

Konstantin Olchanski | Info | MidasConfig.cmake usage | > > find_package(Midas)

>

> So how much time should I spend in fixing find_package(Midas) to make it generally usable?

>

> - include path is incomplete

> - library list is nonsense

> - compiler flags are not exported (we do not need -DOS_LINUX, but we do need -DHAVE_ZLIB, etc)

> - dependency libraries are not exported (-lz, -lutil, -lrt, -lpthread, etc)

>

I think I give up on find_package(Midas). It seems like a lot of work to straighten

all this out, when install(EXPORT) does it all automatically and is easier to use

for building user frontends and analyzers.

K.O. |

|

2208

|

04 Jun 2021 |

Andreas Suter | Bug Report | cmake with CMAKE_INSTALL_PREFIX fails | > > cmake ../ -DCMAKE_INSTALL_PREFIX=/usr/local/midas

> > Is the cmake setup not relocatable? This is new and was working until recently:

>

> Indeed. Not relocatable. This is because we do not install the header files.

>

> When you use the CMAKE_INSTALL_PREFIX, you get MIDAS "installed" in:

>

> prefix/lib

> prefix/bin

> $MIDASSYS/include <-- this is the source tree and so not "relocatable"!

>

> Before, this was kludged and cmake did not complain about it.

>

> Now I changed cmake to handle the include path "the cmake way", and now it knows to complain about it.

>

> I am not sure how to fix this: we have a conflict between:

>

> - our normal way of using midas (include $MIDASSYS/include, link $MIDASSYS/lib, run $MIDASSYS/bin)

> - the cmake way (packages *must be installed* or else! but I do like install(EXPORT)!)

> - and your way (midas include files are in $MIDASSYS/include, everything else is in your special location)

>

> I think your case is strange. I am curious why you want midas libraries to be in prefix/lib instead of in

> $MIDASSYS/lib (in the source tree), but are happy with header files remaining in the source tree.

>

> K.O.

We do it this way, since the lib and bin need to be in a place where standard users have no access.

If I think of all the other packages I am working with, e.g. ROOT, the includes are also installed under CMAKE_INSTALL_PREFIX.

Up until recently there was no issue to work with CMAKE_INSTALL_PREFIX, accepting that the includes stay under

$MIDASSYS/include, even though this is not quite the standard way, but no problem here. Anyway, since CMAKE_INSTALL_PREFIX

is a standard option from cmake, I think things should not "break" if you want to use it.

A.S. |

|

Draft

|

08 Jun 2021 |

Joseph McKenna | Suggestion | Have a list of 'users responsible' in Alarms and Programs odb entries | > > This list of responsible being attached to alarm message strings ...

>

> This is a great idea. But I think we do not need to artificially limit ourselves

> to string and array lengths.

>

> The code in alarm.c should be changes to use std::string and std::vector<std::string> (STRING_LIST

> #define), db_get_record() should be replaced with individual ODB reads (that's what it does behind

> the scenes, but in a non-type and -size safe way).

>

> I think the web page code will work correctly, it does not care about string lengths.

>

> K.O.

Ok, I'm working on this... I see a design choice to make, 1. Keep 'ALARM' as a struct, or 2. Replace ALARM struct with a class (keeping memory layout the same). Since we are adding STRING_LIST, I'd lean towards a C++ style with a class

1. Keep 'ALARM' as a struct:

Get rid of ALARM_ODB_STR for the default values

Add static functions (that take a hkey) to interact with the ODB (to save duplicating logic in al_reset_alarm, al_check, al_define_odb_alarm and al_trigger_alarm)

2. Replace ALARM struct with a class:

default ctor: Do nothing special (so it behaves like the old struct)

default dtor: std::vector<std::string> dtor will

It seems an opportunity to convert the alarm struct to a class with member functions that take hkey pointers?

SetToDefault: This is where default values are hard coded (functionally replace ALARM_ODB_STR ) |

|

2210

|

08 Jun 2021 |

Konstantin Olchanski | Bug Report | cmake with CMAKE_INSTALL_PREFIX fails | > > > cmake ../ -DCMAKE_INSTALL_PREFIX=/usr/local/midas

> > > Is the cmake setup not relocatable? This is new and was working until recently:

> > Not relocatable. This is because we do not install the header files.

>

> We do it this way, since the lib and bin needs to be in a place where standard users have no access to.

hmm... i did not get this. "needs to be in a place where standard users have no access to". what do you

mean by this? you install midas in a secret location to prevent somebody from linking to it?

> If I think an all other packages I am working with, e.g. ROOT, the includes are also installed under CMAKE_INSTALL_PREFIX.

cmake and other frameworks tend to be like procrustean beds (https://en.wikipedia.org/wiki/Procrustes),

pre-cmake packages never quite fit perfectly, and either the legs or the heads get cut off. post-cmake packages

are constructed to fit the bed, whether it makes sense or not.

given how this situation is known since antiquity, I doubt we will solve it today here.

(I exercise my freedom of speech rights to state that I object being put into

such situations. And I would like to have it clear that I hate cmake (ask me why)).

>

> Up until recently there was no issue to work with CMAKE_INSTALL_PREFIX, accepting that the includes stay under

> $MIDASSYS/include, even though this is not quite the standard way, but no problem here.

>

I think a solution would be to add install rules for include files. There will be a bit of trouble,

normal include path is $MIDASSYS/include,$MIDASSYS/mxml,$MIDASSYS/mjson,etc, after installing

it will be $CMAKE_INSTALL_PREFIX/include (all header files from different git submodules all

dumped into one directory). I do not know what problems will show up from that.

I think if midas is used as a subproject of a bigger project, this is pretty much required

(and I have seen big experiments, like STAR and ND280, do this type of stuff with CMT,

another horror and the historical precursor of cmake)

The problem is that we do not have any super-project like this here, so I cannot ever

be sure that I have done everything correctly. cmake itself can be helpful, like

in the current situation where it told us about a problem. but I will never trust

cmake completely, I see cmake do crazy and unreasonable things way too often.

One solution would be for you or somebody else to contribute such a cmake super-project,

that would build midas as a subproject, install it with a CMAKE_INSTALL_PREFIX and

try to link some trivial frontend or analyzer to check that everything is installed

correctly. It would become an example for "how to use midas as a subproject".

Ideally, it should be usable in a bitbucket automatic build (assuming bitbucket

has correct versions of cmake, which it does not half the time).

P.S. I already spent half-a-week tinkering with cmake rules, only to discover

that I broke a kludge that allows you to do something strange (if I have it right,

the CMAKE_INSTALL_PREFIX code is your contribution). This does not encourage

me to tinker with cmake even more. who knows what other

kludge I will bump into. (oh, yes, I know, I already bumped into the nonsense

find_package(Midas) implementation).

K.O. |

|

2211

|

09 Jun 2021 |

Andreas Suter | Bug Report | cmake with CMAKE_INSTALL_PREFIX fails | > > > > cmake ../ -DCMAKE_INSTALL_PREFIX=/usr/local/midas

> > > > Is the cmake setup not relocatable? This is new and was working until recently:

> > > Not relocatable. This is because we do not install the header files.

> >

> > We do it this way, since the lib and bin needs to be in a place where standard users have no access to.

>

> hmm... i did not get this. "needs to be in a place where standard users have no access to". what do you

> mean by this? you install midas in a secret location to prevent somebody from linking to it?

>

This was a wrong wording from my side. We do not want the users to have write access to the midas installation libs and bins.

I have submitted the pull request which should resolve this without interfering with your usage.

Hope this will resolve the issue. |

|

2212

|

09 Jun 2021 |

Joseph McKenna | Suggestion | Have a list of 'users responsible' in Alarms and Programs odb entries | > > This list of responsible being attached to alarm message strings ...

>

> This is a great idea. But I think we do not need to artificially limit ourselves

> to string and array lengths.

>

> The code in alarm.c should be changes to use std::string and std::vector<std::string> (STRING_LIST

> #define), db_get_record() should be replaced with individual ODB reads (that's what it does behind

> the scenes, but in a non-type and -size safe way).

>

> I think the web page code will work correctly, it does not care about string lengths.

>

> K.O.

Auto growing lists is an excellent plan. I am making decent progress and should have something to

report soon |

|

2213

|

10 Jun 2021 |

Konstantin Olchanski | Bug Report | cmake with CMAKE_INSTALL_PREFIX fails | > > > > > cmake ../ -DCMAKE_INSTALL_PREFIX=/usr/local/midas

> > > > > Is the cmake setup not relocatable? This is new and was working until recently:

> > > > Not relocatable. This is because we do not install the header files.

> > >

> > > We do it this way, since the lib and bin needs to be in a place where standard users have no access to.

> >

> > hmm... i did not get this. "needs to be in a place where standard users have no access to". what do you

> > mean by this? you install midas in a secret location to prevent somebody from linking to it?

> >

>

> This was a wrong wording from my side. We do not want the the users have write access to the midas installation libs and bins.

> I have submitted the pull request which should resolve this without interfere with your usage.

> Hope this will resolve the issue.

Excellent. I think it is good to have midas "install" in a sane manner.

But I still struggle to understand what you do. Presumably you can "install" midas

in the "midas account", which is not writable by the experiment and user accounts.

Then it does not matter if you "install" it in its build directory (like we do)

or in some other location (like you do now).

This does not work of course if you only have one account, so do you build midas

as root? or install it as root?

I do ask because in the current computing world, doing things as root requires

a certain amount of trust, which may not be there anymore, see the recent "supply chain" attacks

against python packages, the SolarWinds hack, linux kernel malicious patches from umn, etc.

Personally, I do not want to answer questions "is midas safe to run as root?",

"can I trust the midas install scripts to run as root?" and certainly I do not want to hear

about "I installed midas and 100 other packages as root and got hacked 7 days later".

(and running midas as root was never safe. neither mhttpd nor mserver will pass

a security audit).

Anyhow, looks like I will look at cmake again next week. Right now I have a major

breakthrough in the ALPHA-g experiment, my big 96-port Juniper switch suddenly

has working ethernet flow control and I can record data at 600 Mbytes/sec without

any UDP packet loss. Above that, my event builder explodes. I want to fix it and get

it up to 1000 Mbytes/sec, the limit of my 10gige network link. (In this system I do not

have the disk subsystem to record data at this rate, but I have built 8-disk ZFS arrays

that would sink it, no problem). And the day has come when I ran out of CPU cores.

The UDP packet receivers are multithreaded, the event builder is multithreaded and I am using

all 4 of the available cores (intel cpu). As soon as I can get a rackmounted AMD Ryzen

or Threadripper machine, we will likely upgrade. (need at least one more CPU core to run

the online analyzer!). Exciting.

K.O. |

|

2214

|

10 Jun 2021 |

Andreas Suter | Bug Report | cmake with CMAKE_INSTALL_PREFIX fails | > > > > > > cmake ../ -DCMAKE_INSTALL_PREFIX=/usr/local/midas

> > > > > > Is the cmake setup not relocatable? This is new and was working until recently:

> > > > > Not relocatable. This is because we do not install the header files.

> > > >

> > > > We do it this way, since the lib and bin needs to be in a place where standard users have no access to.

> > >

> > > hmm... i did not get this. "needs to be in a place where standard users have no access to". what do you

> > > mean by this? you install midas in a secret location to prevent somebody from linking to it?

> > >

> >

> > This was a wrong wording from my side. We do not want the the users have write access to the midas installation libs and bins.

> > I have submitted the pull request which should resolve this without interfere with your usage.

> > Hope this will resolve the issue.

>

> Excellent. I think it is good to have midas "install" in a sane manner.

>

> But I still struggle to understand what you do. Presumably you can "install" midas

> in the "midas account", which is not writable by the experiment and user accounts.

> Then it does not matter if you "install" it in it's build directory (like we do)

> or in some other location (like you do now).

>

> This does not work of course if you only have one account, so do you build midas

> as root? or install it as root?

>

We work the following way: there is a production Midas under let's say /usr/local/midas (make install as sudo/root). This is for the running experiment. Since we are doing muSR, we

have experiments on a daily basis, rather than over months and years as is the case for a particle physics experiment. Still, we would like to test updates and new features of Midas on

the same machine. For this we use the repo directly. If we are happy with the new features and fixes, we again do a 'make install' and hence freeze a specific

snapshot for production. Of course we could use various local copies of the Midas repo, but over the last years this approach has been very convenient and productive. Hope this explains a bit better

why we want to work with a CMAKE_INSTALL_PREFIX.

AS |

|

2215

|

15 Jun 2021 |

Konstantin Olchanski | Info | blog - convert tmfe_rev0 event builder to develop-branch tmfe c++ framework | Now we are converting the alpha-g event builder from rev0 tmfe (midas-2020-xx) to the new tmfe c++

framework in midas-develop. Earlier, I followed the steps outlined in this blog

to convert this event builder from mfe.c framework to rev0 tmfe.

- get latest midas-develop

- examine progs/tmfe_example_everything.cxx

- open feevb.cxx

- comment-out existing main() function

- from tmfe_example_everything.cxx, copy class FeEverything and main() to the bottom of feevb.cxx

- comment-out old main()

- make sure we include the correct #include "tmfe.h"

- rename example frontend class FeEverything to FeEvb

- rename feevb's "rpc handler" and "periodic handler" class EvbEq to EqEvb

- update class declaration and constructor of EqEvb from EqEverything in example_everything: EqEvb extends TMFeEquipment,

EqEvb constructor calls constructor of base class (c++ bogosity), keep the bits of the example that initialize the

equipment "common"

- in EqEvb, remove data members fMfe and fEq: fMfe is now inherited from the base class, fEq is now "this"

- in FeEvb constructor, wire-in the EqEvb constructor: FeSetName("feevb") and FeAddEquipment(new EqEvb("EVB",__FILE__))

- migrate function names:

- fEq->SendEvent() with EqSendEvent()

- fEq->SetStatus() with EqSetStatus()

- fEq->ZeroStatistics() with EqZeroStatistics() -- can be removed, taken care of in the framework

- fEq->WriteStatistics() with EqWriteStatistics() -- can be removed, taken care of in the framework

- (my feevb.o now compiles, but will not work, yet, keep going:)

- EqEvb - update prototypes of all HandleFoo() methods per example_everything.cxx or per tmfe.h: otherwise the framework

will not call them. c++ compiler will not warn about this!

- migrate old main():

- restore initialization of "common" and other things done in the old main():

- TMFeCommon was merged into TMFeEquipment, move common->Foo = ... to the EqEvb constructor, consult tmfe.h and tmfe.md

for current variable names.

- consider adding "fEqConfReadConfigFromOdb = false;" (see tmfe.md)

- if EqEvb has a method Init() called from old main(), change its name to HandleInit() with correct arguments.

- split EqEvb constructor: leave initialization of "common" in the constructor, move all functions, etc into HandleInit()

- move fMfe->SetTransitionSequenceFoo() calls to HandleFrontendInit()

- move fMfe->DeregisterTransition{Pause,Resume}() to HandleFrontendInit()

- old main should be empty now

- remove linking tmfe_rev0.o from feevb Makefile, now it builds!

- try to run it!

- it works!

- done.
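
The warning above about HandleFoo() prototypes can be illustrated with a small standalone sketch. `EquipmentBase`, `EqOld`, `EqNew` and `CallInit` are hypothetical stand-ins invented for this example, not the real tmfe.h declarations. The sketch shows that a handler with a slightly different parameter list compiles but is never reached through the base class, and that marking handlers with `override` lets the compiler reject such a mismatch.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical stand-in for a framework base class; NOT the real tmfe.h API.
struct EquipmentBase {
   virtual ~EquipmentBase() = default;
   // The framework calls this virtual method through a base-class pointer.
   virtual std::string HandleInit(const std::vector<std::string>& args) {
      (void)args;
      return "base";
   }
};

// Old-style handler: the parameter list differs (no arguments), so this
// declares a new, unrelated method that hides the base one. It compiles
// without error, but the framework never calls it.
struct EqOld : EquipmentBase {
   std::string HandleInit() { return "old"; }
};

// Updated handler: the prototype matches, and "override" asks the compiler
// to reject the declaration if it ever stops matching the base class.
struct EqNew : EquipmentBase {
   std::string HandleInit(const std::vector<std::string>& args) override {
      (void)args;
      return "new";
   }
};

// Mimics the framework: it only knows the base-class interface.
std::string CallInit(EquipmentBase& eq) {
   return eq.HandleInit({});
}
```

Calling `CallInit()` on an `EqOld` object runs the base-class handler, while an `EqNew` object runs its own. Adding `override` to `EqOld::HandleInit()` would turn the silent mismatch into a compile error.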

K.O. |

|

2216

|

15 Jun 2021 |

Konstantin Olchanski | Info | 1000 Mbytes/sec through midas achieved! | I am sure everybody else has 10gige and 40gige networks and are sending terabytes of data before breakfast.

Myself, I only have one computer with a 10gige network link and sufficient number of daq boards to fill

it with data. Here is my success story of getting all this data through MIDAS.

This is the anti-matter experiment ALPHA-g now under final assembly at CERN. The main particle detector is a long but

thin cylindrical TPC. It surrounds the magnetic bottle (particle trap) where we make and study anti-hydrogen. There are

64 daq boards to read the TPC cathode pads and 8 daq boards to read the anode wires and to form the trigger. Each daq

board can produce data at 80-90 Mbytes/sec (1gige links). Data is sent as UDP packets (no jumbo frames). Altera FPGA

firmware was done here at TRIUMF by Bryerton Shaw, Chris Pearson, Yair Lynn and myself.

Network interconnect is a 96-port Juniper switch with a 10gige uplink to the main daq computer (quad core Intel(R)

Xeon(R) CPU E3-1245 v6 @ 3.70GHz, 64 GBytes of DDR4 memory).

MIDAS data path is: UDP packet receiver frontend -> event builder -> mlogger -> disk -> lazylogger -> CERN EOS cloud

storage.

First chore was to get all the UDP packets into the main computer. "U" in UDP stands for "unreliable", and at first, UDP

packets have been disappearing pretty much anywhere they could. To fix this, in order:

- reading from the udp socket must be done in a dedicated thread (in the midas context, pauses to write statistics or

check alarms result in lost udp packets)

- udp socket buffer has to be very big

- maximum queue sizes must be enabled in the 10gige NIC

- ethernet flow control must be enabled on the 10gige link

- ethernet flow control must be enabled in the switch (to my surprise many switches do not have working end-to-end

ethernet flow control and lose UDP packets, ask me about this. our big juniper switch balked at first, but I got it

working eventually).

- ethernet flow control must be enabled on the 1gige links to each daq module

- ethernet flow control must be enabled in the FPGA firmware (it's a checkbox in qsys)

- FPGA firmware internally must have working back pressure and flow control (avalon and axi buses)

- ideally, this back-pressure should feed back to the trigger. ALPHA-g does not have this (it does not need it).

Next chore was to multithread the UDP receiver frontend and to multithread the event builder. Stock single-threaded

programs quickly max out with 100% CPU use and reach nowhere near 10gige data speeds.

Naive multithreading, with two threads, reader (read UDP packet, lock a mutex, put it into a deque, unlock, repeat) and

sender (lock a mutex, get a packet from deque, unlock, bm_send_event(), repeat) spends all its time locking and

unlocking the mutex and goes nowhere fast (with 1500 byte packets, about 600 kHz of lock/unlock at 10gige speed).

So one has to do everything in batches: reader thread: accumulate 1000 udp packets in an std::vector, lock the mutex,

dump this batch into a deque, unlock, repeat; sender thread: lock mutex, get 1000 packets from the deque, unlock, stuff

the 1000 packets into 1 midas event, bm_send_event(), repeat.
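
The batching scheme described above could look roughly like the following sketch. `BatchQueue` and `Packet` are names invented for this example, and a `std::string` stands in for one UDP payload. The actual frontend code is not shown in this post, so this is only an illustration of taking the lock once per batch.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <mutex>
#include <string>
#include <utility>
#include <vector>

using Packet = std::string; // stand-in for one UDP payload

// Queue shared by the reader and sender threads. Packets cross the mutex
// in whole batches, so the lock is taken once per batch, not per packet.
class BatchQueue {
public:
   // Reader side: hand over a full batch with a single lock/unlock.
   void PushBatch(std::vector<Packet>& batch) {
      std::lock_guard<std::mutex> lock(fMutex);
      for (auto& p : batch)
         fQueue.push_back(std::move(p));
      batch.clear();
   }

   // Sender side: take up to max_packets with a single lock/unlock.
   std::vector<Packet> PopBatch(size_t max_packets) {
      std::vector<Packet> out;
      std::lock_guard<std::mutex> lock(fMutex);
      while (!fQueue.empty() && out.size() < max_packets) {
         out.push_back(std::move(fQueue.front()));
         fQueue.pop_front();
      }
      return out;
   }

private:
   std::mutex fMutex;
   std::deque<Packet> fQueue;
};
```

The reader thread would fill a local `std::vector<Packet>` without any locking and call `PushBatch()` when it reaches 1000 entries. The sender would call `PopBatch(1000)` and pack the result into one midas event. The sketch leaves out how the sender waits when the queue is empty (polling or a condition variable).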

It takes me 5 of these multithreaded udp reader frontends to keep up with a 10gige link without dropping any UDP packets.

My first implementation chewed up 500% CPU, that's all of it, there are only 4 CPU cores available, leaving nothing

for the event builder (and mlogger, and ...)

I had to:

a) switch from plain socket read() to socket recvmmsg() - 100000 udp packets per syscall vs 1 packet per syscall, and

b) switch from plain bm_send_event() to bm_send_event_sg() - using a scatter-gather list to avoid a memcpy() of each udp

packet into one big midas event.
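
For step (a), a minimal sketch of receiving many UDP packets with one Linux recvmmsg() call is given here. `ReceiveBatch` and its parameters are hypothetical names chosen for this example, and the buffer layout (one fixed-size slot per packet) is an assumption, not a description of the actual frontend.

```cpp
#ifndef _GNU_SOURCE
#define _GNU_SOURCE // recvmmsg() and struct mmsghdr are Linux/GNU extensions
#endif

#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>

#include <arpa/inet.h>
#include <netinet/in.h>
#include <sys/socket.h>
#include <sys/uio.h>
#include <unistd.h>

// Receive up to max_packets datagrams from a UDP socket with one recvmmsg()
// call. Each datagram lands in its own fixed-size slot of "storage";
// "lengths" receives the size of each datagram.
// Returns the number of datagrams received, or -1 on error.
int ReceiveBatch(int fd, size_t max_packets, size_t slot_size,
                 std::vector<char>& storage, std::vector<size_t>& lengths)
{
   storage.resize(max_packets * slot_size);
   std::vector<mmsghdr> msgs(max_packets); // value-initialized to zero
   std::vector<iovec> iov(max_packets);

   for (size_t i = 0; i < max_packets; i++) {
      iov[i].iov_base = storage.data() + i * slot_size;
      iov[i].iov_len = slot_size;
      msgs[i].msg_hdr.msg_iov = &iov[i];
      msgs[i].msg_hdr.msg_iovlen = 1;
   }

   // MSG_WAITFORONE: block until at least one datagram is available, then
   // return whatever else is already queued without waiting further.
   int n = recvmmsg(fd, msgs.data(), static_cast<unsigned int>(max_packets),
                    MSG_WAITFORONE, nullptr);
   if (n < 0)
      return -1;

   lengths.clear();
   for (int i = 0; i < n; i++)
      lengths.push_back(msgs[i].msg_len);
   return n;
}
```

Packet `i` of the batch starts at `storage.data() + i * slot_size` and is `lengths[i]` bytes long, so the per-packet syscall cost is shared across the whole batch.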

Next is the event builder.

The event builder needs to read data from the 5 midas event buffers (one buffer per udp reader frontend, each midas event

contains 1000 udp packets as individual data banks), examine trigger timestamps inside each udp packet, collect udp

packets with matching timestamps into a physics event, bm_send_event() it to the SYSTEM buffer. rinse and repeat.
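
The timestamp matching step can be sketched as follows. This is a simplified illustration, not the ALPHA-g event builder: `UdpPacket`, `PhysicsEvent` and `TimestampBuilder` are hypothetical names, and it assumes exact timestamp equality and exactly one packet per board per trigger. A real builder would also need a timeout for events that never complete.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <utility>
#include <vector>

// Hypothetical, simplified view of one UDP packet after the timestamp has
// been extracted. Real packets carry more fields.
struct UdpPacket {
   int source;          // which daq board sent it
   uint64_t timestamp;  // trigger timestamp found inside the packet
};

using PhysicsEvent = std::vector<UdpPacket>;

// Collects packets with equal timestamps. An event is complete once every
// one of num_sources boards has contributed one packet to it.
class TimestampBuilder {
public:
   explicit TimestampBuilder(size_t num_sources) : fNumSources(num_sources) {}

   // Add one packet; if that completes an event, move it into "done".
   void Add(const UdpPacket& p, std::vector<PhysicsEvent>& done) {
      PhysicsEvent& ev = fPending[p.timestamp];
      ev.push_back(p);
      if (ev.size() == fNumSources) {
         done.push_back(std::move(ev));
         fPending.erase(p.timestamp);
      }
   }

   // Number of timestamps still waiting for packets.
   size_t Pending() const { return fPending.size(); }

private:
   size_t fNumSources;
   std::map<uint64_t, PhysicsEvent> fPending; // ordered by timestamp
};
```

Each completed `PhysicsEvent` would then be sent to the SYSTEM buffer. Because `std::map` keeps timestamps ordered, stale incomplete events are easy to find at the front of the map.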

Initial single threaded implementation maxed out at about 100-200 Mbytes/sec with 100% busy CPU.

After trying several threading schemes, the final implementation has these threads:

- 5 threads to read the 5 event buffers, these threads also examine the udp packets, extract timestamps, etc

- 1 thread to sort udp packets by timestamp and to collect them into physics events

- 1 thread to bm_send_event() physics events to the SYSTEM buffer

- main thread and rpc handler thread (tmfe frontend)

(Again, to reduce lock contention, all data is passed between threads in large batches)

This got me up to about 800 Mbytes/sec. To get more, I had to switch the event builder from old plain bm_send_event() to

the scatter-gather bm_send_event_sg(), *and* I had to reduce CPU use by other programs, see steps (a) and (b) above.

So, at the end, success, full 10gige data rate from daq boards to the MIDAS SYSTEM buffer.

(But wait, what about the mlogger? In this experiment, we do not have a disk storage array to sink this

much data. But it is an already-solved problem. On the data storage machines I built for GRIFFIN - 8 SATA NAS HDDs using

raidz2 ZFS - the stock MIDAS mlogger can easily sink 1000 Mbytes/sec from SYSTEM buffer to disk).

Lessons learned:

- do not use UDP. dealing with packet loss will cost you a fortune in headache medicines and hair restorations.

- use jumbo frames. difference in per-packet overhead between 1500 byte and 9000 byte packets is almost a factor of 10.

- everything has to be done in bulk to reduce per-packet overheads. recvmmsg(), batched queue push/pop, etc

- avoid memory allocations (I had a per-packet std::string, replaced it with char[5])

- avoid memcpy(), use writev(), bm_send_event_sg() & co
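
As an illustration of the last point, here is a minimal sketch of the scatter-gather idea using POSIX writev(). `WriteSegments` is a name made up for this example. It writes several separate buffers with one syscall instead of first copying them into one contiguous buffer. Per the description above, bm_send_event_sg() plays the analogous role for midas events; its exact signature is not shown here.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

#include <sys/types.h>
#include <sys/uio.h>
#include <unistd.h>

// Write several separate memory segments to a file descriptor with one
// writev() call, without first copying them into one contiguous buffer.
// Returns the number of bytes written, or -1 on error. A short write is
// possible and is left to the caller to handle.
ssize_t WriteSegments(int fd, const std::vector<std::string>& segments)
{
   std::vector<iovec> iov(segments.size());
   for (size_t i = 0; i < segments.size(); i++) {
      // iov_base is not const-qualified in POSIX; writev() only reads it.
      iov[i].iov_base = const_cast<char*>(segments[i].data());
      iov[i].iov_len = segments[i].size();
   }
   return writev(fd, iov.data(), static_cast<int>(iov.size()));
}
```

The number of segments per call is limited by the system's IOV_MAX, so very long lists have to be split across several calls.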

K.O.

P.S. Let's count the number of data copies in this system:

x udp reader frontend:

- ethernet NIC DMA into linux network buffers

- recvmmsg() memcpy() from linux network buffer to my memory

- bm_send_event_sg() memcpy() from my memory to the MIDAS shared memory event buffer

x event builder:

- bm_receive_event() memcpy() from MIDAS shared memory event buffer to my event buffer

- my memcpy() from my event buffer to my per-udp-packet buffers

- bm_send_event_sg() memcpy() from my per-udp-packet buffers to the MIDAS shared memory event buffer (SYSTEM)

x mlogger:

- bm_receive_event() memcpy() from MIDAS SYSTEM buffer

- memcpy() in the LZ4 data compressor

- write() syscall memcpy() to linux system disk buffer

- SATA interface DMA from linux system disk buffer to disk.

Would a monolithic massively multithreaded daq application be more efficient?

("udp receiver + event builder + logger"). Yes, about 4 memcpy() out of about 10 will go away.

Would I be able to write such a monolithic daq application?

I think not. Already, at 10gige data rates, for all practical purposes, it is impossible

to debug most problems, especially subtle trouble in multithreading (race conditions)

and in memory allocations. At best, I can sprinkle assert()s and look at core dumps.

So the good old divide-and-conquer approach is still required, MIDAS still rules.

K.O. |

|

2217

|

15 Jun 2021 |

Stefan Ritt | Info | 1000 Mbytes/sec through midas achieved! | In MEG II we also kind of achieved this rate. Marco F. will post an entry soon to describe the details. There is only one thing

I want to mention, which is our network switch. Instead of an expensive high-grade switch, we chose a cheap "Chinese" high-grade

switch. We have "rack switches", which are collector switches for each rack receiving up to 10 x 1GBit inputs, and outputting 1 x

10 GBit to an "aggregation switch", which collects all 10 GBit lines from the rack switches and forwards them over (currently a single)

10 GBit line. For the rack switch we use a

MikroTik CRS354-48G-4S+2Q+RM 54 port

and for the aggregation switch

MikroTik CRS326-24S-2Q+RM 26 Port

both cost in the order of 500 US$. We were astonished that they don't lose UDP packets when all inputs send a packet at the

same time, and they have to pipe them to the single output one after the other, but apparently the switches have enough buffers

(which is usually NOT written in the data sheets).

To avoid UDP packet loss for several events, we do traffic shaping by arming the trigger only when the previous event is

completely received by the frontend. This eliminates all flow control and other complicated methods. Marco can tell you the

details.

Another interesting aspect: While we get the data into the frontend, we have problems in getting it through midas. Your

bm_send_event_sg() is maybe a good approach which we should try. To benchmark the out-of-the-box midas, I ran the dummy frontend

attached on my MacBook Pro 2.4 GHz, 4 cores, 16 GB RAM, 1 TB SSD disk. I got

Event size: 7 MB

No logging: 900 events/s = 6.7 GBytes/s

Logging with LZ4 compression: 155 events/s = 1.2 GBytes/s

Logging without compression: 170 events/s = 1.3 GBytes/s

So with this simple approach I got already more than 1 GByte of "dummy data" through midas, indicating that the buffer

management is not so bad. I did use the plain mfe.c frontend framework, no bm_send_event_sg() (but mfe.c uses rpc_send_event() which is an

optimized version of bm_send_event()).
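The rates above follow from event rate times event size. A small sketch of that arithmetic, assuming 1 GByte = 1e9 bytes and counting only the 7 * 1024 * 1024 byte payload generated by the attached frontend (bank and event headers are ignored); the function name is hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Payload generated per event by the dummy frontend.
constexpr uint64_t kEventBytes = 7ULL * 1024 * 1024;

// Throughput in GBytes/s (1 GByte = 1e9 bytes) for a given event rate.
double throughput_gbytes_per_s(double events_per_s,
                               uint64_t event_bytes = kEventBytes) {
    assert(events_per_s >= 0.0);
    return events_per_s * static_cast<double>(event_bytes) / 1e9;
}
```

With these assumptions, 900, 155 and 170 events/s give roughly 6.6, 1.14 and 1.25 GBytes/s, slightly below the rounded figures quoted above.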

Best,

Stefan |

| Attachment 1: frontend.cxx

|

#include <stdio.h>

#include <stdlib.h>

#include <math.h>

#include <assert.h> // assert()

#include "midas.h"

#include "experim.h"

#include "mfe.h"

/*-- Globals -------------------------------------------------------*/

/* The frontend name (client name) as seen by other MIDAS clients */

const char *frontend_name = "Sample Frontend";

/* The frontend file name, don't change it */

const char *frontend_file_name = __FILE__;

/* frontend_loop is called periodically if this variable is TRUE */

BOOL frontend_call_loop = FALSE;

/* a frontend status page is displayed with this frequency in ms */

INT display_period = 3000;

/* maximum event size produced by this frontend */

INT max_event_size = 8 * 1024 * 1024;

/* maximum event size for fragmented events (EQ_FRAGMENTED) */

INT max_event_size_frag = 5 * 1024 * 1024;

/* buffer size to hold events */

INT event_buffer_size = 20 * 1024 * 1024;

/*-- Function declarations -----------------------------------------*/

INT frontend_init(void);

INT frontend_exit(void);

INT begin_of_run(INT run_number, char *error);

INT end_of_run(INT run_number, char *error);

INT pause_run(INT run_number, char *error);

INT resume_run(INT run_number, char *error);

INT frontend_loop(void);

INT read_trigger_event(char *pevent, INT off);

INT read_periodic_event(char *pevent, INT off);

INT poll_event(INT source, INT count, BOOL test);

INT interrupt_configure(INT cmd, INT source, POINTER_T adr);

/*-- Equipment list ------------------------------------------------*/

BOOL equipment_common_overwrite = TRUE;

EQUIPMENT equipment[] = {

{"Trigger", /* equipment name */

{1, 0, /* event ID, trigger mask */

"SYSTEM", /* event buffer */

EQ_POLLED, /* equipment type */

0, /* event source */

"MIDAS", /* format */

TRUE, /* enabled */

RO_RUNNING, /* read only when running */

100, /* poll for 100ms */

0, /* stop run after this event limit */

0, /* number of sub events */

0, /* don't log history */

"", "", "",},

read_trigger_event, /* readout routine */

},

{""}

};

INT frontend_init() { return SUCCESS; }

INT frontend_exit() { return SUCCESS; }

INT begin_of_run(INT run_number, char *error) { return SUCCESS; }

INT end_of_run(INT run_number, char *error) { return SUCCESS; }

INT pause_run(INT run_number, char *error) { return SUCCESS; }

INT resume_run(INT run_number, char *error) { return SUCCESS; }

INT frontend_loop() { return SUCCESS; }

INT interrupt_configure(INT cmd, INT source, POINTER_T adr) { return SUCCESS; }

/*------------------------------------------------------------------*/

INT poll_event(INT source, INT count, BOOL test)

{

int i;

DWORD flag;

for (i = 0; i < count; i++) {

/* poll hardware and set flag to TRUE if new event is available */

flag = TRUE;

if (flag)

if (!test)

return TRUE;

}

return 0;

}

/*-- Event readout -------------------------------------------------*/

INT read_trigger_event(char *pevent, INT off)

{

UINT8 *pdata;

bk_init32(pevent);

bk_create(pevent, "ADC0", TID_UINT32, (void **)&pdata);

// generate 7 MB of dummy data

pdata += (7 * 1024 * 1024);

bk_close(pevent, pdata);

return bk_size(pevent);

}

|

|

2218

|

16 Jun 2021 |

Marco Francesconi | Info | 1000 Mbytes/sec through midas achieved! | As reported by Stefan, in MEG II we have very similar ethernet throughputs.

In total, we have 34 crates each with 32 DRS4 digitiser chips and a single 1 Gbps readout link through a Xilinx Zynq SoC.

The data arrives in push mode without any external intervention, the only throttling being an optional prescaling on the trigger rate.

We discovered the hard way that 1 Gbps throughput on Zynq is not trivial at all: the embedded ethernet MAC does not support jumbo frames (always read the fine print in the manuals!) and the embedded Linux ethernet stack seems to struggle when we go beyond 250 Mbps of UDP traffic.

Anyhow, even with the reduced speed, the maximum throughput at network input is around 8.5 Gbps which passes through the Mikrotik switches mentioned by Stefan.

We had very bad experiences in the past with similar price-point switches, observing huge packet drops when the instantaneous switching capacity cannot cope with the traffic, but so far we are happy with the Mikrotik ones.

On the receiver side, we have the DAQ server with an Intel E5-2630 v4 CPU and a 10 Gbit connection to the network using an Intel X710 Network card.

In the past, we also used a "cheap" 10 Gbit card from Tehuti, but the driver performance was so bad that it could not digest more than 5 Gbps of data.

The current frontend is based on the mfe.c scheme for historical reasons (the very first version dates back to 2015).

We opted for a monolithic multithread solution so we can reuse the underlying DAQ code for other experiments which may not have the complete Midas backend.

Just to mention them: one is the FOOT experiment (which afaik uses an adapted version of the ATLAS DAQ) and the other is the LOLX experiment (for which we will soon ship a small 32-channel system using Midas to Canada).

A major modification to Konstantin's scheme is that we need to calibrate all waveforms (WFMs) online so that a software zero suppression can be applied to reduce the final data size (that part is still to be implemented).

This requirement results in additional resource usage to parse the UDP content into floats and calibrate them.

Currently, we have 7 packet collector threads to digest the full packet flow (using recvmmsg), followed by an event building stage that uses 4 threads and 3 other threads for WFM calibration.

We have progressive packet numbers on each packet generated by the hardware and a set of flags marking the start and end of the event. Comparing the packet number difference between the start and end of the event with the total number of packets received for that event makes it easy to tell whether packets are being dropped.
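The consistency check described above can be sketched as follows. This is an illustration only: the function name and the 32-bit wrapping counter are assumptions, not the actual MEG II packet format.

```cpp
#include <cassert>
#include <cstdint>

// Number of packets missing from one event.
//   first_seq : packet number of the packet flagged as start of event
//   last_seq  : packet number of the packet flagged as end of event
//   received  : packets actually collected for this event
// Assumes a 32-bit packet counter; unsigned subtraction handles wrap-around.
uint32_t missing_packets(uint32_t first_seq, uint32_t last_seq,
                         uint32_t received) {
    const uint32_t expected = last_seq - first_seq + 1u;  // modulo 2^32
    assert(received <= expected);
    return expected - received;
}
```

A non-zero result means packets were dropped inside the event. The check cannot be applied if the start or end packet itself is lost, so that case has to be detected separately.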

All the thread infrastructure was tested and we could digest the complete throughput, but we still have to finalise the full 10 Gbit connection to Midas because the final system has been installed only recently (April).

We are using the EQ_USER flag to push events into the mfe.c buffers with up to 4 threads, but I observed that above ~1.5 Gbps rb_get_wp() almost always returns DB_TIMEOUT and I am forced to drop the event.

This conflicts with the measurements reported by Stefan (we were discussing this yesterday), so we are still investigating the possible cause.

It is difficult to report three years of development in a single Elog; I hope I put all the relevant points here.

It looks to me that we opted for very complementary approaches for high throughput ethernet with Midas, and I think there are still a lot of details that could be worth reporting.

In case someone organises some kind of "virtual workshop" on this, I'm willing to participate.

Best,

Marco

> In MEG II we also kind of achieved this rate. Marco F. will post an entry soon to describe the details. There is only one thing

> I want to mention, which is our network switch. Instead of an expensive high-grade switch, we chose a cheap "Chinese" high-grade

> switch. We have "rack switches", which are collector switches for each rack receiving up to 10 x 1 GBit inputs and outputting 1 x

> 10 GBit to an "aggregation switch", which collects all 10 GBit lines from the rack switches and forwards them over (currently) a single

> 10 GBit line. For the rack switch we use a

>

> MikroTik CRS354-48G-4S+2Q+RM 54 port

>

> and for the aggregation switch

>

> MikroTik CRS326-24S-2Q+RM 26 Port

>

> both cost on the order of 500 US$. We were astonished that they don't lose UDP packets when all inputs send a packet at the

> same time and they have to pipe them to the single output one after the other, but apparently the switches have enough buffers

> (which is usually NOT written in the data sheets).

>

> To avoid UDP packet loss for several events, we do traffic shaping by arming the trigger only when the previous event is

> completely received by the frontend. This eliminates all flow control and other complicated methods. Marco can tell you the

> details.

>

> Another interesting aspect: While we get the data into the frontend, we have problems in getting it through midas. Your

> bm_send_event_sg() is maybe a good approach which we should try. To benchmark the out-of-the-box midas, I ran the dummy frontend

> attached on my MacBook Pro 2.4 GHz, 4 cores, 16 GB RAM, 1 TB SSD disk. I got

>

> Event size: 7 MB

>

> No logging: 900 events/s = 6.7 GBytes/s

>

> Logging with LZ4 compression: 155 events/s = 1.2 GBytes/s

>

> Logging without compression: 170 events/s = 1.3 GBytes/s

>

> So with this simple approach I got already more than 1 GByte of "dummy data" through midas, indicating that the buffer

> management is not so bad. I did use the plain mfe.c frontend framework, no bm_send_event_sg() (but mfe.c uses rpc_send_event() which is an

> optimized version of bm_send_event()).

>

> Best,

> Stefan |

|

Draft

|

16 Jun 2021 |

Joseph McKenna | Suggestion | Have a list of 'users responsible' in Alarms and Programs odb entries | > > > This list of responsible being attached to alarm message strings ...

> >

> > This is a great idea. But I think we do not need to artificially limit ourselves

> > to string and array lengths.

> >

> > The code in alarm.c should be changed to use std::string and std::vector<std::string> (STRING_LIST

> > #define), db_get_record() should be replaced with individual ODB reads (that's what it does behind

> > the scenes, but in a non-type and -size safe way).

> >

> > I think the web page code will work correctly, it does not care about string lengths.

> >

> > K.O.

>

> Auto growing lists is an excellent plan. I am making decent progress and should have something to

> report soon

This has sent me down a little rabbit hole, and I'd like to check in with efforts to improve the efficiency and simplicity of the alarm code.

I can keep the current 'C' style of the alarm.cxx code, replace struct reads and writes to the odb with individual odb entries, and put functions in alarm.cxx to create, read and write to the odb.

If we go this 'C' style route, then I'll have duplication of the 'users responsible' setter and getter functions for the structs ALARM and PROGRAM_INFO.

What would the MIDAS developers think of creating classes for ALARM and PROGRAM_INFO? (For binary compatibility I am thinking of not touching the ALARM and PROGRAM_INFO structs, and inheriting from them):

class UsersResponsible

{

public:

   STRING_LIST fUsersResponsible;

};

class Alarm: public ALARM, public UsersResponsible

{

};

class ProgramInfo: public PROGRAM_INFO, public UsersResponsible

{

};

Each of these three classes would have member functions to Create, Read and Write to the ODB. We could get rid of the PROGRAM_INFO_STR precompiler macro and instead have a SetToDefault member function.

It seems clear we should set the ODB path in the constructor of Alarm and ProgramInfo |

|

2220

|

17 Jun 2021 |

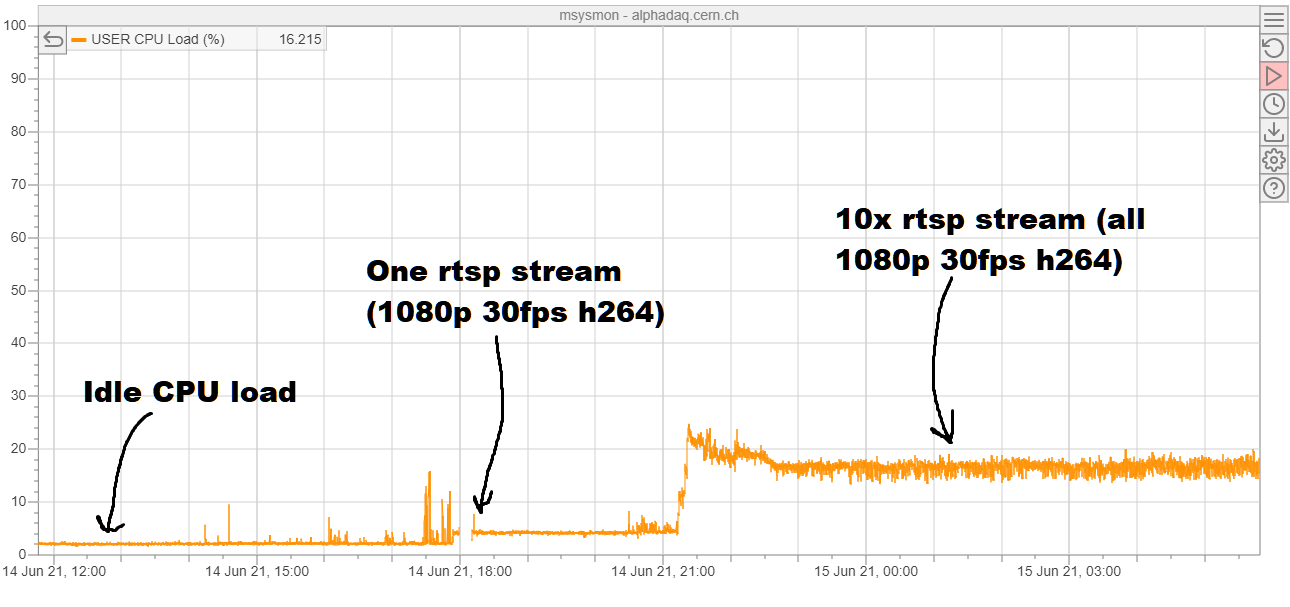

Joseph McKenna | Info | Add support for rtsp camera streams in mlogger (history_image.cxx) | mlogger (history_image) now supports rtsp cameras. In ALPHA we have

acquired several new network-connected cameras. Unfortunately they don't

have a way of capturing just a single frame using libcurl.

========================================

Motivation to link to OpenCV libraries

========================================

After looking at the ffmpeg libraries, it seemed non-trivial to use them to

listen to an rtsp stream and write a series of jpgs.

OpenCV became an obvious choice (it is itself linked to ffmpeg and

gstreamer): it's a popular, multiplatform, open source library that's easy to

use. It is available in the default package managers in centos 7 and ubuntu

(and is installed by default on lxplus).

========================================

How it works:

========================================

The framework laid out in history_image.cxx is great. A separate thread is

dedicated for each camera. This is continued with the rtsp support, using

the same periodicity:

if (ss_time() >= o["Last fetch"] + o["Period"]) {

An rtsp camera is detected by its URL: if the URL starts with 'rtsp://' it

is clearly using the rtsp protocol and the cv::VideoCapture object is

created (line 147).

If the connection fails, it will continue to retry, but only send an error

message on the first 10 attempts (line 150). This counter is reset on

successful connection.

If MIDAS has been built without OpenCV, mlogger will send an error message

that OpenCV is required if an rtsp URL is given (line 166).
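The retry-and-report behaviour can be sketched as a small counter. This is a hypothetical helper written for illustration, not the code in history_image.cxx; only the limit of 10 reported attempts and the reset on success come from the description above.

```cpp
#include <cassert>

// Hypothetical helper: connection attempts are retried indefinitely,
// but only the first max_reports consecutive failures are reported.
class ConnectErrorThrottle {
public:
    explicit ConnectErrorThrottle(int max_reports = 10)
        : max_reports_(max_reports) {
        assert(max_reports >= 0);
    }

    // Call after a failed attempt; returns true if an error message should be sent.
    bool on_failure() {
        if (failures_ < max_reports_) {
            ++failures_;
            return true;
        }
        return false;  // keep retrying, but stay quiet
    }

    // Call after a successful connection; later failures are reported again.
    void on_success() { failures_ = 0; }

private:
    int max_reports_;
    int failures_ = 0;
};
```

The caller would send the error message only when on_failure() returns true, so a camera that is permanently offline does not flood the message log.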

The VideoCapture 'stays live' and will grab frames from the camera based on

the sleep, saving to file based on the Period set in the ODB.

If the VideoCapture object is unable to grab a frame, it will release() the

camera, send an error message to MIDAS, then destroy itself and create a

new version (this destroy and create fully resets the connection to a

camera, required if it is on flaky wifi).

If the VideoCapture gets an empty frame, it also follows the same reset

steps.

If the VideoCapture fills a cv::Mat object successfully, the image is

saved to disk in the same way as the curl tools.

========================================

Concerns for the future:

========================================

VideoCapture is decoding the video stream in the background, allowing us to

grab frames at will. This is nice as we can be pretty agnostic to the video

format in the stream (I tested with h264 from a TP-LINK TAPO C100), but the

CPU usage is not negligible.

I noticed that this used ~2% of the CPU time on an Intel i7-4770 CPU; given

enough cameras this is considerable. In ALPHA, I have been testing with 10

cameras:

elog:2220/1

My suggestion / request would be to move the camera management out of

mlogger and into a new program (mcamera?), so that users can choose to

offload the CPU load to another system (I understand that OpenCV will also use GPU

decoders if available, which can further lighten the CPU load). |

| Attachment 1: unnamed.png

|

|

|

2221

|

18 Jun 2021 |

Konstantin Olchanski | Bug Report | my html modbvalue thing is not working? | I have a web page and I try to use modbvalue, but nothing happens. As best I can tell, I follow the documentation

(https://midas.triumf.ca/MidasWiki/index.php/Custom_Page#modbvalue).

<td id=setv0><div class="modbvalue" data-odb-path="/Equipment/CAEN_hvps01/Settings/VSET[0]" data-odb-editable="1">(ch0)</div></td>

I suppose I could add debug logging to the javascript framework for modbvalue to find out why it is not seeing

or how it is not liking my web page.

But how would a non-expert user (or an expert user in a hurry) debug this?

Should the modbvalue framework log more error messages to the javascript console ("I am ignoring your modbvalue entry because...")?

Should it have a debug mode where it reports to the javascript console all the tags it scanned, all the tags it found, etc

to give me some clue why it does not find my modbvalue tag?

Right now I am not even sure if this framework is activated, perhaps I did something wrong in how I load the page

and the modbvalue framework is not loaded. The documentation gives some magic incantations but does not explain

where and how this framework is loaded and activated. (But I do not see any differences between my page and

the example in the documentation, except that I do not load control.js, as I do not need all the thermometer bars, etc.

If I do load it, my modbvalue still does not work).

K.O. |

|