| ID | Date | Author | Topic | Subject |

2674

|

17 Jan 2024 |

Andreas Suter | Bug Report | mhttpd eqtable |

Hi,

I like the new eqtable, but stumbled over some issues.

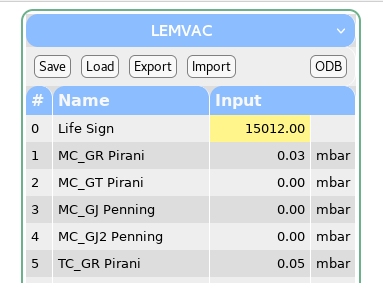

1) In the attached snapshot you see that the values shown from our vacuum Pirani and Penning cells are all zero, which of course is not true.

It would be nice to have under the equipment settings some formatting options, like the possibility to add units.

2) If one of the numbers evaluates to Infinity, the table is no longer displayed properly.

Best,

Andreas |
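The two points above can be illustrated with a small formatting sketch. It is written in C++ only to show the logic; it is not the mhttpd eqtable code (which lives in the browser), and the helper name `format_reading` and its parameters are hypothetical. It assumes, without confirmation from the report, that the zeros come from very small pressures being rounded in fixed-point display, and it gives non-finite values an explicit label instead of a number.

```cpp
#include <cmath>
#include <cstdio>
#include <string>

// Hypothetical helper, not part of mhttpd: format one reading for display.
// - NaN and +/-Infinity get an explicit text label
// - "%g"-style formatting switches to exponent notation for small
//   magnitudes, so a value like 2.3e-07 is not rounded to 0
// - an optional unit string is appended after a space
std::string format_reading(double value, const std::string& unit,
                           int significant_digits = 3)
{
    std::string text;
    if (std::isnan(value)) {
        text = "NaN";
    } else if (std::isinf(value)) {
        text = value > 0 ? "+Inf" : "-Inf";
    } else {
        char buf[64];
        std::snprintf(buf, sizeof(buf), "%.*g", significant_digits, value);
        text = buf;
    }
    return unit.empty() ? text : text + " " + unit;
}
```

With this, a Penning reading of 2.3e-7 would be rendered as "2.3e-07 mbar" rather than "0", and an infinite value as "+Inf mbar".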

| Attachment 1: midas-eqtable.png

|

|

|

2673

|

16 Jan 2024 |

Stefan Ritt | Forum | a scroll option for "add history variables" window? |

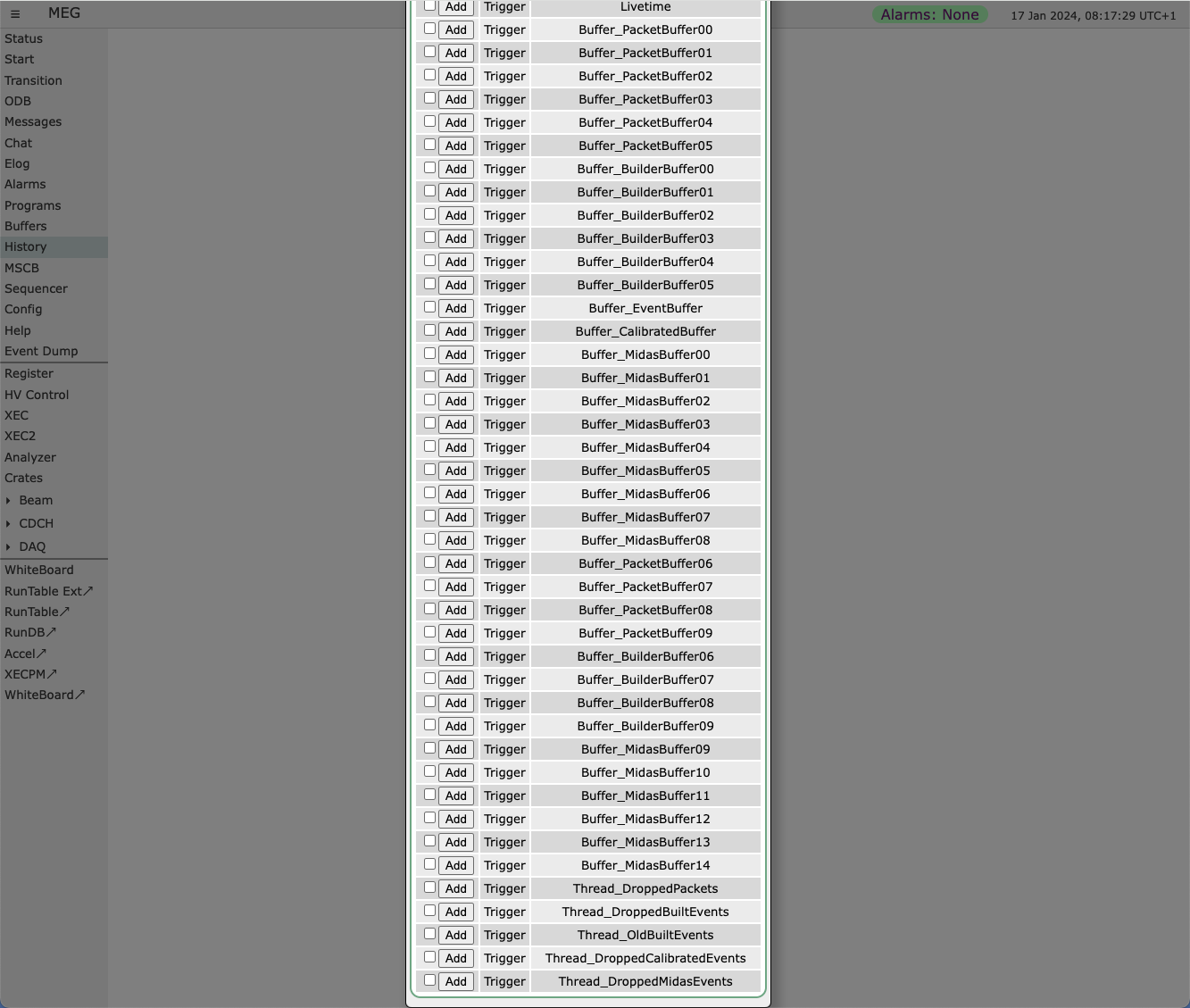

Have you updated to the current midas version? This issue was fixed a while ago. Below

you see a screenshot of a long list scrolled all the way to the bottom.

Revision: Thu Dec 7 14:26:37 2023 +0100 - midas-2022-05-c-762-g1eb9f627-dirty on branch

develop

Chrome on MacOSX 14.2.1

The fix is actually in "controls.js", so make sure your browser does not cache an old

version of that file. I usually have to clear my browser history to get the new file from

mhttpd.

Best regards,

Stefan |

| Attachment 1: Screenshot_2024-01-17_at_08.17.30.png

|

|

|

2672

|

16 Jan 2024 |

Pavel Murat | Forum | a scroll option for "add history variables" window? |

Dear all,

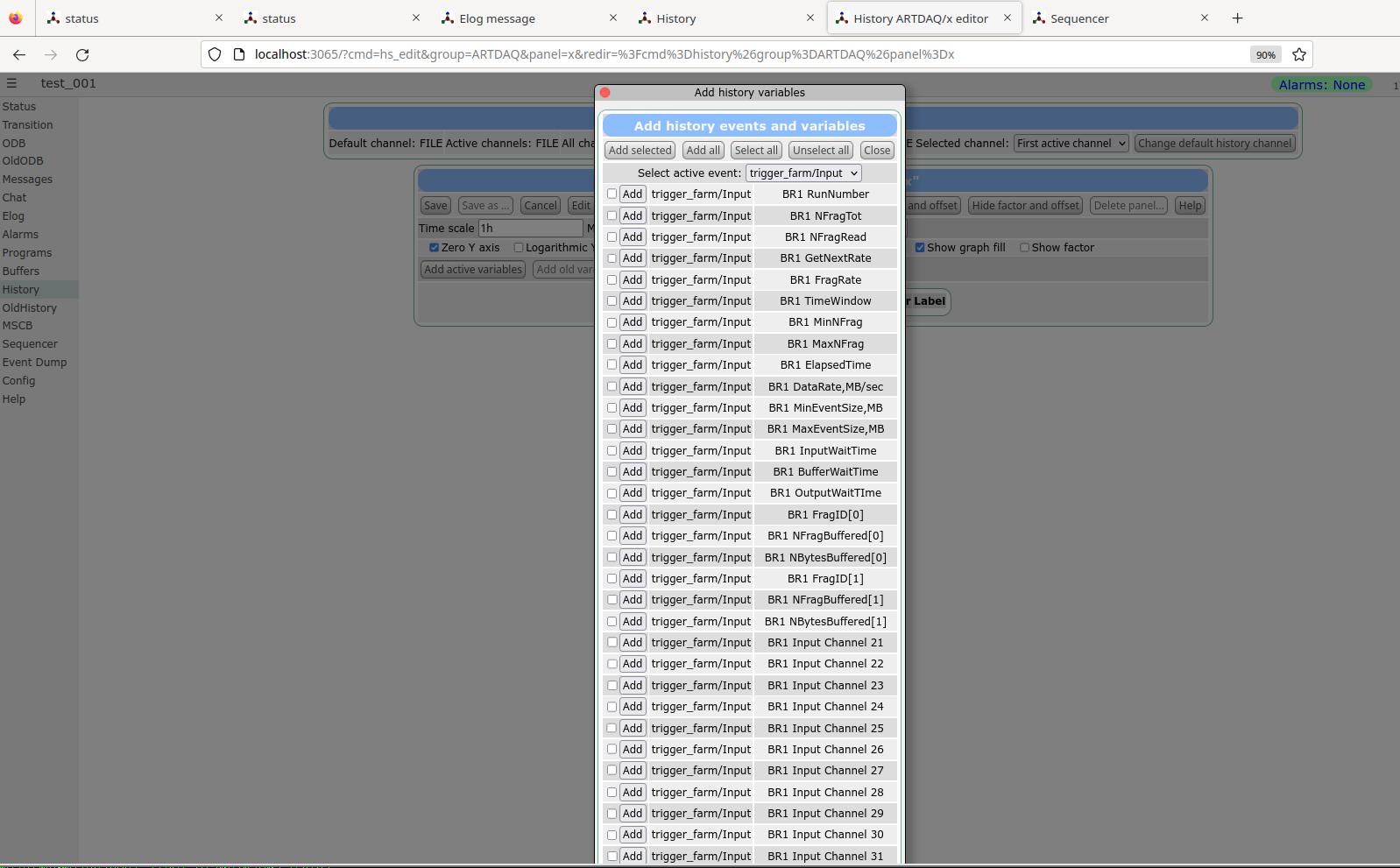

I have a "slow control" frontend which reads out 100 slow control parameters.

When I'm interactively adding a parameter to a history plot,

a nice "Add history variable" window pops up, but with 100 parameters in the list,

it doesn't fit within the screen...

The browser becomes passive, and I didn't find any easy way of scrolling.

In the attached example, adding a channel 32 variable becomes rather cumbersome,

not to mention channel 99.

Two questions:

a) how do people get around this "no-scrolling" issue? - perhaps there is a workaround

b) how big of a deal is it to add a scroll bar to the "Add history variables" popup ?

- I do not know javascript myself, but could find help to contribute..

-- many thanks, regards, Pasha |

| Attachment 1: adding_a_variable_to_the_history_plot.png

|

|

|

2671

|

15 Jan 2024 |

Frederik Wauters | Forum | dump history FILE files |

We switched the history storage from MIDAS to FILE, so we have *.dat files now (per variable), instead of the old *.hst.

How should one now extract data from these data files? With the old *.hst files I can e.g. mhdump -E 102 231010.hst

but with the new *.dat files I get

...2023/history$ mhdump -E 0 -T "Run number" mhf_1697445335_20231016_run_transitions.dat | head -n 15

event name: [Run transitions], time [1697445335]

tag: tag: /DWORD 1 4 /timestamp

tag: tag: UINT32 1 4 State

tag: tag: UINT32 1 4 Run number

record size: 12, data offset: 1024

record 0, time 1697557722, incr 112387

record 1, time 1697557783, incr 61

record 2, time 1697557804, incr 21

record 3, time 1697557834, incr 30

record 4, time 1697557888, incr 54

record 5, time 1697558318, incr 430

record 6, time 1697558323, incr 5

record 7, time 1697558659, incr 336

record 8, time 1697558668, incr 9

record 9, time 1697558753, incr 85

not very intelligible

Yes, I can do csv export on the webpage. But it would be nice to be able to extract from just the files. Also, the webpage export only saves the data shown ( range limited and/or downsampled) |
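Going only by the header that mhdump prints above (data offset 1024, record size 12, and three 4-byte fields: timestamp, State, Run number), the records could be decoded by hand roughly as follows. This is a sketch under assumptions, not a description of the official FILE history format: the little-endian byte order, the field order within a record, and the names `RunTransitionRecord` and `decode_records` are all my own guesses.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Assumed layout of one 12-byte record, inferred from the mhdump header.
struct RunTransitionRecord {
    std::uint32_t time;        // UNIX timestamp
    std::uint32_t state;       // "State" tag
    std::uint32_t run_number;  // "Run number" tag
};

// Read a 32-bit unsigned value, assuming little-endian byte order.
static std::uint32_t read_u32_le(const std::uint8_t* p)
{
    return static_cast<std::uint32_t>(p[0]) |
           (static_cast<std::uint32_t>(p[1]) << 8) |
           (static_cast<std::uint32_t>(p[2]) << 16) |
           (static_cast<std::uint32_t>(p[3]) << 24);
}

// Decode all complete records found after the header.
// data_offset and record_size default to the values printed by mhdump;
// a trailing partial record is ignored.
std::vector<RunTransitionRecord> decode_records(
    const std::vector<std::uint8_t>& file_bytes,
    std::size_t data_offset = 1024,
    std::size_t record_size = 12)
{
    std::vector<RunTransitionRecord> out;
    if (record_size < 12) {
        return out;  // too small to hold the three assumed fields
    }
    for (std::size_t pos = data_offset;
         pos + record_size <= file_bytes.size();
         pos += record_size) {
        const std::uint8_t* p = file_bytes.data() + pos;
        out.push_back({read_u32_le(p), read_u32_le(p + 4), read_u32_le(p + 8)});
    }
    return out;
}
```

The file contents would be read into `file_bytes` first (for example with `std::ifstream` in binary mode); the offset and record size should be taken from the file's own header rather than hard-coded if other variables are decoded.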

|

2670

|

12 Jan 2024 |

Stefan Ritt | Forum | slow control frontends - how much do they sleep and how often their drivers are called? |

> Hi Stefan, thanks a lot !

>

> I just thought that for the EQ_SLOW type equipment calls to sleep() could be hidden in mfe.cxx

> and handled based on the requested frequency of the history updates.

Most people combine EQ_SLOW with EQ_POLLED, so they want to read out as quickly as possible. Since

the framework cannot "guess" what the users want there, I removed all sleep() in the framework.

> Doing the same on the user side is straightforward - the important part is to know where the

> responsibility line goes (: smile :)

Pushing this to the user gives you more freedom. For example, you can add sleep() for some frontends but not

for others, or only when the run is stopped, and so on.

Stefan |

|

2669

|

11 Jan 2024 |

Pavel Murat | Forum | slow control frontends - how much do they sleep and how often their drivers are called? |

Hi Stefan, thanks a lot !

I just thought that for the EQ_SLOW type equipment calls to sleep() could be hidden in mfe.cxx

and handled based on the requested frequency of the history updates.

Doing the same on the user side is straightforward - the important part is to know where the

responsibility line goes (: smile :)

-- regards, Pasha |

|

2668

|

11 Jan 2024 |

Stefan Ritt | Forum | slow control frontends - how much do they sleep and how often their drivers are called? |

Put a

ss_sleep(10);

into your frontend_loop(), then you should be fine.

The event loop runs as fast as possible in order not to miss any (triggered) event, so there is no sleep in the

event loop, because this would limit the (triggered) event rate to 100 Hz (minimum sleep is 10 ms).

Therefore, you have to slow down the event loop manually with the method described above.

Best,

Stefan |
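The effect of the ss_sleep(10) suggested above can be sketched without MIDAS headers. In the code below, `sleep_ms` is a stand-in for `ss_sleep`, and `throttled_loop_pass` and `run_passes` are hypothetical names used only for this illustration; in a real frontend the body of frontend_loop() would simply contain the `ss_sleep(10);` call from the post.

```cpp
#include <chrono>
#include <thread>

// Stand-in for MIDAS ss_sleep(ms), so the sketch compiles without midas.h.
void sleep_ms(int ms)
{
    std::this_thread::sleep_for(std::chrono::milliseconds(ms));
}

// Hypothetical user hook in the style of frontend_loop():
// it yields the CPU for 10 ms on every pass of the main loop.
int throttled_loop_pass()
{
    sleep_ms(10);
    return 1;  // placeholder for the MIDAS SUCCESS status
}

// Run n passes and return the elapsed wall-clock time in milliseconds.
long long run_passes(int n)
{
    const auto start = std::chrono::steady_clock::now();
    for (int i = 0; i < n; ++i) {
        throttled_loop_pass();
    }
    const auto stop = std::chrono::steady_clock::now();
    return std::chrono::duration_cast<std::chrono::milliseconds>(stop - start).count();
}
```

Each pass takes at least 10 ms, so the loop cannot run faster than about 100 passes per second. That is ample for a 10-second history update and keeps the process from using a full core, but it is also exactly the 100 Hz ceiling that makes a built-in sleep unsuitable for triggered equipment.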

|

2667

|

10 Jan 2024 |

Pavel Murat | Forum | slow control frontends - how much do they sleep and how often their drivers are called? |

Dear all,

I have implemented a number of slow control frontends which are directed to update the

history once in every 10 sec, and they do just that.

I expected that such frontends would be spending most of the time sleeping and waking up

once in ten seconds to call their respective drivers and send the data to the server.

However I observe that each frontend process consumes almost 100% of a single core CPU time

and the frontend driver is called many times per second.

Is that the expected behavior ?

So far, I couldn't find the place in the system part of the frontend code (is that the right

place to look for?) which regulates the frequency of the frontend driver calls, so I'd greatly

appreciate if someone could point me to that place.

I'm using the following commit:

commit 30a03c4c develop origin/develop Make sure line numbers and sequence lines are aligned.

-- many thanks, regards, Pasha |

|

2666

|

03 Jan 2024 |

Konstantin Olchanski | Forum | midas.triumf.ca alias moved to daq00.triumf.ca |

> I found the first issue: The link to

> https://midas.triumf.ca/MidasWiki/index.php/Custom_plots_with_mplot

fixed.

https://midas.triumf.ca/Custom_plots_with_mplot

also works.

I tried to get rid of redirect to daq00 completely and make the whole MidasWiki show up

under midas.triumf.ca, but discovered/remembered that I cannot do this without changing

MidasWiki config [$wgServer = "https://daq00.triumf.ca";] which causes mediawiki to

redirect everything to daq00 (using the 301 "moved permanently" reply, ouch!). In theory,

if I change it to "https://midas.triumf.ca" it will redirect everything there instead,

but I am hesitant to make this change. It has been like this since forever and I have no

idea what else will break if I change it.

K.O. |

|

2665

|

03 Jan 2024 |

Stefan Ritt | Bug Report | Compilation error on RPi |

> > git pull

> > git submodule update

>

> confirmed. just ran into this myself. I think "make" should warn about out of

> date git modules. Also check that the build git version is tagged with "-dirty".

>

> K.O.

The submodule business becomes kind of annoying. I updated the documentation at

https://daq00.triumf.ca/MidasWiki/index.php/Quickstart_Linux#MIDAS_Package_Installation

to tell people to use

1) "git clone ... --recurse-submodules" during the first clone

2) "git submodule update --init --recursive" in case they forgot 1)

3) "git pull --recurse-submodules" for each update or to use

4) "git config submodule.recurse true" to make the --recurse-submodules the default

I have been using 4) for a while and it works nicely, so one does not have to remember to pull

recursively each time.

Stefan |

|

2664

|

03 Jan 2024 |

Stefan Ritt | Forum | midas.triumf.ca alias moved to daq00.triumf.ca |

> the DNS alias for midas.triumf.ca moved from old ladd00.triumf.ca to new

> daq00.triumf.ca. same as before it redirects to the MidasWiki and to the midas

> forum (elog) that moved from ladd00 to daq00 quite some time ago. if you see any

> anomalies in accessing them (broken links, bad https certificates), please report

> them to this forum or to me directly at olchansk@triumf.ca. K.O.

I found the first issue: The link to

https://midas.triumf.ca/MidasWiki/index.php/Custom_plots_with_mplot

does not work any more. The link

https://daq00.triumf.ca/MidasWiki/index.php/Custom_plots_with_mplot

however does work. Same with

https://midas.triumf.ca/MidasWiki/index.php/Sequencer

and

https://daq00.triumf.ca/MidasWiki/index.php/Sequencer

I have a few cases in mhttpd where I link directly to our documentation. I prefer

to have those links use "midas.triumf.ca" instead of "daq00.triumf.ca" in case you

change the machine again in the future.

Best,

Stefan |

|

2663

|

02 Jan 2024 |

Konstantin Olchanski | Forum | midas.triumf.ca alias moved to daq00.triumf.ca |

the DNS alias for midas.triumf.ca moved from old ladd00.triumf.ca to new

daq00.triumf.ca. same as before it redirects to the MidasWiki and to the midas

forum (elog) that moved from ladd00 to daq00 quite some time ago. if you see any

anomalies in accessing them (broken links, bad https certificates), please report

them to this forum or to me directly at olchansk@triumf.ca. K.O. |

|

2662

|

29 Dec 2023 |

Konstantin Olchanski | Bug Report | Compilation error on RPi |

> git pull

> git submodule update

confirmed. just ran into this myself. I think "make" should warn about out of

date git modules. Also check that the build git version is tagged with "-dirty".

K.O. |

|

2661

|

27 Dec 2023 |

Konstantin Olchanski | Forum | MidasWiki updated to 1.39.6 |

MidasWiki was updated to current mediawiki LTS 1.39.6 supported until Nov 2025,

see https://www.mediawiki.org/wiki/Version_lifecycle

as a downside, after this update, I see large amounts of "account request" spam,

something that did not exist before. I suspect new mediawiki phones home to

subscribe itself to some "please spam me" list.

if you want a user account on MidasWiki, please email me or Stefan directly, we

will make it happen.

K.O. |

|

2660

|

15 Dec 2023 |

Stefan Ritt | Info | Implementation of custom scatter, histogram and color map plots |

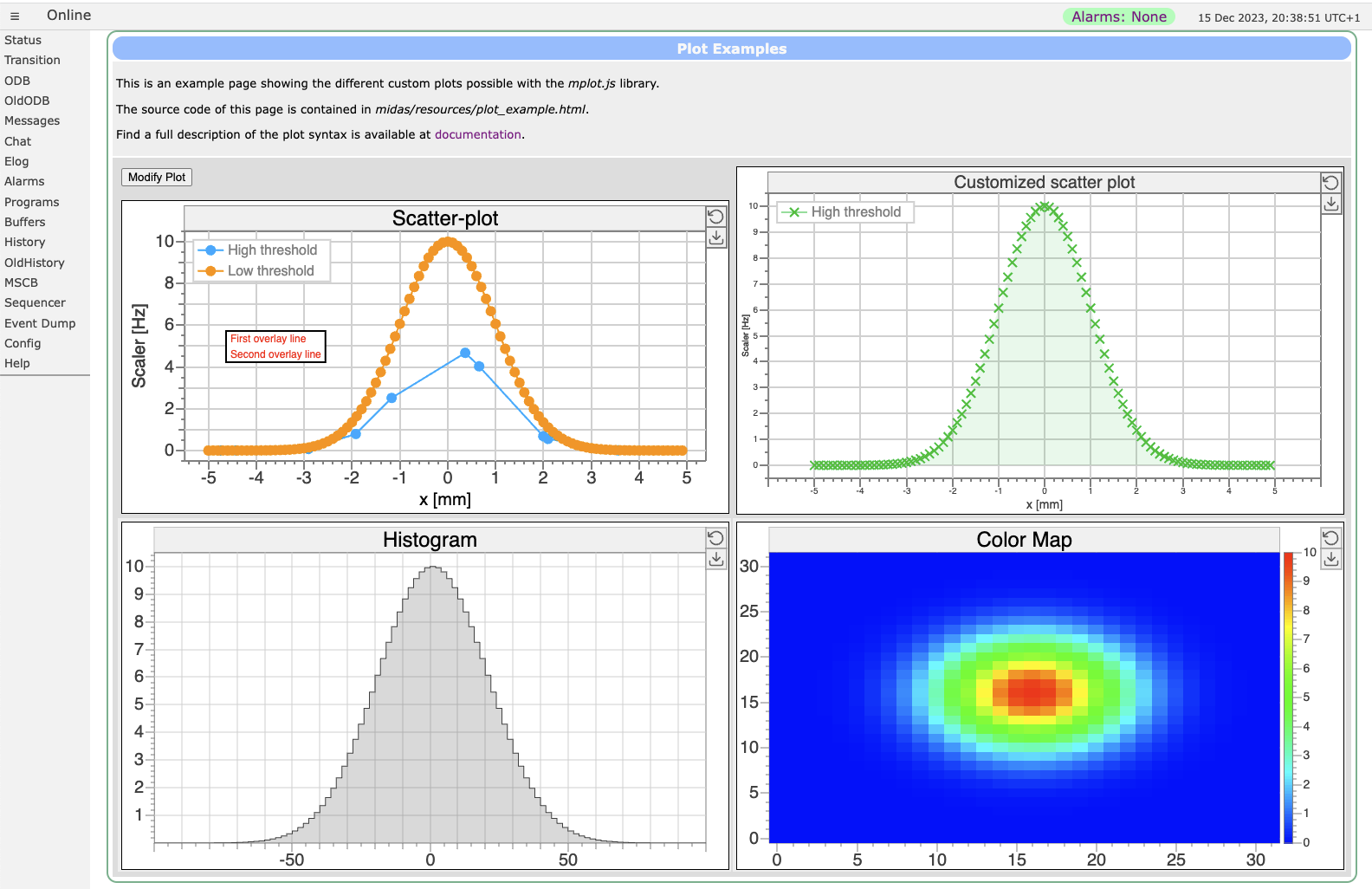

Custom plots including scatter, histogram and color map plots have been

implemented. This lets you plot graphs of X/Y data or histogram data stored in the

ODB on a custom page. For some examples and documentation please go to

https://daq00.triumf.ca/MidasWiki/index.php/Custom_plots_with_mplot

Enjoy!

Stefan |

| Attachment 1: plots.png

|

|

|

2658

|

14 Dec 2023 |

Zaher Salman | Bug Report | Compilation error on RPi |

This issue was resolved thanks to Konstantin and Stefan. I simply had to update submodules:

git submodule update

and then recompile.

Zaher |

|

2657

|

13 Dec 2023 |

Stefan Ritt | Forum | the logic of handling history variables ? |

> Also, I think that having a sine wave displayed by midas/examples/slowcont/scfe.cxx

> would make this example even more helpful.

Indeed. I reworked the example to have an out-of-the-box sine wave plotter, including the

automatic creation of a history panel. Thanks for the hint.

Best,

Stefan |

|

2656

|

12 Dec 2023 |

Pavel Murat | Forum | the logic of handling history variables ? |

Hi Stefan, thanks a lot for taking time to reproduce the issue!

Here comes the resolution, and of course, it was something deeply trivial :

the definition of the HV equipment in midas/examples/slowcont/scfe.cxx has

the history logging time in seconds, however the comment suggests milliseconds (see below),

and for a few days I believed the comment (:smile:)

Easy to fix.

Also, I think that having a sine wave displayed by midas/examples/slowcont/scfe.cxx

would make this example even more helpful.

-- thanks again, regards, Pasha

--------------------------------------------------------------------------------------------------------

EQUIPMENT equipment[] = {

{"HV", /* equipment name */

{3, 0, /* event ID, trigger mask */

"SYSTEM", /* event buffer */

EQ_SLOW, /* equipment type */

0, /* event source */

"FIXED", /* format */

TRUE, /* enabled */

RO_RUNNING | RO_TRANSITIONS, /* read when running and on transitions */

60000, /* read every 60 sec */

0, /* stop run after this event limit */

0, /* number of sub events */

10000, /* log history at most every ten seconds */ // <------------ this is 10^4 seconds, not 10 seconds

"", "", ""} ,

cd_hv_read, /* readout routine */

cd_hv, /* class driver main routine */

hv_driver, /* device driver list */

NULL, /* init string */

},

https://bitbucket.org/tmidas/midas/src/7f0147eb7bc7395f262b3ae90dd0d2af0625af39/examples/slowcont/scfe.cxx#lines-81 |
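To make the consequence of the unit mix-up concrete, here is a small model of an "at most every N seconds" history throttle. It is an illustration only, not the MIDAS implementation; the function name `count_history_writes` and its parameters are hypothetical. It counts how many of the periodic readouts would reach the history within a given time span.

```cpp
// Illustrative model only, not the MIDAS implementation.
// Readouts happen at t = 0, read_period_s, 2*read_period_s, ... up to total_s.
// A readout is written to the history only if at least history_period_s
// seconds have passed since the previous write.
int count_history_writes(long total_s, long read_period_s, long history_period_s)
{
    if (read_period_s <= 0) {
        return 0;  // guard against a loop that never advances
    }
    int writes = 0;
    long last_write = -1;  // -1 means nothing has been written yet
    for (long t = 0; t <= total_s; t += read_period_s) {
        if (last_write < 0 || t - last_write >= history_period_s) {
            ++writes;
            last_write = t;
        }
    }
    return writes;
}
```

For one hour of 60-second readouts, a history setting of 10 lets all 61 readouts through, while a setting of 10000 (about 2 h 47 min) lets only the first one through. That matches the symptom of a history plot that appears to stay empty.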

|

2655

|

12 Dec 2023 |

Zaher Salman | Bug Report | Compilation error on RPi |

Hello,

Since commit bc227a8a34def271a598c0200ca30d73223c3373 I've been getting the compilation error below (on a Raspberry Pi 3 Model B Plus Rev 1.3).

The fix is obvious from the reported error, but I am wondering whether this should be fixed in the main git??

Thanks,

Zaher

[ 7%] Building CXX object CMakeFiles/objlib.dir/src/json_paste.cxx.o

/home/nemu/nemu/tmidas/midas/src/json_paste.cxx: In function ‘int GetQWORD(const MJsonNode*

, const char*, UINT64*)’:

/home/nemu/nemu/tmidas/midas/src/json_paste.cxx:324:19: error: ‘const class MJsonNode’ has

no member named ‘GetLL’; did you mean ‘GetInt’?

*qw = node->GetLL();

^~~~~

GetInt

make[2]: *** [CMakeFiles/objlib.dir/build.make:271: CMakeFiles/objlib.dir/src/json_paste.cx

|

|

2654

|

12 Dec 2023 |

Stefan Ritt | Info | Midas Holiday Update |

> > 3) Make /Sequencer/State/Path relative to <exp>/userfiles. Like if /Sequencer/State/Path=test would then result to a final directory <exp>/userfiles/sequencer/test

> >

> > I'm kind of tempted to go with 3), since this allows the experiment to define different subdirectories under <exp>/userfiles/sequencer/... depending on the situation of the experiment.

> >

> > Best,

> > Stefan

>

> For me the option 3) seems the most coherent one.

> Andreas

Ok, I implemented option 3) above. This means everybody using the midas sequencer has to change /Sequencer/State/Path to an empty string and move the

sequencer files under <exp>/userfiles/sequencer as a starting point. I tested most things, including the INCLUDE statements, but there could still be

a bug here or there, so please give it a try and report any issue to me.

Best,

Stefan |
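The path rule of option 3) can be written down as a short sketch. This is not the sequencer's actual code; `sequencer_dir` is a hypothetical helper, and `exp_dir` stands for the experiment directory written as <exp> above. An empty /Sequencer/State/Path gives <exp>/userfiles/sequencer, and a value such as "test" gives <exp>/userfiles/sequencer/test. Treating a leading slash as relative is my own assumption about what "relative to" should mean here.

```cpp
#include <filesystem>
#include <string>

// Hypothetical helper: resolve the sequencer directory for a given
// experiment directory and the value of /Sequencer/State/Path.
std::filesystem::path sequencer_dir(const std::filesystem::path& exp_dir,
                                    const std::string& state_path)
{
    const std::filesystem::path base = exp_dir / "userfiles" / "sequencer";
    // relative_path() drops any root, so "/test" is treated like "test"
    // and cannot replace the base directory (assumption of this sketch).
    const std::filesystem::path sub =
        std::filesystem::path(state_path).relative_path();
    return sub.empty() ? base : base / sub;
}
```

For example, `sequencer_dir("/home/exp", "")` yields /home/exp/userfiles/sequencer and `sequencer_dir("/home/exp", "test")` yields /home/exp/userfiles/sequencer/test.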