| ID | Date | Author | Topic | Subject |

|

2310

|

26 Jan 2022 |

Frederik Wauters | Forum | .gz files | I adapted our analyzer to compile against the manalyzer included in the midas repo.

All our data files are .mid.gz, which now fail to process :(

frederik@frederik-ThinkPad-T550:~/new_daq/build/analyzer$ ./analyzer -e100 -s100 ../../run_backup_11783.mid.gz

...

...

Registered modules: 1

file[0]: ../../run_backup_11783.mid.gz

Setting up the analysis!

TMReadEvent: error: short read 0 instead of -1193512213

This error is raised in the function TMEvent* TMReadEvent(TMReaderInterface* reader) in the midasio.cxx file.

Reading the unzipped files works. But we have always processed our .gz files directly; unzipping them first would need ~2x the disk space.

Am I doing something wrong? I see that there is some activity on lz4 in the midasio repo, is gunzip next? |
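The nonsensical read length in the error above would be consistent with compressed bytes being interpreted as an event header, although that is an assumption and not confirmed in this thread. A minimal sketch of how a reader could tell gzip data from raw data by the two-byte gzip magic number; the helper name looks_like_gzip is hypothetical and not part of midasio:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical helper, not part of midasio: returns true if the buffer
// starts with the gzip magic number 0x1f 0x8b (RFC 1952).
bool looks_like_gzip(const std::uint8_t* data, std::size_t size)
{
   assert(data != nullptr || size == 0);
   return size >= 2 && data[0] == 0x1f && data[1] == 0x8b;
}
```

Calling this on the first bytes of a file before parsing event headers would let a reader report "compressed input, no decompressor selected" instead of a short read.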

|

2309

|

16 Dec 2021 |

Zaher Salman | Forum | Device driver for modbus | Dear all, does anyone have an example of a device driver using modbus or modbus tcp to communicate with a device, and is willing to share it? Thanks. |

|

2308

|

12 Dec 2021 |

Marius Koeppel | Bug Report | Writing MIDAS Events via FPGAs | Dear all,

from 13 Feb 2020 to 21 Feb 2020 we had a discussion about how I try to create MIDAS events directly on an FPGA and then use DMA to hand the events over to MIDAS. In that thread I also explained how I do it in my MIDAS frontend.

For testing the DAQ I created a dummy frontend which emulates my FPGA (see attached file). The interesting code is in the function read_stream_thread, where I just fill an array according to the 32-bit banks, which are 64-bit aligned (more or less lines 306-369). Then I do:

uint32_t * dma_buf_volatile;

dma_buf_volatile = dma_buf_dummy;

copy_n(&dma_buf_volatile[0], sizeof(dma_buf_dummy)/4, pdata);

pdata+=sizeof(dma_buf_dummy);

rb_increment_wp(rbh, sizeof(dma_buf_dummy)); // in byte length

to send the data to the buffer.
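In the snippet above, copy_n counts 32-bit words while rb_increment_wp is given a length in bytes. A minimal sketch of keeping those two units apart, with a plain array standing in for the ring buffer; the helper name copy_words is hypothetical and the MIDAS ring buffer calls are deliberately left out:

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical helper: copies n_words 32-bit words from src to dst and
// returns the number of BYTES written, i.e. the unit by which a
// byte-based write pointer would be advanced.
std::size_t copy_words(const std::uint32_t* src, std::size_t n_words, std::uint32_t* dst)
{
   assert(src != nullptr && dst != nullptr);
   std::copy_n(src, n_words, dst);
   return n_words * sizeof(std::uint32_t);
}
```

Note that adding a byte count to a uint32_t pointer, as in pdata+=sizeof(dma_buf_dummy), moves it four times further than the data just written. Whether that matters here depends on whether pdata is reused before the next rb_get_wp call, which the elided part of the attachment would show.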

This summer (May to July) everything was working fine, but today I did not get the data into MIDAS.

I checked out a few different commits, and everything was at least working until 3921016ce6d3444e6c647cbc7840e73816564c78.

Thanks,

Marius |

| Attachment 1: dummy_fe.cpp

|

/********************************************************************\

Name: dummy_fe.cxx

Created by: Frederik Wauters

Changed by: Marius Koeppel

Contents: Dummy frontend producing stream data

\********************************************************************/

#include <stdio.h>

#include <math.h>

#include <stdlib.h>

#include <algorithm>

#include <random>

#include <iostream>

#include <unistd.h>

#include <bitset>

#include "midas.h"

#include "msystem.h"

#include "mcstd.h"

#include <thread>

#include <chrono>

#include "mfe.h"

using namespace std;

/*-- Globals -------------------------------------------------------*/

/* The frontend name (client name) as seen by other MIDAS clients */

const char *frontend_name = "Dummy Stream Frontend";

/* The frontend file name, don't change it */

const char *frontend_file_name = __FILE__;

/* frontend_loop is called periodically if this variable is TRUE */

BOOL frontend_call_loop = FALSE;

/* a frontend status page is displayed with this frequency in ms */

INT display_period = 0;

/* maximum event size produced by this frontend */

INT max_event_size = 1 << 25; // 32MB

/* maximum event size for fragmented events (EQ_FRAGMENTED) */

INT max_event_size_frag = 5 * 1024 * 1024;

/* buffer size to hold events */

INT event_buffer_size = 10000 * max_event_size;

/*-- Function declarations -----------------------------------------*/

INT read_stream_thread(void *param);

uint64_t generate_random_pixel_hit(uint64_t time_stamp);

uint32_t generate_random_pixel_hit_swb(uint32_t time_stamp);

BOOL equipment_common_overwrite = TRUE; //true is overwriting the common odb

/* DMA Buffer and related */

volatile uint32_t *dma_buf;

#define MUDAQ_DMABUF_DATA_ORDER 25 // 29, 25 for 32 MB

#define MUDAQ_DMABUF_DATA_LEN (1 << MUDAQ_DMABUF_DATA_ORDER) // in bytes

size_t dma_buf_size = MUDAQ_DMABUF_DATA_LEN;

uint32_t dma_buf_nwords = dma_buf_size/sizeof(uint32_t);

/*-- Equipment list ------------------------------------------------*/

EQUIPMENT equipment[] = {

{"Stream", /* equipment name */

{1, 0, /* event ID, trigger mask */

"SYSTEM", /* event buffer */

EQ_USER, /* equipment type */

0, /* event source */

"MIDAS", /* format */

TRUE, /* enabled */

RO_RUNNING, /* read only while a run is in progress */

100, /* poll for 100ms */

0, /* stop run after this event limit */

0, /* number of sub events */

0, /* don't log history */

"", "", ""} ,

NULL, /* readout routine */

},

{""}

};

/*-- Dummy routines ------------------------------------------------*/

INT poll_event(INT source, INT count, BOOL test)

{

return 1;

};

INT interrupt_configure(INT cmd, INT source, POINTER_T adr)

{

return 1;

};

/*-- Frontend Init -------------------------------------------------*/

INT frontend_init()

{

// create ring buffer for readout thread

create_event_rb(0);

// create readout thread

ss_thread_create(read_stream_thread, NULL);

set_equipment_status(equipment[0].name, "Ready for running", "var(--mgreen)");

return CM_SUCCESS;

}

/*-- Frontend Exit -------------------------------------------------*/

INT frontend_exit()

{

return CM_SUCCESS;

}

/*-- Frontend Loop -------------------------------------------------*/

INT frontend_loop()

{

return CM_SUCCESS;

}

/*-- Begin of Run --------------------------------------------------*/

INT begin_of_run(INT run_number, char *error)

{

set_equipment_status(equipment[0].name, "Running", "var(--mgreen)");

return CM_SUCCESS;

}

/*-- End of Run ----------------------------------------------------*/

INT end_of_run(INT run_number, char *error)

{

set_equipment_status(equipment[0].name, "Ready for running", "var(--mgreen)");

return CM_SUCCESS;

}

/*-- Pause Run -----------------------------------------------------*/

INT pause_run(INT run_number, char *error)

{

return CM_SUCCESS;

}

/*-- Resume Run ----------------------------------------------------*/

INT resume_run(INT run_number, char *error)

{

return CM_SUCCESS;

}

uint64_t generate_random_pixel_hit(uint64_t time_stamp)

{

// Bits 63 - 35: TimeStamp (29 bits)

// Bits 34 - 30: Tot (5 bits)

// Bits 29 - 26: Layer (4 bits)

// Bits 25 - 21: Phi (5 bits)

// Bits 20 - 16: ChipID (5 bits)

// Bits 15 - 8: Col (8 bits)

// Bits 7 - 0: Row (8 bits)

uint64_t tot = rand() % 31; // 0 to 30

uint64_t layer = rand() % 15; // 0 to 14

uint64_t phi = rand() % 31; // 0 to 30

uint64_t chipID = rand() % 5; // 0 to 4

uint64_t col = rand() % 250; // 0 to 249

uint64_t row = rand() % 250; // 0 to 249

uint64_t hit = (time_stamp << 35) | (tot << 30) | (layer << 26) | (phi << 21) | (chipID << 16) | (col << 8) | row;

//printf("tot: 0x%2.2x,layer: 0x%2.2x,phi: 0x%2.2x,chipID: 0x%2.2x,col: 0x%2.2x,row: 0x%2.2x\n",tot,layer,phi,chipID,col,row);

//cout << hex << hit << endl;

//cout << hex << time_stamp << endl;

//cout << hex << (hit >> 35 & 0x7FFFFFFFF) << endl;

//cout << hex << (hit >> 32) << endl;

return hit;

}

uint32_t generate_random_pixel_hit_swb(uint32_t time_stamp)

{

uint32_t tot = rand() % 31; // 0 to 30

uint32_t chipID = rand() % 5; // 0 to 4

uint32_t col = rand() % 250; // 0 to 249

uint32_t row = rand() % 250; // 0 to 249

uint32_t hit = (time_stamp << 28) | (chipID << 22) | (row << 13) | (col << 5) | tot << 1;

// if ( print ) {

// printf("ts:%8.8x,chipID:%8.8x,row:%8.8x,col:%8.8x,tot:%8.8x\n", time_stamp,chipID,row,col,tot);

// printf("hit:%8.8x\n", hit);

// std::cout << std::bitset<32>(hit) << std::endl;

// }

return hit;

}

uint64_t generate_random_scifi_hit(uint32_t time_stamp, uint32_t counter1, uint32_t counter2)

{

uint64_t asic = rand() % 8;

uint64_t hit_type = 1;

uint64_t channel_number = rand() % 32;

uint64_t timestamp_bad = 0;

uint64_t coarse_counter_value = (time_stamp >> 5) & 0x7FFF;

uint64_t fine_counter_value = time_stamp & 0x1F;

uint64_t energy_flag = rand() % 2;

uint64_t fpga_id = 10 + rand() % 4;

if (counter1 > 0) {

fpga_id = counter1;

}

return (asic << 60) | (hit_type << 59) | (channel_number << 54) | (timestamp_bad << 53) | (coarse_counter_value << 38) | (fine_counter_value << 33) | (energy_flag << 32) | (fpga_id << 28) | ((time_stamp & 0x0FFFFFFF));

}

uint64_t generate_random_delta_t_exponential(float lambda)

{

float r = rand()/(1.0+RAND_MAX);

return ceil(-log(1.0-r)/lambda);

}

uint64_t generate_random_delta_t_gauss(float mu, float sigma)

{

std::default_random_engine generator;

generator.seed(rand());

std::normal_distribution<float> distribution(mu,sigma);

float r = ceil(distribution(generator));

if (r<0.0) r=0.0;

return r;

}

INT read_stream_thread(void *param)

{

uint32_t* pdata;

// init bank structure - 64bit alignment

uint32_t SERIAL = 0x00000001;

uint32_t TIME = 0x00000001;

uint64_t hit;

// tell framework that we are alive

signal_readout_thread_active(0, TRUE);

// obtain ring buffer for inter-thread data exchange

int rbh = get_event_rbh(0);

int status;

while (is_readout_thread_enabled()) {

// obtain buffer space

status = rb_get_wp(rbh, (void **)&pdata, 10);

// just sleep and try again if buffer has no space

if (status == DB_TIMEOUT) {

set_equipment_status(equipment[0].name, "Buffer full", "var(--myellow)");

continue;

}

if (status != DB_SUCCESS){

cout << "!DB_SUCCESS" << endl;

break;

}

// don't readout events if we are not running

if (run_state != STATE_RUNNING) {

set_equipment_status(equipment[0].name, "Not running", "var(--myellow)");

continue;

}

set_equipment_status(equipment[0].name, "Running", "var(--mgreen)");

int nEvents = 5000;

size_t eventSize=76;

uint32_t dma_buf_dummy[nEvents*eventSize];

uint64_t prev_mutrig_hit = 0;

uint64_t delta_t_1 = 100;

... 212 more lines ...

|

|

2307

|

02 Dec 2021 |

Alexey Kalinin | Bug Report | some frontend kicked by cm_periodic_tasks | Hello,

We have a small experiment with MIDAS based DAQ.

Status page shows :

ES ESFrontend@192.168.0.37 207 0.2 0.000

Trigger06 Sample Frontend06@192.168.0.37 1.297M 0.3 0.000

Trigger01 Sample Frontend01@192.168.0.37 1.297M 0.3 0.000

Trigger16 Sample Frontend16@192.168.0.37 1.297M 0.3 0.000

Trigger38 Sample Frontend38@192.168.0.37 1.297M 0.3 0.000

Trigger37 Sample Frontend37@192.168.0.37 1.297M 0.3 0.000

Trigger03 Sample Frontend03@192.168.0.38 1.297M 0.3 0.000

Trigger07 Sample Frontend07@192.168.0.38 1.297M 0.3 0.000

Trigger04 Sample Frontend04@192.168.0.38 59898 0.0 0.000

Trigger08 Sample Frontend08@192.168.0.38 59898 0.0 0.000

Trigger17 Sample Frontend17@192.168.0.38 59898 0.0 0.000

And SYSTEM buffers page shows:

ESFrontend 1968 198 47520 0 0x00000000 0

193 ms

Sample Frontend06 1332547 1330826 379729872 0 0x00000000

0 1.1 sec

Sample Frontend16 1332542 1330839 361988208 0 0x00000000

0 94 ms

Sample Frontend37 1332530 1330841 337798408 0 0x00000000

0 1.1 sec

Sample Frontend01 1332543 1330829 467136688 0 0x00000000

0 34 ms

Sample Frontend38 1332528 1330830 291453608 0 0x00000000

0 1.1 sec

Sample Frontend04 63254 61467 20882584 0 0x00000000

0 208 ms

Sample Frontend08 63262 61476 27904056 0 0x00000000

0 205 ms

Sample Frontend17 63271 61473 20433840 0 0x00000000

0 213 ms

Sample Frontend03 1332549 1330818 386821728 0 0x00000000

0 82 ms

Sample Frontend07 1332554 1330821 462210896 0 0x00000000

0 37 ms

Logger 968742 0w+9500418r 0w+2718405736r 0 0x00000000 0

GET_ALL Used 0 bytes 0.0% 303 ms

rootana 254561 0w+29856958r 0w+8718288352r 0 0x00000000 0

762 ms

The problem is that eventually some of the frontends get closed with the message

:19:22:31.834 2021/12/02 [rootana,INFO] Client 'Sample Frontend38' on buffer

'SYSMSG' removed by cm_periodic_tasks because process pid 9789 does not exist

In the meantime, mserver logs:

mserver started interactively

mserver will listen on TCP port 1175

double free or corruption (!prev)

double free or corruption (!prev)

free(): invalid next size (normal)

double free or corruption (!prev)

I can find some correlation with the number of events/event size produced by a frontend, because it fails when this becomes big enough.

The frontend scheme is like this:

poll event time set to 0;

poll_event{

//if buffer not transferred return (continue cutting the main buffer)

//read main buffer from hardware

//buffer not transfered

}

read event{

// cut the main buffer to subevents (cut one event from main buffer) return;

//if (last subevent) {buffer transfered ;return}

}
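The scheme above can be sketched as a small state machine. This is only an illustration under assumptions: the type SubeventCutter, the fixed subevent size and the vector passed in as the hardware block are all hypothetical, and no MIDAS calls are involved:

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for the poll_event/read_event pair described above.
struct SubeventCutter {
   std::vector<std::uint32_t> main_buffer; // last block read from hardware
   std::size_t cursor = 0;                 // first word not yet handed out
   std::size_t subevent_words = 4;         // assumed fixed subevent size

   // True while the current main buffer still has subevents to hand out.
   bool pending() const { return cursor < main_buffer.size(); }

   // poll step: load a new block only after the old one is fully consumed.
   bool poll(const std::vector<std::uint32_t>& hardware_block)
   {
      if (!pending()) {
         main_buffer = hardware_block;
         cursor = 0;
      }
      return pending();
   }

   // read step: cut one subevent off the front of the main buffer.
   std::vector<std::uint32_t> read_event()
   {
      assert(pending());
      const std::size_t n = std::min(subevent_words, main_buffer.size() - cursor);
      const auto first = main_buffer.begin() + static_cast<std::ptrdiff_t>(cursor);
      std::vector<std::uint32_t> ev(first, first + static_cast<std::ptrdiff_t>(n));
      cursor += n;
      return ev;
   }
};
```

Keeping the cursor and the "buffer consumed" test in one place makes it harder for a read to run past the end of the main buffer, which is one way heap corruption like the messages above can arise. Whether that is the cause here is not established in this thread.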

What is strange to me is that 2 frontends (1 per remote PC) are causing this.

Also, I'm running the same frontend code several times with the -i # flag, setting the event ID in frontend_init, and using the SYSTEM buffer for all of them.

Is there something I'm missing?

Thanks.

A. |

|

2306

|

02 Dec 2021 |

Stefan Ritt | Forum | Sequencer error with ODB Inc | Thanks for reporting that bug. Indeed there was a problem in the sequencer code which I fixed now. Please try the updated develop branch.

Stefan |

|

2305

|

02 Dec 2021 |

Stefan Ritt | Bug Report | Off-by-one in sequencer documentation | > The documentation for the sequencer loop says:

>

> <quote>

> LOOP [name ,] n ... ENDLOOP To execute a loop n times. For infinite loops, "infinite"

> can be specified as n. Optionally, the loop variable running from 0...(n-1) can be accessed

> inside the loop via $name.

> </quote>

>

> In fact the loop variable runs from 1...n, as can be seen by running this exciting

> sequencer code:

>

> COMMENT "Figuring out MSL"

>

> LOOP n,4

> MESSAGE $n,1

> ENDLOOP

Indeed you're right. The loop variable runs from 1...n. I fixed that in the documentation.

Stefan |

|

2304

|

01 Dec 2021 |

Lars Martin | Bug Report | Off-by-one in sequencer documentation | The documentation for the sequencer loop says:

<quote>

LOOP [name ,] n ... ENDLOOP To execute a loop n times. For infinite loops, "infinite"

can be specified as n. Optionally, the loop variable running from 0...(n-1) can be accessed

inside the loop via $name.

</quote>

In fact the loop variable runs from 1...n, as can be seen by running this exciting

sequencer code:

COMMENT "Figuring out MSL"

LOOP n,4

MESSAGE $n,1

ENDLOOP |

|

2303

|

19 Nov 2021 |

Jacob Thorne | Forum | Sequencer error with ODB Inc | Hi,

I am having problems with the midas sequencer, here is my code:

1 COMMENT "Example to move a Standa stage"

2 RUNDESCRIPTION "Example movement sequence - each run is one position of a single stage"

3

4 PARAM numRuns

5 PARAM sequenceNumber

6 PARAM RunNum

7

8 PARAM positionT2

9 PARAM deltapositionT2

10

11 ODBSet "/Runinfo/Run number", $RunNum

12 ODBSet "/Runinfo/Sequence number", $sequenceNumber

13

14 ODBSet "/Equipment/Neutron Detector/Settings/Detector/Type of Measurement", 2

15 ODBSet "/Equipment/Neutron Detector/Settings/Detector/Number of Time Bins", 10

16 ODBSet "/Equipment/Neutron Detector/Settings/Detector/Number of Sweeps", 1

17 ODBSet "/Equipment/Neutron Detector/Settings/Detector/Dwell Time", 100000

18

19 ODBSet "/Equipment/MTSC/Settings/Devices/Stage 2 Translation/Device Driver/Set Position", $positionT2

20

21 LOOP $numRuns

22 WAIT ODBvalue, "/Equipment/MTSC/Settings/Devices/Stage 2 Translation/Ready", ==, 1

23 TRANSITION START

24 WAIT ODBvalue, "/Equipment/Neutron Detector/Statistics/Events sent", >=, 1

25 WAIT ODBvalue, "/Runinfo/State", ==, 1

26 WAIT ODBvalue, "/Runinfo/Transition in progress", ==, 0

27 TRANSITION STOP

28 ODBInc "/Equipment/MTSC/Settings/Devices/Stage 2 Translation/Device Driver/Set Position", $deltapositionT2

29

30 ENDLOOP

31

32 ODBSet "/Runinfo/Sequence number", 0

The issue is with line 28: the ODBInc does not work. Regardless of what number I put in, I get the following error:

[Sequencer,ERROR] [odb.cxx:7046:db_set_data_index1,ERROR] "/Equipment/MTSC/Settings/Devices/Stage 2 Translation/Device Driver/Set Position" invalid element data size 32, expected 4

I don't see why this should happen, the format is correct and the number that I input is an int.

Sorry if this is a basic question.

Jacob |

|

2302

|

11 Nov 2021 |

Thomas Lindner | Forum | MityCAMAC Login | Hi Hunter,

This sounds like a TRIUMF-specific problem, not a MIDAS problem. Please email me directly and we can try to solve it.

Thomas Lindner

TRIUMF DAQ

> Hello all,

>

> I've recently acquired a MityCAMAC system that was built at TRIUMF and I'm having issues accessing it over ethernet.

>

> The system: Ubuntu VM inside Windows 10 machine.

>

> I've tried reconfiguring the network settings for the VM but nmap and arp/ip commands have yielded me no results in finding the crate controller.

>

> I was getting help from Pierre Amaudruz but I think he is now busy for some time. I have the mac address of the crate controller and its name. The controller seems to initialize fine inside of the CAMAC crate. The windows side of the workstation also tells me that an unknown network is in fact connected.

>

> I suspect I either need to do something with an ssh key (which I thought we accomplished but maybe not), or perhaps the domain name in the controller needs to be changed.

>

> If anybody has experience working with MityARM I would appreciate any advice I could get.

>

> Best,

> Hunter Lowe

> UNBC Graduate Physics |

|

2301

|

10 Nov 2021 |

Stefan Ritt | Forum | Issue in data writing speed | Midas uses various buffers (in the frontend, at the server side before the SYSTEM buffer, the SYSTEM buffer itself, and on the logger before writing to disk). All these buffers are in RAM and have fast access, so you can fill them pretty quickly. When they are full, the logger writes to disk, which is slower. So I believe at 2 Hz your disk can keep up with your writing speed, but at 4 Hz (4 MP x 2 bytes x 4 Hz = 32 MB/sec) your disk starts slowing down the writing process. Now 32 MB/s is pretty slow for a disk, so I presume you have turned compression on, which takes quite some time.

To verify this, first disable logging. Then disable compression and keep logging. Then report back here again.
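The rate estimate in this reply can be reproduced with a few lines of arithmetic. This is only a sketch, assuming "4MP" means 4,000,000 pixels and that each 16-bit pixel occupies 2 bytes; the function name is hypothetical:

```cpp
#include <cassert>
#include <cstdint>

// Bytes per second produced by a camera, given the pixel count,
// the bytes per pixel and the trigger rate in Hz.
std::uint64_t data_rate_bytes_per_s(std::uint64_t pixels, std::uint64_t bytes_per_pixel, std::uint64_t rate_hz)
{
   assert(bytes_per_pixel > 0);
   return pixels * bytes_per_pixel * rate_hz;
}
```

With these assumptions, data_rate_bytes_per_s(4000000, 2, 4) gives 32000000 bytes per second, and halving the trigger rate halves the load on the disk.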

> Dear all,

> I've a frontend writing a quite big bunch of data into a MIDAS bank (16bit output from a 4MP photo camera).

> I'm experiencing a writing speed problem that I don't understand. When the photo camera is triggered at a low rate (< 2 Hz)

> writing into the bank takes a very short time for each event (indeed, what I measure is the time to write and go back

> into the polling function). If I increase the rate to 4 Hz, I see that writing the first two events takes a short time,

> but the third event takes a very long time (hundreds of ms), then again the fourth and fifth events are very fast, and

> the sixth is very slow. If I further increase the rate, every other event is very slow. The problem is not in the readout

> of the camera, because if I just remove the bank writing and keep the camera readout, the problem disappears. Can you

> explain this behavior? Is there any way to improve it?

>

> Below you can also find the code I use to copy the data from the camera buffer into the bank. If you have any suggestion

> to improve it, it would be really appreciated.

>

> Thank you very much,

> Francesco

>

>

>

> const char* pSrc = (const char*)bufframe.buf;

>

> for(int y = 0; y < bufframe.height; y++ ){

>

> //Copy one row

> const unsigned short* pDst = (const unsigned short*)pSrc;

>

> //go through the row

> for(int x = 0; x < bufframe.width; x++ ){

>

> WORD tmpData = *pDst++;

>

> *pdata++ = tmpData;

>

> }

>

> pSrc += bufframe.rowbytes;

>

> }

> |

|

2300

|

09 Nov 2021 |

Hunter Lowe | Forum | MityCAMAC Login | Hello all,

I've recently acquired a MityCAMAC system that was built at TRIUMF and I'm

having issues accessing it over ethernet.

The system: Ubuntu VM inside Windows 10 machine.

I've tried reconfiguring the network settings for the VM but nmap and arp/ip

commands have yielded me no results in finding the crate controller.

I was getting help from Pierre Amaudruz but I think he is now busy for some

time. I have the mac address of the crate controller and its name. The

controller seems to initialize fine inside of the CAMAC crate. The windows side

of the workstation also tells me that an unknown network is in fact connected.

I suspect I either need to do something with an ssh key (which I thought we

accomplished but maybe not), or perhaps the domain name in the controller needs

to be changed.

If anybody has experience working with MityARM I would appreciate any advice I

could get.

Best,

Hunter Lowe

UNBC Graduate Physics |

|

2299

|

09 Nov 2021 |

Francesco Renga | Forum | Issue in data writing speed | Dear all,

I've a frontend writing a quite big bunch of data into a MIDAS bank (16bit output from a 4MP photo camera).

I'm experiencing a writing speed problem that I don't understand. When the photo camera is triggered at a low rate (< 2 Hz)

writing into the bank takes a very short time for each event (indeed, what I measure is the time to write and go back

into the polling function). If I increase the rate to 4 Hz, I see that writing the first two events takes a short time,

but the third event takes a very long time (hundreds of ms), then again the fourth and fifth events are very fast, and

the sixth is very slow. If I further increase the rate, every other event is very slow. The problem is not in the readout

of the camera, because if I just remove the bank writing and keep the camera readout, the problem disappears. Can you

explain this behavior? Is there any way to improve it?

Below you can also find the code I use to copy the data from the camera buffer into the bank. If you have any suggestion

to improve it, it would be really appreciated.

Thank you very much,

Francesco

const char* pSrc = (const char*)bufframe.buf;

for(int y = 0; y < bufframe.height; y++ ){

//Copy one row

const unsigned short* pDst = (const unsigned short*)pSrc;

//go through the row

for(int x = 0; x < bufframe.width; x++ ){

WORD tmpData = *pDst++;

*pdata++ = tmpData;

}

pSrc += bufframe.rowbytes;

}

|
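The copy loop in this post moves one 16-bit pixel at a time. A row-wise copy with memcpy is a common tidier form; the sketch below is offered as a suggestion for the copy itself, not as a claimed fix for the slow events. The Frame struct is a hypothetical stand-in for the camera's buffer type, with field names taken from the post:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

// Hypothetical stand-in for the camera frame structure used in the post.
struct Frame {
   const unsigned char* buf; // first byte of the first row
   int width;                // pixels per row
   int height;               // number of rows
   int rowbytes;             // bytes from one row start to the next
};

// Copies all rows of 16-bit pixels into dst, skipping any row padding.
// Returns the pointer just past the last pixel written.
std::uint16_t* copy_frame(const Frame& f, std::uint16_t* dst)
{
   assert(f.rowbytes >= f.width * static_cast<int>(sizeof(std::uint16_t)));
   const unsigned char* src = f.buf;
   for (int y = 0; y < f.height; y++) {
      std::memcpy(dst, src, static_cast<std::size_t>(f.width) * sizeof(std::uint16_t));
      dst += f.width;    // advance by pixels
      src += f.rowbytes; // advance by bytes, including padding
   }
   return dst;
}
```

The returned pointer plays the role of pdata after the loop in the original code.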

|

2298

|

29 Oct 2021 |

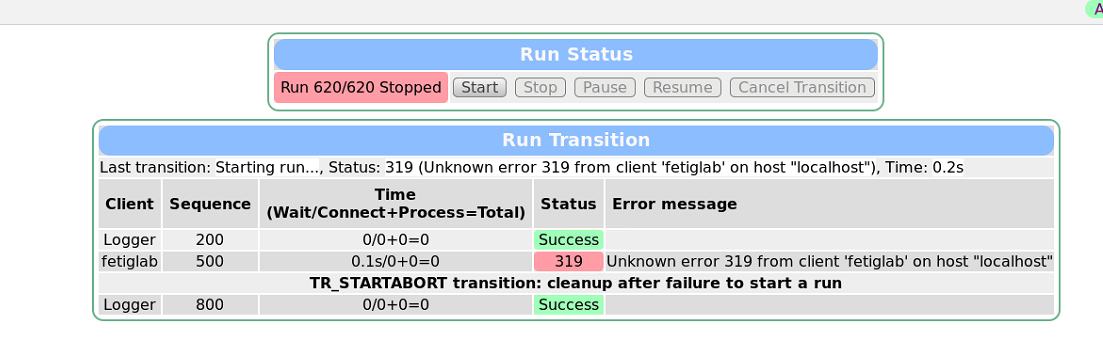

Kushal Kapoor | Bug Report | Unknown Error 319 from client | I’m trying to run MIDAS using a frontend code/client named “fetiglab”. The run stops after 2 to 3 seconds with an error saying “Unknown error 319 from client 'fetiglab' on localhost”.

The frontend code compiled without any errors and MIDAS reads the frontend successfully. The error only appears when I start a new run in MIDAS. Here are a few more details from the terminal:

11:46:32 [fetiglab,ERROR] [odb.cxx:11268:db_get_record,ERROR] struct size

mismatch for "/" (expected size: 1, size in ODB: 41920)

11:46:32 [Logger,INFO] Deleting previous file

"/home/rcmp/online3/run00621_000.root"

11:46:32 [ODBEdit,ERROR] [midas.cxx:5073:cm_transition,ERROR] transition START

aborted: client "fetiglab" returned status 319

11:46:32 [ODBEdit,ERROR] [midas.cxx:5246:cm_transition,ERROR] Could not start a

run: cm_transition() status 319, message 'Unknown error 319 from client

'fetiglab' on host "localhost"'

TR_STARTABORT transition: cleanup after failure to start a run

I’ve also enclosed a screenshot of the error. Any suggestions would be highly appreciated. Thanks |

| Attachment 1: Screenshot_2021-10-26_114015.png

|

|

|

2297

|

29 Oct 2021 |

Frederik Wauters | Bug Report | midas::odb::iterator + operator | work around | ok, so retrieving as a std::array (as it was defined) does not work

std::array<uint32_t,16> avalues = settings["FIR Energy"]["Energy Gap Value"];

but retrieving as an std::vector does, and then I have a standard c++ iterator which I can use in std stuff

std::vector<uint32_t> values = settings["FIR Energy"]["Energy Gap Value"];
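Once the values are in a plain std::vector, the sub-range maximum from the quoted question works with standard iterators. A minimal sketch without the MIDAS headers, where the vector stands in for the copy retrieved from the ODB key; the helper name max_of_first is hypothetical:

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Maximum of the first n elements of a plain std::vector. The vector
// stands in for the copy obtained from the ODB key in the post.
std::uint32_t max_of_first(const std::vector<std::uint32_t>& values, std::size_t n)
{
   assert(n > 0 && n <= values.size());
   return *std::max_element(values.begin(), values.begin() + static_cast<std::ptrdiff_t>(n));
}
```

For the case in the question, max_of_first(values, 4) corresponds to std::max_element(values.begin(), values.begin()+4).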

> I have an ODB key holding an array of 16 values

>

> {"FIR Energy", {

> {"Energy Gap Value", std::array<uint32_t,16>(10) },

>

> I can get the maximum of this array like

>

>

> uint32_t max_value = *std::max_element(values.begin(),values.end());

>

> but when I need the maximum of a sub range

>

> uint32_t max_value = *std::max_element(values.begin(),values.begin()+4);

>

> I get

>

> /home/labor/new_daq/frontends/SIS3316Module.cpp:584:62: error: no match for ‘operator+’ (operand types are ‘midas::odb::iterator’ and ‘int’)

> 584 | max_value = *std::max_element(values.begin(),values.begin()+4);

> | ~~~~~~~~~~~~~~^~

> | | |

> | | int

> |

>

> As the + operator is overloaded for midas::odb::iterator, I expected this to work.

>

> (and yes, I can find the max element by accessing the elements one by one) |

|

2296

|

29 Oct 2021 |

Frederik Wauters | Bug Report | midas::odb::iterator + operator | I have an ODB key holding an array of 16 values

{"FIR Energy", {

{"Energy Gap Value", std::array<uint32_t,16>(10) },

I can get the maximum of this array like

uint32_t max_value = *std::max_element(values.begin(),values.end());

but when I need the maximum of a sub range

uint32_t max_value = *std::max_element(values.begin(),values.begin()+4);

I get

/home/labor/new_daq/frontends/SIS3316Module.cpp:584:62: error: no match for ‘operator+’ (operand types are ‘midas::odb::iterator’ and ‘int’)

584 | max_value = *std::max_element(values.begin(),values.begin()+4);

| ~~~~~~~~~~~~~~^~

| | |

| | int

|

As the + operator is overloaded for midas::odb::iterator, I expected this to work.

(and yes, I can find the max element by accessing the elements one by one) |

|

2295

|

25 Oct 2021 |

Stefan Ritt | Forum | Logger crash | The short-term solution would be to increase the logger timeout in the ODB under

/Programs/Logger/Watchdog timeout

and set it to 60000 (one minute). But that is curing just the symptoms. It would be interesting to understand the cause of this error. Probably the logger takes more than 10 seconds to start or stop the run. The reason could be that the history has grown too big (which is what we have right now in MEG II), or some disk problems. But that needs detailed debugging on the logger side.

Stefan |

|

2294

|

25 Oct 2021 |

Francesco Renga | Forum | Logger crash | Hello,

I'm experiencing crashes of the mlogger program on the time scale of a couple

of days. The only messages from MIDAS are:

05:34:47.336 2021/10/24 [mhttpd,INFO] Client 'Logger' (PID 14281) on database

'ODB' removed by db_cleanup called by cm_periodic_tasks (idle 10.2s,TO 10s)

05:34:47.335 2021/10/24 [mhttpd,INFO] Client 'Logger' on buffer 'SYSMSG' removed

by cm_periodic_tasks (idle 10.2s, timeout 10s)

Any suggestion to further investigate this issue?

Thank you very much,

Francesco |

|

2293

|

25 Oct 2021 |

Francesco Renga | Forum | mhttpd error | It worked, thank you very much!

Francesco

> > Enable IPv6 y

>

> Probably the IPv6 problem, see here elog:2269

>

> I asked to turn off IPv6 by default, or at least mention this in the documentation,

> but unfortunately nothing happened.

>

> Stefan |

|

2292

|

22 Oct 2021 |

Stefan Ritt | Forum | mhttpd error | > Enable IPv6 y

Probably the IPv6 problem, see here elog:2269

I asked to turn off IPv6 by default, or at least mention this in the documentation,

but unfortunately nothing happened.

Stefan |

|

2291

|

22 Oct 2021 |

Francesco Renga | Forum | mhttpd error | Dear all,

I am trying to make the MIDAS web server for my DAQ project accessible from other machines. In the ODB, I activated the necessary flags:

[local:CYGNUS_RD:S]/WebServer>ls

Enable localhost port y

localhost port 8080

localhost port passwords n

Enable insecure port y

insecure port 8081

insecure port passwords y

insecure port host list y

Enable https port y

https port 8443

https port passwords y

https port host list n

Host list

localhost

Enable IPv6 y

Proxy

mime.types

Following the instructions on the Wiki I enabled the SSL support. When running mhttpd, I get these messages:

Mongoose web server will use HTTP Digest authentication with realm "CYGNUS_RD" and password file "/home/cygno/DAQ/online/htpasswd.txt"

Mongoose web server will use the hostlist, connections will be accepted only from: localhost

Mongoose web server listening on http address "localhost:8080", passwords OFF, hostlist OFF

Mongoose web server listening on http address "[::1]:8080", passwords OFF, hostlist OFF

Mongoose web server listening on http address "8081", passwords enabled, hostlist enabled

[mhttpd,ERROR] [mhttpd.cxx:19166:mongoose_listen,ERROR] Cannot mg_bind address "[::0]:8081"

Mongoose web server will use https certificate file "/home/cygno/DAQ/online/ssl_cert.pem"

Mongoose web server listening on https address "8443", passwords enabled, hostlist OFF

[mhttpd,ERROR] [mhttpd.cxx:19166:mongoose_listen,ERROR] Cannot mg_bind address "[::0]:8443"

and the server is not accessible from other machines. Any suggestion to solve or better investigate this problem?

Thank you very much,

Francesco |

|