| ID | Date | Author | Topic | Subject |

|

263

|

08 Jun 2006 |

Konstantin Olchanski | Bug Fix | fix compilation of musbstd.h, add it back to libmidas |

I fixed the compilation of musbstd.h (it required -DHAVE_LIBUSB on Linux, but

nothing knew about defining it) and put musbstd.o back into libmidas (USB

support should be part of the standard base midas library). K.O. |

|

267

|

09 Jun 2006 |

Stefan Ritt | Bug Fix | fix compilation of musbstd.h, add it back to libmidas |

> I fixed the compilation of musbstd.h (it required -DHAVE_LIBUSB on Linux, but

> nothing knew about defining it) and put musbstd.o back into libmidas (USB

> support should be part of the standard base midas library). K.O.

I'm not so sure about that. One could consider musbstd.o as a driver, and the

philosophy used for midas programs is that drivers get added explicitly when

compiling a frontend. We do not put mvmestd.c and mcstd.c into libmidas since for

different interfaces a different driver might be required. If we at some point use

a USB library other than libusb.a, we would have to compile a different

libmidas for these different drivers.

I know it's convenient to have things in libmidas and not having to specify it

explicitly for each frontend, but it is then somehow inconsistent with drivers

for vme and camac. So please reconsider this again.

- Stefan |

|

2424

|

15 Aug 2022 |

Zaher Salman | Bug Report | firefox hangs due to mhistory |

Firefox is hanging/becoming unresponsive due to javascript code. After stopping the script manually to get firefox back in control I have the following message in the console

17:21:28.821 Script terminated by timeout at:

MhistoryGraph.prototype.drawTAxis@http://lem03.psi.ch:8081/mhistory.js:2828:7

MhistoryGraph.prototype.draw@http://lem03.psi.ch:8081/mhistory.js:1792:9

mhistory.js:2828:7

Any ideas how to resolve this?? |

|

2425

|

15 Aug 2022 |

Stefan Ritt | Bug Report | firefox hangs due to mhistory |

> Firefox is hanging/becoming unresponsive due to javascript code. After stopping the script manually to get firefox back in control I have the following message in the console

>

> 17:21:28.821 Script terminated by timeout at:

> MhistoryGraph.prototype.drawTAxis@http://lem03.psi.ch:8081/mhistory.js:2828:7

> MhistoryGraph.prototype.draw@http://lem03.psi.ch:8081/mhistory.js:1792:9

> mhistory.js:2828:7

>

> Any ideas how to resolve this??

I have to reproduce the problem. Can you send me the full URL from your browser when you see that problem? Probably you have some "special" axis limits, so we don't see that

problem anywhere else.

Stefan |

|

2426

|

16 Aug 2022 |

Zaher Salman | Bug Report | firefox hangs due to mhistory |

> > Firefox is hanging/becoming unresponsive due to javascript code. After stopping the script manually to get firefox back in control I have the following message in the console

> >

> > 17:21:28.821 Script terminated by timeout at:

> > MhistoryGraph.prototype.drawTAxis@http://lem03.psi.ch:8081/mhistory.js:2828:7

> > MhistoryGraph.prototype.draw@http://lem03.psi.ch:8081/mhistory.js:1792:9

> > mhistory.js:2828:7

> >

> > Any ideas how to resolve this??

>

> I have to reproduce the problem. Can you send me the full URL from your browser when you see that problem? Probably you have some "special" axis limits, so we don't see that

> problem anywhere else.

>

> Stefan

Hi Stefan and Konstantin,

The URL (reachable only within PSI) is http://lem03.psi.ch:8081/?cmd=custom&page=Mudas

Firefox is version 91.12.0esr (64-bit), but I had similar issues with chrome/chromium too.

The hangs seem to happen randomly so I have not been able to reproduce it yet.

I have histories here http://lem03.psi.ch:8081/?cmd=custom&page=Mudas&tab=3 (30 minutes each), but I have also histories popping up in modals though they do not cause any issues.

I'll try to reproduce it in the coming few days and report again.

thanks,

Zaher |

|

2427

|

16 Aug 2022 |

Zaher Salman | Bug Report | firefox hangs due to mhistory |

I found the bug. The problem is triggered by changing the firefox window. This calls a function that is supposed to change the size of the history plot and it works well when the history plots are visible but not if the history plots are hidden in a javascript tab (not another firefox tab).

Is there a clean way to resize the history plot if the parent div changes size?? The offending code is

mhist[i].mhg = new MhistoryGraph(mhist[i]);

mhist[i].mhg.initializePanel(i);

mhist[i].mhg.resize();

mhist[i].resize = function () {

mhis.mhg.resize();

}; |

|

2428

|

16 Aug 2022 |

Konstantin Olchanski | Bug Report | firefox hangs due to mhistory |

> > > Firefox is hanging/becoming unresponsive due to javascript code.

>

> The URL (reachable only within PSI) is http://lem03.psi.ch:8081/?cmd=custom&page=Mudas

so malfunction is not in the midas history page, but in a custom page. I could help you debug it,

but you would have to provide the complete source code (javascript and html).

> Firefox is version 91.12.0esr (64-bit), but I had similar issues with chrome/chromium too.

my firefox is 103.something. when you say google-chrome has "similar issues",

I read it as "google-chrome does not show this same bug, but shows some other

bug somewhere else". (if I misread you, you have to write better).

but this gives you a front to attack your bugs. basically all browsers should render your

custom page exactly the same (unless you use some obscure or experimental feature, which I

recommend against).

so you tweak your page to identify the source of different rendering results, and try to eliminate it,

hopefully by the time you get your page render exactly the same everywhere, all the real bugs

have gotten shaken out, too. (this is similar to debugging a c++ program by compiling

it on linux, mac, windows, vax, raspberry pi, etc and checking that you get the same result everywhere).

> The hangs seem to happen randomly so I have not been able to reproduce it yet.

I find that javascript debuggers are not setup to debug hangs. I think debugger runs partially

inside the same javascript engine you are debugging, so both hang and debugging is impossible.

(latest google-chrome has another improvement, all pages from the same computer run in the same

javascript engine, so if one midas page stops (on exception or because I debug it), all midas pages

stop and I have to run two different browsers if I want to debug (i.e.) a history page crash

and look at odb at the same time. fun).

K.O. |

|

2429

|

17 Aug 2022 |

Stefan Ritt | Bug Report | firefox hangs due to mhistory |

The problem lies in your function mhistory_init_one() in Mudas.js:1965. You can only call "new MhistoryGraph(e)" with an element "e" which is something like

<div class="mjshistory" data-group="..." data-panel="..." data-base-u-r-l="https://host.psi.ch/?cmd=history" title="">

Please note the "data-base-u-r-l". This gets automatically added by the function mhistory_init() in mhistory.js:48. The URL is necessary so that the upper right button in a history graph works, which goes to a history page showing only the current graph.

In your function mhistory_init_one() you forgot the call

mhist.dataset.baseURL = baseURL;

where baseURL has to come from the current address bar like

let baseURL = window.location.href;

if (baseURL.indexOf("?cmd") > 0)

baseURL = baseURL.substr(0, baseURL.indexOf("?cmd"));

baseURL += "?cmd=history";

If you duplicate some functionality from mhistory.js, please make sure to duplicate it completely.

Best,

Stefan |

|

2430

|

17 Aug 2022 |

Zaher Salman | Bug Report | firefox hangs due to mhistory |

> The problem lies in your function mhistory_init_one() in Mudas.js:1965. You can only call "new MhistoryGraph(e)" with an element "e" which is something like

>

> <div class="mjshistory" data-group="..." data-panel="..." data-base-u-r-l="https://host.psi.ch/?cmd=history" title="">

>

> Please note the "data-base-u-r-l". This gets automatically added by the function mhistory_init() in mhistory.js:48. The URL is necessary so that the upper right button in a history graph works, which goes to a history page showing only the current graph.

>

> In your function mhistory_init_one() you forgot the call

>

> mhist.dataset.baseURL = baseURL;

>

> where baseURL has to come from the current address bar like

>

> let baseURL = window.location.href;

> if (baseURL.indexOf("?cmd") > 0)

> baseURL = baseURL.substr(0, baseURL.indexOf("?cmd"));

> baseURL += "?cmd=history";

>

> If you duplicate some functionality from mhistory.js, please make sure to duplicate it completely.

>

Thanks Stefan, but this was not the problem since I am setting the baseURL. You may have looked at the code during my debugging.

Some of my histories are placed in an IFrame object. I eventually realized that my code fails when it tries to resize a history which is placed in an invisible IFrame. I resolved the issue by making sure that I am resizing plots only if they are in a visible IFrame.
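
The guard described above can be sketched roughly as follows. This is only an illustration, not the actual Mudas.js code: `hasLayoutSize` and `resizeVisiblePlots` are hypothetical helpers, and `container` is an assumed property pointing at the element (div or IFrame) that holds each plot. It relies on the fact that an element hidden with `display: none` reports an `offsetWidth` and `offsetHeight` of zero; an element hidden with `visibility: hidden` keeps its size and would need a different check.

```javascript
// Hypothetical helper (not part of mhistory.js): true if the element
// currently occupies space in the page layout.
function hasLayoutSize(el) {
  return Boolean(el) && el.offsetWidth > 0 && el.offsetHeight > 0;
}

// Resize only the plots whose container is laid out and return how many
// plots were resized. "container" is an assumed property name.
function resizeVisiblePlots(plots) {
  let resized = 0;
  for (const plot of plots) {
    if (plot.mhg && hasLayoutSize(plot.container)) {
      plot.mhg.resize();
      resized += 1;
    }
  }
  return resized;
}
```

In a page, something like this would presumably be called from the window resize handler and once more whenever a hidden tab or IFrame becomes visible, so that plots skipped earlier pick up the new size.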

|

|

2432

|

17 Aug 2022 |

Stefan Ritt | Bug Report | firefox hangs due to mhistory |

> Some of my histories are placed in an IFrame object. I eventually realized that my code fails

> when it tries to resize a history which is placed in an invisible IFrame. I resolved the issue

> by making sure that I am resizing plots only if they are in a visible IFrame.

Just to be clear: You could resolve everything on your side, or do you need to change anything in mhistory.js?

Just a tip: IFrames are not good to put anything in. I recommend just dynamically creating a <div> element,

appending it to the document body, and making it floating and initially invisible. Then put everything inside that div. Have

a look at how control.js does it. This takes fewer resources than a complete IFrame and is much easier to handle.
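
A minimal sketch of that approach is shown below. It is not taken from control.js: `createFloatingPanel` and `showPanel` are hypothetical names, and the styling is reduced to the two properties the tip mentions (floating, initially invisible). The `doc` argument is normally the global `document`; it is passed in explicitly only so the function can be exercised outside a browser.

```javascript
// Sketch only: create a floating, initially hidden <div> and attach it
// to the document body. "doc" is normally the global "document".
function createFloatingPanel(doc, id) {
  const panel = doc.createElement("div");
  panel.id = id;
  panel.style.position = "fixed"; // floats above the page content
  panel.style.display = "none";   // initially invisible
  doc.body.appendChild(panel);
  return panel;
}

// Make the panel visible once its content has been placed inside it.
function showPanel(panel) {
  panel.style.display = "block";
}
```

A history graph would then be placed inside the returned element instead of inside an IFrame, and the panel shown on demand.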

Stefan |

|

2775

|

21 May 2024 |

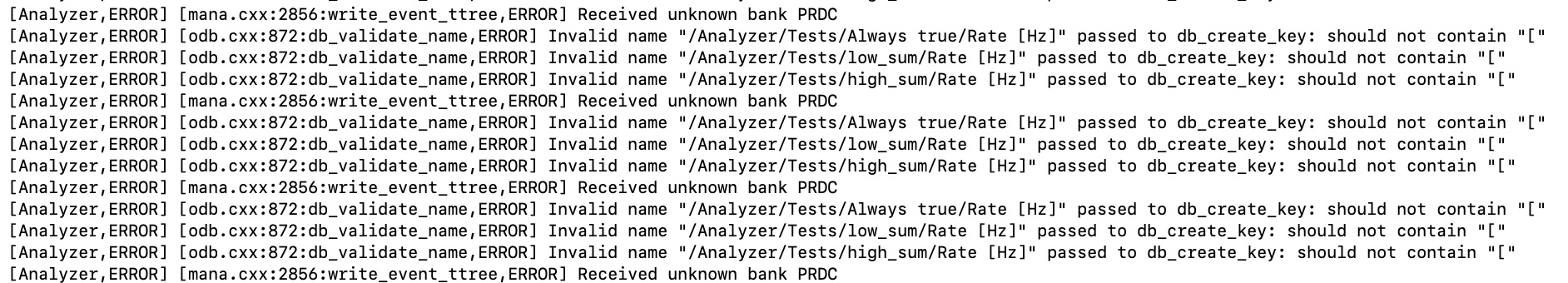

Nikolay | Bug Report | experiment from midas/examples |

There are 2 bugs in midas/examples/experiment:

1) In the frontend, a bank named "PRDC" is created for the scaler event, but the analyzer

module scaler.cxx looks for a bank named "SCLR" in the same event.

2) mana.cxx, which is linked from analyzer.cxx, reports: Invalid name "/Analyzer/Tests/Always

true/Rate [Hz]" passed to db_create_key: should not contain "[".

It looks like ODB does not accept the '[' and ']' characters.

| Attachment 1: analyzer.jpg

|

|

|

2516

|

16 May 2023 |

Konstantin Olchanski | Bug Report | excessive logging of http requests |

Our default configuration of apache httpd logs every request. MIDAS custom web pages can easily make a huge number of RPC calls creating a

huge log file and filling system disk to 100% capacity.

this example has around 100 RPC requests per second. reasonable/unreasonable? available hardware can handle it (web browser, network,

httpd, mhttpd, etc), so we should try to get this to work. perhaps filter the apache httpd logs to exclude mjsonrpc requests? of course we

can ask the affected experiment why they make so many RPC calls, is there a bug?

[14/May/2023:03:49:01 -0700] 142.90.111.176 TLSv1.2 ECDHE-RSA-AES256-GCM-SHA384 "POST /?mjsonrpc HTTP/1.1" 299

[14/May/2023:03:49:01 -0700] 142.90.111.176 TLSv1.2 ECDHE-RSA-AES256-GCM-SHA384 "POST /?mjsonrpc HTTP/1.1" 299

[14/May/2023:03:49:01 -0700] 142.90.111.176 TLSv1.2 ECDHE-RSA-AES256-GCM-SHA384 "POST /?mjsonrpc HTTP/1.1" 299

[14/May/2023:03:49:01 -0700] 142.90.111.176 TLSv1.2 ECDHE-RSA-AES256-GCM-SHA384 "POST /?mjsonrpc HTTP/1.1" 299

[14/May/2023:03:49:01 -0700] 142.90.111.176 TLSv1.2 ECDHE-RSA-AES256-GCM-SHA384 "POST /?mjsonrpc HTTP/1.1" 299

K.O. |

|

2517

|

16 May 2023 |

Konstantin Olchanski | Bug Report | excessive logging of http requests |

> Our default configuration of apache httpd logs every request. MIDAS custom web pages can easily make a huge number of RPC calls creating a

> huge log file and filling system disk to 100% capacity

perhaps use existing logrotate, add limit on file size (size) and limit of 2 old log files (rotate).

/etc/logrotate.d/httpd

/var/log/httpd/*log {

size 100M

rotate 2

missingok

notifempty

sharedscripts

delaycompress

postrotate

/bin/systemctl reload httpd.service > /dev/null 2>/dev/null || true

endscript

}

K.O. |

|

2518

|

16 May 2023 |

Stefan Ritt | Bug Report | excessive logging of http requests |

Maybe you remember the problems we had with a custom page in Japan loading it from TRIUMF. It took almost one minute since each RPC request took

about 1s round-trip. This got fixed by the modb* scheme where the framework actually collects all ODB variables in a custom page and puts them

into ONE rpc request (making the path an actual array of paths). That reduced the requests from 100 to 1 in the above example. Maybe the same

could be done in your current case. Pulling one ODB variable at a time is not very efficient.
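
The idea of collecting many ODB paths into one request can be sketched as below. This only builds the JSON-RPC 2.0 request body; the method name `db_get_values` and the `{ paths: [...] }` parameter shape are assumptions about the mjsonrpc interface and should be checked against the mjsonrpc schema of the installed MIDAS version. `buildBatchedOdbRequest` is a hypothetical helper, and the ODB paths are illustrative.

```javascript
// Build ONE JSON-RPC 2.0 request that asks for many ODB paths at once.
// Assumption: the mjsonrpc method is "db_get_values" and its parameter
// object carries an array of ODB paths under "paths".
function buildBatchedOdbRequest(paths, id) {
  return {
    jsonrpc: "2.0",
    id: id,
    method: "db_get_values",
    params: { paths: paths },
  };
}

// Three values fetched with a single round trip instead of three.
const request = buildBatchedOdbRequest(
  ["/Runinfo/State", "/Runinfo/Run number", "/Experiment/Name"],
  1
);
console.log(JSON.stringify(request));
```

With a round trip of about 1 s, as in the example above, the cost then scales with the number of requests rather than with the number of ODB variables on the page.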

Stefan |

|

2568

|

02 Aug 2023 |

Konstantin Olchanski | Bug Report | excessive logging of http requests |

> > Our default configuration of apache httpd logs every request. MIDAS custom web pages can easily make a huge number of RPC calls creating a

> > huge log file and filling system disk to 100% capacity

> perhaps use existing logrotate, add limit on file size (size) and limit of 2 old log files (rotate).

logrotate was ineffective.

following apache httpd config seems to disable logging of mjsonrpc requests. note that we cannot filter on the "mjsonrpc" string because

Request_URI excludes the query string (ouch!).

#SetEnvIf Request_URI "^POST /?mjsonrpc.*" nolog

SetEnvIf Request_Method "POST" envpost

SetEnvIf Request_URI "^\/$" envuri

SetEnvIfExpr "-T reqenv('envpost') && -T reqenv('envuri')" envnolog

CustomLog logs/ssl_request_log "%t %h %{SSL_PROTOCOL}x %{SSL_CIPHER}x \"%r\" %b" env=!envnolog
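
To illustrate why the filter matches on the method plus the bare path instead of the "mjsonrpc" string, here is a small model of the matching logic. It is not Apache code: `wouldSkipLogging` is a hypothetical function that mirrors the two SetEnvIf conditions above, and `host.example` is a placeholder host name.

```javascript
// Model of the two conditions: the request method is POST and the path
// (without the query string) is exactly "/".
function wouldSkipLogging(method, url) {
  const parsed = new URL(url, "https://host.example");
  return method === "POST" && parsed.pathname === "/";
}

// The query string is not part of the path, so a path-only match never
// sees "mjsonrpc".
const u = new URL("https://host.example/?mjsonrpc");
console.log(u.pathname); // "/"
console.log(u.search);   // "?mjsonrpc"
```

One consequence of this scheme is that any other POST request to "/" would be excluded from the log as well.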

K.O. |

|

2573

|

03 Aug 2023 |

Konstantin Olchanski | Bug Report | excessive logging of http requests |

> > > Our default configuration of apache httpd logs every request. MIDAS custom web pages can easily make a huge number of RPC calls creating a

> > > huge log file and filling system disk to 100% capacity

> > perhaps use existing logrotate, add limit on file size (size) and limit of 2 old log files (rotate).

>

> CustomLog logs/ssl_request_log "%t %h %{SSL_PROTOCOL}x %{SSL_CIPHER}x \"%r\" %b" env=!envnolog

>

TransferLog is not conditional and has to be commented out to stop logging every jsonrpc request.

K.O. |

|

2581

|

14 Aug 2023 |

Konstantin Olchanski | Bug Report | excessive logging of http requests |

> Our default configuration of apache httpd logs every request.

> MIDAS custom web pages can easily make a huge number of RPC calls creating a

> huge log file and filling system disk to 100% capacity.

close but no cigar. mhttpd is not running and /var/log got filled to 100% capacity by http error messages. I do not see any apache facility to filter

error messages, hmm...

-rw-r--r-- 1 root root 1864421376 Aug 14 12:53 ssl_error_log

[Sun Aug 13 23:53:12.416247 2023] [proxy:error] [pid 18608] AH00940: HTTP: disabled connection for (localhost)

[Sun Aug 13 23:53:12.416538 2023] [proxy:error] [pid 19686] AH00940: HTTP: disabled connection for (localhost)

[Sun Aug 13 23:53:12.416603 2023] [proxy:error] [pid 19681] AH00940: HTTP: disabled connection for (localhost)

[Sun Aug 13 23:53:12.416775 2023] [proxy:error] [pid 19588] AH00940: HTTP: disabled connection for (localhost)

[Sun Aug 13 23:53:12.417022 2023] [proxy:error] [pid 19311] AH00940: HTTP: disabled connection for (localhost)

[Sun Aug 13 23:53:12.421864 2023] [proxy:error] [pid 18620] AH00940: HTTP: disabled connection for (localhost)

[Sun Aug 13 23:53:12.422051 2023] [proxy:error] [pid 19693] AH00940: HTTP: disabled connection for (localhost)

[Sun Aug 13 23:53:12.422199 2023] [proxy:error] [pid 19673] AH00940: HTTP: disabled connection for (localhost)

[Sun Aug 13 23:53:12.422222 2023] [proxy:error] [pid 18608] AH00940: HTTP: disabled connection for (localhost)

[Sun Aug 13 23:53:12.422230 2023] [proxy:error] [pid 19657] AH00940: HTTP: disabled connection for (localhost)

[Sun Aug 13 23:53:12.422259 2023] [proxy:error] [pid 18633] AH00940: HTTP: disabled connection for (localhost)

[Sun Aug 13 23:53:12.427513 2023] [proxy:error] [pid 19686] AH00940: HTTP: disabled connection for (localhost)

[Sun Aug 13 23:53:12.427549 2023] [proxy:error] [pid 19681] AH00940: HTTP: disabled connection for (localhost)

[Sun Aug 13 23:53:12.427645 2023] [proxy:error] [pid 19588] AH00940: HTTP: disabled connection for (localhost)

[Sun Aug 13 23:53:12.427774 2023] [proxy:error] [pid 19693] AH00940: HTTP: disabled connection for (localhost)

[Sun Aug 13 23:53:12.427800 2023] [proxy:error] [pid 18620] AH00940: HTTP: disabled connection for (localhost)

K.O. |

|

2587

|

16 Aug 2023 |

Konstantin Olchanski | Bug Report | excessive logging of http requests |

> > Our default configuration of apache httpd logs every request.

> > MIDAS custom web pages can easily make a huge number of RPC calls creating a

> > huge log file and filling system disk to 100% capacity.

added "daily" to /etc/logrotate.d/httpd, default was "weekly", not often enough.

K.O. |

|

2592

|

17 Aug 2023 |

Konstantin Olchanski | Bug Report | excessive logging of http requests |

> > > Our default configuration of apache httpd logs every request.

> > > MIDAS custom web pages can easily make a huge number of RPC calls creating a

> > > huge log file and filling system disk to 100% capacity.

> added "daily" to /etc/logrotate.d/httpd, default was "weekly", not often enough.

this should fix it good, make /var/log bigger:

[root@mpmt-test ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/sdc2 52403200 52296356 106844 100% /

[root@mpmt-test ~]#

[root@mpmt-test ~]# xfs_growfs /

data blocks changed from 13107200 to 106367750

[root@mpmt-test ~]#

[root@mpmt-test ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/sdc2 425445400 52300264 373145136 13% /

K.O. |