ID | Date | Author | Topic | Subject

2190 | 31 May 2021 | Stefan Ritt | Info | MidasConfig.cmake usage

MidasConfig.cmake might at some point get included in the standard CMake installation (or some add-on). It will then reside in the CMake system path and you don't have to know explicitly where it is. A plain find_package(Midas) will then be enough.

Even if it's not there, find_package() is the "traditional" way CMake discovers external packages, and users are used to it (ROOT does the same). In comparison, your "midas-targets.cmake" approach, although it certainly works fine, is not the "standard" way but a MIDAS-specific solution that other people have to learn in addition.

Stefan

2189 | 28 May 2021 | Joseph McKenna | Suggestion | Have a list of 'users responsible' in Alarms and Programs odb entries

I've updated the branch / pull request to use an array of 10 entries (80 chars each). 32 felt a little overkill when I saw it on screen, but I'm absolutely happy to set it to any number you recommend.

The array gets flattened out when an alarm is triggered; currently the formatting produces:

AlarmClass : AlarmMessage (Flattened List Of Users Responsible Array With Space Separators)
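A minimal Python sketch of the flattening just described, assuming unused array slots are empty strings and that entries are joined with single spaces. The function name `format_alarm_message` is hypothetical; the real formatting happens in MIDAS's C++ alarm code, which this does not reproduce.

```python
def format_alarm_message(alarm_class, alarm_message, users_responsible):
    """Hypothetical sketch: flatten a 'users responsible' array into an alarm message.

    users_responsible models the fixed-size ODB string array (e.g. 10 entries);
    empty (unused) entries are skipped and the rest are joined with spaces.
    """
    users = [u.strip() for u in users_responsible if u.strip()]
    text = f"{alarm_class} : {alarm_message}"
    if users:
        # Append the flattened list in brackets, as in the format shown above
        text += " (" + " ".join(users) + ")"
    return text


if __name__ == "__main__":
    slots = ["@alice", "<@123456789012345678>", ""] + [""] * 7  # 10 slots, 8 unused
    print(format_alarm_message("Alarm", "Vacuum pressure too high", slots))
    # Alarm : Vacuum pressure too high (@alice <@123456789012345678>)
```

Skipping empty slots keeps the bracketed list short even though the ODB array itself has a fixed length.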

If experiments want to use Discord / Slack / Mattermost tags and/or add phone numbers, that should fit in 80 characters.

2188 | 28 May 2021 | Stefan Ritt | Suggestion | Have a list of 'users responsible' in Alarms and Programs odb entries

> > > I can still make this an array and pass a std::vector<std::string> into

> > > al_trigger_class function?

> >

> > Maybe 256 chars are enough at the moment. If other people complain in the future, we can

> > re-visit.

> >

> > Stefan

>

> Thinking about it, an array of maybe 80 character would give enough space for a name, a tag

> and phone number. Do I need to budget memory very strictly? Would 32 entries of 80

> characters be too much?

At that level, memory is cheap.
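For scale, assuming one byte per character and ignoring any per-key ODB overhead, the per-alarm cost of the array sizes discussed in this thread is:

$$
32 \times 80\ \text{B} = 2560\ \text{B} = 2.5\ \text{KiB}, \qquad 10 \times 80\ \text{B} = 800\ \text{B}
$$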

Stefan

2187 | 28 May 2021 | Konstantin Olchanski | Info | MidasConfig.cmake usage

> > Does anybody actually use "find_package(midas)", does it actually work for anybody?

>

> What we do is to include midas as a submodule and than we call find_package:

>

> add_subdirectory(midas)

> list(APPEND CMAKE_PREFIX_PATH ${CMAKE_CURRENT_SOURCE_DIR}/midas)

> find_package(Midas REQUIRED)

>

> For us it works fine like this but we kind of always compile Midas fresh and don't use a version on our system (keeping the newest version).

>

> Without the find_package the build does not work for us.

Ok, I see. I now think that for us, this "find_package" business is an unnecessary complication: since one has to know where MIDAS is in order to add it to CMAKE_PREFIX_PATH, one might as well import the MIDAS targets directly with include(.../midas/lib/midas-targets.cmake).

From what I see now, the CMake file is much simplified by converting it from the "find_package(midas)"-style MIDAS_INCLUDES & co. to the more CMake-ish target_link_libraries(myexe midas): all the compiler switches, include paths, dependent libraries and other gunk are handled by CMake automatically.

I am not touching the "find_package(midas)" business, so it should continue to work, then.

K.O.

2186 | 28 May 2021 | Marius Koeppel | Info | MidasConfig.cmake usage

> Does anybody actually use "find_package(midas)", does it actually work for anybody?

What we do is include MIDAS as a submodule and then call find_package:

```cmake
add_subdirectory(midas)
list(APPEND CMAKE_PREFIX_PATH ${CMAKE_CURRENT_SOURCE_DIR}/midas)
find_package(Midas REQUIRED)
```

For us it works fine like this, but we always compile MIDAS fresh rather than using a version installed on our system (so we keep the newest version).

Without the find_package the build does not work for us.

2185 | 28 May 2021 | Konstantin Olchanski | Info | MidasConfig.cmake usage

How does "find_package (Midas REQUIRED)" find the location of MIDAS?

As best I can tell from the current code, the package config files are installed somewhere inside $MIDASSYS, and I see "find_package MIDAS" never find them (indeed, find_package() does not know about $MIDASSYS, so it would have to use telepathy or something).

Does anybody actually use "find_package(midas)", does it actually work for anybody?

Also, it appears that "the CMake way" of importing packages is to use the install(EXPORT) method.

In this scheme, the user package does this:

```cmake
include(${MIDASSYS}/lib/midas-targets.cmake)
target_link_libraries(myprogram PUBLIC midas)
```

This causes all the MIDAS include directories (including mxml, etc.) and dependency libraries (-lutil, -lpthread, etc.) to be added automatically to the compilation and linking of "myprogram".

Of course, MIDAS has to generate a sensible targets export file; I am working on it now.

K.O.

2184 | 28 May 2021 | Joseph McKenna | Suggestion | Have a list of 'users responsible' in Alarms and Programs odb entries

> > I can still make this an array and pass a std::vector<std::string> into

> > al_trigger_class function?

>

> Maybe 256 chars are enough at the moment. If other people complain in the future, we can

> re-visit.

>

> Stefan

Thinking about it, an array of entries of maybe 80 characters each would give enough space for a name, a tag and a phone number. Do I need to budget memory very strictly? Would 32 entries of 80 characters be too much?

2183 | 28 May 2021 | Stefan Ritt | Suggestion | Have a list of 'users responsible' in Alarms and Programs odb entries

> I can still make this an array and pass a std::vector<std::string> into

> al_trigger_class function?

Maybe 256 chars are enough at the moment. If other people complain in the future, we can revisit.

Stefan

2182 | 28 May 2021 | Joseph McKenna | Suggestion | Have a list of 'users responsible' in Alarms and Programs odb entries

> I think this is a good idea and I support it. We have a similar problem in MEG and

> we solved that with external (bash) scripts called in case of alarms. One feature

> there we have is that for some alarms, several people want to get notified. So

> people can "subscribe" to certain alarms. The subscription are now handled inside

> Slack which I like better, but maybe it would be good to have more than one "user

> responsible". Like if one person is sleeping/traveling, it's good to have a

> substitute. Can you make an array out of that? Or a comma-separated list?

>

> Best,

> Stefan

Presently there are 256 characters in the 'users responsible' field, so you can just list many users (with no separator, spaces, commas, whatever). Discord, Slack and Mattermost don't care; they just parse the user tags.

I can still make this an array and pass a std::vector<std::string> into

al_trigger_class function? |

|

2181

|

28 May 2021 |

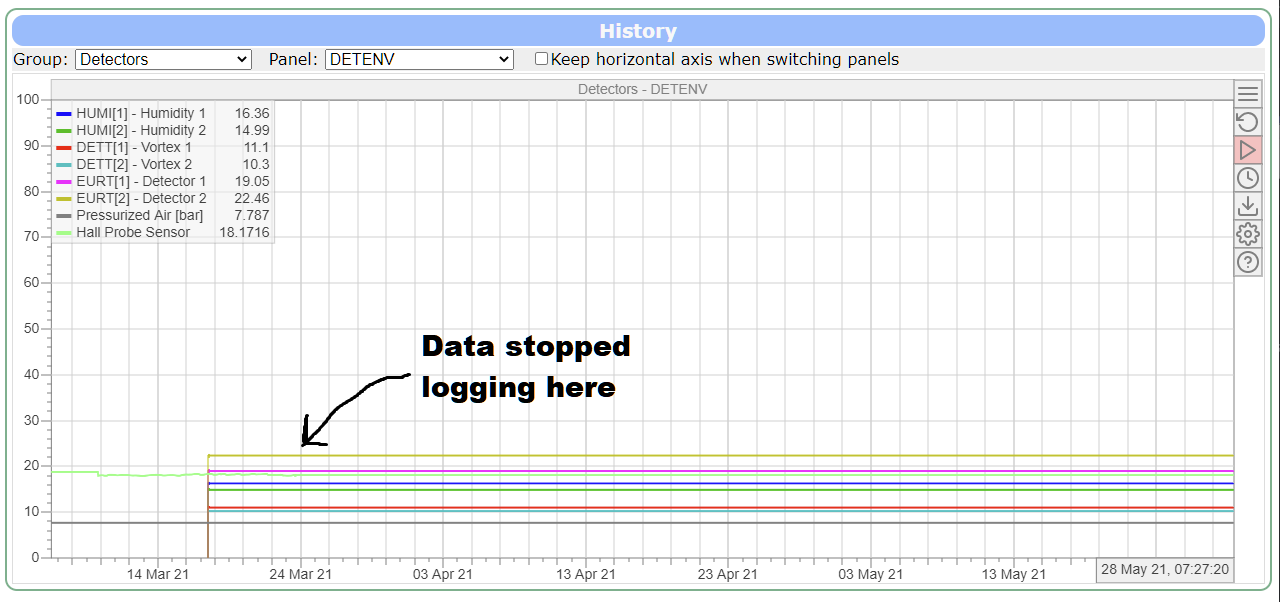

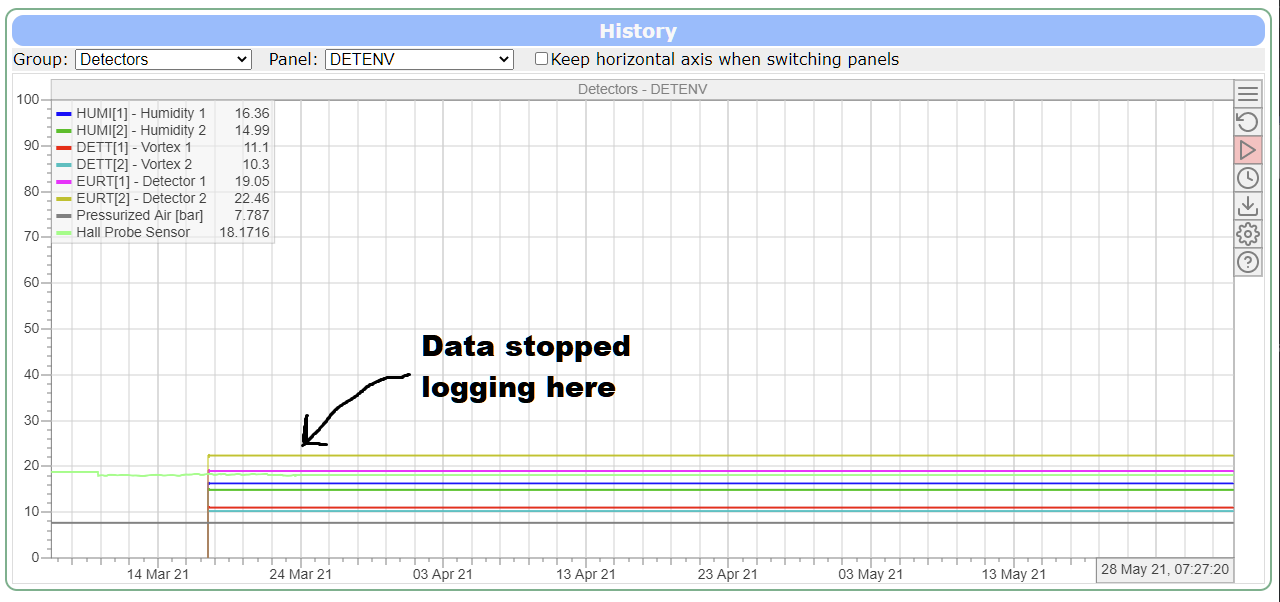

Stefan Ritt | Bug Report | History plots deceiving users into thinking data is still logging |

This is a known problem and I'm working on it. See the discussion at:

https://bitbucket.org/tmidas/midas/issues/305/log_history_periodic-doesnt-account-for

Stefan

2180 | 28 May 2021 | Joseph McKenna | Bug Report | History plots deceiving users into thinking data is still logging

I have been trying to fix this myself, but my JavaScript isn't strong... The 'new' history plot renderer fills in missing data with the last ODB value (even when this value is very old)...

elog:2180/1 shows this... The data logging stopped, but the history plot can fool users into thinking data is still being logged (the export button also generates CSVs with entries every 10 seconds). Grepping through the history files behind the scenes, I found only one match for an example variable from this plot, so it looks like there are no entries after March 24th (although I may be mistaken; I've not studied the history file data structure in detail), i.e. this is an artifact of mhistory.js rather than of mlogger...

Have I missed something simple?

Would it be possible to not draw the line if there are no data points for a significant time? Or maybe render a dashed line that doesn't export to CSV?
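mhistory.js is JavaScript and its internals aren't shown in this thread, so the following is only a language-neutral sketch, written in Python, of the first suggestion: break the plotted line wherever consecutive samples are further apart than some threshold. The `split_on_gaps` helper and its `max_gap` threshold (for example a few times the expected logging period) are assumptions for illustration, not existing MIDAS code or settings.

```python
def split_on_gaps(points, max_gap):
    """Split time-sorted (t, value) samples into segments at large gaps.

    A new segment starts whenever two consecutive timestamps are more than
    max_gap apart, so a plot that draws each segment separately shows a
    break instead of a flat line through the period with no data.
    """
    segments = []
    current = []
    last_t = None
    for t, v in points:
        if last_t is not None and t - last_t > max_gap:
            segments.append(current)
            current = []
        current.append((t, v))
        last_t = t
    if current:
        segments.append(current)
    return segments


if __name__ == "__main__":
    # Samples every 10 s, then nothing until t = 5000 s
    samples = [(0, 1.0), (10, 1.1), (20, 1.2), (5000, 1.3)]
    print([len(s) for s in split_on_gaps(samples, max_gap=60)])  # [3, 1]
```

The dashed-line variant would amount to drawing the joins between segments in a different style while exporting only the real samples to CSV.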

Thanks in advance

Edit: I see certificate errors on this forum and I think they are preventing me from uploading an image... inlining it into the text here:

Attachment 1: flatline.png

Attachment 2: flatline.png

2179 | 28 May 2021 | Stefan Ritt | Suggestion | Have a list of 'users responsible' in Alarms and Programs odb entries

I think this is a good idea and I support it. We have a similar problem in MEG, and we solved it with external (bash) scripts called in case of alarms. One feature we have there is that for some alarms, several people want to get notified, so people can "subscribe" to certain alarms. The subscriptions are now handled inside Slack, which I like better, but maybe it would be good to have more than one "user responsible": if one person is sleeping or traveling, it's good to have a substitute. Can you make an array out of that? Or a comma-separated list?

Best,

Stefan

2178 | 28 May 2021 | Joseph McKenna | Suggestion | Have a list of 'users responsible' in Alarms and Programs odb entries

There have been times in ALPHA when an alarm is triggered and the shift crew are unclear whom to contact if they aren't trained to fix the specific failure mode.

I wish to add the property 'Users responsible' to the ODB for Alarms and Programs.

I have drafted what this might look like in a new pull request:

https://bitbucket.org/tmidas/midas/pull-requests/22/add-users-responsible-field-for-specific

It requires changing several data structures. I think I have found all instances of the definitions, so the ODB should 'repair' any of the old structures by adding in 'users responsible'.

If 'Users responsible' is set, MIDAS messages append them after the message in brackets '()'. If used in conjunction with the MIDAS messenger (mmessenger), the users responsible can be 'tagged' directly.

I.e., for Slack, simply set 'users responsible' to <@UserID|Nickname>, for Mattermost to '@username', and for Discord to '<@userid>'. Note that Discord doesn't allow you to tag by username, only by numeric user ID.
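The three tag formats listed above can be captured in a small helper. This is a hypothetical Python sketch (the name `mention` is not part of MIDAS or mmessenger); the fallback to a plain `<@UserID>` for Slack when no nickname is given is an assumption beyond the formats quoted above.

```python
def mention(platform, user, nickname=None):
    """Hypothetical helper: build a 'users responsible' tag for one platform.

    Formats follow the message above: Slack <@UserID|Nickname>,
    Mattermost @username, Discord <@userid> (numeric ID only).
    """
    if platform == "slack":
        # Assumption: without a nickname, fall back to the plain <@UserID> form
        return f"<@{user}|{nickname}>" if nickname else f"<@{user}>"
    if platform == "mattermost":
        return f"@{user}"
    if platform == "discord":
        # Discord cannot tag by username, so insist on a numeric user ID
        if not user.isdigit():
            raise ValueError("Discord tags need a numeric user ID")
        return f"<@{user}>"
    raise ValueError(f"unknown platform: {platform!r}")


if __name__ == "__main__":
    tags = [mention("mattermost", "alice"), mention("discord", "123456789012345678")]
    print(" ".join(tags))  # @alice <@123456789012345678>
```

Each resulting tag is short enough to fit in one 80-character entry of the 'users responsible' array, with room left for a phone number.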

I have expanded the char array in 'al_trigger_class' to handle the potentially longer MIDAS messages. Since I'm touching these lines anyway, perhaps I should change these temporary containers to std::string (lines 383 and 386 of alarm.cxx)?

I have tested this quite a bit on my system; I am not sure how I can test mjsonrpc.

2177 | 28 May 2021 | Joseph McKenna | Info | MIDAS Messenger - A program to forward MIDAS messages to Discord, Slack and or Mattermost merged

A simple program to forward MIDAS messages to Discord, Slack and/or Mattermost (Python 3 required).

Pull request accepted! Documentation can be found on the wiki:

https://midas.triumf.ca/MidasWiki/index.php/Mmessenger

2176 | 27 May 2021 | Nick Hastings | Bug Report | Wrong location for mysql.h on our Linux systems

Hi,

> with the recent fix of the CMakeLists.txt, it seems like another bug surfaced.
> In midas/progs/mlogger.cxx:48/49, the mysql header files are included without a
> prefix. However, mysql.h and mysqld_error.h are in a subdirectory, so for our
> systems, the lines should be
> 48 #include <mysql/mysql.h>
> 49 #include <mysql/mysqld_error.h>
> This is the case with MariaDB 10.5.5 on OpenSuse Leap 15.2, MariaDB 10.5.5 on
> Fedora Workstation 34 and MySQL 5.5.60 on Raspbian 10.
>
> If this problem occurs for other Linux/MySQL versions as well, it should be
> fixed in mlogger.cxx and midas/src/history_schema.cxx.
> If this problem only occurs on some distributions or MySQL versions, it needs
> some more differentiation than #ifdef OS_UNIX.

What does "mariadb_config --cflags" or "mysql_config --cflags" return on these systems? For MariaDB 10.3.27 on Debian 10 it returns both paths:

```
% mariadb_config --cflags
-I/usr/include/mariadb -I/usr/include/mariadb/mysql
```

Note also that mysql.h and mysqld_error.h reside in /usr/include/mariadb, *not* /usr/include/mariadb/mysql, so using "#include <mysql/mysql.h>" would not work.

On CentOS 7 with mariadb 5.5.68:

```
% mysql_config --include
-I/usr/include/mysql
% ls -l /usr/include/mysql/mysql*.h
-rw-r--r--. 1 root root 38516 May 6 2020 /usr/include/mysql/mysql.h
-r--r--r--. 1 root root 76949 Oct 2 2020 /usr/include/mysql/mysqld_ername.h
-r--r--r--. 1 root root 28805 Oct 2 2020 /usr/include/mysql/mysqld_error.h
-rw-r--r--. 1 root root 24717 May 6 2020 /usr/include/mysql/mysql_com.h
-rw-r--r--. 1 root root 1167 May 6 2020 /usr/include/mysql/mysql_embed.h
-rw-r--r--. 1 root root 2143 May 6 2020 /usr/include/mysql/mysql_time.h
-r--r--r--. 1 root root 938 Oct 2 2020 /usr/include/mysql/mysql_version.h
```

So this seems to be the correct setup for both Debian and RHEL. If this is to be worked around in MIDAS, I think it would be better to do it at the CMake level than by putting another #ifdef in the code.
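To illustrate the check that such a build-level workaround would need to make, here is a small Python sketch (not MIDAS code; both function names are hypothetical) that takes the -I directories reported by mariadb_config/mysql_config and reports whether mysql.h resolves as `<mysql.h>` or as `<mysql/mysql.h>`. Inside CMake itself, the analogous probe could be done with find_path().

```python
import os
import shlex


def include_dirs_from_cflags(cflags):
    """Return the directories given with -I in a compiler-flag string."""
    return [tok[2:] for tok in shlex.split(cflags) if tok.startswith("-I") and len(tok) > 2]


def mysql_include_spelling(include_dirs, header="mysql.h"):
    """Return the #include spelling that resolves against include_dirs, or None.

    The unprefixed form (as currently used in mlogger.cxx) is tried first,
    then the mysql/ subdirectory form.
    """
    for d in include_dirs:
        if os.path.isfile(os.path.join(d, header)):
            return f"<{header}>"
    for d in include_dirs:
        if os.path.isfile(os.path.join(d, "mysql", header)):
            return f"<mysql/{header}>"
    return None


if __name__ == "__main__":
    # Debian 10 / MariaDB 10.3.27 flags quoted above
    dirs = include_dirs_from_cflags("-I/usr/include/mariadb -I/usr/include/mariadb/mysql")
    print(dirs)  # ['/usr/include/mariadb', '/usr/include/mariadb/mysql']
    print(mysql_include_spelling(dirs))  # depends on the headers installed locally
```

With the flags from mariadb_config or mysql_config, the unprefixed include resolves on both systems shown above, which is consistent with keeping the code as-is and fixing the include path instead.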

Cheers,

Nick.

2175 | 27 May 2021 | Joseph McKenna | Info | MIDAS Messenger - A program to send MIDAS messages to Discord, Slack and or Mattermost

I have created a simple program that parses the message buffer in MIDAS and sends notifications by webhook to Discord, Slack and/or Mattermost.
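mmessenger's own code isn't shown here. The following Python sketch only illustrates the webhook side under one assumption: each service reads a plain-text message from a single JSON field ("content" for Discord, "text" for Slack and Mattermost incoming webhooks). The function name `webhook_payload` is hypothetical.

```python
def webhook_payload(platform, text):
    """Build the JSON body for an incoming-webhook POST (sketch).

    Discord webhooks take the message in "content"; Slack and Mattermost
    incoming webhooks take it in "text".
    """
    if platform == "discord":
        return {"content": text}
    if platform in ("slack", "mattermost"):
        return {"text": text}
    raise ValueError(f"unknown platform: {platform!r}")


if __name__ == "__main__":
    print(webhook_payload("discord", "Alarm : Vacuum pressure too high"))
    # {'content': 'Alarm : Vacuum pressure too high'}
```

With the 'requests' package, sending would then be something like `requests.post(webhook_url, json=webhook_payload("slack", msg), timeout=10)`, where `webhook_url` is the URL the service issues for the webhook.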

The active pull request can be found here:

https://bitbucket.org/tmidas/midas/pull-requests/21

It's written in Python, and CMake will install it in bin (if the Python3 binary is found by CMake). The only dependency outside of the MIDAS Python library is 'requests'; full documentation is in mmessenger.md.

2174 | 27 May 2021 | Lukas Gerritzen | Bug Report | Wrong location for mysql.h on our Linux systems

Hi,

With the recent fix of the CMakeLists.txt, it seems another bug has surfaced.

In midas/progs/mlogger.cxx:48/49, the mysql header files are included without a prefix. However, mysql.h and mysqld_error.h are in a subdirectory, so for our systems the lines should be:

```
48 #include <mysql/mysql.h>
49 #include <mysql/mysqld_error.h>
```

This is the case with MariaDB 10.5.5 on openSUSE Leap 15.2, MariaDB 10.5.5 on Fedora Workstation 34 and MySQL 5.5.60 on Raspbian 10.

If this problem occurs for other Linux/MySQL versions as well, it should be fixed in mlogger.cxx and midas/src/history_schema.cxx. If it only occurs on some distributions or MySQL versions, it needs more differentiation than #ifdef OS_UNIX.

Also, this seems somehow familiar; wasn't there a problem like this in the past?

2173 | 26 May 2021 | Marco Chiappini | Info | label ordering in history plot

Dear all,

is there any way to order the labels in the history plot legend? In the old system there was the “order” column in the config panel, but I cannot find it in the new system. Thanks in advance for the support.

Best regards,

Marco Chiappini

2172 | 24 May 2021 | Mathieu Guigue | Bug Report | Bug "is of type"

Hi,

I am running a simple FE executable that is supposed to define a PRAW DWORD bank. The issue is that right after the start of the run, the logger crashes without any messages. Then the FE reports this error, which is rather confusing:

```
12:59:29.140 2021/05/24 [feTestDatastruct,ERROR] [odb.cxx:6986:db_set_data1,ERROR] "/Equipment/Trigger/Variables/PRAW" is of type UINT32, not UINT32
```

2171 | 21 May 2021 | Francesco Renga | Suggestion | MYSQL logger

I solved this; it was a failed "make clean" before recompiling. Now it works. Sorry for the noise.

Francesco

> Dear all,

> I'm trying to use the logging on a mysql DB. Following the instructions on

> the Wiki, I recompiled MIDAS after installing mysql, and cmake with NEED_MYSQL=1

> can find it:

>

> -- MIDAS: Found MySQL version 8.0.23

>

> Then, I compiled my frontend (cmake with no options + make) and run it, but in the

> ODB I cannot find the tree for mySQL. I have only:

>

> Logger/Runlog/ASCII

>

> while I would expect also:

>

> Logger/Runlog/SQL

>

> What could be missing? Maybe should I add something in the CMakeList file or run

> cmake with some option?

>

> Thank you,

> Francesco