| ID | Date | Author | Topic | Subject |

|

2156

|

26 Apr 2021 |

Zaher Salman | Suggestion | embed modbvalue in SVG | I found a way to embed a modbvalue into an SVG:

<text x="100" y="100" font-size="30rem">

Run=<tspan class="modbvalue" data-odb-path="/Runinfo/Run number"></tspan>

</text>

This seems to behave better than the suggestion below.

> You can't really embed it, but you can overlay it. You tag the SVG with a

> "relative" position and then move the modbvalue with an "absolute" position over

> it:

>

> <svg style="position:relative" width="400" height="100">

> <rect width="300" height="100" style="fill:rgb(255,0,0);stroke-width:3;stroke:rgb(0,0,0)" />

> <div class="modbvalue" style="position:absolute;top:50px;left:50px" data-odb-path="/Runinfo/Run number"></div>

> </svg> |

|

1172

|

22 Mar 2016 |

Konstantin Olchanski | Info | emacs web-mode.el | For those who use emacs to edit web pages - the built-in CSS and Javascript modes seem to work

just fine for editing files.css and files.js, but the built-in html modes fall flat on modern web pages

which contain a mix of html, javascript inside <script> tags and javascript inside button "onclick"

attributes.

So I looked at several emacs "html5 modes" and web-mode.el works well for me - html is indented

correctly (default indent level is easy to change), javascript inside <script> tags is indented

correctly (default indent level is easy to change), javascript inside "onclick" attributes has to be

indented manually.

web-mode code repository and instructions are here, the author is very responsive and fixed my

one request (permit manual indentation of javascript inside html attributes):

https://github.com/fxbois/web-mode

I now edit the html files in the MIDAS repository using these emacs settings:

8s-macbook-pro:web-mode 8ss$ more ~/.emacs

(setq-default indent-tabs-mode nil)

(setq-default tab-width 3)

(add-to-list 'load-path "~/git/web-mode")

(require 'web-mode)

(add-to-list 'auto-mode-alist '("\\.html\\'" . web-mode))

(setq web-mode-markup-indent-offset 2)

(setq web-mode-css-indent-offset 2)

(setq web-mode-code-indent-offset 3)

(setq web-mode-script-padding 0)

(setq web-mode-attr-indent-offset 2)

8s-macbook-pro:web-mode 8ss$

K.O. |

|

64

|

30 Mar 2004 |

Konstantin Olchanski | | elog fixes | I am about to commit the mhttpd Elog fixes we have been using in TWIST since

about October. The infamous Elog "last N days" problem is fixed, sundry

memory overruns are caught and assert()ed.

For the curious, the "last N days" problem was caused by uninitialized data

in the elog handling code. A non-zero-terminated string was read from a file

and passed to atoi(). Here is a simplified illustration:

char str[256]; // uninitialized, filled with whatever happens on the stack

read(file,str,6); // read 6 bytes, non-zero terminated

// str now looks like this: "123456UUUUUUUUU....", "U" is uninitialized memory

int len = atoi(str); // if the first "U" happens to be a number, we lose.

The obvious fix is to add "str[6]=0" before the atoi() call.
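The same fix can be written as a small helper. This is only a sketch: parse_fixed_field() is a hypothetical name, not a function in midas.c. It copies the fixed-width field into a local buffer and zero-terminates it before atoi() sees it.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical helper (not part of midas.c): convert a fixed-width decimal
 * field that is NOT zero-terminated in its source buffer. */
int parse_fixed_field(const char *field, size_t width)
{
   char buf[32];

   assert(field != NULL);

   /* clamp so that the terminator always fits into buf */
   if (width >= sizeof(buf))
      width = sizeof(buf) - 1;

   memcpy(buf, field, width);
   buf[width] = 0; /* the missing terminator that caused the bug */

   return atoi(buf);
}
```

With this, trailing bytes after the field cannot leak into the result, whatever they happen to contain.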

Attached is the CVS diff for the proposed changes. Please comment.

K.O. |

| Attachment 1: elog-fixes.txt

|

Index: src/midas.c

===================================================================

RCS file: /usr/local/cvsroot/midas/src/midas.c,v

retrieving revision 1.203

diff -u -r1.203 midas.c

--- src/midas.c 19 Mar 2004 09:58:22 -0000 1.203

+++ src/midas.c 31 Mar 2004 05:11:00 -0000

@@ -14814,8 +14814,9 @@

\********************************************************************/

/********************************************************************/

-void el_decode(char *message, char *key, char *result)

+void el_decode(char *message, char *key, char *result, int size)

{

+ char *rstart = result;

char *pc;

if (result == NULL)

@@ -14828,6 +14829,8 @@

*result++ = *pc++;

*result = 0;

}

+

+ assert(strlen(rstart) < size);

}

/**dox***************************************************************/

@@ -15020,9 +15023,9 @@

size = atoi(str + 9);

read(fh, message, size);

- el_decode(message, "Date: ", date);

- el_decode(message, "Thread: ", thread);

- el_decode(message, "Attachment: ", attachment);

+ el_decode(message, "Date: ", date, sizeof(date));

+ el_decode(message, "Thread: ", thread, sizeof(thread));

+ el_decode(message, "Attachment: ", attachment, sizeof(attachment));

/* buffer tail of logfile */

lseek(fh, 0, SEEK_END);

@@ -15092,7 +15095,7 @@

sprintf(message + strlen(message), "========================================\n");

strcat(message, text);

- assert(strlen(message) < sizeof(message)); // bomb out on array overrun.

+ assert(strlen(message) < sizeof(message)); /* bomb out on array overrun. */

size = 0;

sprintf(start_str, "$Start$: %6d\n", size);

@@ -15104,6 +15107,9 @@

sprintf(tag, "%02d%02d%02d.%d", tms->tm_year % 100, tms->tm_mon + 1,

tms->tm_mday, (int) TELL(fh));

+ /* size has to fit in 6 digits */

+ assert(size < 999999);

+

sprintf(start_str, "$Start$: %6d\n", size);

sprintf(end_str, "$End$: %6d\n\f", size);

@@ -15339,13 +15345,20 @@

return EL_FILE_ERROR;

}

- if (strncmp(str, "$End$: ", 7) == 0) {

- size = atoi(str + 7);

- lseek(*fh, -size, SEEK_CUR);

- } else {

+ if (strncmp(str, "$End$: ", 7) != 0) {

close(*fh);

return EL_FILE_ERROR;

}

+

+ /* make sure the input string to atoi() is zero-terminated:

+ * $End$: 355garbage

+ * 01234567890123456789 */

+ str[15] = 0;

+

+ size = atoi(str + 7);

+ assert(size > 15);

+

+ lseek(*fh, -size, SEEK_CUR);

/* adjust tag */

sprintf(strchr(tag, '.') + 1, "%d", (int) TELL(*fh));

@@ -15364,14 +15377,21 @@

}

lseek(*fh, -15, SEEK_CUR);

- if (strncmp(str, "$Start$: ", 9) == 0) {

- size = atoi(str + 9);

- lseek(*fh, size, SEEK_CUR);

- } else {

+ if (strncmp(str, "$Start$: ", 9) != 0) {

close(*fh);

return EL_FILE_ERROR;

}

+ /* make sure the input string to atoi() is zero-terminated

+ * $Start$: 606garbage

+ * 01234567890123456789 */

+ str[15] = 0;

+

+ size = atoi(str+9);

+ assert(size > 15);

+

+ lseek(*fh, size, SEEK_CUR);

+

/* if EOF, goto next day */

i = read(*fh, str, 15);

if (i < 15) {

@@ -15444,7 +15464,7 @@

\********************************************************************/

{

- int size, fh, offset, search_status;

+ int size, fh = 0, offset, search_status, rd;

char str[256], *p;

char message[10000], thread[256], attachment_all[256];

@@ -15462,10 +15482,24 @@

/* extract message size */

offset = TELL(fh);

- read(fh, str, 16);

- size = atoi(str + 9);

+ rd = read(fh, str, 15);

+ assert(rd == 15);

+

+ /* make sure the input string is zero-terminated before we call atoi() */

+ str[15] = 0;

+

+ /* get size */

+ size = atoi(str+9);

+

+ assert(strncmp(str,"$Start$:",8) == 0);

+ assert(size > 15);

+ assert(size < sizeof(message));

+

memset(message, 0, sizeof(message));

- read(fh, message, size);

+

+ rd = read(fh, message, size);

+ assert(rd > 0);

+ assert((rd+15 == size)||(rd == size));

close(fh);

@@ -15473,14 +15507,14 @@

if (strstr(message, "Run: ") && run)

*run = atoi(strstr(message, "Run: ") + 5);

- el_decode(message, "Date: ", date);

- el_decode(message, "Thread: ", thread);

- el_decode(message, "Author: ", author);

- el_decode(message, "Type: ", type);

- el_decode(message, "System: ", system);

- el_decode(message, "Subject: ", subject);

- el_decode(message, "Attachment: ", attachment_all);

- el_decode(message, "Encoding: ", encoding);

+ el_decode(message, "Date: ", date, 80); /* size from show_elog_submit_query() */

+ el_decode(message, "Thread: ", thread, sizeof(thread));

+ el_decode(message, "Author: ", author, 80); /* size from show_elog_submit_query() */

+ el_decode(message, "Type: ", type, 80); /* size from show_elog_submit_query() */

+ el_decode(message, "System: ", system, 80); /* size from show_elog_submit_query() */

+ el_decode(message, "Subject: ", subject, 256); /* size from show_elog_submit_query() */

+ el_decode(message, "Attachment: ", attachment_all, sizeof(attachment_all));

+ el_decode(message, "Encoding: ", encoding, 80); /* size from show_elog_submit_query() */

/* break apart attachements */

if (attachment1 && attachment2 && attachment3) {

@@ -15496,6 +15530,10 @@

strcpy(attachment3, p);

}

}

+

+ assert(strlen(attachment1) < 256); /* size from show_elog_submit_query() */

+ assert(strlen(attachment2) < 256); /* size from show_elog_submit_query() */

+ assert(strlen(attachment3) < 256); /* size from show_elog_submit_query() */

}

/* conver thread in reply-to and reply-from */

|

|

65

|

30 Mar 2004 |

Stefan Ritt | | elog fixes | Thanks for fixing these long lasting bugs. The code is much cleaner now, please

commit it. |

|

2671

|

15 Jan 2024 |

Frederik Wauters | Forum | dump history FILE files | We switched the history from MIDAS to FILE, so we now have *.dat files (per variable) instead of the old *.hst files.

How should one now extract data from these data files? With the old *.hst files I can run e.g. mhdump -E 102 231010.hst

but with the new *.dat files I get

...2023/history$ mhdump -E 0 -T "Run number" mhf_1697445335_20231016_run_transitions.dat | head -n 15

event name: [Run transitions], time [1697445335]

tag: tag: /DWORD 1 4 /timestamp

tag: tag: UINT32 1 4 State

tag: tag: UINT32 1 4 Run number

record size: 12, data offset: 1024

record 0, time 1697557722, incr 112387

record 1, time 1697557783, incr 61

record 2, time 1697557804, incr 21

record 3, time 1697557834, incr 30

record 4, time 1697557888, incr 54

record 5, time 1697558318, incr 430

record 6, time 1697558323, incr 5

record 7, time 1697558659, incr 336

record 8, time 1697558668, incr 9

record 9, time 1697558753, incr 85

which is not very intelligible.

Yes, I can do a CSV export on the webpage, but it would be nice to be able to extract the data from just the files. Also, the webpage export only saves the data shown (range-limited and/or downsampled). |

|

2691

|

28 Jan 2024 |

Konstantin Olchanski | Forum | dump history FILE files | $ cat mhf_1697445335_20231016_run_transitions.dat

event name: [Run transitions], time [1697445335]

tag: tag: /DWORD 1 4 /timestamp

tag: tag: UINT32 1 4 State

tag: tag: UINT32 1 4 Run number

record size: 12, data offset: 1024

...

data is in fixed-length record format. from the file header, you read "record size" is 12 and data starts at offset 1024.

the 12 bytes of the data record are described by the tags:

4 bytes of timestamp (DWORD, unix time)

4 bytes of State (UINT32)

4 bytes of "Run number" (UINT32)

endianness is "local endian", which means "little endian" as we have no big-endian hardware anymore to test endian conversions.

file format is designed for reading using read() or mmap().

and you are right, mhdump does not work on these files. I guess I can write another utility that does what I just described and spews the numbers to stdout.
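Until such a utility exists, the description above can be sketched in C roughly as follows. This is not an official MIDAS tool. The struct and function names are made up for illustration, the layout (12-byte records, data at offset 1024) is hard-wired to the header shown above, and the header line is assumed to look exactly as printed.

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical record type, layout taken from the three tags in the header */
struct run_transition {
   uint32_t timestamp;  /* unix time */
   uint32_t state;
   uint32_t run_number;
};

/* Parse the "record size: N, data offset: M" header line, returns 1 on success */
int parse_layout_line(const char *line, int *record_size, int *data_offset)
{
   return sscanf(line, "record size: %d, data offset: %d",
                 record_size, data_offset) == 2;
}

/* Decode one 12-byte record; memcpy keeps the local (little) endian order */
struct run_transition decode_record(const unsigned char *p)
{
   struct run_transition r;
   memcpy(&r.timestamp, p, 4);
   memcpy(&r.state, p + 4, 4);
   memcpy(&r.run_number, p + 8, 4);
   return r;
}

/* Print all records of one file to stdout, returns record count or -1 on error */
long dump_file(const char *path, int record_size, long data_offset)
{
   unsigned char buf[12];
   long n = 0;
   FILE *fp;

   assert(path != NULL);
   if (record_size != (int) sizeof(buf))
      return -1; /* this sketch only handles the 12-byte layout */

   fp = fopen(path, "rb");
   if (fp == NULL)
      return -1;
   if (fseek(fp, data_offset, SEEK_SET) != 0) {
      fclose(fp);
      return -1;
   }
   while (fread(buf, 1, sizeof(buf), fp) == sizeof(buf)) {
      struct run_transition r = decode_record(buf);
      printf("%u %u %u\n", (unsigned) r.timestamp, (unsigned) r.state,
             (unsigned) r.run_number);
      n++;
   }
   fclose(fp);
   return n;
}
```

A general tool would read the tags from the header instead of assuming three UINT32 fields; for this file a call would be dump_file("mhf_1697445335_20231016_run_transitions.dat", 12, 1024).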

K.O. |

|

2716

|

18 Feb 2024 |

Frederik Wauters | Forum | dump history FILE files | > $ cat mhf_1697445335_20231016_run_transitions.dat

> event name: [Run transitions], time [1697445335]

> tag: tag: /DWORD 1 4 /timestamp

> tag: tag: UINT32 1 4 State

> tag: tag: UINT32 1 4 Run number

> record size: 12, data offset: 1024

> ...

>

> data is in fixed-length record format. from the file header, you read "record size" is 12 and data starts at offset 1024.

>

> the 12 bytes of the data record are described by the tags:

> 4 bytes of timestamp (DWORD, unix time)

> 4 bytes of State (UINT32)

> 4 bytes of "Run number" (UINT32)

>

> endianness is "local endian", which means "little endian" as we have no big-endian hardware anymore to test endian conversions.

>

> file format is designed for reading using read() or mmap().

>

> and you are right, mhdump does not work on these files. I guess I can write another utility that does what I just described and spews the numbers to stdout.

>

> K.O.

Thanks for the answer. As this FILE system is advertised as the new default (elog:2617), this format does merit some more wiki documentation. |

|

1141

|

20 Nov 2015 |

Konstantin Olchanski | Info | documented, merged: midas JSON-RPC interface | > The JSON RPC branch has been merged into main MIDAS.

The interface is now mostly documented, go here: https://midas.triumf.ca/MidasWiki/index.php/Mjsonrpc

Documentation for individual javascript functions in mhttpd.js is not merged into the MIDAS documentation yet, because the API is being converted to the Javascript Promise

pattern (git branch feature/js_promise).

The functions available from mhttpd.js are documented via doxygen, also linked from the mjsonrpc wiki page.

K.O. |

|

2719

|

27 Feb 2024 |

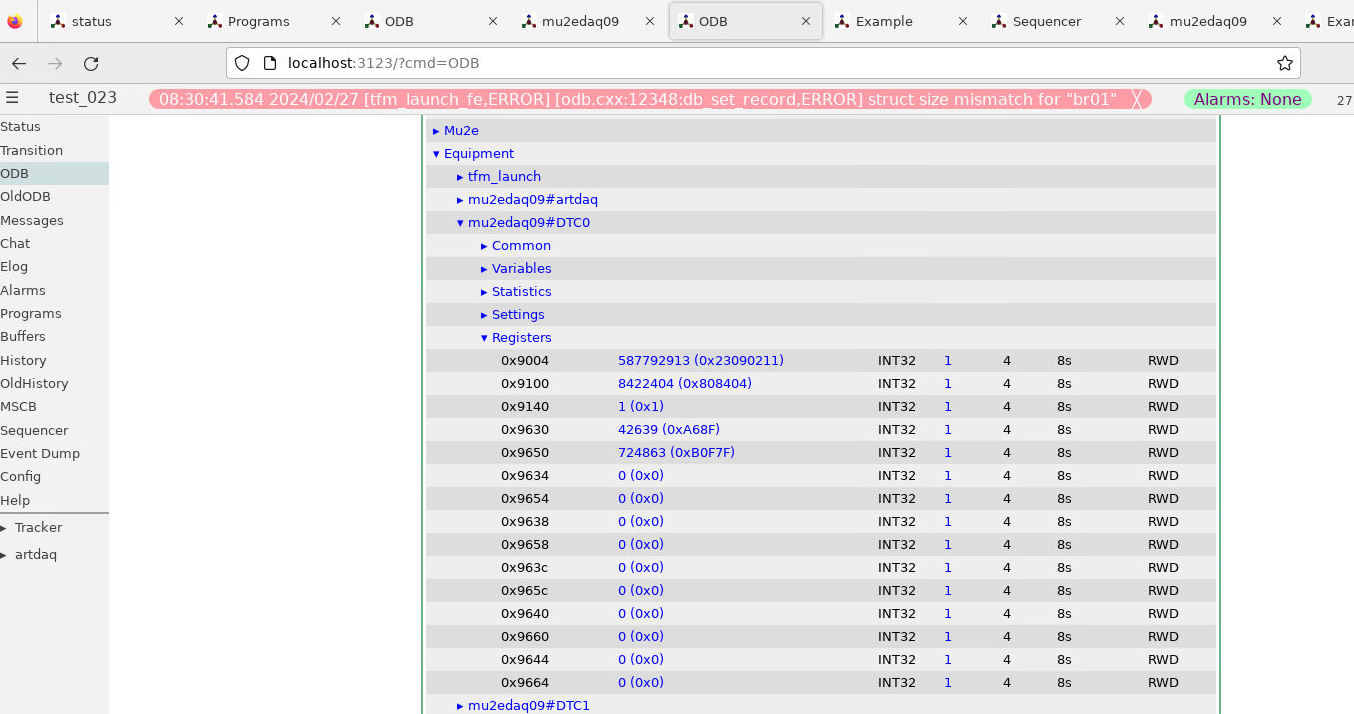

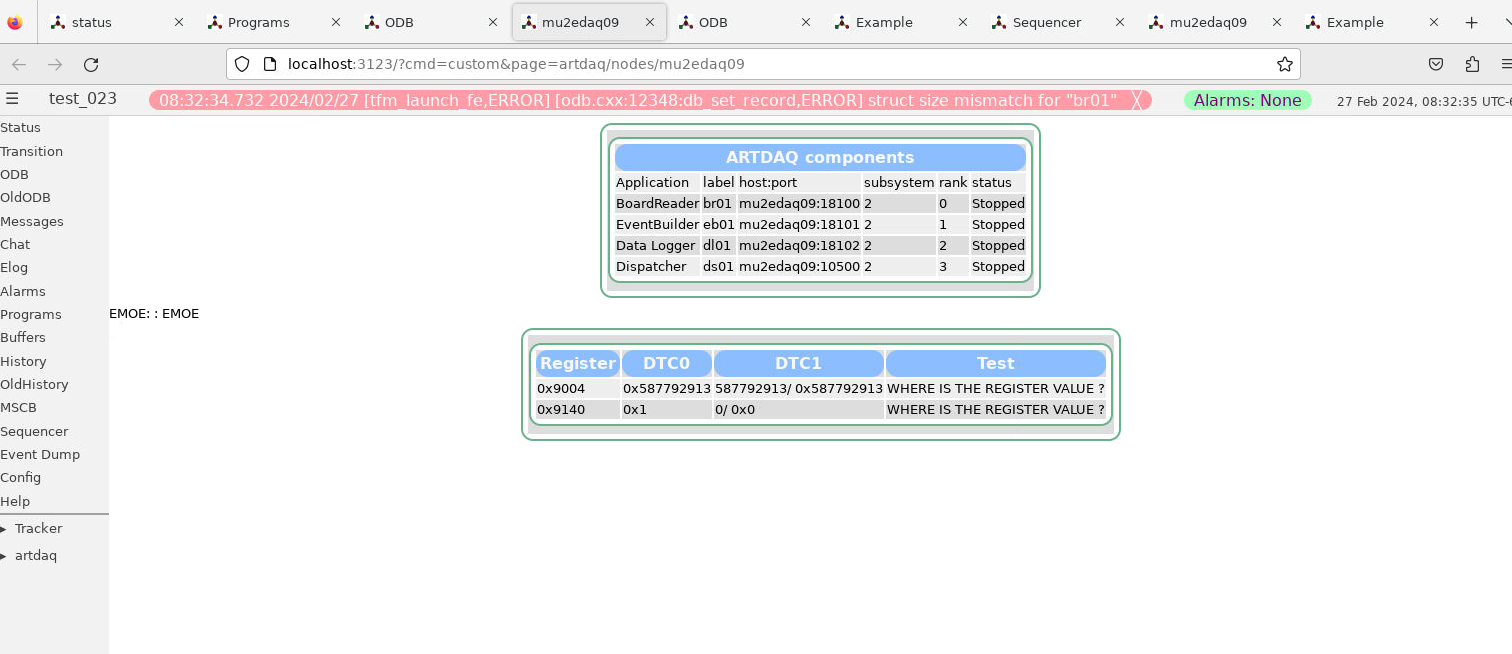

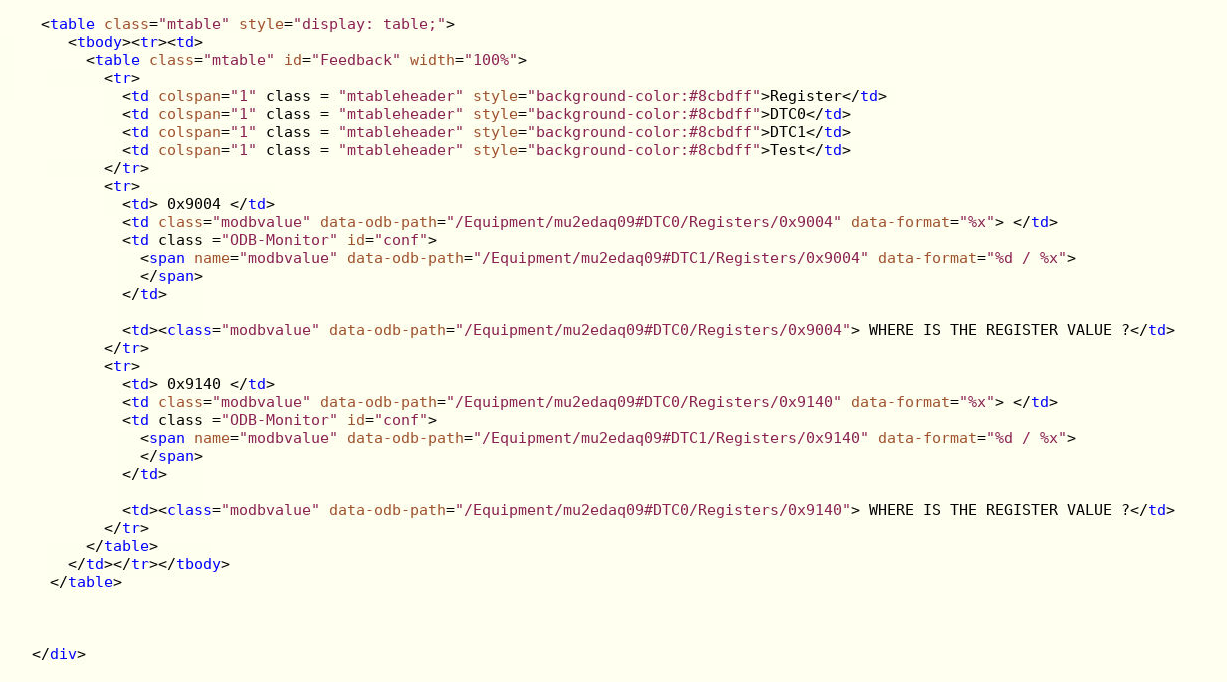

Pavel Murat | Forum | displaying integers in hex format ? | Dear MIDAS Experts,

I'm having an odd problem when trying to display an integer stored in ODB on a custom

web page: the hex specifier, "%x", displays integers as if it were "%d" .

- attachment 1 shows the layout and the contents of the ODB sub-tree in question

- attachment 2 shows the web page as it is displayed

- attachment 3 shows the snippet of html/js producing the web page

I bet I'm missing something trivial - any advice is greatly appreciated!

Also, is there an equivalent of a "0x%04x" specifier to have the output formatted

into a fixed length string ?

-- thanks, regards, Pasha |

| Attachment 1: 2024_02_27_dtc_registers_in_odb.png

|

|

| Attachment 2: 2024_02_27_custom_page.png

|

|

| Attachment 3: 2024_02_27_custom_page_html.png

|

|

|

2720

|

27 Feb 2024 |

Stefan Ritt | Forum | displaying integers in hex format ? | Thanks for reporting that bug. I fixed it and committed the change to the develop branch.

Stefan |

|

2721

|

27 Feb 2024 |

Pavel Murat | Forum | displaying integers in hex format ? | Hi Stefan (and Ben),

thanks for reacting so promptly - your commits on Bitbucket fixed the problem.

For those of us who know little about how web browsers work:

- picking up the fix required flushing the cache of the MIDAS client web browser - apparently the web browser

I'm using - Firefox 115.6 - cached the old version of midas.js but wouldn't report it cached and wouldn't load

the updated file on its own.

-- thanks again, regards, Pasha |

|

672

|

20 Nov 2009 |

Konstantin Olchanski | Bug Fix | disallow client names with slash '/' characters | > odb.c rev 4622 fixes ODB corruption by db_connect_database() if client_name is

> too long. Also fixed is potential ODB corruption by too long key names in

> db_create_key(). Problem kindly reported by Tim Nichols of T2K/ND280 experiment.

Related bug fix - db_connect_database() should not permit client names that contain

the slash (/) character. Names like "aaa/bbb" create entries /Programs/aaa/bbb (aaa

is a subdirectory) and names like "../aaa" create entries in the ODB root directory.
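A minimal sketch of such a check, for illustration only. client_name_is_valid() and its max_len parameter are hypothetical and not the actual odb.c code; the sketch rejects empty names, names that do not fit the buffer (the problem from rev 4622) and names containing a slash.

```c
#include <assert.h>
#include <string.h>

/* Hypothetical validation helper (not the real odb.c code).
 * max_len is the size of the buffer that will hold the name. */
int client_name_is_valid(const char *name, size_t max_len)
{
   assert(max_len > 0);

   if (name == NULL || name[0] == 0)
      return 0; /* empty name */
   if (strlen(name) >= max_len)
      return 0; /* would overflow the name buffer */
   if (strchr(name, '/') != NULL)
      return 0; /* "aaa/bbb" and "../aaa" would escape /Programs/<name> */

   return 1;
}
```

Rejecting every slash also covers the "../aaa" case, so no separate test for ".." is needed in this sketch.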

svn rev 4623.

K.O. |

|

1948

|

15 Jun 2020 |

Martin Mueller | Bug Report | deprecated function stime() | Hi

I had a problem compiling midas after an OS update to the latest version of openSUSE Tumbleweed. The function stime() in system.cxx:3196 is no longer available.

In the documentation it is also marked as deprecated with the suggestion to use clock_settime instead:

https://man7.org/linux/man-pages/man2/stime.2.html

Replacing system.cxx:3196 with the clock_settime() method from system.cxx:3200-3204, also for OS_UNIX, seems to solve the problem, but I'm not sure if this will cause problems on older OSes.

Martin |

|

1949

|

15 Jun 2020 |

Stefan Ritt | Bug Report | deprecated function stime() | The function stime() was replaced by clock_settime() in Feb. 2020:

https://bitbucket.org/tmidas/midas/commits/c732120e7c68bbcdbbc6236c1fe894c401d9bbbd

Please always pull before submitting bug reports.

Best,

Stefan |

|

641

|

07 Sep 2009 |

Exaos Lee | Forum | deprecated conversion from string constant to ‘char*’ | I encountered many warnings while building MIDAS (svn r4556). Please see the

attached log file. Most of them are caused by type conversion from string constant to

"char*".

Though I can ignore all the warnings without any problem, I still hate to see

them. :-) |

| Attachment 1: make-warnings.log

|

Scanning dependencies of target midas-static

[ 1%] Building C object CMakeFiles/midas-static.dir/src/ftplib.c.o

[ 3%] Building C object CMakeFiles/midas-static.dir/src/midas.c.o

[ 5%] Building C object CMakeFiles/midas-static.dir/src/system.c.o

[ 7%] Building C object CMakeFiles/midas-static.dir/src/mrpc.c.o

[ 9%] Building C object CMakeFiles/midas-static.dir/src/odb.c.o

[ 11%] Building C object CMakeFiles/midas-static.dir/src/ybos.c.o

[ 13%] Building C object CMakeFiles/midas-static.dir/src/history.c.o

[ 15%] Building C object CMakeFiles/midas-static.dir/src/alarm.c.o

[ 17%] Building C object CMakeFiles/midas-static.dir/src/elog.c.o

[ 19%] Building C object CMakeFiles/midas-static.dir/opt/DAQ/bot/mxml/mxml.c.o

[ 21%] Building C object CMakeFiles/midas-static.dir/opt/DAQ/bot/mxml/strlcpy.c.o

[ 23%] Building CXX object CMakeFiles/midas-static.dir/src/history_odbc.cxx.o

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

Linking CXX static library lib/libmidas.a

[ 23%] Built target midas-static

Scanning dependencies of target dio

[ 25%] Building C object CMakeFiles/dio.dir/utils/dio.c.o

Linking C executable bin/dio

[ 25%] Built target dio

Scanning dependencies of target fal

[ 25%] Generating fal.o

[ 26%] Built target fal

Scanning dependencies of target hmana

[ 26%] Generating hmana.o

[ 28%] Built target hmana

Scanning dependencies of target lazylogger

[ 30%] Building C object CMakeFiles/lazylogger.dir/src/lazylogger.c.o

Linking C executable bin/lazylogger

[ 30%] Built target lazylogger

Scanning dependencies of target mana

[ 30%] Generating mana.o

[ 32%] Built target mana

Scanning dependencies of target mchart

[ 34%] Building C object CMakeFiles/mchart.dir/utils/mchart.c.o

Linking C executable bin/mchart

[ 34%] Built target mchart

Scanning dependencies of target mcnaf

[ 36%] Building C object CMakeFiles/mcnaf.dir/utils/mcnaf.c.o

[ 38%] Building C object CMakeFiles/mcnaf.dir/drivers/camac/camacrpc.c.o

Linking C executable bin/mcnaf

[ 38%] Built target mcnaf

Scanning dependencies of target mdump

[ 40%] Building C object CMakeFiles/mdump.dir/utils/mdump.c.o

Linking C executable bin/mdump

[ 40%] Built target mdump

Scanning dependencies of target melog

[ 42%] Building C object CMakeFiles/melog.dir/utils/melog.c.o

Linking C executable bin/melog

[ 42%] Built target melog

Scanning dependencies of target mfe

[ 42%] Generating mfe.o

[ 44%] Built target mfe

Scanning dependencies of target mh2sql

[ 46%] Building CXX object CMakeFiles/mh2sql.dir/utils/mh2sql.cxx.o

/opt/DAQ/bot/midas/utils/mh2sql.cxx: In function ‘int main(int, char**)’:

/opt/DAQ/bot/midas/utils/mh2sql.cxx:144: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/utils/mh2sql.cxx:144: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/utils/mh2sql.cxx:144: warning: deprecated conversion from string constant to ‘char*’

[ 48%] Building CXX object CMakeFiles/mh2sql.dir/src/history_odbc.cxx.o

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

Linking CXX executable bin/mh2sql

[ 48%] Built target mh2sql

Scanning dependencies of target mhdump

[ 50%] Building CXX object CMakeFiles/mhdump.dir/utils/mhdump.cxx.o

/opt/DAQ/bot/midas/utils/mhdump.cxx:91: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/utils/mhdump.cxx:91: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/utils/mhdump.cxx:91: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/utils/mhdump.cxx:91: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/utils/mhdump.cxx:91: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/utils/mhdump.cxx:91: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/utils/mhdump.cxx:91: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/utils/mhdump.cxx:91: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/utils/mhdump.cxx:91: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/utils/mhdump.cxx:91: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/utils/mhdump.cxx:91: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/utils/mhdump.cxx:91: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/utils/mhdump.cxx:91: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/utils/mhdump.cxx:91: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/utils/mhdump.cxx:91: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/utils/mhdump.cxx:91: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/utils/mhdump.cxx:91: warning: deprecated conversion from string constant to ‘char*’

Linking CXX executable bin/mhdump

[ 50%] Built target mhdump

Scanning dependencies of target mhist

[ 51%] Building C object CMakeFiles/mhist.dir/utils/mhist.c.o

Linking C executable bin/mhist

[ 51%] Built target mhist

Scanning dependencies of target mhttpd

[ 53%] Building C object CMakeFiles/mhttpd.dir/src/mhttpd.c.o

[ 55%] Building C object CMakeFiles/mhttpd.dir/src/mgd.c.o

Linking CXX executable bin/mhttpd

[ 55%] Built target mhttpd

Scanning dependencies of target midas-shared

[ 57%] Building C object CMakeFiles/midas-shared.dir/src/ftplib.c.o

[ 59%] Building C object CMakeFiles/midas-shared.dir/src/midas.c.o

[ 61%] Building C object CMakeFiles/midas-shared.dir/src/system.c.o

[ 63%] Building C object CMakeFiles/midas-shared.dir/src/mrpc.c.o

[ 65%] Building C object CMakeFiles/midas-shared.dir/src/odb.c.o

[ 67%] Building C object CMakeFiles/midas-shared.dir/src/ybos.c.o

[ 69%] Building C object CMakeFiles/midas-shared.dir/src/history.c.o

[ 71%] Building C object CMakeFiles/midas-shared.dir/src/alarm.c.o

[ 73%] Building C object CMakeFiles/midas-shared.dir/src/elog.c.o

[ 75%] Building C object CMakeFiles/midas-shared.dir/opt/DAQ/bot/mxml/mxml.c.o

[ 76%] Building C object CMakeFiles/midas-shared.dir/opt/DAQ/bot/mxml/strlcpy.c.o

[ 78%] Building CXX object CMakeFiles/midas-shared.dir/src/history_odbc.cxx.o

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:95: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:116: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/history_odbc.cxx:136: warning: deprecated conversion from string constant to ‘char*’

Linking CXX shared library lib/libmidas.so

[ 78%] Built target midas-shared

Scanning dependencies of target mlogger

[ 80%] Building CXX object CMakeFiles/mlogger.dir/src/mlogger.c.o

/opt/DAQ/bot/midas/src/mlogger.c:81: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:81: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:81: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:81: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:81: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:81: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:81: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:81: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:81: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:81: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:81: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:81: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:81: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:81: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:81: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:81: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:81: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:81: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:81: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:81: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:81: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:81: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:81: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:81: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:81: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:81: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c: In function ‘INT ftp_open(char*, FTP_CON**)’:

/opt/DAQ/bot/midas/src/mlogger.c:952: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:956: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:960: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:960: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:965: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:970: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c: In function ‘EVENT_DEF* db_get_event_definition(short int)’:

/opt/DAQ/bot/midas/src/mlogger.c:1339: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:1341: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:1343: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:1345: warning: deprecated conversion from string constant to ‘char*’

/opt/DAQ/bot/midas/src/mlogger.c:1347: warning: deprecated conversion from string constant to ‘char*’

... 75 more lines ...

|

|

646

|

27 Sep 2009 |

Konstantin Olchanski | Forum | deprecated conversion from string constant to ‘char*’ | > I encountered many warnings while building MIDAS (svn r4556). Please see the

> attached log file. Most of them are caused by type conversion from string to

> "char*".

> Though I can ignore all the warnings without any problem, I still hate to see

> them. :-)

There are no "type conversions". The compiler is whining about code like this:

/* data type names */

static char *tid_name[] = {

"NULL",

"BYTE",

...

I guess we should keep the compiler happy and make them "static const char*".

BTW, my compiler is SL5.2 gcc-4.1.2 and it does not complain. What's your compiler?

K.O. |

|

647

|

27 Sep 2009 |

Exaos Lee | Forum | deprecated conversion from string constant to ‘char*’ | > There is no "type conversions". The compiler is whining about code like this:

>

> /* data type names */

> static char *tid_name[] = {

> "NULL",

> "BYTE",

> ...

>

> I guess we should keep the compiler happy and make them "static const char*".

>

> BTW, my compiler is SL5.2 gcc-4.1.2 and it does not complain. What's your compiler?

>

> K.O.

Using built-in specs.

Target: x86_64-linux-gnu

Configured with: ../src/configure -v --with-pkgversion='Debian 4.3.4-2' --with-bugurl=file:///usr/share/doc/gcc-4.3/README.Bugs --enable-languages=c,c++,fortran,objc,obj-c++ --prefix=/usr --enable-shared --enable-multiarch --enable-linker-build-id --with-system-zlib --libexecdir=/usr/lib --without-included-gettext --enable-threads=posix --enable-nls --with-gxx-include-dir=/usr/include/c++/4.3 --program-suffix=-4.3 --enable-clocale=gnu --enable-libstdcxx-debug --enable-objc-gc --enable-mpfr --with-tune=generic --enable-checking=release --build=x86_64-linux-gnu --host=x86_64-linux-gnu --target=x86_64-linux-gnu

Thread model: posix

gcc version 4.3.4 (Debian 4.3.4-2) |

|

87

|

25 Nov 2003 |

Suzannah Daviel | | delete key followed by create record leads to empty structure in experim.h | Hi,

I have noticed a problem with deleting a key to an array in odb, then

recreating the record as in the code below. The record is recreated

successfully, but when viewing it with mhttpd, a spurious blank line

(coloured orange) is visible, followed by the rest of the data as normal.

This blank line causes trouble with experim.h because it

produces an empty structure e.g. :

#define CYCLE_SCALERS_SETTINGS_DEFINED
typedef struct {
  struct {
  } ;
  char names[60][32];
} CYCLE_SCALERS_SETTINGS;

rather than :

#define CYCLE_SCALERS_SETTINGS_DEFINED
typedef struct {
  char names[60][32];
} CYCLE_SCALERS_SETTINGS;

This empty structure causes a compilation error when rebuilding clients that

use experim.h

SD

CYCLE_SCALERS_TYPE1_SETTINGS_STR(type1_str);

CYCLE_SCALERS_TYPE2_SETTINGS_STR(type2_str);

Both type1_str and type2_str have been defined as in

experim.h

i.e.

#define CYCLE_SCALERS_TYPE1_SETTINGS_STR(_name) char *_name[] = {\

"[.]",\

"Names = STRING[60] :",\

"[32] Back%BSeg00",\

"[32] Back%BSeg01",\

........

........

"[32] General%NeutBm Cycle Sum",\

"[32] General%NeutBm Cycle Asym",\

"",\

NULL }

#define CYCLE_SCALERS_TYPE2_SETTINGS_STR(_name) char *_name[] = {\

"[.]",\

"Names = STRING[60] :",\

"[32] Back%BSeg00",\

"[32] Back%BSeg01",\

...........

............

"[32] General%B/F Cumul -",\

"[32] General%Asym Cumul -",\

"",\

NULL }

if (db_find_key(hDB, 0, "/Equipment/Cycle_scalers/Settings/", &hKey) == DB_SUCCESS)
   db_delete_key(hDB, hKey, FALSE);

if (strncmp(fs.input.experiment_name, "1", 1) == 0) {
   exp_mode = 1; /* Imusr type - scans */
   status = db_create_record(hDB, 0, "/Equipment/Cycle_scalers/Settings/", strcomb(type1_str));
}
else {
   exp_mode = 2; /* TDmusr types - noscans */
   status = db_create_record(hDB, 0, "/Equipment/Cycle_scalers/Settings/", strcomb(type2_str));

} |

|

88

|

01 Dec 2003 |

Stefan Ritt | | delete key followed by create record leads to empty structure in experim.h | > I have noticed a problem with deleting a key to an array in odb, then

> recreating the record as in the code below. The record is recreated

> successfully, but when viewing it with mhttpd, a spurious blank line

> (coloured orange) is visible, followed by the rest of the data as normal.

>

> db_create_record(hDB,0,"/Equipment/Cycle_scalers/Settings/",strcomb(type1_str));

> }

> else {

> exp_mode = 2; /* TDmusr types - noscans */

> status =

> db_create_record(hDB,0,"/Equipment/Cycle_scalers/Settings/",strcomb(type2_str));

> }

The first problem is that the db_create_record has a trailing "/" in the key name

after Settings. This creates the (empty) subdirectory which causes your trouble.

Simply removing it fixes the problem. I agree that this is not obvious, so I

added some code in db_create_record() which removes such a trailing slash if

present. New version under CVS.
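For illustration only, the trailing-slash cleanup could look roughly like the sketch below. This is not the code that went into db_create_record(); `strip_trailing_slash` is a hypothetical helper, and it assumes that a lone "/" (the ODB root) should be left untouched. Unlike the fix described above, it also removes repeated trailing slashes:

```cpp
#include <string>

// Hypothetical helper, not the actual MIDAS code: drop trailing '/'
// characters from an ODB key path, but keep a lone "/" (the root) intact.
std::string strip_trailing_slash(std::string path)
{
   while (path.size() > 1 && path.back() == '/')
      path.pop_back();
   return path;
}
```

With this, "/Equipment/Cycle_scalers/Settings/" becomes "/Equipment/Cycle_scalers/Settings", so no empty subdirectory name is left after the last slash.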

Second, the db_create_record() call is deprecated. You should use the new

function db_check_record() instead, and remove your db_delete_key(). This avoids

possible ODB trouble since the structure is not re-created each time, but only

when necessary.

- Stefan |

|

1133

|

05 Nov 2015 |

Amy Roberts | Bug Report | deferred transition causes sequencer to fail | When using the sequencer to start and stop runs which use a deferred transition,

the sequencer fails with a "Cannot stop run: ..." error.

Checking for the "CM_DEFERRED_TRANSITION" case in the first-pass block of the

'Stop' code in sequencer.cxx is one way to solve the problem - though there may

well be better solutions.
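The decision itself can be sketched in isolation, without any MIDAS headers. Everything in this block is an assumption made for illustration: the `StopStatus` enum stands in for the real status codes from midas.h (whose values are not reproduced here), and `handle_stop_status` and `StopOutcome` are hypothetical names. The point is only that a deferred transition is treated like a successful request (keep waiting for the run to stop) instead of as an error:

```cpp
#include <string>

// Placeholder status codes for illustration only; the real values come
// from midas.h and are NOT reproduced here.
enum StopStatus { kSuccess, kDeferred, kFailure };

struct StopOutcome {
   bool wait_for_stop;   // keep polling /Runinfo/State on later passes
   std::string error;    // non-empty only when the stop request failed
};

// Decide what the first pass of a "Stop" step should do with the
// status returned by the transition request.
StopOutcome handle_stop_status(StopStatus status, const std::string &detail)
{
   if (status == kSuccess || status == kDeferred)
      return {true, ""};
   // Build the message in a fresh string instead of formatting a
   // buffer into itself.
   return {false, "Cannot stop run: " + detail};
}
```

The error message is assembled in a separate string on purpose: calling sprintf() with the same buffer as both destination and "%s" argument, as the posted code does with `str`, is undefined behaviour in C because the source and destination overlap.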

My edited portion of sequencer.cxx is below. Is this an acceptable solution

that could be introduced to the master branch?

} else if (equal_ustring(mxml_get_value(pn), "Stop")) {
   if (!seq.transition_request) {
      seq.transition_request = TRUE;
      size = sizeof(state);
      db_get_value(hDB, 0, "/Runinfo/State", &state, &size, TID_INT, FALSE);
      if (state != STATE_STOPPED) {
         status = cm_transition(TR_STOP, 0, str, sizeof(str), TR_MTHREAD | TR_SYNC, TRUE);
         if (status == CM_DEFERRED_TRANSITION) {
            // do nothing
         } else if (status != CM_SUCCESS) {
            sprintf(str, "Cannot stop run: %s", str);
            seq_error(str);
         }
      }
   } else {
      // Wait until transition has finished
      size = sizeof(state);
      db_get_value(hDB, 0, "/Runinfo/State", &state, &size, TID_INT, FALSE);
      if (state == STATE_STOPPED) {
         seq.transition_request = FALSE;
         if (seq.stop_after_run) {
            seq.stop_after_run = FALSE;
            seq.running = FALSE;
            seq.finished = TRUE;
            cm_msg(MTALK, "sequencer", "Sequencer is finished.");
         } else
            seq.current_line_number++;
         db_set_record(hDB, hKeySeq, &seq, sizeof(seq), 0);
      } else {
         // do nothing
      }
   }

} |

|