| ID | Date | Author | Topic | Subject |

|

2618

|

06 Oct 2023 |

Konstantin Olchanski | Info | new history panel editor | the new history panel editor has been activated. it is meant to work the same as the old editor, with some improvements to the history variables selection page.

this new version is written in html+javascript and will be easier to improve, update and maintain than the old version written in c++. the old history panel editor is still usable and accessible by pressing the "edit in old editor" button. please report any problems, quirks and suggested improvements in this thread or in the bitbucket bug reports. K.O. |

|

2619

|

06 Oct 2023 |

Stefan Ritt | Info | New equipment display | For a long time we have been trying to convert all "static" mhttpd-generated pages to dynamic JavaScript. With the new history panel editor we were almost there. Now I committed the last missing piece: the equipment display. This is shown when you click on an equipment on the main status page, or if you define an Alias with

?cmd=eqtable&eq=Trigger

This is now a dynamic display, so the values are updated when they change in the ODB. They also flash briefly in yellow to visually highlight any change. In addition, these pages have a unit display, and some values can be edited. This is controlled by the following settings:

/Equipment/<name>/settings/Unit <variable>

where <name> is the name of the equipment and <variable> the variable array name

under /Equipment/<name>/Variables/<variable>

If the unit setting is not present, just a blank column is shown.

The other setting is

/Equipment/<name>/settings/Editable

which may contain a comma-separated list of variables that can be edited on the equipment page.
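To illustrate the "Editable" setting, here is a minimal C sketch of how a page could decide whether a variable appears in the comma-separated list. The function name `is_editable()` is hypothetical, and ignoring blanks around names is an assumption; the actual equipment page is written in JavaScript and may parse the string differently.

```c
#include <assert.h>
#include <ctype.h>
#include <stdbool.h>
#include <string.h>

/* Hypothetical helper: returns true if `var` appears as one item of the
 * comma-separated list `editable` (e.g. "Demand,Current Limit").
 * Blanks around each item are ignored; this is an assumption, not
 * taken from the MIDAS sources. */
bool is_editable(const char *editable, const char *var)
{
   assert(editable != NULL && var != NULL);
   size_t var_len = strlen(var);
   const char *p = editable;

   while (*p) {
      /* skip leading blanks of this item */
      while (*p && isspace((unsigned char)*p))
         p++;
      const char *start = p;
      /* advance to the next comma or the end of the string */
      while (*p && *p != ',')
         p++;
      const char *end = p;
      /* trim trailing blanks of this item */
      while (end > start && isspace((unsigned char)end[-1]))
         end--;
      if ((size_t)(end - start) == var_len && strncmp(start, var, var_len) == 0)
         return true;
      if (*p == ',')
         p++;
   }
   return false;
}
```

Matching whole items (rather than using `strstr()`) avoids treating "Dem" as editable just because "Demand" is listed.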

In addition, one can save/export the equipment in a json file, which is the same as an ODB save of that branch. A load or import, however, only loads values into the ODB for the variables listed in the "Editable" setting above. This provides a simple editor for HV values etc.

Stefan |

|

2620

|

09 Oct 2023 |

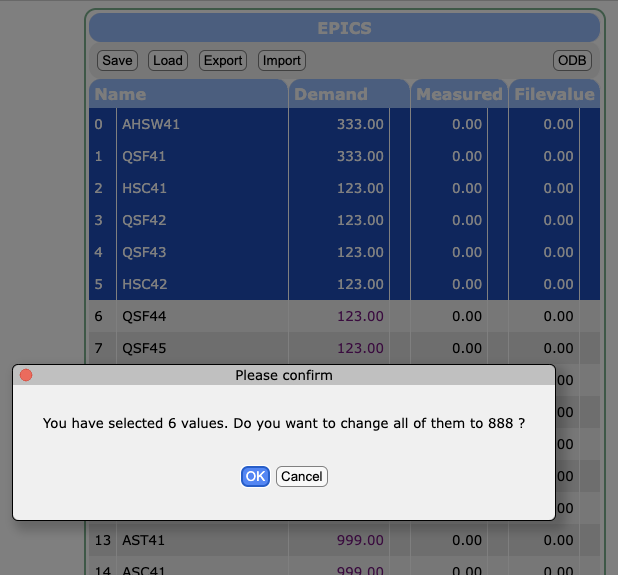

Stefan Ritt | Info | New equipment display | Additional functionality has been implemented in the equipment table:

You can now select several elements by Ctrl- or Shift-clicking their names, then change the value of the first one. After a confirmation dialog, all selected variables are set to the new value. This way one can very easily set all values to zero, etc.

Stefan |

| Attachment 1: Screenshot_2023-10-09_at_21.56.25.png

|

|

|

2645

|

07 Dec 2023 |

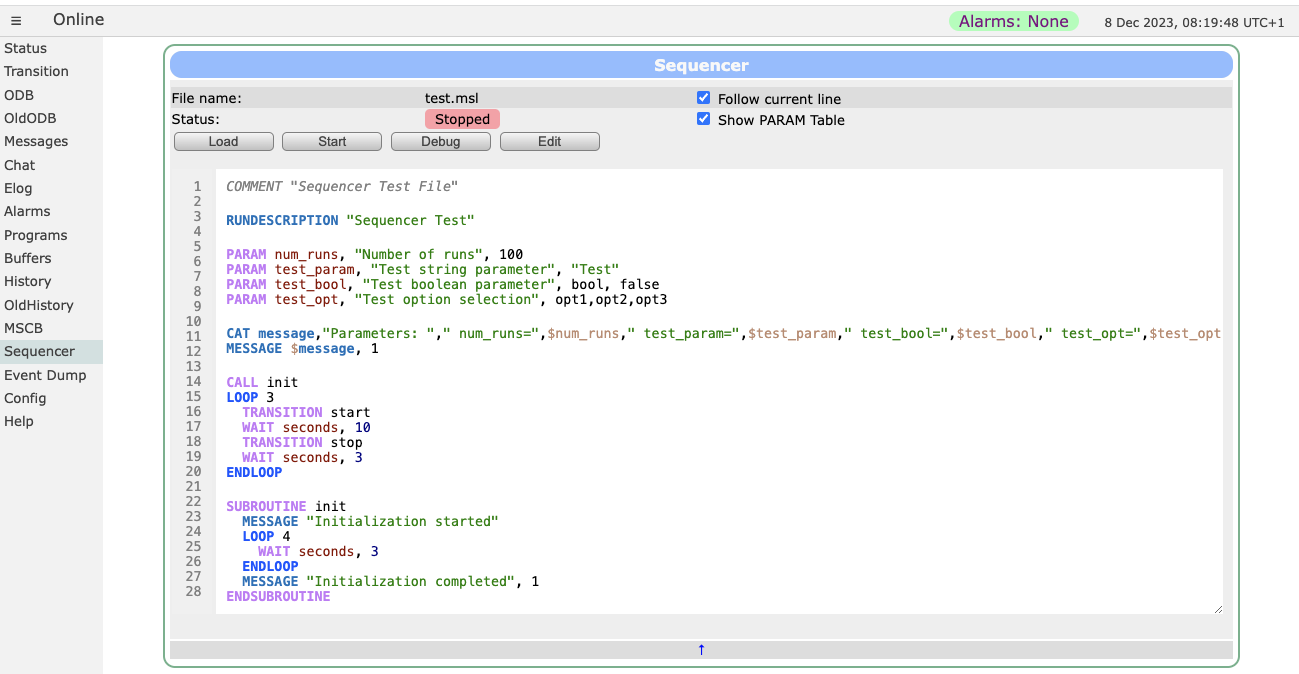

Stefan Ritt | Info | Midas Holiday Update | Dear beloved MIDAS users,

I'm happy to announce a "holiday update" for MIDAS. Zaher from PSI spent countless hours introducing syntax highlighting in the midas script editor. In addition, there are other new features like a cleaner user interface, the option to see all variables also in non-debug mode, and more. Have a look at the picture below, isn't it beginning to look a lot like Christmas?

We have tested this quite a bit and went through many iterations, but there is no guarantee that it's flawless. So please report any issue here.

I wish you all a happy holiday season,

Stefan |

| Attachment 1: Screenshot_2023-12-08_at_08.19.48.png

|

|

|

2648

|

10 Dec 2023 |

Andreas Suter | Info | Midas Holiday Update | Hi Stefan and Zaher,

there is a problem with the new sequencer interface for midas.

If I understand the msequencer code correctly:

Under '/Sequencer/State/Path' one can define the path from which the msequencer reads the files, where it generates the xml, etc.

However, the new javascript code reads/writes the files in '<exp>/userfiles/sequencer/'.

If the path in the ODB is different from '<exp>/userfiles/sequencer/', this leads to rather unexpected behavior. If '<exp>/userfiles/sequencer/' is the place where things should go, the ODB entry of the msequencer and the internal handling should probably be adapted, no?

Andreas |

|

2649

|

10 Dec 2023 |

Stefan Ritt | Info | Midas Holiday Update | > If I understand the msequencer code correctly:

> Under '/Sequencer/State/Path' the path can be defined from where the msequencer gets the files, generates the xml, etc.

> However, the new javascript code reads/writes the files to '<exp>/userfiles/sequencer/'

>

> If the path in the ODB is different to '<exp>/userfiles/sequencer/', it leads to quite some unexpected behavior. If '<exp>/userfiles/sequencer/' is the place where things should go, the ODB entry of the msequencer and the internal handling should probably adopted, no?

Indeed there is a change in philosophy. Previously, /Sequencer/State/Path could point anywhere in the file system. This was considered a security problem, since one could, for example, access system files under /etc via the midas interface. When the new file API was introduced recently, it was therefore decided that all files accessible remotely should reside under <exp>/userfiles. If an experiment needs files outside of that directory, it can define a symbolic link, but that is then the responsibility of the experiment.

To resolve the issue between the sequencer path and the userfiles, we have different options, and I would like to get some feedback from the community, since *all experiments* have to make that change.

1) Leave things as they are, but explain that everybody should set /Sequencer/State/Path to some subdirectory of <exp>/userfiles/sequencer

2) Drop /Sequencer/State/Path completely and "hard-wire" it to <exp>/userfiles/sequencer

3) Make /Sequencer/State/Path relative to <exp>/userfiles. For example, /Sequencer/State/Path=test would then result in the final directory <exp>/userfiles/sequencer/test

I'm kind of tempted to go with 3), since this allows the experiment to define different subdirectories under <exp>/userfiles/sequencer/... depending on the situation of the experiment.

Best,

Stefan |

|

2651

|

10 Dec 2023 |

Andreas Suter | Info | Midas Holiday Update | > 3) Make /Sequencer/State/Path relative to <exp>/userfiles. Like if /Sequencer/State/Path=test would then result to a final directory <exp>/userfiles/sequencer/test

>

> I'm kind of tempted to go with 3), since this allows the experiment to define different subdirectories under <exp>/userfiles/sequencer/... depending on the situation of the experiment.

>

> Best,

> Stefan

For me the option 3) seems the most coherent one.

Andreas |

|

2654

|

12 Dec 2023 |

Stefan Ritt | Info | Midas Holiday Update | > > 3) Make /Sequencer/State/Path relative to <exp>/userfiles. Like if /Sequencer/State/Path=test would then result to a final directory <exp>/userfiles/sequencer/test

> >

> > I'm kind of tempted to go with 3), since this allows the experiment to define different subdirectories under <exp>/userfiles/sequencer/... depending on the situation of the experiment.

> >

> > Best,

> > Stefan

>

> For me the option 3) seems the most coherent one.

> Andreas

Ok, I implemented option 3) above. This means everybody using the midas sequencer has to change /Sequencer/State/Path to an empty string and move the sequencer files to <exp>/userfiles/sequencer as a starting point. I tested most things, including the INCLUDE statements, but there could still be a bug here or there, so please give it a try and report any issue to me.
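The resolution rule of option 3) can be sketched in C as follows. This is not the msequencer code: the helper name `seq_dir()` is hypothetical, and it assumes the final directory is <exp>/userfiles/sequencer/<Path>, with an empty Path meaning the sequencer directory itself. Rejecting absolute paths and ".." components is an added assumption about how such a helper could keep files under userfiles, in the spirit of the security argument above.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: writes <exp_dir>/userfiles/sequencer[/<path>] into out.
 * Returns 0 on success, -1 if `path` is absolute, contains a ".." component,
 * or the result does not fit into `out`. The ".." and "/" checks are
 * assumptions for illustration, not msequencer behavior. */
int seq_dir(const char *exp_dir, const char *path, char *out, size_t out_size)
{
   assert(exp_dir && path && out);

   if (path[0] == '/')
      return -1; /* an absolute path would escape userfiles */

   /* reject any ".." path component */
   const char *p = path;
   while (*p) {
      const char *slash = strchr(p, '/');
      size_t len = slash ? (size_t)(slash - p) : strlen(p);
      if (len == 2 && p[0] == '.' && p[1] == '.')
         return -1;
      p += len;
      if (*p == '/')
         p++;
   }

   int n;
   if (path[0] == '\0')
      n = snprintf(out, out_size, "%s/userfiles/sequencer", exp_dir);
   else
      n = snprintf(out, out_size, "%s/userfiles/sequencer/%s", exp_dir, path);

   if (n < 0 || (size_t)n >= out_size)
      return -1; /* output was truncated */
   return 0;
}
```

With an experiment directory of /home/exp, an empty Path gives /home/exp/userfiles/sequencer and Path=test gives /home/exp/userfiles/sequencer/test.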

Best,

Stefan |

|

2660

|

15 Dec 2023 |

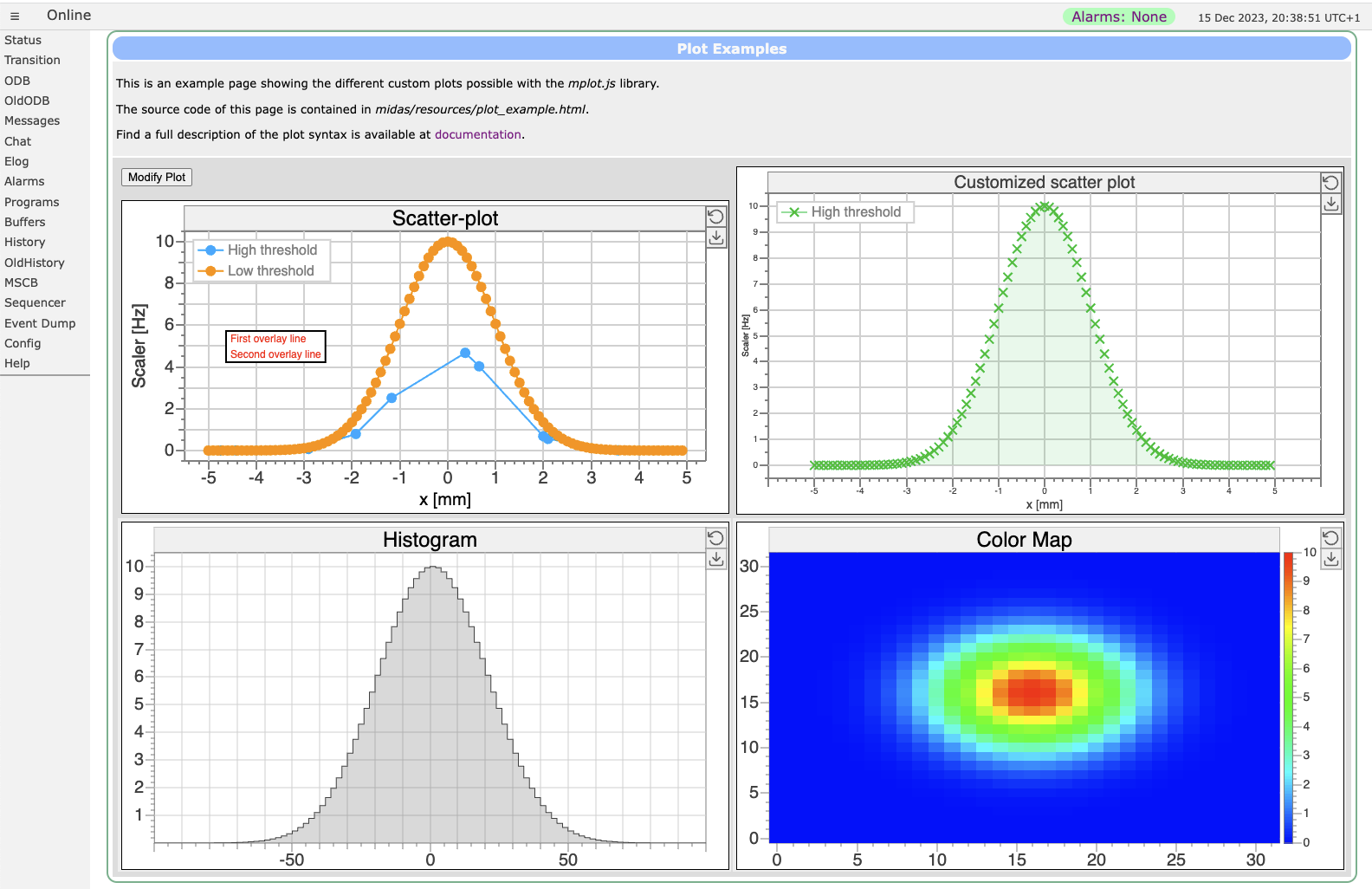

Stefan Ritt | Info | Implementation of custom scatter, histogram and color map plots | Custom plots including scatter, histogram and color map plots have been

implemented. This lets you plot graphs of X/Y data or histogram data stored in the

ODB on a custom page. For some examples and documentation please go to

https://daq00.triumf.ca/MidasWiki/index.php/Custom_plots_with_mplot

Enjoy!

Stefan |

| Attachment 1: plots.png

|

|

|

2707

|

12 Feb 2024 |

Konstantin Olchanski | Info | MIDAS and ROOT 6.30 | Starting around ROOT 6.30, there is a new dependency requirement for nlohmann-json3-dev from https://github.com/nlohmann/json.

If you use an Ubuntu-22 ROOT binary kit from root.cern.ch, the MIDAS build will bomb with the error: Could not find a package configuration file provided by "nlohmann_json"

Per https://root.cern/install/dependencies/ install it:

apt install nlohmann-json3-dev

After this MIDAS builds ok.

K.O. |

|

2712

|

14 Feb 2024 |

Konstantin Olchanski | Info | bitbucket permissions | I pushed some buttons in bitbucket user groups and permissions to make it happy

wrt recent changes.

The intended configuration is this:

- two user groups: admins and developers

- admins have full control over the workspace, project and repositories ("Admin" permission)

- developers have push permission for all repositories ("Write" permission), but not the "create repository" permission, which is limited to admins.

- there seems to be a quirk: admins also need to be in the developers group or some things do not work (like "run pipeline", which set me off into doing all this).

- the admins' "Admin" permission is set at the "workspace" level and is inherited down to the project and repository level.

- the developers' "Write" permission is set at the "project" level and is inherited down to the repository level.

- individual repositories in the "MIDAS" project also seem to have explicit (non-inherited) permissions. I think this is redundant and I will probably remove them at some point (not today).

K.O. |

|

2722

|

08 Mar 2024 |

Konstantin Olchanski | Info | MIDAS frontend for WIENER L.V. P.S. and VME crates | Our MIDAS frontend for WIENER power supplies is now available as a standalone git repository.

https://bitbucket.org/ttriumfdaq/fewienerlvps/src/master/

This frontend uses the snmpwalk and snmpset programs to talk to the power supply.

Also included is a simple custom web page to display power supply status and to turn things on and off.

This frontend was originally written for the T2K/ND280 experiment in Japan.

In addition to controlling Wiener low voltage power supplies, it was also used to control the ISEG MPOD high voltage power supplies.

In Japan, ISEG MPOD was (still is) connected to the MicroMegas TPC and is operated in a special "spark counting" mode. This spark counting code is still present in this MIDAS frontend and can be restored with a small amount of work.

K.O. |

|

2731

|

01 Apr 2024 |

Konstantin Olchanski | Info | xz-utils bomb out, compression benchmarks | you may have heard the news of a major problem with the xz-utils project, authors of the popular "xz" file compression tool,

https://nvd.nist.gov/vuln/detail/CVE-2024-3094

the debian bug tracker is interesting reading on this topic, "750 commits or contributions to xz by Jia Tan, who backdoored it",

https://bugs.debian.org/cgi-bin/bugreport.cgi?bug=1068024

and apparently there are problems with the design of the .xz file format, making it vulnerable to single-bit errors and unreliable checksums,

https://www.nongnu.org/lzip/xz_inadequate.html

this moved me to review the status of file compression in MIDAS.

MIDAS does not use or recommend xz compression, and MIDAS programs do not link to the xz and lzma libraries provided by xz-utils.

mlogger has built-in support for:

- gzip-1, enabled by default, as the safest and most bog-standard compression method

- bzip2 and pbzip2, as providing the best compression

- lz4, for high data rate situations where gzip and bzip2 cannot keep up with the data

compression benchmarks on an AMD 7700 CPU (8-core, DDR5 RAM) confirm the usual speed-vs-compression tradeoff:

note: observe how for both lz4 and pbzip2 most of the compression time is the time it takes to read the file from the ZFS cache, around 6 seconds.

note: decompression is much faster than compression: lz4 is fastest, followed by pbzip2 (using about 14 CPUs), then gzip, and bzip2 is slowest (about 10x slower than pbzip2, using 1 CPU).

note: because of its fast decompression speed, gzip remains competitive.

no compression: 6 sec, 270 MiBytes/sec

lz4, pbzip2: about 9 sec (pbzip2 uses 10 CPUs vs 1 CPU for lz4)

gzip -1: 21 sec, 78 MiBytes/sec

bzip2: about 70 sec, 23 MiBytes/sec (same algorithm as pbzip2, but using 1 CPU instead of 10 CPUs)

file sizes:

(vslice) dsdaqdev@dsdaqgw:/zdata/vslice$ ls -lSr test.mid*

-rw-r--r-- 1 dsdaqdev users 483319523 Apr 1 14:06 test.mid.bz2

-rw-r--r-- 1 dsdaqdev users 631575929 Apr 1 14:06 test.mid.gz

-rw-r--r-- 1 dsdaqdev users 1002432717 Apr 1 14:06 test.mid.lz4

-rw-r--r-- 1 dsdaqdev users 1729327169 Apr 1 14:06 test.mid

(vslice) dsdaqdev@dsdaqgw:/zdata/vslice$

actual benchmarks:

(vslice) dsdaqdev@dsdaqgw:/zdata/vslice$ /usr/bin/time cat test.mid > /dev/null

0.00user 6.00system 0:06.00elapsed 99%CPU (0avgtext+0avgdata 1408maxresident)k

(vslice) dsdaqdev@dsdaqgw:/zdata/vslice$ /usr/bin/time gzip -1 -k test.mid

14.70user 6.42system 0:21.14elapsed 99%CPU (0avgtext+0avgdata 1664maxresident)k

(vslice) dsdaqdev@dsdaqgw:/zdata/vslice$ /usr/bin/time lz4 -k -f test.mid

2.90user 6.44system 0:09.39elapsed 99%CPU (0avgtext+0avgdata 7680maxresident)k

(vslice) dsdaqdev@dsdaqgw:/zdata/vslice$ /usr/bin/time bzip2 -k -f test.mid

64.76user 8.81system 1:13.59elapsed 99%CPU (0avgtext+0avgdata 8448maxresident)k

(vslice) dsdaqdev@dsdaqgw:/zdata/vslice$ /usr/bin/time pbzip2 -k -f test.mid

86.76user 15.39system 0:09.07elapsed 1125%CPU (0avgtext+0avgdata 114596maxresident)k

decompression benchmarks:

(vslice) dsdaqdev@dsdaqgw:/zdata/vslice$ /usr/bin/time lz4cat test.mid.lz4 > /dev/null

0.68user 0.23system 0:00.91elapsed 99%CPU (0avgtext+0avgdata 7680maxresident)k

(vslice) dsdaqdev@dsdaqgw:/zdata/vslice$ /usr/bin/time zcat test.mid.gz > /dev/null

6.61user 0.23system 0:06.85elapsed 99%CPU (0avgtext+0avgdata 1408maxresident)k

(vslice) dsdaqdev@dsdaqgw:/zdata/vslice$ /usr/bin/time bzcat test.mid.bz2 > /dev/null

27.99user 1.59system 0:29.58elapsed 99%CPU (0avgtext+0avgdata 4656maxresident)k

(vslice) dsdaqdev@dsdaqgw:/zdata/vslice$ /usr/bin/time pbzip2 -dc test.mid.bz2 > /dev/null

37.32user 0.56system 0:02.75elapsed 1377%CPU (0avgtext+0avgdata 157036maxresident)k
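To make the numbers above easier to compare, this small C sketch recomputes compression ratios and input throughput from the file sizes and elapsed times in the listing (sizes in bytes, times in seconds, 1 MiB = 2^20 bytes). It only does arithmetic on the published measurements; the function names are illustrative.

```c
#include <assert.h>

#define MIB (1024.0 * 1024.0)

/* Uncompressed size of test.mid from the listing above, in bytes. */
static const double raw_bytes = 1729327169.0;

/* Compression ratio: uncompressed size divided by compressed size. */
double compression_ratio(double compressed_bytes)
{
   assert(compressed_bytes > 0);
   return raw_bytes / compressed_bytes;
}

/* Throughput in MiB/s of the uncompressed input processed in `seconds`. */
double throughput_mib_s(double seconds)
{
   assert(seconds > 0);
   return raw_bytes / MIB / seconds;
}
```

With the listed numbers this gives compression ratios of about 3.6 (bzip2), 2.7 (gzip -1) and 1.7 (lz4), and input throughputs of about 275 MiB/s (cat, 6.00 s), 78 MiB/s (gzip -1, 21.14 s), 22 MiB/s (bzip2, 73.59 s), 176 MiB/s (lz4, 9.39 s) and 182 MiB/s (pbzip2, 9.07 s).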

K.O. |

|

2732

|

02 Apr 2024 |

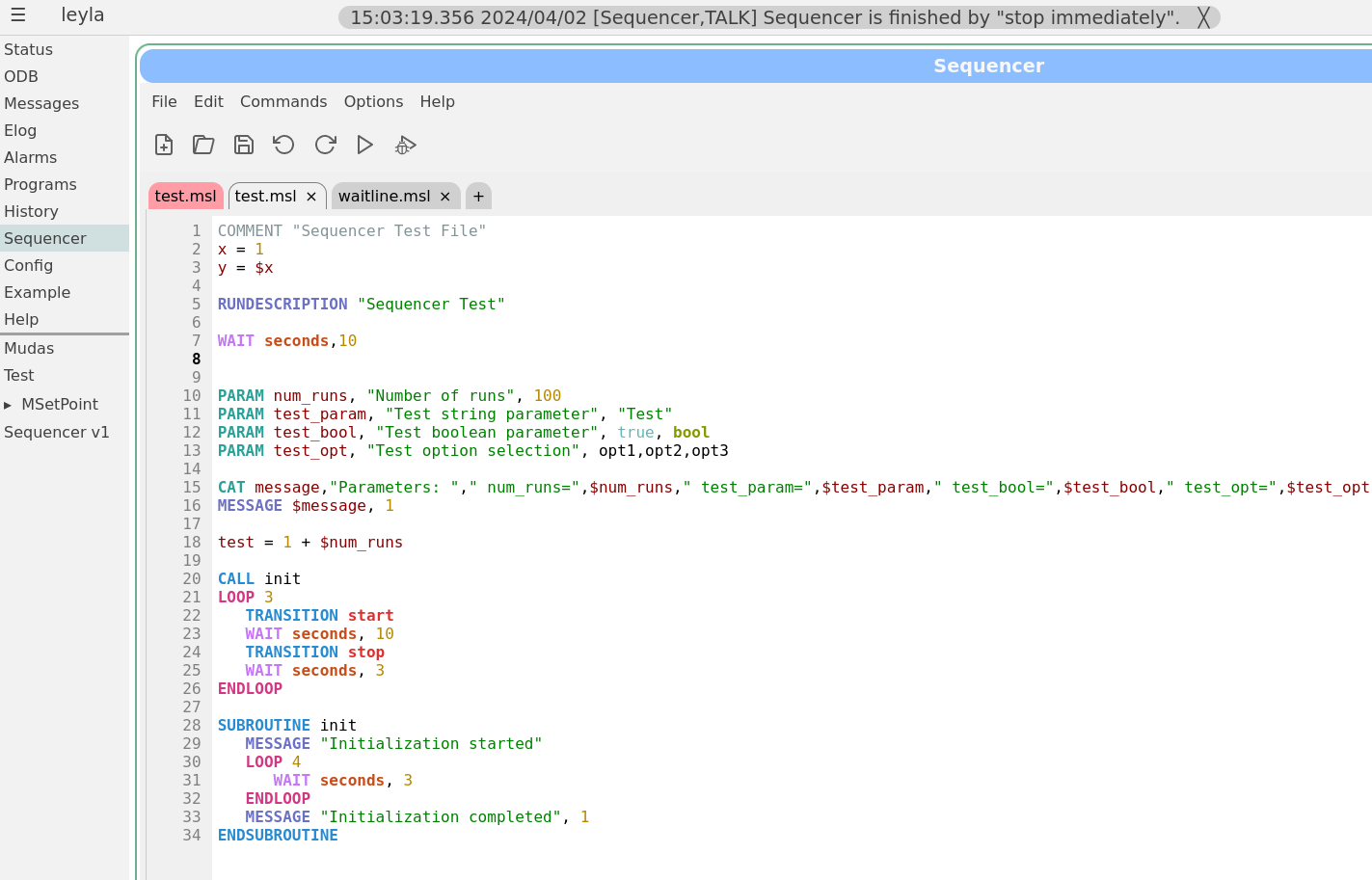

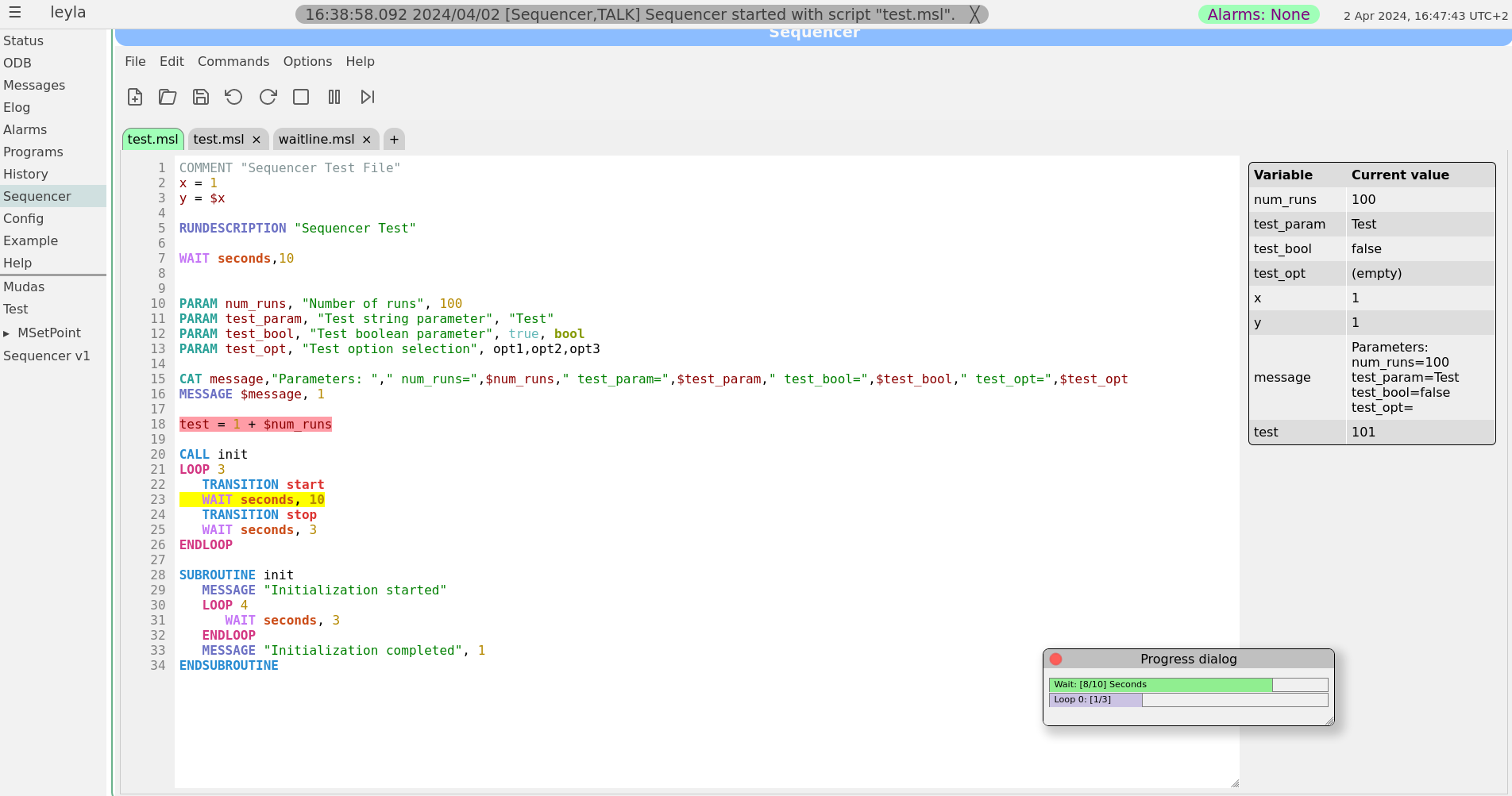

Zaher Salman | Info | Sequencer editor | Dear all,

Stefan and I have been working on improving the sequencer editor to make it look and feel more like a standard editor. This sequencer v2 was finally merged into the develop branch earlier today.

The sequencer page now has a main tab which is used as a "console" to show the loaded sequence and its progress when running. All other tabs are used only for editing scripts. To edit the currently loaded sequence, simply double-click on the editing area of the main tab or load the file in a new tab. A couple of screenshots of the new editor are attached.

Those who would like to stay with the older sequencer version a bit longer can simply copy resources/sequencer_v1.html to resources/sequencer.html. However, this version is not being actively maintained and may become obsolete at some point. Please help us improve the new version instead by reporting bugs and feature requests on bitbucket or here.

Best regards,

Zaher

|

|

2733

|

02 Apr 2024 |

Konstantin Olchanski | Info | Sequencer editor | > Stefan and I have been working on improving the sequencer editor ...

Looks grand! Congratulations on getting it completed. The previous version was my rewrite of the old generated-C pages into html+javascript, nothing to write home about; I even kept the 1990s-style html formatting and styling as much as possible.

K.O. |

|

2735

|

04 Apr 2024 |

Konstantin Olchanski | Info | MIDAS RPC data format | I am not sure I have seen this documented before. MIDAS RPC data format.

1) RPC request (from client to mserver), in rpc_call_encode()

1.1) header:

4 bytes NET_COMMAND.header.routine_id is the RPC routine ID

4 bytes NET_COMMAND.header.param_size is the size of following data, aligned to 8 bytes

1.2) followed by values of RPC_IN parameters:

arg_size is the actual data size

param_size = ALIGN8(arg_size)

for TID_STRING||TID_LINK, arg_size = 1+strlen()

for TID_STRUCT||RPC_FIXARRAY, arg_size is taken from RPC_LIST.param[i].n

for RPC_VARARRAY|RPC_OUT, arg_size is pointed to by the next argument

for RPC_VARARRAY, arg_size is the value of the next argument

otherwise arg_size = rpc_tid_size()

data encoding:

RPC_VARARRAY:

4 bytes of ALIGN8(arg_size)

4 bytes of padding

param_size bytes of data

TID_STRING||TID_LINK:

param_size bytes of string data, zero terminated

otherwise:

param_size bytes of data
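The request layout described in 1.1) and 1.2) can be sketched in C. This is not the MIDAS `rpc_call_encode()` code: the struct and function names are hypothetical, only a TID_STRING|RPC_IN string and an RPC_VARARRAY|RPC_IN block followed by its TID_INT32|RPC_IN size (as note 4.1a requires) are shown, and values are written in host byte order, which is an assumption. ALIGN8 is defined locally to round up to a multiple of 8, as the text uses it.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Round up to a multiple of 8 (local definition for this sketch). */
#define ALIGN8(x) (((x) + 7) & ~(size_t)7)

/* Hypothetical mirror of the 8-byte NET_COMMAND header described above. */
typedef struct {
   uint32_t routine_id; /* RPC routine ID */
   uint32_t param_size; /* size of the following data, a multiple of 8 */
} rpc_header_sketch;

/* TID_STRING|RPC_IN: arg_size = 1+strlen(), zero padded to ALIGN8(arg_size). */
size_t put_string(uint8_t *buf, size_t off, const char *s)
{
   size_t arg_size = strlen(s) + 1;
   size_t param_size = ALIGN8(arg_size);
   memset(buf + off, 0, param_size);
   memcpy(buf + off, s, arg_size);
   return off + param_size;
}

/* RPC_VARARRAY|RPC_IN: 4 bytes ALIGN8(arg_size), 4 bytes padding, data. */
size_t put_vararray(uint8_t *buf, size_t off, const void *data, size_t arg_size)
{
   size_t param_size = ALIGN8(arg_size);
   uint32_t n = (uint32_t)param_size;
   memcpy(buf + off, &n, 4);     /* host byte order (assumption) */
   memset(buf + off + 4, 0, 4);  /* padding */
   memset(buf + off + 8, 0, param_size);
   memcpy(buf + off + 8, data, arg_size);
   return off + 8 + param_size;
}

/* TID_INT32|RPC_IN: rpc_tid_size() is 4, so param_size = ALIGN8(4) = 8. */
size_t put_int32(uint8_t *buf, size_t off, int32_t v)
{
   memset(buf + off, 0, 8);
   memcpy(buf + off, &v, 4);
   return off + 8;
}

/* Encode: header, string, vararray, vararray size. Returns total bytes.
 * The caller must provide a large enough buffer in this sketch. */
size_t encode_request(uint8_t *buf, uint32_t routine_id, const char *name,
                      const void *data, size_t data_size)
{
   size_t off = sizeof(rpc_header_sketch);
   off = put_string(buf, off, name);
   off = put_vararray(buf, off, data, data_size);
   off = put_int32(buf, off, (int32_t)data_size);

   rpc_header_sketch h = { routine_id, (uint32_t)(off - sizeof(rpc_header_sketch)) };
   assert(h.param_size % 8 == 0);
   memcpy(buf, &h, sizeof h);
   return off;
}
```

For example, a string "abc" (arg_size 4) takes 8 bytes, a 5-byte array takes 8 + 8 bytes, and its size parameter takes 8 bytes, so header.param_size is 32 and the whole request is 40 bytes.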

2) RPC dispatch in rpc_execute

for each parameter, a pointer is placed into prpc_param[i]:

RPC_IN: points to the data inside the receive buffer

RPC_OUT: points to the data buffer allocated inside the send buffer

RPC_IN|RPC_OUT: data is copied from the receive buffer to the send buffer, prpc_param[i] is a pointer to the copy in the send buffer

prpc_param[] is passed to the user handler function.

user function reads RPC_IN parameters by using the CSTRING(i), etc macros to dereference prpc_param[i]

user function modifies RPC_IN|RPC_OUT parameters pointed to by prpc_param[i] (directly in the send buffer)

user function places RPC_OUT data directly to the send buffer pointed to by prpc_param[i]

size of RPC_VARARRAY|RPC_OUT data should be written into the next/following parameter.
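A minimal sketch of the handler side, assuming a hypothetical routine with three parameters: 0 = TID_STRING|RPC_IN (input string), 1 = TID_STRING|RPC_OUT (returned string, pointing into the send buffer) and 2 = TID_INT32|RPC_IN (maximum returned string length, as note 4.4 describes). The `CSTRING()` and `CINT()` macros are defined locally to mimic the dereferencing macros mentioned above; the handler name and the success value 1 are placeholders, not copied from midas.h.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Local stand-ins for the dereferencing macros mentioned in the text. */
#define CSTRING(i) ((char *)prpc_param[i])
#define CINT(i) (*(int *)prpc_param[i])

#define SKETCH_SUCCESS 1 /* placeholder for RPC_SUCCESS */

/* Hypothetical handler: reads the RPC_IN string from prpc_param[0] and
 * writes the RPC_OUT string directly where prpc_param[1] points, never
 * exceeding the maximum length passed in prpc_param[2]. */
int handle_greet(void *prpc_param[])
{
   const char *name = CSTRING(0);
   int max_len = CINT(2);
   assert(max_len > 0);
   snprintf(CSTRING(1), (size_t)max_len, "hello, %s", name);
   return SKETCH_SUCCESS;
}
```

In this sketch the handler never allocates memory: the dispatcher owns both buffers, and the handler only follows the pointers it is given.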

3) RPC reply

3.1) header:

4 bytes NET_COMMAND.header.routine_id contains the value returned by the user function (RPC_SUCCESS)

4 bytes NET_COMMAND.header.param_size is the size of following data aligned to 8 bytes

3.2) followed by data for RPC_OUT parameters:

data sizes and encodings are the same as for RPC_IN parameters.

for variable-size RPC_OUT parameters, space is allocated in the send buffer according to the maximum data size that the user code expects to receive:

RPC_VARARRAY||TID_STRING: max_size is taken from the first 4 bytes of the *next* parameter

otherwise: max_size is the same as arg_size and param_size.

when encoding and sending RPC_VARARRAY data, the actual data size is taken from the next parameter, which is expected to be TID_INT32|RPC_IN|RPC_OUT.

4) Notes:

4.1) RPC_VARARRAY should always be sent using two parameters:

a) RPC_VARARRAY|RPC_IN is a pointer to the data we are sending; the next parameter must be TID_INT32|RPC_IN containing the data size

b) RPC_VARARRAY|RPC_OUT is a pointer to the buffer for received data; the next parameter must be TID_INT32|RPC_IN|RPC_OUT, which before the call should contain the maximum data size we expect to receive (size of the malloc() buffer), and after the call may contain the actual data size returned

c) RPC_VARARRAY|RPC_IN|RPC_OUT is a pointer to the data we are sending; the next parameter must be TID_INT32|RPC_IN|RPC_OUT containing the maximum data size we expect to receive.

4.2) during dispatching, RPC_VARARRAY|RPC_OUT and TID_STRING|RPC_OUT both have a special 8-byte header preceding the actual data: 4 bytes of maximum data size and 4 bytes of padding. prpc_param[] points to the actual data, and the user does not see this special header.

4.3) when encoding outgoing data, this special 8 byte header is removed from TID_STRING|RPC_OUT parameters using memmove().

4.4) TID_STRING parameters:

TID_STRING|RPC_IN can be sent using one parameter

TID_STRING|RPC_OUT must be sent using two parameters, second parameter should be TID_INT32|RPC_IN to specify maximum returned string length

TID_STRING|RPC_IN|RPC_OUT ditto, but not used anywhere inside MIDAS

4.5) TID_INT32|RPC_VARARRAY does not work and corrupts the following parameters. MIDAS only uses TID_ARRAY|RPC_VARARRAY

4.6) TID_STRING|RPC_IN|RPC_OUT does not seem to work.

4.7) RPC_VARARRAY does not work if there is a preceding TID_STRING|RPC_OUT that returned a short string. memmove() moves data in the send buffer, which makes the prpc_param[] pointers into the send buffer invalid. A subsequent RPC_VARARRAY parameter refers to the now-invalid prpc_param[i] pointer to get param_size and gets the wrong value. MIDAS does not use this sequence of RPC parameters.

4.8) same bug is in the processing of TID_STRING|RPC_OUT parameters, where it refers to invalid prpc_param[i] to get the string length.

K.O. |

|

2738

|

24 Apr 2024 |

Konstantin Olchanski | Info | MIDAS RPC data format | > 4.5) TID_IN32|RPC_VARARRAY does not work, corrupts following parameters. MIDAS only uses TID_ARRAY|RPC_VARARRAY

fixed in commit 0f5436d901a1dfaf6da2b94e2d87f870e3611cf1. TID_ARRAY|RPC_VARARRAY was okay (i.e. db_get_value()); the bug happened only if rpc_tid_size() is not zero.

>

> 4.6) TID_STRING|RPC_IN|RPC_OUT does not seem to work.

>

> 4.7) RPC_VARARRAY does not work is there is preceding TID_STRING|RPC_OUT that returned a short string. memmove() moves stuff in the send buffer,

> this makes prpc_param[] pointers into the send buffer invalid. subsequent RPC_VARARRAY parameter refers to now-invalid prpc_param[i] pointer to

> get param_size and gets the wrong value. MIDAS does not use this sequence of RPC parameters.

>

> 4.8) same bug is in the processing of TID_STRING|RPC_OUT parameters, where it refers to invalid prpc_param[i] to get the string length.

fixed in commits e45de5a8fa81c75e826a6a940f053c0794c962f5 and dc08fe8425c7d7bfea32540592b2c3aec5bead9f

K.O. |

|

2739

|

24 Apr 2024 |

Konstantin Olchanski | Info | MIDAS RPC add support for std::string and std::vector<char> | I now fully understand the MIDAS RPC code; I had to add some debugging printfs, write some test code (odbedit test_rpc), and catch and fix a few bugs.

Fixes for the bugs are now committed.

A small refactor of rpc_execute() should be committed soon; this removes the "goto" in the memory allocation of the output buffer. Stefan's original code used a fixed-size buffer. I later added allocation "as needed", but did not fully understand everything and implemented it as "if buffer too small, make it bigger, goto start over again".

After that, I can implement support for std::string and std::vector<char>.

The way it looks right now, the on-the-wire data format is flexible enough to make this change backward-compatible and allow MIDAS programs built with old MIDAS to continue connecting to the new MIDAS and vice versa.

MIDAS RPC support for std::string should let us improve security by removing even more uses of fixed-size string buffers.

Support for std::vector<char> will allow removal of the last places where MAX_EVENT_SIZE is used and simplify memory allocation in other "give me data" RPC calls, like RPC_JRPC and RPC_BRPC.

K.O. |

|

2766

|

14 May 2024 |

Konstantin Olchanski | Info | ROOT v6.30.6 requires libtbb-dev | root_v6.30.06.Linux-ubuntu22.04-x86_64-gcc11.4 requires the libtbb-dev package.

This is a new requirement and it is not listed on the ROOT dependencies page (I left a note on the ROOT forum, hopefully it will be fixed quickly). https://root.cern/install/dependencies/

The symptom is that when starting ROOT, you get the error:

cling::DynamicLibraryManager::loadLibrary(): libtbb.so.12: cannot open shared object file: No such file or directory

and things do not work.

The fix is:

apt install libtbb-dev

K.O. |

|

2780

|

24 May 2024 |

Konstantin Olchanski | Info | added ubuntu 22 arm64 cross-compilation | Ubuntu 22 has almost everything necessary to cross-build arm64 MIDAS frontends:

# apt install g++-12-aarch64-linux-gnu gcc-12-aarch64-linux-gnu-base libstdc++-12-dev-arm64-cross

$ aarch64-linux-gnu-gcc-12 -o ttcp.aarch64 ttcp.c -static

to cross-build MIDAS:

make arm64_remoteonly -j

run programs from $MIDASSYS/linux-arm64-remoteonly/bin

link frontends to libraries in $MIDASSYS/linux-arm64-remoteonly/lib

Ubuntu 22 does not provide an arm64 libz.a; as a workaround, I built a fake one (we do not have HAVE_ZLIB anymore...). Alternatively, you can link to libz.a from your arm64 linux image, assuming the include/zlib.h files are compatible.

K.O. |

|