| ID | Date | Author | Topic | Subject |

| 2595 | 08 Sep 2023 | Nick Hastings | Forum | Hide start and stop buttons |

The wiki documents an ODB variable that hides the Start and Stop buttons on the mhttpd status page:

https://daq00.triumf.ca/MidasWiki/index.php//Experiment_ODB_tree#Start-Stop_Buttons

However, mhttpd reports that this option is obsolete. See commit:

https://bitbucket.org/tmidas/midas/commits/2366eefc6a216dc45154bc4594e329420500dcf7

That commit also made mhttpd report that the "Pause-Resume Buttons" variable is obsolete; however, that code seems to have since been removed.

Is there now some other mechanism to hide the start and stop buttons?

Note that this is for a pure slow control system that does not take runs. |

| 2596 | 08 Sep 2023 | Nick Hastings | Forum | Hide start and stop buttons |

> Is there now some other mechanism to hide the start and stop buttons?

> Note that this is for a pure slow control system that does not take runs.

Just wanted to add that I realize this can be done by copying status.html and/or midas.css to the experiment directory and then modifying them, but I wonder if there is some other preferred way. |

| 2600 | 13 Sep 2023 | Nick Hastings | Forum | Hide start and stop buttons |

Hi Stefan,

> Indeed the ODB settings are obsolete.

I just applied for an account for the wiki.

I'll try to add a note regarding this change.

> Now that the status page is fully dynamic

> (JavaScript), it's much more powerful to modify the status.html page directly. You

> can not only hide the buttons, but also remove the run numbers, the running time,

> and so on. This is much more flexible than steering things through the ODB.

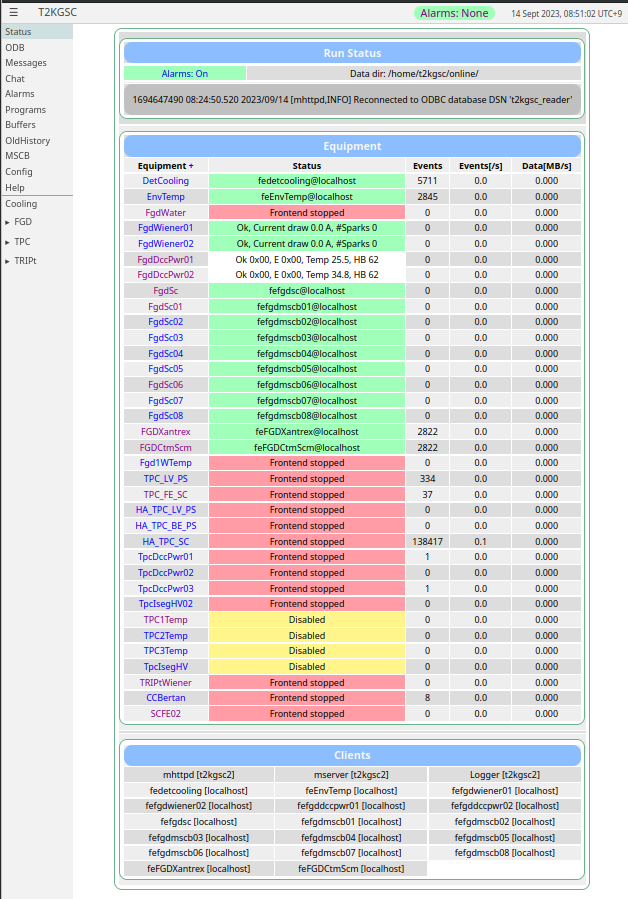

Very true. Currently I have copied resources/midas.css into the experiment directory and appended:

    #runNumberCell { display: none;}
    #runStatusStartTime { display: none;}
    #runStatusStopTime { display: none;}
    #runStatusSequencer { display: none;}
    #logChannel { display: none;}

See screenshot attached. :-)

But it feels a little clunky to copy the whole file just to add five lines.

It might be more elegant if status.html looked for a user css file in addition

to the default ones.

> If there is a general need for that, I can draft a "non-run" based status page, but

> it's a bit hard to make a one-fits-all. Like some might even remove the logging

> channels and the clients, but add certain things like if their slow control front-

> end is running etc.

The logging channels are easily removed with the CSS (see attachment), but it might be nice if the "Run Status" table title were also configurable via CSS. For this slow control system I'd probably change it to something like "GSC Status". Again, this is a minor thing; I could trivially do this by copying resources/status.html to the experiment directory and editing it.

Lots of fun new stuff migrating from circa 2012 midas to midas-2022-05-c :-)

Cheers,

Nick. |

| Attachment 1: screenshot-20230914-085054.png |

| 2603 | 14 Sep 2023 | Nick Hastings | Forum | Hide start and stop buttons |

Hi

> > > Indeed the ODB settings are obsolete.

> >

> > I just applied for an account for the wiki.

> > I'll try add a note regarding this change.

>

> Please coordinate with Ben Smith at TRIUMF <bsmith@triumf.ca>, who coordinates the documentation.

I will tread lightly.

> I would not go to change the CSS file. You only can hide some tables. But in a while I'm sure you

> want to ADD new things, which you only can do by editing the status.html file. You don't have to

> change midas/resources/status.html, but can make your own "custom status", name it differently, and

> link /Custom/Default in the ODB to it. This way it does not get overwritten if you pull midas.

We have *many* custom pages. The submenus on the status page:

▸ FGD

▸ TPC

▸ TRIPt

hide custom pages with all sorts of good stuff.

> > The logging channels are easily removed with the css (see attachment), but it might be

> > nice if the string "Run Status" table title was also configurable by css. For this

> > slow control system I'd probably change it to something like "GSC Status". Again

> > this is a minor thing, I could trivially do this by copying the resources/status.html

> > to the experiment directory and editing it.

>

> See above. I agree that the status.html file is a bit complicated and not so easy to understand

> as the CSS file, but you can do much more by editing it.

I may end up doing this since the events and data columns do not provide particularly

useful information in this instance. But for now, the css route seems like a quick and

fairly clean way to remove irrelevant stuff from a prominent place at the top of the page.

> > Lots of fun new stuff migrating from circa 2012 midas to midas-2022-05-c :-)

>

> I always advise people to frequently pull, they benefit from the newest features and avoid the

> huge amount of work to migrate from a 10 year old version.

The long delay was not my choice. The group responsible for the system departed in 2018 and was not replaced by the experiment management. The lack of personnel and expertise resulted in an "if it's not broken then don't fix it" situation. Eventually, the need to update the PCs/OSs and the imminent introduction of new sub-detectors led people to agree to the update.

Cheers,

Nick. |

| 2684 | 23 Jan 2024 | Nick Hastings | Bug Report | Warnings about ODB keys that haven't been touched for 10+ years |

Hi,

> What's the best way to make these messages go away?

1.

> - Change the logic in db_validate_and_repair_key_wlocked() to not worry if keys are 10+ years old?

2.

> - Write a script to "touch" all the old keys so they've been modified recently?

3.

> - Something else?

I wondered about this just under a year ago, and Konstantin forwarded my query here:

https://daq00.triumf.ca/elog-midas/Midas/2470

I am now of the opinion that option 2 is not a good approach, since it removes potentially useful information.

I think some version of option 1 is the correct choice. Whatever the fix is, I think it should not care that the timestamps recording when variables were set are "old" (or at least this should be user-configurable via some ODB setting).
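A minimal C++ sketch of what a user-configurable version of option 1 might look like. The function name and the idea of passing the threshold as a plain argument are hypothetical, not the midas API; in a real fix the threshold would presumably be read from an ODB setting whose name is not specified here. A threshold of zero or less switches the age check off, so old timestamps are accepted as valid.

```cpp
#include <ctime>

// Hypothetical policy for an "old key" warning during ODB validation.
// max_age_seconds is assumed to come from some user-configurable ODB
// setting; a value <= 0 disables the age check entirely.
bool should_warn_about_key_age(std::time_t last_written,
                               std::time_t now,
                               double max_age_seconds)
{
    if (max_age_seconds <= 0.0) {
        return false;  // age check disabled: old timestamps are fine
    }
    // difftime() returns (now - last_written) in seconds as a double.
    return std::difftime(now, last_written) > max_age_seconds;
}
```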

Nick. |

| 2785 | 04 Jul 2024 | Nick Hastings | Forum | mfe.cxx with RO_STOPPED and EQ_POLLED |

Dear Midas experts,

I noticed that a check was added to mfe.cxx in 1961af0d6:

+ /* check for consistent common settings */

+ if ((eq_info->read_on & RO_STOPPED) &&

+ (eq_info->eq_type == EQ_POLLED ||

+ eq_info->eq_type == EQ_INTERRUPT ||

+ eq_info->eq_type == EQ_MULTITHREAD ||

+ eq_info->eq_type == EQ_USER)) {

+ cm_msg(MERROR, "register_equipment", "Events \"%s\" cannot be read when run is stopped (RO_STOPPED flag)", equipment[idx].name);

+ return 0;

+ }

This commit was by Stefan in May 2022.

A commit a few days later, 28d9c96bd, removed the "return 0;" and updated the error message to:

"Equipment \"%s\" contains RO_STOPPED or RO_ALWAYS. This can lead to undesired side-effect and should be removed."

So such FEs can run, but an error is still reported at startup. The documentation at https://daq00.triumf.ca/MidasWiki/index.php/ReadOn_Flags states that for RO_STOPPED, "Readout Occurs" "Before stopping run".

This seems to indicate that removing the RO_STOPPED bit from an SC FE would just mean that one additional read no longer happens just before a run stops. However, reading scheduler() in mfe.cxx, I see in the main loop:

    if (run_state == STATE_STOPPED && (eq_info->read_on & RO_STOPPED) == 0)
        continue;

So it seems to me that an EQ_PERIODIC equipment needs RO_STOPPED to be set; otherwise it will not read out data while there is no DAQ run.
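To make the tension concrete, here is a small standalone C++ model of the two pieces of logic quoted above. The enums and flag values are hypothetical stand-ins, not the definitions from midas.h, and only the quoted scheduler() line is modeled, not the whole loop. The second function reproduces the check as it appeared in 1961af0d6; the condition used after 28d9c96bd is not quoted here and is not modeled.

```cpp
// Hypothetical stand-ins: NOT the midas.h values; only the bitwise
// structure of the tests matters for this illustration.
enum class RunState { Stopped, Running };
enum class EqType { Periodic, Polled, Interrupt, Multithread, User };

constexpr int kRoRunning = 1 << 0;  // stand-in for RO_RUNNING
constexpr int kRoStopped = 1 << 1;  // stand-in for RO_STOPPED

// Mirrors only the quoted scheduler() line: while the run is stopped,
// equipment without RO_STOPPED is skipped.
bool scheduler_reads(RunState state, int read_on)
{
    if (state == RunState::Stopped && (read_on & kRoStopped) == 0) {
        return false;
    }
    return true;
}

// Mirrors the register_equipment() check added in 1961af0d6.
// Note that the periodic equipment type is not in the list.
bool flagged_by_register_check(EqType type, int read_on)
{
    return (read_on & kRoStopped) != 0 &&
           (type == EqType::Polled || type == EqType::Interrupt ||
            type == EqType::Multithread || type == EqType::User);
}
```

In this model a periodic equipment is only read while the run is stopped if RO_STOPPED is set, and the check as first added would not have flagged the periodic type at all, which would fit the guess that it was written with DAQ FEs in mind.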

Can someone explain the purpose of this check and error message? Perhaps it

was put in place with only DAQ FEs, not SC FEs in mind? And should the

documentation in the wiki actually be "s/Before stopping run/While run is stopped/"?

Thanks,

Nick. |

| 2786 | 04 Jul 2024 | Nick Hastings | Bug Report | Fail to build in the examples/experiment |

I think this may only be an issue on the development branch.

Can you confirm that that is what you are using?

If so, I suggest you try the most recent stable tag 2022-05-c.

> Dear experts,

> I am a new user of MIDAS. I try to follow the instruction from

> https://daq00.triumf.ca/MidasWiki/index.php/Quickstart_Linux

> to install MIDAS in Fedora 39.

>

> When I try to have a try in the section of "Clients run on Localhost only"

> https://daq00.triumf.ca/MidasWiki/index.php/Quickstart_Linux#Clients_run_on_Localhost_only

>

> I get the error of "undefined reference to" several variables in the mfe.cxx. For example the variable "max_event_size_frag". May I know any idea about this issue? Thank you.

>

>

> Best,

> Terry |

| Draft | 04 Jul 2024 | Nick Hastings | Forum | mfe.cxx with RO_STOPPED and EQ_POLLED |

I just discovered https://bitbucket.org/tmidas/midas/issues/338/mfec-ro_stopped-is-now-forbidden

> Dear Midas experts,

>

> I noticed that a check was added to mfe.cxx in 1961af0d6:

>

> + /* check for consistent common settings */

> + if ((eq_info->read_on & RO_STOPPED) &&

> + (eq_info->eq_type == EQ_POLLED ||

> + eq_info->eq_type == EQ_INTERRUPT ||

> + eq_info->eq_type == EQ_MULTITHREAD ||

> + eq_info->eq_type == EQ_USER)) {

> + cm_msg(MERROR, "register_equipment", "Events \"%s\" cannot be read when run is stopped (RO_STOPPED flag)", equipment[idx].name);

> + return 0;

> + }

>

> This commit was by Stefan in May 2022.

>

> A commit few days later, 28d9c96bd, removed the "return 0;", and updated the

> error message to:

>

> "Equipment \"%s\" contains RO_STOPPED or RO_ALWAYS. This can lead to undesired side-effect and should be removed."

>

> So such FEs can run but there is still an error at start up. The

> documentation at https://daq00.triumf.ca/MidasWiki/index.php/ReadOn_Flags

> states with RO_STOPPED "Readout Occurs" "Before stopping run".

> Which seems to indicate that the removing the RO_STOPPED bit from a SC FE

> would just result in an additional read not happening just prior to a run

> stop. However reading scheduler() in mfe.cxx I see in the the main loop:

>

> if (run_state == STATE_STOPPED && (eq_info->read_on & RO_STOPPED) == 0)

> continue;

>

> So it seems to me that the a EQ_PERIODIC equipment needs RO_STOPPED to be set

> otherwise it will not read out data while there is no DAQ run.

>

> Can someone explain the purpose of this check and error message? Perhaps it

> was put in place with only DAQ FEs, not SC FEs in mind? And should the

> documentation in the wiki actually be "s/Before stopping run/While run is stopped/"?

>

> Thanks,

>

> Nick. |

| 2904 | 26 Nov 2024 | Nick Hastings | Bug Report | TMFE::Sleep() errors |

Hello,

I've noticed that SC FEs that use the TMFE class with midas-2022-05-c often report errors when calling TMFE::Sleep().

The error is:

[tmfe.cxx:1033:TMFE::Sleep,ERROR] select() returned -1, errno 22 (Invalid argument).

This seems to happen in two different ways:

1. The error is reported repeatedly.

2. Occasional single errors are reported.

When the first case occurs, we typically restart the FE to "solve" the problem. Case 2 is typically ignored.

The code in question is:

    void TMFE::Sleep(double time)
    {
       int status;
       fd_set fdset;
       struct timeval timeout;

       FD_ZERO(&fdset);

       timeout.tv_sec = time;
       timeout.tv_usec = (time-timeout.tv_sec)*1000000.0;

       while (1) {
          status = select(1, &fdset, NULL, NULL, &timeout);
    #ifdef EINTR
          if (status < 0 && errno == EINTR) {
             continue;
          }
    #endif
          break;
       }

       if (status < 0) {
          TMFE::Instance()->Msg(MERROR, "TMFE::Sleep", "select() returned %d, errno %d (%s)", status, errno, strerror(errno));
       }
    }

So it looks like either the file descriptor set or the timeval struct must have a problem.

From some reading it seems that invalid timeval structs are often caused by one or both

of tv_sec or tv_usec not being set. In the code above we can see that both appear to be

correctly set initially.

From the select() man page I see:

RETURN VALUE

On success, select() and pselect() return the number of file descriptors contained in

the three returned descriptor sets (that is, the total number of bits that are set in

readfds, writefds, exceptfds). The return value may be zero if the timeout expired

before any file descriptors became ready.

On error, -1 is returned, and errno is set to indicate the error; the file descriptor

sets are unmodified, and timeout becomes undefined.

The second paragraph quoted from the man page above suggests to me that the timeout may need to be reset inside the if block, e.g.:

    if (status < 0 && errno == EINTR) {
       timeout.tv_sec = time;
       timeout.tv_usec = (time-timeout.tv_sec)*1000000.0;
       continue;
    }
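One possible way to make the loop more robust, sketched in C++ under some assumptions: the timeout is rebuilt from a steady_clock deadline before every select() call, so it never relies on contents left behind by a failed call, and negative or NaN durations are clamped to zero. A negative `time` argument is only a guess at the cause of errno 22; the Linux select(2) man page lists an invalid timeout value as one source of EINVAL. The helper names are hypothetical, and the sketch swaps the original empty fd_set with nfds = 1 for the common select()-as-sleep idiom of nfds = 0 and null descriptor sets.

```cpp
#include <cerrno>
#include <chrono>
#include <cmath>
#include <ctime>
#include <sys/select.h>
#include <sys/time.h>
#include <sys/types.h>

// Hypothetical helper: turn a duration in seconds into a timeval that
// select() accepts. Negative and NaN durations become zero, and tv_usec is
// kept in [0, 999999] even when rounding would produce 1000000.
// Assumes durations far below the range of time_t.
struct timeval make_timeout(double seconds)
{
    struct timeval tv;
    if (!(seconds > 0.0)) {  // false for negative values and for NaN
        tv.tv_sec = 0;
        tv.tv_usec = 0;
        return tv;
    }
    double whole = std::floor(seconds);
    long usec = std::lround((seconds - whole) * 1e6);
    if (usec >= 1000000) {   // rounding carried into the next second
        whole += 1.0;
        usec -= 1000000;
    }
    tv.tv_sec = static_cast<time_t>(whole);
    tv.tv_usec = static_cast<suseconds_t>(usec);
    return tv;
}

// Hypothetical select()-based sleep: the timeout is rebuilt from the time
// remaining before every call, because its contents are undefined after
// select() fails.
int sleep_with_select(double seconds)
{
    using Clock = std::chrono::steady_clock;
    const double requested = seconds > 0.0 ? seconds : 0.0;  // NaN -> 0
    const auto deadline = Clock::now() + std::chrono::duration<double>(requested);
    while (true) {
        const double left =
            std::chrono::duration<double>(deadline - Clock::now()).count();
        struct timeval timeout = make_timeout(left);
        const int status = select(0, nullptr, nullptr, nullptr, &timeout);
        if (status < 0 && errno == EINTR) {
            continue;  // interrupted by a signal: sleep for what is left
        }
        return status;  // 0 after the timeout, -1 with errno set otherwise
    }
}
```

Sleeping for the remaining time after EINTR, rather than restarting the full interval, keeps the total sleep close to what the caller asked for.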

Please note that I've only just briefly looked at this and was hoping someone more

familiar with using select() as a way to sleep() might be better able to understand

what is happening.

I also wonder whether, now that midas requires a stricter/newer C++ standard, there may be a more straightforward method to sleep that is sufficiently robust and portable.
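For comparison, a sketch of the standard-library route (C++11 and later) using std::this_thread::sleep_for. The function name is hypothetical. Common implementations handle signal interruptions internally (libstdc++, for example, retries nanosleep() after EINTR), so no explicit EINTR loop is needed, although the call may oversleep slightly.

```cpp
#include <chrono>
#include <thread>

// Hypothetical standard-library replacement for a select()-based sleep.
// Negative and NaN durations return immediately.
// Assumes durations far below the range of std::chrono::microseconds.
void sleep_seconds(double seconds)
{
    if (!(seconds > 0.0)) {
        return;
    }
    // Truncate to whole microseconds, the same resolution as tv_usec.
    const auto us = std::chrono::duration_cast<std::chrono::microseconds>(
        std::chrono::duration<double>(seconds));
    std::this_thread::sleep_for(us);
}
```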

Thanks,

Nick. |

| 3102 | 01 Oct 2025 | Nick Hastings | Forum | struct size mismatch of alarms |

> So I started our DAQ with an updated midas, after ca. 6 months+.

It would be worthwhile mentioning the git commit hash or tag you are using.

> No issues except all FEs complaining about the Alarm ODB structure.

> * I adapted to the new structure ( trigger count & trigger count required )

> * restarted fe's

> * recompiled

>

> 18:17:40.015 2025/09/30 [EPICS Frontend,INFO] Fixing ODB "/Alarms/Alarms/logger"

> struct size mismatch (expected 452, odb size 460)

>

> 18:17:40.009 2025/09/30 [SC Frontend,INFO] Fixing ODB "/Alarms/Alarms/logger"

> struct size mismatch (expected 460, odb size 452)

This seems to be https://daq00.triumf.ca/elog-midas/Midas/2980

> how do I get the FEs + ODB back in line here?

Recompile all frontends against the new midas.

Nick. |

| 3103 | 01 Oct 2025 | Nick Hastings | Forum | struct size mismatch of alarms |

Just to be clear: it seems that your "EPICS Frontend" has either not yet been recompiled against the new midas or an old binary is still being run, whereas "SC Frontend" is using the new midas.
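Why the two frontends keep rewriting each other's record can be illustrated with a toy example. The structs below are hypothetical stand-ins, not the real midas alarm layout: they only assume that the 8-byte difference (460 - 452) comes from the two counters mentioned below, stored as 4-byte integers. Because sizeof() is fixed when each frontend is compiled, a binary built against the old headers will keep "fixing" the ODB record back to the old size.

```cpp
#include <cstdint>

// Hypothetical stand-in for the record layout an old binary was built with.
struct AlarmRecordOld {
    char existing_fields[452];
};

// Hypothetical stand-in for the new layout: same fields plus two counters
// (names and 4-byte type assumed from "trigger count & trigger count required").
struct AlarmRecordNew {
    char existing_fields[452];
    std::int32_t trigger_count;
    std::int32_t trigger_count_required;
};

// These sizes are baked into each binary at compile time.
static_assert(sizeof(AlarmRecordOld) == 452, "old layout size");
static_assert(sizeof(AlarmRecordNew) == 460, "new layout size");
```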

> > So I started our DAQ with an updated midas, after ca. 6 months+.

>

> Would be worthwhile mentioning the git commit hash or tag you are using.

>

> > No issues except all FEs complaining about the Alarm ODB structure.

> > * I adapted to the new structure ( trigger count & trigger count required )

> > * restarted fe's

> > * recompiled

> >

> > 18:17:40.015 2025/09/30 [EPICS Frontend,INFO] Fixing ODB "/Alarms/Alarms/logger"

> > struct size mismatch (expected 452, odb size 460)

> >

> > 18:17:40.009 2025/09/30 [SC Frontend,INFO] Fixing ODB "/Alarms/Alarms/logger"

> > struct size mismatch (expected 460, odb size 452)

>

> This seems to be https://daq00.triumf.ca/elog-midas/Midas/2980

>

> > how do I get the FEs + ODB back in line here?

>

> Recompile all frontends against new midas.

>

> Nick. |

| 3121 | 19 Nov 2025 | Nick Hastings | Forum | Control external process from inside MIDAS |

Hi,

What you describe is exactly how I normally run mhttpd, mlogger, mserver and some other custom frontend programs, e.g.:

    [local:T2KGSC:Running]/>ls /programs/Logger/
    Required                        y
    Watchdog timeout                100000
    Check interval                  180000
    Start command                   systemctl --user start mlogger
    Auto start                      n
    Auto stop                       n
    Auto restart                    n
    Alarm class                     AlarmNotify
    First failed                    0

The only exception is your last point about stdout and stderr

being midas messages. I use journalctl to see these.

Cheers,

Nick.

> I want to control (start / stop / monitor its stdout and stderr) an external process (systemd / EPICS IOC shell script) from within MIDAS.

>

> In order to make this as convenient as possible for the user, I want the process to behave just like any other MIDAS client:

> - I can start it from the ODB as a program

> - The process gets regularly polled from MIDAS to see whether it is still running

> - I can stop the process from the ODB like any other program

> - Optional, but highly appreciated: Its stdout and stderr should be a MIDAS message.

>

> Did anyone already solve a similar problem?

>

> Best regards

> Stefan |

| 3127 | 20 Nov 2025 | Nick Hastings | Forum | Control external process from inside MIDAS |

Hi,

> Nick. Regarding the messages: Zaher showed me that it is possible to simply place

> a custom log file generated by the systemd next to midas.log - then it shows up

> next to the "midas" tab in "Messages".

Interesting. I'm not familiar with that feature. Do you have a link to the documentation?

> One follow-up question: Is it possible to use the systemctl status for the

> "Running on host" column? Or does this even happen automatically?

On the Programs page, that column is populated from the ODB key /System/Clients/<PID>/Host, so no. However, there is nothing stopping you from writing your own version of programs.html to show whatever you want. For example, I have a custom programs page that includes columns to enable/disable and to reset watchdog alarms.

Cheers,

Nick. |

| 3128 | 20 Nov 2025 | Nick Hastings | Info | switch midas to c++17 |

> I notice the cmake does not actually pass "-std=c++17" to the c++ compiler, and on U-20, it is likely

> the default c++14 is used. cmake always does the wrong thing and this will need to be fixed later.

Does adding

    set(CMAKE_CXX_STANDARD 17)
    set(CMAKE_CXX_STANDARD_REQUIRED ON)

get it to do the right thing?
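Independently of CMake, whether the compiler really runs in C++17 mode can be checked with a compile-time guard placed in any source file. This is a minimal sketch, not something already in midas; note that MSVC reports 199711L for __cplusplus unless /Zc:__cplusplus is given.

```cpp
// Fails the build if this translation unit is not compiled as C++17 or
// later, whatever flags the build system claims to pass.
static_assert(__cplusplus >= 201703L,
              "C++17 required: check that -std=c++17 reaches the compiler");

// Reports the language version in use, e.g. 201703L for C++17
// or 202002L for C++20.
constexpr long compiled_cplusplus()
{
    return __cplusplus;
}
```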

Cheers,

Nick. |

| 1237 | 15 Feb 2017 | NguyenMinhTruong | Bug Report | increase event buffer size |

Dear all,

I have a problem with the event buffer size.

When running MIDAS, I got the error "total event size (1307072) larger than buffer size (1048576)", so I guessed that EVENT_BUFFER_SIZE is too small.

I changed EVENT_BUFFER_SIZE in midas.h from 0x100000 to 0x200000. After compiling and running MIDAS, I got another error in system.C: "Shared memory segment with key 0x4d040761 already exists, please remove it manually: ipcrm -M 0x4d040761 size0x204a3c".

I checked the shmget() function in system.C, and it is said that this error comes from shared memory segments larger than 16,773,120 bytes and from creating teraspace shared memory segments.
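A quick sanity check of the numbers in this post, written as compile-time C++ assertions. It only restates the arithmetic; reading the 0x4a3c extra bytes as buffer bookkeeping is an assumption. It does show that the requested segment (about 2 MiB) is far below the quoted 16,773,120-byte limit, so that limit is unlikely to be what is being hit.

```cpp
#include <cstdint>

// Numbers taken from the error messages above.
constexpr std::uint32_t kOldBufferSize = 0x100000;  // 1,048,576 bytes (1 MiB)
constexpr std::uint32_t kNewBufferSize = 0x200000;  // 2,097,152 bytes (2 MiB)
constexpr std::uint32_t kEventSize     = 1307072;   // "total event size"
constexpr std::uint32_t kSegmentSize   = 0x204a3c;  // "size0x204a3c" in the ipcrm hint
constexpr std::uint32_t kQuotedLimit   = 16773120;  // limit quoted for shmget()

// The event did not fit in the old buffer but fits in the doubled one.
static_assert(kEventSize > kOldBufferSize, "event larger than 1 MiB buffer");
static_assert(kEventSize <= kNewBufferSize, "event fits in 2 MiB buffer");

// The segment is the 2 MiB data area plus 0x4a3c = 19,004 extra bytes
// (presumably buffer bookkeeping; not confirmed here).
constexpr std::uint32_t kExtraBytes = kSegmentSize - kNewBufferSize;
static_assert(kExtraBytes == 19004, "0x4a3c");

// The requested segment is far below the quoted limit.
static_assert(kSegmentSize < kQuotedLimit, "well under the quoted limit");
```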

Has anyone had this problem before?

Thanks for your help

M.T |



| 1241 | 20 Feb 2017 | NguyenMinhTruong | Bug Report | increase event buffer size |

I am sorry for my late reply.

The memory in my PC is 16 GB.

I checked the contents of .SHM_TYPE.TXT and it is "POSIXv2_SHM".

But there are no buffer sizes in "/Experiment".

After running "ipcrm -M 0x4d040761 size0x204a3c", removing .SYSTEM.SHM and running MIDAS again, I still get the error "Shared memory segment with key 0x4d040761 already exists, please remove it manually: ipcrm -M 0x4d040761 size0x204a3c"

M.T |

| 851 | 04 Jan 2013 | Nabin Poudyal | Suggestion | how to start using midas |

Please tell me how to choose the values of keys such as DCM, pulser period, presamples and upper thresholds to run an experiment. Where can I find the related information? |

| 2740 | 29 Apr 2024 | Musaab Al-Bakry | Forum | Midas Sequencer with less than 1 second wait |

Hello there,

I am working on a task that involves some ODB changes that happen within 20-500 ms. The wait command of the Midas Sequencer only works for durations > 1 second. As a workaround, I tried calling a Python script that contains a time.sleep() call, but the sequencer doesn't wait for the Python script to terminate before moving on to the next command. Obviously, I could just move the entire script to Python, but that would cause further issues for us. Is there a way to have a wait with millisecond precision from within the Midas Sequencer? If there is no Midas-native method for doing this, what workaround would you suggest to get this to work? |