| ID | Date | Author | Topic | Subject |

| 2580 | 09 Aug 2023 | Konstantin Olchanski | Bug Fix | Stefan's improved ODB flush to disk |

This is an important improvement and deserves a post of its own. K.O.

> > > RFE filed:

> > > https://bitbucket.org/tmidas/midas/issues/367/odb-should-be-saved-to-disk-periodically

> >

> > Implemented and closed: https://bitbucket.org/tmidas/midas/issues/367/odb-should-be-saved-to-disk-periodically

> >

> > Stefan

>

> Stefan's comments from the closed bug report:

>

> Ok I implemented some periodic flushing. Here is what I did:

>

> Created

>

> /System/Flush/Flush period : TID_UINT32

> /System/Flush/Last flush : TID_UINT32

>

> which control the flushing to disk. The default value for “Flush period” is 60 seconds (one minute).

>

> - All clients call db_flush_database() through their cm_yield() function.

> - db_flush_database() checks “Last flush” and only flushes the ODB when the period has expired. This test is done inside the ODB semaphore so that we don’t get a race condition.

> - If the period has expired, db_flush_database() calls ss_shm_flush().

> - ss_shm_flush() tries to allocate a buffer the size of the shared memory. If the allocation is not successful (out of memory), ss_shm_flush() writes directly to the binary file as before.

> - If the allocation is successful, ss_shm_flush() copies the shared memory to the buffer and passes this buffer to a dedicated thread, which writes it to the binary file. This lets ss_shm_flush() return immediately instead of blocking the calling program during the disk write.

> - Added back the “if (destroy_flag) ss_shm_flush()” so that the ODB is flushed for sure before the shared memory gets deleted.

>

> This means that, under normal circumstances, exiting programs like odbedit now do NOT flush the ODB. This allows one to call many “odbedit -c” in a row without the flush penalty. Nevertheless, the ODB then gets flushed by other clients at the latest 60 seconds (or whatever the flush period is) after odbedit exits.
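The steps above can be modelled in a few lines. This is only an illustration written in JavaScript for consistency with the other examples on this page; the real implementation is C code inside MIDAS (db_flush_database() and ss_shm_flush()). The names shouldFlush, maybeFlush, tryAllocate, writeDirect and writeInBackground are hypothetical stand-ins, the semaphore is reduced to a comment, and the destroy_flag path is not modelled. Whether MIDAS compares the elapsed time with `>=` or `>` is also an assumption.

```javascript
// Hypothetical model of the periodic ODB flush (illustration only, not MIDAS source).
// state.flushPeriod and state.lastFlush play the role of
// /System/Flush/Flush period and /System/Flush/Last flush (both in seconds).
function shouldFlush(state, nowSeconds) {
  return nowSeconds - state.lastFlush >= state.flushPeriod;
}

// Called from every client's event loop (in MIDAS: cm_yield() -> db_flush_database()).
// `io` bundles hypothetical stand-ins for the shared memory and the file writers.
// Returns a string naming the action taken so the behaviour is easy to inspect.
function maybeFlush(state, nowSeconds, io) {
  // In MIDAS the check and the update of "Last flush" happen while holding the
  // ODB semaphore, so two clients cannot both flush for the same period.
  if (!shouldFlush(state, nowSeconds)) {
    return "skipped";
  }
  state.lastFlush = nowSeconds;

  // Try to get a private buffer the size of the shared memory.
  const buffer = io.tryAllocate(io.sharedMemory.length);
  if (buffer === null) {
    // Out of memory: write synchronously, as before the change.
    io.writeDirect(io.sharedMemory);
    return "direct";
  }

  // Snapshot the shared memory, then let a background writer do the slow disk I/O
  // so the calling client returns immediately.
  buffer.set(io.sharedMemory);
  io.writeInBackground(buffer);
  return "background";
}
```

The key design point is that the expensive part (the disk write) is moved off the calling client, while the cheap part (the copy into a private buffer) still happens under the lock, so the file always receives a consistent snapshot.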

>

> Please note that ODB flushing has two purposes:

>

> - When all programs exit, we need persistent storage for the ODB. In most experiments this happens only very seldom, maybe at the end of a beam time period.

> - If the computer crashes, a recent version of the ODB is kept on disk to simplify recovery after the crash.

>

> Since crashes are not that frequent (during production periods we have maybe one hardware failure every few years), flushing the ODB too often does not make sense and just consumes resources. Flushing also does not help against corrupted ODBs, since the binary image will get corrupted as well. So the only reason for periodic flushes is to ease recovery after a total crash. I set the default to 60 seconds, but people who are really paranoid can decrease it to 10 seconds or so, or increase it to 600 seconds if their system does not crash every week and their disks are slow.
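The trade-off in choosing the flush period is simple arithmetic: a shorter period means more full-ODB writes per day, a longer one means an older on-disk image after a crash. The sketch below (a hypothetical helper, not part of MIDAS) assumes at least one client is calling cm_yield() regularly and ignores the time the write itself takes.

```javascript
// Back-of-the-envelope numbers for a given ODB flush period (seconds).
// Assumes some client is calling cm_yield() regularly; with no running
// clients, no periodic flush happens at all.
function flushBudget(periodSeconds) {
  const secondsPerDay = 24 * 60 * 60;
  return {
    // How many full-ODB writes per day the disk has to absorb.
    flushesPerDay: secondsPerDay / periodSeconds,
    // Roughly how old the on-disk image can be at the moment of a crash
    // (ignoring the yield interval and the time the write itself takes).
    worstCaseAgeSeconds: periodSeconds,
  };
}

for (const period of [10, 60, 600]) {
  const b = flushBudget(period);
  console.log(period + " s period: " + b.flushesPerDay +
              " flushes/day, on-disk ODB at most about " + b.worstCaseAgeSeconds + " s old");
}
```

For the three periods mentioned (10, 60 and 600 seconds) this gives 8640, 1440 and 144 flushes per day, respectively.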

>

> I made a dedicated branch feature/periodic_odb_flush so people can test the new functionality. If there are no complaints within the next few days, I will merge it into develop.

>

> Stefan |

| 1581 | 28 Jun 2019 | Thorsten Lux | Bug Report | Status page reloads every second |

Hello,

We observed a strange behavior, from our point of view:

After some issues with a 100% full database, which we recovered from by creating a new ODB file from a previous copy, the Midas status page started to reload/refresh every second, although the 100% full issue itself was solved.

There are no error messages and the page looks normal, but it is impossible to start a new run due to the permanent reloading.

It is possible, for example, to click on odb and check the settings.

Any idea what the problem could be and what the solution is?

Thanks

Thorsten |

| 1582 | 28 Jun 2019 | Konstantin Olchanski | Bug Report | Status page reloads every second |

> We observed a strange behavior, from our point of view:

> ... the Midas status page started to reload/refresh every second

What version of midas is this? Please run the odbedit "ver" command. Also, which browser on what OS is this? (Chrome -> About Google Chrome, Firefox -> About Firefox).

The current versions of midas never reload the status page; I think all the page-reload code has been removed, so they cannot reload automatically.

Old versions of the midas status page were designed to reload every 60 seconds or so. The reload interval is adjustable, but I do not think it was stored in ODB. It was accessed from the status page "config" button, and I think the reload period was stored in a browser cookie.

This reload value may have gotten confused. In this case, to fix it, you can try to clear all the web cookies for the web page. Another test would be to try an alternate web browser, which would presumably not have the bad cookie and would not suffer from the reload problem.

You can also open the web page debugger (Google Chrome -> right-click menu -> Inspect -> Console, etc.) and see if anything shows up there. I think you can set a breakpoint on the page reload function and catch the place that causes the reload.
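Since the post does not say which cookie held the reload interval, one quick check from the debugger console is simply to list every cookie the page can see and look for a suspicious entry. The helper below is a hypothetical sketch, not part of mhttpd.js; cookie names are not known here, and cookies marked HttpOnly are invisible to JavaScript, so clearing site data from the browser settings remains the more thorough fix.

```javascript
// List the cookies visible to the current page as a name -> value object.
// In the browser, pass document.cookie, whose format is "name1=value1; name2=value2".
// Note: cookies flagged HttpOnly are not visible to JavaScript and will not appear.
function parseCookies(cookieString) {
  const cookies = {};
  if (!cookieString) {
    return cookies;
  }
  for (const part of cookieString.split(";")) {
    const eq = part.indexOf("=");
    if (eq < 0) {
      continue; // skip entries without a value
    }
    const name = part.slice(0, eq).trim();
    const raw = part.slice(eq + 1).trim();
    let value = raw;
    try {
      value = decodeURIComponent(raw);
    } catch (e) {
      // Keep the raw text if it is not valid percent-encoding.
    }
    cookies[name] = value;
  }
  return cookies;
}

// Usage in the browser console while the mhttpd status page is open:
//   console.table(parseCookies(document.cookie));
```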

K.O. |

| 1583 | 29 Jun 2019 | Thorsten Lux | Bug Report | Status page reloads every second |

I am sorry, yesterday evening I must have been a bit tired after a long day with a lot of problems and error messages. I did not realize that the frontend was in fact starting properly again, but because the ODB file had been recovered from an old copy, the run was stuck in the "stopping run" transition, and this caused the continuous reloading of the status page.

An "odbedit -c stop" solved the problem.

Sorry for this!

> We observed a strange behavior, from our point of view:

> > ... the Midas status page started to reload/refresh every second

>

> What version of midas is this? Run the odbedit "ver" command please. Also which

> browser on what OS is this? (chrome->about google chrome, firefox->about firefox).

>

> The current versions of midas do not reload the status page ever, and I think

> all the page-reload code has been removed and they cannot reload automatically.

>

> Old versions of the midas status page were designed to reload every 60 seconds or so.

> The reload interval is adjustable, but I do not think it was stored in ODB. It was

> accessed from the status page "config" button and I think it stored the reload

> period is a browser cookie.

>

> This reload value may have gotten confused, and in this case, to fix it,

> you can try to clear all the web cookies from the web page. Another test for this

> would be to try an alternate web browser, which would presumable not have the bad cookie

> and will not suffer from the reload problem.

>

> You can also open the web page debugger (google chrome -> right click menu -> inspect ->>

> console & etc) and see if anything shows up there. I think you can set a break point

> on the page reload function and catch the place that causes the reload.

>

> K.O. |

| 1043 | 03 Mar 2015 | Zaher Salman | Forum | Starting program from custom page |

I am trying to start a program (frontend) from a custom page. What is the best way to do that? Would ODBRpc() do this? If so, can anyone give me an example of how to do this? Thanks. |

| 1044 | 03 Mar 2015 | Stefan Ritt | Forum | Starting program from custom page |

> I am trying to start a program (fronend) from a custom page. What is the best

> way to do that? Would ODBRpc() do this? if so can anyone give me an example of

> how to do this. Thanks.

Have a look at the documentation:

http://ladd00.triumf.ca/~daqweb/doc/midas-old/html/RC_mhttpd_defining_script_buttons.html

Cheers,

Stefan |

| 1045 | 03 Mar 2015 | Zaher Salman | Forum | Starting program from custom page |

> > I am trying to start a program (fronend) from a custom page. What is the best

> > way to do that? Would ODBRpc() do this? if so can anyone give me an example of

> > how to do this. Thanks.

>

> You have a look at the documentation:

>

> http://ladd00.triumf.ca/~daqweb/doc/midas-old/html/RC_mhttpd_defining_script_buttons.html

>

> Cheers,

> Stefan

Hi Stefan, thanks for the quick reply. I guess my question was not clear enough.

My aim is to create a button which mimics the "Start/Stop" button functionality in the

"Programs" page where we start all the front-ends for the various equipment. The idea is that

the user will use a simple interface in a custom page (not the status page) which sets up the

equipment needed for a specific type of measurement.

thanks

Zaher |

| 1046 | 03 Mar 2015 | Stefan Ritt | Forum | Starting program from custom page |

> Hi Stefan, thanks for the quick reply. I guess my question was not clear enough.

>

> My aim is to create a button which mimics the "Start/Stop" button functionality in the

> "Programs" page where we start all the front-ends for the various equipment. The idea is that

> the user will use a simple interface in a custom page (not the status page) which sets up the

> equipment needed for a specific type of measurement.

All functions in midas are controlled through special URLs. So the URL

http://<host:port>/?cmd=Start&value=10

will start run #10. Similarly with ?cmd=Stop. Now all you need is to set up a custom button and use its onclick attribute to fire off an Ajax request with the above URL.

To send an Ajax request, you can use the function XMLHttpRequestGeneric, which ships as part of midas in the mhttpd.js file. Then the button would be

```html
<input type="button" value="Start run" onclick="start()">
```

and in your JavaScript code:

```javascript
// ... (other page code)

function start()
{
   // XMLHttpRequestGeneric() is provided by mhttpd.js
   var request = XMLHttpRequestGeneric();
   var url = '?cmd=Start&value=10';   // start run #10
   request.open('GET', url, false);   // false = synchronous request
   request.send(null);
}

// ...
```

Cheers,

Stefan |

| 1047 | 03 Mar 2015 | Zaher Salman | Forum | Starting program from custom page |

Thank you very much, this is exactly what I need and it works.

Zaher

> All functions in midas are controlled through special URLs. So the URL

>

> http://<host:port>/?cmd=Start&value=10

>

> will start run #10. Similarly with ?cmd=Stop. Now all you need is to set up a custom button, and use the

> OnClick="" JavaScript method to fire off an Ajax request with the above URL.

>

> To send an Ajax request, you can use the function XMLHttpRequestGeneric which ships as part of midas in the

> mhttpd.js file. Then the code would be

>

> <input type="button" onclick="start()">

>

> and in your JavaScript code:

>

> ...

> function start()

> {

> var request = XMLHttpRequestGeneric();

>

> url = '?cmd=Start&value=10';

> request.open('GET', url, false);

> request.send(null);

> }

> ...

>

>

> Cheers,

> Stefan |

| 1114 | 22 Sep 2015 | Zaher Salman | Forum | Starting program from custom page |

Just in case anyone needs this in the future, I am adding a comment about this issue. After a few months of working with this solution, we noticed that using

```javascript
request.open('GET', url, false);
```

in Firefox could cause the JavaScript to stop at that point, possibly due to a delayed response from the server. A simple solution is to send an asynchronous request:

```javascript
request.open('GET', url, true);
```
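The same asynchronous idea can be written with the standard browser fetch() API, which never blocks the page. This is a sketch, not part of midas: runCommandUrl() and sendRunCommand() are hypothetical helper names, the ?cmd=Start&value=N URL is the one quoted in this thread, and a successful HTTP reply is only assumed to mean mhttpd accepted the request, not that the run actually started (check the run state separately if that matters).

```javascript
// Build the mhttpd command URL used in this thread, e.g. "?cmd=Start&value=10".
// The value parameter is optional (it may not be needed for ?cmd=Stop).
function runCommandUrl(cmd, value) {
  const params = new URLSearchParams({ cmd: cmd });
  if (value !== undefined) {
    params.set("value", String(value));
  }
  return "?" + params.toString();
}

// Send the command asynchronously so the page never blocks waiting for mhttpd.
// fetchFn defaults to the browser's fetch(); it is a parameter only so the
// sketch can be exercised without a server.
function sendRunCommand(cmd, value, fetchFn) {
  const doFetch = fetchFn || fetch;
  return doFetch(runCommandUrl(cmd, value), { method: "GET" }).then(function (response) {
    if (!response.ok) {
      throw new Error("mhttpd returned HTTP " + response.status);
    }
    return response;
  });
}

// Example button handler: start run #10 without freezing the page.
function start() {
  sendRunCommand("Start", 10).catch(function (err) {
    alert("Could not start run: " + err.message);
  });
}
```

Because the request is relative ("?cmd=..."), it goes to the same mhttpd that served the custom page, exactly as in the XMLHttpRequestGeneric version.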

Zaher

> Thank you very much, this is exactly what I need and it works.

>

> Zaher

>

> > All functions in midas are controlled through special URLs. So the URL

> >

> > http://<host:port>/?cmd=Start&value=10

> >

> > will start run #10. Similarly with ?cmd=Stop. Now all you need is to set up a custom button, and use the

> > OnClick="" JavaScript method to fire off an Ajax request with the above URL.

> >

> > To send an Ajax request, you can use the function XMLHttpRequestGeneric which ships as part of midas in the

> > mhttpd.js file. Then the code would be

> >

> > <input type="button" onclick="start()">

> >

> > and in your JavaScript code:

> >

> > ...

> > function start()

> > {

> > var request = XMLHttpRequestGeneric();

> >

> > url = '?cmd=Start&value=10';

> > request.open('GET', url, false);

> > request.send(null);

> > }

> > ...

> >

> >

> > Cheers,

> > Stefan |

| 1116 | 23 Sep 2015 | Konstantin Olchanski | Forum | Starting program from custom page |

Good news: on the new experimental branch feature/jsonrpc, I have implemented all three program management functions (start program, stop program and "is running?") as JSON-RPC methods. On that branch, the midas "programs" page is done completely in JavaScript.

You can try this new code right now, or you can wait until the branch is merged into main midas; I am still ironing out some last-minute kinks in the JSON encoding of ODB. But all the program management should work (and all previously existing stuff should work). Look in mhttpd.js for mjsonrpc_start_program() and related functions.

K.O.

> Just in case anyone needs this in the future I am adding a comment about this issue. After a few months of working

> with this solution we noticed that when using

>

> request.open('GET', url, false);

>

> on firefox it could cause the javascript to stop at that point, possibly due to a delayed response from the

> server. A simple solution is to send an asynchronous requests

>

> request.open('GET', url, true);

>

> Zaher

>

> > Thank you very much, this is exactly what I need and it works.

> >

> > Zaher

> >

> > > All functions in midas are controlled through special URLs. So the URL

> > >

> > > http://<host:port>/?cmd=Start&value=10

> > >

> > > will start run #10. Similarly with ?cmd=Stop. Now all you need is to set up a custom button, and use the

> > > OnClick="" JavaScript method to fire off an Ajax request with the above URL.

> > >

> > > To send an Ajax request, you can use the function XMLHttpRequestGeneric which ships as part of midas in the

> > > mhttpd.js file. Then the code would be

> > >

> > > <input type="button" onclick="start()">

> > >

> > > and in your JavaScript code:

> > >

> > > ...

> > > function start()

> > > {

> > > var request = XMLHttpRequestGeneric();

> > >

> > > url = '?cmd=Start&value=10';

> > > request.open('GET', url, false);

> > > request.send(null);

> > > }

> > > ...

> > >

> > >

> > > Cheers,

> > > Stefan |

| 369 | 09 May 2007 | Carl Metelko | Forum | Splitting data transfer and control onto different networks |

Hi,

I'm setting up a system with two networks, with the intention of having control info (odb, alarm) on 192.168.0.x and the frontend readout on 192.168.1.x.

Is there any easy way of doing this?

I'm also trying to separate processes onto different machines. Is there any way to not have mserver, mhttpd and (mlogger, mevt) all run on the same machine?

Thanks,

Carl Metelko |

| 370 | 09 May 2007 | Stefan Ritt | Forum | Splitting data transfer and control onto different networks |

Hi Carl,

so far I have not experienced any problems running odb & alarm on the same link as the readout, since the data usually goes frontend->backend, and all other messages go backend->frontend. So before you do something complicated, try it the easy way first and check whether you have problems at all. So far I don't know anybody who has separated the network interfaces, so I have no description for that.

You can, however, separate processes. The easiest is to buy a multi-core machine. If you want to use separate computers, however, note that receiving events over the network is not very optimized. So you should run the mserver connected to the frontend, the event builder and mlogger on the same machine. mhttpd can easily live on another machine, but there is not much CPU consumption from it (unless you plot long history trends). Running mserver, the event builder and mlogger on the same machine (dual Xeon mainboard) easily gave me 50 MB/sec (actually disk limited), and neither CPU was near 100%. If you put any receiving process (like the event builder, mlogger or the analyzer) on a separate machine, you might see a bottleneck on the event receiving side of maybe 10 MB/sec or so (I have not really tried this recently).
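The reasoning here is that a chain of processes can only sustain the rate of its slowest stage. A tiny sketch with the rough figures quoted above (estimates from the post, not benchmarks; the stage names and the 100 GB volume are hypothetical) makes the effect of moving a receiving process to another machine concrete:

```javascript
// The sustained rate of a chain of processing stages is set by its slowest stage.
// stageRates maps a stage name to its rate in MB/s.
function chainThroughput(stageRates) {
  return Math.min(...Object.values(stageRates));
}

// Rough figures from the post above; they are estimates, not benchmarks.
const allOnOneMachine = { diskWrite: 50 };
const receiverOnOtherMachine = { networkEventReceive: 10, diskWrite: 50 };

const volumeMB = 100 * 1000; // hypothetical 100 GB of data
for (const [label, stages] of [
  ["all on one machine", allOnOneMachine],
  ["receiver on another machine", receiverOnOtherMachine],
]) {
  const rate = chainThroughput(stages);
  console.log(label + ": " + rate + " MB/s, " + volumeMB / rate + " s to record 100 GB");
}
```

With these numbers, recording 100 GB takes about 2000 s when everything runs on one machine and about 10000 s when the network receiving step becomes the limit.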

Best regards,

Stefan

> Hi,

> I'm setting up a system with two networks with the intension of having

> control info (odb, alarm) on the 192.168.0.x

> and the frontend readout on 192.168.1.x

>

> Is there any easy way of doing this?

> I'm also trying to separate processes onto different machines, is there

> any way to not have mserver,mhttpd and (mlogger,mevt) all run on the same

> machine?

> Thanks,

> Carl Metelko |

| 371 | 09 May 2007 | Konstantin Olchanski | Forum | Splitting data transfer and control onto different networks |

> I'm setting up a system with two networks with the intension of having

> control info (odb, alarm) on the 192.168.0.x

> and the frontend readout on 192.168.1.x

We have some experience with this at TRIUMF: in the TWIST experiment we run the main data-generating frontends on a private network. It is a supported configuration and it works fine.

We ran into one problem after adding some code to the frontends for stopping the run upon detecting some data errors. Stopping a run requires sending RPC transactions to every midas client, so we had to add static network routes for routing packets between midas nodes on the private network and midas nodes on the normal network.

> I'm also trying to separate processes onto different machines, is there

> any way to not have mserver,mhttpd and (mlogger,mevt) all run on the same machine?

mserver runs on the machine with the ODB shared memory by definition (think of it as "nfs server").

mhttpd typically runs on the machine with the ODB shared memory and until recently it had no code for

connecting to the mserver. I recently fixed some of it, and now you can run mhttpd in "history mode"

through the mserver. This is useful for offloading the generation of history plots to another cpu or

another machine. In our case, we run the "history mhttpd" on the machine that holds the history files.

mlogger could be made to run remotely via the mserver, but presently it will refuse to do so, as it has some code that requires direct access to midas shared memory. If data has to be written to a remote filesystem, the consensus is that it is more efficient to run mlogger locally and let the OS handle remote filesystem access (NFS, etc).

All other midas programs should be able to run remotely via the mserver.

K.O. |

| 375 | 14 May 2007 | Carl Metelko | Forum | Splitting data transfer and control onto different networks |

Hi,

thanks for the advice. We do have dual-core Xeons, so we'll try running most things on the server. Unless it proves to be a problem, we'll run all MIDAS signals on one network and NFS etc. on the other.

I do have one more query about running systems like Konstantin's. What we would like to do is have a 'mirror' server serving multiple online monitoring machines, so that the load on the main server is constant no matter what the demands on the mirror are.

Is there a way to set this up? Or would it be best to have a remote analyser making short (1 min) ROOT files shared with the online monitoring? |

|

630

|

03 Sep 2009 |

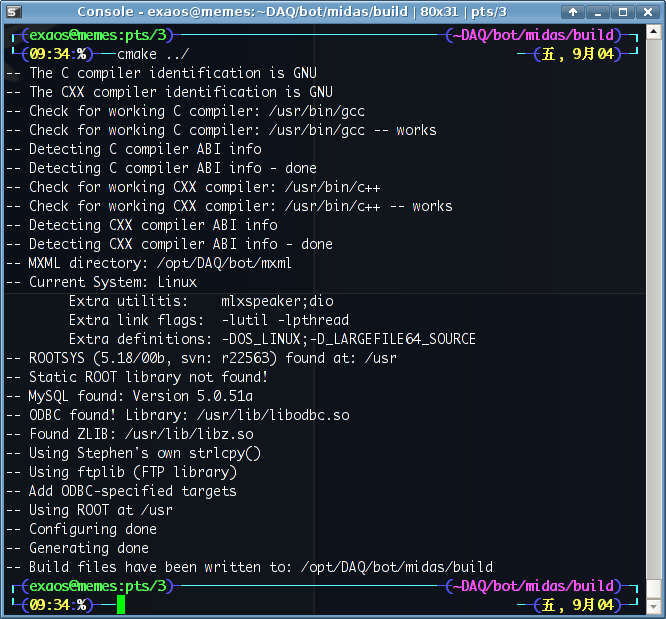

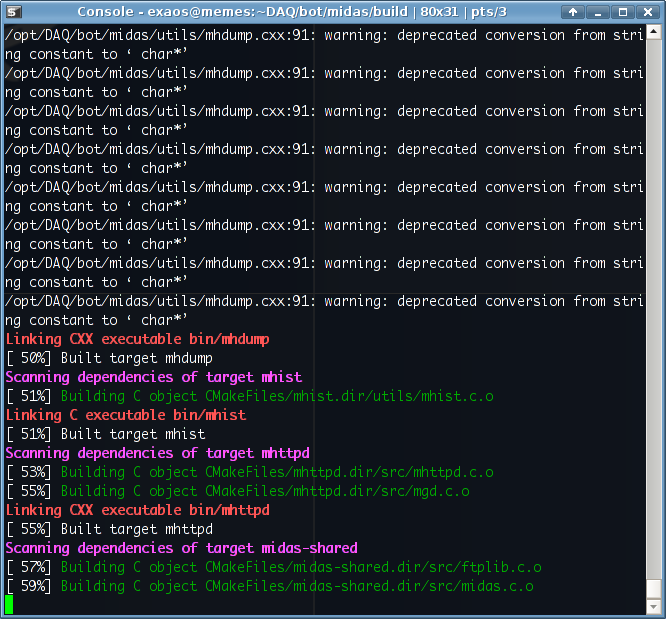

Exaos Lee | Suggestion | Some screenshot using CMake with MIDAS |

I didn't add optimization flags to compile, so I got link error while generating mcnaf as I reported before.

The screen-shots show that the configure files works because I have modified the "driver/camac/camacrpc.c". |

| Attachment 1: Screenshot-11.png

|

|

| Attachment 2: Screenshot-13.png

|

|

| 1151 | 10 Dec 2015 | Stefan Ritt | Info | Small change in loading .odb files |

A small change in loading .odb files has been implemented. Previously, when you loaded an array from a .odb file, the indices in each line were not evaluated; only the complete array was loaded. In our experiment, however, we need to load only a few values, like the HV values for some channels, while leaving the other values as they are. I slightly changed the code of db_paste() to correctly evaluate the index in each line of the .odb file. This way one can write, for example, the following .odb file:

```
[/Equipment/HV/Variables]
Demand = FLOAT[256] :
[10] 100.1
[11] 100.2
[12] 100.3
[13] 100.4
[14] 100.5
[15] 100.6
```

then load it in odbedit via the "load" command, and only channels 10-15 are changed.
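The effect of the change can be illustrated with a small model: each `[i] value` line overwrites only element i, and every other element keeps its current value. This is not the db_paste() source; applyIndexedLines() is a hypothetical name, only numeric values are handled, and rejecting out-of-range indices is an assumption of the sketch (real ODB behaviour may differ).

```javascript
// Hypothetical model of loading an indexed array from .odb text:
// each line "[i] value" overwrites only element i; untouched elements keep their values.
function applyIndexedLines(array, lines) {
  const result = array.slice(); // leave the caller's array unchanged
  const pattern = /^\s*\[(\d+)\]\s+(\S+)\s*$/;
  for (const line of lines) {
    const match = pattern.exec(line);
    if (!match) {
      continue; // ignore the key header and the type line
    }
    const index = Number(match[1]);
    if (index >= result.length) {
      // Assumption of this sketch: out-of-range indices are an error.
      throw new RangeError("index " + index + " out of range");
    }
    result[index] = Number(match[2]);
  }
  return result;
}

// Usage with the lines from the example file:
const demand = new Array(256).fill(50); // current HV demand values
const odbText = [
  "[/Equipment/HV/Variables]",
  "Demand = FLOAT[256] :",
  "[10] 100.1", "[11] 100.2", "[12] 100.3",
  "[13] 100.4", "[14] 100.5", "[15] 100.6",
];
const updated = applyIndexedLines(demand, odbText);
console.log(updated.slice(9, 17)); // channel 9 and 16 keep their old value
```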

Stefan |

| 1404 | 30 Oct 2018 | Joseph McKenna | Bug Report | Side panel auto-expands when history page updates |

One can collapse the side panel when looking at history pages with the button in the top left, great! We want to see many pages, so screen real estate is important.

The issue we face is that when the page refreshes, the side panel expands. Can we make the panel state more 'sticky'?

Many thanks

Joseph (ALPHA)

Version: 2.1

Revision: Mon Mar 19 18:15:51 2018 -0700 - midas-2017-07-c-197-g61fbcd43-dirty

on branch feature/midas-2017-10 |

| 1405 | 31 Oct 2018 | Stefan Ritt | Bug Report | Side panel auto-expands when history page updates |

>

>

> One can collapse the side panel when looking at history pages with the button in

> the top left, great! We want to see many pages so screen real estate is important

>

> The issue we face is that when the page refreshes, the side panel expands. Can

> we make the panel state more 'sticky'?

>

> Many thanks

> Joseph (ALPHA)

>

> Version: 2.1

> Revision: Mon Mar 19 18:15:51 2018 -0700 - midas-2017-07-c-197-g61fbcd43-dirty

> on branch feature/midas-2017-10

Hi Joseph,

In principle a page refresh should no longer be necessary, since pages should automatically update any contents that change. If a custom page needs a reload, it is not well designed. If necessary, I can explain the details.

Anyhow, I implemented your "stickiness" of the side panel in the last commit to the develop branch.
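One common way to make such a UI state survive reloads is to store it in the browser's Web Storage. The sketch below is only an illustration of that technique, not the code of the develop-branch commit: the key name `midas.sidePanelCollapsed` and both helper functions are hypothetical, and the actual change may use a cookie, sessionStorage or a different key.

```javascript
// Hypothetical helpers to remember whether the side panel is collapsed.
// The key name is an assumption, not taken from the midas sources.
const PANEL_KEY = "midas.sidePanelCollapsed";

// Call from the collapse/expand button handler.
function savePanelState(storage, collapsed) {
  storage.setItem(PANEL_KEY, collapsed ? "1" : "0");
}

// Call on page load, before drawing the panel.
// Defaults to expanded (false) when nothing has been stored yet.
function loadPanelState(storage) {
  return storage.getItem(PANEL_KEY) === "1";
}

// In the page: savePanelState(localStorage, true) when the user collapses the
// panel, and if (loadPanelState(localStorage)) { /* draw it collapsed */ } on load.
```

Passing the storage object as a parameter keeps the helpers independent of the page, so they work the same with localStorage (persists across sessions) or sessionStorage (per tab).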

Best regards,

Stefan |

| 1406 | 31 Oct 2018 | Joseph McKenna | Bug Report | Side panel auto-expands when history page updates |

> >

> >

> > One can collapse the side panel when looking at history pages with the button in

> > the top left, great! We want to see many pages so screen real estate is important

> >

> > The issue we face is that when the page refreshes, the side panel expands. Can

> > we make the panel state more 'sticky'?

> >

> > Many thanks

> > Joseph (ALPHA)

> >

> > Version: 2.1

> > Revision: Mon Mar 19 18:15:51 2018 -0700 - midas-2017-07-c-197-g61fbcd43-dirty

> > on branch feature/midas-2017-10

>

> Hi Joseph,

>

> In principle a page refresh should now not be necessary, since pages should reload automatically

> the contents which changes. If a custom page needs a reload, it is not well designed. If necessary, I

> can explain the details.

>

> Anyhow I implemented your "stickyness" of the side panel in the last commit to the develop branch.

>

> Best regards,

> Stefan

Hi Stefan,

I apologise for misusing the word refresh. The re-appearing sidebar was also seen with the automatic reload. I have implemented your fix here and it now works great!

Thank you very much!

Joseph |