| ID |

Date |

Author |

Topic |

Subject |

|

292

|

09 Aug 2006 |

Konstantin Olchanski | Bug Fix | Refactoring and rewrite of event buffer code | > In close cooperation with Stefan, I refactored and rewrote the MIDAS event

> buffering code (bm_send_event, bm_flush_cache, bm_receive_event and bm_push_event).

>

> All are welcome to try the new code. If it explodes, please send me the error

> messages, stack traces and core dumps.

Stefan quickly found one new error (a typo in a check against infinite looping) and

then I found one old error present in the old code that caused event loss when the

buffer became exactly 100% full (0 bytes free).

Both errors are now fixed in svn commit 3294.
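
For illustration only: the post does not say how the exactly-full case went wrong, and the code below is not the MIDAS implementation. One common way a ring buffer loses data at 100% full is to derive the free space from the read and write pointers alone, because equal pointers then mean both "empty" and "full". A minimal C++ sketch, with all names hypothetical, that keeps an explicit byte count instead:

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical ring-buffer bookkeeping, for illustration only (not MIDAS code).
struct RingState {
    std::size_t size;  // total buffer size in bytes
    std::size_t rp;    // read pointer: offset of the oldest unread byte
    std::size_t wp;    // write pointer: offset of the next byte to write
    std::size_t used;  // bytes currently stored
};

// Ambiguous version: with pointers alone, rp == wp looks the same for an
// empty and a full buffer, so a 100% full buffer is reported as all free.
std::size_t free_bytes_naive(const RingState& s) {
    if (s.wp >= s.rp)
        return s.size - (s.wp - s.rp);
    return s.rp - s.wp;
}

// Unambiguous version: derive the free space from the explicit byte count.
std::size_t free_bytes(const RingState& s) {
    assert(s.used <= s.size);
    return s.size - s.used;
}

// Advance the write pointer after storing n bytes.
// Returns false (and changes nothing) if n bytes do not fit.
bool commit_write(RingState& s, std::size_t n) {
    if (n > free_bytes(s))
        return false;
    s.wp = (s.wp + n) % s.size;
    s.used += n;
    return true;
}
```

After filling a 1024-byte buffer completely, `free_bytes()` reports 0 while `free_bytes_naive()` reports 1024, so a writer relying on the naive version would overwrite unread events.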

K.O. |

|

683

|

01 Dec 2009 |

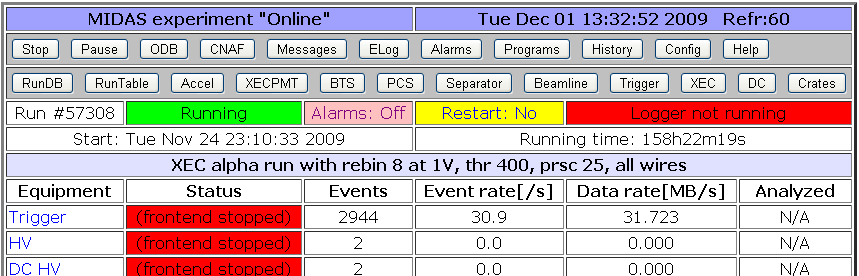

Stefan Ritt | Info | Redesign of status page links | The custom and alias links in the standard midas status page were shown as HTML

links so far. If there are many links whose names contain spaces,

it's a bit hard to distinguish between them. Therefore, they are packed now into

individual buttons (see attachment) starting from SVN revision 4633 on. This also

makes the look more homogeneous. If there is any problem with that, please report. |

| Attachment 1: Capture.png

|

|

|

691

|

22 Dec 2009 |

Suzannah Daviel | Suggestion | Redesign of status page links | > The custom and alias links in the standard midas status page were shown as HTML

> links so far. If there are many links with names having spaces in their names,

> it's a bit hard to distinguish between them. Therefore, they are packed now into

> individual buttons (see attachment) starting from SVN revision 4633 on. This makes

> also the look more homogeneous. If there is any problem with that, please report.

Would you consider using a different colour for the alias buttons (or background

colour)? At present it's hard to know whether a button is an alias link, a custom page

link or a user-button especially if you are not familiar with the button layout. |

|

692

|

11 Jan 2010 |

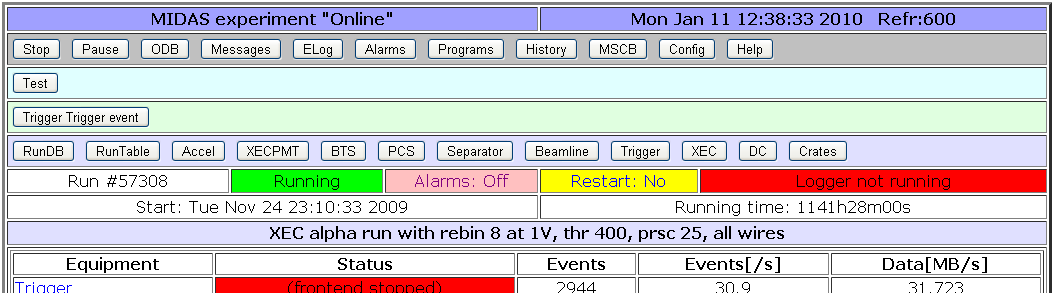

Stefan Ritt | Suggestion | Redesign of status page links | > > The custom and alias links in the standard midas status page were shown as HTML

> > links so far. If there are many links with names having spaces in their names,

> > it's a bit hard to distinguish between them. Therefore, they are packed now into

> > individual buttons (see attachment) starting from SVN revision 4633 on. This makes

> > also the look more homogeneous. If there is any problem with that, please report.

>

> Would you consider using a different colour for the alias buttons (or background

> colour)? At present it's hard to know whether a button is an alias link, a custom page

> link or a user-button especially if you are not familiar with the button layout.

Ok, I changed the background colors for the button rows. There are now four different

colors: Main menu buttons, Scripts, Manually triggered events, Alias & Custom pages. Hope

this is ok. Of course one could have each button in a different color, but then it gets

complicated... In that case I would recommend to make a dedicated custom page with all these

buttons, which you can then tailor exactly to your needs. |

| Attachment 1: Capture.png

|

|

|

684

|

04 Dec 2009 |

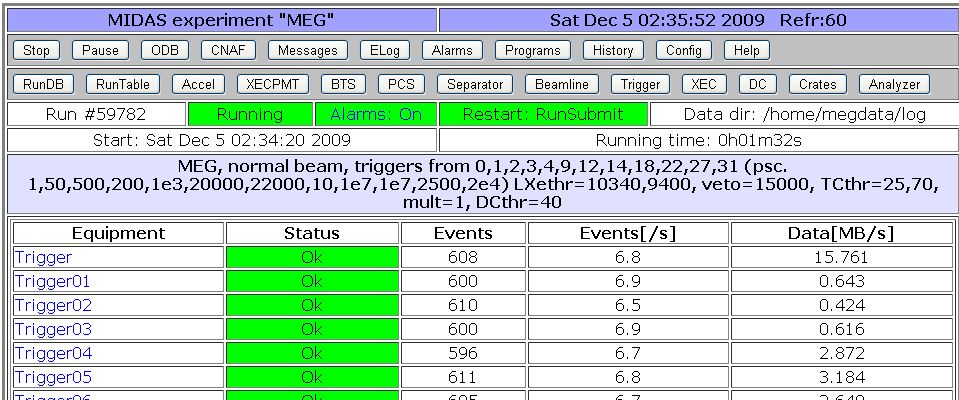

Stefan Ritt | Info | Redesign of status page columns | Since the column on the main midas status page with fraction of analyzed events is

barely used, I decided to drop it. Anyhow it does not make sense for all slow

control events. If this feature is required in some experiment, I propose to move it

into a custom page and calculate this ratio in JavaScript, where one has much more

flexibility.

This modification frees up more space on the status page for the "Status" column, where

front-end programs can report errors etc. |

| Attachment 1: Capture.png

|

|

|

2069

|

06 Jan 2021 |

Isaac Labrie Boulay | Info | Recovering a corrupted ODB using odbinit. | Hi all,

I am currently trying to recover my corrupted ODB using odbinit and I am still

getting issues after doing 'odbinit --cleanup' and trying to reload the saved

ODB (last.json). Here is the output:

************************************************

(odbinit cleanup) Note* the ERROR in system.cxx

************************************************

[caendaq@cu332 ANIS]$ odbinit --cleanup

Checking environment... experiment name is "ANIS", remote hostname is ""

Checking command line... experiment "ANIS", cleanup 1, dry_run 0, create_exptab 0, create_env 0

Checking MIDASSYS....../home/caendaq/packages/midas

Checking exptab... experiments defined in exptab file "/home/caendaq/ANIS/exptab":

0: "ANIS" <-- selected experiment

Checking exptab... selected experiment "ANIS", experiment directory "/home/caendaq/ANIS/"

Checking experiment directory "/home/caendaq/ANIS/"

Found existing ODB save file: "/home/caendaq/ANIS/.ODB.SHM"

Checking shared memory...

Deleting old ODB shared memory...

[system.cxx:1052:ss_shm_delete,ERROR] shm_unlink(/1001_ANIS_ODB__home_caendaq_ANIS_) errno 2 (No such file or directory)

Good: no ODB shared memory

Deleting old ODB semaphore...

Deleting old ODB semaphore... create status 1, delete status 1

Preserving old ODB save file /home/caendaq/ANIS/.ODB.SHM" to "/home/caendaq/ANIS/.ODB.SHM.1609951022"

Checking ODB size...

Requested ODB size is 0 bytes (0.00B)

ODB size file is "/home/caendaq/ANIS//.ODB_SIZE.TXT"

Saved ODB size from "/home/caendaq/ANIS//.ODB_SIZE.TXT" is 1048576 bytes (1.05MB)

We will initialize ODB for experiment "ANIS" on host "" with size 1048576 bytes (1.05MB)

Creating ODB...

Creating ODB... db_open_database() status 302

Saving ODB...

Saving ODB... db_close_database() status 1

Connecting to experiment...

Connected to ODB for experiment "ANIS" on host "" with size 1048576 bytes (1.05MB)

Checking experiment name... status 1, found "ANIS"

Disconnecting from experiment...

Done

****************************************

(Loading the last copy of my ODB)

*************************************

[caendaq@cu332 data]$ odbedit

[local:ANIS:S]/>load last.json

[ODBEdit,INFO] Reloading RPC hosts access control list via hotlink callback

[ODBEdit,INFO] Reloading RPC hosts access control list via hotlink callback

[ODBEdit,INFO] Reloading RPC hosts access control list via hotlink callback

[ODBEdit,INFO] Reloading RPC hosts access control list via hotlink callback

[ODBEdit,INFO] Reloading RPC hosts access control list via hotlink callback

[ODBEdit,INFO] Reloading RPC hosts access control list via hotlink callback

[ODBEdit,INFO] Reloading RPC hosts access control list via hotlink callback

[ODBEdit,INFO] Reloading RPC hosts access control list via hotlink callback

[ODBEdit,INFO] Reloading RPC hosts access control list via hotlink callback

[ODBEdit,INFO] Reloading RPC hosts access control list via hotlink callback

11:38:12 [ODBEdit,INFO] Reloading RPC hosts access control list via hotlink callback

11:38:12 [ODBEdit,INFO] Reloading RPC hosts access control list via hotlink callback

11:38:12 [ODBEdit,INFO] Reloading RPC hosts access control list via hotlink callback

11:38:12 [ODBEdit,INFO] Reloading RPC hosts access control list via hotlink callback

11:38:12 [ODBEdit,INFO] Reloading RPC hosts access control list via hotlink callback

11:38:12 [ODBEdit,INFO] Reloading RPC hosts access control list via hotlink callback

11:38:12 [ODBEdit,INFO] Reloading RPC hosts access control list via hotlink callback

11:38:12 [ODBEdit,INFO] Reloading RPC hosts access control list via hotlink callback

11:38:12 [ODBEdit,INFO] Reloading RPC hosts access control list via hotlink callback

11:38:12 [ODBEdit,INFO] Reloading RPC hosts access control list via hotlink callback

**********************************************

(Now trying to run my frontend and analyzer)

*********************************************

[caendaq@cu332 ANIS]$ ./start_daq.sh

mlogger: no process found

fevme: no process found

manalyzer.exe: no process found

manalyzer_example_cxx.exe: no process found

roody: no process found

[ODBEdit,ERROR] [midas.cxx:6616:bm_open_buffer,ERROR] Buffer "SYSTEM" is corrupted, mismatch of buffer name in shared memory ""

11:38:30 [ODBEdit,ERROR] [midas.cxx:6616:bm_open_buffer,ERROR] Buffer "SYSTEM" is corrupted, mismatch of buffer name in shared memory ""

Becoming a daemon...

Becoming a daemon...

Please point your web browser to http://localhost:8081

To look at live histograms, run: roody -Hlocalhost

Or run: mozilla http://localhost:8081

[caendaq@cu332 ANIS]$ Frontend name : fevme

Event buffer size : 1048576

User max event size : 204800

User max frag. size : 1048576

# of events per buffer : 5

Connect to experiment ANIS...

OK

[fevme,ERROR] [midas.cxx:6616:bm_open_buffer,ERROR] Buffer "SYSTEM" is corrupted, mismatch of buffer name in shared memory ""

[fevme,ERROR] [mfe.cxx:596:register_equipment,ERROR] Cannot open event buffer "SYSTEM" size 33554432, bm_open_buffer() status 219

Has anyone ever encountered these issues?

Thanks for your time.

Isaac |

|

528

|

20 Nov 2008 |

Jimmy Ngai | Info | Recommended platform for running MIDAS | Dear All,

Are there any recommended platforms for running MIDAS? Has anyone encountered

problems when running MIDAS on Scientific Linux?

Thanks.

Jimmy |

|

529

|

20 Nov 2008 |

Stefan Ritt | Info | Recommended platform for running MIDAS | > Dear All,

>

> Are there any recommended platforms for running MIDAS? Has anyone encountered

> problems when running MIDAS on Scientific Linux?

>

> Thanks.

>

> Jimmy

I run MIDAS on Scientific Linux 5.1 without any problem. |

|

882

|

06 May 2013 |

Konstantin Olchanski | Info | Recent-ish SVN changes at PSI | A little while ago, PSI made some changes to the SVN hosting. The main SVN URL seems to remain the

same, but SVN viewer moved to a new URL (it seems a bit faster compared to the old viewer):

https://savannah.psi.ch/viewvc/meg_midas/trunk/

Also the SSH host key has changed to:

savannah.psi.ch,192.33.120.96 ssh-rsa

AAAAB3NzaC1yc2EAAAABIwAAAQEAwVWEoaOmF9uggkUEV2/HhZo2ncH0zUfd0ExzzgW1m0HZQ5df1OYIb

pyBH6WD7ySU7fWkihbt2+SpyClMkWEJMvb5W82SrXtmzd9PFb3G7ouL++64geVKHdIKAVoqm8yGaIKIS0684

dyNO79ZacbOYC9l9YehuMHPHDUPPdNCFW2Gr5mkf/uReMIoYz81XmgAIHXPSgErv2Nv/BAA1PCWt6THMMX

E2O2jGTzJCXuZsJ2RoyVVR4Q0Cow1ekloXn/rdGkbUPMt/m3kNuVFhSzYGdprv+g3l7l1PWwEcz7V1BW9LNPp

eIJhxy9/DNUsF1+funzBOc/UsPFyNyJEo0p0Xw==

Fingerprint: a3:18:18:c4:14:f9:3e:79:2c:9c:fa:90:9a:d6:d2:fc

The change of host key is annoying because it makes "svn update" fail with an unhelpful message (some

mumble about ssh -q). To fix this fault, run "ssh svn@savannah.psi.ch", then fixup the ssh host key as

usual.

K.O. |

|

201

|

04 Mar 2005 |

Stefan Ritt | Info | Real-Time 2005 Conference in Stockholm | Dear Midas users,

may I kindly invite you to present your work at the Real-Time 2005 Conference in

Stockholm, June 4-10. The conference deals with all kinds of real time

applications like DAQ, control systems etc. It is a small conference with no

parallel sessions, and with two interesting short courses. The deadline has been

prolonged until March 13, 2005. If you are interested, please register under

http://www.physto.se/RT2005/

Here is the official letter from the chairman:

=====================================================================

14th IEEE-NPSS Real Time Conference 2005

Stockholm, Sweden, 4-10 June, 2005

Conference web site: www.physto.se/RT2005/

**********************************************************************

* *

* ABSTRACT SUBMISSION PROLONGED! DEADLINE: March 13, 2005 *

* *

**********************************************************************

Considering that the Real Time conference is a highly meritorious and

multidisciplinary conference with purely plenary sessions and that the

accepted papers may be submitted to a special issue of the IEEE

Transactions on Nuclear Science we would like to give more people the

opportunity to participate. Therefore we have organized the program so

that there is now more time for talks than at the RT2003 and we are

extending the abstract submission to March 13. We strongly encourage

you to participate!

Submit your abstract and a summary through the conference web site

"Abstract submission" link. Please, make sure that your colleagues know

about the conference and invite them.

I would also like to take this opportunity to announce the two short

courses we have organized for Sunday 5/6:

- "Gigabit Networking for Data Acquisition Systems - A practical

introduction"

Artur Barczyk, CERN

- "System On Programmable Chip - A design tutorial"

Marco Riccioli, Xilinx

Please find the abstracts and more information about the conference on

www.physto.se/RT2005/

Thank you if you have already submitted an abstract.

Richard Jacobsson

General Chairman, RT2005 Conference

Email: RT2005@cern.ch

Phone: +41-22-767 36 19

Fax: +41-22-767 94 25

CERN Meyrin

1211 Geneva 23

Switzerland |

|

3045

|

26 May 2025 |

Francesco Renga | Forum | Reading two devices in parallel | Dear experts,

in the CYGNO experiment, we readout CMOS cameras for optical readout of GEM-TPCs. So far, we only developed the readout for a single camera. In the future, we will have multiple cameras to read out (up to 18 in the next phase of the experiment), and we are investigating how to optimize the readout by exploiting parallelization.

One idea is to start parallel threads within a single equipment. Alternatively, one could associate different equipment with each camera and run an Event Builder. Perhaps other solutions did not come to mind. Which one would you regard as the most effective and elegant?

Thank you very much,

Francesco |

|

3046

|

26 May 2025 |

Stefan Ritt | Forum | Reading two devices in parallel | > Dear experts,

> in the CYGNO experiment, we readout CMOS cameras for optical readout of GEM-TPCs. So far, we only developed the readout for a single camera. In the future, we will have multiple cameras to read out (up to 18 in the next phase of the experiment), and we are investigating how to optimize the readout by exploiting parallelization.

>

> One idea is to start parallel threads within a single equipment. Alternatively, one could associate different equipment with each camera and run an Event Builder. Perhaps other solutions did not come to mind. Which one would you regard as the most effective and elegant?

>

> Thank you very much,

> Francesco

In principle both will work. It's kind of a matter of taste. In the multi-threaded approach one has a single frontend to start and stop, and in the second case you have to start 18 individual frontends and make sure that they are running.

For the multi-threaded frontend you have to ensure proper synchronization between the threads (like common run start/stop), and in the end you also have to do some event building, sending all 18 streams into a single buffer. As you know, multi-thread programming can be a bit of an art, using mutexes or semaphores, but it can be more flexible than the event builder, which is a given piece of software.
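
A minimal sketch of the multi-threaded variant, under stated assumptions: it uses only standard C++ threads, the "cameras" are simulated, and `CameraEvent`, `SharedBuffer` and `run_readout` are hypothetical names rather than MIDAS frontend APIs. It shows the two ingredients mentioned above: a common run start for all threads, and a mutex that lets all streams write into a single buffer.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical event record; a real frontend would carry the camera image.
struct CameraEvent {
    int camera;       // which camera produced the event
    uint32_t serial;  // per-camera event counter
};

// Stand-in for the single output buffer shared by all readout threads.
class SharedBuffer {
public:
    void push(const CameraEvent& e) {
        std::lock_guard<std::mutex> guard(mutex_);  // one writer at a time
        events_.push_back(e);
    }
    std::vector<CameraEvent> take() {
        std::lock_guard<std::mutex> guard(mutex_);
        std::vector<CameraEvent> out;
        out.swap(events_);
        return out;
    }
private:
    std::mutex mutex_;
    std::vector<CameraEvent> events_;
};

// Start one thread per camera. All threads wait for a common "run started"
// flag, then each pushes events_per_camera simulated events and exits.
std::vector<CameraEvent> run_readout(int ncameras, uint32_t events_per_camera) {
    assert(ncameras > 0);
    SharedBuffer buffer;
    std::atomic<bool> run_started{false};
    std::vector<std::thread> threads;
    for (int cam = 0; cam < ncameras; cam++) {
        threads.emplace_back([&buffer, &run_started, cam, events_per_camera] {
            while (!run_started.load())
                std::this_thread::yield();
            for (uint32_t n = 0; n < events_per_camera; n++)
                buffer.push(CameraEvent{cam, n});
        });
    }
    run_started.store(true);  // common run start for all cameras
    for (auto& t : threads)
        t.join();             // common run stop: wait for every thread
    return buffer.take();
}
```

Calling `run_readout(18, 100)` yields 1800 events interleaved across cameras, with each camera's events still in order. In a real frontend the event building step would additionally have to match events from different cameras that belong together.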

Best,

Stefan |

|

570

|

07 May 2009 |

Konstantin Olchanski | Info | RPC.SHM gyration | When using remote midas clients with mserver, you may have noticed the zero-size .RPC.SHM files

these clients create in the directory where you run them. These files are associated with the semaphore

created by the midas rpc layer (rpc_call) to synchronize rpc calls between multiple threads. This

semaphore is always created, even for single-threaded midas applications. Also normally midas

semaphore files are created in the midas experiment directory specified in exptab (same place as

.ODB.SHM), but for remote clients, we do not know that location until we start making rpc calls, so the

semaphore file is created in the current directory (and it is on a remote machine anyway, so this

location may not be visible locally).

There are 2 problems with these semaphores:

1) in multiple experiments, we have observed the RPC.SHM semaphore stuck in a locked state,

requiring manual cleanup (ipcrm -s xxx). So far, I have failed to duplicate this lockup using test

programs and test experiments. The code appears to be coded correctly to automatically unlock the

semaphore when the program exits or is killed.

2) RPC.SHM is created as a global shared semaphore so it synchronizes rpc calls not just for all threads

inside one application, but across all threads in all applications (excessive locking - separate

applications are connected to separate mservers and do not need this locking); but only for applications

that run from the same current directory - RPC.SHM files in different directories are "connected" to

different semaphores.

To try to fix this, I implemented "private semaphores" in system.c and made rpc_call() use them.

This introduced a major bug - a semaphore leak - quickly using up all sysv semaphores (see sysctl

kernel.sem).

The code has now been reverted to using RPC.SHM as described above.

The "bad" svn revisions start with rev 4472, the problem is fixed in rev 4480.

If you use remote midas clients and have one of these bad revisions, either update midas.c to rev 4480

or apply this patch to midas.c::rpc_call():

ss_mutex_create("", &_mutex_rpc);

should read

ss_mutex_create("RPC", &_mutex_rpc);

Apologies for any inconvenience caused by this problem

K.O. |

|

586

|

02 Jun 2009 |

Konstantin Olchanski | Info | RPC.SHM gyration | > When using remote midas clients with mserver, you may have noticed the zero-size .RPC.SHM files

> these clients create in the directory where you run them. These files are associated with the semaphore

> created by the midas rpc layer (rpc_call) to synchronize rpc calls between multiple threads. This

> semaphore is always created, even for single-threaded midas applications. Also normally midas

> semaphore files are created in the midas experiment directory specified in exptab (same place as

> .ODB.SHM), but for remote clients, we do not know that location until we start making rpc calls, so the

> semaphore file is created in the current directory (and it is on a remote machine anyway, so this

> location may not be visible locally).

>

> There are 2 problems with these semaphores:

A 3rd problem surfaced - on SL5 Linux, the global limit is 128 or so semaphores and on at least one heavily used machine that hosts multiple

experiments we simply run out of semaphores.

For "normal" semaphores, their number is fixed to about 5 per experiment (one for each shared memory buffer), but the number of RPC

semaphores is not bounded by the number of experiments or even by the number of user accounts - they are created (and never deleted) for

each experiment, for each user that connects to each experiment, for each subdirectory where the each user happened to try to start a

program that connects to the each experiment. (to reuse the old children's rhyme).

Right now, MIDAS does not have an abstraction for "local multi-thread mutex" (i.e. pthread_mutex & co) and mostly uses global semaphores

for this task (with interesting coding results, i.e. for multithreaded locking of ODB). Perhaps such an abstraction should be introduced?
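
To make the idea concrete, here is a minimal sketch of what such an abstraction could look like on POSIX systems. `LocalMutex` and `run_counter_demo` are hypothetical names, not an existing MIDAS API; the wrapper simply owns a `pthread_mutex_t`, which lives in the memory of one process and therefore consumes no system-wide semaphore.

```cpp
#include <cassert>
#include <pthread.h>
#include <vector>

// Hypothetical process-local mutex wrapper (not an existing MIDAS API).
// It is private to one process, so it cannot contend with other programs
// and does not count against the SysV semaphore limit.
class LocalMutex {
public:
    LocalMutex() {
        int rc = pthread_mutex_init(&m_, nullptr);
        assert(rc == 0);
        (void)rc;
    }
    ~LocalMutex() { pthread_mutex_destroy(&m_); }
    LocalMutex(const LocalMutex&) = delete;
    LocalMutex& operator=(const LocalMutex&) = delete;
    void lock() { pthread_mutex_lock(&m_); }
    void unlock() { pthread_mutex_unlock(&m_); }
private:
    pthread_mutex_t m_;
};

// State shared by the demo threads.
struct Shared {
    LocalMutex mutex;
    long counter = 0;
    int iterations = 0;
};

// Each thread increments the shared counter under the mutex.
static void* worker(void* arg) {
    Shared* s = static_cast<Shared*>(arg);
    for (int i = 0; i < s->iterations; i++) {
        s->mutex.lock();
        s->counter++;
        s->mutex.unlock();
    }
    return nullptr;
}

// Run nthreads workers and return the final counter value.
long run_counter_demo(int nthreads, int iterations) {
    Shared s;
    s.iterations = iterations;
    std::vector<pthread_t> threads(nthreads);
    for (auto& t : threads)
        pthread_create(&t, nullptr, worker, &s);
    for (auto& t : threads)
        pthread_join(t, nullptr);
    return s.counter;
}
```

With the lock in place, `run_counter_demo(4, 10000)` returns exactly 40000. A Windows port would need a different primitive behind the same interface.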

K.O. |

|

589

|

04 Jun 2009 |

Stefan Ritt | Info | RPC.SHM gyration | > Right now, MIDAS does not have an abstraction for "local multi-thread mutex" (i.e. pthread_mutex & co) and mostly uses global semaphores

> for this task (with interesting coding results, i.e. for multithreaded locking of ODB). Perhaps such an abstraction should be introduced?

Yes. In the old days when I designed the inter-process communication (~1993), there was no such thing as pthread_mutex (only under Windows).

Now it would be time to implement this thing, since it then will work under Posix and Windows (don't know about VxWorks). But that will at least

allow multi-threaded client applications, which can safely call midas functions through the RPC layer. For local thread-safeness, all midas

functions have to be checked and modified if necessary, which is a major work right now, but for remote clients it's rather simple. |

|

673

|

20 Nov 2009 |

Konstantin Olchanski | Info | RPC.SHM gyration | > When using remote midas clients with mserver, you may have noticed the zero-size .RPC.SHM files

> these clients create in the directory where you run them.

Well, RPC.SHM bites. Please reread the parent message for full details, but in a nutshell, it is a global

semaphore that permits only one midas rpc client to talk to midas at a time (it was intended for local

locking between threads inside one midas application).

I have about 10 remote midas frontends started by ssh all in the same directory, so they all share the same

.RPC.SHM semaphore and do not live through the night - die from ODB timeouts because of RPC semaphore contention.

In a test version of MIDAS, I disabled the RPC.SHM semaphore, and now my clients live through the night, very

good.

Long term, we should fix this by using application-local mutexes (i.e. pthread_mutex, also works on MacOS, does

Windows have pthreads yet?).

This will also cleanup some of the ODB locking, which currently confuses pid's, tid's etc and is completely

broken on MacOS because some of these values are 64-bit and do not fit into the 32-bit data fields in MIDAS

shared memories.

Short term, I can add a flag for enabling and disabling the RPC semaphore from the user application: enabled

by default, but user can disable it if they do not use threads.

Alternatively, I can disable it by default, then enable it automatically if multiple threads are detected or

if ss_thread_create() is called.

Could also make it an environment variable.
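
As a sketch of the environment-variable option only: the variable name `MIDAS_RPC_SEMAPHORE` and the function `rpc_semaphore_enabled` are invented for this example and do not exist in MIDAS. The caller passes the compiled-in default, and the variable overrides it when set.

```cpp
#include <cassert>
#include <cstdlib>
#include <cstring>

// Decide whether the RPC semaphore should be used.
// MIDAS_RPC_SEMAPHORE is a hypothetical variable name made up for this sketch:
// unset or empty keeps the default, "0" disables, anything else enables.
bool rpc_semaphore_enabled(bool default_enabled) {
    const char* value = std::getenv("MIDAS_RPC_SEMAPHORE");
    if (value == nullptr || value[0] == '\0')
        return default_enabled;
    return std::strcmp(value, "0") != 0;
}
```

The same function covers both proposals above: pass `true` for "enabled by default, user can disable", or `false` for "disabled by default, user can enable".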

Any preferences?

K.O. |

|

2414

|

25 Jun 2022 |

Joseph McKenna | Bug Report | RPC timeout for manalyzer over network |

In ALPHA, I get RPC timeouts running a (reasonably heavy) analyzer on a remote machine (connected directly via a ~30 meter 10Gbe Ethernet cable) after ~5 minutes of running. If I run the analyser locally, I do not see a timeout...

gdb trace:

#0 __GI_raise (sig=sig@entry=6) at ../sysdeps/unix/sysv/linux/raise.c:50

#1 0x00007ffff5d35859 in __GI_abort () at abort.c:79

#2 0x00005555555a2a22 in rpc_call (routine_id=11111) at /home/alpha/packages/midas/src/midas.cxx:13866

#3 0x000055555562699d in bm_receive_event_rpc (buffer_handle=buffer_handle@entry=2, buf=buf@entry=0x0, buf_size=buf_size@entry=0x0, ppevent=ppevent@entry=0x0, pvec=pvec@entry=0x7fffffffd700,

timeout_msec=timeout_msec@entry=100) at /home/alpha/packages/midas/src/midas.cxx:10510

#4 0x0000555555631082 in bm_receive_event_vec (buffer_handle=2, pvec=pvec@entry=0x7fffffffd700, timeout_msec=timeout_msec@entry=100) at /home/alpha/packages/midas/src/midas.cxx:10794

#5 0x0000555555673dbb in TMEventBuffer::ReceiveEvent (this=this@entry=0x555557388b30, e=e@entry=0x7fffffffd700, timeout_msec=timeout_msec@entry=100) at /home/alpha/packages/midas/src/tmfe.cxx:312

#6 0x0000555555607b56 in ReceiveEvent (b=0x555557388b30, e=0x7fffffffd6c0, timeout_msec=100) at /home/alpha/packages/midas/manalyzer/manalyzer.cxx:1411

#7 0x000055555560d8dc in ProcessMidasOnlineTmfe (args=..., progname=<optimized out>, hostname=<optimized out>, exptname=<optimized out>, bufname=<optimized out>, event_id=<optimized out>,

trigger_mask=<optimized out>, sampling_type_string=<optimized out>, num_analyze=0, writer=<optimized out>, multithread=<optimized out>, profiler=<optimized out>,

queue_interval_check=<optimized out>) at /home/alpha/packages/midas/manalyzer/manalyzer.cxx:1534

#8 0x000055555560f93b in manalyzer_main (argc=<optimized out>, argv=<optimized out>) at /usr/include/c++/9/bits/basic_string.h:2304

#9 0x00007ffff5d37083 in __libc_start_main (main=0x5555555b1130 <main(int, char**)>, argc=8, argv=0x7fffffffdda8, init=<optimized out>, fini=<optimized out>, rtld_fini=<optimized out>,

stack_end=0x7fffffffdd98) at ../csu/libc-start.c:308

#10 0x00005555555b184e in _start () at /usr/include/c++/9/bits/stl_vector.h:94

Any suggestions? Many thanks |

|

2415

|

18 Jul 2022 |

Konstantin Olchanski | Bug Report | RPC timeout for manalyzer over network | > In ALPHA, I get RPC timeouts running a (reasonably heavy) analyzer on a remote machine (connected directly via a ~30 meter 10Gbe Ethernet cable) after ~5 minutes of running. If I run the analyser locally, I do not see a timeout...

there is a subtle bug in the mserver. under rare conditions, ss_suspend() will recurse in an unexpected way

and mserver will go to sleep waiting for data from a udp socket (that will never arrive, so sleep forever).

remote client will see it as an rpc timeout. in my tests (and in ALPHA-g at CERN, as reported by Joseph),

I see this rare condition to happen about every 5 minutes. in normal use, this is the first time we become

aware of this problem, the best I can tell this bug was in the mserver since day one.

commit https://bitbucket.org/tmidas/midas/commits/fbd06ad9d665b1341bd58b0e28d6625877f3cbd0

to develop and

to release/midas-2022-05

The stack trace that shows the mserver hang/crash (sleep() is the stand-in for the sleep-forever socket read).

(gdb) bt

#0 0x00007f922c53f9e0 in __nanosleep_nocancel () from /lib64/libc.so.6

#1 0x00007f922c53f894 in sleep () from /lib64/libc.so.6

#2 0x0000000000451922 in ss_suspend (millisec=millisec@entry=100, msg=msg@entry=1) at /home/agmini/packages/midas/src/system.cxx:4433

#3 0x0000000000411d53 in bm_wait_for_more_events_locked (pbuf_guard=..., pc=pc@entry=0x7f920639b93c, timeout_msec=timeout_msec@entry=100,

unlock_read_cache=unlock_read_cache@entry=1) at /home/agmini/packages/midas/src/midas.cxx:9429

#4 0x00000000004238c3 in bm_fill_read_cache_locked (timeout_msec=100, pbuf_guard=...) at /home/agmini/packages/midas/src/midas.cxx:9003

#5 bm_read_buffer (pbuf=pbuf@entry=0xdf8b50, buffer_handle=buffer_handle@entry=2, bufptr=bufptr@entry=0x0, buf=buf@entry=0x7f9203d75020,

buf_size=buf_size@entry=0x7f920639aa20, vecptr=vecptr@entry=0x0, timeout_msec=timeout_msec@entry=100, convert_flags=0,

dispatch=dispatch@entry=0) at /home/agmini/packages/midas/src/midas.cxx:10279

#6 0x0000000000424161 in bm_receive_event (buffer_handle=2, destination=0x7f9203d75020, buf_size=0x7f920639aa20, timeout_msec=100)

at /home/agmini/packages/midas/src/midas.cxx:10649

#7 0x0000000000406ae4 in rpc_server_dispatch (index=11111, prpc_param=0x7ffcad70b7a0) at /home/agmini/packages/midas/progs/mserver.cxx:575

#8 0x000000000041ce9c in rpc_execute (sock=10, buffer=buffer@entry=0xe11570 "g+", convert_flags=0)

at /home/agmini/packages/midas/src/midas.cxx:15003

#9 0x000000000041d7a5 in rpc_server_receive_rpc (idx=idx@entry=0, sa=0xde6ba0) at /home/agmini/packages/midas/src/midas.cxx:15958

#10 0x0000000000451455 in ss_suspend (millisec=millisec@entry=1000, msg=msg@entry=0) at /home/agmini/packages/midas/src/system.cxx:4575

#11 0x000000000041deb2 in rpc_server_loop () at /home/agmini/packages/midas/src/midas.cxx:15907

#12 0x0000000000405266 in main (argc=9, argv=<optimized out>) at /home/agmini/packages/midas/progs/mserver.cxx:390

(gdb)

K.O. |

|

1849

|

06 Mar 2020 |

Lars Martin | Forum | RPC error | I ported a bunch of frontends to C++ and now I'm occasionally getting this RPC

error message:

http error: readyState: 4, HTTP status: 502 (Proxy Error), batch request: method:

"db_get_values", params: [object Object], id: 1583456958869 method: "get_alarms",

params: null, id: 1583456958869 method: "cm_msg_retrieve", params: [object

Object], id: 1583456958869 method: "cm_msg_retrieve", params: [object Object],

id: 1583456958869

I'm assuming I'm doing something wrong somewhere, but does this message contain

information where to look? Does the ID mean something? |

|

1850

|

08 Mar 2020 |

Konstantin Olchanski | Forum | RPC error | I do not see this error, but there was one more report (they did not clearly say what http errors

they see) https://bitbucket.org/tmidas/midas/issues/209/get-rid-of-mjsonrpc-dialogs-put-it-to-the

To debug this, I need to know: what version of MIDAS, what version of what web browser, what

computer is mhttpd running on? (so I can go look at the log files).

Also can you say more when you see these errors? Every time from every midas web page, or only

some pages or only when you do something specific (push some button, etc?).

> I ported a bunch of frontends to C++ and now I'm occasionally getting this RPC

> error message:

>

> http error: readyState: 4, HTTP status: 502 (Proxy Error), batch request: method:

> "db_get_values", params: [object Object], id: 1583456958869 method: "get_alarms",

> params: null, id: 1583456958869 method: "cm_msg_retrieve", params: [object

> Object], id: 1583456958869 method: "cm_msg_retrieve", params: [object Object],

> id: 1583456958869

>

> I'm assuming I'm doing something wrong somewhere, but does this message contain

> information where to look? Does the ID mean something?

It is unlikely that this error has anything to do with the frontends: usually web page interaction

goes through: web browser - network - apache httpd - localhost - mhttpd - midas odb.

http error 502 is very generic, does not tell us much about what happened, there may be more

information in the httpd log files.

the json-rpc request "id" is generated by midas code in the web browser and it currently is a

timestamp. it is not used for anything. but it is required by the json-rpc standard.
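
To show where the "id" sits in a request, here is a small sketch that builds JSON-RPC 2.0 request objects by hand. It is not the MIDAS browser code (which is JavaScript); `timestamp_id`, `make_request` and `make_batch` are hypothetical helpers, and the millisecond resolution is only inferred from the 13-digit id in the quoted error message.

```cpp
#include <cassert>
#include <chrono>
#include <cstdint>
#include <string>
#include <vector>

// Milliseconds since the Unix epoch, used as the request id (assumed resolution).
int64_t timestamp_id() {
    using namespace std::chrono;
    return duration_cast<milliseconds>(system_clock::now().time_since_epoch()).count();
}

// Build one JSON-RPC 2.0 request object. params_json must already be valid
// JSON text for an object or array; method is assumed to need no escaping.
std::string make_request(const std::string& method, const std::string& params_json, int64_t id) {
    return "{\"jsonrpc\":\"2.0\",\"method\":\"" + method + "\",\"params\":" + params_json +
           ",\"id\":" + std::to_string(id) + "}";
}

// A batch is a JSON array of request objects. Every request here reuses the
// same id, as seen in the error message quoted above.
std::string make_batch(const std::vector<std::string>& methods, int64_t id) {
    std::string out = "[";
    for (std::size_t i = 0; i < methods.size(); i++) {
        if (i > 0)
            out += ",";
        out += make_request(methods[i], "{}", id);
    }
    return out + "]";
}
```

The server echoes the id back in each response so the client can match replies to requests; beyond that, the value carries no meaning that would help locate the 502 error.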


K.O. |

|