| ID | Date | Author | Topic | Subject |

3044

|

25 May 2025 |

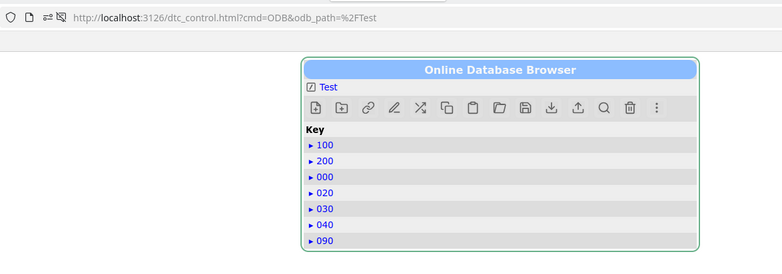

Pavel Murat | Bug Report | subdirectory ordering in ODB browser ? | Dear MIDAS experts,

I'm running into a minor but annoying issue with how the ODB browser orders subdirectory names.

I have a straw-man hash map which includes ODB subdirectories named "000", "010", ... "300",

and I have not yet managed to get them displayed in a "natural" order: the subdirectories with names

starting with "0" always show up at the bottom of the list - see attached .png file.

Neither interactive re-ordering nor manual ordering of the items in the input .json file helps.

I have also attached a .json file which can be loaded with odbedit to reproduce the issue.

I'm using a relatively recent commit, 'db1819ac', about 20 days old. Is it possible

that this issue has already been fixed since then ?

-- many thanks, regards, Pasha |

| Attachment 1: panel_map.json

|

{

"/MIDAS version" : "2.1",

"/MIDAS git revision" : "Sat May 3 17:22:36 2025 +0200 - midas-2025-01-a-192-gdb1819ac-dirty on branch HEAD",

"/filename" : "PanelMap.json",

"/ODB path" : "/Test/PanelMap",

"000" : {

},

"020" : {

},

"030" : {

},

"040" : {

},

"090" : {

},

"100" : {

},

"200" : {

}

}

|

| Attachment 2: panel_map.png

|

|

|

3043

|

24 May 2025 |

Pavel Murat | Info | ROOT scripting for MIDAS seems to work pretty much out of the box | Dear All,

I'm pretty sure many know this already, however I found this feature by accident

and want to share with those who don't know about it yet - seems very useful.

- it looks like one can use ROOT scripting with rootcling and call from the

interactive ROOT prompt any function defined in midas.h and access ODB seemingly

WITHOUT DOING anything special

- more surprisingly, that also works for odbxx, with one minor exception in handling

the 64-bit types - the proof is in attachment. The script test_odbxx.C loaded

interactively is Stefan's

https://bitbucket.org/tmidas/midas/src/develop/examples/odbxx/odbxx_test.cxx

with one minor change - the line

o["Int64 Key"] = -1LL;

is replaced with

int64_t x = -1LL;

o["Int64 Key"] = x;

- apparently the interpreter has its limitations.

My rootlogon.C file doesn't load any libraries, it only defines the appropriate

include paths. So it seems that everything works pretty much out of the box.

One issue has surfaced, however. All of that worked even though my experiment

is named "test_025", while the example specifies experiment="test".

Is it possible that only the first 4 characters are being tested ?

-- regards, Pasha |

| Attachment 1: log.txt

|

mu2etrk@mu2edaq22:~/test_stand/daquser_001>root.exe

------------------------------------------------------------------

| Welcome to ROOT 6.32.06 https://root.cern |

| (c) 1995-2024, The ROOT Team; conception: R. Brun, F. Rademakers |

| Built for linuxx8664gcc on May 04 2025, 19:16:31 |

| From tags/6-32-06@6-32-06 |

| With g++ (Spack GCC) 13.1.0 |

| Try '.help'/'.?', '.demo', '.license', '.credits', '.quit'/'.q' |

------------------------------------------------------------------

05-24 23:26:22.397661 MetricManager:31 INFO MetricManager(): MetricManager CONSTRUCTOR

root [0] .L test_odbxx.C

root [1] main()

Delete key /Test/Settings not found in ODB

Created ODB key "/Test/Settings"

Created ODB key "/Test/Settings/Int32 Key"

Set ODB key "/Test/Settings/Int32 Key" = 42

Created ODB key "/Test/Settings/Bool Key"

Set ODB key "/Test/Settings/Bool Key" = true

Created ODB key "/Test/Settings/Subdir"

Created ODB key "/Test/Settings/Subdir/Int32 key"

Set ODB key "/Test/Settings/Subdir/Int32 key" = 123

Created ODB key "/Test/Settings/Subdir/Double Key"

Set ODB key "/Test/Settings/Subdir/Double Key" = 1.200000

Created ODB key "/Test/Settings/Subdir/Subsub"

Created ODB key "/Test/Settings/Subdir/Subsub/Float key"

Set ODB key "/Test/Settings/Subdir/Subsub/Float key" = 1.200000

Created ODB key "/Test/Settings/Subdir/Subsub/String Key"

Set ODB key "/Test/Settings/Subdir/Subsub/String Key" = "Hello"

Created ODB key "/Test/Settings/Int Array"

Set ODB key "/Test/Settings/Int Array[0...2]" = [1,2,3]

Created ODB key "/Test/Settings/Double Array"

Set ODB key "/Test/Settings/Double Array[0...2]" = [1.200000,2.300000,3.400000]

Created ODB key "/Test/Settings/String Array"

Set ODB key "/Test/Settings/String Array[0...2]" = ["Hello1","Hello2","Hello3"]

Created ODB key "/Test/Settings/Large Array"

Set ODB key "/Test/Settings/Large Array[0...9]" = [0,0,0,0,0,0,0,0,0,0]

Created ODB key "/Test/Settings/Large String"

Set ODB key "/Test/Settings/Large String" = ""

Created ODB key "/Test/Settings/String Array 10"

Set ODB key "/Test/Settings/String Array 10[0...9]" = ["","","","","","","","","",""]

Created ODB key "/Test/Settings/Large String Array 10"

Set ODB key "/Test/Settings/Large String Array 10[0...9]" = ["","","","","","","","","",""]

Get definition for ODB key "/Test/Settings"

Get definition for ODB key "/Test/Settings/Int32 Key"

Get definition for ODB key "/Test/Settings/Int32 Key"

Get ODB key "/Test/Settings/Int32 Key": 42

Get definition for ODB key "/Test/Settings/Bool Key"

Get definition for ODB key "/Test/Settings/Bool Key"

Get ODB key "/Test/Settings/Bool Key": true

Get definition for ODB key "/Test/Settings/Subdir"

Get definition for ODB key "/Test/Settings/Subdir/Int32 key"

Get definition for ODB key "/Test/Settings/Subdir/Int32 key"

Get ODB key "/Test/Settings/Subdir/Int32 key": 123

Get definition for ODB key "/Test/Settings/Subdir/Double Key"

Get definition for ODB key "/Test/Settings/Subdir/Double Key"

Get ODB key "/Test/Settings/Subdir/Double Key": 1.200000

Get definition for ODB key "/Test/Settings/Subdir/Subsub"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/Float key"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/Float key"

Get ODB key "/Test/Settings/Subdir/Subsub/Float key": 1.200000

Get definition for ODB key "/Test/Settings/Subdir/Subsub/String Key"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/String Key"

Get ODB key "/Test/Settings/Subdir/Subsub/String Key": "Hello"

Get definition for ODB key "/Test/Settings/Subdir/Subsub"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/Float key"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/Float key"

Get ODB key "/Test/Settings/Subdir/Subsub/Float key": 1.200000

Get definition for ODB key "/Test/Settings/Subdir/Subsub/String Key"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/String Key"

Get ODB key "/Test/Settings/Subdir/Subsub/String Key": "Hello"

Get definition for ODB key "/Test/Settings/Subdir"

Get definition for ODB key "/Test/Settings/Subdir/Subsub"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/Float key"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/Float key"

Get ODB key "/Test/Settings/Subdir/Subsub/Float key": 1.200000

Get definition for ODB key "/Test/Settings/Subdir/Subsub/String Key"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/String Key"

Get ODB key "/Test/Settings/Subdir/Subsub/String Key": "Hello"

Get definition for ODB key "/Test/Settings/Subdir/Int32 key"

Get definition for ODB key "/Test/Settings/Subdir/Int32 key"

Get ODB key "/Test/Settings/Subdir/Int32 key": 123

Get definition for ODB key "/Test/Settings/Subdir/Double Key"

Get definition for ODB key "/Test/Settings/Subdir/Double Key"

Get ODB key "/Test/Settings/Subdir/Double Key": 1.200000

Get definition for ODB key "/Test/Settings/Subdir/Subsub"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/Float key"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/Float key"

Get ODB key "/Test/Settings/Subdir/Subsub/Float key": 1.200000

Get definition for ODB key "/Test/Settings/Subdir/Subsub/String Key"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/String Key"

Get ODB key "/Test/Settings/Subdir/Subsub/String Key": "Hello"

Get definition for ODB key "/Test/Settings/Subdir/Subsub"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/Float key"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/Float key"

Get ODB key "/Test/Settings/Subdir/Subsub/Float key": 1.200000

Get definition for ODB key "/Test/Settings/Subdir/Subsub/String Key"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/String Key"

Get ODB key "/Test/Settings/Subdir/Subsub/String Key": "Hello"

Get definition for ODB key "/Test/Settings/Int Array"

Get definition for ODB key "/Test/Settings/Int Array"

Get ODB key "/Test/Settings/Int Array[0...2]": [1,2,3]

Get definition for ODB key "/Test/Settings/Double Array"

Get definition for ODB key "/Test/Settings/Double Array"

Get ODB key "/Test/Settings/Double Array[0...2]": [1.200000,2.300000,3.400000]

Get definition for ODB key "/Test/Settings/String Array"

Get definition for ODB key "/Test/Settings/String Array"

Get ODB key "/Test/Settings/String Array[0...2]": ["Hello1,Hello2,Hello3"]

Get definition for ODB key "/Test/Settings/Large Array"

Get definition for ODB key "/Test/Settings/Large Array"

Get ODB key "/Test/Settings/Large Array[0...9]": [0,0,0,0,0,0,0,0,0,0]

Get definition for ODB key "/Test/Settings/Large String"

Get definition for ODB key "/Test/Settings/Large String"

Get ODB key "/Test/Settings/Large String": ""

Get definition for ODB key "/Test/Settings/String Array 10"

Get definition for ODB key "/Test/Settings/String Array 10"

Get ODB key "/Test/Settings/String Array 10[0...9]": [",,,,,,,,,"]

Get definition for ODB key "/Test/Settings/Large String Array 10"

Get definition for ODB key "/Test/Settings/Large String Array 10"

Get ODB key "/Test/Settings/Large String Array 10[0...9]": [",,,,,,,,,"]

Get definition for ODB key "/Test/Settings"

Get definition for ODB key "/Test/Settings/Subdir"

Get definition for ODB key "/Test/Settings/Subdir/Subsub"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/Float key"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/Float key"

Get ODB key "/Test/Settings/Subdir/Subsub/Float key": 1.200000

Get definition for ODB key "/Test/Settings/Subdir/Subsub/String Key"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/String Key"

Get ODB key "/Test/Settings/Subdir/Subsub/String Key": "Hello"

Get definition for ODB key "/Test/Settings/Subdir/Int32 key"

Get definition for ODB key "/Test/Settings/Subdir/Int32 key"

Get ODB key "/Test/Settings/Subdir/Int32 key": 123

Get definition for ODB key "/Test/Settings/Subdir/Double Key"

Get definition for ODB key "/Test/Settings/Subdir/Double Key"

Get ODB key "/Test/Settings/Subdir/Double Key": 1.200000

Get definition for ODB key "/Test/Settings/Subdir/Subsub"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/Float key"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/Float key"

Get ODB key "/Test/Settings/Subdir/Subsub/Float key": 1.200000

Get definition for ODB key "/Test/Settings/Subdir/Subsub/String Key"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/String Key"

Get ODB key "/Test/Settings/Subdir/Subsub/String Key": "Hello"

Get definition for ODB key "/Test/Settings/Subdir/Subsub"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/Float key"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/Float key"

Get ODB key "/Test/Settings/Subdir/Subsub/Float key": 1.200000

Get definition for ODB key "/Test/Settings/Subdir/Subsub/String Key"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/String Key"

Get ODB key "/Test/Settings/Subdir/Subsub/String Key": "Hello"

Get definition for ODB key "/Test/Settings/Int32 Key"

Get definition for ODB key "/Test/Settings/Int32 Key"

Get ODB key "/Test/Settings/Int32 Key": 42

Get definition for ODB key "/Test/Settings/Bool Key"

Get definition for ODB key "/Test/Settings/Bool Key"

Get ODB key "/Test/Settings/Bool Key": true

Get definition for ODB key "/Test/Settings/Subdir"

Get definition for ODB key "/Test/Settings/Subdir/Int32 key"

Get definition for ODB key "/Test/Settings/Subdir/Int32 key"

Get ODB key "/Test/Settings/Subdir/Int32 key": 123

Get definition for ODB key "/Test/Settings/Subdir/Double Key"

Get definition for ODB key "/Test/Settings/Subdir/Double Key"

Get ODB key "/Test/Settings/Subdir/Double Key": 1.200000

Get definition for ODB key "/Test/Settings/Subdir/Subsub"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/Float key"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/Float key"

Get ODB key "/Test/Settings/Subdir/Subsub/Float key": 1.200000

Get definition for ODB key "/Test/Settings/Subdir/Subsub/String Key"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/String Key"

Get ODB key "/Test/Settings/Subdir/Subsub/String Key": "Hello"

Get definition for ODB key "/Test/Settings/Subdir/Subsub"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/Float key"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/Float key"

Get ODB key "/Test/Settings/Subdir/Subsub/Float key": 1.200000

Get definition for ODB key "/Test/Settings/Subdir/Subsub/String Key"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/String Key"

Get ODB key "/Test/Settings/Subdir/Subsub/String Key": "Hello"

Get definition for ODB key "/Test/Settings/Subdir"

Get definition for ODB key "/Test/Settings/Subdir/Subsub"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/Float key"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/Float key"

Get ODB key "/Test/Settings/Subdir/Subsub/Float key": 1.200000

Get definition for ODB key "/Test/Settings/Subdir/Subsub/String Key"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/String Key"

Get ODB key "/Test/Settings/Subdir/Subsub/String Key": "Hello"

Get definition for ODB key "/Test/Settings/Subdir/Int32 key"

Get definition for ODB key "/Test/Settings/Subdir/Int32 key"

Get ODB key "/Test/Settings/Subdir/Int32 key": 123

Get definition for ODB key "/Test/Settings/Subdir/Double Key"

Get definition for ODB key "/Test/Settings/Subdir/Double Key"

Get ODB key "/Test/Settings/Subdir/Double Key": 1.200000

Get definition for ODB key "/Test/Settings/Subdir/Subsub"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/Float key"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/Float key"

Get ODB key "/Test/Settings/Subdir/Subsub/Float key": 1.200000

Get definition for ODB key "/Test/Settings/Subdir/Subsub/String Key"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/String Key"

Get ODB key "/Test/Settings/Subdir/Subsub/String Key": "Hello"

Get definition for ODB key "/Test/Settings/Subdir/Subsub"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/Float key"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/Float key"

Get ODB key "/Test/Settings/Subdir/Subsub/Float key": 1.200000

Get definition for ODB key "/Test/Settings/Subdir/Subsub/String Key"

Get definition for ODB key "/Test/Settings/Subdir/Subsub/String Key"

Get ODB key "/Test/Settings/Subdir/Subsub/String Key": "Hello"

Get definition for ODB key "/Test/Settings/Int Array"

Get definition for ODB key "/Test/Settings/Int Array"

Get ODB key "/Test/Settings/Int Array[0...2]": [1,2,3]

Get definition for ODB key "/Test/Settings/Double Array"

Get definition for ODB key "/Test/Settings/Double Array"

Get ODB key "/Test/Settings/Double Array[0...2]": [1.200000,2.300000,3.400000]

Get definition for ODB key "/Test/Settings/String Array"

Get definition for ODB key "/Test/Settings/String Array"

Get ODB key "/Test/Settings/String Array[0...2]": ["Hello1,Hello2,Hello3"]

Get definition for ODB key "/Test/Settings/Large Array"

Get definition for ODB key "/Test/Settings/Large Array"

Get ODB key "/Test/Settings/Large Array[0...9]": [0,0,0,0,0,0,0,0,0,0]

Get definition for ODB key "/Test/Settings/Large String"

Get definition for ODB key "/Test/Settings/Large String"

Get ODB key "/Test/Settings/Large String": ""

Get definition for ODB key "/Test/Settings/String Array 10"

Get definition for ODB key "/Test/Settings/String Array 10"

Get ODB key "/Test/Settings/String Array 10[0...9]": [",,,,,,,,,"]

Get definition for ODB key "/Test/Settings/Large String Array 10"

Get definition for ODB key "/Test/Settings/Large String Array 10"

Get ODB key "/Test/Settings/Large String Array 10[0...9]": [",,,,,,,,,"]

Get ODB key "/Test/Settings/Int32 Key": 42

Get ODB key "/Test/Settings/Bool Key": true

Get ODB key "/Test/Settings/Subdir/Int32 key": 123

Get ODB key "/Test/Settings/Subdir/Double Key": 1.200000

Get ODB key "/Test/Settings/Subdir/Subsub/Float key": 1.200000

Get ODB key "/Test/Settings/Subdir/Subsub/String Key": "Hello"

Get ODB key "/Test/Settings/Int Array[0...2]": [1,2,3]

Get ODB key "/Test/Settings/Double Array[0...2]": [1.200000,2.300000,3.400000]

Get ODB key "/Test/Settings/String Array[0...2]": ["Hello1,Hello2,Hello3"]

Get ODB key "/Test/Settings/Large Array[0...9]": [0,0,0,0,0,0,0,0,0,0]

Get ODB key "/Test/Settings/Large String": ""

Get ODB key "/Test/Settings/String Array 10[0...9]": [",,,,,,,,,"]

Get ODB key "/Test/Settings/Large String Array 10[0...9]": [",,,,,,,,,"]

"Settings": {

"Int32 Key": 42,

"Bool Key": true,

"Subdir": {

"Int32 key": 123,

"Double Key": 1.200000,

"Subsub": {

"Float key": 1.200000,

"String Key": "Hello"

}

},

"Int Array": [1,2,3],

"Double Array": [1.200000,2.300000,3.400000],

"String Array": ["Hello1","Hello2","Hello3"],

"Large Array": [0,0,0,0,0,0,0,0,0,0],

"Large String": "",

"String Array 10": ["","","","","","","","","",""],

"Large String Array 10": ["","","","","","","","","",""]

}

Set ODB key "/Test/Settings/Int32 Key" = 42

Get ODB key "/Test/Settings/Int32 Key": 42

Set ODB key "/Test/Settings/Int32 Key" = 43

Get ODB key "/Test/Settings/Int32 Key": 43

Set ODB key "/Test/Settings/Int32 Key" = 44

Get ODB key "/Test/Settings/Int32 Key": 44

Set ODB key "/Test/Settings/Int32 Key" = 57

Should be 57: Get ODB key "/Test/Settings/Int32 Key": 57

57

Created ODB key "/Test/Settings/Int64 Key"

Set ODB key "/Test/Settings/Int64 Key" = -1

Get ODB key "/Test/Settings/Int64 Key": -1

AAAA:0xffffffffffffffff

Created ODB key "/Test/Settings/UInt64 Key"

Set ODB key "/Test/Settings/UInt64 Key" = 1311768465173141112

Get ODB key "/Test/Settings/UInt64 Key": 1311768465173141112

0x1234567812345678

Set ODB key "/Test/Settings/Bool Key" = false

Get ODB key "/Test/Settings/Bool Key": false

Set ODB key "/Test/Settings/Bool Key" = true

Set ODB key "/Test/Settings/Subdir/Subsub/String Key" = "Hello"

Get ODB key "/Test/Settings/Subdir/Subsub/String Key": "Hello"

Set ODB key "/Test/Settings/Subdir/Subsub/String Key" = "Hello world!"

Get ODB key "/Test/Settings/Subdir/Subsub/String Key": "Hello world!"

Set ODB key "/Test/Settings/Int32 Key" = 43

Get ODB key "/Test/Settings/Int Array[0...2]": [1,2,3]

Set ODB key "/Test/Settings/Int Array[0...2]" = [10,10,10]

Get ODB key "/Test/Settings/Int Array[1]": [10]

Set ODB key "/Test/Settings/Int Array[1]" = 2

Get ODB key "/Test/Settings/Int Array[1]": [2]

Get ODB key "/Test/Settings/Int Array[0...4]": [10,2,10,0,0]

Set ODB key "/Test/Settings/Int Array[0...4]" = [11,3,11,1,1]

Arrays size is 5

Set ODB key "/Test/Settings/Int Array[10]" = 0

Get ODB key "/Test/Settings/Int Array[10]": [0]

Set ODB key "/Test/Settings/Int Array[10]" = 10

Get ODB key "/Test/Settings/String Array[0...2]": ["Hello1,Hello2,Hello3"]

Set ODB key "/Test/Settings/String Array[0...2]" = ["Hello1","New String","Hello3"]

Get ODB key "/Test/Settings/String Array[2]": ["Hello3"]

Set ODB key "/Test/Settings/String Array[2]" = "Another String"

Set ODB key "/Test/Settings/String Array[3]" = ""

Get ODB key "/Test/Settings/String Array[3]": [""]

Set ODB key "/Test/Settings/String Array[3]" = "One more"

... 468 more lines ...

|

|

3042

|

19 May 2025 |

Jonas A. Krieger | Suggestion | manalyzer root output file with custom filename including run number | Hi all,

Would it be possible to extend manalyzer to support custom .root file names that include the run number?

As far as I understand, the current behavior is as follows:

The default filename is ./root_output_files/output%05d.root , which can be customized by the following two command line arguments.

-Doutputdirectory: Specify output root file directory

-Ooutputfile.root: Specify output root file filename

If an output file name is specified with -O, -D is ignored, so the full path should be provided to -O.

I am aiming to write files where the filename contains sufficient information to be unique (e.g., experiment, year, and run number). However, if I specify it with -O, this would require restarting manalyzer after every run; a scenario that I would like to avoid if possible.

Please find a suggestion of how manalyzer could be extended to introduce this functionality through an additional command line argument at

https://bitbucket.org/krieger_j/manalyzer/commits/24f25bc8fe3f066ac1dc576349eabf04d174deec

The above code would allow the following call syntax: ' ./manalyzer.exe -O/data/experiment1_%06d.root --OutputNumbered '

But note that as is, it would fail if a user specifies an incompatible format such as -Ooutput%s.root .

So a safer, but less flexible option might be to instead have the user provide only a prefix, and then attach %05d.root in the code.
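
A middle ground between the two options would be to check the user-supplied template before using it. The following is only a sketch under stated assumptions, not code from manalyzer or from the linked commit: `IsSafeRunNumberFormat` and `MakeOutputFileName` are hypothetical helper names, and the check accepts a template only if it contains exactly one conversion of the form %d, optionally with a zero flag and a width (e.g. %06d).

```cpp
#include <cctype>
#include <cstddef>
#include <cstdio>
#include <string>

// Hypothetical helper: returns true if `fmt` contains exactly one printf
// conversion and that conversion is an integer one such as %d or %06d.
// A literal percent sign written as "%%" is allowed.
bool IsSafeRunNumberFormat(const std::string& fmt)
{
   int conversions = 0;
   for (std::size_t i = 0; i < fmt.size(); i++) {
      if (fmt[i] != '%')
         continue;
      i++;
      if (i >= fmt.size())
         return false; // dangling '%' at the end
      if (fmt[i] == '%')
         continue; // "%%" is a literal percent sign
      while (i < fmt.size() && std::isdigit(static_cast<unsigned char>(fmt[i])))
         i++; // optional zero flag and field width
      if (i >= fmt.size() || fmt[i] != 'd')
         return false; // anything other than %d (e.g. %s) is rejected
      conversions++;
   }
   return conversions == 1;
}

// Hypothetical helper: expands the template with the run number, or returns
// an empty string if the template is not safe to pass to snprintf().
std::string MakeOutputFileName(const std::string& fmt, int run_number)
{
   if (!IsSafeRunNumberFormat(fmt))
      return "";
   char buf[1024];
   int n = std::snprintf(buf, sizeof(buf), fmt.c_str(), run_number);
   if (n < 0 || static_cast<std::size_t>(n) >= sizeof(buf))
      return ""; // formatting error or name too long
   return std::string(buf);
}
```

With such a check, a template like -Ooutput%s.root would be rejected up front instead of reaching the formatting call, while the caller keeps full control over directory, prefix and number width.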

Thank you for considering these suggestions! |

|

3041

|

16 May 2025 |

Konstantin Olchanski | Bug Report | history_schema.cxx fails to build | > > we have a CI setup which fails since 06.05.2025 to build the history_schema.cxx.

> > There was a major change in this code in the commits fe7f6a6 and 159d8d3.

>

> Missing from this report is critical information: HAVE_PGSQL is set.

>

> I will have to check why it is not set in my development account.

>

The following is needed to build MySQL and PgSQL support in MIDAS,

they were missing on my development machine. MySQL support was enabled

by accident because kde-bloat packages pull in the MySQL (not the MariaDB)

client and server. Fixed now, added to standard list of Ubuntu packages:

https://daq00.triumf.ca/DaqWiki/index.php/Ubuntu#install_missing_packages

apt -y install mariadb-client libmariadb-dev ### mysql client for MIDAS

apt -y install postgresql-common libpq-dev ### postgresql client for MIDAS

>

> I will have to check why it is not set in our bitbucket build.

>

Added MySQL and PgSQL to bitbucket Ubuntu-24 build (sqlite was already enabled).

>

> Thank you for reporting this problem.

>

Fix committed. Sorry about this problem.

K.O. |

|

3040

|

16 May 2025 |

Konstantin Olchanski | Bug Report | history_schema.cxx fails to build | > we have a CI setup which fails since 06.05.2025 to build the history_schema.cxx.

> There was a major change in this code in the commits fe7f6a6 and 159d8d3.

Missing from this report is critical information: HAVE_PGSQL is set.

I will have to check why it is not set in my development account.

I will have to check why it is not set in our bitbucket build.

Thank you for reporting this problem.

K.O. |

|

3039

|

16 May 2025 |

Marius Koeppel | Bug Report | history_schema.cxx fails to build | Hi all,

we have a CI setup which fails since 06.05.2025 to build the history_schema.cxx. There was a major change in this code in the commits fe7f6a6 and 159d8d3.

image: rootproject/root:latest

pipelines:
  default:
    - step:
        name: 'Build and test'
        runs-on:
          - self.hosted
          - linux
        script:
          - apt-get update
          - DEBIAN_FRONTEND=noninteractive apt-get -y install python3-all python3-pip python3-pytest-dependency python3-pytest
          - DEBIAN_FRONTEND=noninteractive apt-get -y install gcc g++ cmake git python3-all libssl-dev libz-dev libcurl4-gnutls-dev sqlite3 libsqlite3-dev libboost-all-dev linux-headers-generic
          - gcc -v
          - cmake --version
          - git clone https://marius_koeppel@bitbucket.org/tmidas/midas.git
          - cd midas
          - git submodule update --init --recursive
          - mkdir build
          - cd build
          - cmake ..
          - make -j4 install

Error is:

/opt/atlassian/pipelines/agent/build/midas/src/history_schema.cxx:5991:10: error: ‘class HsSqlSchema’ has no member named ‘table_name’; did you mean ‘fTableName’?

5991 | s->table_name = xtable_name;

| ^~~~~~~~~~

| fTableName

/opt/atlassian/pipelines/agent/build/midas/src/history_schema.cxx: In member function ‘virtual int PgsqlHistory::read_column_names(HsSchemaVector*, const char*, const char*)’:

/opt/atlassian/pipelines/agent/build/midas/src/history_schema.cxx:6034:14: error: ‘class HsSqlSchema’ has no member named ‘table_name’; did you mean ‘fTableName’?

6034 | if (s->table_name != table_name)

| ^~~~~~~~~~

| fTableName

/opt/atlassian/pipelines/agent/build/midas/src/history_schema.cxx:6065:16: error: ‘struct HsSchemaEntry’ has no member named ‘fNumBytes’

6065 | se.fNumBytes = 0;

| ^~~~~~~~~

/opt/atlassian/pipelines/agent/build/midas/src/history_schema.cxx:6140:30: error: ‘__gnu_cxx::__alloc_traits<std::allocator<HsSchemaEntry>, HsSchemaEntry>::value_type’ {aka ‘struct HsSchemaEntry’} has no member named ‘fNumBytes’

6140 | s->fVariables[j].fNumBytes = tid_size;

| ^~~~~~~~~

At global scope:

cc1plus: note: unrecognized command-line option ‘-Wno-vla-cxx-extension’ may have been intended to silence earlier diagnostics

make[2]: *** [CMakeFiles/objlib.dir/build.make:384: CMakeFiles/objlib.dir/src/history_schema.cxx.o] Error 1

make[2]: *** Waiting for unfinished jobs....

make[1]: *** [CMakeFiles/Makefile2:404: CMakeFiles/objlib.dir/all] Error 2

make: *** [Makefile:136: all] Error 2 |

|

3038

|

05 May 2025 |

Stefan Ritt | Info | db_delete_key(TRUE) | I would actually handle this the way symbolic links are handled under Linux. If you delete a symbolic link, the link gets

deleted and NOT the file the link is pointing to.

So I conclude that the "follow links" is a misconception and should be removed.

Stefan |

|

3037

|

05 May 2025 |

Stefan Ritt | Bug Report | abort and core dump in cm_disconnect_experiment() | I would be in favor of not curing the symptoms, but fixing the cause of the problem. I guess you put the watchdog disable into mhist, right? Usually mhist is called locally, so no mserver should be

involved. If not, I would prefer to propagate the watchdog disable to the mserver side as well, if that's not been done already. Actually I never would disable the watchdog, but set it to a reasonable

maximal value, like a few minutes or so. In that case, the client still gets removed if it crashes for some reason.

My five cents,

Stefan |

|

3036

|

05 May 2025 |

Konstantin Olchanski | Bug Report | abort and core dump in cm_disconnect_experiment() | I noticed that some programs like mhist, if they take too long, abort and dump core at the very end. This is because they forgot to

set/disable the watchdog timeout, and they got removed from ODB and from the SYSMSG event buffer.

mhist is easy to fix, just add the missing call to disable the watchdog, but I also see a similar crash in the mserver which of course requires

the watchdog.

In either case, the crash is in cm_disconnect_experiment() where we know we are shutting down and we know there is no useful information in the

core dump.

I think I will fix it by adding a flag to bm_close_buffer() to bypass/avoid the crash from "we are already removed from this buffer".

Stack trace from mhist:

[mhist,ERROR] [midas.cxx:5977:bm_validate_client_index,ERROR] My client index 6 in buffer 'SYSMSG' is invalid: client name '', pid 0 should be my

pid 3113263

[mhist,ERROR] [midas.cxx:5980:bm_validate_client_index,ERROR] Maybe this client was removed by a timeout. See midas.log. Cannot continue,

aborting...

bm_validate_client_index: My client index 6 in buffer 'SYSMSG' is invalid: client name '', pid 0 should be my pid 3113263

bm_validate_client_index: Maybe this client was removed by a timeout. See midas.log. Cannot continue, aborting...

Program received signal SIGABRT, Aborted.

Download failed: Invalid argument. Continuing without source file ./nptl/./nptl/pthread_kill.c.

__pthread_kill_implementation (no_tid=0, signo=6, threadid=<optimized out>) at ./nptl/pthread_kill.c:44

warning: 44 ./nptl/pthread_kill.c: No such file or directory

(gdb) bt

#0 __pthread_kill_implementation (no_tid=0, signo=6, threadid=<optimized out>) at ./nptl/pthread_kill.c:44

#1 __pthread_kill_internal (signo=6, threadid=<optimized out>) at ./nptl/pthread_kill.c:78

#2 __GI___pthread_kill (threadid=<optimized out>, signo=signo@entry=6) at ./nptl/pthread_kill.c:89

#3 0x00007ffff71df27e in __GI_raise (sig=sig@entry=6) at ../sysdeps/posix/raise.c:26

#4 0x00007ffff71c28ff in __GI_abort () at ./stdlib/abort.c:79

#5 0x00005555555768b4 in bm_validate_client_index_locked (pbuf_guard=...) at /home/olchansk/git/midas/src/midas.cxx:5993

#6 0x000055555557ed7a in bm_get_my_client_locked (pbuf_guard=...) at /home/olchansk/git/midas/src/midas.cxx:6000

#7 bm_close_buffer (buffer_handle=1) at /home/olchansk/git/midas/src/midas.cxx:7162

#8 0x000055555557f101 in cm_msg_close_buffer () at /home/olchansk/git/midas/src/midas.cxx:490

#9 0x000055555558506b in cm_disconnect_experiment () at /home/olchansk/git/midas/src/midas.cxx:2904

#10 0x000055555556d2ad in main (argc=<optimized out>, argv=<optimized out>) at /home/olchansk/git/midas/progs/mhist.cxx:882

(gdb)

Stack trace from mserver:

#0 __pthread_kill_implementation (no_tid=0, signo=6, threadid=138048230684480) at ./nptl/pthread_kill.c:44

44 ./nptl/pthread_kill.c: No such file or directory.

(gdb) bt

#0 __pthread_kill_implementation (no_tid=0, signo=6, threadid=138048230684480) at ./nptl/pthread_kill.c:44

#1 __pthread_kill_internal (signo=6, threadid=138048230684480) at ./nptl/pthread_kill.c:78

#2 __GI___pthread_kill (threadid=138048230684480, signo=signo@entry=6) at ./nptl/pthread_kill.c:89

#3 0x00007d8ddbc4e476 in __GI_raise (sig=sig@entry=6) at ../sysdeps/posix/raise.c:26

#4 0x00007d8ddbc347f3 in __GI_abort () at ./stdlib/abort.c:79

#5 0x000059beb439dab0 in bm_validate_client_index_locked (pbuf_guard=...) at /home/dsdaqdev/packages_common/midas/src/midas.cxx:5993

#6 0x000059beb43a859c in bm_get_my_client_locked (pbuf_guard=...) at /home/dsdaqdev/packages_common/midas/src/midas.cxx:6000

#7 bm_close_buffer (buffer_handle=<optimized out>) at /home/dsdaqdev/packages_common/midas/src/midas.cxx:7162

#8 0x000059beb43a89af in bm_close_all_buffers () at /home/dsdaqdev/packages_common/midas/src/midas.cxx:7256

#9 bm_close_all_buffers () at /home/dsdaqdev/packages_common/midas/src/midas.cxx:7243

#10 0x000059beb43afa20 in cm_disconnect_experiment () at /home/dsdaqdev/packages_common/midas/src/midas.cxx:2905

#11 0x000059beb43afdd8 in rpc_check_channels () at /home/dsdaqdev/packages_common/midas/src/midas.cxx:16317

#12 0x000059beb43b0cf5 in rpc_server_loop () at /home/dsdaqdev/packages_common/midas/src/midas.cxx:15858

#13 0x000059beb4390982 in main (argc=9, argv=0x7ffc07e5bed8) at /home/dsdaqdev/packages_common/midas/progs/mserver.cxx:387

K.O. |

|

3035

|

05 May 2025 |

Konstantin Olchanski | Info | db_delete_key(TRUE) | I was working on an odb corruption crash inside db_delete_key() and I noticed

that I did not test db_delete_key() with follow_links set to TRUE. Then I noticed

that nobody anywhere seems to use db_delete_key() with follow_links set to TRUE.

Instead of testing it, can I just remove it?

This feature existed since day 1 (1st commit) and it does something unexpected

compared to filesystem "/bin/rm": as best I can tell, it removes the link

*and* whatever the link points to. For people familiar with "/bin/rm", this is

somewhat unexpected and by my thinking, if nobody ever added such a feature to

"/bin/rm", it is probably not considered generally useful or desirable. (I would

think it dangerous, it removes not 1 but 2 files, the 2nd file would be in some

other directory far away from where we are).

By this thinking, I should remove "follow_links" (actually just make it do nothing,

to reduce the disturbance to other source code). db_delete_key() should work

similar to /bin/rm aka the unlink() syscall.
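For comparison, here is a minimal sketch of the /bin/rm behaviour referred to above, assuming a POSIX system: unlink() on a symbolic link removes only the link and leaves the target in place. The function names and the scratch directory under /tmp are made up for illustration; nothing here touches the ODB or db_delete_key().

```cpp
#include <stdlib.h>     // mkdtemp
#include <fcntl.h>      // open
#include <sys/stat.h>   // lstat
#include <unistd.h>     // symlink, unlink, rmdir, close
#include <string>

// True if `path` exists. lstat() looks at the link itself, not at its target.
static bool path_exists(const std::string& path) {
    struct stat st;
    return lstat(path.c_str(), &st) == 0;
}

// Hypothetical demo: create a file and a symlink to it in a scratch
// directory, unlink() the symlink, and report whether the link is gone
// while the target is still there.
bool target_survives_unlink_of_link() {
    char tmpl[] = "/tmp/unlink_demo_XXXXXX";
    if (mkdtemp(tmpl) == nullptr) return false;
    const std::string dir = tmpl;
    const std::string target = dir + "/target";
    const std::string link = dir + "/link";

    int fd = open(target.c_str(), O_CREAT | O_WRONLY, 0600);
    if (fd < 0) return false;
    close(fd);
    if (symlink(target.c_str(), link.c_str()) != 0) return false;

    unlink(link.c_str());                      // removes the link only
    const bool link_gone = !path_exists(link);
    const bool target_alive = path_exists(target);

    unlink(target.c_str());                    // clean up the scratch files
    rmdir(dir.c_str());
    return link_gone && target_alive;
}
```

Calling target_survives_unlink_of_link() returns true when the target file outlives removal of the link, which is the behaviour proposed here for db_delete_key().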

K.O. |

|

3034

|

05 May 2025 |

Konstantin Olchanski | Bug Fix | Bug fix in SQL history | A bug was introduced to the SQL history in 2022 that made renaming of variable names not work. This is now fixed.

break commit:

54bbc9ed5d65d8409e8c9fe60b024e99c9f34a85

fix commit:

159d8d3912c8c92da7d6d674321c8a26b7ba68d4

P.S.

This problem was caused by an unfortunate design of the c++ class system. If I want to add more data to an existing

class, I write this:

class old_class {

int i,j,k;

};

class bigger_class: public old_class {

int additional_variable;

};

But if I have this:

struct x { int i,j; };

class y {

std::vector<x> array_of_x;

};

and I want to add "k" to "x", c++ has no way to do this. The history code has this workaround:

class bigger_y: public y

{

std::vector<int> array_of_k;

};

int bigger_y::foo(int n) {

printf("%d %d %d\n", array_of_x[n].i, array_of_x[n].j, array_of_k[n]);

}

The problem is that it is not obvious that "array_of_x" and "array_of_k" are connected

and they can easily get out of sync (if elements are added or removed). This is the

bug that happened in the history code. I now added assert(array_of_x.size()==array_of_k.size())

to offer at least some protection going forward.
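A minimal sketch of that kind of protection, not the actual history code: the two parallel arrays are kept private and every operation checks the size invariant with assert(). The class name xk_table and its methods are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct x { int i, j; };

// Hypothetical wrapper around two parallel arrays that must stay in sync:
// element n of array_of_k belongs to element n of array_of_x.
class xk_table {
public:
    void add(int i, int j, int k) {
        array_of_x.push_back(x{i, j});
        array_of_k.push_back(k);
        check_in_sync();
    }

    void remove(std::size_t n) {
        check_in_sync();
        assert(n < array_of_x.size());
        // erase the same position from both arrays
        array_of_x.erase(array_of_x.begin() + static_cast<std::ptrdiff_t>(n));
        array_of_k.erase(array_of_k.begin() + static_cast<std::ptrdiff_t>(n));
        check_in_sync();
    }

    // Uses both arrays together, so it relies on the invariant.
    int sum(std::size_t n) const {
        check_in_sync();
        return array_of_x[n].i + array_of_x[n].j + array_of_k[n];
    }

    std::size_t size() const { return array_of_x.size(); }

private:
    void check_in_sync() const {
        assert(array_of_x.size() == array_of_k.size());
    }

    std::vector<x> array_of_x;
    std::vector<int> array_of_k;
};
```

Because both vectors can only be changed through add() and remove(), they cannot drift apart silently; the assert only catches mistakes made inside the class itself.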

P.S. As a final solution I think I want to completely separate file history and sql history code,

they have more differences than things in common.

K.O. |

|

3033

|

30 Apr 2025 |

Stefan Ritt | Bug Report | ODBXX : ODB links in the path names ? | Indeed this was missing from the very beginning. I added it, please report back if it's not working.

Stefan |

|

3032

|

30 Apr 2025 |

Pavel Murat | Info | New ODB++ API | it is a very convenient interface! Does it support the ODB links in the path names ? -- thanks, regards, Pasha |

|

3031

|

30 Apr 2025 |

Stefan Ritt | Info | New ODB++ API | I had to change the ODBXX API: https://bitbucket.org/tmidas/midas/commits/273c4e434795453c0c6bceb46bac9a0d2d27db18

The old C API is case-insensitive, meaning db_find_key("name") returns a key "name" or "Name" or "NAME". We can discuss if this is good or bad, but that's how it has been for 30 years.

I now realized that the ODBXX API is case-sensitive for keys, so o["NAME"] does not return any key "name". Rather, it tries to create a new key, which of course fails. I therefore changed

the ODBXX to become case-insensitive like the old C API.
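As an illustration of the case-insensitive matching described above, here is a minimal sketch. It is not the MIDAS or ODBXX implementation; key_names_equal() and find_key() are hypothetical helpers that work on a plain list of key names and only handle ASCII case.

```cpp
#include <cctype>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical helper: compare two key names ignoring ASCII case.
bool key_names_equal(const std::string& a, const std::string& b) {
    if (a.size() != b.size()) return false;
    for (std::size_t n = 0; n < a.size(); n++) {
        // cast to unsigned char first, as required by std::toupper
        if (std::toupper(static_cast<unsigned char>(a[n])) !=
            std::toupper(static_cast<unsigned char>(b[n])))
            return false;
    }
    return true;
}

// Hypothetical lookup: index of the first key matching `name`
// case-insensitively, or -1 if there is none.
int find_key(const std::vector<std::string>& keys, const std::string& name) {
    for (std::size_t n = 0; n < keys.size(); n++) {
        if (key_names_equal(keys[n], name)) return static_cast<int>(n);
    }
    return -1;
}
```

With this kind of lookup, asking for "NAME" finds an existing key "name" instead of falling through to an attempt to create a second key.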

Stefan |

|

3030

|

29 Apr 2025 |

Pavel Murat | Bug Report | ODBXX : ODB links in the path names ? | Dear MIDAS experts,

does the ODBXX interface to ODB currently support ODB links in the path names? From what I see so far, it fails to do so,

but I could be doing something else wrong...

-- thanks, regards, Pasha |

|

3029

|

16 Apr 2025 |

Thomas Lindner | Info | MIDAS workshop (online) Sept 22-23, 2025 | Dear MIDAS enthusiasts,

We are planning a fifth MIDAS workshop, following on from previous successful

workshops in 2015, 2017, 2019 and 2023. The goals of the workshop include:

- Getting updates from MIDAS developers on new features, bug fixes and planned

changes.

- Getting reports from MIDAS users on how they are using MIDAS and what problems

they are facing.

- Making plans for future MIDAS changes and improvements

We are planning to have an online workshop on Sept 22-23, 2025 (it will coincide

with a visit of Stefan to TRIUMF). We are tentatively planning to have a four

hour session on each day, with the sessions timed for morning in Vancouver and

afternoon/evening in Europe. Sorry, the sessions are likely to again not be well

timed for our colleagues in Asia.

We will provide exact times and more details closer to the date. But I hope

people can mark the dates in their calendars; we are keen to hear from as much of

the MIDAS community as possible.

Best Regards,

Thomas Lindner |

|

3028

|

08 Apr 2025 |

Lukas Mandokk | Info | MSL Syntax Highlighting Extension for VSCode (Release) | Hello everyone,

I just wanted to let you know that I published an MSL Syntax Highlighting Extension for VSCode.

It is still in a quite early stage, so there might be some missing keywords and edge cases which are not fully handled. So in case you find any issues or have suggestions for improvements, I am happy to implement them. Also I only tested it with a custom theme (One Monokai), so it might look very different with the default theme and other ones.

The extension is called "MSL Syntax Highlighter" and can be found in the extension marketplace in VSCode. (vscode marketplace: https://marketplace.visualstudio.com/items?itemName=LukasMandok.msl-syntax-highlighter, github repo: https://github.com/LukasMandok/msl-syntax-highlighter)

One additional remark:

- To keep a consistent style with existing themes, one is a bit limited in regard to colors. For this reason a distinction between LOOP and IF blocks is not really possible without writing a custom theme. A workaround would be to add the theming in the custom user settings (explained in the readme). |

|

3027

|

07 Apr 2025 |

Stefan Ritt | Suggestion | Sequencer ODBSET feature requests | If people are simultaneously editing scripts this is indeed an issue, which probably can never be resolved by technical means. It needs communication between the users.

For the main script some ODB locking might look like:

- First person clicks on "Edit", system checks that file is not locked and sequencer is not running, then goes into edit mode

- When entering edit mode, the editor puts a lock into the ODB, like "Script lock = pc1234".

- When another person clicks on "Edit", the system replies "File currently being edited on pc1234"

- When the first person saves the file or closes the web browser, the lock gets removed.

- Since a browser can crash without removing a lock, we need some automatic lock recovery: if the lock is there, the next user gets a message "File currently locked. Click "override" to "steal" the lock and edit the file".

All that is not 100% perfect, but will probably cover 99% of the cases.
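The steps above could be sketched roughly as follows. This is only an in-memory stand-in, assuming the lock is a single host name string as in the "pc1234" example; the class ScriptLock and its methods are hypothetical, and a real version would keep the owner in the ODB rather than in a member variable.

```cpp
#include <string>

// Hypothetical stand-in for a single lock entry that would live in the ODB.
// An empty owner means "not locked".
class ScriptLock {
public:
    // "Edit" clicked: succeeds only if nobody holds the lock
    // and the sequencer is not running.
    bool try_acquire(const std::string& host, bool sequencer_running) {
        if (sequencer_running || !owner_.empty()) return false;
        owner_ = host;
        return true;
    }

    // File saved or browser closed: only the current owner can release.
    bool release(const std::string& host) {
        if (owner_.empty() || owner_ != host) return false;
        owner_.clear();
        return true;
    }

    // "override" clicked: take the lock over from a stale owner,
    // e.g. after a browser crash.
    void steal(const std::string& host) { owner_ = host; }

    const std::string& owner() const { return owner_; }

private:
    std::string owner_;
};
```

Since the lock is keyed by host name only, a second try_acquire() from the same host also fails, so opening the same file twice for editing is refused.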

There is still the problem with all other scripts. In principle we would need a lock for each file, which is not so simple to implement (it would need arrays of files and host names).

Another issue will arise if a user opens a file twice for editing. The second attempt will fail, but I believe this is what we want.

A hostname for the lock is the easiest we can get. It would be better to also have a user name, but since the midas API does not require a login, we won't have a user name handy. And it would be too tedious to ask "if you want to edit this file, enter your username".

Just some thoughts.

Stefan |

|

3026

|

07 Apr 2025 |

Zaher Salman | Suggestion | Sequencer ODBSET feature requests |

| Lukas Gerritzen wrote: |

I also encountered a small annoyance in the current workflow of editing sequencer files in the browser:

- Load a file

- Double-click it to edit it, acknowledge the "To edit the sequence it must be opened in an editor tab" dialog

- A new tab opens

- Edit something, click "Start", acknowledge the "Save and start?" dialog (which pops up even if no changes are made)

- Run the script

- Double-click to make more changes -> another tab opens

After a while, many tabs with the same file are open. I understand this may be considered "user error", but perhaps the sequencer could avoid opening redundant tabs for the same file, or prompt before doing so?

Thanks for considering these suggestions! |

The original reason for restricting edits in the first tab is that it is used to reflect the state of the sequencer, i.e. the file that is currently loaded in the ODB.

Imagine two users are working in parallel on the same file, each preparing their own sequence. One finishes editing and starts the sequencer. How would the second person know that by now the file was changed and is running?

I am open to suggestions to minimize the number of clicks and/or other options to make the first tab editable while making it safe and visible to all other users. Maybe a lock mechanism in the ODB can help here.

Zaher |

|

3025

|

02 Apr 2025 |

Stefan Ritt | Suggestion | Sequencer ODBSET feature requests | And there is one more argument:

We have a Python expert in our development team who already wrote the Python-to-C bindings. That means when running a Python

script, we can already start/stop runs, write/read to the ODB etc. We only have to get the single stepping going, which seems feasible to

me, since there are library functions like inspect.currentframe() and traceback.extract_stack(). For single-stepping there are debug APIs

like debugpy. With Lua we really would have to start from scratch.

Stefan |

|