| ID | Date | Author | Topic | Subject |

|

1096

|

19 Aug 2015 |

Konstantin Olchanski | Bug Report | Sequencer limits | >

> See LOOP doc

> LOOP cnt, 10

> ODBGET /foo/bflag, bb

> IF $bb==1 THEN

> SET cnt, 10

> ELSE

> ...

>

Looks like we have PE |

|

1095

|

19 Aug 2015 |

Pierre-Andre Amaudruz | Bug Report | Sequencer limits | These issues have been addressed by Stefan during his visit at Triumf last month.

The latest git has those fixes.

> While I know some of those limits/problems have already been reported from

> DEAP (and maybe corrected in the last version), I am recording them here:

>

> Bugs (not working as it should):

> - "SCRIPT" does not seem to take the parameters into account

Fixed

> - The operators for WAIT are incorrectly set:

> the default ">=" and ">" are correct, but "<=", "<", "==" and "!=" are all using

> ">=" for the test.

Fixed

>

> Possible improvements:

> - in LOOP, how can I get the index of the LOOP? I used an extra variable that I

> increment, but is there a better way?

See LOOP doc

LOOP cnt, 10

ODBGET /foo/bflag, bb

IF $bb==1 THEN

SET cnt, 10

ELSE

...

> - PARAM is giving "string" (or a bool) whose size is set by the user input. The

> side effect is that if I am making a loop starting at "1", the incrementation

> will loop at "9" -> "1". If I start at "01", the incrementation will give "2.",

> "3.",... "9.", "10"... The latter is probably what most people would use.

Fixed

> - ODBGet (and ODBSet?) does not seem to be able to take a variable as a path... I

> was trying to use an array whose index would be incremented.

To be checked. |

|

1094

|

19 Aug 2015 |

Pierre Gorel | Bug Report | Sequencer limits | While I know some of those limits/problems have already been reported from

DEAP (and maybe corrected in the last version), I am recording them here:

Bugs (not working as it should):

- "SCRIPT" does not seem to take the parameters into account

- The operators for WAIT are incorrectly set:

the default ">=" and ">" are correct, but "<=", "<", "==" and "!=" are all using

">=" for the test.

Possible improvements:

- in LOOP, how can I get the index of the LOOP? I used an extra variable that I

increment, but is there a better way?

- PARAM is giving "string" (or a bool) whose size is set by the user input. The

side effect is that if I am making a loop starting at "1", the incrementation

will loop at "9" -> "1". If I start at "01", the incrementation will give "2.",

"3.",... "9.", "10"... The latter is probably what most people would use.

- ODBGet (and ODBSet?) does not seem to be able to take a variable as a path... I

was trying to use an array whose index would be incremented. |

|

1093

|

14 Aug 2015 |

Konstantin Olchanski | Info | Merged - improved midas network security | > [local:Online:S]RPC hosts>set "Allowed hosts[1]" "host.psi.ch"

> [ODBEdit,INFO] Reloading RPC hosts access control list via hotlink callback

Yes, this debug message can be removed after (say) one or two weeks. I find it useful at the moment.

> The trick not to have to "guess" a remote name is quite useful. I'm happy it even made it into the documentation.

Yes, this came from CERN/ALPHA felabview where we did not have a full list of labview machines that were sending us labview data.

> There is however an

> important shortcoming in the documentation. The old documentation had a "quick start" section, which allowed people to quickly

> set up and run a midas system. This is still missing. And it should now contain a link to the "Security" page, so that people can quickly

> set up remote programs.

Yes, Suzannah was just asking me about the same - we will need to review the quick start guide and the documentation about the mserver and midas rpc.

K.O. |

|

1092

|

14 Aug 2015 |

Stefan Ritt | Info | Merged - improved midas network security | I tested the new scheme and am quite happy with it. Just one minor thing: when I change the ACL, I get messages from all attached programs, like:

[local:Online:S]RPC hosts>set "Allowed hosts[1]" "host.psi.ch"

[ODBEdit,INFO] Reloading RPC hosts access control list via hotlink callback

09:05:11 [mserver,INFO] Reloading RPC hosts access control list via hotlink callback

09:05:11 [ODBEdit,INFO] Reloading RPC hosts access control list via hotlink callback

[ODBEdit,INFO] Reloading RPC hosts access control list via hotlink callback

[ODBEdit,INFO] Reloading RPC hosts access control list via hotlink callback

09:05:11 [mserver,INFO] Reloading RPC hosts access control list via hotlink callback

09:05:11 [ODBEdit,INFO] Reloading RPC hosts access control list via hotlink callback

09:05:11 [mserver,INFO] Reloading RPC hosts access control list via hotlink callback

09:05:11 [ODBEdit,INFO] Reloading RPC hosts access control list via hotlink callback

While this is good for debugging, I would remove it so as not to confuse the average user.

The trick not to have to "guess" a remote name is quite useful. I'm happy it even made it into the documentation. There is however an

important shortcoming in the documentation. The old documentation had a "quick start" section, which allowed people to quickly

set up and run a midas system. This is still missing. And it should now contain a link to the "Security" page, so that people can quickly

set up remote programs. |

|

1091

|

12 Aug 2015 |

Konstantin Olchanski | Info | mhttpd HTTPS/SSL server updated | > > > mhttpd uses the latest release of mongoose 4.2

HTTPS support is completely broken in mongoose.c between July 28th (1bc9d8eae48f51ceb73ffd918046cfe74d286909)

and August 12th 2015 (fdc5a80a0a9ca54cba794d7c1131add7f55f112f).

I accidentally broke it by a wrong check against absence of EC_KEY in prehistoric openssl shipped with SL4.

As a result, the ECDHE ciphers were enabled but did not work - google chrome complained about "obsolete cryptography",

firefox failed to connect at all.

Please update src/mongoose.c to the latest version if you are using https in mhttpd. (as you should)

Sorry about this problem.

K.O. |

|

1090

|

12 Aug 2015 |

Konstantin Olchanski | Info | Merged - improved midas network security | > New git branch "feature/rpcsecurity" implements these security features:

Branch was merged into main midas with a few minor changes:

1) RPC access control list is now an array of strings - "/Experiment/Security/RPC hosts/Allowed hosts" - this fixes the previous limit of 32 bytes for host name length.

1a) the access control list array is self-growing - it will have at least 10 empty entries at the end at all times.

2) All clients db_watch() the access control list and automatically reload it when it is changed - no need to restart clients. (suggested by Stefan)

3) The "mserver -a hostname" switch for manually expanding the mserver access control list had to be removed because it stopped working with reloading from db_watch() - the ODB list will always overwrite

anything manually added by "-a".
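
The list behaviour described in (1) and (1a) can be sketched roughly as below. This is only an illustration under stated assumptions, not the MIDAS implementation: the function names host_allowed() and ensure_trailing_empty() are hypothetical, the list is modelled as a plain std::vector of strings instead of an ODB array, and host names are compared by exact string match.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical helper: true if `host` matches one of the non-empty entries.
// Empty slots (the padding at the end of the list) never match anything.
bool host_allowed(const std::vector<std::string>& allowed, const std::string& host)
{
    for (const std::string& entry : allowed)
        if (!entry.empty() && entry == host)
            return true;
    return false;
}

// Hypothetical helper: grow the list so that it always ends with at least
// `min_empty` empty slots, mimicking the "self-growing" array described above.
void ensure_trailing_empty(std::vector<std::string>& allowed, std::size_t min_empty = 10)
{
    std::size_t trailing = 0;
    for (auto it = allowed.rbegin(); it != allowed.rend() && it->empty(); ++it)
        ++trailing;
    if (trailing < min_empty)
        allowed.resize(allowed.size() + (min_empty - trailing));
    assert(allowed.size() >= min_empty);  // post-condition of the padding step
}
```

With a list holding "localhost" and "host.psi.ch", ensure_trailing_empty() pads it to 12 entries, and host_allowed() accepts only those two names.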

The text below is corrected for these changes:

>

> - all UDP ports are bound to the localhost interface - connections from outside are not possible

> - by default out of the box MIDAS RPC TCP ports are bound to the localhost interface - connections from the outside are not possible.

>

> This configuration is suitable for testing MIDAS on a laptop and for running a simple experiment where all programs run on one machine.

>

> This configuration is secure (connections from the outside are not possible).

>

> This configuration makes corporate security people happy - MIDAS ports do not show up on network port scans (nmap & etc). (except for the mhttpd

> web ports).

>

> The change in binding UDP ports is incompatible with previous versions of MIDAS (except on MacOS, where UDP ports were always bound to localhost).

> All MIDAS programs should be rebuilt and restarted, otherwise ODB hotlinks and some other stuff will not work. If rebuilding all MIDAS programs is

> impossible (for example I have one magic MIDAS frontend that cannot be rebuilt), one can force the old (insecure) behavior by creating a file

> .UDP_BIND_HOSTNAME in the experiment directory (next to .ODB.SHM).

>

> The mserver will still work in this localhost-restricted configuration - one should use "odbedit -h localhost" to connect. Multiple mserver instances on

> the same machine - using different TCP ports via "-p" and ODB "/Experiment/midas server port" - are still supported.

>

> To run MIDAS programs on remote machines, one should change the ODB setting "/Experiment/Security/Enable non-localhost RPC" to "yes" and

> add the hostnames of all remote machines that will run MIDAS programs to the MIDAS RPC access control list in ODB "/Experiment/Security/RPC hosts/Allowed hosts".

>

> To avoid "guessing" the host names expected by MIDAS, do this: set "enable non-localhost rpc" to "yes" and restart the mserver. Then go to the remote

> machine and try to start the MIDAS program, i.e. "odbedit -h daq06". This will bomb and there will be a message in the midas log file saying - rejecting

> connection from unallowed host 'ladd21.triumf.ca'. Add this host to "/Experiment/Security/RPC hosts/Allowed hosts". After you add this hostname to "RPC hosts"

> you should see messages in midas.log about reloading the access control list, try connecting again, it should work now.

>

> If MIDAS clients have to connect from random hosts (i.e. dynamically assigned random DHCP addresses), one can disable the host name checks by

> setting ODB "/experiment/security/Disable RPC hosts check" to "yes". This configuration is insecure and should only be done on a private network

> behind a firewall.

> |

|

1089

|

10 Aug 2015 |

Konstantin Olchanski | Forum | bk_create change | > bk_create()

> frontend.cpp:954: error: invalid conversion from ‘DWORD**’ to ‘void**’

Yes, the original bk_create() prototype was wrong, implying a pointer to the data instead of a pointer-to-the-pointer-to-the-data.

The prototype was corrected recently (within the last 2 years?), but as an unfortunate side-effect, strict C++

compilers refuse to automatically convert "xxx**" to "void**" (even though conversion of xxx* to void* is

accepted) and a cast is now required.
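
A small stand-alone sketch of the effect, for illustration only. The function mock_bk_create() is a hypothetical stand-in that copies just the parameter list from the compiler error quoted above, it is not the MIDAS function. The typedefs and the kDemoTypeId constant are placeholders as well. The implicit conversion is refused because a "void**" would let the callee store a pointer of any type into the caller's "DWORD*".

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Stand-ins for the MIDAS typedefs; hypothetical, for illustration only.
typedef std::uint32_t DWORD;
typedef std::uint16_t WORD;
const WORD kDemoTypeId = 0;  // placeholder, not the real TID_DWORD value

// Mock with the parameter list from the compiler error quoted above.
// NOT the MIDAS implementation: it just hands back the start of the buffer.
void mock_bk_create(void* pevent, const char* /*name*/, WORD /*type*/, void** pdata)
{
    *pdata = pevent;
}

// Writes n increasing DWORDs through the mock and returns the bytes written.
std::size_t fill_bank(void* pevent, std::size_t n)
{
    DWORD* pdata = nullptr;

    // mock_bk_create(pevent, "DATA", kDemoTypeId, &pdata);
    //   -> error: invalid conversion from 'DWORD**' to 'void**'
    mock_bk_create(pevent, "DATA", kDemoTypeId, (void**)&pdata);  // explicit cast

    assert(pdata != nullptr);
    DWORD* start = pdata;
    for (std::size_t i = 0; i < n; ++i)
        *pdata++ = static_cast<DWORD>(i);
    return static_cast<std::size_t>(pdata - start) * sizeof(DWORD);
}
```

An alternative that avoids the cast is to pass the address of a temporary "void*" and convert it afterwards with static_cast<DWORD*>.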

K.O. |

|

1088

|

10 Aug 2015 |

Wes Gohn | Forum | bk_create change | After pulling the newest version of midas, our compilation would fail on

bk_create, with the error:

frontend.cpp:954: error: invalid conversion from ‘DWORD**’ to ‘void**’

frontend.cpp:954: error: initializing argument 4 of ‘void bk_create(void*,

const char*, WORD, void**)’

I noticed a change to the function in midas.c that changes the type of pdata

from a pointer to a double pointer, and changes

*((void **) pdata) = pbk + 1;

to

*pdata = pbk + 1;

The fix is simple. In each call to bk_create, I changed it to:

bk_create(pevent, bk_name, TID_DWORD, (void**)&pdata);

I suggest updating the documentation. Also, why the change? Does it add some

improvement in efficiency? |

|

1086

|

29 Jul 2015 |

Konstantin Olchanski | Info | ROOT support in flux | The preliminary version of the .bashrc blurb looks like this

(a couple of flaws:

1) identification of CentOS7 is incomplete - please send me a patch

fixed -> 2) there should be a check for root-config already in the PATH, as on Ubuntu the ROOT package may be installed in /usr and root-config may already be in the path - please send me a patch).

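# test with "command -v": "[ -x $(which root-config) ]" is also true when root-config is not found

if command -v root-config > /dev/null 2>&1; then

# root already in the PATH

true

elif [ "$(uname -i)" == "i386" ]; then

# 32-bit machine

. /daq/daqshare/olchansk/root/root_v5.34.01_SL62_32/bin/thisroot.sh

true

elif [ "$(lsb_release -r -s)" == "7.1.1503" ]; then

# CentOS 7.1.1503: ROOT setup is commented out for now

#. /daq/daqshare/olchansk/root/root_v5.34.32_SL66_64/bin/thisroot.sh

true

else

# everything else: 64-bit SL6 build

. /daq/daqshare/olchansk/root/root_v5.34.32_SL66_64/bin/thisroot.sh

true

fi |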

|

1085

|

29 Jul 2015 |

Konstantin Olchanski | Info | mlogger improvements - CRC32C, SHA-2 | > A set of improvements to mlogger is in:

Preliminary support for CRC32-zlib, CRC32C, SHA-256 and SHA-512 is in. Checksums are computed correctly, but plumbing configuration is

preliminary. Good enough for testing and benchmarking.

To enable checksums, set channel compression:

100 - no checksum (LZ4 compression)

11100 - CRC32-zlib checksum

22100 - CRC32C

33100 - SHA-256

44100 - SHA-512

checksums for both uncompressed and compressed data will be computed and reported into midas.log.

To compare:

CRC32-zlib is for compatibility with gzip and zlib tools

CRC32C is for maximum speed

SHA-256 and SHA-512 are for maximum data security

To remember:

- CRC32-zlib is the CRC-32 computation from the gzip/png/zlib library. It should not be confused with "adler32": Adler-32 is a different checksum that the zlib library also provides.

- CRC32C is the most recently improved version of the CRC32 family of checksums. The implementation is from Mark Adler (same Adler as adler32) and uses

hardware acceleration on recent Intel CPUs.

- SHA-256 and SHA-512 are checksums currently accepted as cryptographically secure. One of them is supposed to be faster on 64-bit

machines. I implement both for benchmarking.

"Cryptographically secure" means "nobody has a practical way to construct two different files with the same checksum".

In simpler words, the file contents cannot change without breaking the checksum - by software bug, by hardware fault, by benign or malicious

intent.

The CRC family of checksum functions was never cryptographically secure.

MD5 and SHA-1 used to be secure but are no longer considered to be so. MD5 was definitely broken as different files with the same checksum

have been discovered or constructed.
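
To make the difference between the non-cryptographic checksums concrete, here is a minimal bit-by-bit sketch. It is not the mlogger code and not Mark Adler's hardware-accelerated implementation, and the function and constant names are my own. CRC-32 (gzip/zlib/PNG) and CRC32C use the same algorithm with different polynomials. Adler-32 is a separate, simpler sum-based checksum.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Reflected polynomials (assumed names, standard values).
const std::uint32_t kPolyCrc32  = 0xEDB88320u;  // CRC-32 as used by gzip/zlib/PNG
const std::uint32_t kPolyCrc32c = 0x82F63B78u;  // CRC-32C (Castagnoli)

// Slow but simple bit-by-bit reflected CRC with a selectable polynomial.
std::uint32_t crc32_reflected(const void* data, std::size_t len, std::uint32_t poly)
{
    const unsigned char* p = static_cast<const unsigned char*>(data);
    std::uint32_t crc = 0xFFFFFFFFu;
    for (std::size_t i = 0; i < len; ++i) {
        crc ^= p[i];
        for (int bit = 0; bit < 8; ++bit)
            crc = (crc >> 1) ^ ((crc & 1u) ? poly : 0u);
    }
    return crc ^ 0xFFFFFFFFu;
}

// Adler-32: two running sums modulo 65521, packed into one 32-bit word.
std::uint32_t adler32_simple(const void* data, std::size_t len)
{
    const unsigned char* p = static_cast<const unsigned char*>(data);
    std::uint32_t a = 1, b = 0;
    for (std::size_t i = 0; i < len; ++i) {
        a = (a + p[i]) % 65521u;
        b = (b + a) % 65521u;
    }
    return (b << 16) | a;
}
```

For the standard test string "123456789" the two CRCs give 0xCBF43926 (CRC-32) and 0xE3069283 (CRC32C), which is a convenient way to check which variant a tool computes.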

K.O. |

|

1084

|

29 Jul 2015 |

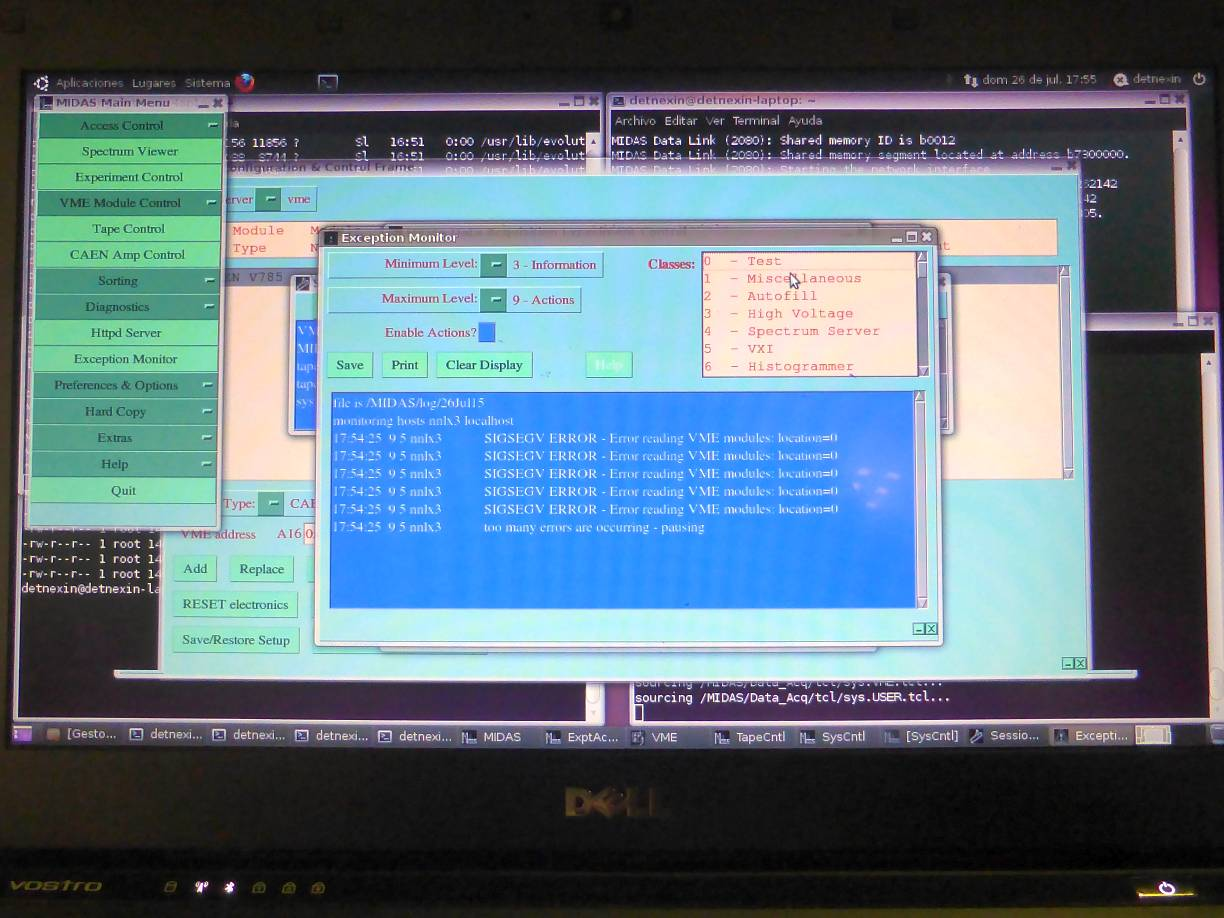

Konstantin Olchanski | Forum | error | > Hello, I am new to the forum. We have been running an experiment for a week with no

> problems. Now we added a detector and we found an error. Even when we go back to our

> previous configuration, the error keeps appearing. Could someone please help

> us? You can find the error in the attachment. Thanks!

The error reported is SIGSEGV, which is a software fault (as opposed to a hardware fault like "printer is on fire" or "disk full").

Next step is to identify which program crashed and attach a debugger to the crashing executable or to the core dump.

You will use the debugger to generate the stack trace which will identify exactly the place where the program failed.

I recommend that one should always attach the stack trace to the problem reports on this forum. These stack traces are sometimes long and

scary and it is a bit of an art to read them, so do not worry if you do not understand what they say.

If you use "gdb", I recommend that you post your full debugger session:

bash> gdb myprogram

gdb> run my command line arguments

*crash*

gdb> where

... stack trace

gdb> quit

(If you use threads, please generate a stack trace for each thread)

If the crash location is inside midas code, congratulations, you may have found a bug in midas.

If the crash location is in your code, you have some debugging to do.

If you do not understand what I am talking about (gdb? core dump?), please read "unix/linux software development for dummies" book first.

K.O. |

|

1083

|

29 Jul 2015 |

Wes Gohn | Forum | error | SIGSEGV is a segmentation fault. Most often it means some ODB parameter is out of bounds or there is

an invalid memcpy somewhere in your code.

> Hello, I am new to the forum. We have been running an experiment for a week with no

> problems. Now we added a detector and we found an error. Even when we go back to our

> previous configuration, the error keeps appearing. Could someone please help

> us? You can find the error in the attachment. Thanks! |

|

1082

|

29 Jul 2015 |

Stefan Ritt | Bug Report | jset/ODBSet using true/false for booleans | See bitbucket for the solution.

https://bitbucket.org/tmidas/midas/issues/29/jset-odbset-using-true-false-for-booleans#comment-20550474 |

|

1081

|

29 Jul 2015 |

Javier Praena | Forum | error | Hello, I am new to the forum. We have been running an experiment for a week with no

problems. Now we added a detector and we found an error. Even when we go back to our

previous configuration, the error keeps appearing. Could someone please help

us? You can find the error in the attachment. Thanks! |

| Attachment 1: sigsegv-error.jpg

|

|

|

1080

|

28 Jul 2015 |

Konstantin Olchanski | Info | Plans for improving midas network security | New git branch "feature/rpcsecurity" implements these security features:

- all UDP ports are bound to the localhost interface - connections from outside are not possible

- by default out of the box MIDAS RPC TCP ports are bound to the localhost interface - connections from the outside are not possible.
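
What "bound to the localhost interface" means at the socket level can be sketched as below. This is a generic POSIX example, not code from the "feature/rpcsecurity" branch, and the function names are hypothetical. Binding to INADDR_LOOPBACK instead of INADDR_ANY is what keeps the port unreachable from other machines.

```cpp
#include <arpa/inet.h>
#include <cassert>
#include <netinet/in.h>
#include <sys/socket.h>
#include <unistd.h>

// Creates a UDP socket bound to 127.0.0.1 on `port` (0 = let the OS choose).
// Returns the socket descriptor, or -1 on failure.
int open_localhost_udp(unsigned short port)
{
    int fd = socket(AF_INET, SOCK_DGRAM, 0);
    if (fd < 0)
        return -1;

    sockaddr_in addr{};
    addr.sin_family = AF_INET;
    addr.sin_port = htons(port);
    // INADDR_ANY here would expose the port on every network interface.
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);

    if (bind(fd, reinterpret_cast<sockaddr*>(&addr), sizeof(addr)) != 0) {
        close(fd);
        return -1;
    }
    return fd;
}

// Returns true if the socket's local address is 127.0.0.1.
bool is_bound_to_loopback(int fd)
{
    sockaddr_in addr{};
    socklen_t len = sizeof(addr);
    if (getsockname(fd, reinterpret_cast<sockaddr*>(&addr), &len) != 0)
        return false;
    return addr.sin_addr.s_addr == htonl(INADDR_LOOPBACK);
}
```

A socket opened this way does not show up as reachable in a port scan from another host, which matches the behaviour described for the new default.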

This configuration is suitable for testing MIDAS on a laptop and for running a simple experiment where all programs run on one machine.

This configuration is secure (connections from the outside are not possible).

This configuration makes corporate security people happy - MIDAS ports do not show up on network port scans (nmap & etc). (except for the mhttpd

web ports).

The change in binding UDP ports is incompatible with previous versions of MIDAS (except on MacOS, where UDP ports were always bound to localhost).

All MIDAS programs should be rebuilt and restarted, otherwise ODB hotlinks and some other stuff will not work. If rebuilding all MIDAS programs is

impossible (for example I have one magic MIDAS frontend that cannot be rebuilt), one can force the old (insecure) behavior by creating a file

.UDP_BIND_HOSTNAME in the experiment directory (next to .ODB.SHM).

The mserver will still work in this localhost-restricted configuration - one should use "odbedit -h localhost" to connect. Multiple mserver instances on

the same machine - using different TCP ports via "-p" and ODB "/Experiment/midas server port" - are still supported.

To run MIDAS programs on remote machines, one should change the ODB setting "/Experiment/Security/Enable non-localhost RPC" to "yes" and

add the hostnames of all remote machines that will run MIDAS programs to the MIDAS RPC access control list in ODB "/Experiment/Security/RPC hosts".

To avoid "guessing" the host names expected by MIDAS, do this: set "enable non-localhost rpc" to "yes" and restart the mserver. Then go to the remote

machine and try to start the MIDAS program, i.e. "odbedit -h daq06". This will bomb and there will be a message in the midas log file saying - rejecting

connection from unallowed host 'ladd21.triumf.ca'. Add this host to "/Experiment/Security/RPC hosts". After you add this hostname to "RPC hosts" and

restart the mserver, the connection should be successful. When "RPC hosts" is fully populated, one should restart all midas programs - the access

control list is only loaded at program startup.

If MIDAS clients have to connect from random hosts (i.e. dynamically assigned random DHCP addresses), one can disable the host name checks by

setting ODB "/experiment/security/Disable RPC hosts check" to "yes". This configuration is insecure and should only be done on a private network

behind a firewall.

After some more testing this branch will be merged into the main midas.

K.O. |

|

1079

|

24 Jul 2015 |

Konstantin Olchanski | Info | Plans for improving midas network security | There are a number of problems with network security in midas (as separate from

web/http/https security).

1) too many network sockets are unnecessarily bound to the external network interface

instead of localhost (UDP ports are already bound to localhost on MacOS).

2) by default the RPC ports of each midas program accept connections and RPC commands

from anywhere in the world (an access control list is already implemented via

/Experiment/Security/Rpc Hosts, but not active by default)

3) mserver also has an access control list but it is not integrated with the access control list

for the RPC ports.

4) it is difficult to run midas in the presence of firewalls (midas programs listen on random

network ports - cannot be easily added to firewall rules)

There is a new git branch "feature/rpcsecurity" where I am addressing some of these

problems:

1) UDP sockets are only used for internal communications (hotlinks & etc) within one

machine, so they should be bound to the localhost address and become invisible to external

machines. This change breaks binary compatibility with old clients - they have to be

relinked with the new midas library or hotlinks & etc will stop working. If some clients cannot

be rebuilt (I have one like this), I am preserving the old way by checking for a special file in

the experiment directory (same place as ODB.SHM). (done)

2) if one runs on a single machine, does not use the mserver and does not have clients

running on other machines, then all the RPC ports can be bound to localhost. (this kills the

MacOS popups about "odbedit wants to connect to the Internet"). (partially done)

This (2) will become the new default - out of the box, midas will not listen to any external

network connections - making it very secure.

To use the mserver, one will have to change the ODB setting "/Experiment/Security/Enable

external RPC connections" and restart all midas programs (I am looking for a better name for

this odb setting).

3) the out-of-the-box default access control list for RPC connections will be set to

"localhost", which will reject all external connections, even when they are enabled by (2). One

will be required to enter the names of all machines that will run midas clients in

"/Experiment/Security/Rpc hosts". (already implemented in main midas, but default access

control list is empty meaning everybody is permitted)

4) the mserver will be required to attach to some experiment and will use this same access

control list to restrict access to the main mserver listener port. Right now the mserver listens

on this port without attaching to any experiment and accepts the access control list via

command line arguments. I think after this change a single mserver will still be able to service

multiple experiments (TBD).

5) I am adding an option to fix TCP port numbers for MIDAS programs via

"/Experiment/Security/Rpc ports/fename = (int)5555". Once a remote frontend is bound to a

fixed port, appropriate openings can be made in the firewall, etc. Default port number value

will be 0 meaning "use random port", same as now.

One problem remains with initial connecting to the mserver. The client connects to the main

mserver listener port (easy to firewall), but then the mserver connects back to the client - this

reverse connection is difficult to firewall and this handshaking is difficult to fix in the midas

sources. It will probably remain unresolved for now.

K.O. |

|

1077

|

24 Jul 2015 |

Konstantin Olchanski | Info | MAX_EVENT_SIZE removed | The define for MAX_EVENT_SIZE was removed from midas.h.

Replacing it is DEFAULT_MAX_EVENT_SIZE set to 4 MiBytes and DEFAULT_BUFFER_SIZE

set to 32 MiBytes.

For a long time now MIDAS has not had a hardcoded maximum event size or buffer size,

and this change merely renames the define to reflect its current function.

The actual maximum event size is set by ODB /Experiment/MAX_EVENT_SIZE.

The actual event buffer sizes are set by ODB "/Experiment/Buffer sizes/SYSTEM" & co

K.O. |

|

1076

|

23 Jul 2015 |

Konstantin Olchanski | Info | mlogger improvements | > A set of improvements to mlogger is in:

> The current test version implements the following selections of "compression":

>

> 80 - ROOT output through the new driver (use rmlogger executable)

> ...

Additional output modes through the new output drivers:

81 - FTP output

82 - FTP output with LZ4 compression

The format of the "Channels/xxx/Settings/Filename" for FTP output is like this:

"/localhost, 5555, ftpuser, ftppwd, ., run%05dsub%05d.mid"

- the leading slash is required (for now)

- localhost is the FTP server hostname

- 5555 is the FTP server port number

- ftpuser and ftppwd are the FTP login. password is stored and transmitted in clear text for extra security

- "." is the output directory on the FTP server

- the rest is the file name in the usual format.
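
The field layout above can be illustrated with a small parser. This is only a sketch of the format as described in this message, not the parsing code used by mlogger. The struct FtpTarget and the function parse_ftp_filename() are hypothetical names, and the sketch assumes exactly six comma-separated fields with no commas inside the file name.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical container for the six fields of the FTP "Filename" setting.
struct FtpTarget {
    std::string host, user, password, directory, file_pattern;
    int port = 0;
};

// Strips leading and trailing spaces and tabs.
static std::string trim(const std::string& s)
{
    const char* ws = " \t";
    std::size_t b = s.find_first_not_of(ws);
    if (b == std::string::npos)
        return "";
    std::size_t e = s.find_last_not_of(ws);
    return s.substr(b, e - b + 1);
}

// Parses "/host, port, user, password, directory, filename".
// Returns false if the leading slash or any field is missing.
bool parse_ftp_filename(const std::string& setting, FtpTarget& out)
{
    if (setting.empty() || setting[0] != '/')
        return false;  // the leading slash is required

    std::vector<std::string> fields;
    std::stringstream ss(setting.substr(1));
    std::string item;
    while (std::getline(ss, item, ','))
        fields.push_back(trim(item));
    if (fields.size() != 6)
        return false;

    out.host = fields[0];
    out.port = std::atoi(fields[1].c_str());
    out.user = fields[2];
    out.password = fields[3];
    out.directory = fields[4];
    out.file_pattern = fields[5];
    return !out.host.empty() && out.port > 0;
}
```

Applied to the example string above, this yields host "localhost", port 5555, directory "." and the file pattern "run%05dsub%05d.mid".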

For testing this driver, I run the ftp server like this:

# vsftpd -olisten=YES -obackground=no -olisten_port=5555 -olisten_address=127.0.0.1 -oport_promiscuous=yes -oconnect_from_port_20=no -oftp_data_port=6666

K.O. |

|