| ID | Date | Author | Topic | Subject |

| 2259 | 11 Jul 2021 | Konstantin Olchanski | Suggestion | MidasConfig.cmake usage |

> > > So you say "nuke ${MIDAS_LIBRARIES}" and "fix ${MIDAS_INCLUDE}". Ok.

> > A more moderate option ...

>

> For the record, I did not disappear. I have a very short time window

> to complete commissioning the alpha-g daq (now that the network

> and the event builder are cooperating). To add to the fun, our high voltage

> power supply turned into a pumpkin, so plotting voltages and currents

> on the same history plot at the same time (like we used to be able to do)

> went up in priority.

>

In the latest update, find_package(midas) should work correctly: the include path is right and the library list is right.

Please test.

I find that the cmake install(export) method is simpler on the user side (just one line of code) and easier to support on the midas side (the config file is auto-generated).

I request that proponents of the find_package(midas) method contribute the documentation and an example of how to use it (see my other message).

K.O.

| 2260 | 11 Jul 2021 | Konstantin Olchanski | Bug Report | cmake with CMAKE_INSTALL_PREFIX fails |

Big thanks to Andreas S. for getting most of this figured out. I now understand much better how cmake installs things and how it generates config files, both find_package(midas) style and install(export) style.

With the latest updates, CMAKE_INSTALL_PREFIX should work correctly. Now that I understand how it works, how to use it and how to test it, it should not break again.

For posterity, my comments on Andreas's pull request:

Thank you for providing this code, it was very helpful. In the end I implemented things slightly differently. It took me a while to understand that I have to provide 2 “install” modes: for your case, I need to “install” the header files and everything works “the cmake way”; for our normal case, we use the include files in place and have to add all the git submodules to the include path. I am quite happy with the result. K.O.

K.O.

| 2262 | 13 Jul 2021 | Konstantin Olchanski | Info | MidasConfig.cmake usage |

> $MIDASSYS/drivers/class/

> $MIDASSYS/drivers/device

> $MIDASSYS/mscb/src/

> $MIDASSYS/src/mfe.cxx

>

> I guess this can be easily added by defining a MIDAS_SOURCES in MidasConfig.cmake, so

> that I can do things like:

>

> add_executable(my_fe

> myfe.cxx

> $(MIDAS_SOURCES}/src/mfe.cxx

> ${MIDAS_SOURCES}/drivers/class/hv.cxx

> ...)

1) Remove ${MIDAS_SOURCES}/src/mfe.cxx from add_executable() and add "mfe" to target_link_libraries() instead, as in examples/experiment/frontend:

add_executable(frontend frontend.cxx)

target_link_libraries(frontend mfe midas)

2) ${MIDAS_SOURCES}/drivers/class/hv.cxx surely is ${MIDASSYS}/drivers/...

If MIDAS is built with a non-default CMAKE_INSTALL_PREFIX, "drivers" and co. are not available, as we do not "install" them. Where MIDASSYS should point in this case is anybody's guess. To run MIDAS, $MIDASSYS/resources is needed, but we do not install those either, so they are not available under CMAKE_INSTALL_PREFIX, and setting MIDASSYS to the same place as CMAKE_INSTALL_PREFIX would not work.

I still think this whole business of installing into a non-default CMAKE_INSTALL_PREFIX location has not been thought through well enough: too much thinking about how cmake works and not enough thinking about how MIDAS works and how MIDAS is used. A good example of "my tool is a hammer, everything else must have the shape of a nail".

K.O.

| 2263 | 13 Jul 2021 | Konstantin Olchanski | Bug Report | cmake question |

> cmake check and mate in 1 move. please help.

> -std=c++11 and -std=c++14 collision...

I have a solution implemented for this, but I am not happy with it, and Stefan is not happy with it. See the discussion: https://bitbucket.org/tmidas/midas/commits/50a15aa70a4fe3927764605e8964b55a3bb1732b

K.O.

| 2264 | 14 Jul 2021 | Konstantin Olchanski | Bug Report | cmake question |

> > cmake check and mate in 1 move. please help.

> > -std=c++11 and -std=c++14 collision...

>

> I have a solution implemented for this, I am not happy with it, Stefan is not happy with it. See

> discussion: https://bitbucket.org/tmidas/midas/commits/50a15aa70a4fe3927764605e8964b55a3bb1732b

>

I figured it out; the solution is to use:

target_compile_features(midas PUBLIC cxx_std_11)

This is how it works:

- centos-7 (g++ has c++11 off by default): -std=gnu++11 is added automatically (not -std=c++11, but probably correct, as some c++11 functions were available as gnu extensions)

- ubuntu-20.04 LTS without ROOT: nothing added (I guess correct, g++ has c++11 enabled by default)

- ubuntu-20.04 LTS with -std=c++14 from ROOT: nothing added, c++14 as requested by ROOT is in effect.

- macos without ROOT: -std=gnu++11 is added automatically

- macos with -std=c++11 from ROOT: ditto, so both -std=c++11 and -std=gnu++11 are present, in this order; wrong-ish, but works.

And good luck figuring this out just from the cmake documentation:

https://cmake.org/cmake/help/latest/command/target_compile_features.html
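A quick way to confirm what these flags actually do on a given machine is to compile a tiny file with the same flags and inspect __cplusplus (201103L for C++11, 201402L for C++14, 201703L for C++17). The sketch below is not part of midas and the function names are made up. The __STRICT_ANSI__ test assumes GCC or Clang (MSVC reports __cplusplus differently). Since -std=c++11 and -std=gnu++11 give the same __cplusplus value, that macro is what tells them apart.

```cpp
// Sketch: report the C++ language level and mode this file was compiled with.
// Build it with the same flags cmake uses for the midas target to see the combined
// effect of target_compile_features(... cxx_std_11) and any -std= flag from ROOT.
#include <string>

// Translate a __cplusplus value into a readable standard name.
std::string cxx_level(long value) {
    if (value >= 202002L) return "C++20 or newer";
    if (value >= 201703L) return "C++17";
    if (value >= 201402L) return "C++14";
    if (value >= 201103L) return "C++11";
    return "pre-C++11";
}

// GCC and Clang define __STRICT_ANSI__ for -std=c++NN and leave it undefined
// for -std=gnu++NN; both modes report the same __cplusplus value.
bool gnu_extensions_enabled() {
#ifdef __STRICT_ANSI__
    return false;
#else
    return true;
#endif
}

// One-line summary, e.g. "C++14 (strict ISO)" or "C++11 (GNU extensions)".
std::string describe_build() {
    return cxx_level(__cplusplus) +
           (gnu_extensions_enabled() ? " (GNU extensions)" : " (strict ISO)");
}
```

Printing describe_build() from a small test program linked against the midas target shows which combination each platform ends up with.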

K.O.

| 2265 | 14 Jul 2021 | Konstantin Olchanski | Bug Fix | changes in history plots |

Moving in the direction of this proposal. The history plot editor is updated according to it. The remaining missing piece is the "show raw value" buttons and the code behind them.

Changes:

- "show factor and offset" moved to the top of the page, "off" by default

- factor and offset (if not zero) are automatically migrated to the formula field (if it is empty); one needs to save the panel for this to take effect.
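The migration rule above can be sketched as follows, together with the example from the quoted proposal below (factor=2, offset=10 becomes the formula "2*x+10", with "show raw value" enabled). This is not the mhttpd code. The HistoryVar struct and the function names are hypothetical. Treating factor 1 and offset 0 as "nothing to migrate", and resetting them after migration, is an assumption about what "if not zero" means.

```cpp
#include <sstream>
#include <string>

// Hypothetical per-variable settings of a history panel (names are illustrative).
struct HistoryVar {
    double factor = 1.0;
    double offset = 0.0;
    std::string formula;          // empty: plot the value as stored in the history
    bool show_raw_value = false;  // true: display the raw value, plot the formula result
};

// Build "factor*x+offset", e.g. factor=2, offset=10 gives "2*x+10".
std::string factor_offset_formula(double factor, double offset) {
    std::ostringstream s;
    s << factor << "*x";
    if (offset > 0) s << "+" << offset;
    else if (offset < 0) s << "-" << -offset;
    return s.str();
}

// Move old-style factor/offset into an empty formula field.
// Assumption: factor 1 and offset 0 mean "no scaling", so nothing is migrated.
void migrate(HistoryVar& v) {
    bool no_scaling = (v.factor == 1.0 && v.offset == 0.0);
    if (!v.formula.empty() || no_scaling) return;
    v.formula = factor_offset_formula(v.factor, v.offset);
    v.show_raw_value = true;  // keep showing raw numbers, as the old factor/offset did
    v.factor = 1.0;           // reset so the scaling is not applied twice
    v.offset = 0.0;
}
```

Keeping the migration a one-way rewrite of the stored settings is what lets the new history code drop factor and offset entirely.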

K.O.

> > I am updating the history plots.

> > So the idea is to use this computation:

> > y_position_on_plot = offset + factor*(formula(history_value) - voffset)

>

> Stefan and myself did some brain storming on zoom. Writing it down the way I remember it.

>

> - we distilled the gist of the problem - the numerical values we show in the plot labels and in hover-over-the-graph

> are before formula is applied or after the formula is applied?

>

> - I suggested a universal solution using a double formula: use formula1 for one case;

> use formula2 for the other case;

> use formula1 for "physics calibration", use formula2 for factor and offset for composite plots:

> numeric_value = formula1(history_value)

> plotted_value = formula2(numeric_value)

>

> - we agree that this is way too complicated, difficult to explain and difficult to coherently present in the history editor

>

> - Stefan suggested a simple solution, a checkbox labeled "show raw value" next to each history variable. by default, the

> value after the formula is plotted and displayed. if checked, the raw value (before the formula) is displayed, and the

> value after the formula is plotted. (so this works the same as the factor and offset on the old history plots).

>

> - if "show raw value" is enabled, the numerical values shown will be inconsistent against the labels on the vertical axis.

> Our solution it to turn the axis labels off. (for composite plots, like oscillator frequency in Hz vs oscillator

> temperature in degC, both scaled to see their correlation, the vertical axis is unit-less "arbitrary units", of course)

>

> - to simplify migration of old history plots that use custom factor and offset settings, we think in the direction of

> automatically moving them to the "formula". (factor=2, offset=10 automatically populates formula with "2*x+10", "show raw

> value" checked/enabled). Thus we can avoid implementing factor and offset in the new history code (an unwelcome

> complication).

>

> - I think this covers all the use cases I have seen in the past, so we will move in this direction.

>

> K.O.

| 2266 | 14 Jul 2021 | Konstantin Olchanski | Bug Fix | changes in history plots |

> Moving in the direction of this proposal. Remaining missing piece is the "show

> raw value" buttons and code behind them.

Added the "show raw value" button and updated the on-page instructions.

I think this is the final layout of the history panel editor; conversion to html+javascript will be done "as is". If you have suggestions to improve the layout (add/remove/move things around, etc.), please shout out (on the elog here or by direct email to me).

I am thinking in the direction of changing the control flow of the history editor:

- a click on the midas "history" menu button redirects to

- the current history panel selection (with a checkbox to open old history plots); a click on the "new plot" button redirects to

- a new page for creating new plots. This will present a list of all history variables; a click on a variable name creates a new history panel containing just this one variable and redirects to it.

In other words, to see the history for any history variable:

- click on "history" menu button

- click on "new"

- click on desired history variable

- see this history plot

From here, click on the "wheel" button to open the existing history panel editor and add any additional variables, change settings, etc.

In the history panel editor, I am thinking in the direction of replacing the existing drop-down selection of history variables (not very workable for large experiments) with an overlay dialog showing all history variables, with checkboxes to select them; basically the same history variable selection page as described above. Not sure yet how this will work visually.

K.O.

| 2270 | 19 Aug 2021 | Konstantin Olchanski | Bug Report | select() FD_SETSIZE overrun |

I am looking at the mlogger in the ALPHA anti-hydrogen experiment at CERN. It is mysteriously misbehaving during run start and stop.

The problem turns out to be with the select() system call.

The corresponding FD_SET(), FD_ISSET() & co. operate on an array of fixed size FD_SETSIZE, 1024 in my case. But the socket number is 1409, so we overrun the fd_set array. Ouch.

I see that none of the uses of select() in midas have protection against this. (We should probably move away from select() to the newer poll() or whatever it is.)
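A minimal sketch of both options, assuming a POSIX system. wait_readable_select() refuses descriptors >= FD_SETSIZE instead of letting FD_SET() write past the end of the fd_set. wait_readable_poll() does the same check with poll(), which takes an array of struct pollfd and has no FD_SETSIZE limit. The function names are illustrative; they are not existing midas functions.

```cpp
#include <cerrno>
#include <poll.h>
#include <sys/select.h>
#include <unistd.h>

// select() variant. Returns 1 if readable, 0 on timeout, -1 on error.
// FD_SET() on fd >= FD_SETSIZE would write past the end of fd_set, so refuse it.
int wait_readable_select(int fd, int timeout_ms) {
    if (fd < 0 || fd >= FD_SETSIZE) {
        errno = EINVAL;
        return -1;
    }
    fd_set rfds;
    FD_ZERO(&rfds);
    FD_SET(fd, &rfds);
    struct timeval tv;
    tv.tv_sec = timeout_ms / 1000;
    tv.tv_usec = (timeout_ms % 1000) * 1000;
    int n = select(fd + 1, &rfds, nullptr, nullptr, &tv);
    if (n <= 0) return n;
    return FD_ISSET(fd, &rfds) ? 1 : 0;
}

// poll() variant: same return values, works for any descriptor number.
// Hang-up and error also count as "readable": a read() will not block.
int wait_readable_poll(int fd, int timeout_ms) {
    struct pollfd pfd;
    pfd.fd = fd;
    pfd.events = POLLIN;
    pfd.revents = 0;
    int n = poll(&pfd, 1, timeout_ms);
    if (n <= 0) return n;
    return (pfd.revents & (POLLIN | POLLHUP | POLLERR)) ? 1 : 0;
}

// Usage example: an empty pipe is not readable; after writing one byte it is.
bool pipe_demo() {
    int fds[2];
    if (pipe(fds) != 0) return false;
    bool ok = wait_readable_poll(fds[0], 0) == 0;
    ok = ok && write(fds[1], "x", 1) == 1;
    ok = ok && wait_readable_select(fds[0], 100) == 1;
    ok = ok && wait_readable_poll(fds[0], 100) == 1;
    close(fds[0]);
    close(fds[1]);
    return ok;
}
```

Converting a select() loop over many sockets follows the same pattern: one struct pollfd per socket, then check revents of each entry after poll() returns.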

Why does mlogger open so many file descriptors? The usual: scaling problems in the history. The old midas history does not reuse file descriptors, so it opens the same 3 history files (.hst, .idx, etc.) for each history event. The new FILE history opens just one file per history event. But if the number of events is bigger than 1024, we run into the same trouble.

(BTW, the system limit on file descriptors is 4096 on the affected machine and 1024 on some other machines; see "limit" or "ulimit -a".)
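The same limits can be compared from inside a program. This is a small sketch, assuming POSIX getrlimit(), and the function names are hypothetical. It checks whether the soft RLIMIT_NOFILE limit (what "ulimit -n" prints) is larger than FD_SETSIZE. If it is, the process can be handed descriptor numbers that select() cannot handle, as with the 4096 limit on the affected machine.

```cpp
#include <sys/resource.h>
#include <sys/select.h>

// True if a process allowed soft_limit open descriptors can get descriptor
// numbers >= FD_SETSIZE (descriptors are numbered 0 .. soft_limit-1).
bool limit_exceeds_fd_setsize(rlim_t soft_limit) {
    return soft_limit == RLIM_INFINITY ||
           soft_limit > static_cast<rlim_t>(FD_SETSIZE);
}

// True if select()/FD_SET() code in this process could overrun fd_set.
bool select_can_overrun() {
    struct rlimit rl;
    if (getrlimit(RLIMIT_NOFILE, &rl) != 0) return true;  // unknown: assume the worst
    return limit_exceeds_fd_setsize(rl.rlim_cur);
}
```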

K.O.

| 2274 | 06 Sep 2021 | Konstantin Olchanski | Forum | mhttpd crash |

> [mhttpd,ERROR] [mhttpd.cxx:18886:on_work_complete,ERROR] Should not send response to request from socket 28 to socket 26, abort!

> Can anybody hint me what is going wrong here?

> The bad thing on the crash is, that sometimes it is leading to a "chain-reaction" killing multiple midas frontends, which essentially stop the experiment.

This is my code. I am the culprit. I had a bit of discussion about this with Stefan.

Bottom line: something is rotten in the multithreading code inside mhttpd, and under unknown conditions it sends the wrong data into the wrong socket. This makes midas web pages really confused (RPC replies processed as CSS files, HTML code processed as RPC replies, a mess). This wrong data is cached by the browser, so restarting mhttpd does not fix the web pages. So, a mess.

I find this impossible to replicate, so I cannot debug it and cannot fix it. The best I was able to do is add a check for socket numbers, and thankfully it catches the condition before web browser caches become poisoned. So broken web pages are replaced by an mhttpd crash.

This situation reinforces my opinion that multi-threading and C++ classes "do not mix" (like H2 and O2 do not mix). If you write a multithreaded C++ program and it works, good for you; if there is a malfunction, good luck with it. C++ just does not have any built-in support for debugging typical multithreading problems. I think others have come to the same conclusion and invented all these new "safe" programming languages, like Rust and Go.

Back to your troubles.

1) If you see a way to replicate this crash, or some way to reliably cause the crash within 5-10 minutes after starting mhttpd, please let me know. I can work with that, and I very much wish to fix this problem.

2) My "wrong socket" check calls abort() to produce a core dump. In my experience these core dumps are useless for debugging the present problem: there is just no way to examine the state of each thread and of each http request by hand using gdb.

3) This abort() causes linux to write a core dump. This takes a long time, and I think it causes other MIDAS programs to stop, time out and die. You can try to fix this by disabling core dumps (set "enable core dumps" to "false" in ODB and set the core dump size limit to 0), or by changing abort() to exit(). (You can also disable the "wrong socket" check, but most likely you will not like the result.)

4) Run mhttpd inside a script: "while (1) { start mhttpd; sleep 1 sec; rinse, repeat; }" (run mhttpd without "-D", yes?)
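Suggestions 3 and 4 can be combined in a small restart wrapper. This is a minimal sketch, assuming POSIX fork()/execvp()/waitpid(). supervise() is a hypothetical helper, not part of midas. It starts the program and sets the core dump size limit to 0 in the child before exec. It then waits for the program to exit or crash, sleeps, and starts it again. The max_runs parameter exists only so the loop can terminate; for mhttpd one would call something like supervise({"mhttpd"}, INT_MAX, 1), i.e. without "-D". The ODB "enable core dumps" setting still needs to be "false" as described in point 3.

```cpp
#include <cerrno>
#include <string>
#include <vector>
#include <sys/resource.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

// Start the program in args (args[0] is looked up in PATH), wait for it to exit
// or crash, pause delay_sec seconds, and start it again, at most max_runs times.
// Returns the number of runs started, or -1 if args is empty or fork() fails.
int supervise(const std::vector<std::string>& args, int max_runs, unsigned delay_sec) {
    if (args.empty()) return -1;
    // Build argv before fork(): the child should do as little as possible before exec.
    std::vector<char*> argv;
    for (const std::string& a : args) argv.push_back(const_cast<char*>(a.c_str()));
    argv.push_back(nullptr);

    int runs = 0;
    while (runs < max_runs) {
        pid_t pid = fork();
        if (pid < 0) return -1;
        if (pid == 0) {
            // Child: no core dumps (like bash "ulimit -c 0", which sets soft and hard
            // limits; an unprivileged program cannot raise the hard limit again).
            struct rlimit no_core;
            no_core.rlim_cur = 0;
            no_core.rlim_max = 0;
            setrlimit(RLIMIT_CORE, &no_core);
            execvp(argv[0], argv.data());
            _exit(127);  // exec failed, e.g. program not found
        }
        ++runs;
        int status = 0;
        while (waitpid(pid, &status, 0) < 0 && errno == EINTR) {
            // interrupted by a signal: keep waiting for this child
        }
        if (runs < max_runs) sleep(delay_sec);  // "sleep 1 sec; rinse, repeat"
    }
    return runs;
}
```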

In other news, the mongoose web server library has a new version available; they again changed their multithreading scheme (I think it is an improvement). If I update mhttpd to this new version, it is very likely that the code with the "wrong socket" bug will be deleted (with new bugs added to replace old bugs, of course).

K.O.

| 2284 | 11 Oct 2021 | Konstantin Olchanski | Forum | test |

test, no email. K.O.

| 2285 | 11 Oct 2021 | Konstantin Olchanski | Forum | test |

> test, no email. K.O.

test reply, no email. K.O.

| 2286 | 11 Oct 2021 | Konstantin Olchanski | Forum | test |

> > test, no email. K.O.

>

> test reply, no email. K.O.

test attachment, no email. K.O. |

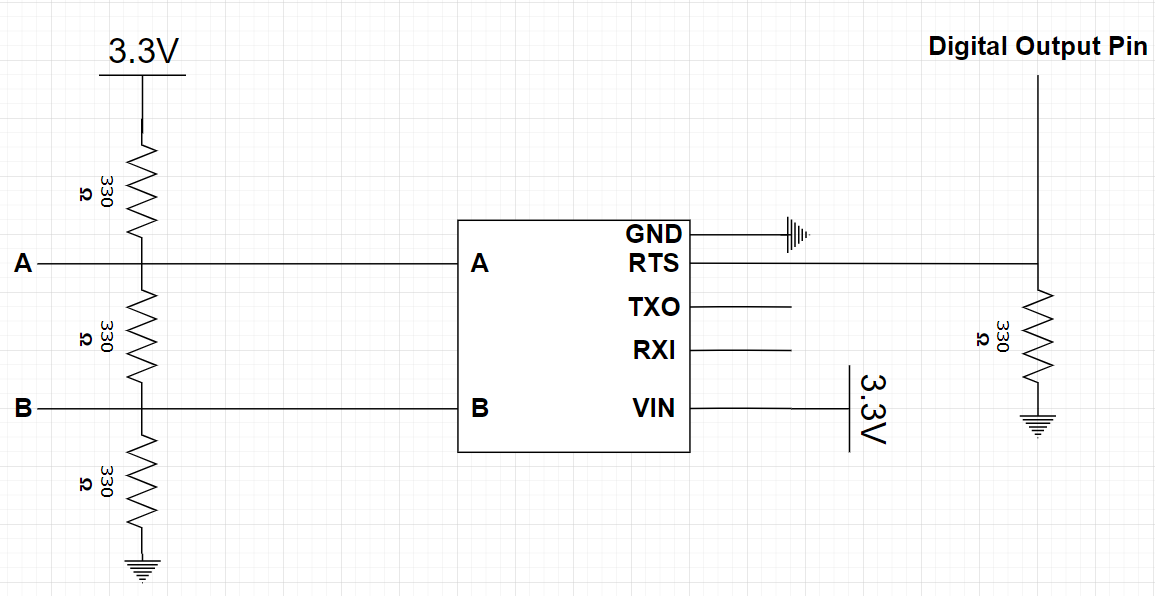

| Attachment 1: image.png

|

|

|

2287

|

11 Oct 2021 |

Konstantin Olchanski | Forum | test | > > > test, no email. K.O.

> >

> > test reply, no email. K.O.

>

> test attachment, no email. K.O.

test email. K.O.

| 2288 | 11 Oct 2021 | Konstantin Olchanski | Forum | midas forum updated, moved |

The midas forum software (elogd) was updated to the latest version and moved from our old server (ladd00.triumf.ca) to our new server (daq00.triumf.ca).

The following URLs should work:

https://daq00.triumf.ca/elog-midas/Midas/ (new URL)

https://midas.triumf.ca/elog/Midas/ (old URL, redirects to daq00)

https://midas.triumf.ca/forum (link from midas wiki)

The configuration on the old server ladd00.triumf.ca is quite tangled between several virtual hosts and several DNS CNAMEs. I think I got all the redirects correct, and all old URLs and links in old emails etc. still work.

If you see something wrong, please reply to this message here or email me directly.

K.O.

| 2311 | 26 Jan 2022 | Konstantin Olchanski | Forum | .gz files |

> I adapted our analyzer to compile against the manalyzer included in the midas repo.

> TMReadEvent: error: short read 0 instead of -1193512213

I think this problem is fixed in the latest version of midasio and manalyzer, but this update was not pulled into midas yet. (Canada has been in the middle of a covid wave since December.)

What happens is that you do not have the gzip library (zlib) installed on your computer, so your analyzer is built without support for gzip.

The fix is done the hard way: the gzip library is no longer optional, but required.

You do not say what linux you use, so I cannot give exact instructions, but for:

ubuntu: apt -y install libz-dev

centos7: installed by default

centos8: installed by default

debian11/raspbian: same as ubuntu
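If you are unsure whether a given file is compressed, a gzip file can be recognized by its first two bytes, 0x1f 0x8b (the gzip magic number defined in RFC 1952). Here is a minimal sketch of such a check. It is not the midasio code, and the function name is illustrative. A reader built without zlib could use a check like this to report that the file is compressed, instead of trying to parse compressed bytes as MIDAS data.

```cpp
#include <cstdio>

// Returns true if the file starts with the gzip magic bytes 0x1f 0x8b (RFC 1952).
// Returns false if the file cannot be opened or is shorter than 2 bytes.
bool is_gzip_file(const char* path) {
    std::FILE* f = std::fopen(path, "rb");
    if (!f) return false;
    unsigned char magic[2] = {0, 0};
    std::size_t n = std::fread(magic, 1, 2, f);
    std::fclose(f);
    return n == 2 && magic[0] == 0x1f && magic[1] == 0x8b;
}
```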

K.O.

| 2312 | 26 Jan 2022 | Konstantin Olchanski | Forum | Device driver for modbus |

> Dear all, does anyone have an example of for a device driver using modbus or modbus tcp to communicate with a device and willing to share it? Thanks.

I have not seen any modbus devices recently, so all my code and examples are quite old.

A basic modbus/tcp communication driver is in the midas repo:

daq00:midas$ find . | grep -i modbus

./drivers/divers/ModbusTcp.cxx

./drivers/divers/ModbusTcp.h

daq00:midas$

This driver worked for communication to a modbus PLC (T2K/ND280/TPC experiment in Japan).

An example program to use this driver and test modbus communication is here:

https://bitbucket.org/expalpha/agdaq/src/master/src/modbus.cxx

Because, in the end, we do not have any modbus devices in any recent experiment, I do not have any example of using this driver in a midas frontend. Sorry.
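For anyone writing a new driver from scratch, the Modbus/TCP framing itself is simple. Here is a minimal sketch that builds a "Read Holding Registers" (function code 0x03) request. The frame is a 7-byte MBAP header (transaction id, protocol id 0, length, unit id) followed by the function code, start address and register count. All 16-bit fields are big-endian, as in the Modbus/TCP specification. This is not the ModbusTcp.cxx API, and the function name is hypothetical. Opening the TCP connection (port 502 by default) and parsing the reply are left out.

```cpp
#include <cstdint>
#include <vector>

// Append a 16-bit value in big-endian (network) byte order.
static void put_u16(std::vector<std::uint8_t>& out, std::uint16_t v) {
    out.push_back(static_cast<std::uint8_t>(v >> 8));
    out.push_back(static_cast<std::uint8_t>(v & 0xff));
}

// Build a Modbus/TCP "Read Holding Registers" (function 0x03) request frame.
std::vector<std::uint8_t> modbus_read_holding_request(std::uint16_t transaction_id,
                                                      std::uint8_t unit_id,
                                                      std::uint16_t start_address,
                                                      std::uint16_t count) {
    std::vector<std::uint8_t> frame;
    // MBAP header
    put_u16(frame, transaction_id);  // echoed back by the server in its reply
    put_u16(frame, 0);               // protocol identifier: 0 = Modbus
    put_u16(frame, 6);               // bytes that follow: unit id (1) + PDU (5)
    frame.push_back(unit_id);
    // PDU
    frame.push_back(0x03);           // function code: read holding registers
    put_u16(frame, start_address);   // first register, 0-based on the wire
    put_u16(frame, count);           // number of registers (1..125)
    return frame;
}
```

For example, transaction id 1, unit 0x11, start address 0x6B and 3 registers give the 12-byte frame 00 01 00 00 00 06 11 03 00 6B 00 03.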

K.O.

| 2313 | 26 Jan 2022 | Konstantin Olchanski | Bug Report | Writting MIDAS Events via FPGAs |

> today I did not get the data into MIDAS.

Any error messages printed by the frontend? Any error messages in midas.log? Core dumps? Crashes?

I do not understand what you mean by "did not get the data into midas". You create events and send them to a midas event buffer, and you do not see them there? With mdump?

Do you see this both when connected locally and when connected remotely through the mserver?

BTW, I see you are using the mfe.c frontend. Event data handling in mfe.c frontends is quite convoluted and impossible to straighten out. I recommend that you use the tmfe c++ frontend instead: event data handling is much simpler and easier to debug compared to the mfe.c frontend. There are examples in the midas repository, and there are tutorials for converting frontends from mfe.c to tmfe posted in this forum.

BTW, the commit you refer to only changed some html files; it could not have affected your data.

K.O.

| 2314 | 26 Jan 2022 | Konstantin Olchanski | Bug Report | some frontend kicked by cm_periodic_tasks |

> The problem is that eventually some of frontend closed with message

> :19:22:31.834 2021/12/02 [rootana,INFO] Client 'Sample Frontend38' on buffer

> 'SYSMSG' removed by cm_periodic_tasks because process pid 9789 does not exist

This message means what it says. A client was registered with the SYSMSG buffer, and this client had pid 9789. At some point some other client (rootana, in this case) checked it, and process pid 9789 was no longer running (it then proceeded to remove the registration).

There are 2 possibilities:

- simplest: your frontend has crashed. Best to debug this by running it inside gdb and waiting for the crash.

- unlikely: the reported pid is bogus, the real pid of your frontend is different, and the client registration in SYSMSG is corrupted. This would indicate massive corruption of midas shared memory buffers, not impossible if your frontend misbehaves and writes to random memory addresses. ODB has protection against this (normally turned off, easy to enable: set ODB "/experiment/protect odb" to yes); shared memory buffers do not have protection against this (should it be added?).

Do this: when you start your frontend, write down its pid; when you see the crash message, confirm that the pid number printed is the same. As an additional test, run your frontend inside gdb; after it crashes, you can print the stack trace, etc.
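The same "does pid 9789 still exist?" check can be done by hand. From a shell, use "kill -0 9789" or "ps -p 9789". From a program, use kill(pid, 0), which sends no signal and fails with ESRCH if there is no such process. Below is a minimal sketch, assuming POSIX. It is not necessarily how cm_periodic_tasks does it. Pids can also be reused, so a positive answer does not prove it is still your frontend.

```cpp
#include <cerrno>
#include <signal.h>
#include <sys/types.h>

// Returns true if a process with this pid exists.
// kill() with signal 0 only performs the existence and permission checks.
bool process_exists(pid_t pid) {
    if (pid <= 0) return false;          // 0 and negative pids address process groups
    if (kill(pid, 0) == 0) return true;  // exists and we may signal it
    return errno == EPERM;               // exists, but owned by another user
}
```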

>

> in the meantime mserver loggging :

> mserver started interactively

> mserver will listen on TCP port 1175

> double free or corruption (!prev)

> double free or corruption (!prev)

> free(): invalid next size (normal)

> double free or corruption (!prev)

>

Are these "double free" messages coming from the mserver or from your frontend? (i.e. do you run them in different terminals, not all in the same terminal?)

If the messages are coming from the mserver, this confirms the first possibility (a crash), except that for frontends connected remotely, the pid is the pid of the mserver, and what we see are crashes of the mserver, not crashes of your frontend. These are much harder to debug.

You will need to enable core dumps (set ODB "/Experiment/Enable core dumps" to "y"), confirm that core dumps work (i.e. run "killall -SEGV mserver" and observe that core files are created in the directory where you started the mserver), reproduce the crash, run "gdb mserver core.NNNN", run "bt" to print the stack trace, and post the stack trace here (or email it to me directly).

>

> I can find some correlation between number of events/event size produced by

> frontend, cause its failed when its become big enough.

>

There is no practical limit on event size or event rate in midas; you should not see any crash regardless of what you do. (Strictly speaking, event size is limited, because an event has to fit inside an event buffer, and the event buffer size is limited to 2 GB.)

Obviously you hit a bug in the mserver that makes it crash. Let's debug it.

One thing to try is to set the write cache size to zero and see if your crash goes away. I see some indication of something rotten in the event buffer code when the write cache is enabled. This is set in ODB "/Eq/XXX/Common/Write Cache Size"; set it to zero. (Beware of the recent confusion where ODB settings have no effect depending on the value of "equipment_common_overwrite".)

>

> frontend scheme is like this:

>

It is best if you use the tmfe c++ frontend: event data handling is much simpler, and we do not have to debug the convoluted old code in mfe.c.

K.O.

>

> poll event time set to 0;

>

> poll_event{

> //if buffer not transferred return (continue cutting the main buffer)

> //read main buffer from hardware

> //buffer not transfered

> }

>

> read event{

> // cut the main buffer to subevents (cut one event from main buffer) return;

> //if (last subevent) {buffer transfered ;return}

> }

>

> What is strange to me that 2 frontends (1 per remote pc) causing this.

>

> Also, I'm executing one FEcode with -i # flag , put setting eventid in

> frontend_init , and using SYSTEM buffer for all.

>

> Is there something I'm missing?

> Thanks.

> A.

| 2315 | 26 Jan 2022 | Konstantin Olchanski | Bug Report | Off-by-one in sequencer documentation |

> > 3 LOOP n,4

> > 4 MESSAGE $n,1

> > 5 ENDLOOP

>

> Indeed you're right. The loop variable runs from 1...n. I fixed that in the documentation.

Shades/ghosts of FORTRAN. C/C++/Perl/Python loops conventionally run from 0 to n-1.
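To make the difference concrete: with the corrected documentation, the sequencer's "LOOP n,4" runs its body with n = 1, 2, 3, 4. The idiomatic C/C++ loop over 4 iterations uses 0, 1, 2, 3 instead. A small sketch that collects both sequences; the function names are illustrative.

```cpp
#include <vector>

// Values a sequencer "LOOP n,count" gives to n (1-based, per the fixed documentation).
std::vector<int> sequencer_loop_values(int count) {
    std::vector<int> values;
    for (int n = 1; n <= count; ++n) values.push_back(n);
    return values;
}

// Values of the loop index in the usual C/C++ idiom (0-based, stops before count).
std::vector<int> cxx_loop_values(int count) {
    std::vector<int> values;
    for (int i = 0; i < count; ++i) values.push_back(i);
    return values;
}
```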

K.O.

| 2316 | 26 Jan 2022 | Konstantin Olchanski | Info | MityCAMAC Login |

For those curious about CAMAC controllers, this one was built around 2014 to replace the aging CAMAC A1/A2 controllers (parallel and serial) in the TRIUMF cyclotron controls system (around 50 CAMAC crates). It implements the main and the auxiliary controller modes (single-width and double-width modules).

The design predates the Altera Cyclone-5 SoC and has a separate ARM processor (TI 335x) and Cyclone-4 FPGA connected by a GPMC bus. The ARM processor boots a Linux kernel and CentOS-7 userland from an SD card; the FPGA boots from its own EPCS flash.

A user program running on the ARM processor (i.e. a MIDAS frontend) initiates CAMAC operations, and the FPGA executes them. Quite simple.

K.O.