| ID | Date | Author | Topic | Subject |

| 2734 | 02 Apr 2024 | Konstantin Olchanski | Bug Report | Midas (manalyzer) + ROOT 6.31/01 - compilation error |

> I found a solution to my trouble. With MIDAS and ROOT everything is OK,
> the trouble was with my Ubuntu environment.

Congratulations on figuring this out.

BTW, this is the 2nd case of a contaminated linker environment I have run into in the last 30 days. We just had a problem of "cannot link MIDAS with ROOT" (resolved by "make cmake NO_ROOT=1 NO_CURL=1 NO_MYSQL=1").

This all seems to be a flaw in cmake: it reports "found ROOT at XXX", "found CURL at YYY", "found MYSQL at ZZZ", then proceeds to link ROOT, CURL and MYSQL libraries from somewhere else, resulting in a shared library version mismatch.

With normal Makefiles, this is fixable by changing the link command from:

g++ -o rmlogger ... -LAAA/lib -LXXX/lib -LYYY/lib -lcurl -lmysql -lROOT

into explicit

g++ -o rmlogger ... -LAAA/lib XXX/lib/libcurl.a YYY/lib/libmysql.a ...

defeating the bogus CURL and MYSQL libraries in AAA.

With cmake, I do not think it is possible to make this transformation.

Maybe it is possible to add a cmake rule to at least detect this situation, i.e. compare the library paths reported by "ldd rmlogger" to those found and expected by cmake.
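The check suggested here can be sketched outside of cmake. The following is only an illustration under stated assumptions: the function names, the sample ldd text and the "expected" directories are hypothetical, and the expected directories would have to be copied by hand from the "Found ..." messages printed by cmake.

```python
import re

# Matches ldd lines such as
#   "libcurl.so.4 => /usr/lib/x86_64-linux-gnu/libcurl.so.4 (0x00007f...)"
LDD_LINE = re.compile(r"^\s*(\S+)\s+=>\s+(\S+)\s+\(0x[0-9a-fA-F]+\)\s*$")


def parse_ldd(text):
    """Return {soname: resolved_path} from the text printed by ldd."""
    resolved = {}
    for line in text.splitlines():
        m = LDD_LINE.match(line)
        if m:
            resolved[m.group(1)] = m.group(2)
    return resolved


def find_mismatches(ldd_text, expected_dirs):
    """List (soname, actual_path) pairs resolved outside the expected directory.

    expected_dirs maps a library name prefix (e.g. "libcurl") to the
    directory the build system reported for that library.
    """
    bad = []
    for soname, path in parse_ldd(ldd_text).items():
        for prefix, directory in expected_dirs.items():
            wanted = directory.rstrip("/") + "/"
            if soname.startswith(prefix + ".") and not path.startswith(wanted):
                bad.append((soname, path))
    return bad


if __name__ == "__main__":
    # Hypothetical ldd output, for illustration only.
    sample = (
        "\tlinux-vdso.so.1 (0x00007ffe6c399000)\n"
        "\tlibcurl.so.4 => /home/user/anaconda3/lib/libcurl.so.4 (0x00007f0000000000)\n"
        "\tlibz.so.1 => /lib/x86_64-linux-gnu/libz.so.1 (0x00007f67e43b8000)\n"
    )
    expected = {"libcurl": "/usr/lib/x86_64-linux-gnu", "libz": "/lib/x86_64-linux-gnu"}
    for soname, path in find_mismatches(sample, expected):
        print(f"{soname} resolved to unexpected location {path}")
```

On systems where /lib is a symlink to /usr/lib, both sides of the comparison would probably need to be normalized first (for example with os.path.realpath), otherwise the same file can look like a mismatch.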

K.O. |

| 2733 | 02 Apr 2024 | Konstantin Olchanski | Info | Sequencer editor |

> Stefan and I have been working on improving the sequencer editor ...

Looks grand! Congratulations on getting it completed. The previous version was my rewrite of the old generated-C pages into html+javascript, nothing to write home about; I even kept the 1990s-style html formatting and styling as much as possible.

K.O. |

|

2732

|

02 Apr 2024 |

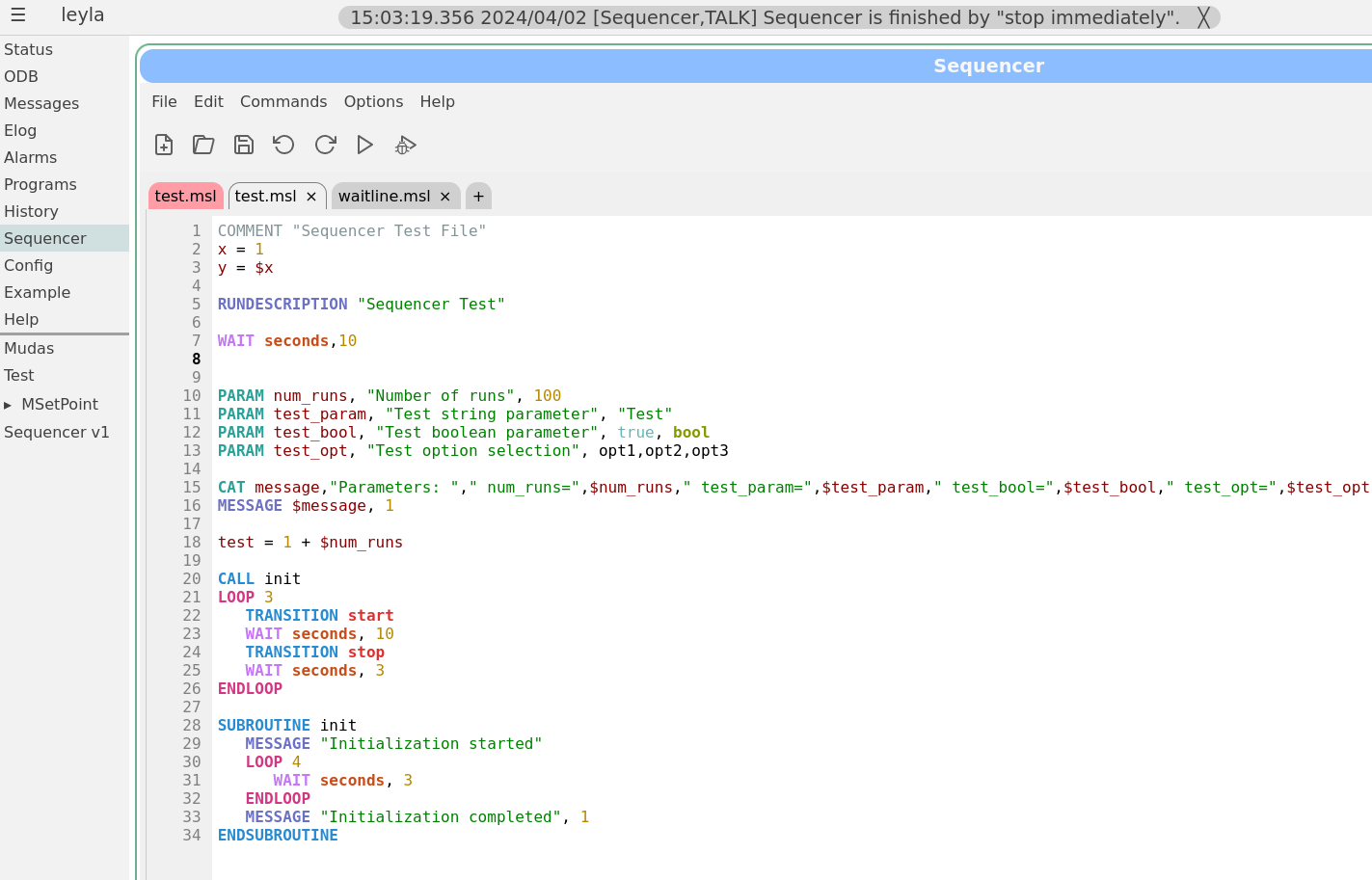

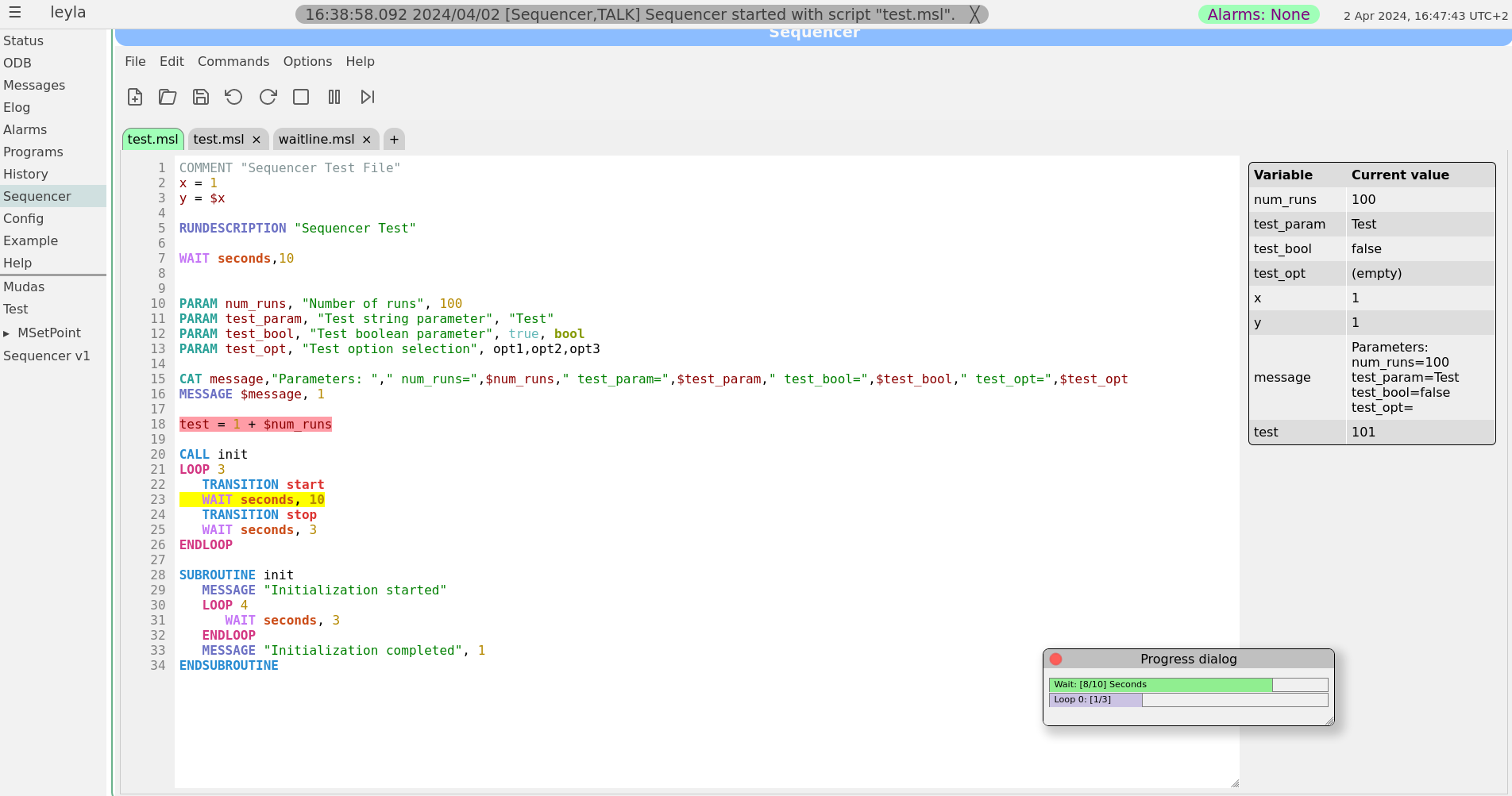

Zaher Salman | Info | Sequencer editor |

Dear all,

Stefan and I have been working on improving the sequencer editor to make it look and feel more like a standard editor. This sequencer v2 was finally merged into the develop branch earlier today.

The sequencer page now has a main tab which is used as a "console" to show the loaded sequence and its progress when running. All other tabs are used only for editing scripts. To edit a currently loaded sequence, simply double-click on the editing area of the main tab or load the file in a new tab. A couple of screenshots of the new editor are attached.

For those who would like to stay with the older sequencer version a bit longer, you may simply copy resources/sequencer_v1.html to resources/sequencer.html. However, this version is not being actively maintained and may become obsolete at some point. Please help us improve the new version instead by reporting bugs and feature requests on Bitbucket or here.

Best regards,

Zaher

|

| 2731 | 01 Apr 2024 | Konstantin Olchanski | Info | xz-utils bomb out, compression benchmarks |

you may have heard the news of a major problem with the xz-utils project, authors of the popular "xz" file compression,

https://nvd.nist.gov/vuln/detail/CVE-2024-3094

the debian bug tracker is interesting reading on this topic, "750 commits or contributions to xz by Jia Tan, who backdoored it",

https://bugs.debian.org/cgi-bin/bugreport.cgi?bug=1068024

and apparently there are problems with the design of the .xz file format, making it vulnerable to single-bit errors and unreliable checksums,

https://www.nongnu.org/lzip/xz_inadequate.html

this moved me to review status of file compression in MIDAS.

MIDAS does not use or recommend xz compression, MIDAS programs do not link to the xz and lzma libraries provided by xz-utils.

mlogger has built-in support for:

- gzip-1, enabled by default, as the safest and most bog-standard compression method

- bzip2 and pbzip2, as providing the best compression

- lz4, for high data rate situations where gzip and bzip2 cannot keep up with the data

compression benchmarks on an AMD 7700 CPU (8-core, DDR5 RAM) confirm the usual speed-vs-compression tradeoff:

note: observe how for both lz4 and pbzip2 the compression time is the time it takes to read the file from ZFS cache, around 6 seconds.

note: decompression stacks up in the same order: lz4, gzip fastest, pbzip2 same speed using 10x CPU, bzip2 10x slower uses 1 CPU.

note: because of the fast decompression speed, gzip remains competitive.

no compression: 6 sec, 270 MiBytes/sec,

lz4, pbzip2: 6 sec, same, (pbzip2 uses 10 CPU vs lz4 uses 1 CPU)

gzip -1: 21 sec, 78 MiBytes/sec

bzip2: 70 sec, 23 MiBytes/sec (same speed as pbzip2, but using 1 CPU instead of 10 CPU)

file sizes:

(vslice) dsdaqdev@dsdaqgw:/zdata/vslice$ ls -lSr test.mid*

-rw-r--r-- 1 dsdaqdev users 483319523 Apr 1 14:06 test.mid.bz2

-rw-r--r-- 1 dsdaqdev users 631575929 Apr 1 14:06 test.mid.gz

-rw-r--r-- 1 dsdaqdev users 1002432717 Apr 1 14:06 test.mid.lz4

-rw-r--r-- 1 dsdaqdev users 1729327169 Apr 1 14:06 test.mid

(vslice) dsdaqdev@dsdaqgw:/zdata/vslice$
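As a rough cross-check of the numbers above, the compression ratios and the quoted data rates can be recomputed from the file sizes in this listing. This is a minimal sketch: the function names are made up for illustration, and the times are the rounded wall-clock values from the summary, not new measurements.

```python
# File sizes in bytes, copied from the "ls -lSr" listing above.
SIZES = {
    "test.mid": 1729327169,
    "test.mid.lz4": 1002432717,
    "test.mid.gz": 631575929,
    "test.mid.bz2": 483319523,
}

MIB = 1024 * 1024


def ratio(name):
    """Compressed size as a fraction of the uncompressed size."""
    return SIZES[name] / SIZES["test.mid"]


def throughput_mib_per_s(seconds):
    """Uncompressed MiB processed per second of wall-clock time."""
    return SIZES["test.mid"] / MIB / seconds


if __name__ == "__main__":
    for name in ("test.mid.lz4", "test.mid.gz", "test.mid.bz2"):
        print(f"{name}: {ratio(name):.2f} of the original size")
    # Rounded wall-clock times quoted in the summary above.
    for label, secs in (("no compression", 6), ("gzip -1", 21), ("bzip2", 70)):
        print(f"{label}: {throughput_mib_per_s(secs):.1f} MiB/s")
```

With these inputs the data rates come out close to the 270, 78 and 23 MiBytes/sec quoted in the summary; the small differences are consistent with the times being rounded.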

actual benchmarks:

(vslice) dsdaqdev@dsdaqgw:/zdata/vslice$ /usr/bin/time cat test.mid > /dev/null

0.00user 6.00system 0:06.00elapsed 99%CPU (0avgtext+0avgdata 1408maxresident)k

(vslice) dsdaqdev@dsdaqgw:/zdata/vslice$ /usr/bin/time gzip -1 -k test.mid

14.70user 6.42system 0:21.14elapsed 99%CPU (0avgtext+0avgdata 1664maxresident)k

(vslice) dsdaqdev@dsdaqgw:/zdata/vslice$ /usr/bin/time lz4 -k -f test.mid

2.90user 6.44system 0:09.39elapsed 99%CPU (0avgtext+0avgdata 7680maxresident)k

(vslice) dsdaqdev@dsdaqgw:/zdata/vslice$ /usr/bin/time bzip2 -k -f test.mid

64.76user 8.81system 1:13.59elapsed 99%CPU (0avgtext+0avgdata 8448maxresident)k

(vslice) dsdaqdev@dsdaqgw:/zdata/vslice$ /usr/bin/time pbzip2 -k -f test.mid

86.76user 15.39system 0:09.07elapsed 1125%CPU (0avgtext+0avgdata 114596maxresident)k

decompression benchmarks:

(vslice) dsdaqdev@dsdaqgw:/zdata/vslice$ /usr/bin/time lz4cat test.mid.lz4 > /dev/null

0.68user 0.23system 0:00.91elapsed 99%CPU (0avgtext+0avgdata 7680maxresident)k

(vslice) dsdaqdev@dsdaqgw:/zdata/vslice$ /usr/bin/time zcat test.mid.gz > /dev/null

6.61user 0.23system 0:06.85elapsed 99%CPU (0avgtext+0avgdata 1408maxresident)k

(vslice) dsdaqdev@dsdaqgw:/zdata/vslice$ /usr/bin/time bzcat test.mid.bz2 > /dev/null

27.99user 1.59system 0:29.58elapsed 99%CPU (0avgtext+0avgdata 4656maxresident)k

(vslice) dsdaqdev@dsdaqgw:/zdata/vslice$ /usr/bin/time pbzip2 -dc test.mid.bz2 > /dev/null

37.32user 0.56system 0:02.75elapsed 1377%CPU (0avgtext+0avgdata 157036maxresident)k
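The "1 CPU" versus "10 CPU" remarks can be read directly off the /usr/bin/time lines: the average number of busy cores is (user + system) / elapsed. A small sketch of that arithmetic follows; the function names are hypothetical and the parser only assumes the default GNU time summary format shown in the transcripts above.

```python
import re

# Default GNU time summary, e.g.
#   "86.76user 15.39system 0:09.07elapsed 1125%CPU (...)k"
TIME_LINE = re.compile(
    r"(?P<user>\d+\.\d+)user\s+(?P<sys>\d+\.\d+)system\s+"
    r"(?P<elapsed>[\d:.]+)elapsed\s+(?P<cpu>\d+)%CPU"
)


def elapsed_seconds(stamp):
    """Convert "m:ss.ss" or "h:mm:ss" into seconds."""
    seconds = 0.0
    for part in stamp.split(":"):
        seconds = seconds * 60 + float(part)
    return seconds


def cores_used(line):
    """Average number of busy cores: (user + system) / elapsed."""
    m = TIME_LINE.search(line)
    if m is None:
        raise ValueError("not a /usr/bin/time summary line")
    busy = float(m.group("user")) + float(m.group("sys"))
    return busy / elapsed_seconds(m.group("elapsed"))


if __name__ == "__main__":
    # Lines copied from the compression benchmarks above.
    lines = {
        "bzip2": "64.76user 8.81system 1:13.59elapsed 99%CPU (0avgtext+0avgdata 8448maxresident)k",
        "pbzip2": "86.76user 15.39system 0:09.07elapsed 1125%CPU (0avgtext+0avgdata 114596maxresident)k",
    }
    for tool, line in lines.items():
        print(f"{tool}: about {cores_used(line):.1f} cores busy")
```

The result should agree with the %CPU column that time itself prints, up to rounding.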

K.O. |

| 2730 | 28 Mar 2024 | Grzegorz Nieradka | Bug Report | Midas (manalyzer) + ROOT 6.31/01 - compilation error |

I found a solution to my trouble. With MIDAS and ROOT everything is OK, the trouble was with my Ubuntu environment.

In this case the trouble was caused by an earlier Anaconda installation and a hardcoded path to the Anaconda libs folder in the PATH environment variable.

The Anaconda lib folder contains libstdc++.so.6.0.29, and the hardcoded path to this folder was added during linking, by the ld program, after the standard location of libstdc++. So the linker tried to link to this version of libstdc++.

After I removed the Anaconda libs path from the environment, the standard libs location is /usr/lib/x86_64-linux-gnu/, where I have version libstdc++.so.6.0.32 of the stdc++ library, and everything compiles and links smoothly without any errors.

Additionally, everything works smoothly even with the newest ROOT version 6.30/04 compiled from source.
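A quick way to look for this kind of contamination is to scan the search-path environment variables for unexpected directories. The sketch below is only an illustration: the post names PATH, while LD_LIBRARY_PATH and LIBRARY_PATH are added here as an assumption because they also influence library lookup, and the function name and the "anaconda" search string are hypothetical.

```python
import os

# PATH is the variable named in the post; the other two are assumptions.
SEARCH_VARS = ("PATH", "LD_LIBRARY_PATH", "LIBRARY_PATH")


def suspicious_entries(environ, needle="anaconda"):
    """Return (variable, directory) pairs whose directory mentions `needle`."""
    hits = []
    for var in SEARCH_VARS:
        for entry in environ.get(var, "").split(os.pathsep):
            if entry and needle in entry.lower():
                hits.append((var, entry))
    return hits


if __name__ == "__main__":
    for var, entry in suspicious_entries(os.environ):
        print(f"{var} contains {entry}")
```

An empty result does not prove the environment is clean; it only says that none of these three variables mentions the given string.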

Thanks for help.

BTW. I would like to take this opportunity to wish everyone a happy Easter and tasty eggs!

Regards,

Grzegorz Nieradka |

| 2729 | 19 Mar 2024 | Konstantin Olchanski | Bug Report | Midas (manalyzer) + ROOT 6.31/01 - compilation error |

> ok, thank you for your information. I cannot reproduce this problem, I use vanilla Ubuntu

> LTS 22, ROOT binary kit root_v6.30.02.Linux-ubuntu22.04-x86_64-gcc11.4 from root.cern.ch

> and latest midas from git.

>

> something is wrong with your ubuntu or with your c++ standard library or with your ROOT.

>

> a) can you try with root_v6.30.02.Linux-ubuntu22.04-x86_64-gcc11.4 from root.cern.ch

> b) for the midas build, please run "make cclean; make cmake -k" and email me (or post

> here) the complete output.

also, please email me the output of these commands on your machine:

daq00:midas$ ls -l /lib/x86_64-linux-gnu/libstdc++*

lrwxrwxrwx 1 root root 19 May 13 2023 /lib/x86_64-linux-gnu/libstdc++.so.6 -> libstdc++.so.6.0.30

-rw-r--r-- 1 root root 2260296 May 13 2023 /lib/x86_64-linux-gnu/libstdc++.so.6.0.30

daq00:midas$

and

daq00:midas$ ldd $ROOTSYS/bin/rootreadspeed

linux-vdso.so.1 (0x00007ffe6c399000)

libTree.so => /daq/cern_root/root_v6.30.02.Linux-ubuntu22.04-x86_64-gcc11.4/lib/libTree.so (0x00007f67e53b5000)

libRIO.so => /daq/cern_root/root_v6.30.02.Linux-ubuntu22.04-x86_64-gcc11.4/lib/libRIO.so (0x00007f67e4fb9000)

libCore.so => /daq/cern_root/root_v6.30.02.Linux-ubuntu22.04-x86_64-gcc11.4/lib/libCore.so (0x00007f67e4b08000)

libstdc++.so.6 => /lib/x86_64-linux-gnu/libstdc++.so.6 (0x00007f67e48bd000)

libgcc_s.so.1 => /lib/x86_64-linux-gnu/libgcc_s.so.1 (0x00007f67e489b000)

libc.so.6 => /lib/x86_64-linux-gnu/libc.so.6 (0x00007f67e4672000)

libNet.so => /daq/cern_root/root_v6.30.02.Linux-ubuntu22.04-x86_64-gcc11.4/lib/libNet.so (0x00007f67e458b000)

libThread.so => /daq/cern_root/root_v6.30.02.Linux-ubuntu22.04-x86_64-gcc11.4/lib/libThread.so (0x00007f67e4533000)

libm.so.6 => /lib/x86_64-linux-gnu/libm.so.6 (0x00007f67e444c000)

/lib64/ld-linux-x86-64.so.2 (0x00007f67e5599000)

libpcre.so.3 => /lib/x86_64-linux-gnu/libpcre.so.3 (0x00007f67e43d6000)

libz.so.1 => /lib/x86_64-linux-gnu/libz.so.1 (0x00007f67e43b8000)

liblzma.so.5 => /lib/x86_64-linux-gnu/liblzma.so.5 (0x00007f67e438d000)

libxxhash.so.0 => /lib/x86_64-linux-gnu/libxxhash.so.0 (0x00007f67e4378000)

liblz4.so.1 => /lib/x86_64-linux-gnu/liblz4.so.1 (0x00007f67e4358000)

libzstd.so.1 => /lib/x86_64-linux-gnu/libzstd.so.1 (0x00007f67e4289000)

libssl.so.3 => /lib/x86_64-linux-gnu/libssl.so.3 (0x00007f67e41e3000)

libcrypto.so.3 => /lib/x86_64-linux-gnu/libcrypto.so.3 (0x00007f67e3d9f000)

daq00:midas$

K.O. |

| 2728 | 19 Mar 2024 | Konstantin Olchanski | Bug Report | Midas (manalyzer) + ROOT 6.31/01 - compilation error |

ok, thank you for your information. I cannot reproduce this problem, I use vanilla Ubuntu

LTS 22, ROOT binary kit root_v6.30.02.Linux-ubuntu22.04-x86_64-gcc11.4 from root.cern.ch

and latest midas from git.

something is wrong with your ubuntu or with your c++ standard library or with your ROOT.

a) can you try with root_v6.30.02.Linux-ubuntu22.04-x86_64-gcc11.4 from root.cern.ch

b) for the midas build, please run "make cclean; make cmake -k" and email me (or post

here) the complete output.

K.O. |

| 2727 | 19 Mar 2024 | Grzegorz Nieradka | Bug Report | Midas (manalyzer) + ROOT 6.31/01 - compilation error |

Dear Konstantin,

Thank you for your interest in my problem.

What I did:

1. I installed the latest ROOT from source according to the manual, exactly as on this webpage (https://root.cern/install/). ROOT seems to work correctly: .demo works, and some example files too. The manalyzer does not link with this ROOT version installed from source.

2. I downgraded ROOT to a lower version (6.30.04):

git checkout -b v6-30-04 v6-30-04

ROOT seems to compile, install and run correctly. The manalyzer from MIDAS does not link.

3. I downloaded the latest version of ROOT:

https://root.cern/download/root_v6.30.04.Linux-ubuntu22.04-x86_64-gcc11.4.tar.gz

and installed it simply with tar: tar -xzvf root_...

------------------------------------------------------------------

| Welcome to ROOT 6.30/04 https://root.cern |

| (c) 1995-2024, The ROOT Team; conception: R. Brun, F. Rademakers |

| Built for linuxx8664gcc on Jan 31 2024, 10:01:37 |

| From heads/master@tags/v6-30-04 |

| With c++ (Ubuntu 11.4.0-1ubuntu1~22.04) 11.4.0 |

| Try '.help'/'.?', '.demo', '.license', '.credits', '.quit'/'.q' |

------------------------------------------------------------------

Again ROOT seems to work properly: .demo works, and the example files work too. Manalyzer from MIDAS fails to link.

4. MIDAS built with the option: cmake -D NO_ROOT=ON ..

compiles, links and even works.

5. When I try to build MIDAS with ROOT support there is an error:

[ 33%] Linking CXX executable manalyzer_test.exe

/usr/bin/ld: /home/astrocent/workspace/root/lib/libRIO.so: undefined reference to `std::condition_variable::wait(std::unique_lock<std::mutex>&)@GLIBCXX_3.4.30'
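The error above says that libRIO.so wants a symbol with version tag GLIBCXX_3.4.30. Whether a particular libstdc++ file carries that tag can be checked by scanning the file for version strings, roughly what "strings libstdc++.so.6 | grep GLIBCXX" does. The following is a minimal sketch under that assumption; the function names are hypothetical and any path passed to provides() is up to the reader.

```python
import re

# Version tags are stored as plain text in the library, e.g. b"GLIBCXX_3.4.30".
GLIBCXX_TAG = re.compile(rb"GLIBCXX_(\d+)\.(\d+)(?:\.(\d+))?")


def glibcxx_versions(data):
    """Return the sorted, de-duplicated GLIBCXX version tuples found in raw bytes."""
    found = set()
    for m in GLIBCXX_TAG.finditer(data):
        found.add(tuple(int(g) for g in m.groups() if g is not None))
    return sorted(found)


def provides(path, wanted=(3, 4, 30)):
    """True if the file at `path` mentions the wanted GLIBCXX version tag."""
    with open(path, "rb") as f:
        return wanted in glibcxx_versions(f.read())


if __name__ == "__main__":
    import sys

    # Usage: python check_glibcxx.py /path/to/libstdc++.so.6 [more files...]
    for path in sys.argv[1:]:
        print(path, "has GLIBCXX_3.4.30:", provides(path))
```

Running it on each libstdc++ found on the machine would show which copies could satisfy this reference and which could not.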

I'm attaching these files:

cmake-midas-root -> my configuration for compiling MIDAS with ROOT

make-cmake-midas -> output of the command make cmake in the MIDAS directory

make-cmake-k -> output of the command make cmake -k in the MIDAS directory

And I'm stumped at this moment.

Regards,

Grzegorz Nieradka |

| Attachment 1: cmake-midas-root

|

-- MIDAS: cmake version: 3.22.1

-- The C compiler identification is GNU 11.4.0

-- The CXX compiler identification is GNU 11.4.0

-- Detecting C compiler ABI info

-- Detecting C compiler ABI info - done

-- Check for working C compiler: /usr/bin/cc - skipped

-- Detecting C compile features

-- Detecting C compile features - done

-- Detecting CXX compiler ABI info

-- Detecting CXX compiler ABI info - done

-- Check for working CXX compiler: /usr/bin/c++ - skipped

-- Detecting CXX compile features

-- Detecting CXX compile features - done

-- MIDAS: Setting build type to 'RelWithDebInfo' as non was specified

-- MIDAS: Curent build type is 'RelWithDebInfo'

-- MIDAS: Disabled address sanitizer

-- MIDAS: CMAKE_INSTALL_PREFIX: /home/astrocent/workspace/packages/midas

-- Found Vdt: /home/astrocent/workspace/root/include (found version "0.4")

-- MIDAS: Found ROOT version 6.30.04 in /home/astrocent/workspace/root

-- Found ZLIB: /usr/lib/x86_64-linux-gnu/libz.so (found version "1.2.11")

-- MIDAS: Found ZLIB version 1.2.11

-- Found CURL: /usr/lib/x86_64-linux-gnu/libcurl.so (found version "7.81.0")

-- MIDAS: Found LibCURL version 7.81.0

-- MIDAS: MBEDTLS not found

-- MIDAS: Found MySQL version 8.0.36

-- MIDAS: MySQL CFLAGS: -I/usr/include/mysql and libs: -L/usr/lib/x86_64-linux-gnu -lmysqlclient -lzstd -lssl -lcrypto -lresolv -lm

-- MIDAS: Found PostgreSQL version PostgreSQL 12.9

-- MIDAS: PostgresSQL include: /home/astrocent/anaconda3/include and libs: /home/astrocent/anaconda3/lib

-- MIDAS: ODBC not found

-- Could NOT find SQLite3 (missing: SQLite3_INCLUDE_DIR SQLite3_LIBRARY)

-- MIDAS: SQLITE not found

-- MIDAS (msysmon): NVIDIA CUDA libs not found

-- MIDAS (msysmon): Found LM_SENSORS

-- MIDAS (mlogger): OpenCV not found, (no rtsp camera support))

-- Looking for pthread.h

-- Looking for pthread.h - found

-- Performing Test CMAKE_HAVE_LIBC_PTHREAD

-- Performing Test CMAKE_HAVE_LIBC_PTHREAD - Success

-- Found Threads: TRUE

-- Found Git: /usr/bin/git (found version "2.34.1")

-- MSCB: CMAKE_INSTALL_PREFIX: /home/astrocent/workspace/packages/midas

-- manalyzer: CMAKE_INSTALL_PREFIX: /home/astrocent/workspace/packages/midas

-- manalyzer: Building as a subproject of MIDAS

-- manalyzer: Using ROOT: flags: -std=c++17;-pipe;-fsigned-char;-pthread and includes: /home/astrocent/workspace/root/include

-- Found Python3: /usr/bin/python3.10 (found version "3.10.12") found components: Interpreter

-- example_experiment: Building as a subproject of MIDAS

-- example_experiment: MIDASSYS: /home/astrocent/workspace/packages/midas

-- example_experiment: Using ROOT: flags: -std=c++17;-pipe;-fsigned-char;-pthread and includes: /home/astrocent/workspace/root/include

-- example_experiment: Found ROOT version 6.30.04

-- Configuring done

-- Generating done

-- Build files have been written to: /home/astrocent/workspace/packages/midas/build

|

| Attachment 2: make-cmake-midas

|

astrocent@astrocent-desktop:~/workspace/packages/midas$ make cmake

mkdir -p build

cd build; cmake .. -DCMAKE_EXPORT_COMPILE_COMMANDS=1 -DCMAKE_TARGET_MESSAGES=OFF; make --no-print-directory VERBOSE=1 all install | 2>&1 grep -v -e ^make -e ^Dependee -e cmake_depends -e ^Scanning -e cmake_link_script -e cmake_progress_start -e cmake_clean_target -e build.make

-- MIDAS: cmake version: 3.22.1

-- MIDAS: Setting build type to 'RelWithDebInfo' as non was specified

-- MIDAS: Curent build type is 'RelWithDebInfo'

-- MIDAS: Disabled address sanitizer

-- MIDAS: CMAKE_INSTALL_PREFIX: /home/astrocent/workspace/packages/midas

-- MIDAS: Found ROOT version 6.30.04 in /home/astrocent/workspace/root

-- MIDAS: Found ZLIB version 1.2.11

-- MIDAS: Found LibCURL version 7.81.0

-- MIDAS: MBEDTLS not found

-- MIDAS: Found MySQL version 8.0.36

-- MIDAS: MySQL CFLAGS: -I/usr/include/mysql and libs: -L/usr/lib/x86_64-linux-gnu -lmysqlclient -lzstd -lssl -lcrypto -lresolv -lm

-- MIDAS: Found PostgreSQL version PostgreSQL 12.9

-- MIDAS: PostgresSQL include: /home/astrocent/anaconda3/include and libs: /home/astrocent/anaconda3/lib

-- MIDAS: ODBC not found

-- Could NOT find SQLite3 (missing: SQLite3_INCLUDE_DIR SQLite3_LIBRARY)

-- MIDAS: SQLITE not found

-- MIDAS (msysmon): NVIDIA CUDA libs not found

-- MIDAS (msysmon): Found LM_SENSORS

-- MIDAS (mlogger): OpenCV not found, (no rtsp camera support))

-- MSCB: CMAKE_INSTALL_PREFIX: /home/astrocent/workspace/packages/midas

-- manalyzer: CMAKE_INSTALL_PREFIX: /home/astrocent/workspace/packages/midas

-- manalyzer: Building as a subproject of MIDAS

-- manalyzer: Using ROOT: flags: -std=c++17;-pipe;-fsigned-char;-pthread and includes: /home/astrocent/workspace/root/include

-- example_experiment: Building as a subproject of MIDAS

-- example_experiment: MIDASSYS: /home/astrocent/workspace/packages/midas

-- example_experiment: Using ROOT: flags: -std=c++17;-pipe;-fsigned-char;-pthread and includes: /home/astrocent/workspace/root/include

-- example_experiment: Found ROOT version 6.30.04

-- Configuring done

-- Generating done

-- Build files have been written to: /home/astrocent/workspace/packages/midas/build

/usr/bin/cmake -S/home/astrocent/workspace/packages/midas -B/home/astrocent/workspace/packages/midas/build --check-build-system CMakeFiles/Makefile.cmake 0

cd /home/astrocent/workspace/packages/midas/include && /usr/bin/cmake -E echo_append \#define\ GIT_REVISION\ \" > /home/astrocent/workspace/packages/midas/include/git-revision.h.tmp

cd /home/astrocent/workspace/packages/midas/include && /usr/bin/git log -n 1 --pretty=format:"%ad" >> /home/astrocent/workspace/packages/midas/include/git-revision.h.tmp

cd /home/astrocent/workspace/packages/midas/include && /usr/bin/cmake -E echo_append \ -\ >> /home/astrocent/workspace/packages/midas/include/git-revision.h.tmp

cd /home/astrocent/workspace/packages/midas/include && /usr/bin/git describe --abbrev=8 --tags --dirty | tr -d '\n' >> /home/astrocent/workspace/packages/midas/include/git-revision.h.tmp

cd /home/astrocent/workspace/packages/midas/include && /usr/bin/cmake -E echo_append \ on\ branch\ >> /home/astrocent/workspace/packages/midas/include/git-revision.h.tmp

cd /home/astrocent/workspace/packages/midas/include && /usr/bin/git rev-parse --abbrev-ref HEAD | tr -d '\n' >> /home/astrocent/workspace/packages/midas/include/git-revision.h.tmp

cd /home/astrocent/workspace/packages/midas/include && /usr/bin/cmake -E echo \" >> /home/astrocent/workspace/packages/midas/include/git-revision.h.tmp

cd /home/astrocent/workspace/packages/midas/include && /usr/bin/cmake -E copy_if_different /home/astrocent/workspace/packages/midas/include/git-revision.h.tmp /home/astrocent/workspace/packages/midas/include/git-revision.h

cd /home/astrocent/workspace/packages/midas/include && /usr/bin/cmake -E remove /home/astrocent/workspace/packages/midas/include/git-revision.h.tmp

Dependencies file "CMakeFiles/objlib.dir/src/tinyexpr.c.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/midasio/lz4.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/midasio/lz4frame.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/midasio/lz4hc.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/midasio/midasio.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/midasio/xxhash.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/mjson/mjson.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/mscb/src/strlcpy.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/mvodb/midasodb.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/mvodb/mjsonodb.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/mvodb/mvodb.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/mvodb/mxmlodb.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/mvodb/nullodb.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/mxml/mxml.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/alarm.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/crc32c.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/device_driver.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/elog.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/ftplib.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/history.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/history_common.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/history_image.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/history_odbc.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/history_schema.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/json_paste.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/mdsupport.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/midas.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/mjsonrpc.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/mjsonrpc_user.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/mrpc.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/odb.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/odbxx.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/sha256.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/sha512.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/system.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/tmfe.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Consolidate compiler generated dependencies of target objlib

Dependencies file "CMakeFiles/objlib-c-compat.dir/src/midas_c_compat.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib-c-compat.dir/compiler_depend.internal".

Consolidate compiler generated dependencies of target objlib-c-compat

Dependencies file "CMakeFiles/mfe.dir/src/mfe.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/mfe.dir/compiler_depend.internal".

Consolidate compiler generated dependencies of target mfe

Dependencies file "CMakeFiles/mana.dir/src/mana.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/mana.dir/compiler_depend.internal".

Consolidate compiler generated dependencies of target mana

Dependencies file "CMakeFiles/rmana.dir/src/mana.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/rmana.dir/compiler_depend.internal".

Consolidate compiler generated dependencies of target rmana

Dependencies file "CMakeFiles/mfeo.dir/src/mfe.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/mfeo.dir/compiler_depend.internal".

Consolidate compiler generated dependencies of target mfeo

Dependencies file "CMakeFiles/manao.dir/src/mana.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/manao.dir/compiler_depend.internal".

Consolidate compiler generated dependencies of target manao

Dependencies file "CMakeFiles/rmanao.dir/src/mana.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/rmanao.dir/compiler_depend.internal".

Consolidate compiler generated dependencies of target rmanao

Dependencies file "mscb/CMakeFiles/msc.dir/mxml/mxml.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/mscb/CMakeFiles/msc.dir/compiler_depend.internal".

Dependencies file "mscb/CMakeFiles/msc.dir/src/cmdedit.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/mscb/CMakeFiles/msc.dir/compiler_depend.internal".

Dependencies file "mscb/CMakeFiles/msc.dir/src/msc.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/mscb/CMakeFiles/msc.dir/compiler_depend.internal".

Dependencies file "mscb/CMakeFiles/msc.dir/src/mscb.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/mscb/CMakeFiles/msc.dir/compiler_depend.internal".

Dependencies file "mscb/CMakeFiles/msc.dir/src/mscbrpc.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/mscb/CMakeFiles/msc.dir/compiler_depend.internal".

Dependencies file "mscb/CMakeFiles/msc.dir/src/strlcpy.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/mscb/CMakeFiles/msc.dir/compiler_depend.internal".

Consolidate compiler generated dependencies of target msc

Dependencies file "mscb/CMakeFiles/mscb.dir/mxml/mxml.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/mscb/CMakeFiles/mscb.dir/compiler_depend.internal".

Dependencies file "mscb/CMakeFiles/mscb.dir/src/mscb.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/mscb/CMakeFiles/mscb.dir/compiler_depend.internal".

Dependencies file "mscb/CMakeFiles/mscb.dir/src/mscbrpc.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/mscb/CMakeFiles/mscb.dir/compiler_depend.internal".

Dependencies file "mscb/CMakeFiles/mscb.dir/src/strlcpy.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/mscb/CMakeFiles/mscb.dir/compiler_depend.internal".

Consolidate compiler generated dependencies of target mscb

Dependencies file "manalyzer/CMakeFiles/manalyzer.dir/manalyzer.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/manalyzer/CMakeFiles/manalyzer.dir/compiler_depend.internal".

Consolidate compiler generated dependencies of target manalyzer

Dependencies file "manalyzer/CMakeFiles/manalyzer_main.dir/manalyzer_main.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/manalyzer/CMakeFiles/manalyzer_main.dir/compiler_depend.internal".

Consolidate compiler generated dependencies of target manalyzer_main

Dependencies file "manalyzer/CMakeFiles/manalyzer_test.exe.dir/manalyzer_main.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/manalyzer/CMakeFiles/manalyzer_test.exe.dir/compiler_depend.internal".

Consolidate compiler generated dependencies of target manalyzer_test.exe

[ 0%] Linking CXX executable manalyzer_test.exe

/usr/bin/c++ -O2 -g -DNDEBUG CMakeFiles/manalyzer_test.exe.dir/manalyzer_main.cxx.o -o manalyzer_test.exe -Wl,-rpath,/home/astrocent/workspace/root/lib: libmanalyzer_main.a libmanalyzer.a ../libmidas.a -lcurl -L/usr/lib/x86_64-linux-gnu -lmysqlclient -lzstd -lssl -lcrypto -lresolv -lm -L/home/astrocent/anaconda3/lib -lpq -lutil -lrt -ldl /home/astrocent/workspace/root/lib/libCore.so /home/astrocent/workspace/root/lib/libImt.so /home/astrocent/workspace/root/lib/libRIO.so /home/astrocent/workspace/root/lib/libNet.so /home/astrocent/workspace/root/lib/libHist.so /home/astrocent/workspace/root/lib/libGraf.so /home/astrocent/workspace/root/lib/libGraf3d.so /home/astrocent/workspace/root/lib/libGpad.so /home/astrocent/workspace/root/lib/libROOTDataFrame.so /home/astrocent/workspace/root/lib/libTree.so /home/astrocent/workspace/root/lib/libTreePlayer.so /home/astrocent/workspace/root/lib/libRint.so /home/astrocent/workspace/root/lib/libPostscript.so /home/astrocent/workspace/root/lib/libMatrix.so /home/astrocent/workspace/root/lib/libPhysics.so /home/astrocent/workspace/root/lib/libMathCore.so /home/astrocent/workspace/root/lib/libThread.so /home/astrocent/workspace/root/lib/libMultiProc.so /home/astrocent/workspace/root/lib/libROOTVecOps.so /home/astrocent/workspace/root/lib/libGui.so /home/astrocent/workspace/root/lib/libRHTTP.so /home/astrocent/workspace/root/lib/libXMLIO.so /home/astrocent/workspace/root/lib/libXMLParser.so /usr/lib/x86_64-linux-gnu/libz.so

/usr/bin/ld: /home/astrocent/workspace/root/lib/libRIO.so: undefined reference to `std::condition_variable::wait(std::unique_lock<std::mutex>&)@GLIBCXX_3.4.30'

collect2: error: ld returned 1 exit status

make[3]: *** [manalyzer/CMakeFiles/manalyzer_test.exe.dir/build.make:124: manalyzer/manalyzer_test.exe] Error 1

make[2]: *** [CMakeFiles/Makefile2:766: manalyzer/CMakeFiles/manalyzer_test.exe.dir/all] Error 2

make[1]: *** [Makefile:136: all] Error 2

|
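A note on reading the link line above: it passes two `-L` directories, `/usr/lib/x86_64-linux-gnu` and `/home/astrocent/anaconda3/lib`, and the error is a missing `GLIBCXX_3.4.30` symbol version. One possible explanation, which this log alone does not confirm, is that a library search directory outside the system tree supplies an older `libstdc++`. The sketch below is a minimal illustration of how to list the `-L` directories of a link command in search order and flag the non-system ones. The helper names and the `SYSTEM_PREFIXES` tuple are hypothetical and are not part of MIDAS or cmake.

```python
import shlex

# Assumption: directories under these prefixes count as "system" locations.
SYSTEM_PREFIXES = ("/usr/lib", "/lib", "/usr/local/lib")


def library_search_dirs(link_command):
    """Return the -L directories of a link command, in the order given."""
    dirs = []
    tokens = shlex.split(link_command)
    for i, tok in enumerate(tokens):
        if tok == "-L" and i + 1 < len(tokens):
            # Form "-L dir": the directory is the next token.
            dirs.append(tokens[i + 1])
        elif tok.startswith("-L") and len(tok) > 2:
            # Form "-Ldir": the directory is attached to the flag.
            dirs.append(tok[2:])
    return dirs


def non_system_dirs(dirs):
    """Keep only the directories that lie outside SYSTEM_PREFIXES."""
    return [d for d in dirs if not d.startswith(SYSTEM_PREFIXES)]


# Shortened copy of the failing link command from the log above.
cmd = (
    "/usr/bin/c++ -O2 -g -DNDEBUG manalyzer_main.cxx.o -o manalyzer_test.exe "
    "-lcurl -L/usr/lib/x86_64-linux-gnu -lmysqlclient "
    "-L/home/astrocent/anaconda3/lib -lpq -lutil -lrt -ldl"
)

dirs = library_search_dirs(cmd)
suspects = non_system_dirs(dirs)
print("search order:", dirs)
print("non-system:  ", suspects)
```

Each flagged directory can then be checked by hand, for example with `strings <dir>/libstdc++.so.6 | grep GLIBCXX_3.4.30`, to see whether a `libstdc++` found there provides the symbol version that `libRIO.so` asks for.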

| Attachment 3: make-cmake-k

|

astrocent@astrocent-desktop:~/workspace/packages/midas$ make cmake -k

mkdir -p build

cd build; cmake .. -DCMAKE_EXPORT_COMPILE_COMMANDS=1 -DCMAKE_TARGET_MESSAGES=OFF; make --no-print-directory VERBOSE=1 all install | 2>&1 grep -v -e ^make -e ^Dependee -e cmake_depends -e ^Scanning -e cmake_link_script -e cmake_progress_start -e cmake_clean_target -e build.make

-- MIDAS: cmake version: 3.22.1

-- MIDAS: Setting build type to 'RelWithDebInfo' as non was specified

-- MIDAS: Curent build type is 'RelWithDebInfo'

-- MIDAS: Disabled address sanitizer

-- MIDAS: CMAKE_INSTALL_PREFIX: /home/astrocent/workspace/packages/midas

-- MIDAS: Found ROOT version 6.30.04 in /home/astrocent/workspace/root

-- MIDAS: Found ZLIB version 1.2.11

-- MIDAS: Found LibCURL version 7.81.0

-- MIDAS: MBEDTLS not found

-- MIDAS: Found MySQL version 8.0.36

-- MIDAS: MySQL CFLAGS: -I/usr/include/mysql and libs: -L/usr/lib/x86_64-linux-gnu -lmysqlclient -lzstd -lssl -lcrypto -lresolv -lm

-- MIDAS: Found PostgreSQL version PostgreSQL 12.9

-- MIDAS: PostgresSQL include: /home/astrocent/anaconda3/include and libs: /home/astrocent/anaconda3/lib

-- MIDAS: ODBC not found

-- Could NOT find SQLite3 (missing: SQLite3_INCLUDE_DIR SQLite3_LIBRARY)

-- MIDAS: SQLITE not found

-- MIDAS (msysmon): NVIDIA CUDA libs not found

-- MIDAS (msysmon): Found LM_SENSORS

-- MIDAS (mlogger): OpenCV not found, (no rtsp camera support))

-- MSCB: CMAKE_INSTALL_PREFIX: /home/astrocent/workspace/packages/midas

-- manalyzer: CMAKE_INSTALL_PREFIX: /home/astrocent/workspace/packages/midas

-- manalyzer: Building as a subproject of MIDAS

-- manalyzer: Using ROOT: flags: -std=c++17;-pipe;-fsigned-char;-pthread and includes: /home/astrocent/workspace/root/include

-- example_experiment: Building as a subproject of MIDAS

-- example_experiment: MIDASSYS: /home/astrocent/workspace/packages/midas

-- example_experiment: Using ROOT: flags: -std=c++17;-pipe;-fsigned-char;-pthread and includes: /home/astrocent/workspace/root/include

-- example_experiment: Found ROOT version 6.30.04

-- Configuring done

-- Generating done

-- Build files have been written to: /home/astrocent/workspace/packages/midas/build

/usr/bin/cmake -S/home/astrocent/workspace/packages/midas -B/home/astrocent/workspace/packages/midas/build --check-build-system CMakeFiles/Makefile.cmake 0

cd /home/astrocent/workspace/packages/midas/include && /usr/bin/cmake -E echo_append \#define\ GIT_REVISION\ \" > /home/astrocent/workspace/packages/midas/include/git-revision.h.tmp

cd /home/astrocent/workspace/packages/midas/include && /usr/bin/git log -n 1 --pretty=format:"%ad" >> /home/astrocent/workspace/packages/midas/include/git-revision.h.tmp

cd /home/astrocent/workspace/packages/midas/include && /usr/bin/cmake -E echo_append \ -\ >> /home/astrocent/workspace/packages/midas/include/git-revision.h.tmp

cd /home/astrocent/workspace/packages/midas/include && /usr/bin/git describe --abbrev=8 --tags --dirty | tr -d '\n' >> /home/astrocent/workspace/packages/midas/include/git-revision.h.tmp

cd /home/astrocent/workspace/packages/midas/include && /usr/bin/cmake -E echo_append \ on\ branch\ >> /home/astrocent/workspace/packages/midas/include/git-revision.h.tmp

cd /home/astrocent/workspace/packages/midas/include && /usr/bin/git rev-parse --abbrev-ref HEAD | tr -d '\n' >> /home/astrocent/workspace/packages/midas/include/git-revision.h.tmp

cd /home/astrocent/workspace/packages/midas/include && /usr/bin/cmake -E echo \" >> /home/astrocent/workspace/packages/midas/include/git-revision.h.tmp

cd /home/astrocent/workspace/packages/midas/include && /usr/bin/cmake -E copy_if_different /home/astrocent/workspace/packages/midas/include/git-revision.h.tmp /home/astrocent/workspace/packages/midas/include/git-revision.h

cd /home/astrocent/workspace/packages/midas/include && /usr/bin/cmake -E remove /home/astrocent/workspace/packages/midas/include/git-revision.h.tmp

Dependencies file "CMakeFiles/objlib.dir/src/tinyexpr.c.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/midasio/lz4.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/midasio/lz4frame.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/midasio/lz4hc.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/midasio/midasio.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/midasio/xxhash.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/mjson/mjson.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/mscb/src/strlcpy.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/mvodb/midasodb.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/mvodb/mjsonodb.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/mvodb/mvodb.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/mvodb/mxmlodb.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/mvodb/nullodb.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/mxml/mxml.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/alarm.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/crc32c.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/device_driver.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/elog.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/ftplib.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/history.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/history_common.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/history_image.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/history_odbc.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/history_schema.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/json_paste.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/mdsupport.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/midas.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/mjsonrpc.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/mjsonrpc_user.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/mrpc.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/odb.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/odbxx.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/sha256.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/sha512.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/system.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Dependencies file "CMakeFiles/objlib.dir/src/tmfe.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib.dir/compiler_depend.internal".

Consolidate compiler generated dependencies of target objlib

Dependencies file "CMakeFiles/objlib-c-compat.dir/src/midas_c_compat.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/objlib-c-compat.dir/compiler_depend.internal".

Consolidate compiler generated dependencies of target objlib-c-compat

Dependencies file "CMakeFiles/mfe.dir/src/mfe.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/mfe.dir/compiler_depend.internal".

Consolidate compiler generated dependencies of target mfe

Dependencies file "CMakeFiles/mana.dir/src/mana.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/mana.dir/compiler_depend.internal".

Consolidate compiler generated dependencies of target mana

Dependencies file "CMakeFiles/rmana.dir/src/mana.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/rmana.dir/compiler_depend.internal".

Consolidate compiler generated dependencies of target rmana

Dependencies file "CMakeFiles/mfeo.dir/src/mfe.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/mfeo.dir/compiler_depend.internal".

Consolidate compiler generated dependencies of target mfeo

Dependencies file "CMakeFiles/manao.dir/src/mana.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/manao.dir/compiler_depend.internal".

Consolidate compiler generated dependencies of target manao

Dependencies file "CMakeFiles/rmanao.dir/src/mana.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/CMakeFiles/rmanao.dir/compiler_depend.internal".

Consolidate compiler generated dependencies of target rmanao

Dependencies file "mscb/CMakeFiles/msc.dir/mxml/mxml.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/mscb/CMakeFiles/msc.dir/compiler_depend.internal".

Dependencies file "mscb/CMakeFiles/msc.dir/src/cmdedit.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/mscb/CMakeFiles/msc.dir/compiler_depend.internal".

Dependencies file "mscb/CMakeFiles/msc.dir/src/msc.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/mscb/CMakeFiles/msc.dir/compiler_depend.internal".

Dependencies file "mscb/CMakeFiles/msc.dir/src/mscb.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/mscb/CMakeFiles/msc.dir/compiler_depend.internal".

Dependencies file "mscb/CMakeFiles/msc.dir/src/mscbrpc.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/mscb/CMakeFiles/msc.dir/compiler_depend.internal".

Dependencies file "mscb/CMakeFiles/msc.dir/src/strlcpy.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/mscb/CMakeFiles/msc.dir/compiler_depend.internal".

Consolidate compiler generated dependencies of target msc

Dependencies file "mscb/CMakeFiles/mscb.dir/mxml/mxml.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/mscb/CMakeFiles/mscb.dir/compiler_depend.internal".

Dependencies file "mscb/CMakeFiles/mscb.dir/src/mscb.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/mscb/CMakeFiles/mscb.dir/compiler_depend.internal".

Dependencies file "mscb/CMakeFiles/mscb.dir/src/mscbrpc.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/mscb/CMakeFiles/mscb.dir/compiler_depend.internal".

Dependencies file "mscb/CMakeFiles/mscb.dir/src/strlcpy.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/mscb/CMakeFiles/mscb.dir/compiler_depend.internal".

Consolidate compiler generated dependencies of target mscb

Dependencies file "manalyzer/CMakeFiles/manalyzer.dir/manalyzer.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/manalyzer/CMakeFiles/manalyzer.dir/compiler_depend.internal".

Consolidate compiler generated dependencies of target manalyzer

Dependencies file "manalyzer/CMakeFiles/manalyzer_main.dir/manalyzer_main.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/manalyzer/CMakeFiles/manalyzer_main.dir/compiler_depend.internal".

Consolidate compiler generated dependencies of target manalyzer_main

Dependencies file "manalyzer/CMakeFiles/manalyzer_test.exe.dir/manalyzer_main.cxx.o.d" is newer than depends file "/home/astrocent/workspace/packages/midas/build/manalyzer/CMakeFiles/manalyzer_test.exe.dir/compiler_depend.internal".

Consolidate compiler generated dependencies of target manalyzer_test.exe

[ 0%] Linking CXX executable manalyzer_test.exe

/usr/bin/c++ -O2 -g -DNDEBUG CMakeFiles/manalyzer_test.exe.dir/manalyzer_main.cxx.o -o manalyzer_test.exe -Wl,-rpath,/home/astrocent/workspace/root/lib: libmanalyzer_main.a libmanalyzer.a ../libmidas.a -lcurl -L/usr/lib/x86_64-linux-gnu -lmysqlclient -lzstd -lssl -lcrypto -lresolv -lm -L/home/astrocent/anaconda3/lib -lpq -lutil -lrt -ldl /home/astrocent/workspace/root/lib/libCore.so /home/astrocent/workspace/root/lib/libImt.so /home/astrocent/workspace/root/lib/libRIO.so /home/astrocent/workspace/root/lib/libNet.so /home/astrocent/workspace/root/lib/libHist.so /home/astrocent/workspace/root/lib/libGraf.so /home/astrocent/workspace/root/lib/libGraf3d.so /home/astrocent/workspace/root/lib/libGpad.so /home/astrocent/workspace/root/lib/libROOTDataFrame.so /home/astrocent/workspace/root/lib/libTree.so /home/astrocent/workspace/root/lib/libTreePlayer.so /home/astrocent/workspace/root/lib/libRint.so /home/astrocent/workspace/root/lib/libPostscript.so /home/astrocent/workspace/root/lib/libMatrix.so /home/astrocent/workspace/root/lib/libPhysics.so /home/astrocent/workspace/root/lib/libMathCore.so /home/astrocent/workspace/root/lib/libThread.so /home/astrocent/workspace/root/lib/libMultiProc.so /home/astrocent/workspace/root/lib/libROOTVecOps.so /home/astrocent/workspace/root/lib/libGui.so /home/astrocent/workspace/root/lib/libRHTTP.so /home/astrocent/workspace/root/lib/libXMLIO.so /home/astrocent/workspace/root/lib/libXMLParser.so /usr/lib/x86_64-linux-gnu/libz.so

/usr/bin/ld: /home/astrocent/workspace/root/lib/libRIO.so: undefined reference to `std::condition_variable::wait(std::unique_lock<std::mutex>&)@GLIBCXX_3.4.30'

collect2: error: ld returned 1 exit status

make[3]: *** [manalyzer/CMakeFiles/manalyzer_test.exe.dir/build.make:124: manalyzer/manalyzer_test.exe] Error 1

make[3]: Target 'manalyzer/CMakeFiles/manalyzer_test.exe.dir/build' not remade because of errors.

make[2]: *** [CMakeFiles/Makefile2:766: manalyzer/CMakeFiles/manalyzer_test.exe.dir/all] Error 2

[ 1%] Building CXX object manalyzer/CMakeFiles/manalyzer_example_cxx.exe.dir/manalyzer_example_cxx.cxx.o

cd /home/astrocent/workspace/packages/midas/build/manalyzer && /usr/bin/c++ -DHAVE_CURL -DHAVE_FTPLIB -DHAVE_MIDAS -DHAVE_MYSQL -DHAVE_PGSQL -DHAVE_ROOT_HTTP -DHAVE_THTTP_SERVER -DHAVE_TMFE -D_LARGEFILE64_SOURCE -I/home/astrocent/workspace/packages/midas/manalyzer -I/home/astrocent/workspace/root/include -I/home/astrocent/workspace/packages/midas/include -I/home/astrocent/workspace/packages/midas/mxml -I/home/astrocent/workspace/packages/midas/mscb/include -I/home/astrocent/workspace/packages/midas/mjson -I/home/astrocent/workspace/packages/midas/mvodb -I/home/astrocent/workspace/packages/midas/midasio -I/home/astrocent/workspace/packages/midas/manalyzer/../mvodb -I/home/astrocent/workspace/packages/midas/manalyzer/../midasio -O2 -g -DNDEBUG -Wall -Wformat=2 -Wno-format-nonliteral -Wno-strict-aliasing -Wuninitialized -Wno-unused-function -O2 -g -std=c++17 -pipe -fsigned-char -pthread -DHAVE_ROOT -I/usr/include/mysql -I/home/astrocent/anaconda3/include -MD -MT manalyzer/CMakeFiles/manalyzer_example_cxx.exe.dir/manalyzer_example_cxx.cxx.o -MF CMakeFiles/manalyzer_example_cxx.exe.dir/manalyzer_example_cxx.cxx.o.d -o CMakeFiles/manalyzer_example_cxx.exe.dir/manalyzer_example_cxx.cxx.o -c /home/astrocent/workspace/packages/midas/manalyzer/manalyzer_example_cxx.cxx

[ 1%] Linking CXX executable manalyzer_example_cxx.exe

/usr/bin/c++ -O2 -g -DNDEBUG CMakeFiles/manalyzer_example_cxx.exe.dir/manalyzer_example_cxx.cxx.o -o manalyzer_example_cxx.exe -Wl,-rpath,/home/astrocent/workspace/root/lib: libmanalyzer_main.a libmanalyzer.a ../libmidas.a -lcurl -L/usr/lib/x86_64-linux-gnu -lmysqlclient -lzstd -lssl -lcrypto -lresolv -lm -L/home/astrocent/anaconda3/lib -lpq -lutil -lrt -ldl /home/astrocent/workspace/root/lib/libCore.so /home/astrocent/workspace/root/lib/libImt.so /home/astrocent/workspace/root/lib/libRIO.so /home/astrocent/workspace/root/lib/libNet.so /home/astrocent/workspace/root/lib/libHist.so /home/astrocent/workspace/root/lib/libGraf.so /home/astrocent/workspace/root/lib/libGraf3d.so /home/astrocent/workspace/root/lib/libGpad.so /home/astrocent/workspace/root/lib/libROOTDataFrame.so /home/astrocent/workspace/root/lib/libTree.so /home/astrocent/workspace/root/lib/libTreePlayer.so /home/astrocent/workspace/root/lib/libRint.so /home/astrocent/workspace/root/lib/libPostscript.so /home/astrocent/workspace/root/lib/libMatrix.so /home/astrocent/workspace/root/lib/libPhysics.so /home/astrocent/workspace/root/lib/libMathCore.so /home/astrocent/workspace/root/lib/libThread.so /home/astrocent/workspace/root/lib/libMultiProc.so /home/astrocent/workspace/root/lib/libROOTVecOps.so /home/astrocent/workspace/root/lib/libGui.so /home/astrocent/workspace/root/lib/libRHTTP.so /home/astrocent/workspace/root/lib/libXMLIO.so /home/astrocent/workspace/root/lib/libXMLParser.so /usr/lib/x86_64-linux-gnu/libz.so

/usr/bin/ld: /home/astrocent/workspace/root/lib/libRIO.so: undefined reference to `std::condition_variable::wait(std::unique_lock<std::mutex>&)@GLIBCXX_3.4.30'

collect2: error: ld returned 1 exit status

make[3]: *** [manalyzer/CMakeFiles/manalyzer_example_cxx.exe.dir/build.make:124: manalyzer/manalyzer_example_cxx.exe] Error 1

make[3]: Target 'manalyzer/CMakeFiles/manalyzer_example_cxx.exe.dir/build' not remade because of errors.

make[2]: *** [CMakeFiles/Makefile2:793: manalyzer/CMakeFiles/manalyzer_example_cxx.exe.dir/all] Error 2

[ 2%] Building CXX object manalyzer/CMakeFiles/manalyzer_example_flow.exe.dir/manalyzer_example_flow.cxx.o

cd /home/astrocent/workspace/packages/midas/build/manalyzer && /usr/bin/c++ -DHAVE_CURL -DHAVE_FTPLIB -DHAVE_MIDAS -DHAVE_MYSQL -DHAVE_PGSQL -DHAVE_ROOT_HTTP -DHAVE_THTTP_SERVER -DHAVE_TMFE -D_LARGEFILE64_SOURCE -I/home/astrocent/workspace/packages/midas/manalyzer -I/home/astrocent/workspace/root/include -I/home/astrocent/workspace/packages/midas/include -I/home/astrocent/workspace/packages/midas/mxml -I/home/astrocent/workspace/packages/midas/mscb/include -I/home/astrocent/workspace/packages/midas/mjson -I/home/astrocent/workspace/packages/midas/mvodb -I/home/astrocent/workspace/packages/midas/midasio -I/home/astrocent/workspace/packages/midas/manalyzer/../mvodb -I/home/astrocent/workspace/packages/midas/manalyzer/../midasio -O2 -g -DNDEBUG -Wall -Wformat=2 -Wno-format-nonliteral -Wno-strict-aliasing -Wuninitialized -Wno-unused-function -O2 -g -std=c++17 -pipe -fsigned-char -pthread -DHAVE_ROOT -I/usr/include/mysql -I/home/astrocent/anaconda3/include -MD -MT manalyzer/CMakeFiles/manalyzer_example_flow.exe.dir/manalyzer_example_flow.cxx.o -MF CMakeFiles/manalyzer_example_flow.exe.dir/manalyzer_example_flow.cxx.o.d -o CMakeFiles/manalyzer_example_flow.exe.dir/manalyzer_example_flow.cxx.o -c /home/astrocent/workspace/packages/midas/manalyzer/manalyzer_example_flow.cxx

[ 2%] Linking CXX executable manalyzer_example_flow.exe

/usr/bin/c++ -O2 -g -DNDEBUG CMakeFiles/manalyzer_example_flow.exe.dir/manalyzer_example_flow.cxx.o -o manalyzer_example_flow.exe -Wl,-rpath,/home/astrocent/workspace/root/lib: libmanalyzer_main.a libmanalyzer.a ../libmidas.a -lcurl -L/usr/lib/x86_64-linux-gnu -lmysqlclient -lzstd -lssl -lcrypto -lresolv -lm -L/home/astrocent/anaconda3/lib -lpq -lutil -lrt -ldl /home/astrocent/workspace/root/lib/libCore.so /home/astrocent/workspace/root/lib/libImt.so /home/astrocent/workspace/root/lib/libRIO.so /home/astrocent/workspace/root/lib/libNet.so /home/astrocent/workspace/root/lib/libHist.so /home/astrocent/workspace/root/lib/libGraf.so /home/astrocent/workspace/root/lib/libGraf3d.so /home/astrocent/workspace/root/lib/libGpad.so /home/astrocent/workspace/root/lib/libROOTDataFrame.so /home/astrocent/workspace/root/lib/libTree.so /home/astrocent/workspace/root/lib/libTreePlayer.so /home/astrocent/workspace/root/lib/libRint.so /home/astrocent/workspace/root/lib/libPostscript.so /home/astrocent/workspace/root/lib/libMatrix.so /home/astrocent/workspace/root/lib/libPhysics.so /home/astrocent/workspace/root/lib/libMathCore.so /home/astrocent/workspace/root/lib/libThread.so /home/astrocent/workspace/root/lib/libMultiProc.so /home/astrocent/workspace/root/lib/libROOTVecOps.so /home/astrocent/workspace/root/lib/libGui.so /home/astrocent/workspace/root/lib/libRHTTP.so /home/astrocent/workspace/root/lib/libXMLIO.so /home/astrocent/workspace/root/lib/libXMLParser.so /usr/lib/x86_64-linux-gnu/libz.so

/usr/bin/ld: /home/astrocent/workspace/root/lib/libRIO.so: undefined reference to `std::condition_variable::wait(std::unique_lock<std::mutex>&)@GLIBCXX_3.4.30'

collect2: error: ld returned 1 exit status

make[3]: *** [manalyzer/CMakeFiles/manalyzer_example_flow.exe.dir/build.make:124: manalyzer/manalyzer_example_flow.exe] Error 1

make[3]: Target 'manalyzer/CMakeFiles/manalyzer_example_flow.exe.dir/build' not remade because of errors.

make[2]: *** [CMakeFiles/Makefile2:820: manalyzer/CMakeFiles/manalyzer_example_flow.exe.dir/all] Error 2

[ 3%] Building CXX object manalyzer/CMakeFiles/manalyzer_example_flow_queue.exe.dir/manalyzer_example_flow_queue.cxx.o

cd /home/astrocent/workspace/packages/midas/build/manalyzer && /usr/bin/c++ -DHAVE_CURL -DHAVE_FTPLIB -DHAVE_MIDAS -DHAVE_MYSQL -DHAVE_PGSQL -DHAVE_ROOT_HTTP -DHAVE_THTTP_SERVER -DHAVE_TMFE -D_LARGEFILE64_SOURCE -I/home/astrocent/workspace/packages/midas/manalyzer -I/home/astrocent/workspace/root/include -I/home/astrocent/workspace/packages/midas/include -I/home/astrocent/workspace/packages/midas/mxml -I/home/astrocent/workspace/packages/midas/mscb/include -I/home/astrocent/workspace/packages/midas/mjson -I/home/astrocent/workspace/packages/midas/mvodb -I/home/astrocent/workspace/packages/midas/midasio -I/home/astrocent/workspace/packages/midas/manalyzer/../mvodb -I/home/astrocent/workspace/packages/midas/manalyzer/../midasio -O2 -g -DNDEBUG -Wall -Wformat=2 -Wno-format-nonliteral -Wno-strict-aliasing -Wuninitialized -Wno-unused-function -O2 -g -std=c++17 -pipe -fsigned-char -pthread -DHAVE_ROOT -I/usr/include/mysql -I/home/astrocent/anaconda3/include -MD -MT manalyzer/CMakeFiles/manalyzer_example_flow_queue.exe.dir/manalyzer_example_flow_queue.cxx.o -MF CMakeFiles/manalyzer_example_flow_queue.exe.dir/manalyzer_example_flow_queue.cxx.o.d -o CMakeFiles/manalyzer_example_flow_queue.exe.dir/manalyzer_example_flow_queue.cxx.o -c /home/astrocent/workspace/packages/midas/manalyzer/manalyzer_example_flow_queue.cxx

[ 3%] Linking CXX executable manalyzer_example_flow_queue.exe

/usr/bin/c++ -O2 -g -DNDEBUG CMakeFiles/manalyzer_example_flow_queue.exe.dir/manalyzer_example_flow_queue.cxx.o -o manalyzer_example_flow_queue.exe -Wl,-rpath,/home/astrocent/workspace/root/lib: libmanalyzer_main.a libmanalyzer.a ../libmidas.a -lcurl -L/usr/lib/x86_64-linux-gnu -lmysqlclient -lzstd -lssl -lcrypto -lresolv -lm -L/home/astrocent/anaconda3/lib -lpq -lutil -lrt -ldl /home/astrocent/workspace/root/lib/libCore.so /home/astrocent/workspace/root/lib/libImt.so /home/astrocent/workspace/root/lib/libRIO.so /home/astrocent/workspace/root/lib/libNet.so /home/astrocent/workspace/root/lib/libHist.so /home/astrocent/workspace/root/lib/libGraf.so /home/astrocent/workspace/root/lib/libGraf3d.so /home/astrocent/workspace/root/lib/libGpad.so /home/astrocent/workspace/root/lib/libROOTDataFrame.so /home/astrocent/workspace/root/lib/libTree.so /home/astrocent/workspace/root/lib/libTreePlayer.so /home/astrocent/workspace/root/lib/libRint.so /home/astrocent/workspace/root/lib/libPostscript.so /home/astrocent/workspace/root/lib/libMatrix.so /home/astrocent/workspace/root/lib/libPhysics.so /home/astrocent/workspace/root/lib/libMathCore.so /home/astrocent/workspace/root/lib/libThread.so /home/astrocent/workspace/root/lib/libMultiProc.so /home/astrocent/workspace/root/lib/libROOTVecOps.so /home/astrocent/workspace/root/lib/libGui.so /home/astrocent/workspace/root/lib/libRHTTP.so /home/astrocent/workspace/root/lib/libXMLIO.so /home/astrocent/workspace/root/lib/libXMLParser.so /usr/lib/x86_64-linux-gnu/libz.so

/usr/bin/ld: /home/astrocent/workspace/root/lib/libRIO.so: undefined reference to `std::condition_variable::wait(std::unique_lock<std::mutex>&)@GLIBCXX_3.4.30'

collect2: error: ld returned 1 exit status

make[3]: *** [manalyzer/CMakeFiles/manalyzer_example_flow_queue.exe.dir/build.make:124: manalyzer/manalyzer_example_flow_queue.exe] Error 1

make[3]: Target 'manalyzer/CMakeFiles/manalyzer_example_flow_queue.exe.dir/build' not remade because of errors.

make[2]: *** [CMakeFiles/Makefile2:847: manalyzer/CMakeFiles/manalyzer_example_flow_queue.exe.dir/all] Error 2

[ 4%] Building CXX object manalyzer/CMakeFiles/manalyzer_example_frontend.exe.dir/manalyzer_example_frontend.cxx.o

cd /home/astrocent/workspace/packages/midas/build/manalyzer && /usr/bin/c++ -DHAVE_CURL -DHAVE_FTPLIB -DHAVE_MIDAS -DHAVE_MYSQL -DHAVE_PGSQL -DHAVE_ROOT_HTTP -DHAVE_THTTP_SERVER -DHAVE_TMFE -D_LARGEFILE64_SOURCE -I/home/astrocent/workspace/packages/midas/manalyzer -I/home/astrocent/workspace/root/include -I/home/astrocent/workspace/packages/midas/include -I/home/astrocent/workspace/packages/midas/mxml -I/home/astrocent/workspace/packages/midas/mscb/include -I/home/astrocent/workspace/packages/midas/mjson -I/home/astrocent/workspace/packages/midas/mvodb -I/home/astrocent/workspace/packages/midas/midasio -I/home/astrocent/workspace/packages/midas/manalyzer/../mvodb -I/home/astrocent/workspace/packages/midas/manalyzer/../midasio -O2 -g -DNDEBUG -Wall -Wformat=2 -Wno-format-nonliteral -Wno-strict-aliasing -Wuninitialized -Wno-unused-function -O2 -g -std=c++17 -pipe -fsigned-char -pthread -DHAVE_ROOT -I/usr/include/mysql -I/home/astrocent/anaconda3/include -MD -MT manalyzer/CMakeFiles/manalyzer_example_frontend.exe.dir/manalyzer_example_frontend.cxx.o -MF CMakeFiles/manalyzer_example_frontend.exe.dir/manalyzer_example_frontend.cxx.o.d -o CMakeFiles/manalyzer_example_frontend.exe.dir/manalyzer_example_frontend.cxx.o -c /home/astrocent/workspace/packages/midas/manalyzer/manalyzer_example_frontend.cxx

[ 4%] Linking CXX executable manalyzer_example_frontend.exe

/usr/bin/c++ -O2 -g -DNDEBUG CMakeFiles/manalyzer_example_frontend.exe.dir/manalyzer_example_frontend.cxx.o -o manalyzer_example_frontend.exe -Wl,-rpath,/home/astrocent/workspace/root/lib: libmanalyzer_main.a libmanalyzer.a ../libmidas.a -lcurl -L/usr/lib/x86_64-linux-gnu -lmysqlclient -lzstd -lssl -lcrypto -lresolv -lm -L/home/astrocent/anaconda3/lib -lpq -lutil -lrt -ldl /home/astrocent/workspace/root/lib/libCore.so /home/astrocent/workspace/root/lib/libImt.so /home/astrocent/workspace/root/lib/libRIO.so /home/astrocent/workspace/root/lib/libNet.so /home/astrocent/workspace/root/lib/libHist.so /home/astrocent/workspace/root/lib/libGraf.so /home/astrocent/workspace/root/lib/libGraf3d.so /home/astrocent/workspace/root/lib/libGpad.so /home/astrocent/workspace/root/lib/libROOTDataFrame.so /home/astrocent/workspace/root/lib/libTree.so /home/astrocent/workspace/root/lib/libTreePlayer.so /home/astrocent/workspace/root/lib/libRint.so /home/astrocent/workspace/root/lib/libPostscript.so /home/astrocent/workspace/root/lib/libMatrix.so /home/astrocent/workspace/root/lib/libPhysics.so /home/astrocent/workspace/root/lib/libMathCore.so /home/astrocent/workspace/root/lib/libThread.so /home/astrocent/workspace/root/lib/libMultiProc.so /home/astrocent/workspace/root/lib/libROOTVecOps.so /home/astrocent/workspace/root/lib/libGui.so /home/astrocent/workspace/root/lib/libRHTTP.so /home/astrocent/workspace/root/lib/libXMLIO.so /home/astrocent/workspace/root/lib/libXMLParser.so /usr/lib/x86_64-linux-gnu/libz.so

/usr/bin/ld: /home/astrocent/workspace/root/lib/libRIO.so: undefined reference to `std::condition_variable::wait(std::unique_lock<std::mutex>&)@GLIBCXX_3.4.30'

collect2: error: ld returned 1 exit status

make[3]: *** [manalyzer/CMakeFiles/manalyzer_example_frontend.exe.dir/build.make:124: manalyzer/manalyzer_example_frontend.exe] Error 1

make[3]: Target 'manalyzer/CMakeFiles/manalyzer_example_frontend.exe.dir/build' not remade because of errors.

make[2]: *** [CMakeFiles/Makefile2:874: manalyzer/CMakeFiles/manalyzer_example_frontend.exe.dir/all] Error 2

[ 5%] Building CXX object manalyzer/CMakeFiles/manalyzer_example_root.exe.dir/manalyzer_example_root.cxx.o

cd /home/astrocent/workspace/packages/midas/build/manalyzer && /usr/bin/c++ -DHAVE_CURL -DHAVE_FTPLIB -DHAVE_MIDAS -DHAVE_MYSQL -DHAVE_PGSQL -DHAVE_ROOT_HTTP -DHAVE_THTTP_SERVER -DHAVE_TMFE -D_LARGEFILE64_SOURCE -I/home/astrocent/workspace/packages/midas/manalyzer -I/home/astrocent/workspace/root/include -I/home/astrocent/workspace/packages/midas/include -I/home/astrocent/workspace/packages/midas/mxml -I/home/astrocent/workspace/packages/midas/mscb/include -I/home/astrocent/workspace/packages/midas/mjson -I/home/astrocent/workspace/packages/midas/mvodb -I/home/astrocent/workspace/packages/midas/midasio -I/home/astrocent/workspace/packages/midas/manalyzer/../mvodb -I/home/astrocent/workspace/packages/midas/manalyzer/../midasio -O2 -g -DNDEBUG -Wall -Wformat=2 -Wno-format-nonliteral -Wno-strict-aliasing -Wuninitialized -Wno-unused-function -O2 -g -std=c++17 -pipe -fsigned-char -pthread -DHAVE_ROOT -I/usr/include/mysql -I/home/astrocent/anaconda3/include -MD -MT manalyzer/CMakeFiles/manalyzer_example_root.exe.dir/manalyzer_example_root.cxx.o -MF CMakeFiles/manalyzer_example_root.exe.dir/manalyzer_example_root.cxx.o.d -o CMakeFiles/manalyzer_example_root.exe.dir/manalyzer_example_root.cxx.o -c /home/astrocent/workspace/packages/midas/manalyzer/manalyzer_example_root.cxx

[ 5%] Linking CXX executable manalyzer_example_root.exe

/usr/bin/c++ -O2 -g -DNDEBUG CMakeFiles/manalyzer_example_root.exe.dir/manalyzer_example_root.cxx.o -o manalyzer_example_root.exe -Wl,-rpath,/home/astrocent/workspace/root/lib: libmanalyzer_main.a libmanalyzer.a ../libmidas.a -lcurl -L/usr/lib/x86_64-linux-gnu -lmysqlclient -lzstd -lssl -lcrypto -lresolv -lm -L/home/astrocent/anaconda3/lib -lpq -lutil -lrt -ldl /home/astrocent/workspace/root/lib/libCore.so /home/astrocent/workspace/root/lib/libImt.so /home/astrocent/workspace/root/lib/libRIO.so /home/astrocent/workspace/root/lib/libNet.so /home/astrocent/workspace/root/lib/libHist.so /home/astrocent/workspace/root/lib/libGraf.so /home/astrocent/workspace/root/lib/libGraf3d.so /home/astrocent/workspace/root/lib/libGpad.so /home/astrocent/workspace/root/lib/libROOTDataFrame.so /home/astrocent/workspace/root/lib/libTree.so /home/astrocent/workspace/root/lib/libTreePlayer.so /home/astrocent/workspace/root/lib/libRint.so /home/astrocent/workspace/root/lib/libPostscript.so /home/astrocent/workspace/root/lib/libMatrix.so /home/astrocent/workspace/root/lib/libPhysics.so /home/astrocent/workspace/root/lib/libMathCore.so /home/astrocent/workspace/root/lib/libThread.so /home/astrocent/workspace/root/lib/libMultiProc.so /home/astrocent/workspace/root/lib/libROOTVecOps.so /home/astrocent/workspace/root/lib/libGui.so /home/astrocent/workspace/root/lib/libRHTTP.so /home/astrocent/workspace/root/lib/libXMLIO.so /home/astrocent/workspace/root/lib/libXMLParser.so /usr/lib/x86_64-linux-gnu/libz.so

/usr/bin/ld: /home/astrocent/workspace/root/lib/libRIO.so: undefined reference to `std::condition_variable::wait(std::unique_lock<std::mutex>&)@GLIBCXX_3.4.30'

collect2: error: ld returned 1 exit status

make[3]: *** [manalyzer/CMakeFiles/manalyzer_example_root.exe.dir/build.make:124: manalyzer/manalyzer_example_root.exe] Error 1

make[3]: Target 'manalyzer/CMakeFiles/manalyzer_example_root.exe.dir/build' not remade because of errors.

make[2]: *** [CMakeFiles/Makefile2:901: manalyzer/CMakeFiles/manalyzer_example_root.exe.dir/all] Error 2

[ 6%] Building CXX object manalyzer/CMakeFiles/manalyzer_example_root_graphics.exe.dir/manalyzer_example_root_graphics.cxx.o

cd /home/astrocent/workspace/packages/midas/build/manalyzer && /usr/bin/c++ -DHAVE_CURL -DHAVE_FTPLIB -DHAVE_MIDAS -DHAVE_MYSQL -DHAVE_PGSQL -DHAVE_ROOT_HTTP -DHAVE_THTTP_SERVER -DHAVE_TMFE -D_LARGEFILE64_SOURCE -I/home/astrocent/workspace/packages/midas/manalyzer -I/home/astrocent/workspace/root/include -I/home/astrocent/workspace/packages/midas/include -I/home/astrocent/workspace/packages/midas/mxml -I/home/astrocent/workspace/packages/midas/mscb/include -I/home/astrocent/workspace/packages/midas/mjson -I/home/astrocent/workspace/packages/midas/mvodb -I/home/astrocent/workspace/packages/midas/midasio -I/home/astrocent/workspace/packages/midas/manalyzer/../mvodb -I/home/astrocent/workspace/packages/midas/manalyzer/../midasio -O2 -g -DNDEBUG -Wall -Wformat=2 -Wno-format-nonliteral -Wno-strict-aliasing -Wuninitialized -Wno-unused-function -O2 -g -std=c++17 -pipe -fsigned-char -pthread -DHAVE_ROOT -I/usr/include/mysql -I/home/astrocent/anaconda3/include -MD -MT manalyzer/CMakeFiles/manalyzer_example_root_graphics.exe.dir/manalyzer_example_root_graphics.cxx.o -MF CMakeFiles/manalyzer_example_root_graphics.exe.dir/manalyzer_example_root_graphics.cxx.o.d -o CMakeFiles/manalyzer_example_root_graphics.exe.dir/manalyzer_example_root_graphics.cxx.o -c /home/astrocent/workspace/packages/midas/manalyzer/manalyzer_example_root_graphics.cxx

[ 6%] Linking CXX executable manalyzer_example_root_graphics.exe

/usr/bin/c++ -O2 -g -DNDEBUG CMakeFiles/manalyzer_example_root_graphics.exe.dir/manalyzer_example_root_graphics.cxx.o -o manalyzer_example_root_graphics.exe -Wl,-rpath,/home/astrocent/workspace/root/lib: libmanalyzer_main.a libmanalyzer.a ../libmidas.a -lcurl -L/usr/lib/x86_64-linux-gnu -lmysqlclient -lzstd -lssl -lcrypto -lresolv -lm -L/home/astrocent/anaconda3/lib -lpq -lutil -lrt -ldl /home/astrocent/workspace/root/lib/libCore.so /home/astrocent/workspace/root/lib/libImt.so /home/astrocent/workspace/root/lib/libRIO.so /home/astrocent/workspace/root/lib/libNet.so /home/astrocent/workspace/root/lib/libHist.so /home/astrocent/workspace/root/lib/libGraf.so /home/astrocent/workspace/root/lib/libGraf3d.so /home/astrocent/workspace/root/lib/libGpad.so /home/astrocent/workspace/root/lib/libROOTDataFrame.so /home/astrocent/workspace/root/lib/libTree.so /home/astrocent/workspace/root/lib/libTreePlayer.so /home/astrocent/workspace/root/lib/libRint.so /home/astrocent/workspace/root/lib/libPostscript.so /home/astrocent/workspace/root/lib/libMatrix.so /home/astrocent/workspace/root/lib/libPhysics.so /home/astrocent/workspace/root/lib/libMathCore.so /home/astrocent/workspace/root/lib/libThread.so /home/astrocent/workspace/root/lib/libMultiProc.so /home/astrocent/workspace/root/lib/libROOTVecOps.so /home/astrocent/workspace/root/lib/libGui.so /home/astrocent/workspace/root/lib/libRHTTP.so /home/astrocent/workspace/root/lib/libXMLIO.so /home/astrocent/workspace/root/lib/libXMLParser.so /usr/lib/x86_64-linux-gnu/libz.so

/usr/bin/ld: /home/astrocent/workspace/root/lib/libRIO.so: undefined reference to `std::condition_variable::wait(std::unique_lock<std::mutex>&)@GLIBCXX_3.4.30'

collect2: error: ld returned 1 exit status

make[3]: *** [manalyzer/CMakeFiles/manalyzer_example_root_graphics.exe.dir/build.make:124: manalyzer/manalyzer_example_root_graphics.exe] Error 1

make[3]: Target 'manalyzer/CMakeFiles/manalyzer_example_root_graphics.exe.dir/build' not remade because of errors.

make[2]: *** [CMakeFiles/Makefile2:928: manalyzer/CMakeFiles/manalyzer_example_root_graphics.exe.dir/all] Error 2

[ 7%] Building CXX object progs/CMakeFiles/mserver.dir/mserver.cxx.o

cd /home/astrocent/workspace/packages/midas/build/progs && /usr/bin/c++ -DHAVE_CURL -DHAVE_FTPLIB -DHAVE_MYSQL -DHAVE_PGSQL -D_LARGEFILE64_SOURCE -I/home/astrocent/workspace/packages/midas/include -I/home/astrocent/workspace/packages/midas/mxml -I/home/astrocent/workspace/packages/midas/mscb/include -I/home/astrocent/workspace/packages/midas/mjson -I/home/astrocent/workspace/packages/midas/mvodb -I/home/astrocent/workspace/packages/midas/midasio -O2 -g -DNDEBUG -Wall -Wformat=2 -Wno-format-nonliteral -Wno-strict-aliasing -Wuninitialized -Wno-unused-function -I/usr/include/mysql -I/home/astrocent/anaconda3/include -MD -MT progs/CMakeFiles/mserver.dir/mserver.cxx.o -MF CMakeFiles/mserver.dir/mserver.cxx.o.d -o CMakeFiles/mserver.dir/mserver.cxx.o -c /home/astrocent/workspace/packages/midas/progs/mserver.cxx

[ 7%] Linking CXX executable mserver

/usr/bin/c++ -O2 -g -DNDEBUG CMakeFiles/mserver.dir/mserver.cxx.o -o mserver ../libmidas.a /usr/lib/x86_64-linux-gnu/libz.so -lcurl -L/usr/lib/x86_64-linux-gnu -lmysqlclient -lzstd -lssl -lcrypto -lresolv -lm -L/home/astrocent/anaconda3/lib -lpq -lutil -lrt -ldl

[ 7%] Building CXX object progs/CMakeFiles/mlogger.dir/mlogger.cxx.o

cd /home/astrocent/workspace/packages/midas/build/progs && /usr/bin/c++ -DHAVE_CURL -DHAVE_FTPLIB -DHAVE_MYSQL -DHAVE_PGSQL -D_LARGEFILE64_SOURCE -I/home/astrocent/workspace/packages/midas/include -I/home/astrocent/workspace/packages/midas/mxml -I/home/astrocent/workspace/packages/midas/mscb/include -I/home/astrocent/workspace/packages/midas/mjson -I/home/astrocent/workspace/packages/midas/mvodb -I/home/astrocent/workspace/packages/midas/midasio -O2 -g -DNDEBUG -Wall -Wformat=2 -Wno-format-nonliteral -Wno-strict-aliasing -Wuninitialized -Wno-unused-function -I/usr/include/mysql -I/home/astrocent/anaconda3/include -MD -MT progs/CMakeFiles/mlogger.dir/mlogger.cxx.o -MF CMakeFiles/mlogger.dir/mlogger.cxx.o.d -o CMakeFiles/mlogger.dir/mlogger.cxx.o -c /home/astrocent/workspace/packages/midas/progs/mlogger.cxx

[ 8%] Linking CXX executable mlogger

/usr/bin/c++ -O2 -g -DNDEBUG CMakeFiles/mlogger.dir/mlogger.cxx.o -o mlogger ../libmidas.a /usr/lib/x86_64-linux-gnu/libz.so -lcurl -L/usr/lib/x86_64-linux-gnu -lmysqlclient -lzstd -lssl -lcrypto -lresolv -lm -L/home/astrocent/anaconda3/lib -lpq -lutil -lrt -ldl

[ 9%] Building CXX object progs/CMakeFiles/mhist.dir/mhist.cxx.o

cd /home/astrocent/workspace/packages/midas/build/progs && /usr/bin/c++ -DHAVE_CURL -DHAVE_FTPLIB -DHAVE_MYSQL -DHAVE_PGSQL -D_LARGEFILE64_SOURCE -I/home/astrocent/workspace/packages/midas/include -I/home/astrocent/workspace/packages/midas/mxml -I/home/astrocent/workspace/packages/midas/mscb/include -I/home/astrocent/workspace/packages/midas/mjson -I/home/astrocent/workspace/packages/midas/mvodb -I/home/astrocent/workspace/packages/midas/midasio -O2 -g -DNDEBUG -Wall -Wformat=2 -Wno-format-nonliteral -Wno-strict-aliasing -Wuninitialized -Wno-unused-function -I/usr/include/mysql -I/home/astrocent/anaconda3/include -MD -MT progs/CMakeFiles/mhist.dir/mhist.cxx.o -MF CMakeFiles/mhist.dir/mhist.cxx.o.d -o CMakeFiles/mhist.dir/mhist.cxx.o -c /home/astrocent/workspace/packages/midas/progs/mhist.cxx

[ 9%] Linking CXX executable mhist

/usr/bin/c++ -O2 -g -DNDEBUG CMakeFiles/mhist.dir/mhist.cxx.o -o mhist ../libmidas.a /usr/lib/x86_64-linux-gnu/libz.so -lcurl -L/usr/lib/x86_64-linux-gnu -lmysqlclient -lzstd -lssl -lcrypto -lresolv -lm -L/home/astrocent/anaconda3/lib -lpq -lutil -lrt -ldl

[ 10%] Building CXX object progs/CMakeFiles/mstat.dir/mstat.cxx.o

cd /home/astrocent/workspace/packages/midas/build/progs && /usr/bin/c++ -DHAVE_CURL -DHAVE_FTPLIB -DHAVE_MYSQL -DHAVE_PGSQL -D_LARGEFILE64_SOURCE -I/home/astrocent/workspace/packages/midas/include -I/home/astrocent/workspace/packages/midas/mxml -I/home/astrocent/workspace/packages/midas/mscb/include -I/home/astrocent/workspace/packages/midas/mjson -I/home/astrocent/workspace/packages/midas/mvodb -I/home/astrocent/workspace/packages/midas/midasio -O2 -g -DNDEBUG -Wall -Wformat=2 -Wno-format-nonliteral -Wno-strict-aliasing -Wuninitialized -Wno-unused-function -I/usr/include/mysql -I/home/astrocent/anaconda3/include -MD -MT progs/CMakeFiles/mstat.dir/mstat.cxx.o -MF CMakeFiles/mstat.dir/mstat.cxx.o.d -o CMakeFiles/mstat.dir/mstat.cxx.o -c /home/astrocent/workspace/packages/midas/progs/mstat.cxx

[ 10%] Linking CXX executable mstat

/usr/bin/c++ -O2 -g -DNDEBUG CMakeFiles/mstat.dir/mstat.cxx.o -o mstat ../libmidas.a /usr/lib/x86_64-linux-gnu/libz.so -lcurl -L/usr/lib/x86_64-linux-gnu -lmysqlclient -lzstd -lssl -lcrypto -lresolv -lm -L/home/astrocent/anaconda3/lib -lpq -lutil -lrt -ldl

[ 10%] Building CXX object progs/CMakeFiles/mdump.dir/mdump.cxx.o