| ID | Date | Author | Topic | Subject |

| 2169 | 19 May 2021 | Francesco Renga | Suggestion | MYSQL logger |

Dear all,

I'm trying to use logging to a MySQL DB. Following the instructions on the Wiki, I recompiled MIDAS after installing MySQL, and cmake with NEED_MYSQL=1 can find it:

-- MIDAS: Found MySQL version 8.0.23

Then I compiled my frontend (cmake with no options + make) and ran it, but in the ODB I cannot find the tree for MySQL. I only have:

Logger/Runlog/ASCII

while I would also expect:

Logger/Runlog/SQL

What could be missing? Should I add something to the CMakeLists.txt file, or run cmake with some option?

Thank you,

Francesco |

| 2168 | 14 May 2021 | Stefan Ritt | Bug Report | mhttpd WebServer ODBTree initialization |

> Thanks a lot, this solved my issue!

... or we should turn IPv6 off by default, since not many people use this right now. |

| 2167 | 13 May 2021 | Mathieu Guigue | Bug Report | mhttpd WebServer ODBTree initialization |

> > It looks like mhttpd managed to bind to the IPv4 address (localhost), but not the IPv6 address (::1). If you don't need it, try setting "/Webserver/Enable IPv6" to false.

>

> We had this issue already several times. This info should be put into the documentation at a prominent location.

>

> Stefan

Thanks a lot, this solved my issue! |

| 2166 | 12 May 2021 | Pierre Gorel | Bug Report | History formula not correctly managed |

OS: OSX 10.14.6 Mojave

MIDAS: Downloaded from the repo in April 2021.

I have a slow control frontend doing the command/readout of an MPOD HV/LV. Since the currents I am reading out are in nA (after updating snmp), I wanted to multiply the values by 1e9.

I noticed the new "Formula" field (introduced in 2019, it seems) in place of the "Factor/Offset" I was used to. None of my entries seem to be accepted (after hitting save and coming back, the field is empty).

Looking in the ODB in "/History/Display/MPOD/HV (Current)/", the field "Formula" is a string of size 32 (even though I have multiple plots in that display). I noticed that the fields "Factor" and "Offset" still exist and are arrays of the correct size. However, changing their values does not seem to do anything.

Deleting "Formula" by hand and creating a new field as an array of strings (of the correct length) seems to do the trick: the formula is displayed in the History display config, and correctly used. |

| 2165 | 12 May 2021 | Stefan Ritt | Bug Report | mhttpd WebServer ODBTree initialization |

> It looks like mhttpd managed to bind to the IPv4 address (localhost), but not the IPv6 address (::1). If you don't need it, try setting "/Webserver/Enable IPv6" to false.

We had this issue already several times. This info should be put into the documentation at a prominent location.

Stefan |

| 2164 | 12 May 2021 | Ben Smith | Bug Report | mhttpd WebServer ODBTree initialization |

> midas_hatfe_1 | Mongoose web server listening on http address "localhost:8080", passwords OFF, hostlist OFF

> midas_hatfe_1 | [mhttpd,ERROR] [mhttpd.cxx:19160:mongoose_listen,ERROR] Cannot mg_bind address "[::1]:8080"

It looks like mhttpd managed to bind to the IPv4 address (localhost), but not the IPv6 address (::1). If you don't need it, try setting "/Webserver/Enable IPv6" to false. |

| 2163 | 12 May 2021 | Mathieu Guigue | Bug Report | mhttpd WebServer ODBTree initialization |

Hi,

Using midas version 12-2020, I am trying to run mhttpd from within a docker container using docker-compose.

Starting from an empty ODB, I simply run `mhttpd` and this is the output I have:

midas_hatfe_1 | <Warning> Starting mhttpd...

midas_hatfe_1 | [mhttpd,INFO] ODB subtree /Runinfo corrected successfully

midas_hatfe_1 | MVOdb::SetMidasStatus: Error: MIDAS db_find_key() at ODB path "/WebServer/Host list" returned status 312

midas_hatfe_1 | Mongoose web server will not use password protection

midas_hatfe_1 | Mongoose web server will not use the hostlist, connections from anywhere will be accepted

midas_hatfe_1 | Mongoose web server listening on http address "localhost:8080", passwords OFF, hostlist OFF

midas_hatfe_1 | [mhttpd,ERROR] [mhttpd.cxx:19160:mongoose_listen,ERROR] Cannot mg_bind address "[::1]:8080"

According to the documentation, the WebServer tree should be created automatically when mhttpd starts, but apparently it is not, since mhttpd doesn't find the entry "/WebServer/Host list".

If I create it by hand (using "create STRING /WebServer/Host list"), I still get the error message that mhttpd didn't bind properly to local port 8080.

I am not sure what is wrong, as mhttpd works perfectly well in this exact container with midas 03-2020.

Any idea what changed that prevents it from running in these containers?

Thanks very much for your help.

Cheers

Mathieu |

| 2162 | 10 May 2021 | Stefan Ritt | Bug Report | modbselect trigget hotlink |

Thanks for reporting that bug, I fixed it in the last commit.

Stefan |

| 2161 | 07 May 2021 | Zaher Salman | Bug Report | modbselect trigget hotlink |

It seems that a modbselect triggers a "change" of an ODB key that has a hot link. This happens on load (or whenever the custom page is reloaded); otherwise it behaves as expected, i.e. no change unless the modbselect is actually changed. Is this the intended behaviour? Can this be modified? |

| 2160 | 06 May 2021 | Ben Smith | Info | New feature in odbxx that works like db_check_record() |

For those unfamiliar, odbxx is the interface that looks like a C++ map, but automatically syncs with the ODB - https://midas.triumf.ca/MidasWiki/index.php/Odbxx.

I've added a new feature that is similar to the existing odb::connect() function, but works like the old db_check_record(). The new odb::connect_and_fix_structure() function:

- keeps the existing value of any keys that are in both the ODB and your code

- creates any keys that are in your code but not yet in the ODB

- deletes any keys that are in the ODB but not your code

- updates the order of keys in the ODB to match your code

This will hopefully make it easier to automate ODB structure changes when you add/remove keys from a frontend.

The new feature is currently in the develop branch, and should be included in the next release. |

| 2159 | 06 May 2021 | Stefan Ritt | Forum | m is not defined error |

Thanks for reporting and pointing to the right location.

I fixed and committed it.

Best,

Stefan |

| 2158 | 05 May 2021 | Zaher Salman | Forum | m is not defined error |

We had the same issue here, which comes from mhttpd.js line 2395 in the current git version. This seems to happen mostly when an alarm is triggered or when there is an error message.

Anyway, the easiest solution for us was to define m at the beginning of the mhttpd_message function:

let m;

and replace line 2395 with

if (m !== undefined) {

> > I see this mhttpd error starting MSL-script:

> > Uncaught (in promise) ReferenceError: m is not defined

> > at mhttpd_message (VM2848 mhttpd.js:2304)

> > at VM2848 mhttpd.js:2122

>

> your line numbers do not line up with my copy of mhttpd.js. what version of midas

> do you run?

>

> please give me the output of odbedit "ver" command (GIT revision, looks like this:

> GIT revision: Wed Feb 3 11:47:02 2021 -0800 - midas-2020-08-a-84-g78d18b1c on

> branch feature/midas-2020-12).

>

> same info is in the midas "help" page (GIT revision).

>

> to decipher the git revision string:

>

> midas-2020-08-a-84-g78d18b1c means:

> is commit 78d18b1c

> which is 84 commits after git tag midas-2020-08-a

>

> "on branch feature/midas-2020-12" confirms that I have the midas-2020-12 pre-

> release version without having to do all the decoding above.

>

> if you also have "-dirty" it means you changed something in the source code

> and warranty is voided. (just joking! we can debug even modified midas source

> code)

>

> K.O. |

| 2157 | 29 Apr 2021 | Pierre-Andre Amaudruz | Suggestion | Time zone selection for web page |

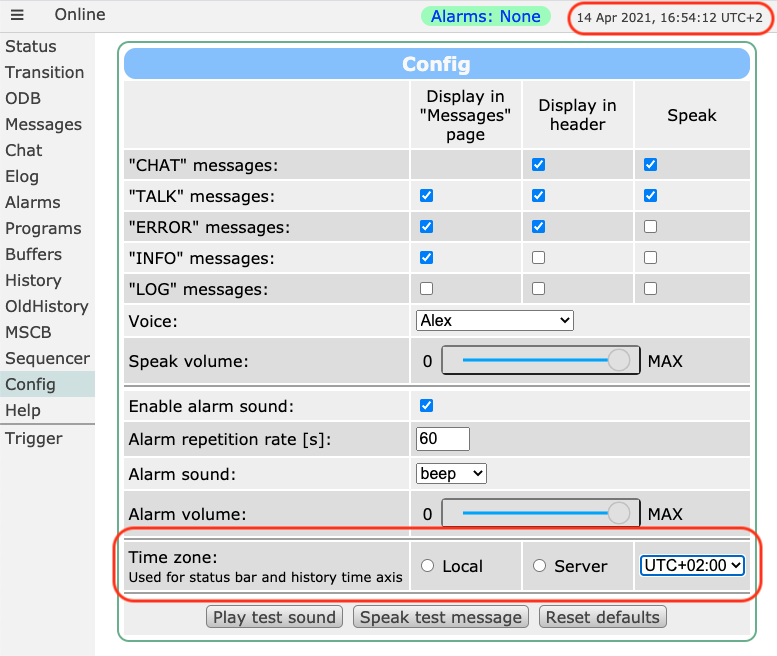

> > The new history as well as the clock in the web page header show the local time

> > of the user's computer running the browser.

> > Would it be possible to make it either always use the time zone of the Midas

> > server, or make it selectable from the config page?

> > It's not ideal trying to relate error messages from the midas.log to history

> > plots if the time stamps don't match.

>

> I implemented a new row in the config page to select the time zone.

>

> "Local": Time zone where the browser runs

> "Server": Time zone where the midas server runs (you have to update mhttpd for that)

> "UTC+X": Any other time zone

>

> The setting affects both the status header and the history display.

>

> I spent quite some time with "named" time zones like "PST" "EST" "CEST", but the

> support for that is not that great in JavaScript, so I decided to go with simple

> UTC+X. Hope that's ok.

>

> Please give it a try and let me know if it's working for you.

>

> Best,

> Stefan

Hi Stefan,

This is great, the UTC+x is perfect, thank you.

PAA |

| 2156 | 26 Apr 2021 | Zaher Salman | Suggestion | embed modbvalue in SVG |

I found a way to embed modbvalue into an SVG:

<text x="100" y="100" font-size="30rem">

Run=<tspan class="modbvalue" data-odb-path="/Runinfo/Run number"></tspan>

</text>

This seems to behave better than the suggestion below.

> You can't really embed it, but you can overlay it. You tag the SVG with a

> "relative" position and then move the modbvalue with an "absolute" position over

> it:

>

> <svg style="position:relative" width="400" height="100">

> <rect width="300" height="100" style="fill:rgb(255,0,0);stroke-width:3;stroke:rgb(0,0,0)" />

> <div class="modbvalue" style="position:absolute;top:50px;left:50px" data-odb-path="/Runinfo/Run number"></div>

> </svg> |

| 2155 | 14 Apr 2021 | Stefan Ritt | Bug Report | Minor bug: Change all time axes together doesn't work with +- buttons |

> Version: release/midas-2020-12

>

> In the new history display, the checkbox "Change all time axes together" works

> well with the mouse-based zoom, but does not apply to the +- buttons.

Fixed in current commit.

Stefan |

| 2154 | 14 Apr 2021 | Stefan Ritt | Bug Report | Time shift in history CSV export |

I finally found some time to fix this issue in the latest commit. Please update and check if it's working for you.

Stefan |

| 2153 | 14 Apr 2021 | Stefan Ritt | Info | INT64/UINT64/QWORD not permitted in ODB and history... Change of TID_xxx data types |

> These 64-bit data types do not work with ODB and they do not work with the MIDAS history.

They were never meant to work with the history. They were primarily implemented to put large 64-bit data words into midas banks. We have not yet had a request to put these values into the ODB.

Once such a request comes, we can address this.

Stefan |

| 2152 | 14 Apr 2021 | Stefan Ritt | Suggestion | Time zone selection for web page |

> The new history as well as the clock in the web page header show the local time

> of the user's computer running the browser.

> Would it be possible to make it either always use the time zone of the Midas

> server, or make it selectable from the config page?

> It's not ideal trying to relate error messages from the midas.log to history

> plots if the time stamps don't match.

I implemented a new row in the config page to select the time zone.

"Local": Time zone where the browser runs

"Server": Time zone where the midas server runs (you have to update mhttpd for that)

"UTC+X": Any other time zone

The setting affects both the status header and the history display.

I spent quite some time with "named" time zones like "PST", "EST", "CEST", but the support for that is not that great in JavaScript, so I decided to go with simple UTC+X. Hope that's ok.

Please give it a try and let me know if it's working for you.

Best,

Stefan |

| Attachment 1: Screenshot_2021-04-14_at_16.54.12_.png

| 2151 | 13 Apr 2021 | Stefan Ritt | Forum | Client gets immediately removed when using a script button. |

> I have followed your suggestions and the program still stops immediately. My status as returned from "cm_yield(100)" is always 412 (SS_TIMEOUT) which is fine.

> The issue is that, when run with the script button, the do-while loop stops immediately because !ss_kbhit() always evaluates to FALSE.

>

> My temporary solution has been to let the loop run forever :)

Ahh, it could be that ss_kbhit() misbehaves if there is no keyboard, i.e. when the program is started in the background as a script.

We never had this issue before, since all "standard" midas programs like mlogger, mhttpd etc. also use ss_kbhit() and they can be started in the background via the "-D" flag, but maybe stdin is handled differently in that case.

So just remove the ss_kbhit(), but keep the break, so that you can still stop your program via the web page, like this:

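#include <stdio.h>
#include "midas.h"

int main() {
   /* connect to the default experiment as client "logic_controller" */
   int status = cm_connect_experiment("", "", "logic_controller", NULL);
   if (status != CM_SUCCESS)
      return 1;

   do {
      /* serve ODB hotlinks, RPC requests etc. for up to 100 ms */
      status = cm_yield(100);
      printf("cm_yield returned %d\n", status);

      /* leave the loop when the program is told to shut down (e.g. from the web page) */
      if (status == SS_ABORT || status == RPC_SHUTDOWN)
         break;
   } while (TRUE);

   cm_disconnect_experiment();
   return 0;
} |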

| 2150 | 13 Apr 2021 | Konstantin Olchanski | Info | bk_init32a data format |

Until commit a4043ceacdf241a2a98aeca5edf40613a6c0f575 today, mdump mostly did not work with bank32a data.

K.O.

> In April 4th 2020 Stefan added a new data format that fixes the well known problem with alternating banks being

> misaligned against 64-bit addresses. (cannot find announcement on this forum. midas commit

> https://bitbucket.org/tmidas/midas/commits/541732ea265edba63f18367c7c9b8c02abbfc96e)

>

> This brings the number of midas data formats to 3:

>

> bk_init: bank_header_flags set to 0x0000001 (BANK_FORMAT_VERSION)

> bk_init32: bank_header_flags set to 0x0000011 (BANK_FORMAT_VERSION | BANK_FORMAT_32BIT)

> bk_init32a: bank_header_flags set to 0x0000031 (BANK_FORMAT_VERSION | BANK_FORMAT_32BIT | BANK_FORMAT_64BIT_ALIGNED);

>

> TMEvent (midasio and manalyzer) support for "bk_init32a" format added today (commit

> https://bitbucket.org/tmidas/midasio/commits/61b7f07bc412ea45ed974bead8b6f1a9f2f90868)

>

> TMidasEvent (rootana) support for "bk_init32a" format added today (commit

> https://bitbucket.org/tmidas/rootana/commits/3f43e6d30daf3323106a707f6a7ca2c8efb8859f)

>

> ROOTANA should be able to handle bk_init32a() data now.

>

> TMFE MIDAS c++ frontend switched from bk_init32() to bk_init32a() format (midas commit

> https://bitbucket.org/tmidas/midas/commits/982c9c2f8b1e329891a782bcc061d4c819266fcc)

>

> K.O. |