| ID |

Date |

Author |

Topic |

Subject |

|

2646

|

09 Dec 2023 |

Pavel Murat | Forum | how to fix forgotten password ? | [Dear All, I apologize in advance for spamming.]

1) I tried to log in to the forum from the lab computer and realized

that I forgot my password

2) I tried to reset the password and found that when registering

I mistyped my email address, having typed '.giv' instead of '.gov'

in the domain name, so the recovery email went nowhere

(still have one session open on the laptop so can post this question)

- how do I get my email address fixed so I'd be able to reset the password?

-- many thanks, Pasha |

|

2647

|

09 Dec 2023 |

Pavel Murat | Forum | history plotting: where to convert the ADC readings into temps/voltages? | to plot time dependencies of the monitored detector parameters, say, voltages or temperatures,

one needs to convert the corresponding ADC readings into floats.

One could think of two ways of doing that:

- one can perform the ADC-->T or ADC-->V conversion in the MIDAS frontend,

store their [float] values in the data bank, and plot precalculated parameters vs time

- one can also store in the data bank the ADC readings, which are typically shorts,

and convert them into floats (V's or T's) at the plotting time

The first approach doubles the storage space requirements, and for the second one I couldn't find the place where

one would do the conversion if the 16-bit ADC readings were stored.
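For illustration, the first approach (conversion in the frontend before filling a float bank) could look roughly like the sketch below. It assumes a simple linear calibration; the `Calibration` struct and both function names are hypothetical and are not part of the MIDAS API.

```cpp
#include <cstdint>
#include <vector>

// Hypothetical linear calibration: value = gain * adc + offset.
struct Calibration {
  float gain;    // physical units per ADC count (assumed)
  float offset;  // physical value at ADC == 0 (assumed)
};

// Convert one raw 16-bit ADC reading into a float (a voltage or a temperature).
inline float adc_to_value(int16_t adc, const Calibration& cal) {
  return cal.gain * static_cast<float>(adc) + cal.offset;
}

// Convert a whole set of readings, e.g. before copying them into a float data bank.
inline std::vector<float> convert_bank(const std::vector<int16_t>& raw,
                                       const Calibration& cal) {
  std::vector<float> out;
  out.reserve(raw.size());
  for (int16_t adc : raw) out.push_back(adc_to_value(adc, cal));
  return out;
}
```

The same two functions could equally be applied at plotting time if the raw shorts are stored instead; only the place where they are called changes.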

I'm sure this issue has been thought about, so what is the "recommended MIDAS way" of performing

the ADC -> monitored_number conversion when making MIDAS history plots ?

-- many thanks, regards, Pasha |

|

2652

|

11 Dec 2023 |

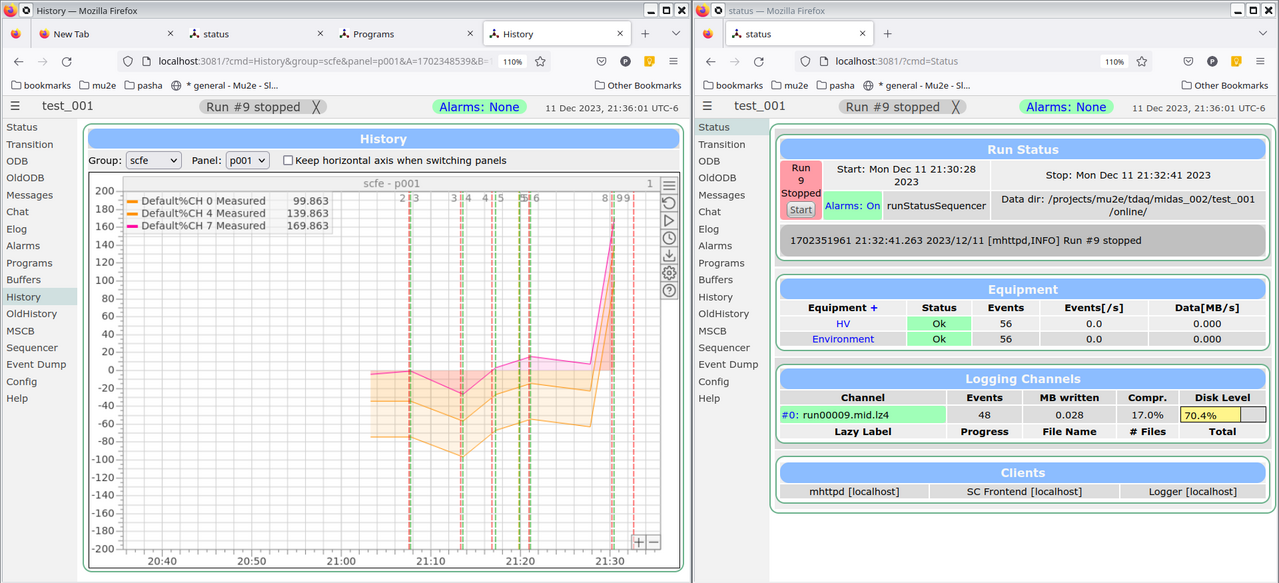

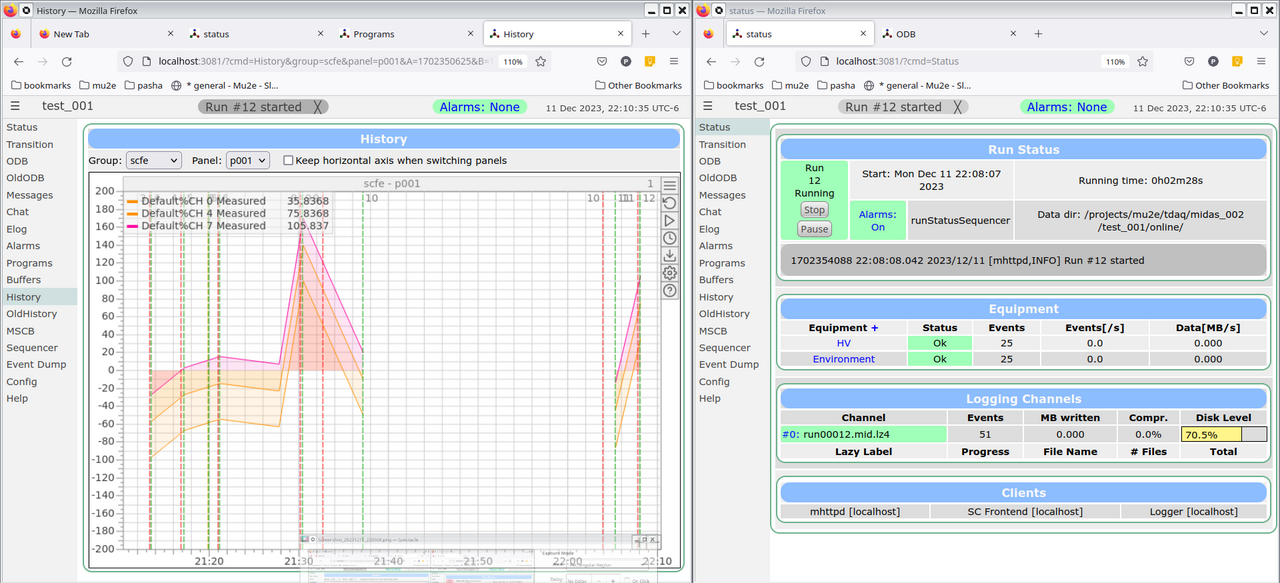

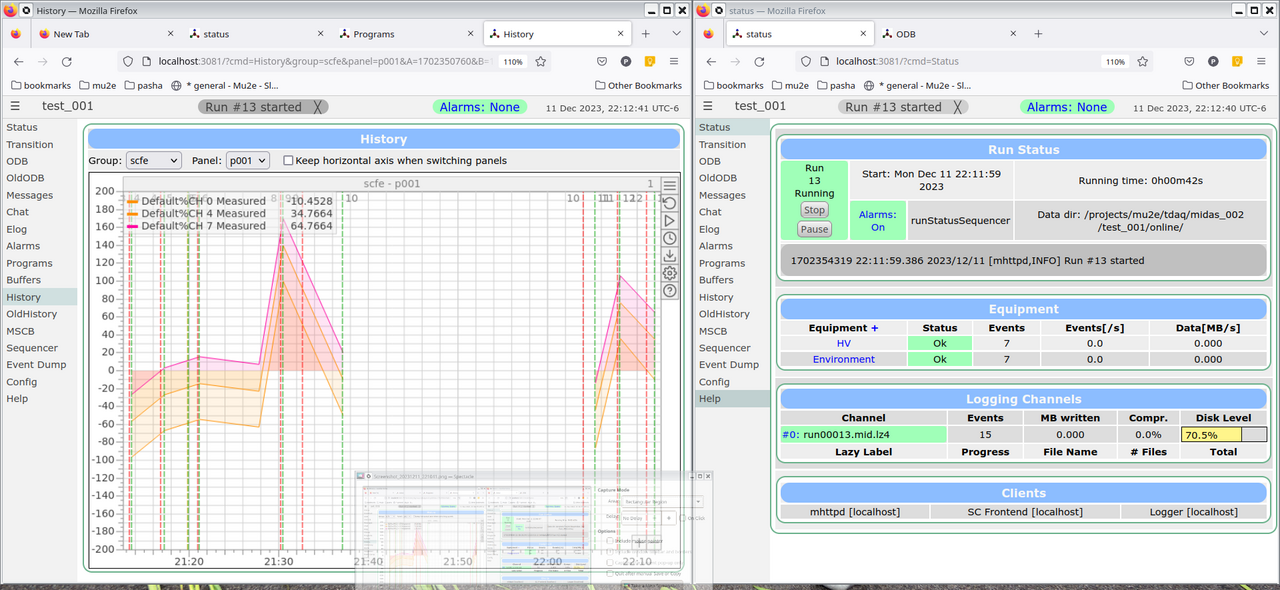

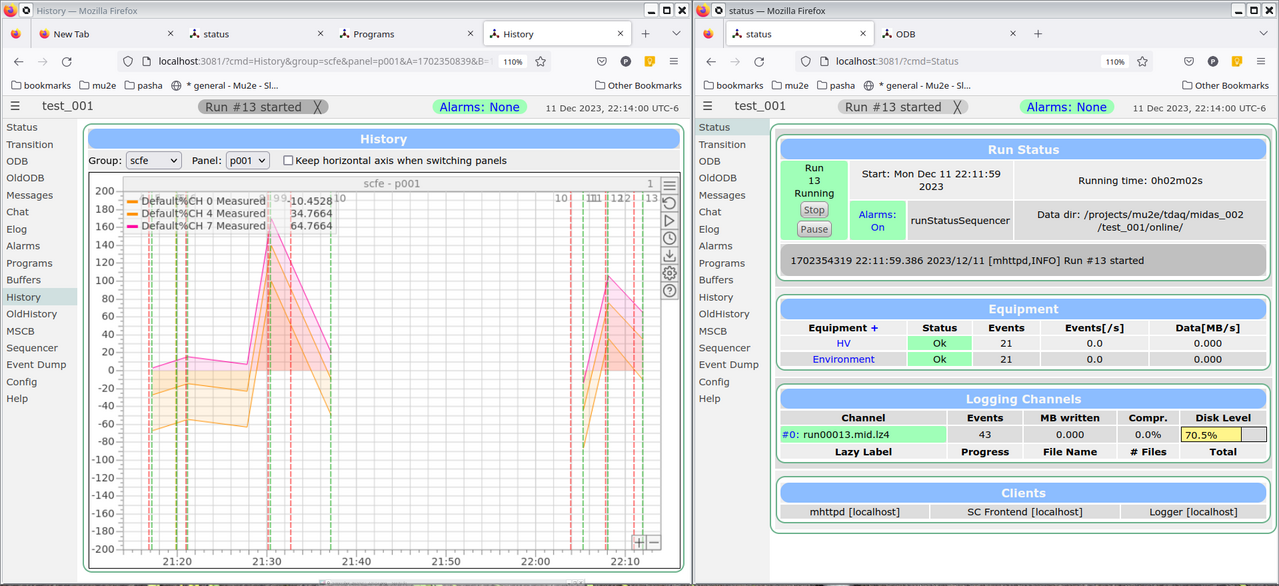

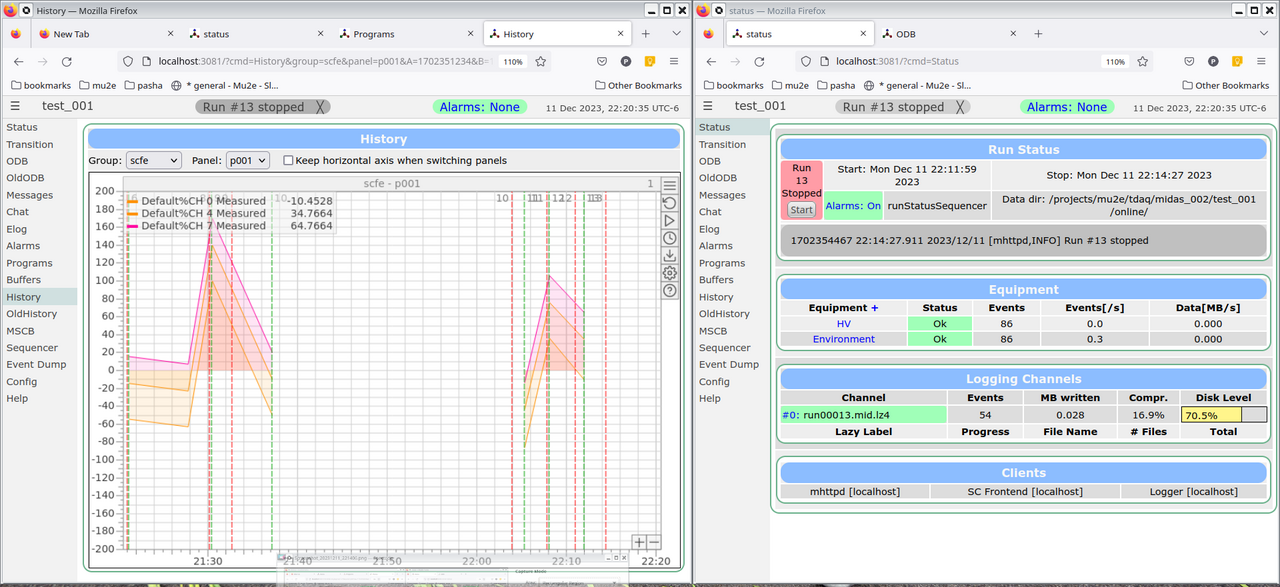

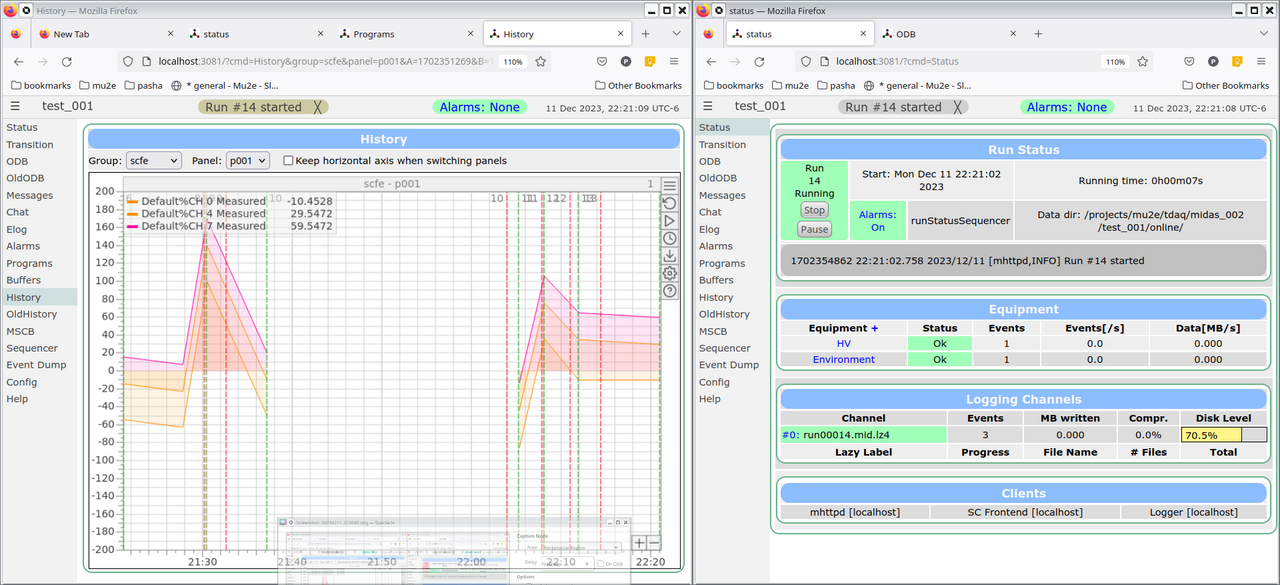

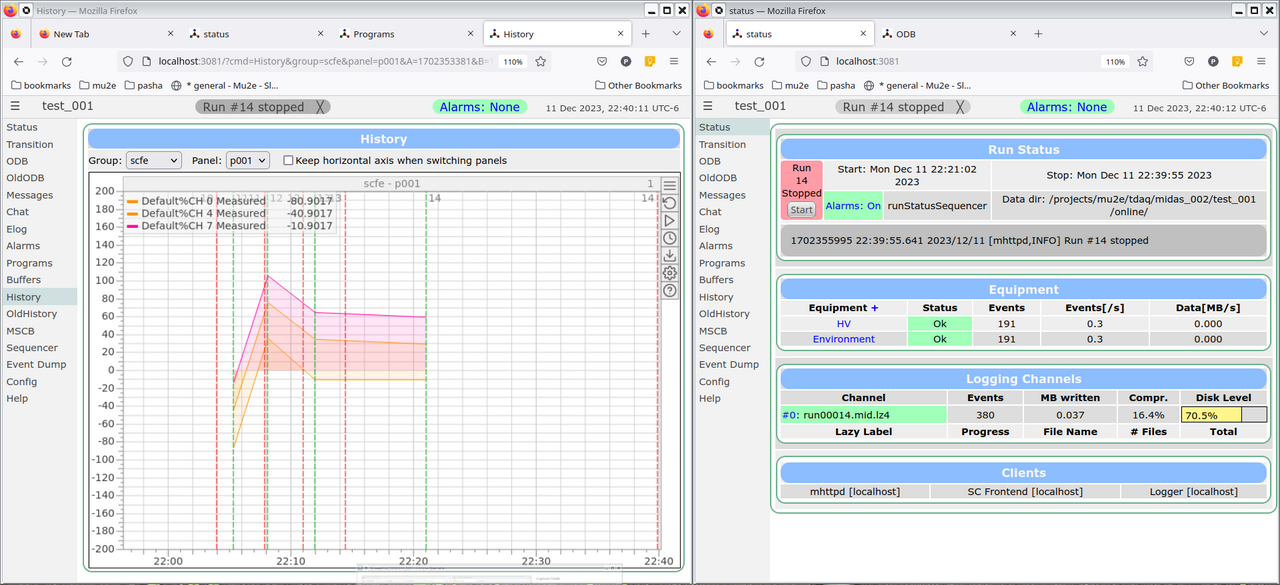

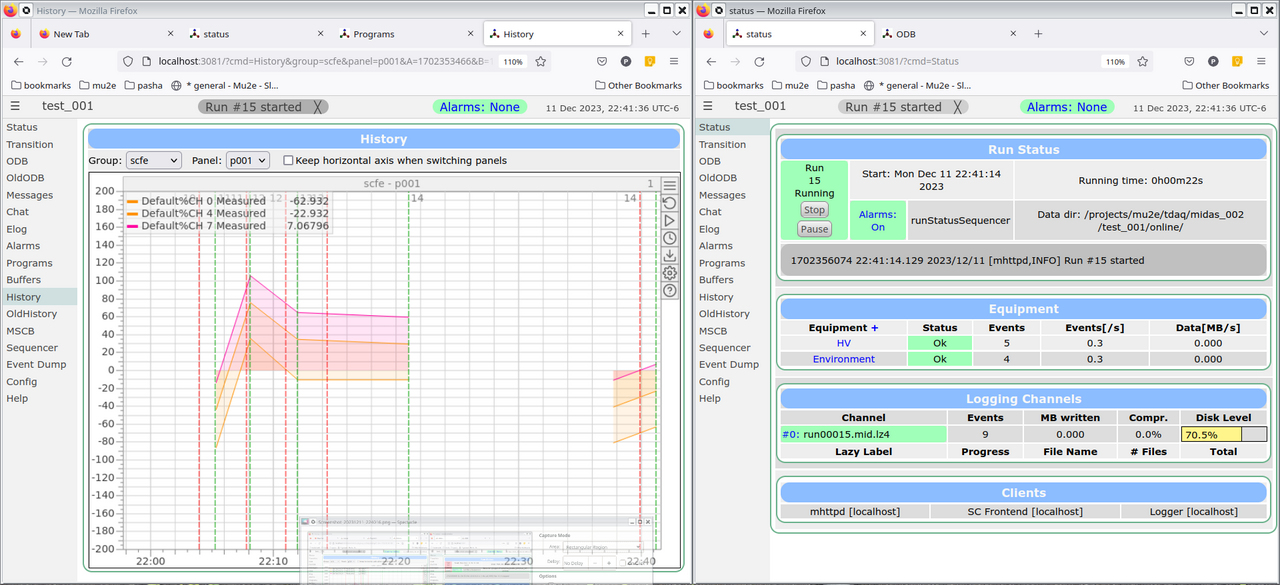

Pavel Murat | Forum | the logic of handling history variables ? | Dear MIDAS developers,

I'm trying to understand handling of the history (slow control) variables in MIDAS,

and it seems that the behavior I'm observing is somewhat counterintuitive.

Most likely, I just do not understand the implemented logic.

As it is rather difficult to report on the behavior of an interactive program,

I'll describe what I'm doing and illustrate the report with the series of attached

screenshots showing the history plots and the status of the run control at different

consecutive points in time.

Starting with the landscape:

- I'm running MIDAS, git commit=30a03c4c (the latest, as of today).

- I have built the midas/examples/slowcont frontend with the following modifications.

(the diffs are enclosed below):

1) the frequency of the history updates is increased from 60sec/10sec to 6sec/1sec

and, hoping to get continuous updates, I replaced (RO_RUNNING | RO_TRANSITIONS)

with RO_ALWAYS.

2) for convenience of debugging, midas/drivers/nulldrv.cxx is replaced with its clone,

which instead of returning zeroes in each channel, generates a sine curve:

V(t) = 100*sin(pi*t/60)+10*channel

- an active channel in /Logger/History is chosen to be FILE

- /History/LoggerHistoryChannel is also set to FILE

- I'm running mlogger and modified, as described, 'scfe' frontend from midas/examples/slowcont

- the attached history plots include three (0,4 and 7) HV:MEASURED channels

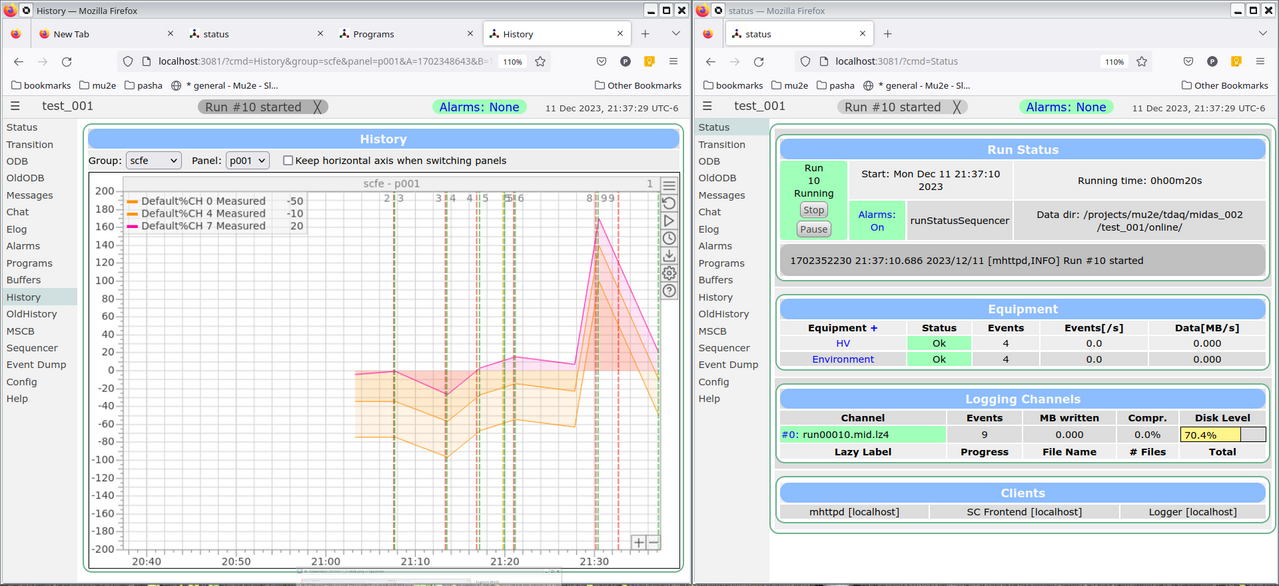

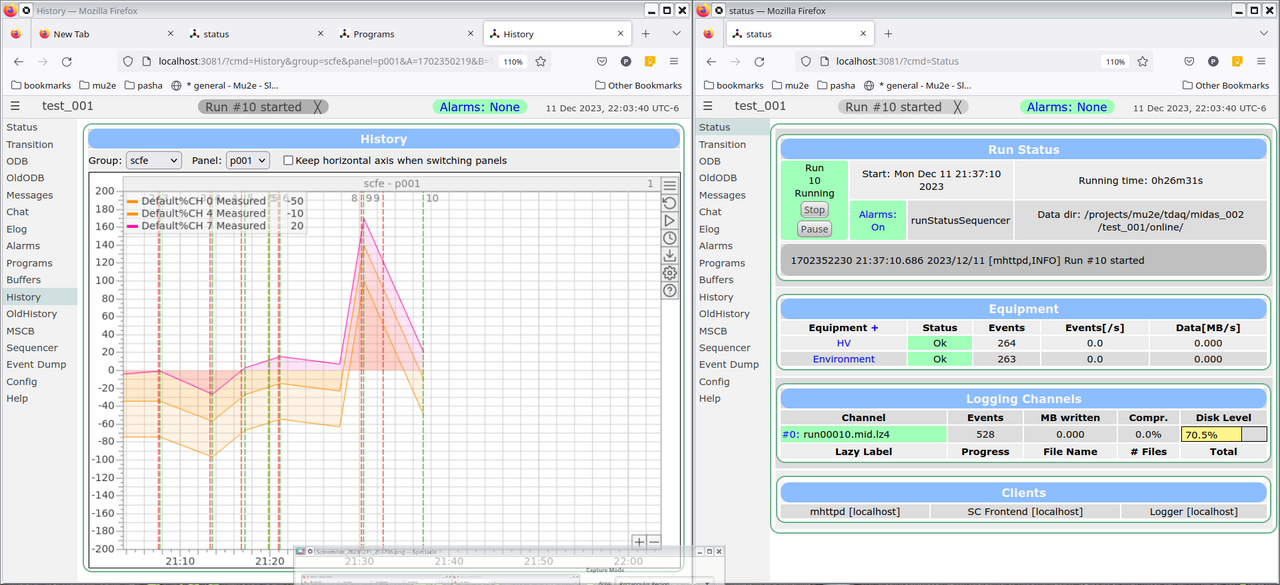

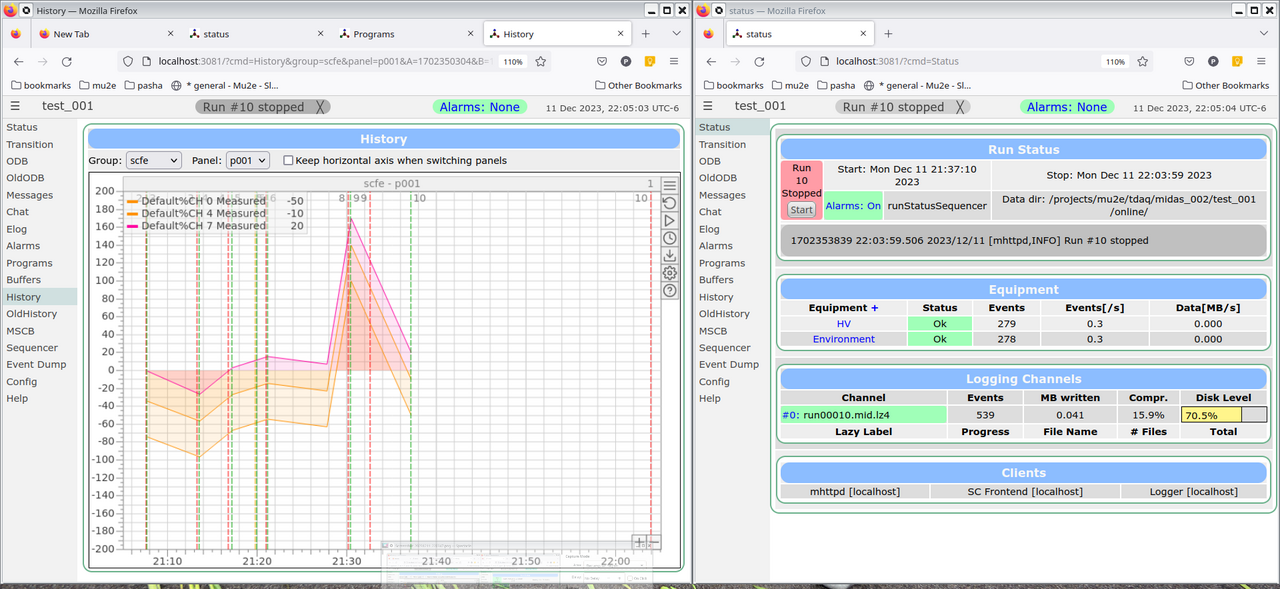

Now, the observations:

1) the history plots are updated only when a new run starts, no matter how hard

I'm trying to update them by clicking on various buttons.

The attached screenshots show the timing sequence of the run control states

(with the times printed) and the corresponding history plots.

The "measured voltages" change only when the next run starts - the voltage graphs

break only at the times corresponding to the vertical green lines.

2) No matter for how long I wait within the run, the history updates are not happening.

3) if the time difference between the two run starts gets too large,

the plotted time dependence starts getting discontinuities

4) finally, if I switch the logging channel from FILE to MIDAS (activate the MIDAS

channel in /Logger/History and set /History/LoggerHistoryChannel to MIDAS),

the updates of the history plots simply stop.

MIDAS feels like a great DAQ framework, so I would appreciate any suggestion on

what I could be doing wrong. I'd also be happy to give a demo in real time

(via ZOOM/SKYPE etc).

-- much appreciate your time, thanks, regards, Pasha

------------------------------------------------------------------------------

diff --git a/examples/slowcont/scfe.cxx b/examples/slowcont/scfe.cxx

index 11f09042..c98d37e8 100644

--- a/examples/slowcont/scfe.cxx

+++ b/examples/slowcont/scfe.cxx

@@ -24,9 +24,10 @@

#include "mfe.h"

#include "class/hv.h"

#include "class/multi.h"

-#include "device/nulldev.h"

#include "bus/null.h"

+#include "nulldev.h"

+

/*-- Globals -------------------------------------------------------*/

/* The frontend name (client name) as seen by other MIDAS clients */

@@ -74,11 +75,11 @@ EQUIPMENT equipment[] = {

0, /* event source */

"FIXED", /* format */

TRUE, /* enabled */

- RO_RUNNING | RO_TRANSITIONS, /* read when running and on transitions */

- 60000, /* read every 60 sec */

+ RO_ALWAYS, /* read when running and on transitions */

+ 6000, /* read every 6 sec */

0, /* stop run after this event limit */

0, /* number of sub events */

- 10000, /* log history at most every ten seconds */

+ 1000, /* log history at most every one second */

"", "", ""} ,

cd_hv_read, /* readout routine */

cd_hv, /* class driver main routine */

@@ -93,8 +94,8 @@ EQUIPMENT equipment[] = {

0, /* event source */

"FIXED", /* format */

TRUE, /* enabled */

- RO_RUNNING | RO_TRANSITIONS, /* read when running and on transitions */

- 60000, /* read every 60 sec */

+ RO_ALWAYS, /* read when running and on transitions */

+ 6000, /* read every 6 sec */

0, /* stop run after this event limit */

0, /* number of sub events */

1, /* log history every event as often as it changes (max 1 Hz) */

------------------------------------------------------------------------------

[test_001]$ diff ../midas/examples/slowcont/nulldev.cxx ../midas/drivers/device/nulldev.cxx

13d12

< #include <math.h>

150,154c149,150

< if (channel < info->num_channels) {

< // *pvalue = info->array[channel];

< time_t t = time(NULL);;

< *pvalue = 100*sin(M_PI*t/60)+10*channel;

< }

---

> if (channel < info->num_channels)

> *pvalue = info->array[channel];

------------------------------------------------------------------------------ |

| Attachment 1: Screenshot_20231211_213608.png

|

|

| Attachment 2: Screenshot_20231211_213736.png

|

|

| Attachment 3: Screenshot_20231211_220347.png

|

|

| Attachment 4: Screenshot_20231211_220508.png

|

|

| Attachment 5: Screenshot_20231211_221041.png

|

|

| Attachment 6: Screenshot_20231211_221252.png

|

|

| Attachment 7: Screenshot_20231211_221406.png

|

|

| Attachment 8: Screenshot_20231211_222042.png

|

|

| Attachment 9: Screenshot_20231211_222114.png

|

|

| Attachment 10: Screenshot_20231211_224016.png

|

|

| Attachment 11: Screenshot_20231211_224141.png

|

|

|

2656

|

12 Dec 2023 |

Pavel Murat | Forum | the logic of handling history variables ? | Hi Stefan, thanks a lot for taking time to reproduce the issue!

Here comes the resolution, and of course, it was something deeply trivial :

the definition of the HV equipment in midas/examples/slowcont/scfe.cxx has

the history logging time in seconds, however the comment suggests milliseconds (see below),

and for a few days I believed the comment (:smile:)

Easy to fix.
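The unit mix-up can be sketched with a small stand-in for the two timing fields. This is only an illustration under the units described above (read period in milliseconds, history interval in seconds); `TimingSketch` and the helper names are hypothetical and this is not the MIDAS EQUIPMENT_INFO structure.

```cpp
#include <cstdint>

// Hypothetical stand-in for the two timing fields discussed above.
struct TimingSketch {
  int32_t read_period_ms;    // readout period, in milliseconds
  int32_t history_period_s;  // minimum spacing of history entries, in seconds
};

constexpr int32_t seconds_to_ms(int32_t s) { return s * 1000; }

// Minimum spacing of history entries, in whole minutes.
constexpr int32_t history_spacing_minutes(const TimingSketch& t) {
  return t.history_period_s / 60;
}

// Intended: read every 6 s, log history at most once per second.
constexpr TimingSketch intended{seconds_to_ms(6), 1};

// As typed when trusting the comment: 1000 goes into a field counted in seconds.
constexpr TimingSketch as_typed{seconds_to_ms(6), 1000};
```

With 1000 in the history field the entries end up at least about 16 minutes apart, which would look like a history that barely updates within a run.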

Also, I think that having a sine wave displayed by midas/examples/slowcont/scfe.cxx

would make this example even more helpful.

-- thanks again, regards, Pasha

--------------------------------------------------------------------------------------------------------

EQUIPMENT equipment[] = {

{"HV", /* equipment name */

{3, 0, /* event ID, trigger mask */

"SYSTEM", /* event buffer */

EQ_SLOW, /* equipment type */

0, /* event source */

"FIXED", /* format */

TRUE, /* enabled */

RO_RUNNING | RO_TRANSITIONS, /* read when running and on transitions */

60000, /* read every 60 sec */

0, /* stop run after this event limit */

0, /* number of sub events */

10000, /* log history at most every ten seconds */ // <------------ this is 10^4 seconds, not 10 seconds

"", "", ""} ,

cd_hv_read, /* readout routine */

cd_hv, /* class driver main routine */

hv_driver, /* device driver list */

NULL, /* init string */

},

https://bitbucket.org/tmidas/midas/src/7f0147eb7bc7395f262b3ae90dd0d2af0625af39/examples/slowcont/scfe.cxx#lines-81 |

|

2667

|

10 Jan 2024 |

Pavel Murat | Forum | slow control frontends - how much do they sleep and how often their drivers are called? | Dear all,

I have implemented a number of slow control frontends which are directed to update the

history once in every 10 sec, and they do just that.

I expected that such frontends would be spending most of the time sleeping and waking up

once in ten seconds to call their respective drivers and send the data to the server.

However I observe that each frontend process consumes almost 100% of a single core CPU time

and the frontend driver is called many times per second.

Is that the expected behavior ?

So far, I couldn't find the place in the system part of the frontend code (is that the right

place to look for?) which regulates the frequency of the frontend driver calls, so I'd greatly

appreciate if someone could point me to that place.

I'm using the following commit:

commit 30a03c4c develop origin/develop Make sure line numbers and sequence lines are aligned.

-- many thanks, regards, Pasha |

|

2669

|

11 Jan 2024 |

Pavel Murat | Forum | slow control frontends - how much do they sleep and how often their drivers are called? | Hi Stefan, thanks a lot !

I just thought that for the EQ_SLOW type equipment calls to sleep() could be hidden in mfe.cxx

and handled based on the requested frequency of the history updates.

Doing the same on the user side is straightforward - the important part is to know where the

responsibility line goes (: smile :)
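A minimal sketch of what such user-side pacing might look like, using only the C++ standard library. All names here are hypothetical helpers for user code, not functions from mfe.cxx, and the slice length is an arbitrary choice.

```cpp
#include <chrono>
#include <thread>

using Clock = std::chrono::steady_clock;

// True once at least `period` has elapsed since the last readout.
inline bool readout_due(Clock::time_point now, Clock::time_point last,
                        std::chrono::milliseconds period) {
  return now - last >= period;
}

// Time left until the next readout is due, clamped to zero.
inline std::chrono::milliseconds time_until_due(Clock::time_point now,
                                                Clock::time_point last,
                                                std::chrono::milliseconds period) {
  auto elapsed = std::chrono::duration_cast<std::chrono::milliseconds>(now - last);
  return elapsed >= period ? std::chrono::milliseconds(0) : period - elapsed;
}

// Sleep for one short slice instead of spinning; the slice is kept small so that
// the calling loop stays responsive between readouts.
inline void idle_slice(Clock::time_point last, std::chrono::milliseconds period,
                       std::chrono::milliseconds max_slice) {
  auto remaining = time_until_due(Clock::now(), last, period);
  std::this_thread::sleep_for(remaining < max_slice ? remaining : max_slice);
}
```

A driver or read routine would call `readout_due()` first and fall back to `idle_slice()` when nothing is due, so the process sleeps most of the time rather than occupying a core.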

-- regards, Pasha |

|

2672

|

16 Jan 2024 |

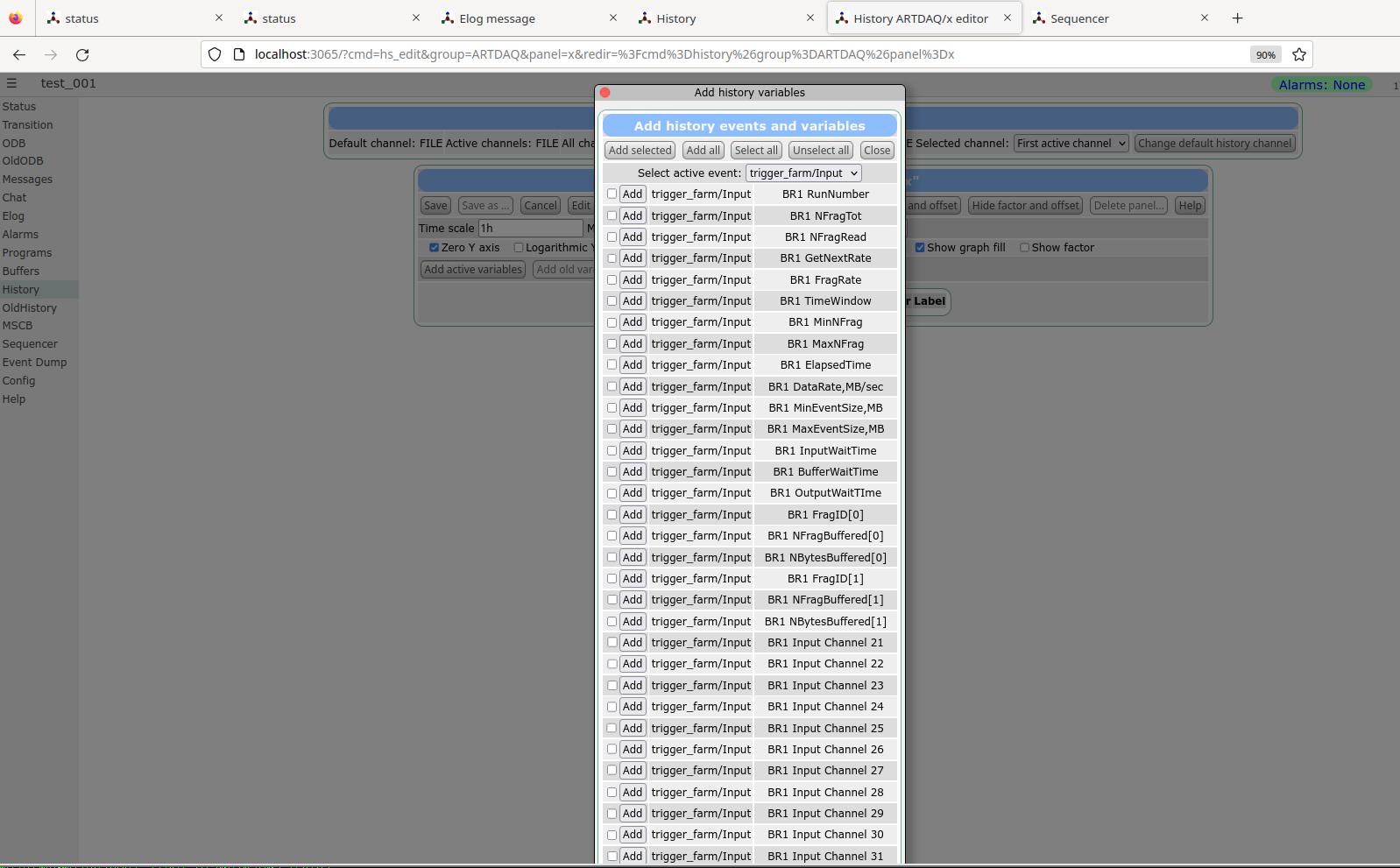

Pavel Murat | Forum | a scroll option for "add history variables" window? | Dear all,

I have a "slow control" frontend which reads out 100 slow control parameters.

When I'm interactively adding a parameter to a history plot,

a nice "Add history variable" pops up .. , but with 100 parameters in the list,

it doesn't fit within the screen...

The browser becomes passive, and I didn't find any easy way of scrolling.

In the attached example, adding a channel 32 variable becomes rather cumbersome,

not speaking about channel 99.

Two questions:

a) how do people get around this "no-scrolling" issue? - perhaps there is a workaround

b) how big of a deal is it to add a scroll bar to the "Add history variables" popup ?

- I do not know javascript myself, but could find help to contribute..

-- many thanks, regards, Pasha |

| Attachment 1: adding_a_variable_to_the_history_plot.png

|

|

|

2677

|

17 Jan 2024 |

Pavel Murat | Forum | a scroll option for "add history variables" window? | > Have you updated to the current midas version? This issue has been fixed a while ago.

Hi Stefan, thanks a lot! I pulled from the head, and the scrolling works now. -- regards, Pasha |

|

2685

|

24 Jan 2024 |

Pavel Murat | Bug Report | Warnings about ODB keys that haven't been touched for 10+ years | I don't immediately see a reason for saying that if a DB key is older than 10 yrs, it may not be valid.

However, it would be worth learning what was the logic behind choosing 10 yrs as a threshold.

If 10 is just a more or less arbitrary number, changing 10 --> 100 seems to be the way to go.

-- regards, Pasha |

|

2686

|

28 Jan 2024 |

Pavel Murat | Forum | number of entries in a given ODB subdirectory ? | Dear MIDAS experts,

- I have a detector configuration with a variable number of hardware components - FPGA's receiving data

from the detector. They are described in ODB using a set of keys ranging

from "/Detector/FPGAs/FPGA00" .... to "/Detector/FPGAs/FPGA68".

Each of "FPGAxx" corresponds to an ODB subdirectory containing parameters of a given FPGA.

The number of FPGAs in the detector configuration is variable - [independent] commissioning

of different detector subsystems involves different number of FPGAs.

In the beginning of the data taking one needs to loop over all of "FPGAxx",

parse the information there and initialize the corresponding FPGAs.

The actual question sounds rather trivial - what is the best way to implement a loop over them?

- it is certainly possible to have the number of FPGAs introduced as an additional configuration parameter,

say, "/Detector/Number_of_FPGAs", and this is what I have resorted to right now.

However, not only does that look ugly, but it also opens a way to make a mistake

and have the Number_of_FPGAs, introduced separately, different from the actual number

of FPGA's in the detector configuration.

I therefore wonder if there could be a function, smth like

int db_get_n_keys(HNDLE hdb, HNDLE hKeyParent)

returning the number of ODB keys with a common parent, or, to put it simpler,

a number of ODB entries in a given subdirectory.

And if there were a better solution to the problem I'm dealing with, knowing it might be helpful

for more than one person - configuring detector readout may require to deal with a variable number

of very different parameters.

-- many thanks, regards, Pasha |

|

2696

|

29 Jan 2024 |

Pavel Murat | Forum | number of entries in a given ODB subdirectory ? | Hi Stefan, Konstantin,

thanks a lot for your responses - they are very instructive and it is good to have them archived in the forum.

Konstantin, as Stefan already noticed, in this particular case the race condition is not really a concern.

Stefan, the ChatGPT-generated code snippet is awesome! (teach a man how to fish ...)

-- regards, Pasha |

|

2697

|

29 Jan 2024 |

Pavel Murat | Forum | a scroll option for "add history variables" window? | > If you have some ideas on how to better present 100500 history variables, please shout out!

let me share some thoughts. In the particular case which led to the original posting,

I was using a multi-threaded driver and monitoring several pieces of equipment with different device drivers.

In fact, it was not even hardware, but processes running on different nodes of a distributed computer farm.

To reduce the number of frontends, I was combining together the output of what could've been implemented

as multiple slow control drivers and got 100+ variables in the list - hence the scrolling experience.

At the same time, a list of control variables per driver could've been kept relatively short.

So if a list of control variables of a slow control frontend were split in a History GUI not only by the

equipment piece, but within the equipment "folder", also by the driver, that might help improving

the scalability of the graphical interface.

Maybe that is already implemented and it is just a matter of me not finding the right base class / example

in the MIDAS code

-- regards, Pasha |

|

2701

|

03 Feb 2024 |

Pavel Murat | Forum | number of entries in a given ODB subdirectory ? | Konstantin is right: KEY.num_values is not the same as the number of subkeys (should it be ?)

For those looking for an example in the future, I attach a working piece of code converted

from the ChatGPT example, together with its printout.

-- regards, Pasha |

| Attachment 1: a.cc

|

#include <stdio.h>

#include <stdlib.h>

#include "midas.h"

int main(int argc, char **argv) {

HNDLE hDB, hKey;

INT status, num_subkeys;

KEY key;

cm_connect_experiment (NULL, NULL, "Example", NULL);

cm_get_experiment_database(&hDB, NULL);

char dir[] = "/ArtdaqConfigurations/demo/mu2edaq09.fnal.gov";

status = db_find_key(hDB, 0, dir , &hKey);

if (status != DB_SUCCESS) {

printf("Error: Cannot find the ODB directory\n");

return 1;

}

//-----------------------------------------------------------------------------

// Iterate over all subkeys in the directory

// note: key.num_values is NOT the number of subkeys in the directory

//-----------------------------------------------------------------------------

db_get_key(hDB, hKey, &key);

printf("key.num_values: %d\n",key.num_values);

HNDLE hSubkey;

KEY subkey;

num_subkeys = 0;

for (int i=0; db_enum_key(hDB, hKey, i, &hSubkey) != DB_NO_MORE_SUBKEYS; ++i) {

db_get_key(hDB, hSubkey, &subkey);

printf("Subkey %d: %s, Type: %d\n", i, subkey.name, subkey.type);

num_subkeys++;

}

printf("number of subkeys: %d\n",num_subkeys);

// Disconnect from MIDAS

cm_disconnect_experiment();

return 0;

}

------------------------------------------------------ output:

mu2etrk@mu2edaq09:~/test_stand>test_001

key.num_values: 1

Subkey 0: BoardReader_01, Type: 15

Subkey 1: BoardReader_02, Type: 15

Subkey 2: EventBuilder_01, Type: 15

Subkey 3: EventBuilder_02, Type: 15

Subkey 4: DataLogger_01, Type: 15

Subkey 5: Dispatcher_01, Type: 15

number of subkeys: 6

---------------------------------------------------------------

|

|

2702

|

03 Feb 2024 |

Pavel Murat | Bug Report | string --> int64 conversion in the python interface ? | Dear MIDAS experts,

I gave a try to the MIDAS python interface and ran all tests available in midas/python/tests.

Two Int64 tests from test_odb.py failed (see below), everything else succeeded.

I'm using a ~ 2.5 weeks-old commit and python 3.9 on SL7 Linux platform.

commit c19b4e696400ee437d8790b7d3819051f66da62d (HEAD -> develop, origin/develop, origin/HEAD)

Author: Zaher Salman <zaher.salman@gmail.com>

Date: Sun Jan 14 13:18:48 2024 +0100

The symptoms are consistent with a string --> int64 conversion not happening

where it is needed.

Perhaps the issue has already been fixed?

-- many thanks, regards, Pasha

-------------------------------------------------------------------------------------------

Traceback (most recent call last):

File "/home/mu2etrk/test_stand/pasha_020/midas/python/tests/test_odb.py", line 178, in testInt64

self.set_and_readback_from_parent_dir("/pytest", "int64_2", [123, 40000000000000000], midas.TID_INT64, True)

File "/home/mu2etrk/test_stand/pasha_020/midas/python/tests/test_odb.py", line 130, in set_and_readback_from_parent_dir

self.validate_readback(value, retval[key_name], expected_key_type)

File "/home/mu2etrk/test_stand/pasha_020/midas/python/tests/test_odb.py", line 87, in validate_readback

self.assert_equal(val, retval[i], expected_key_type)

File "/home/mu2etrk/test_stand/pasha_020/midas/python/tests/test_odb.py", line 60, in assert_equal

self.assertEqual(val1, val2)

AssertionError: 123 != '123'

with the test on line 178 commented out, the test on the next line fails in a similar way:

Traceback (most recent call last):

File "/home/mu2etrk/test_stand/pasha_020/midas/python/tests/test_odb.py", line 179, in testInt64

self.set_and_readback_from_parent_dir("/pytest", "int64_2", 37, midas.TID_INT64, True)

File "/home/mu2etrk/test_stand/pasha_020/midas/python/tests/test_odb.py", line 130, in set_and_readback_from_parent_dir

self.validate_readback(value, retval[key_name], expected_key_type)

File "/home/mu2etrk/test_stand/pasha_020/midas/python/tests/test_odb.py", line 102, in validate_readback

self.assert_equal(value, retval, expected_key_type)

File "/home/mu2etrk/test_stand/pasha_020/midas/python/tests/test_odb.py", line 60, in assert_equal

self.assertEqual(val1, val2)

AssertionError: 37 != '37'

--------------------------------------------------------------------------- |

|

2704

|

05 Feb 2024 |

Pavel Murat | Forum | forbidden equipment names ? | Dear MIDAS experts,

I have multiple daq nodes with two data receiving FPGAs on the PCIe bus each.

The FPGAs come under the names of DTC0 and DTC1. Both FPGAs are managed by the same slow control frontend.

To distinguish FPGAs of different nodes from each other, I included the hostname to the equipment name,

so for node=mu2edaq09 the FPGA names are 'mu2edaq09:DTC0' and 'mu2edaq09:DTC1'.

The history system didn't like the names, complaining that

21:26:06.334 2024/02/05 [Logger,ERROR] [mlogger.cxx:5142:open_history,ERROR] Equipment name 'mu2edaq09:DTC1'

contains characters ':', this may break the history system

So the question is : what are the safe equipment/driver naming rules and what characters

are not allowed in them? - I think this is worth documenting, and the current MIDAS docs at

https://daq00.triumf.ca/MidasWiki/index.php/Equipment_List_Parameters#Equipment_Name

don't say much about it.
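As a stop-gap on the user side, the name could be cleaned up before it is used as an equipment name. The sketch below only replaces ':' because that is the one character named in the logger message above; the full set of unsafe characters is not known here, and `sanitize_equipment_name` is a hypothetical helper, not a MIDAS function.

```cpp
#include <string>

// Hypothetical helper: replace every ':' in an equipment name with a safer character.
// Only ':' is handled because it is the only character the logger complained about.
inline std::string sanitize_equipment_name(std::string name, char replacement = '_') {
  for (char& c : name) {
    if (c == ':') c = replacement;
  }
  return name;
}
```

For example, 'mu2edaq09:DTC1' would become 'mu2edaq09_DTC1', which still keeps the hostname visible in the name.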

-- many thanks, regards, Pasha |

|

2706

|

11 Feb 2024 |

Pavel Murat | Forum | number of entries in a given ODB subdirectory ? | > For ODB keys of type TID_KEY, the value num_values IS the number of subkeys.

this logic makes sense, however it doesn't seem to be consistent with the printout of the test example

at the end of https://daq00.triumf.ca/elog-midas/Midas/240203_095803/a.cc . The printout reports

key.num_values = 1, but the actual number of subkeys = 6, and all subkeys being of TID_KEY type

I'm certain that the ODB subtree in question was not accessed concurrently during the test.

-- regards, Pasha |

|

2717

|

19 Feb 2024 |

Pavel Murat | Forum | number of entries in a given ODB subdirectory ? | > > Hmm... is there any use case where you want to know the number of directory entries, but you will not iterate

> > over them later?

>

> I agree.

here comes the use case:

I have a slow control frontend which monitors several DAQ components - software processes.

The components are listed in the system configuration stored in ODB, a subkey per component.

Each component has its own driver, so the length of the driver list, defined by the number of components,

needs to be determined at run time.

I calculate the number of components by iterating over the list of component subkeys in the system configuration,

allocate space for the driver list, and store the pointer to the driver list in the equipment record.

The approach works, but it does require pre-calculating the number of subkeys of a given key.
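The pattern can be sketched without any ODB calls. In the sketch below `EnumFn` is a hypothetical stand-in for an enumeration call that yields the entry with a given index and reports when there are no more entries; it is not the MIDAS API. Collecting the names in one pass gives the count as a by-product, after which the driver list can be sized.

```cpp
#include <functional>
#include <string>
#include <vector>

// Hypothetical stand-in for a subkey enumeration call: fills `name` for entry
// `index` and returns false when there are no more entries.
using EnumFn = std::function<bool(int index, std::string& name)>;

// Walk all entries once and collect their names; names.size() is the entry count.
inline std::vector<std::string> collect_names(const EnumFn& next) {
  std::vector<std::string> names;
  std::string name;
  for (int i = 0; next(i, name); ++i) names.push_back(name);
  return names;
}
```

The driver list would then be allocated with `names.size()` elements (plus whatever terminator the frontend expects) and filled in a second loop over `names`.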

-- regards, Pasha |

|

2719

|

27 Feb 2024 |

Pavel Murat | Forum | displaying integers in hex format ? | Dear MIDAS Experts,

I'm having an odd problem when trying to display an integer stored in ODB on a custom

web page: the hex specifier, "%x", displays integers as if it were "%d" .

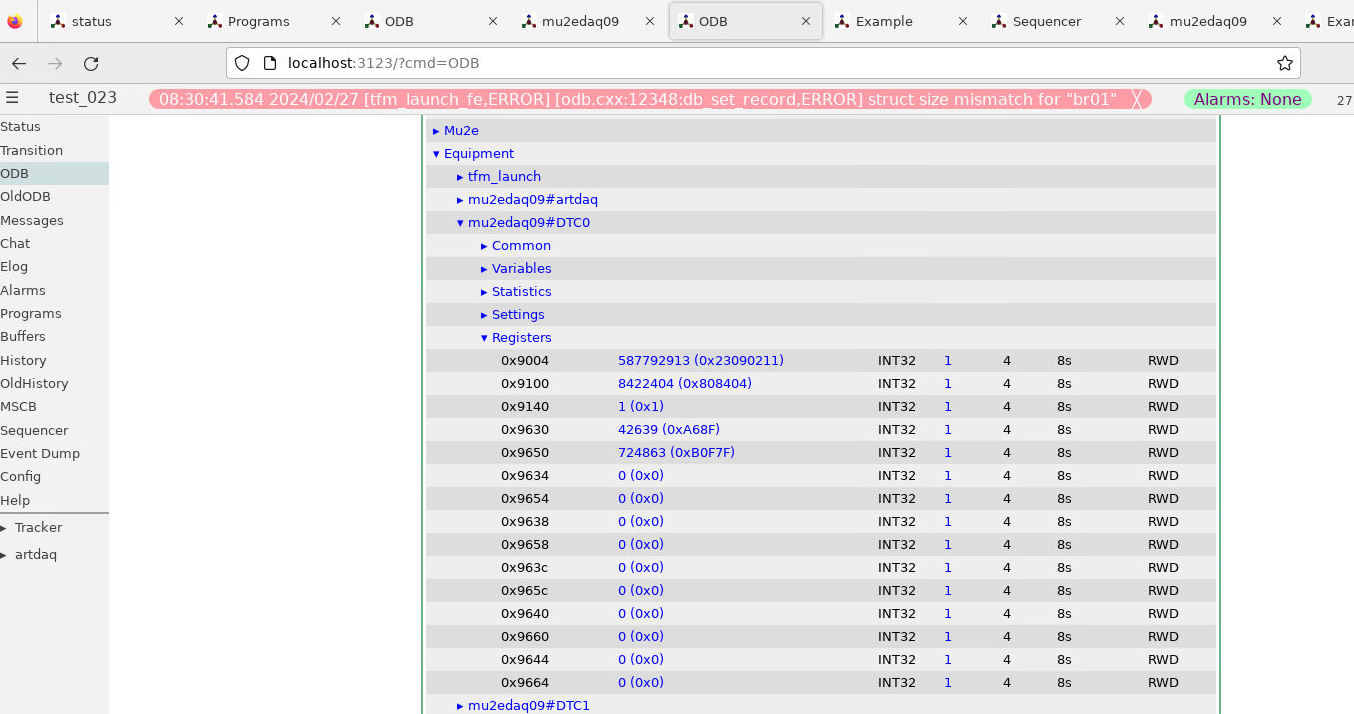

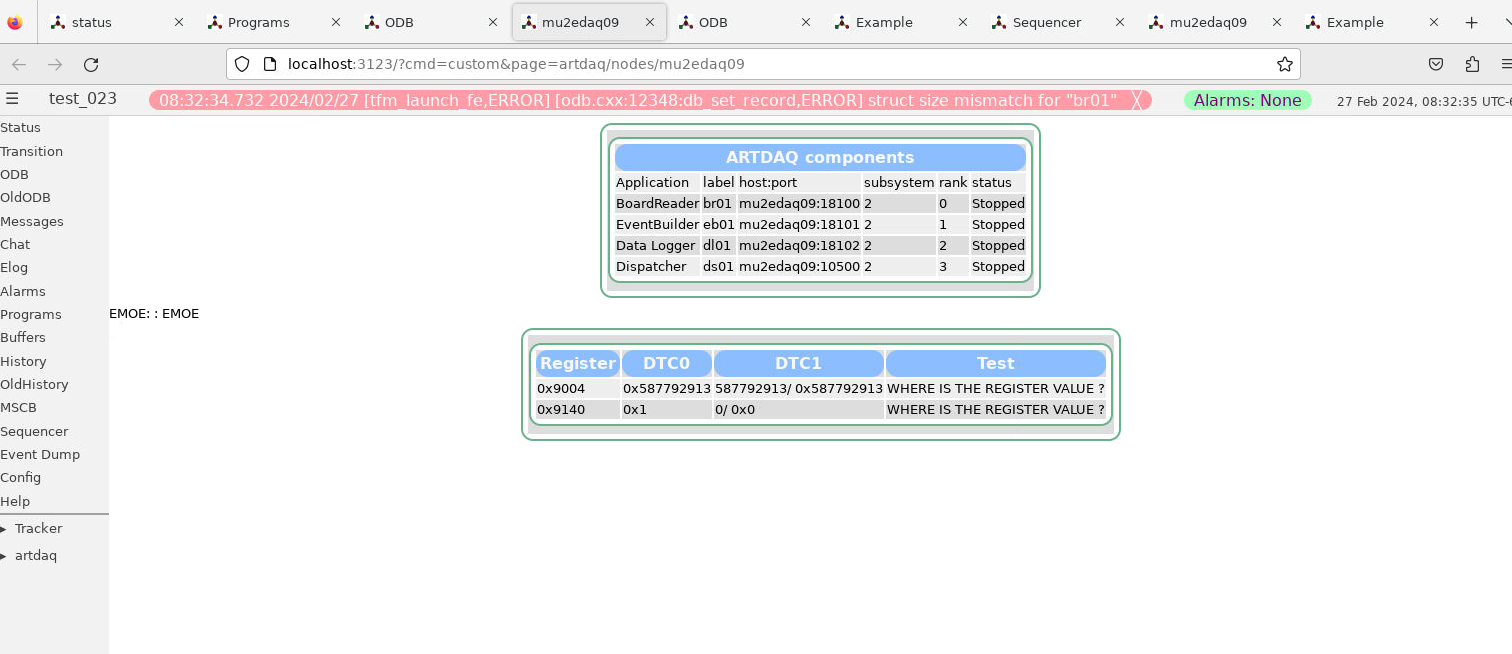

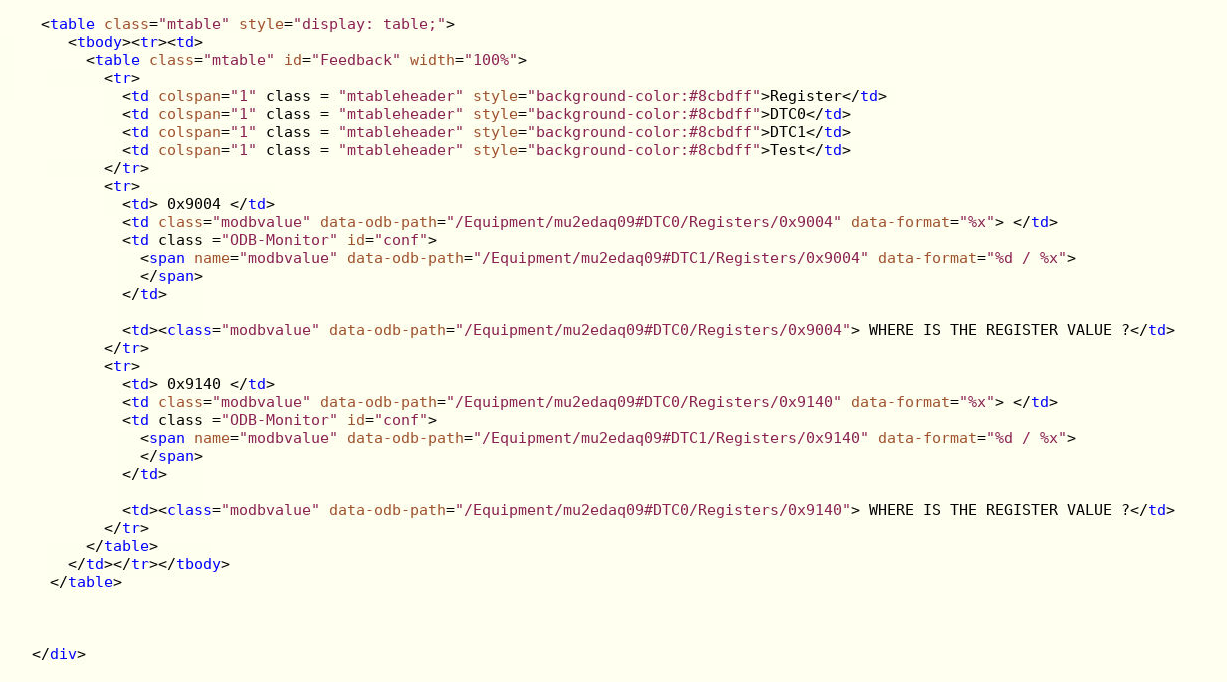

- attachment 1 shows the layout and the contents of the ODB sub-tree in question

- attachment 2 shows the web page as it is displayed

- attachment 3 shows the snippet of html/js producing the web page

I bet I'm missing something trivial - any advice is greatly appreciated!

Also, is there an equivalent of a "0x%04x" specifier to have the output formatted

into a fixed length string ?
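For reference, this is the C printf behaviour meant by the two specifiers. The sketch only shows what "%x" and "0x%04x" produce in C/C++; it makes no claim about how the custom page format strings are handled, and `format_hex` is a hypothetical helper.

```cpp
#include <cstdint>
#include <cstdio>
#include <string>

// Format a register value either as plain hex ("%x") or as a zero-padded,
// 0x-prefixed string with at least four hex digits ("0x%04x").
inline std::string format_hex(uint32_t value, bool fixed_width) {
  char buf[16];  // "0x" + 8 hex digits + terminator fits comfortably
  if (fixed_width) {
    std::snprintf(buf, sizeof(buf), "0x%04x", static_cast<unsigned>(value));
  } else {
    std::snprintf(buf, sizeof(buf), "%x", static_cast<unsigned>(value));
  }
  return std::string(buf);
}
```

So a value of 255 gives "ff" with "%x" and "0x00ff" with "0x%04x"; values wider than four hex digits are not truncated, the field simply grows.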

-- thanks, regards, Pasha |

| Attachment 1: 2024_02_27_dtc_registers_in_odb.png

|

|

| Attachment 2: 2024_02_27_custom_page.png

|

|

| Attachment 3: 2024_02_27_custom_page_html.png

|

|

|

2721

|

27 Feb 2024 |

Pavel Murat | Forum | displaying integers in hex format ? | Hi Stefan (and Ben),

thanks for reacting so promptly - your commits on Bitbucket fixed the problem.

For those of us who know little about how web browsers work:

- picking up the fix required flushing the cache of the MIDAS client web browser - apparently the web browser

I'm using - Firefox 115.6 - cached the old version of midas.js but wouldn't report it cached and wouldn't load

the updated file on its own.

-- thanks again, regards, Pasha |

|

2787

|

04 Jul 2024 |

Pavel Murat | Suggestion | cmake-installing more files ? | Dear all,

this posting results from the Fermilab move to a new packaging/build system called spack

which doesn't allow using the MIDAS install procedure described at

https://daq00.triumf.ca/MidasWiki/index.php/Quickstart_Linux#MIDAS_Package_Installation

as is. Spack specifics aside, building MIDAS under spack took

a) adding cmake install for three directories: drivers, resources, and python/midas,

b) adding one more include file - include/tinyexpr.h - to the list of includes installed by cmake.

With those changes I was able to point MIDASSYS to the spack install area and successfully run mhttpd,

build experiment-specific C++ frontends and drivers, use experiment-specific python frontends etc.

I'm not using anything from MIDAS submodules though.

I'm wondering what the experts would think about accepting the changes above to the main tree.

Installation procedures and changes to cmake files are always a sensitive area with a lot of boundary

constraints coming from the existing use patterns, and even a minor change could have unexpected consequences.

So I wouldn't be surprised if the fairly minor changes outlined above had side effects.

The patch file is attached for consideration.

-- regards, Pasha |

| Attachment 1: midas-spack.patch

|

diff --git a/CMakeLists.txt b/CMakeLists.txt

index 3c6a4109..57dab96f 100644

--- a/CMakeLists.txt

+++ b/CMakeLists.txt

@@ -642,6 +642,7 @@ else()

include/musbstd.h

include/mvmestd.h

include/odbxx.h

+ include/tinyexpr.h

include/tmfe.h

include/tmfe_rev0.h

mxml/mxml.h

@@ -657,6 +658,9 @@ install(TARGETS midas midas-shared midas-c-compat mfe mana rmana

install(EXPORT ${PROJECT_NAME}-targets DESTINATION lib)

+install(DIRECTORY drivers DESTINATION . )

+install(DIRECTORY resources DESTINATION . )

+install(DIRECTORY python/midas DESTINATION python)

#####################################################################

# generate git revision file

#####################################################################

|

|