| ID | Date | Author | Topic | Subject |

| 1760 | 13 Jan 2020 | Peter Kunz | Forum | ODB dump format: json - events 0x8000 and 0x8001 missing | MIDAS version: 2.1

GIT revision: Tue Dec 31 17:40:14 2019 +0100 - midas-2019-09-i-1-gd93944ce-dirty on branch develop

/Logger/Channels/0/Settings

| ODB dump | y |
| ODB dump format | json |

With the settings above the file last.json generated for a new run is empty and the events 0x8000 and 0x8001 are missing in the .mid file.

When setting "ODB dump format" to "xml", events 0x8000 and 0x8001 are included in the .mid file, however, the file last.xml is not created.

|

| 1761 | 13 Jan 2020 | Peter Kunz | Forum | frontend issues with midas-2019-09 | After upgrading to the latest MIDAS version I got the DAQ frontend of my application running by changing all compiler directives from cc to g++ and using

#include "mfe.h"

extern HNDLE hDB;

extern "C" {

#include <CAENComm.h>

}

With these changes everything seems to work fine.

However, I'm having trouble with a slow control frontend using a tcpip driver. It compiled well with the older MIDAS version. Even though all the functions in question are defined in the frontend code, the following error comes up:

g++ -o feMotor -DOS_LINUX -Dextname -g -O2 -fPIC -Wall -Wuninitialized -fpermissive -I/home/pkunz/packages/midas/include -I. -I/home/pkunz/packages/midas/drivers/bus /home/pkunz/packages/midas/lib/libmidas.a feMotor.o /home/pkunz/packages/midas/drivers/bus/tcpip.o cd_Galil.o /home/pkunz/packages/midas/lib/libmidas.a /home/pkunz/packages/midas/lib/mfe.o -lm -lz -lutil -lnsl -lpthread -lrt

/usr/bin/ld: /home/pkunz/packages/midas/lib/mfe.o: in function `initialize_equipment()':

/home/pkunz/packages/midas/src/mfe.cxx:687: undefined reference to `interrupt_configure(int, int, long)'

/usr/bin/ld: /home/pkunz/packages/midas/lib/mfe.o: in function `readout_enable(unsigned int)':

/home/pkunz/packages/midas/src/mfe.cxx:1236: undefined reference to `interrupt_configure(int, int, long)'

/usr/bin/ld: /home/pkunz/packages/midas/src/mfe.cxx:1238: undefined reference to `interrupt_configure(int, int, long)'

/usr/bin/ld: /home/pkunz/packages/midas/lib/mfe.o: in function `main':

/home/pkunz/packages/midas/src/mfe.cxx:2791: undefined reference to `interrupt_configure(int, int, long)'

/usr/bin/ld: /home/pkunz/packages/midas/src/mfe.cxx:2792: undefined reference to `interrupt_configure(int, int, long)'

collect2: error: ld returned 1 exit status

make: *** [Makefile:36: feMotor] Error 1

I guess the aforementioned DAQ frontend compiles because its equipment definitions don't call on the function `initialize_equipment()', but I can't figure out why it doesn't work. Help is appreciated. P.K. |

| 1767 | 13 Jan 2020 | Peter Kunz | Forum | frontend issues with midas-2019-09 | Thanks for explaining this, Konstantin.

After updating the function to

INT interrupt_configure(INT cmd, INT source, POINTER_T adr)

{

return CM_SUCCESS;

}

it compiled without errors. In the original code the "INT source" variable was missing.
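The mismatch can be reproduced in isolation. The following is a minimal sketch, not the actual frontend code: the typedefs and `CM_SUCCESS` are local stand-ins (assumptions) for what `midas.h` provides, chosen to match the `interrupt_configure(int, int, long)` signature printed by the linker. It shows that in C++ the two-argument and three-argument versions are distinct overloads with distinct symbols, so defining only the old one leaves the reference from mfe.o unresolved.

```cpp
#include <cassert>

// Local stand-ins so the sketch compiles on its own (assumptions);
// a real frontend takes these from midas.h instead.
typedef int  INT;
typedef long POINTER_T;           // matches the 'long' in the linker message
static const INT CM_SUCCESS = 1;  // stand-in for the MIDAS status code

// Old-style definition without the "INT source" argument.
// In C++ this is a different function: interrupt_configure(int, long).
INT interrupt_configure(INT /*cmd*/, POINTER_T /*adr*/)
{
   return -1; // marker value so the two overloads can be told apart
}

// Definition with the argument list the linker asked for:
// interrupt_configure(int, int, long).
INT interrupt_configure(INT /*cmd*/, INT /*source*/, POINTER_T /*adr*/)
{
   return CM_SUCCESS;
}
```

Because both versions can coexist as overloads, the compiler gives no warning when only the old one is present; the problem surfaces only at link time, which is why comparing the `nm ... | c++filt` output is a reliable check.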

> (please use the "plain" text, much easier to answer).

>

> Hi, Peter, I think you misread the error message. There is no error about initialize_equipment(), the error

> is about interrupt_configure(). initialize_equipment() is just one of the functions that calls it.

>

> The cause of the error most likely is a mismatch between the declaration of interrupt_configure() in

> mfe.h and the definition of this function in your program (in feMotor.c, I guess).

>

> Sometimes this mismatch is hard to identify just by looking at the code.

>

> One fool-proof method to debug this is to extract the actual function prototypes from your object files,

> both the declaration ("U") in mfe.o and the definition ("T") in your program should be identical:

>

> nm feMotor.o | grep -i interrupt | c++filt

> 0000000000000f90 T interrupt_configure(int, int, long) <--- should be this

>

> nm ~/packages/midas/lib/libmfe.a | grep -i interrupt | c++filt

> U interrupt_configure(int, int, long)

>

> If they are different, you adjust your program until they match. One way to ensure the match is to copy

> the declaration from mfe.h into your program.

>

> K.O. |

| 1768 | 13 Jan 2020 | Peter Kunz | Forum | ODB dump format: json - events 0x8000 and 0x8001 missing | Re: MIDAS versions

Thanks for pointing that out. I wasn't actually aware that there is a release branch and a development branch.

I was just following the installation instructions using

git clone https://bitbucket.org/tmidas/midas --recursive

Apparently that gave me the development branch. How can I get the release version?

(I think my version showed up as "dirty" because I played around with one of the examples. I didn't touch the actual source code.)

> (Please post messages in "plain" mode, they are much easier to answer)

>

> Thank you for reporting this problem. I will try to reproduce it.

>

> In addition, I will say a few words about your version of midas:

>

> > GIT revision: midas-2019-09-i-1-gd93944ce-dirty on branch develop

>

> I recommend that for production systems one use the tagged release versions of midas.

> (i.e. see https://midas.triumf.ca/elog/Midas/1750).

>

> (Your midas is "1 commit after the latest tag" - the "-1" in the git revision).

>

> I apply bug fixes to both the release branch and the develop branch, but for you to get

> these fixes, on the develop branch you will also "get" all the unrelated changes that may

> come with new bugs. On the release branch, you will only get the bug fixes.

>

> In your midas version it says "-dirty" which means that you have local modifications to the

> midas sources. With luck those changes are not related to the bug that you see. (but I

> cannot tell). You can do "git status" and "git diff" to see what the local changes are.

>

> It is much better if bugs are reported against "clean" builds of MIDAS (no "-dirty").

>

>

> K.O. |

| 1770 | 13 Jan 2020 | Peter Kunz | Forum | cmake complie issues | Re: ROOT problem

I looked into how my ROOT based MIDAS analyzer compiles. It is using the flag -std=c++14.

MIDAS cmake compiles with -std=gnu++11 with the result:

[ 29%] Building CXX object CMakeFiles/rmana.dir/src/mana.cxx.o

/usr/bin/c++ -D_LARGEFILE64_SOURCE -I/home/pkunz/packages/midas/include -I/home/pkunz/packages/midas/mxml -I/usr/include/root -O2 -g -DNDEBUG -DHAVE_ZLIB -DHAVE_FTPLIB -Wall -Wformat=2 -Wno-format-nonliteral -Wno-strict-aliasing -Wuninitialized -Wno-unused-function -DHAVE_ROOT -std=gnu++11 -o CMakeFiles/rmana.dir/src/mana.cxx.o -c /home/pkunz/packages/midas/src/mana.cxx

In file included from /usr/include/root/TString.h:28,

from /usr/include/root/TCollection.h:29,

from /usr/include/root/TSeqCollection.h:25,

from /usr/include/root/TList.h:25,

from /usr/include/root/TQObject.h:40,

from /usr/include/root/TApplication.h:30,

from /home/pkunz/packages/midas/src/mana.cxx:60:

/usr/include/root/ROOT/RStringView.hxx:32:37: error: ‘experimental’ in namespace ‘std’ does not name a type

32 | using basic_string_view = ::std::experimental::basic_string_view<_CharT,_Traits>;

If I change this to

/usr/bin/c++ -D_LARGEFILE64_SOURCE -I/home/pkunz/packages/midas/include -I/home/pkunz/packages/midas/mxml -I/usr/include/root -O2 -g -DNDEBUG -DHAVE_ZLIB -DHAVE_FTPLIB -Wall -Wformat=2 -Wno-format-nonliteral -Wno-strict-aliasing -Wuninitialized -Wno-unused-function -DHAVE_ROOT -std=c++14 -o CMakeFiles/rmana.dir/src/mana.cxx.o -c /home/pkunz/packages/midas/src/mana.cxx

it compiles without error. I hope this helps.
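To confirm which language standard a given set of compiler flags actually selects, one can inspect the standard `__cplusplus` macro. The helper below is a small sketch (the function name `standard_name` is made up for illustration). The likely connection to the error above, stated as an assumption, is that the installed ROOT headers rely on library features that are only available from C++14 onwards, so they fail under `-std=gnu++11`.

```cpp
#include <cassert>
#include <string>

// Translate a __cplusplus value into a readable name.
// The thresholds are the values fixed by the C++ standards.
std::string standard_name(long value)
{
   if (value >= 201703L) return "C++17 or later";
   if (value >= 201402L) return "C++14";
   if (value >= 201103L) return "C++11";
   return "pre-C++11";
}
```

Calling `standard_name(__cplusplus)` in a file compiled with the same flags as mana.cxx shows which standard is in effect for that translation unit.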

> (please post messages in "plain" mode, they are much easier to answer)

>

> - nvidia problems - this code was contributed by Joseph (I think?), with luck he will look into

> this problem.

>

> - ROOT problem - it looks like the error is thrown by the ROOT header files, has nothing to do

> with MIDAS?

>

> So what ROOT are you using? I recommend installing ROOT by following instructions at

> root.cern.ch.

>

> Perhaps you used the ROOT packages from the EPEL repository? I have seen trouble with

> those packages before (miscompiled; important optional features turned off; very old

> versions; etc).

>

> P.S.

>

> Historically, ROOT has caused so many reports of "cannot build midas" that I consistently

> vote to "remove ROOT support from MIDAS". But Stefan's code for writing MIDAS data into

> ROOT files is so neat, cannot throw it away. And some people do use it. So at the latest MIDAS

> bash this Summer we decided to keep it.

>

> (The only build targets that use ROOT are the rmlogger executable and the rmana.o object file (and

> its one-man-army library)).

>

> But.

>

> In the past, one could use "make -k" to get past the errors caused by ROOT, everything will

> get built and installed, except for the code that failed to build.

>

> Now with cmake, it is "all or nothing", if there is any compilation error, nothing gets installed

> into the "bin" directory. So one must discover and use "NO_ROOT=1" (which becomes sticky

> until the next "make cclean". Some people are not used to sticky "make" options, I just got

> burned by this very thing last week).

>

> Perhaps there is a way to tell cmake to ignore compile errors for rmlogger and rmana.

>

>

> K.O.

>

>

>

> > Peter Kunz wrote:

> >

> > While upgrading to the latest MIDAS version

> >

> > MIDAS version: 2.1 GIT revision: Tue Dec 31 17:40:14 2019 +0100 - midas-2019-09-i-1-gd93944ce-dirty on branch develop

> >

> > ODB version: 3

> >

> > I encountered two issues using cmake

> >

> > 1. on machines with NVIDIA drivers:

> >

> > nvml.h: no such file or directory

> >

> > (nvml.h doesn't seem to be part of the standard nvidia driver package.)

> >

> > 2. Compiling with ROOT throws an error with ROOT 6.12/06 on Centos7 and with ROOT 6.18/04 on Fedora 31:

> >

> > [ 29%] Building CXX object CMakeFiles/rmana.dir/src/mana.cxx.o

> > In file included from /usr/include/root/TString.h:28,

> > from /usr/include/root/TCollection.h:29,

> > from /usr/include/root/TSeqCollection.h:25,

> > from /usr/include/root/TList.h:25,

> > from /usr/include/root/TQObject.h:40,

> > from /usr/include/root/TApplication.h:30,

> > from /home/pkunz/packages/midas/src/mana.cxx:60:

> > /usr/include/root/ROOT/RStringView.hxx:32:37: error: ‘experimental’ in namespace ‘std’ does not name a type

> > 32 | using basic_string_view = ::std::experimental::basic_string_view<_CharT,_Traits>;

> >

> > A workaround (which works for me) is to compile with

> >

> > cmake .. -DNO_ROOT=1 -DNO_NVIDIA=1 |

| 1777 | 14 Jan 2020 | Peter Kunz | Forum | EPICS frontend does not compile under midas-2019-09-i | I'm still trying to upgrade my MIDAS system to midas-2019-09-i. Most frontends work fine with the modifications already discussed.

However, I ran into some trouble with the epics frontend. Even with the modifications it throws a lot of warnings and errors (see attached log file). I can reduce the errors with -fpermissive, but the following two errors are persistent:

/home/ays/packages/midas/drivers/device/epics_ca.cxx:167:38: error: ‘ca_create_channel’ was not declared in this scope

, &(info->caid[i].chan_id))

/home/ays/packages/midas/drivers/device/epics_ca.cxx:178:37: error: ‘ca_create_subscription’ was not declared in this scope

, &(info->caid[i].evt_id))

This is strange because the functions seem to be declared in base/include/cadef.h along with similar functions that don't throw an error.

I don't know what's going on. The frontend which is almost identical to the example in the midas distribution compiles without warnings or errors under the Midas2017 version. |

| Attachment 1: epics_compile_errors.txt

|

[ays@isys05 epics]$ make

g++ -g -D_POSIX_C_SOURCE=199506L -D_POSIX_THREADS -D_XOPEN_SOURCE=500 -DOSITHREAD_USE_DEFAULT_STACK -D_X86_ -DUNIX -D_BSD_SOURCE -Dlinux -I. -I/home/ays/packages/midas/include -I/home/ays/packages/midas/drivers -I/home/ays/packages/base/include -I/home/ays/packages/base/include/os/Linux/ -DOS_LINUX -c /home/ays/packages/midas/drivers/device/epics_ca.cxx -o epics_ca.o

In file included from /home/ays/packages/midas/drivers/device/epics_ca.cxx:29:0:

/home/ays/packages/midas/include/midas.h:1316:21: warning: non-static data member initializers only available with -std=c++11 or -std=gnu++11 [enabled by default]

DWORD event_id = 0;

^

/home/ays/packages/midas/include/midas.h:1318:18: warning: non-static data member initializers only available with -std=c++11 or -std=gnu++11 [enabled by default]

DWORD n_tag = 0;

^

/home/ays/packages/midas/include/midas.h:1323:19: warning: non-static data member initializers only available with -std=c++11 or -std=gnu++11 [enabled by default]

int hist_fh = 0;

^

/home/ays/packages/midas/include/midas.h:1324:19: warning: non-static data member initializers only available with -std=c++11 or -std=gnu++11 [enabled by default]

int index_fh = 0;

^

/home/ays/packages/midas/include/midas.h:1325:19: warning: non-static data member initializers only available with -std=c++11 or -std=gnu++11 [enabled by default]

int def_fh = 0;

^

/home/ays/packages/midas/include/midas.h:1326:23: warning: non-static data member initializers only available with -std=c++11 or -std=gnu++11 [enabled by default]

DWORD base_time = 0;

^

/home/ays/packages/midas/include/midas.h:1327:23: warning: non-static data member initializers only available with -std=c++11 or -std=gnu++11 [enabled by default]

DWORD def_offset = 0;

^

/home/ays/packages/midas/drivers/device/epics_ca.cxx: In function ‘void exceptionCallback(exception_handler_args)’:

/home/ays/packages/midas/drivers/device/epics_ca.cxx:64:25: warning: deprecated conversion from string constant to ‘char*’ [-Wwrite-strings]

static char *noname = "unknown";

^

/home/ays/packages/midas/drivers/device/epics_ca.cxx:69:50: warning: deprecated conversion from string constant to ‘char*’ [-Wwrite-strings]

if(chid) printChidInfo(chid,"exceptionCallback");

^

/home/ays/packages/midas/drivers/device/epics_ca.cxx: In function ‘void connectionCallback(connection_handler_args)’:

/home/ays/packages/midas/drivers/device/epics_ca.cxx:78:42: warning: deprecated conversion from string constant to ‘char*’ [-Wwrite-strings]

printChidInfo(chid,"connectionCallback");

^

/home/ays/packages/midas/drivers/device/epics_ca.cxx: In function ‘void accessRightsCallback(access_rights_handler_args)’:

/home/ays/packages/midas/drivers/device/epics_ca.cxx:85:44: warning: deprecated conversion from string constant to ‘char*’ [-Wwrite-strings]

printChidInfo(chid,"accessRightsCallback");

^

/home/ays/packages/midas/drivers/device/epics_ca.cxx: In function ‘INT epics_ca_init(HNDLE, void**, INT)’:

/home/ays/packages/midas/drivers/device/epics_ca.cxx:133:35: error: invalid conversion from ‘void*’ to ‘CA_INFO*’ [-fpermissive]

info = calloc(1, sizeof(CA_INFO));

^

/home/ays/packages/midas/drivers/device/epics_ca.cxx:139:57: error: invalid conversion from ‘void*’ to ‘char*’ [-fpermissive]

info->channel_names = calloc(channels, CHN_NAME_LENGTH);

^

/home/ays/packages/midas/drivers/device/epics_ca.cxx:153:47: error: invalid conversion from ‘void*’ to ‘float*’ [-fpermissive]

info->array = calloc(channels, sizeof(float));

^

In file included from /home/ays/packages/midas/drivers/device/epics_ca.cxx:19:0:

./cadef.h:762:12: warning: deprecated conversion from string constant to ‘char*’ [-Wwrite-strings]

__LINE__); \

^

/home/ays/packages/midas/drivers/device/epics_ca.cxx:160:3: note: in expansion of macro ‘SEVCHK’

SEVCHK(ca_add_exception_event(exceptionCallback,NULL), "ca_add_exception_event");

^

./cadef.h:762:12: warning: deprecated conversion from string constant to ‘char*’ [-Wwrite-strings]

__LINE__); \

^

/home/ays/packages/midas/drivers/device/epics_ca.cxx:160:3: note: in expansion of macro ‘SEVCHK’

SEVCHK(ca_add_exception_event(exceptionCallback,NULL), "ca_add_exception_event");

^

/home/ays/packages/midas/drivers/device/epics_ca.cxx:167:38: error: ‘ca_create_channel’ was not declared in this scope

, &(info->caid[i].chan_id))

^

./cadef.h:756:32: note: in definition of macro ‘SEVCHK’

int ca_unique_status_name = (CA_ERROR_CODE); \

^

./cadef.h:762:12: warning: deprecated conversion from string constant to ‘char*’ [-Wwrite-strings]

__LINE__); \

^

/home/ays/packages/midas/drivers/device/epics_ca.cxx:163:5: note: in expansion of macro ‘SEVCHK’

SEVCHK(ca_create_channel(info->channel_names + CHN_NAME_LENGTH * i

^

./cadef.h:762:12: warning: deprecated conversion from string constant to ‘char*’ [-Wwrite-strings]

__LINE__); \

^

/home/ays/packages/midas/drivers/device/epics_ca.cxx:163:5: note: in expansion of macro ‘SEVCHK’

SEVCHK(ca_create_channel(info->channel_names + CHN_NAME_LENGTH * i

^

./cadef.h:762:12: warning: deprecated conversion from string constant to ‘char*’ [-Wwrite-strings]

__LINE__); \

^

/home/ays/packages/midas/drivers/device/epics_ca.cxx:169:5: note: in expansion of macro ‘SEVCHK’

SEVCHK(ca_replace_access_rights_event(info->caid[i].chan_id

^

./cadef.h:762:12: warning: deprecated conversion from string constant to ‘char*’ [-Wwrite-strings]

__LINE__); \

^

/home/ays/packages/midas/drivers/device/epics_ca.cxx:169:5: note: in expansion of macro ‘SEVCHK’

SEVCHK(ca_replace_access_rights_event(info->caid[i].chan_id

^

/home/ays/packages/midas/drivers/device/epics_ca.cxx:178:37: error: ‘ca_create_subscription’ was not declared in this scope

, &(info->caid[i].evt_id))

^

./cadef.h:756:32: note: in definition of macro ‘SEVCHK’

int ca_unique_status_name = (CA_ERROR_CODE); \

^

./cadef.h:762:12: warning: deprecated conversion from string constant to ‘char*’ [-Wwrite-strings]

__LINE__); \

^

/home/ays/packages/midas/drivers/device/epics_ca.cxx:172:5: note: in expansion of macro ‘SEVCHK’

SEVCHK(ca_create_subscription (DBR_FLOAT

^

./cadef.h:762:12: warning: deprecated conversion from string constant to ‘char*’ [-Wwrite-strings]

__LINE__); \

^

/home/ays/packages/midas/drivers/device/epics_ca.cxx:172:5: note: in expansion of macro ‘SEVCHK’

SEVCHK(ca_create_subscription (DBR_FLOAT

^

./cadef.h:762:12: warning: deprecated conversion from string constant to ‘char*’ [-Wwrite-strings]

__LINE__); \

^

/home/ays/packages/midas/drivers/device/epics_ca.cxx:186:3: note: in expansion of macro ‘SEVCHK’

SEVCHK(ca_pend_event(5.0), "ca_ped_event");

^

./cadef.h:762:12: warning: deprecated conversion from string constant to ‘char*’ [-Wwrite-strings]

__LINE__); \

^

/home/ays/packages/midas/drivers/device/epics_ca.cxx:186:3: note: in expansion of macro ‘SEVCHK’

SEVCHK(ca_pend_event(5.0), "ca_ped_event");

^

/home/ays/packages/midas/drivers/device/epics_ca.cxx: In function ‘INT epics_ca(INT, ...)’:

/home/ays/packages/midas/drivers/device/epics_ca.cxx:308:50: error: invalid conversion from ‘void*’ to ‘void**’ [-fpermissive]

status = epics_ca_init(hKey, pinfo, channel);

^

/home/ays/packages/midas/drivers/device/epics_ca.cxx:126:5: error: initializing argument 2 of ‘INT epics_ca_init(HNDLE, void**, INT)’ [-fpermissive]

INT epics_ca_init(HNDLE hKey, void **pinfo, INT channels)

^

In file included from ./cadef.h:95:0,

from /home/ays/packages/midas/drivers/device/epics_ca.cxx:19:

/home/ays/packages/midas/drivers/device/epics_ca.cxx:312:29: error: invalid conversion from ‘void*’ to ‘CA_INFO*’ [-fpermissive]

info = va_arg(argptr, void *);

^

make: *** [epics_ca.o] Error 1

[ays@isys05 epics]$
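The `invalid conversion from ‘void*’` errors in the log above come from a language difference: C converts the `void*` returned by `calloc()` implicitly, while C++ requires an explicit cast. A minimal sketch of the C++-clean pattern follows; `CaInfoSketch` is a hypothetical stand-in, not the driver's real `CA_INFO` structure.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>

// Hypothetical stand-in for the driver's info structure (assumption).
struct CaInfoSketch {
   int    num_channels;
   float *array;
};

// Allocate the structure and its value array with explicit casts,
// which is what a C++ compiler requires for the result of calloc().
CaInfoSketch *alloc_info(int channels)
{
   CaInfoSketch *info =
      static_cast<CaInfoSketch *>(std::calloc(1, sizeof(CaInfoSketch)));
   if (info == nullptr)
      return nullptr;

   info->array = static_cast<float *>(
      std::calloc(static_cast<std::size_t>(channels), sizeof(float)));
   if (info->array == nullptr) {
      std::free(info);
      return nullptr;
   }
   info->num_channels = channels;
   return info;
}

// Release everything allocated by alloc_info().
void free_info(CaInfoSketch *info)
{
   if (info != nullptr) {
      std::free(info->array);
      std::free(info);
   }
}
```

With casts like these in place, `-fpermissive` is no longer needed to get past this class of error.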

|

| 1780 | 15 Jan 2020 | Peter Kunz | Forum | EPICS frontend does not compile under midas-2019-09-i | Hi Konstantin,

I have EPICS Base Release 3.14.8.2 and got your example running with it, though I had to make one change.

For some reason it wouldn't find libca.so, but when I linked the static library libca.a instead, it worked.

Also my midas frontend works now with the updated epics_ca.cxx. There was a problem with an old local version of cadef.h

(probably a leftover from some previous testing). After removing it everything compiled well.

Thanks,

Peter

> I fixed the compiler errors in epics_ca.cxx, can you try again? (see https://bitbucket.org/tmidas/midas/commits/)

>

> But, I do not see errors with ca_create_channel() and ca_create_subscription().

>

> Maybe I am using the wrong epics. If you still see these errors, let me know what epics you have, I will try the same one.

>

> This is what I do (and I get epics 7.0).

>

> mkdir -p $HOME/git

> cd $HOME/git

> git clone https://git.launchpad.net/epics-base

> cd epics-base

> make

> ls -l include/cadef.h

> ls -l lib/darwin-x86/libca.a # also linux-x86/libca.a

>

> K.O.

>

> > > I'm still trying to upgrade my MIDAS system to midas-2019-09-i. Most frontends work fine with the modifications already discussed.

> > > However, I ran into some trouble with the epics frontend. Even with the modifications it throws a lot of warnings and errors (see attached log file). I can reduce the errors with -fpermissive, but the following two errors are persistent:

> > >

> > > /home/ays/packages/midas/drivers/device/epics_ca.cxx:167:38: error: ‘ca_create_channel’ was not declared in this scope

> > > , &(info->caid[i].chan_id))

> > >

> > > /home/ays/packages/midas/drivers/device/epics_ca.cxx:178:37: error: ‘ca_create_subscription’ was not declared in this scope

> > > , &(info->caid[i].evt_id))

> > >

> > > This is strange because the functions seem to be declared in base/include/cadef.h along with similar functions that don't throw an error.

> > > I don't know what's going on. The frontend which is almost identical to the example in the midas distribution compiles without warnings or errors under the Midas2017 version.

> >

> > Hi, Peter - it looks like epics_ca.cxx needs to be updated to proper C++ (char* warnings, etc).

> >

> > As for the "function not declared" errors, it's the C++ way to say "you are calling a function with wrong arguments",

> > again, something I should be able to fix without too much trouble.

> >

> > Thank you for reporting this, the midas version of epics_ca.cxx definitely needs fixing.

> >

> > K.O. |

| 2267 | 31 Jul 2021 | Peter Kunz | Bug Report | ss_shm_name: unsupported shared memory type, bye! | I ran into a problem trying to compile the latest MIDAS version on a Fedora system.

mhttpd and odbedit return:

ss_shm_name: unsupported shared memory type, bye!

check_shm_type: preferred POSIXv4_SHM got SYSV_SHM

The check returns SYSV_SHM which doesn't seem to be supported in ss_shm_name.

Is there an easy solution for this?

Thanks. |

| 1115 | 23 Sep 2015 | Peter Kravtsov | Forum | db_paste_node error in offline analyzer | I have a problem using the analyzer offline.

I'm trying to do it this way:

[lkst@pklinux online]$ kill_daq.sh

[lkst@pklinux online]$ odbedit -c "load data/run00020.odb"

[ODBEdit,INFO] Reloading RPC hosts access control list via hotlink callback

[lkst@pklinux online]$ analyzer -i data/run00020.mid -o test20.root

Root server listening on port 9090...

Running analyzer offline. Stop with "!"

[Analyzer,INFO] Set run number 20 in ODB

analyzer: src/odb.c:6631: db_paste_node: Assertion `status == 1' failed.

Load ODB from run 20...Aborted

[lkst@pklinux online]$

I always get this "Assertion `status == 1' failed." error, even

if I try it in the examples/experiment directory included in the MIDAS distribution.

After this attempt I cannot run any MIDAS program (even odbedit reports an error)

until I delete the ODB file in /dev/shm/ and load the last ODB dump with odbedit.

What am I doing wrong, and how can this be fixed? |

| 2633 | 22 Nov 2023 | Pavel Murat | Forum | run number from an external (*SQL) db? | Dear MIDAS developers,

I wonder if there is a non-intrusive way to have an external (with respect to MIDAS) *SQL database

serving as the primary source of run number information for a MIDAS-based DAQ system?

- like a plugin with a getNextRunNumber() function, for example, or a special client?

Here is the use case:

- multiple subdetectors are taking test data during early commissioning

- a postgres db is the single source of run numbers.

- test runs taken by different subsystems are assigned different [unique] run numbers and

the data taken by a subsystem are identified not by the run number/dataset name, but

by the run type, which is different for different subsystems.

-- many thanks, regards, Pasha |

| 2636 | 01 Dec 2023 | Pavel Murat | Forum | run number from an external (*SQL) db? | > > - multiple subdetectors are taking test data during early commissioning

> > - a postgres db is a single source of run numbers.

> > - test runs taken by different subsystems are assigned different [unique] run numbers and

> > the data taken by the subsystem are identified not by the run number/dataset name, but

> > by the run type, different for different subsystems.

>

> For that purpose I would not "mis-use" run numbers. Run numbers are meant to be incremented

> sequentially, like if you have a time-stamp in seconds since 1.1.1970 (Unix time). Instead, I

> would add additional attributes under /Experiment/Run Parameters like "Subsystem type", "Run

> mode (production/commissioning)" etc. You have much more freedom in choosing any number of

> attributes there. Then, send these attributes to your postgres db via "/Logger/Runlog/SQL/Links

> BOR". Then you can query your database to give you all runs of a certain subtype or mode.

>

> See https://daq00.triumf.ca/MidasWiki/index.php/Logging_to_a_mySQL_database

>

> Stefan

Ben, Stefan - many thanks for your suggestions! (And apologies for the delayed thanks.)

Stefan, I don't think we're talking about 'mis-use' - rather, different subdetectors are being commissioned

at different locations, on an uncorrelated schedule, using independent run control (RC) instances.

At this point in time, we can't use a common RC instance.

The collected data, however, are written back into a common storage, and we need to avoid two

subdetectors using the same run number. As all RC instances can connect to the same database and request a

run number from there, an external DB serving run numbers to multiple clients looks like a reasonable solution,

which provides unique run numbers for everyone. Of course, the run number gets incremented (although on the DB

server side), and of course different subsystems are assigned different subsystem types.

So, in essence, it is about _where_ the run number is incremented - the RC vs the DB.

If there were a good strategy to implement a DB-based solution without violating

the first principles of Midas :), I'd be happy to contribute. It looks like a legitimate use case.
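To make the idea concrete, here is a minimal sketch of the "DB increments the run number" model. It is purely illustrative: `RunNumberSource` is a hypothetical in-process stand-in for the central database, not a MIDAS or postgres API, and in a real setup the atomic increment would be done on the database server side.

```cpp
#include <cassert>
#include <map>
#include <string>

// Hypothetical stand-in for the central run number database (assumption).
// Every run control instance would ask the same source for its next number.
class RunNumberSource {
public:
   explicit RunNumberSource(int last_used) : last_(last_used) {}

   // Hand out the next run number and record the subsystem type with it.
   int next(const std::string &subsystem_type)
   {
      ++last_;
      type_[last_] = subsystem_type;
      return last_;
   }

   // Look up which subsystem type a run number was issued to;
   // returns an empty string for numbers that were never issued.
   std::string type_of(int run) const
   {
      std::map<int, std::string>::const_iterator it = type_.find(run);
      return it == type_.end() ? std::string() : it->second;
   }

private:
   int last_;
   std::map<int, std::string> type_;
};
```

Two independent run control instances calling `next()` on the same source can never receive the same number, and the run type remains available for selecting the data of one subsystem.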

-- let me know, regards, Pasha |

| 2637 | 01 Dec 2023 | Pavel Murat | Forum | MIDAS state machine : how to get around w/o 'configured' state? | I have one more question, though I understand that it could be somewhat borderline.

The MIDAS state machine doesn't seem to have a state in between 'initialized' and

'running'.

In larger detectors with multiple subsystems, the DAQ systems often have one more state:

after ending a previous run and before starting a new one from the 'stopped' state,

one needs to make sure that all subdetectors are ready, or 'configured', for the new run.

So that calls for a 'configure' step during which the detector (all subsystems in

parallel, to save time) transitions from the 'initialized'/'stopped' state to the 'configured' state,

from which it transitions to the 'running' state.

If one of the subdetectors fails to get configured, it could be excluded from the run

configuration and another attempt to reconfigure the system could be made without

starting a new run. Or an attempt could be made to troubleshoot and configure the

failed subsystem individually, with the remaining subsystems waiting in the 'configured' state.
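The transitions described above can be written down as a small state machine. This is only a sketch of the requested behaviour, with made-up names (`RunState`, `Command`, `apply`); it does not correspond to any MIDAS API, and 'Stopped' here stands for both the 'initialized' and 'stopped' states.

```cpp
#include <cassert>

// States of the hypothetical run control (assumption, not MIDAS states).
enum class RunState { Stopped, Configured, Running };

// Commands an operator or script could issue.
enum class Command { Configure, Start, Stop };

// Apply a command; returns true and updates 'state' only if the
// transition is allowed, otherwise leaves 'state' unchanged.
bool apply(RunState &state, Command cmd)
{
   switch (cmd) {
   case Command::Configure:
      // Configuring (or re-configuring after a failure) is allowed
      // whenever no run is in progress.
      if (state == RunState::Running) return false;
      state = RunState::Configured;
      return true;
   case Command::Start:
      // A run may only start from the configured state.
      if (state != RunState::Configured) return false;
      state = RunState::Running;
      return true;
   case Command::Stop:
      if (state != RunState::Running) return false;
      state = RunState::Stopped;
      return true;
   }
   return false;
}
```

The key property is that `Start` is rejected until `Configure` has succeeded, while `Configure` can be repeated without starting a new run.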

How does the logic of configuring the detector for the new run is implemented in MIDAS?

- it is a fairly common operational procedure, so I'm sure there should be a way

of doing that.

-- thanks again, Pasha |

|

2640

|

02 Dec 2023 |

Pavel Murat | Forum | run number from an external (*SQL) db? | >

> If you go in this direction, there is an alternative to what Ben wrote: Use the sequencer to start a run.

> The sequencer script can obtain a new run number from a central instance (e.g. by calling a shell script

> like 'curl ...' to obtain the new run number, then put it into /Runinfo/Run number as Ben wrote. This has

> the advantage that the run is _started_ already with the correct number, so the history system is fine.

>

Hi Stefan, this sounds like a perfect solution - thanks! - and leads to another, more technical, question:

- how does one communicate with an external shell script from MSL ? I looked at the MIDAS Sequencer page

https://daq00.triumf.ca/MidasWiki/index.php/Sequencer

and didn't find an immediately obvious candidate among the MSL commands.

The closest seems to be

'SCRIPT script [, a, b, c, ...]'

but I couldn't easily figure out how to propagate the output of the script back to MIDAS.

Let's say the script creates an ASCII file with the next run number. What is the easiest

way to import the run number into ODB? - Should an external script spawn a [short-lived]

MIDAS client ? - That would work, but I'm almost sure there is a more straightforward solution.

Of course, the assumption that the 'SCRIPT' command provides the solution could be wrong.

-- thanks again, regards, Pasha |

|

2641

|

02 Dec 2023 |

Pavel Murat | Forum | MIDAS state machine : how to get around w/o 'configured' state? | > - To start a run, we start a special sequencer script. We have different scripts for

> calibration runs, data runs, special runs.

>

a sequencer-based way sounds like a very good solution, which provides all needed functionality

and even more flexibility than a state machine transition. Will give it a try.

-- thanks again, regards, Pasha |

|

2642

|

03 Dec 2023 |

Pavel Murat | Forum | run number from an external (*SQL) db? | > - how does one communicate with an external shell script from MSL ?

trying to answer my own question, as I didn't find a clear answer in the forum archive :

1. one could have a MSL script with a 'SCRIPT ./myscript.sh' command in it -

that would run a shell script named 'myscript.sh'

[that was not obvious from the documentation on MIDAS wiki, and adding a couple of clarifying

sentences there would go a long way]

2. if a script produces an ascii file with a known name, for example, 'a.odb', with the following two lines:

--------------------------------------- a.odb

[/Runinfo]

Run number = INT32 : 105

--------------------------------------- end a.odb

one can use the 'odbload' MSL command :

odbload a.odb

and get the run number set to 105. It works, but I'm curious if that is the right (envisaged)

way of interacting with the shell scripts, or one could do better than that.
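
For illustration, the text of such a file can be generated from a run number as in the sketch below. The function name `make_runinfo_odb` is hypothetical; the output format is simply the two-line a.odb example shown above.

```cpp
#include <cassert>
#include <string>

// Build the text of an ODB file that sets /Runinfo/Run number,
// in the same two-line format as the a.odb example above.
std::string make_runinfo_odb(int run_number) {
    assert(run_number > 0);  // assumption: run numbers are positive
    return "[/Runinfo]\nRun number = INT32 : " + std::to_string(run_number) + "\n";
}
```

An external helper would write this string to a.odb before the MSL script reaches its 'odbload a.odb' line.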

-- thanks, regards, Pasha |

|

2646

|

09 Dec 2023 |

Pavel Murat | Forum | how to fix forgotten password ? | [Dear All, I apologize in advance for spamming.]

1) I tried to login into the forum from the lab computer and realized

that I forgot my password

2) I tried to reset the password and found that when registering

I mistyped my email address, having typed '.giv' instead of '.gov'

in the domain name, so the recovery email went into nowhere

(still have one session open on the laptop so can post this question)

- how do I get my email address fixed so I'd be able to reset the password?

-- many thanks, Pasha |

|

2647

|

09 Dec 2023 |

Pavel Murat | Forum | history plotting: where to convert the ADC readings into temps/voltages? | to plot time dependencies of the monitored detector parameters, say, voltages or temperatures,

one needs to convert the corresponding ADC readings into floats.

One could think of two ways of doing that:

- one can perform the ADC-->T or ADC-->V conversion in the MIDAS frontend,

store their [float] values in the data bank, and plot precalculated parameters vs time

- one can also store in the data bank the ADC readings which typically are short's

and convert them into floats (V's or T's) at the plotting time

The first approach doubles the storage space requirements, and I couldn't find the place where

one would do the conversion if the 16-bit ADC readings were stored.

I'm sure this issue has been thought about, so what is the "recommended MIDAS way" of performing

the ADC -> monitored_number conversion when making MIDAS history plots ?
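
To make the two options concrete, here is a minimal sketch of the first one (conversion in the frontend) together with the storage comparison. The linear calibration and the names `Calibration`, `adc_to_value`, `raw_bytes` and `float_bytes` are assumptions for illustration only, not MIDAS code.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical linear calibration: value = offset + gain * adc.
struct Calibration {
    float gain;    // physical units per ADC count (assumed)
    float offset;  // physical value at ADC = 0 (assumed)
};

// Convert one raw 16-bit reading into a float in physical units.
float adc_to_value(std::int16_t adc, const Calibration& cal) {
    assert(cal.gain != 0.0f);  // a zero gain would hide every reading
    return cal.offset + cal.gain * static_cast<float>(adc);
}

// Bytes needed to store n channels as raw ADC counts or as floats.
std::size_t raw_bytes(std::size_t n) { return n * sizeof(std::int16_t); }
std::size_t float_bytes(std::size_t n) { return n * sizeof(float); }
```

With 16-bit readings and 32-bit floats, `float_bytes(n)` is twice `raw_bytes(n)`, which is the doubling mentioned above.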

-- many thanks, regards, Pasha |

|

2652

|

11 Dec 2023 |

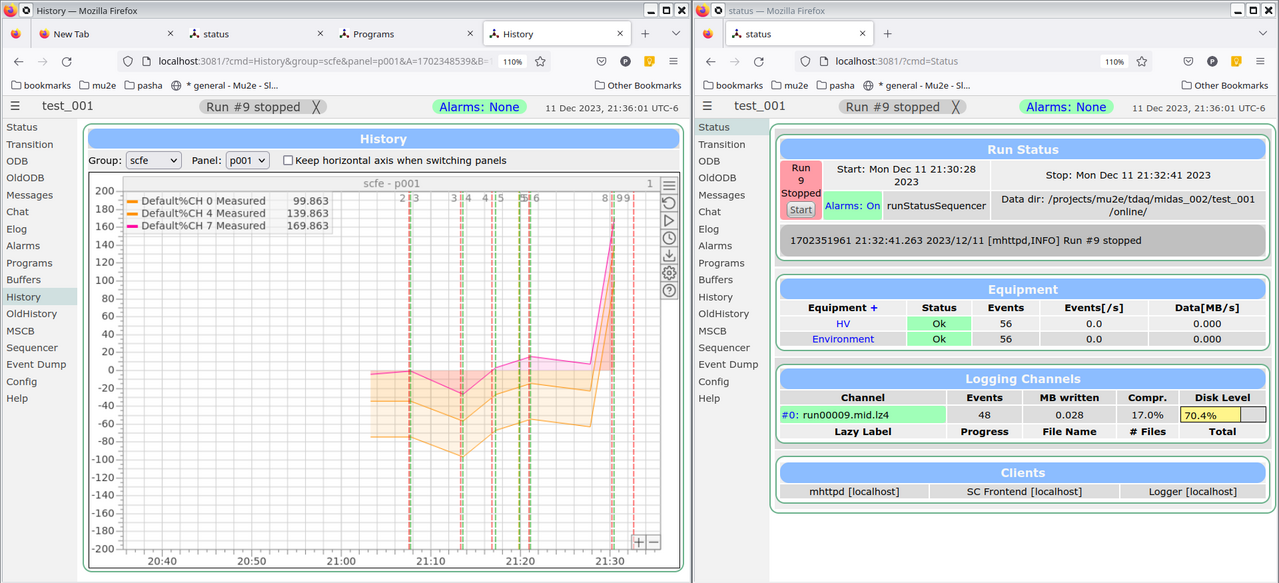

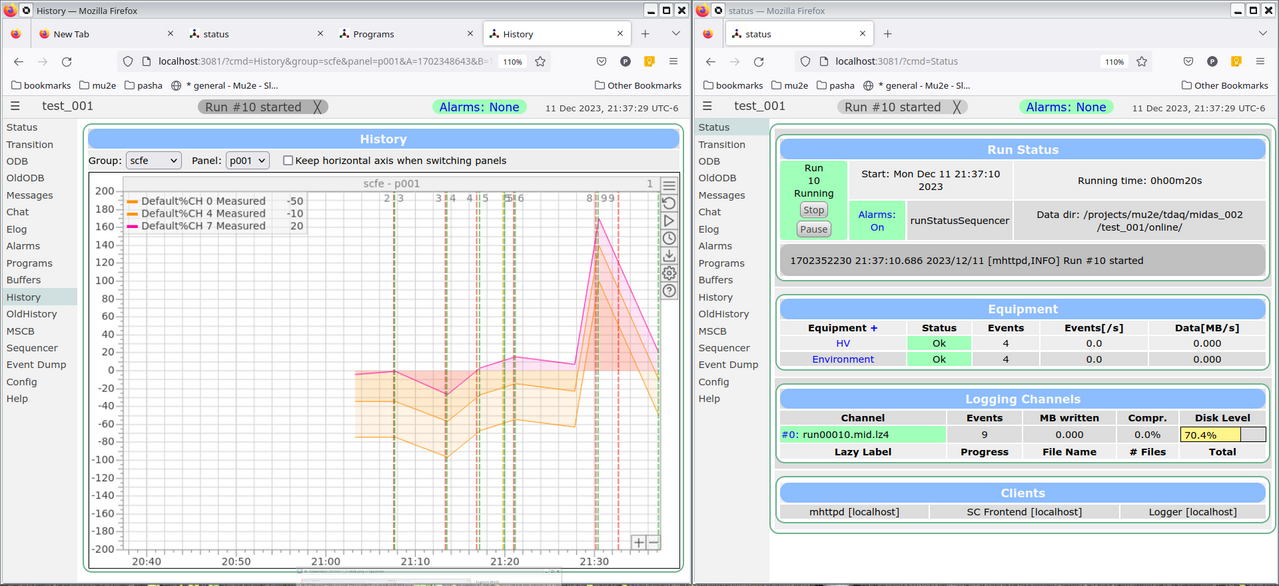

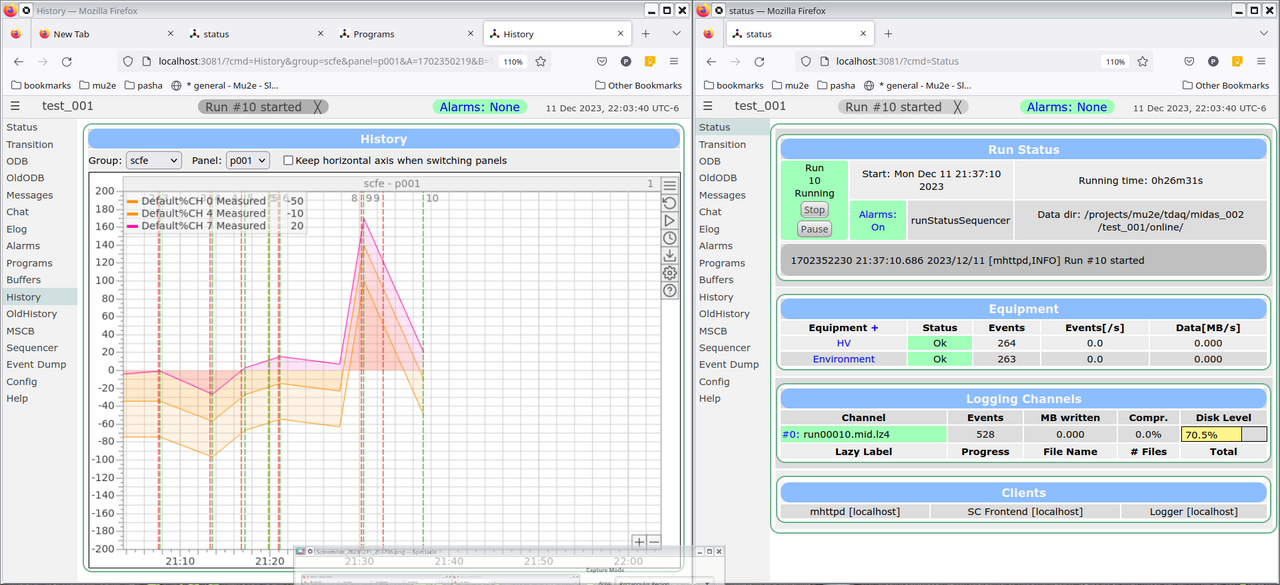

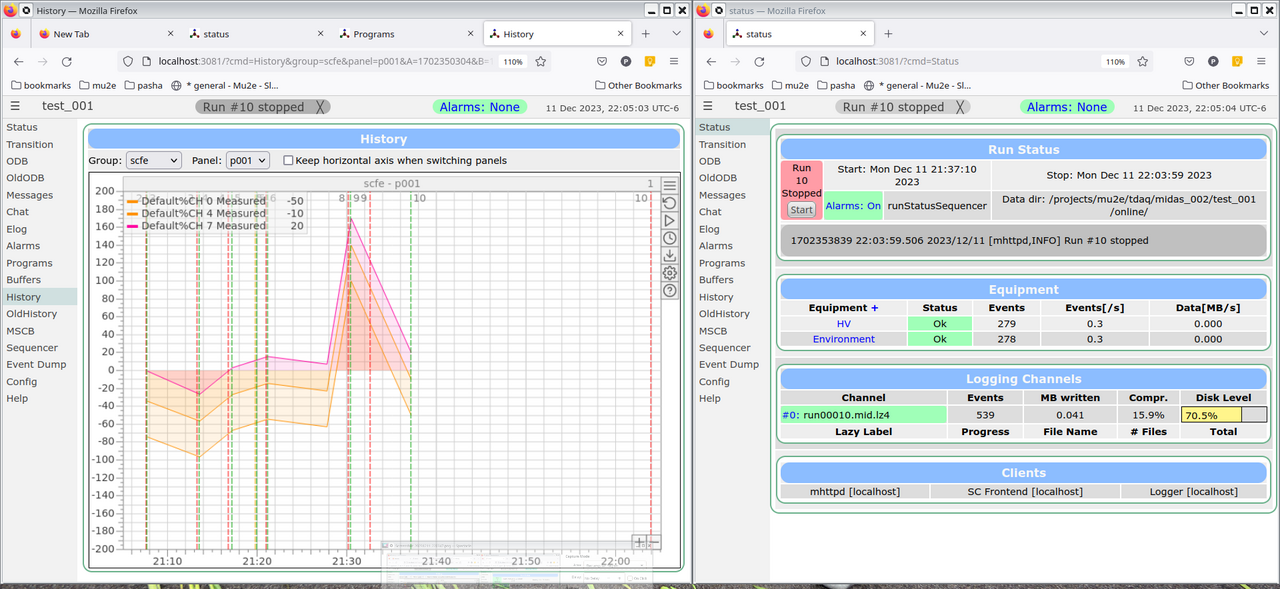

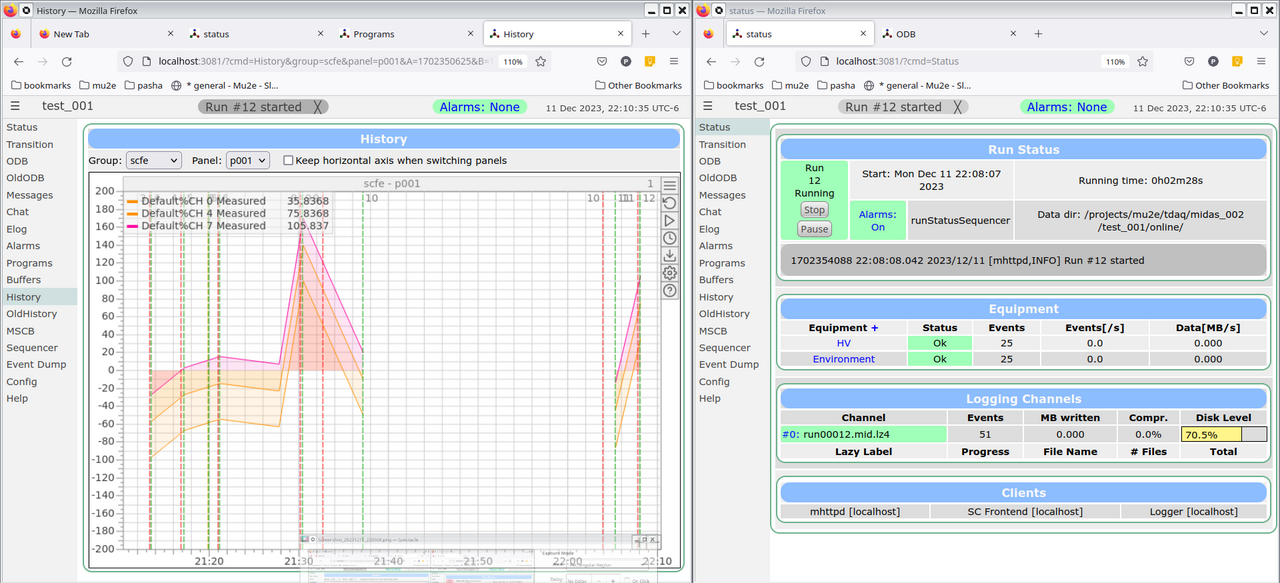

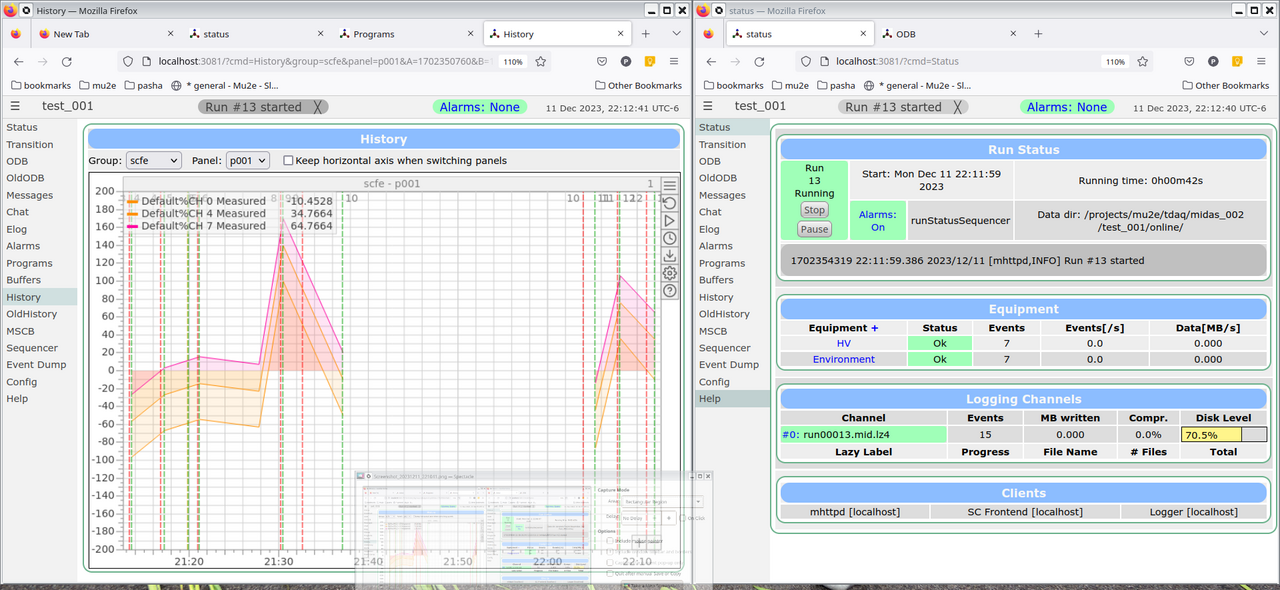

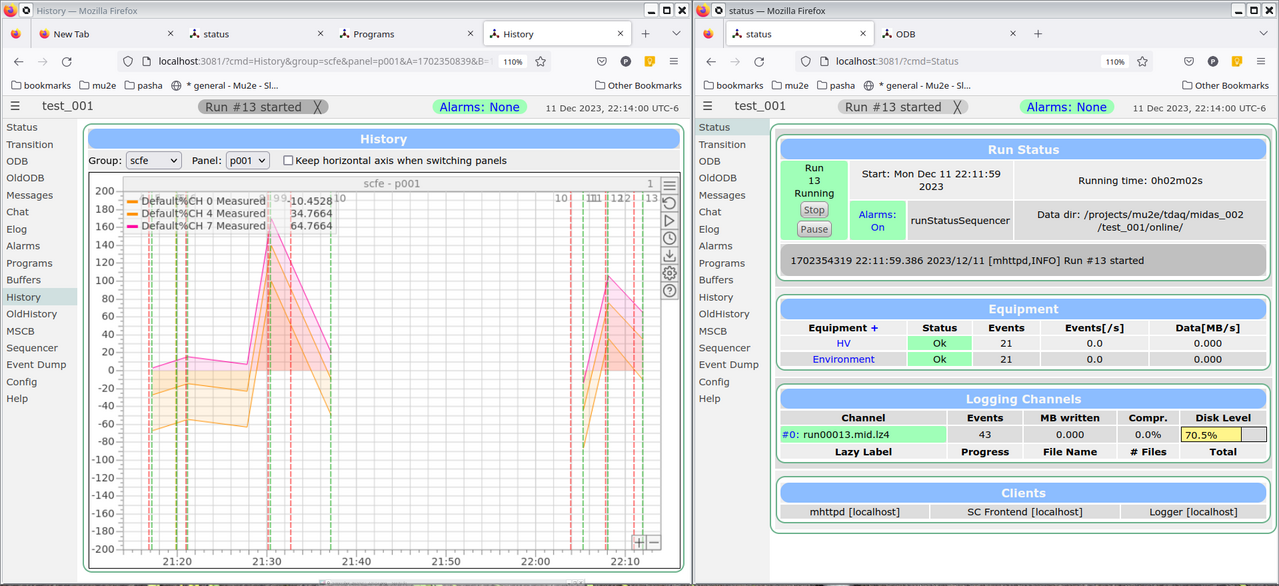

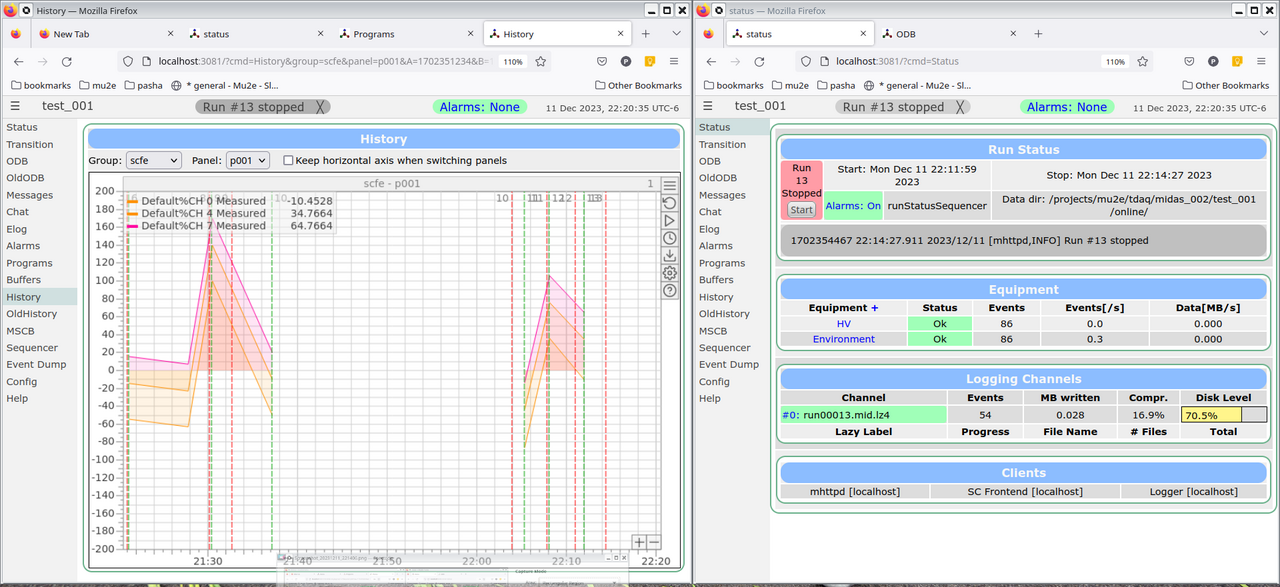

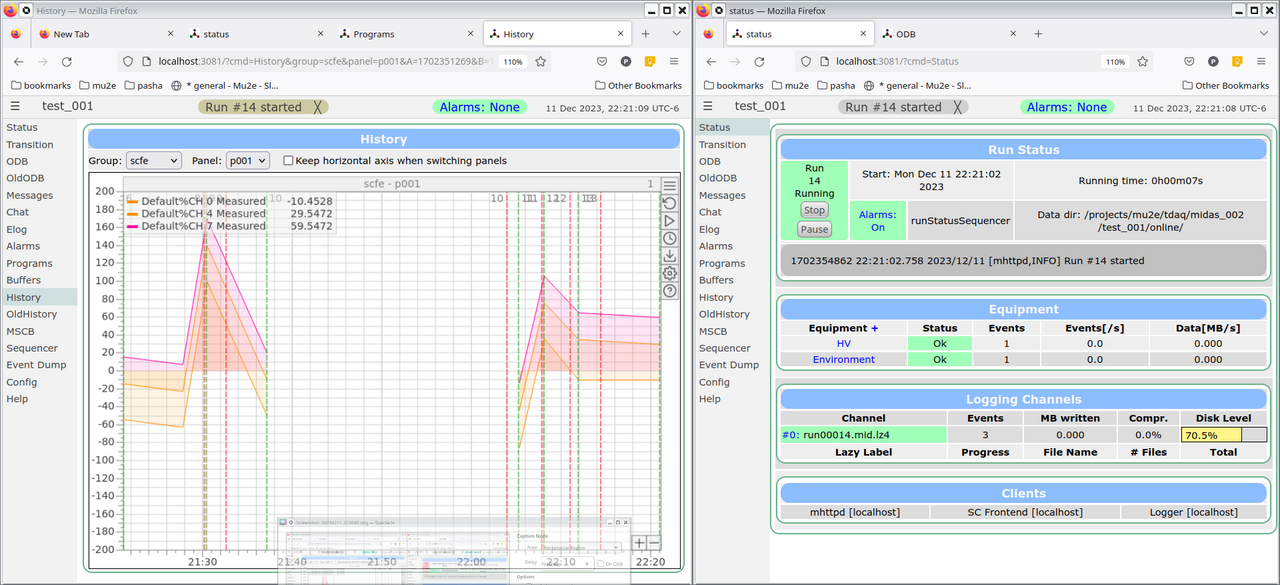

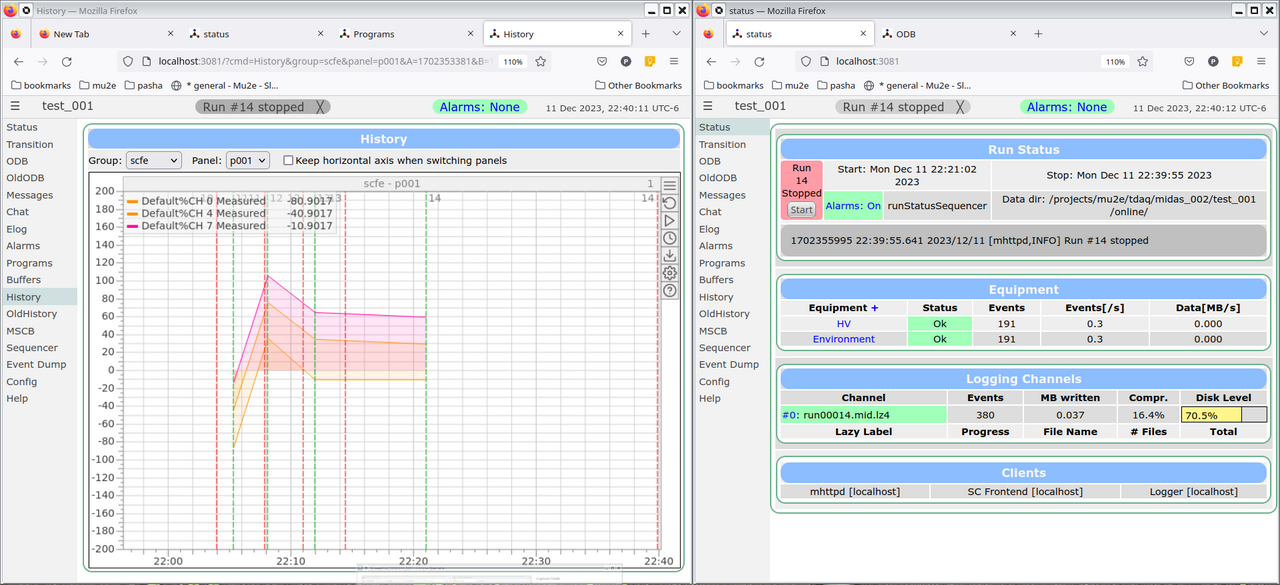

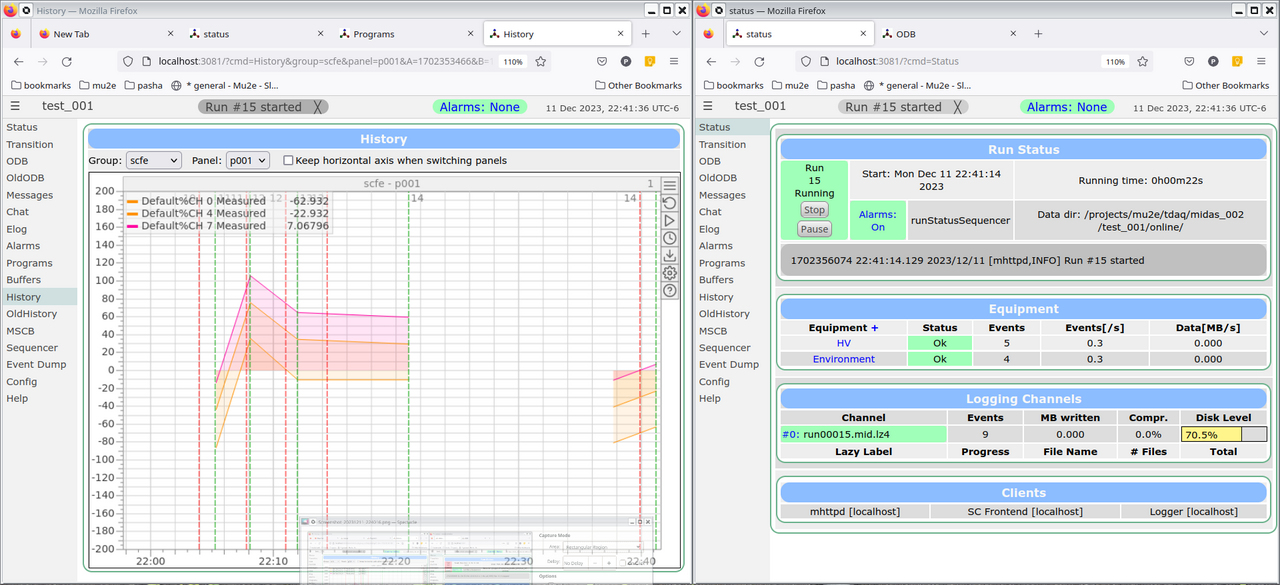

Pavel Murat | Forum | the logic of handling history variables ? | Dear MIDAS developers,

I'm trying to understand handling of the history (slow control) variables in MIDAS,

and it seems that the behavior I'm observing is somewhat counterintuitive.

Most likely, I just do not understand the implemented logic.

As it is rather difficult to report on the behavior of the interactive program,

I'll describe what I'm doing and illustrate the report with the series of attached

screenshots showing the history plots and the status of the run control at different

consecutive points in time.

Starting with the landscape:

- I'm running MIDAS, git commit=30a03c4c (the latest, as of today).

- I have built the midas/examples/slowcont frontend with the following modifications.

(the diffs are enclosed below):

1) the frequency of the history updates is increased from 60sec/10sec to 6sec/1sec

and, in the hope of having continuous updates, I replaced (RO_RUNNING | RO_TRANSITIONS)

with RO_ALWAYS.

2) for convenience of debugging, midas/drivers/nulldrv.cxx is replaced with its clone,

which instead of returning zeroes in each channel, generates a sine curve:

V(t) = 100*sin(pi*t/60)+10*channel

- an active channel in /Logger/History is chosen to be FILE

- /History/LoggerHistoryChannel is also set to FILE

- I'm running mlogger and modified, as described, 'scfe' frontend from midas/examples/slowcont

- the attached history plots include three (0,4 and 7) HV:MEASURED channels

Now, the observations:

1) the history plots are updated only when a new run starts, no matter how hard

I'm trying to update them by clicking on various buttons.

The attached screenshots show the timing sequence of the run control states

(with the times printed) and the corresponding history plots.

The "measured voltages" change only when the next run starts - the voltage graphs

break only at the times corresponding to the vertical green lines.

2) No matter for how long I wait within the run, the history updates are not happening.

3) if the time difference between the two run starts gets too large,

the plotted time dependence starts getting discontinuities

4) finally, if I switch the logging channel from FILE to MIDAS (activate the MIDAS

channel in /Logger/History and set /History/LoggerHistoryChannel to MIDAS),

the updates of the history plots simply stop.

MIDAS feels like a great DAQ framework, so I would appreciate any suggestion on

what I could be doing wrong. I'd also be happy to give a demo in real time

(via ZOOM/SKYPE etc).

-- much appreciate your time, thanks, regards, Pasha

------------------------------------------------------------------------------

diff --git a/examples/slowcont/scfe.cxx b/examples/slowcont/scfe.cxx

index 11f09042..c98d37e8 100644

--- a/examples/slowcont/scfe.cxx

+++ b/examples/slowcont/scfe.cxx

@@ -24,9 +24,10 @@

#include "mfe.h"

#include "class/hv.h"

#include "class/multi.h"

-#include "device/nulldev.h"

#include "bus/null.h"

+#include "nulldev.h"

+

/*-- Globals -------------------------------------------------------*/

/* The frontend name (client name) as seen by other MIDAS clients */

@@ -74,11 +75,11 @@ EQUIPMENT equipment[] = {

0, /* event source */

"FIXED", /* format */

TRUE, /* enabled */

- RO_RUNNING | RO_TRANSITIONS, /* read when running and on transitions */

- 60000, /* read every 60 sec */

+ RO_ALWAYS, /* read when running and on transitions */

+ 6000, /* read every 6 sec */

0, /* stop run after this event limit */

0, /* number of sub events */

- 10000, /* log history at most every ten seconds */

+ 1000, /* log history at most every one second */

"", "", ""} ,

cd_hv_read, /* readout routine */

cd_hv, /* class driver main routine */

@@ -93,8 +94,8 @@ EQUIPMENT equipment[] = {

0, /* event source */

"FIXED", /* format */

TRUE, /* enabled */

- RO_RUNNING | RO_TRANSITIONS, /* read when running and on transitions */

- 60000, /* read every 60 sec */

+ RO_ALWAYS, /* read when running and on transitions */

+ 6000, /* read every 6 sec */

0, /* stop run after this event limit */

0, /* number of sub events */

1, /* log history every event as often as it changes (max 1 Hz) */

------------------------------------------------------------------------------

[test_001]$ diff ../midas/examples/slowcont/nulldev.cxx ../midas/drivers/device/nulldev.cxx

13d12

< #include <math.h>

150,154c149,150

< if (channel < info->num_channels) {

< // *pvalue = info->array[channel];

< time_t t = time(NULL);;

< *pvalue = 100*sin(M_PI*t/60)+10*channel;

< }

---

> if (channel < info->num_channels)

> *pvalue = info->array[channel];

------------------------------------------------------------------------------ |

| Attachment 1: Screenshot_20231211_213608.png

|

|

| Attachment 2: Screenshot_20231211_213736.png

|

|

| Attachment 3: Screenshot_20231211_220347.png

|

|

| Attachment 4: Screenshot_20231211_220508.png

|

|

| Attachment 5: Screenshot_20231211_221041.png

|

|

| Attachment 6: Screenshot_20231211_221252.png

|

|

| Attachment 7: Screenshot_20231211_221406.png

|

|

| Attachment 8: Screenshot_20231211_222042.png

|

|

| Attachment 9: Screenshot_20231211_222114.png

|

|

| Attachment 10: Screenshot_20231211_224016.png

|

|

| Attachment 11: Screenshot_20231211_224141.png

|

|

|

2656

|

12 Dec 2023 |

Pavel Murat | Forum | the logic of handling history variables ? | Hi Stefan, thanks a lot for taking time to reproduce the issue!

Here comes the resolution, and of course, it was something deeply trivial :

the definition of the HV equipment in midas/examples/slowcont/scfe.cxx has

the history logging time in seconds, however the comment suggests milliseconds (see below),

and for a few days I believed the comment (:smile:)

Easy to fix.
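
To show the size of the mismatch: the readout period in that equipment definition is given in milliseconds, while the history logging time is in seconds, so the same number means very different intervals in the two slots. The helper below is a hypothetical illustration and not part of MIDAS.

```cpp
#include <cassert>
#include <string>

// Format a number of seconds as "Xh Ym Zs" to make the unit obvious.
std::string format_seconds(long seconds) {
    assert(seconds >= 0);
    long h = seconds / 3600;
    long m = (seconds % 3600) / 60;
    long s = seconds % 60;
    return std::to_string(h) + "h " + std::to_string(m) + "m " + std::to_string(s) + "s";
}
```

A history setting of 10000 read as seconds gives `format_seconds(10000)`, i.e. "2h 46m 40s" between history entries, instead of the ten seconds the comment promises.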

Also, I think that having a sine wave displayed by midas/examples/slowcont/scfe.cxx

would make this example even more helpful.

-- thanks again, regards, Pasha

--------------------------------------------------------------------------------------------------------

EQUIPMENT equipment[] = {

{"HV", /* equipment name */

{3, 0, /* event ID, trigger mask */

"SYSTEM", /* event buffer */

EQ_SLOW, /* equipment type */

0, /* event source */

"FIXED", /* format */

TRUE, /* enabled */

RO_RUNNING | RO_TRANSITIONS, /* read when running and on transitions */

60000, /* read every 60 sec */

0, /* stop run after this event limit */

0, /* number of sub events */

10000, /* log history at most every ten seconds */ // <------------ this is 10^4 seconds, not 10 seconds

"", "", ""} ,

cd_hv_read, /* readout routine */

cd_hv, /* class driver main routine */

hv_driver, /* device driver list */

NULL, /* init string */

},

https://bitbucket.org/tmidas/midas/src/7f0147eb7bc7395f262b3ae90dd0d2af0625af39/examples/slowcont/scfe.cxx#lines-81 |

|

2667

|

10 Jan 2024 |

Pavel Murat | Forum | slow control frontends - how much do they sleep and how often their drivers are called? | Dear all,

I have implemented a number of slow control frontends which are directed to update the

history once in every 10 sec, and they do just that.

I expected that such frontends would be spending most of the time sleeping and waking up

once in ten seconds to call their respective drivers and send the data to the server.

However I observe that each frontend process consumes almost 100% of a single core CPU time

and the frontend driver is called many times per second.

Is that the expected behavior ?

So far, I couldn't find the place in the system part of the frontend code (is that the right

place to look for?) which regulates the frequency of the frontend driver calls, so I'd greatly

appreciate if someone could point me to that place.
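
For reference, the behavior I expected corresponds to something like the sketch below: a loop that computes how long it may sleep until the next readout is due. This is only an illustration of the expectation with a hypothetical function name; it is not taken from the MIDAS frontend code and may not reflect how the frontend actually schedules the driver calls.

```cpp
#include <cassert>
#include <cstdint>

// Milliseconds a polling loop could sleep before the next readout is due.
// last_read_ms and now_ms are timestamps taken from the same clock.
std::uint32_t ms_until_next_read(std::uint32_t last_read_ms,
                                 std::uint32_t period_ms,
                                 std::uint32_t now_ms) {
    assert(period_ms > 0);
    std::uint32_t elapsed = now_ms - last_read_ms;  // unsigned, so wrap-around safe
    return elapsed >= period_ms ? 0 : period_ms - elapsed;
}
```

With a 10 sec period and the last readout 3 sec ago, the loop could sleep for another 7 sec instead of calling the driver many times per second.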

I'm using the following commit:

commit 30a03c4c develop origin/develop Make sure line numbers and sequence lines are aligned.

-- many thanks, regards, Pasha |

|