| ID | Date | Author | Topic | Subject |

|

1907

|

12 May 2020 |

Ruslan Podviianiuk | Forum | List of sequencer files | Hello,

We are going to implement a list of sequencer files to allow users to select one

of them. The name of this file will be transferred to

/ODB/Sequencer/State/Filename field of ODB.

Is it possible to get a list of Sequencer files from MIDAS? Is there a jrpc

command for this?

Thanks.

Best,

Ruslan |

|

1908

|

13 May 2020 |

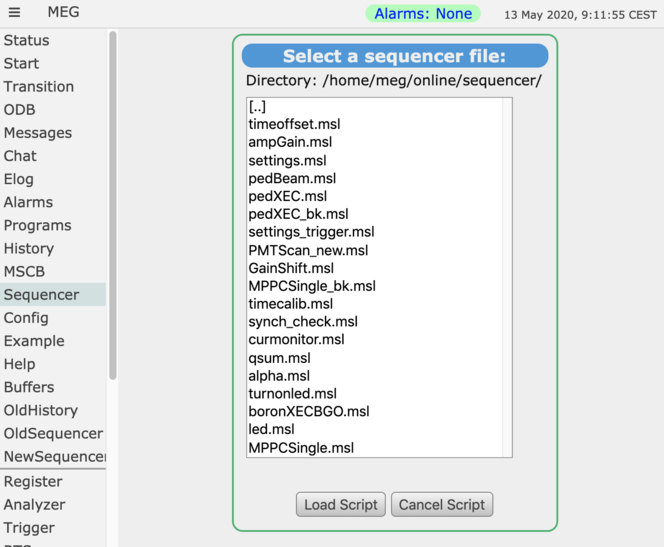

Stefan Ritt | Forum | List of sequencer files | If you load a file into the sequencer from the web interface, you get a list of all files in that directory.

This basically gives you a list of possible sequencer files. It's even more powerful, since you can

create subdirectories and thus group the sequencer files. Attached an example from our

experiment.

Stefan |

| Attachment 1: Screenshot_2020-05-13_at_9.11.55_.png

|

|

|

1909

|

18 May 2020 |

Ruslan Podviianiuk | Forum | List of sequencer files | > If you load a file into the sequencer from the web interface, you get a list of all files in that directory.

> This basically gives you a list of possible sequencer files. It's even more powerful, since you can

> create subdirectories and thus group the sequencer files. Attached an example from our

> experiment.

>

> Stefan

Dear Stefan,

Thank you for the explanation.

Ruslan |

|

1910

|

19 May 2020 |

Ruslan Podviianiuk | Forum | List of sequencer files | > If you load a file into the sequencer from the web interface, you get a list of all files in that directory.

> This basically gives you a list of possible sequencer files. It's even more powerful, since you can

> create subdirectories and thus group the sequencer files. Attached an example from our

> experiment.

>

> Stefan

Dear Stefan,

Could you please answer one more question:

We have a custom web page and are trying to get the list of files from it, so we need a jrpc command to show the list

on the custom page. Is there a jrpc command to get this file list?

Thanks, |

|

1912

|

20 May 2020 |

Konstantin Olchanski | Forum | List of sequencer files | >

> We have a custom webpage and trying to get list of files from the custom webpage and need jrpc command to show it

> in custom page. Is there a jrpc command to get this file list?

>

The RPC method used by the sequencer web pages is "seq_list_files". For how to use it, see resources/load_script.html.

To see the list of all RPC methods implemented by your mhttpd, see "help"->"json-rpc schema, text format".
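As a rough sketch of how a custom page might issue this call: the snippet below builds a JSON-RPC 2.0 request for "seq_list_files" and posts it to mhttpd. The helper names `buildRpcRequest` and `callRpc`, the endpoint URL passed in by the caller, and the contents of `params` are assumptions made for illustration. The parameters the method actually expects should be taken from the schema page or from resources/load_script.html.

```javascript
// Build a JSON-RPC 2.0 request object.
// "params" is left to the caller: what seq_list_files expects is NOT
// specified here, check the json-rpc schema page of your mhttpd.
function buildRpcRequest(method, params, id) {
   const request = { jsonrpc: "2.0", method: method, id: id };
   if (params !== undefined) {
      request.params = params; // JSON-RPC 2.0 allows params to be omitted
   }
   return request;
}

// Post the request and return the "result" member of the reply.
// "url" is the JSON-RPC endpoint of your mhttpd (assumption: supplied by the caller).
async function callRpc(url, method, params) {
   const response = await fetch(url, {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify(buildRpcRequest(method, params, Date.now()))
   });
   if (!response.ok) {
      throw new Error("HTTP error " + response.status);
   }
   const reply = await response.json();
   if (reply.error) {
      throw new Error("RPC error: " + JSON.stringify(reply.error));
   }
   return reply.result;
}
```

On a page that already loads mhttpd.js, the bundled `mjsonrpc_call()` helper is probably the simpler route, since it takes care of the endpoint for you.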

As a general explanation, so far we have successfully resisted the desire to turn mhttpd into a generic NFS file

server - if we automatically give all web pages access to all files accessible to the midas user account, it is easy

to lose control of system security (i.e. bad things will happen if web pages can read the ssh private keys ~/.ssh/id_rsa and

modify ~/.ssh/authorized_keys). Generally it is impossible to come up with a whitelist or blacklist of "secrets" that

need to be "hidden" from web pages. But we did implement methods to access files from specific subdirectory trees

defined in ODB which hopefully do not contain any "secrets".

K.O. |

|

1920

|

26 May 2020 |

Pintaudi Giorgio | Forum | API to read MIDAS format file | Eventually, I have settled for the SQLite format.

I could convert the MIDAS history files .hst to SQLite

database .sqlite3 using the utility mh2sql.

It worked out nicely, thank you for the advice.

However, as Konstantin predicted, I did notice some

database corruption when a couple of problematic .hst

files were read. I solved the issue by just deleting

those .hst files (I think they were empty anyway).

Now I am developing a piece of code to read the

database using the SOCI library and integrate it

into a TTree but this is not relevant for MIDAS I think.

Thank you again for the discussion. |

|

1930

|

04 Jun 2020 |

Hisataka YOSHIDA | Forum | Template of slow control frontend | I’m a beginner with MIDAS and am trying to develop a slow control front-end with the latest MIDAS.

I found scfe.cxx in the “example” code, but it is not enough of a reference for writing a front-end for my own devices,

because it contains only the nulldevice and null bus driver case...

(I did succeed in running the HV front-end for the ISEG MPod, because a device driver for it exists...)

Can I get some frontend examples such as simple TCP/IP and/or RS232 devices?

Ideally, I would like to have examples of both a frontend and a device driver.

(If any device driver included in the package is similar, please tell me.)

Thanks a lot. |

|

1931

|

04 Jun 2020 |

Pintaudi Giorgio | Forum | Template of slow control frontend | > I’m beginner of Midas, and trying to develop the slow control front-end with the latest Midas.

> I found the scfe.cxx in the “example”, but not enough to refer to write the front-end for my own devices

> because it contains only nulldevice and null bus driver case...

> (I could have succeeded to run the HV front-end for ISEG MPod, because there is the device driver...)

>

> Can I get some frontend examples such as simple TCP/IP and/or RS232 devices?

> Hopefully, I would like to have examples of frontend and device driver.

> (if any device driver which is included in the package is similar, please tell me.)

>

> Thanks a lot.

Dear Yoshida-san,

my name is Giorgio and I am a Ph.D. student working on the T2K experiment.

I had to write many MIDAS frontends recently, so I think that my code could be of some help to you.

As you might already know, the MIDAS slow control system is structured into three layers/levels.

- The highest layer is the "class" layer that directly interfaces with the user and the ODB. It is called

"class" layer because it refers to a class of devices (for example all the high voltage power supplies,

etc...). The idea is that in the same experiment you can have many different models of power supplies but

all of them can be controlled with a single class driver.

- Then there is the "device" layer that implements the functions specific to the particular device.

- Finally, there is the "BUS" layer that directly communicates with the device. The BUS can be Ethernet

(TCP/IP), Serial (RS-232 / RS-422 / RS-485), USB, etc ...

You can read more about the MIDAS slow control system here:

https://midas.triumf.ca/MidasWiki/index.php/Slow_Control_System

Anyway, you need to write code for all those layers. If you are lucky you can reuse some of the already

existing MIDAS code. Keep in mind that all the examples that you find in the MIDAS documentation and the

MIDAS source code are written in C (even if it is then compiled with g++). But you can write a frontend in

C++ without any problem, so choose whichever language you are most familiar with.

I am attaching an archive with some sample code directly taken from our experiment. It is just a small

fraction of the code and is not meant to be compilable. The code is released under the GPLv3 license, so you can use

it as you please but if you do, please cite my name and the WAGASCI-T2K experiment somewhere visible.

In the archive, you can find two example frontends with the respective drivers. The "Triggers" frontend is

written in C++ (or C+ if you consider that the mfe.cxx API is very C-like). The "WaterLevel" frontend is

written in plain C. The "Triggers" frontend controls our trigger board called CCC and the "WaterLevel"

frontend controls our water level sensors called PicoLog 1012. They share a custom implementation of the

TCP/IP bus. Anyway, this is not relevant to you. You may just want to take a look at the code structure.

Finally, recently there have been some very interesting developments regarding the ODB C++ API. I would

definitely take a look at that. I wish I had that when I was developing these frontends.

Good luck

--

Pintaudi Giorgio, Ph.D. student

Neutrino and Particle Physics Minamino Laboratory

Faculty of Science and Engineering, Yokohama National University

giorgio.pintaudi.kx@ynu.jp

TEL +81(0)45-339-4182 |

| Attachment 1: MIDAS_frontend_sample.zip

|

|

1932

|

04 Jun 2020 |

Stefan Ritt | Forum | Template of slow control frontend | > I’m beginner of Midas, and trying to develop the slow control front-end with the latest Midas.

> I found the scfe.cxx in the “example”, but not enough to refer to write the front-end for my own devices

> because it contains only nulldevice and null bus driver case...

> (I could have succeeded to run the HV front-end for ISEG MPod, because there is the device driver...)

>

> Can I get some frontend examples such as simple TCP/IP and/or RS232 devices?

> Hopefully, I would like to have examples of frontend and device driver.

> (if any device driver which is included in the package is similar, please tell me.)

Have you checked the documentation?

https://midas.triumf.ca/MidasWiki/index.php/Slow_Control_System

Basically you have to replace the nulldevice driver with a "real" driver. You find all existing drivers under

midas/drivers/device. If your favourite is not there, you have to write it. Use one which is close to the one

you need and modify it.

Best,

Stefan |

|

1933

|

04 Jun 2020 |

Hisataka YOSHIDA | Forum | Template of slow control frontend | Dear Stefan,

Thank you for your quick reply.

> Have you checked the documentation?

>

> https://midas.triumf.ca/MidasWiki/index.php/Slow_Control_System

Yes, I have read the wiki, but it is not easy to figure out how to handle my individual case.

> Basically you have to replace the nulldevice driver with a "real" driver. You find all existing drivers under

> midas/drivers/device. If your favourite is not there, you have to write it. Use one which is close to the one

> you need and modify it.

Okay, I will try to write drivers for my own devices using existing drivers.

(maybe I can find some device drivers which use TCP/IP or RS232)

Best regards,

Hisataka Yoshida |

|

1934

|

04 Jun 2020 |

Hisataka YOSHIDA | Forum | Template of slow control frontend | Dear Giorgio,

Thank you very much for your kind and quick reply!

I appreciate you giving me such a nice explanation, your experience, and great sample code (this is what I wanted!).

It is all useful to me. I will try to write my frontend code using your gift.

Thank you again!

Best regards,

Hisataka Yoshida |

|

1942

|

10 Jun 2020 |

Ivo Schulthess | Forum | slow-control equipment crashes when running multi-threaded on a remote machine | Dear all

To reduce the time needed by Midas between runs, we want to change some of our periodic equipment to multi-threaded slow-control equipment. To do that I wanted to start from

the slowcont with the multi/hv class driver and the nulldev device driver and null bus driver. The example runs fine as it is on the local midas machine and also on remote

machines. When adding the DF_MULTITHREAD flag to the device driver list, it does not run anymore on remote machines but aborts with the following assertion:

scfe: /home/neutron/packages/midas/src/midas.cxx:1569: INT cm_get_path(char*, int): Assertion `_path_name.length() > 0' failed.

Running the frontend under GDB and setting a breakpoint at the exit gives the following:

(gdb) where

#0 0x00007ffff68d599f in raise () from /lib64/libc.so.6

#1 0x00007ffff68bfcf5 in abort () from /lib64/libc.so.6

#2 0x00007ffff68bfbc9 in __assert_fail_base.cold.0 () from /lib64/libc.so.6

#3 0x00007ffff68cde56 in __assert_fail () from /lib64/libc.so.6

#4 0x000000000041efbf in cm_get_path (path=0x7fffffffd060 "P\373g", path_size=256)

at /home/neutron/packages/midas/src/midas.cxx:1563

#5 cm_get_path (path=path@entry=0x7fffffffd060 "P\373g", path_size=path_size@entry=256)

at /home/neutron/packages/midas/src/midas.cxx:1563

#6 0x0000000000453dd8 in ss_semaphore_create (name=name@entry=0x7fffffffd2c0 "DD_Input",

semaphore_handle=semaphore_handle@entry=0x67f700 <multi_driver+96>)

at /home/neutron/packages/midas/src/system.cxx:2340

#7 0x0000000000451d25 in device_driver (device_drv=0x67f6a0 <multi_driver>, cmd=<optimized out>)

at /home/neutron/packages/midas/src/device_driver.cxx:155

#8 0x00000000004175f8 in multi_init(eqpmnt*) ()

#9 0x00000000004185c8 in cd_multi(int, eqpmnt*) ()

#10 0x000000000041c20c in initialize_equipment () at /home/neutron/packages/midas/src/mfe.cxx:827

#11 0x000000000040da60 in main (argc=1, argv=0x7fffffffda48)

at /home/neutron/packages/midas/src/mfe.cxx:2757

I also tried to use the generic class driver which results in the same. I am not sure if this is a problem of the multi-threaded frontend running on a remote machine or is it

something of our system which is not properly set up. Anyway I am running out of ideas how to solve this and would appreciate any input.

Thanks in advance,

Ivo |

|

1944

|

10 Jun 2020 |

Konstantin Olchanski | Forum | slow-control equipment crashes when running multi-threaded on a remote machine | Yes, it is supposed to crash. On a remote frontend, cm_get_path() cannot be used

(we are on a different computer, the filesystems may not be the same!); the path is actually not set, and

a trap is triggered if something tries to use it (this is the crash you see).

The caller to cm_get_path() is a MIDAS semaphore function.

And I think there is a mistake here. It is unusual for the driver framework to use a semaphore. For multithreaded

protection inside the frontend, a mutex would normally be used. (and mutexes do not use cm_get_path(), so

all is good). But if a semaphore is used, then all frontends running on the same computer become

serialized across the locked section. This is the right thing to do if you have multiple frontends

sharing the same hardware, e.g. a /dev/ttyUSB serial line, but why would a generic framework function

do this? I am not sure; I will have to take a look at why there is a semaphore and what it is locking/protecting.

(In midas, semaphores are normally used to protect global memory, such as ODB, or global resources, such as alarms,

against concurrent access by multiple programs, but of course that does not work for remote frontends,

they are on a different computer! semaphores only work locally, do not work across the network!)

K.O.

>

> scfe: /home/neutron/packages/midas/src/midas.cxx:1569: INT cm_get_path(char*, int): Assertion `_path_name.length() > 0' failed.

>

> Running the frontend with GDB and set a breakpoint at the exit leads to the following:

>

> (gdb) where

> #0 0x00007ffff68d599f in raise () from /lib64/libc.so.6

> #1 0x00007ffff68bfcf5 in abort () from /lib64/libc.so.6

> #2 0x00007ffff68bfbc9 in __assert_fail_base.cold.0 () from /lib64/libc.so.6

> #3 0x00007ffff68cde56 in __assert_fail () from /lib64/libc.so.6

> #4 0x000000000041efbf in cm_get_path (path=0x7fffffffd060 "P\373g", path_size=256)

> at /home/neutron/packages/midas/src/midas.cxx:1563

> #5 cm_get_path (path=path@entry=0x7fffffffd060 "P\373g", path_size=path_size@entry=256)

> at /home/neutron/packages/midas/src/midas.cxx:1563

> #6 0x0000000000453dd8 in ss_semaphore_create (name=name@entry=0x7fffffffd2c0 "DD_Input",

> semaphore_handle=semaphore_handle@entry=0x67f700 <multi_driver+96>)

> at /home/neutron/packages/midas/src/system.cxx:2340

> #7 0x0000000000451d25 in device_driver (device_drv=0x67f6a0 <multi_driver>, cmd=<optimized out>)

> at /home/neutron/packages/midas/src/device_driver.cxx:155

> #8 0x00000000004175f8 in multi_init(eqpmnt*) ()

> #9 0x00000000004185c8 in cd_multi(int, eqpmnt*) ()

> #10 0x000000000041c20c in initialize_equipment () at /home/neutron/packages/midas/src/mfe.cxx:827

> #11 0x000000000040da60 in main (argc=1, argv=0x7fffffffda48)

> at /home/neutron/packages/midas/src/mfe.cxx:2757

>

> I also tried to use the generic class driver which results in the same. I am not sure if this is a problem of the multi-threaded frontend running on a remote machine or is it

> something of our system which is not properly set up. Anyway I am running out of ideas how to solve this and would appreciate any input.

>

> Thanks in advance,

> Ivo |

|

1945

|

10 Jun 2020 |

Stefan Ritt | Forum | slow-control equipment crashes when running multi-threaded on a remote machine | Few comments:

- As KO writes, we might need semaphores also on a remote front-end, in case several programs share the same hardware. So it should work and cm_get_path() should not just exit.

- When I wrote the multi-threaded device drivers, I did use semaphores instead of mutexes, but I forgot why. Might be that midas semaphores have a timeout and mutexes not, or

something along those lines.

- I do need either semaphores or mutexes since in a multi-threaded slow-control front-end (too many dashes...) several threads have to access an internal data exchange buffer, which

needs protection for multi-threaded environments.

So we can now either fix cm_get_path() or replace all semaphores with mutexes in midas/src/device_driver.cxx. I have kind of a feeling that we should do both. And what about

switching to c++ std::mutex instead of pthread mutexes?

Stefan |

|

1946

|

12 Jun 2020 |

Ivo Schulthess | Forum | slow-control equipment crashes when running multi-threaded on a remote machine | Thank you both once again for the very fast answers. I tested the example on the local machine and it works perfectly fine. In the meantime I also created two new drivers for our devices

and everything works with them, the improvement in time is significant and I will create drivers for all our devices where possible. If they are in a working state I can also provide

them to add to the Midas drivers. Of course if it would be possible to run the front-end also on our remote machines this would be even better. I am not experienced in any multi-threaded

programming but if I can provide any help or input, please let me know.

Have a great weekend,

Ivo |

|

1953

|

18 Jun 2020 |

Ruslan Podviianiuk | Forum | ODB key length | Hello,

I have a question about length of the name of ODB key.

Is it possible to create an ODB key containing more than 32 characters?

Thanks.

Ruslan |

|

1954

|

18 Jun 2020 |

Stefan Ritt | Forum | ODB key length | No. But if you need more than 32 characters, you are doing something wrong. The

information you want to put into the ODB key name should probably be stored in

another string key or so.

Stefan

> Hello,

>

> I have a question about length of the name of ODB key.

> Is it possible to create an ODB key containing more than 32 characters?

>

> Thanks.

> Ruslan |

|

1984

|

21 Aug 2020 |

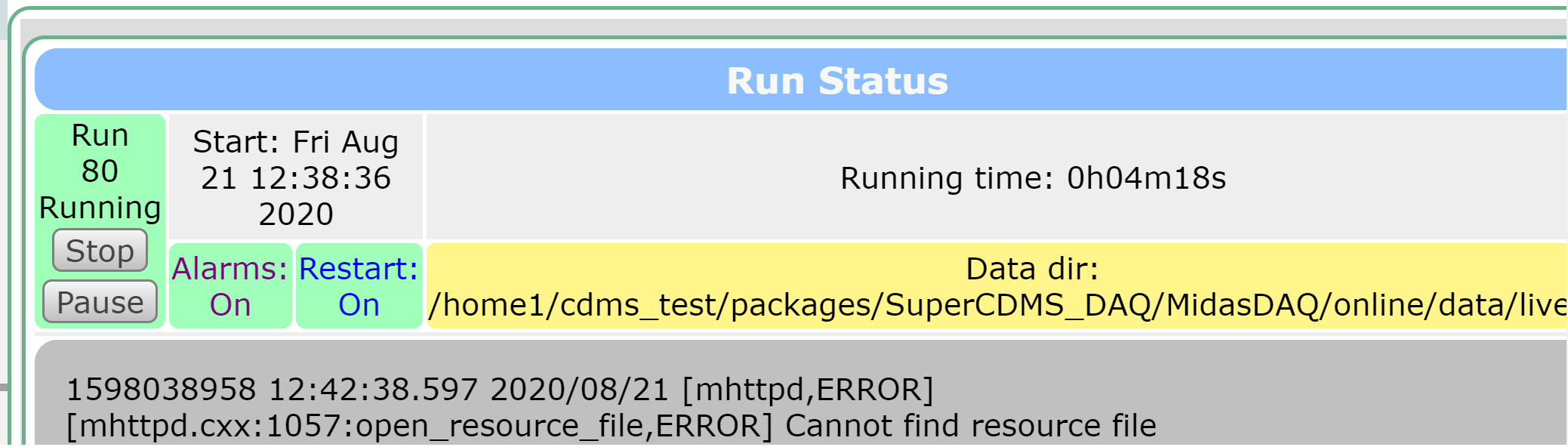

Ruslan Podviianiuk | Forum | time information | Hello,

I have a few questions about time information:

1. Is it possible to get "Running time" using, for example, jsonrpc? (please see

the attached file)

2. Is it possible to configure "Start time" and "Stop time" with time zone? For

example when I start a new run, value of "Start time" key is automatically changed

to "Fri Aug 21 12:38:36 2020" without time zone.

Thank you. |

| Attachment 1: Running_time.png

|

|

|

1985

|

24 Aug 2020 |

Stefan Ritt | Forum | time information |

> 1. Is it possible to get "Running time" using, for example, jsonrpc? (please see

> the attached file)

You have in the ODB "/Runinfo/Start time binary" which is measured in seconds since

1970. By subtracting this from the current time, you get the running time.
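A minimal sketch of that subtraction for a custom page, assuming the value of "/Runinfo/Start time binary" has already been read from the ODB (for example through the JSON-RPC interface). The function names `runningTimeSeconds` and `formatDuration` are made up for this illustration.

```javascript
// Running time in seconds: current time minus the ODB start time.
// startTimeBinary is in seconds since 1970; nowMs is in milliseconds
// (defaults to the current time) because that is what Date.now() returns.
function runningTimeSeconds(startTimeBinary, nowMs) {
   const now = nowMs === undefined ? Date.now() : nowMs;
   const nowSeconds = Math.floor(now / 1000);
   return Math.max(0, nowSeconds - startTimeBinary);
}

// Format a number of seconds as h:mm:ss for display.
function formatDuration(totalSeconds) {
   const pad = (n) => String(n).padStart(2, "0");
   const h = Math.floor(totalSeconds / 3600);
   const m = Math.floor((totalSeconds % 3600) / 60);
   const s = totalSeconds % 60;
   return h + ":" + pad(m) + ":" + pad(s);
}
```

This only makes sense while a run is in progress. For a stopped run one would presumably subtract the start time from "Stop time binary" instead.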

> 2. Is it possible to configure "Start time" and "Stop time" with time zone? For

> example when I start a new run, value of "Start time" key is automatically changed

> to "Fri Aug 21 12:38:36 2020" without time zone.

"Start time binary" and "Stop time binary" are in seconds since 1970 in UTC, so no

time zone involved there. The ASCII versions of the start/stop time are derived from

the binary time using the server's local time zone. If you want to display them in a

different time zone, you have to create a custom page and convert it to another time

zone using JavaScript like

var d = new Date(start_time_binary * 1000); // Date() expects milliseconds, the ODB value is in seconds
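A slightly fuller sketch of such a conversion, assuming the binary time has already been read from the ODB. JavaScript's `Date` counts milliseconds, so the ODB value (seconds) is multiplied by 1000. The function names `toDate` and `formatRunTime`, the "en-GB" locale and the choice of displayed fields are assumptions for this example. The time zone is any IANA zone name known to the browser.

```javascript
// Convert an ODB "... time binary" value (seconds since 1970, UTC)
// into a JavaScript Date (which counts milliseconds).
function toDate(timeBinary) {
   return new Date(timeBinary * 1000);
}

// Format the time in a chosen time zone, including the zone name,
// so the displayed string is unambiguous.
function formatRunTime(timeBinary, timeZone) {
   const formatter = new Intl.DateTimeFormat("en-GB", {
      timeZone: timeZone,
      year: "numeric",
      month: "short",
      day: "2-digit",
      hour: "2-digit",
      minute: "2-digit",
      second: "2-digit",
      hourCycle: "h23",
      timeZoneName: "short"
   });
   return formatter.format(toDate(timeBinary));
}

// Usage (zone name chosen only as an example):
// formatRunTime(startTimeBinary, "Asia/Tokyo");
```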

Stefan |

|

1986

|

24 Aug 2020 |

Konstantin Olchanski | Forum | History plot problems for frontend with multiple indicies | This turned out to be a tricky problem. I am adding a warning about it in mlogger. This should go into

midas-2020-07. Closing bug #193. K.O.

> We should fix this for midas-2019-10.

>

> https://bitbucket.org/tmidas/midas/issues/193/confusion-in-history-event-ids

>

> K.O.

>

>

>

>

>

> > Hi Konstantin,

> >

> > > > [local:e666:S]History>ls -l /History/Events

> > > > Key name Type #Val Size Last Opn Mode Value

> > > > ---------------------------------------------------------------------------

> > > > 1 STRING 1 10 2m 0 RWD FeDummy02

> > > > 0 STRING 1 16 2m 0 RWD Run transitions

> > >

> > > Something is very broken. There should be more entries here, at least

> > > there should be entries for "FeDummy01" and usually there is also an entry

> > > for "FeDummy" because one invariably runs fedummy without "-i" at least once.

> >

> > This is a fresh experiment that I started just to test this issue, that is why there are not many

> > entries in /History/Events. I agree though that we should expect to see a FeDummy01 entry.

> >

> > > The fact that changing from "midas" storage to "file" storage makes no difference

> > > also indicates that something is very broken.

> > >

> > > I want to debug this.

> > >

> > > Since you tried the "file" storage, can you send me the output of "ls -l mhf*.dat" in the directory

> > > with the history files? (it should have the "*.hst" files from the "midas" storage and "mhf*.dat" files

> > > from the "file" storage.)

> >

> > When I started this experiment yesterday(?) I disabled the Midas history when I enabled the file

> > history. Just now I reenabled the Midas history, so they are currently both active.

> >

> > % ls -l work/online/{*.hst,mhf*.dat}

> > -rw-r--r-- 1 hastings hastings 14996 Sep 17 10:21 work/online/190917.hst

> > -rw-r--r-- 1 hastings hastings 3292 Sep 18 16:29 work/online/190918.hst

> > -rw-r--r-- 1 hastings hastings 867288 Sep 18 16:29 work/online/mhf_1568683062_20190917_fedummy01.dat

> > -rw-r--r-- 1 hastings hastings 867288 Sep 18 16:29 work/online/mhf_1568683062_20190917_fedummy02.dat

> > -rw-r--r-- 1 hastings hastings 166 Sep 17 10:17 work/online/mhf_1568683062_20190917_run_transitions.dat

> >

> > And again, just as a sanity check:

> >

> > % odbedit -c 'ls -l /History/Events'

> > Key name Type #Val Size Last Opn Mode Value

> > ---------------------------------------------------------------------------

> > 1 STRING 1 10 1m 0 RWD FeDummy02

> > 0 STRING 1 16 1m 0 RWD Run transitions

> >

> > Regards,

> >

> > Nick. |

|