27 Apr 2023, Konstantin Olchanski, Forum, Problem with running midas odbxx frontends on a remote machine using the -h option

> H

> /home/mu3e/midas/include/odbxx.h:1102: Wrong key type in XML file

> Stack trace:

> 1 0x00000000000042D828 (null) + 4380712

> 2 0x00000000000048ED4D midas::odb::odb_from_xml(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&) + 605

> 3 0x0000000000004999BD midas::odb::odb(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&) + 317

> 4 0x000000000000495383 midas::odb::read_key(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&) + 1459

> 5 0x0000000000004971E3 midas::odb::connect(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, bool, bool) + 259

> 6 0x000000000000497636 midas::odb::connect(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, bool, bool) + 502

> 7 0x00000000000049883B midas::odb::connect_and_fix_structure(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >) + 171

> 8 0x0000000000004385EF setup_odb() + 8351

> 9 0x00000000000043B2E6 frontend_init() + 22

> 10 0x000000000000433304 main + 1540

> 11 0x0000007F8C6FE3724D __libc_start_main + 239

> 12 0x000000000000433F7A _start + 42

>

> Aborted (core dumped)

>

>

> We have the same problem for all our frontends. When we want to start them locally they work. Starting them locally with ./frontend -h localhost also reproduces the error above.

>

> The error can also be reproduced with the odbxx_test.cxx example in the midas repo by replacing line 22 in midas/examples/odbxx/odbxx_test.cxx (cm_connect_experiment(NULL, NULL, "test", NULL);) with cm_connect_experiment("localhost", "Mu3e", "test", NULL); (Put the name of the experiment instead of "Mu3e")

>

> Running odbxx_test locally then gives us the same error as our other frontends.

>

> Thanks in advance,

> Martin

27 Apr 2023, Konstantin Olchanski, Forum, Problem with running midas odbxx frontends on a remote machine using the -h option

Looks like your MIDAS is built without debug information (-O2 -g), the stack trace does not have file names and line numbers. Please rebuild with debug information and report the stack trace. Thanks. K.O.

> Connect to experiment Mu3e on host 10.32.113.210...

> OK

> Init hardware...

> terminate called after throwing an instance of 'mexception'

> what():

> /home/mu3e/midas/include/odbxx.h:1102: Wrong key type in XML file

> Stack trace:

> 1 0x00000000000042D828 (null) + 4380712

> 2 0x00000000000048ED4D midas::odb::odb_from_xml(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&) + 605

> 3 0x0000000000004999BD midas::odb::odb(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&) + 317

> 4 0x000000000000495383 midas::odb::read_key(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&) + 1459

> 5 0x0000000000004971E3 midas::odb::connect(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, bool, bool) + 259

> 6 0x000000000000497636 midas::odb::connect(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, bool, bool) + 502

> 7 0x00000000000049883B midas::odb::connect_and_fix_structure(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >) + 171

> 8 0x0000000000004385EF setup_odb() + 8351

> 9 0x00000000000043B2E6 frontend_init() + 22

> 10 0x000000000000433304 main + 1540

> 11 0x0000007F8C6FE3724D __libc_start_main + 239

> 12 0x000000000000433F7A _start + 42

>

> Aborted (core dumped)

K.O.

28 Apr 2023, Martin Mueller, Forum, Problem with running midas odbxx frontends on a remote machine using the -h option

> Looks like your MIDAS is built without debug information (-O2 -g), the stack trace does not have file names and line numbers. Please rebuild with debug information and report the stack trace. Thanks. K.O.

>

> > Connect to experiment Mu3e on host 10.32.113.210...

> > OK

> > Init hardware...

> > terminate called after throwing an instance of 'mexception'

> > what():

> > /home/mu3e/midas/include/odbxx.h:1102: Wrong key type in XML file

> > Stack trace:

> > 1 0x00000000000042D828 (null) + 4380712

> > 2 0x00000000000048ED4D midas::odb::odb_from_xml(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&) + 605

> > 3 0x0000000000004999BD midas::odb::odb(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&) + 317

> > 4 0x000000000000495383 midas::odb::read_key(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&) + 1459

> > 5 0x0000000000004971E3 midas::odb::connect(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, bool, bool) + 259

> > 6 0x000000000000497636 midas::odb::connect(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, bool, bool) + 502

> > 7 0x00000000000049883B midas::odb::connect_and_fix_structure(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >) + 171

> > 8 0x0000000000004385EF setup_odb() + 8351

> > 9 0x00000000000043B2E6 frontend_init() + 22

> > 10 0x000000000000433304 main + 1540

> > 11 0x0000007F8C6FE3724D __libc_start_main + 239

> > 12 0x000000000000433F7A _start + 42

> >

> > Aborted (core dumped)

>

> K.O.

As I said, we can easily reproduce this with midas/examples/odbxx/odbxx_test.cxx (with cm_connect_experiment changed to "localhost").

Stack trace of odbxx_test with line numbers:

Set ODB key "/Test/Settings/String Array 10[0...9]" = ["","","","","","","","","",""]

Created ODB key "/Test/Settings/Large String Array 10"

Set ODB key "/Test/Settings/Large String Array 10[0...9]" = ["","","","","","","","","",""]

[test,ERROR] [system.cxx:5104:recv_tcp2,ERROR] unexpected connection closure

[test,ERROR] [system.cxx:5158:ss_recv_net_command,ERROR] error receiving network command header, see messages

[test,ERROR] [midas.cxx:13900:rpc_call,ERROR] routine "db_copy_xml": error, ss_recv_net_command() status 411, program abort

Program received signal SIGABRT, Aborted.

0x00007ffff6665cdb in raise () from /lib64/libc.so.6

Missing separate debuginfos, use: zypper install libgcc_s1-debuginfo-11.3.0+git1637-150000.1.9.1.x86_64 libstdc++6-debuginfo-11.2.1+git610-1.3.9.x86_64 libz1-debuginfo-1.2.11-3.24.1.x86_64

(gdb) bt

#0 0x00007ffff6665cdb in raise () from /lib64/libc.so.6

#1 0x00007ffff6667375 in abort () from /lib64/libc.so.6

#2 0x0000000000431bba in rpc_call (routine_id=11249) at /home/labor/midas/src/midas.cxx:13904

#3 0x0000000000460c4e in db_copy_xml (hDB=1, hKey=1009608, buffer=0x7ffff7e9c010 "", buffer_size=0x7fffffffadbc, header=false) at /home/labor/midas/src/odb.cxx:8994

#4 0x000000000046fc4c in midas::odb::odb_from_xml (this=0x7fffffffb3f0, str=...) at /home/labor/midas/src/odbxx.cxx:133

#5 0x000000000040b3d9 in midas::odb::odb (this=0x7fffffffb3f0, str=...) at /home/labor/midas/include/odbxx.h:605

#6 0x000000000040b655 in midas::odb::odb (this=0x7fffffffb3f0, s=0x4a465a "/Test/Settings") at /home/labor/midas/include/odbxx.h:629

#7 0x0000000000407bba in main () at /home/labor/midas/examples/odbxx/odbxx_test.cxx:56

(gdb)

28 Apr 2023, Konstantin Olchanski, Forum, Problem with running midas odbxx frontends on a remote machine using the -h option

> As i said we can easily reproduce this with midas/examples/odbxx/odbxx_test.cpp (with cm_connect_experiment changed to "localhost")

> [test,ERROR] [system.cxx:5104:recv_tcp2,ERROR] unexpected connection closure

> [test,ERROR] [system.cxx:5158:ss_recv_net_command,ERROR] error receiving network command header, see messages

> [test,ERROR] [midas.cxx:13900:rpc_call,ERROR] routine "db_copy_xml": error, ss_recv_net_command() status 411, program abort

ok, cool. looks like we crashed the mserver. either run mserver attached to gdb or enable mserver core dumps; we need its stack trace.

the correct stack trace should be rooted in the handler for db_copy_xml.

but most likely odbxx is asking for more data than can be returned through the MIDAS RPC.

what is the ODB key passed to db_copy_xml() and how much data is in ODB at that key? (odbedit "du", right?).
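A client-side "unexpected connection closure" while the main mserver keeps running is what one would expect if the per-connection server process dies. The POSIX sketch below models that with a socket pair and fork(); it assumes, without checking the MIDAS sources, that recv_tcp2 reports a zero-byte recv() as a closed connection. The function name `recv_after_server_child_dies` is hypothetical.

```cpp
#include <csignal>
#include <sys/socket.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

// Simulates a client talking to a forked per-connection server that dies
// before replying. Returns what recv() gives the client (0 means the peer
// closed the stream) and stores the signal that killed the child.
ssize_t recv_after_server_child_dies(int* child_signal) {
    int fds[2];
    if (socketpair(AF_UNIX, SOCK_STREAM, 0, fds) != 0) return -1;

    pid_t pid = fork();
    if (pid < 0) return -1;
    if (pid == 0) {
        // Child: stands in for the forked server. It holds fds[1] and is
        // killed abruptly; SIGKILL is used so the demo never writes a core file.
        close(fds[0]);
        std::raise(SIGKILL);
        _exit(0);  // not reached
    }

    // Parent: stands in for the client. Drop our copy of the server end so
    // that only the child keeps it open.
    close(fds[1]);
    char buf[16];
    ssize_t n = recv(fds[0], buf, sizeof(buf), 0);  // returns once the child is gone
    close(fds[0]);

    int status = 0;
    waitpid(pid, &status, 0);
    *child_signal = WIFSIGNALED(status) ? WTERMSIG(status) : 0;
    return n;  // the calling process is still alive, like the main mserver
}
```

Calling it from a test program should show recv() returning 0 and the child killed by SIGKILL while the caller carries on. SIGKILL is chosen only so the demo never leaves a core file behind.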

K.O.

28 Apr 2023, Martin Mueller, Forum, Problem with running midas odbxx frontends on a remote machine using the -h option

> > As i said we can easily reproduce this with midas/examples/odbxx/odbxx_test.cpp (with cm_connect_experiment changed to "localhost")

> > [test,ERROR] [system.cxx:5104:recv_tcp2,ERROR] unexpected connection closure

> > [test,ERROR] [system.cxx:5158:ss_recv_net_command,ERROR] error receiving network command header, see messages

> > [test,ERROR] [midas.cxx:13900:rpc_call,ERROR] routine "db_copy_xml": error, ss_recv_net_command() status 411, program abort

>

> ok, cool. looks like we crashed the mserver. either run mserver attached to gdb or enable mserver core dump, we need it's stack trace,

> the correct stack trace should be rooted in the handler for db_copy_xml.

>

> but most likely odbxx is asking for more data than can be returned through the MIDAS RPC.

>

> what is the ODB key passed to db_copy_xml() and how much data is in ODB at that key? (odbedit "du", right?).

>

> K.O.

OK. Maybe I have to make this clearer: ANY odbxx access to a remote ODB reproduces this error for us, on multiple machines.

It does not matter how much data odbxx is asking for.

Something as simple as this reproduces the error, asking for a single integer:

#include "midas.h"

#include "odbxx.h"

int main() {

cm_connect_experiment("localhost", "Mu3e", "test", NULL);

midas::odb o = {

{"Int32 Key", 42}

};

o.connect("/Test/Settings");

cm_disconnect_experiment();

return 1;

}

At the same time, this runs fine:

#include "midas.h"

#include "odbxx.h"

int main() {

cm_connect_experiment(NULL, NULL, "test", NULL);

midas::odb o = {

{"Int32 Key", 42}

};

o.connect("/Test/Settings");

cm_disconnect_experiment();

return 1;

}

In both cases, mserver does not crash. I do not have a stack trace, and mserver produces no error either.

Last year we did not have these problems with the same MIDAS frontends (for example, with MIDAS commit 9d2ef471 the code above runs fine). I am now trying to pinpoint the exact commit where this stopped working.

28 Apr 2023, Konstantin Olchanski, Forum, Problem with running midas odbxx frontends on a remote machine using the -h option

> > > As i said we can easily reproduce this with midas/examples/odbxx/odbxx_test.cpp

> > ok, cool. looks like we crashed the mserver.

> Ok. Maybe i have to make this more clear. ANY odbxx access of a remote odb reproduces this error for us on multiple machines.

> It does not matter how much data odbxx is asking for.

> midas commit 9d2ef471 the code from above runs fine

so, a regression. ouch.

if core dumps are turned off, you will not "see" the mserver crash, because the main mserver is still running. it's the mserver forked to serve your RPC connection that crashes.

> int main() {

> cm_connect_experiment("localhost", "Mu3e", "test", NULL);

> midas::odb o = {

> {"Int32 Key", 42}

> };

> o.connect("/Test/Settings");

> cm_disconnect_experiment();

> return 1;

> }

to debug this, after cm_connect_experiment() one has to put ::sleep(1000000000); (not that big, obviously). then, while it is sleeping, do "ps -efw | grep mserver"; this will show the mserver for the test program. connect to it with gdb, wait for ::sleep() to finish and o.connect() to crash; with luck, gdb will show the crash stack trace in the mserver.

so easy to debug? this is why back in the 1970s clever people invented core dumps, only to have even more clever people in the 2020s turn them off and generally make debugging more difficult (attaching gdb to a running program is also disabled by default in some recent Linux distributions).

rant-off.

to check if core dumps work, do "killall -7 mserver". to enable core dumps on Ubuntu, see here:

https://daq00.triumf.ca/DaqWiki/index.php/Ubuntu
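The "killall -7 mserver" check can also be done from a small program. On x86 Linux, signal 7 is SIGBUS, whose default action terminates the process and writes a core dump if the limits allow it. The sketch below (the `CrashReport` struct and `crash_child_with_sigbus` are hypothetical names) forks a child, lets it die from SIGBUS, and asks waitpid() whether the kernel reports a core dump. WCOREDUMP is not part of POSIX; the sketch assumes Linux/glibc and reports `false` where the macro is missing.

```cpp
#include <csignal>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

struct CrashReport {
    int signal;        // signal that terminated the child (0 if none)
    bool core_dumped;  // true if the kernel reports that a core was dumped
};

// Forks a child that dies from SIGBUS (signal 7 on x86 Linux, as in
// "killall -7 mserver") and reports how it terminated.
CrashReport crash_child_with_sigbus() {
    CrashReport r{0, false};
    pid_t pid = fork();
    if (pid < 0) return r;
    if (pid == 0) {
        std::signal(SIGBUS, SIG_DFL);  // make sure the default (core) action applies
        std::raise(SIGBUS);
        _exit(0);  // not reached
    }
    int status = 0;
    if (waitpid(pid, &status, 0) == pid && WIFSIGNALED(status)) {
        r.signal = WTERMSIG(status);
#ifdef WCOREDUMP
        r.core_dumped = WCOREDUMP(status) != 0;  // non-POSIX, provided by Linux/glibc
#endif
    }
    return r;
}
```

If `core_dumped` comes back false, core dumps are most likely disabled for that shell (for example by `ulimit -c 0`); where a core file ends up is governed by /proc/sys/kernel/core_pattern.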

last known-working point is:

commit 9d2ef471c4e4a5a325413e972862424549fa1ed5

Author: Ben Smith <bsmith@triumf.ca>

Date: Wed Jul 13 14:45:28 2022 -0700

Allow odbxx to handle connecting to "/" (avoid trying to read subkeys as "//Equipment" etc.

K.O.

02 May 2023, Niklaus Berger, Forum, Problem with running midas odbxx frontends on a remote machine using the -h option

Thanks for all the helpful hints. When we finally managed to evade all timeouts and attach the debugger at just the right moment, we found a segfault in mserver at L827:

case RPC_DB_COPY_XML:

status = db_copy_xml(CHNDLE(0), CHNDLE(1), CSTRING(2), CPINT(3), CBOOL(4));

Some printf debugging then pointed us to the culprit: the pointer dereference in CBOOL(4). This in turn can be traced back to mrpc.cxx L282 ff., where the line marked with the arrow was missing:

{RPC_DB_COPY_XML, "db_copy_xml",

{{TID_INT32, RPC_IN},

{TID_INT32, RPC_IN},

{TID_ARRAY, RPC_OUT | RPC_VARARRAY},

{TID_INT32, RPC_IN | RPC_OUT},

-> {TID_BOOL, RPC_IN},

{0}}},

If we put that in, the mserver process completes peacefully and we get a segfault in the client ("Wrong key type in XML file"), which we will attempt to debug next. Shall I create a pull request for the additional RPC argument, or will you just fix this on the fly?
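The mechanism behind this crash can be modelled outside MIDAS: the server only fills as many argument slots as the routine's descriptor table lists, so with the `{TID_BOOL, RPC_IN}` entry missing, the fifth slot stays empty and a `CBOOL(4)`-style dereference hits a null pointer. Everything below (`Tid`, `ParamDesc`, `decode_args`, `arg_bool`) is a hypothetical simplification, not the mrpc.cxx or mserver code.

```cpp
#include <cstddef>
#include <optional>
#include <vector>

// Hypothetical model of an RPC parameter descriptor table (not MIDAS code).
enum class Tid { Int32, Array, Bool };
struct ParamDesc { Tid tid; };

// Server side: copy one pointer per descriptor entry into the handler's
// argument slots. Slots without a descriptor entry (or without a value sent
// by the client) stay nullptr, like the fifth argument before the fix.
std::vector<const void*> decode_args(const std::vector<ParamDesc>& table,
                                     const std::vector<const void*>& wire,
                                     std::size_t handler_arity) {
    std::vector<const void*> slots(handler_arity, nullptr);
    for (std::size_t i = 0; i < table.size() && i < wire.size() && i < handler_arity; ++i)
        slots[i] = wire[i];
    return slots;
}

// A checked CBOOL(i)-style accessor: reports a missing argument instead of
// dereferencing a null pointer, which is what crashed mserver here.
std::optional<bool> arg_bool(const std::vector<const void*>& slots, std::size_t i) {
    if (i >= slots.size() || slots[i] == nullptr) return std::nullopt;
    return *static_cast<const bool*>(slots[i]);
}
```

The same model also covers the client-side half of the problem: if the caller never sends the fifth value (a `wire` vector of only four pointers), the slot stays empty even with the corrected table, so both ends have to agree on the argument list.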

02 May 2023, Niklaus Berger, Forum, Problem with running midas odbxx frontends on a remote machine using the -h option

And now we have also fixed the client segfault: the rpc_call() in odb.cxx L8992 also needs to pass the header argument:

if (rpc_is_remote())

return rpc_call(RPC_DB_COPY_XML, hDB, hKey, buffer, buffer_size, header);

(The last argument was missing before.)

02 May 2023, Stefan Ritt, Forum, Problem with running midas odbxx frontends on a remote machine using the -h option

> Shall I create a pull request for the additional RPC argument or will you just fix this on the fly?

Just fix it on the fly yourself. It's an obvious bug, so please commit to develop.

Stefan

08 May 2023, Alexey Kalinin, Forum, Scrript in sequencer

Hello,

I tried different ways to pass parameters to a bash script, but they seem to be empty. What could be the problem?

We have a sequencer script like:

ODBGET "/Runinfo/runnumber", firstrun

LOOP n,10

#changing HV

TRANSITION start

WAIT seconds,300

TRANSITION stop

ENDLOOP

ODBGET "/Runinfo/runnumber", lastrun

SCRIPT /.../script.sh ,$firstrun ,$lastrun

and a script.sh like:

firstrun=$1

lastrun=$2
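When positional parameters "seem to be empty", it helps to log exactly what the script receives. A minimal diagnostic, assuming the SCRIPT line is temporarily pointed at a small compiled helper instead of script.sh (the helper and `describe_args` are hypothetical), is to print every argument in brackets so that an empty string shows up as `[]`:

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Formats positional arguments as "$1=[...] $2=[...]" so that empty or
// missing values are easy to spot in a log.
std::string describe_args(const std::vector<std::string>& args) {
    std::string out;
    for (std::size_t i = 0; i < args.size(); ++i) {
        if (i > 0) out += ' ';
        out += "$" + std::to_string(i + 1) + "=[" + args[i] + "]";
    }
    return out;
}
```

The helper's main() could pass `std::vector<std::string>(argv + 1, argv + argc)` and append the result to a log file; in bash, `echo "[$1] [$2]"` at the top of script.sh gives the same information.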

Thanks. Alexey.

08 May 2023, Stefan Ritt, Forum, Scrript in sequencer

> I tried different ways to pass parameters to bash script, but there are seems to

> be empty, what could be the problem?

Indeed there was a bug in the sequencer with parameter passing to scripts. I fixed it and committed the changes to the develop branch.

Stefan

09 May 2023, Alexey Kalinin, Forum, Scrript in sequencer

Thanks. It works perfectly.

Another question is:

Is it possible to run an .msl sequencer script from the bash command line?

Maybe there is something easier than:

1 odbedit -c 'set "/sequencer/load filename" filename.msl'

2 odbedit -c 'set "/sequencer/load new file" TRUE'

3 odbedit -c 'set "/sequencer/start script" TRUE'

What is the best way to have a button that starts the sequencer from /script (or /alias)?

Alexey.

> > I tried different ways to pass parameters to bash script, but there are seems to

> > be empty, what could be the problem?

>

> Indeed there was a bug in the sequencer with parameter passing to scripts. I fixed it

> and committed the changes to the develop branch.

>

> Stefan

10 May 2023, Stefan Ritt, Forum, Scrript in sequencer

> Thanks. It works perfect.

> Another question is:

> Is it possible to run .msl seqscript from bash cmd?

> Maybe it's easier then

> 1 odbedit -c 'set "/sequencer/load filename" filename.msl'

> 2 odbedit -c 'set "/sequencer/load new file" TRUE'

> 3 odbedit -c 'set "/sequencer/start script" TRUE'

That will work.

> What is the best way to have a button starting sequencer

> from /script (or /alias )?

Have a look at

https://daq00.triumf.ca/MidasWiki/index.php/Sequencer#Controlling_the_sequencer_from_custom_pages

where I put the necessary information.

Stefan

24 May 2023, Gennaro Tortone, Forum, pull request for PostgreSQL support

Hi,

Is there any news regarding this pull request?

(https://bitbucket.org/tmidas/midas/pull-requests/30)

If you agree to merge it, I can resolve the conflicts that are now listed (after two months)...

Regards,

Gennaro

>

> Hi,

> I have updated the PR with a new one that includes TimescaleDB support and some

> changes to mhistory.js to support downsampling queries...

>

> Cheers,

> Gennaro

>

> > > some minutes ago I published a PR for PostgreSQL support I developed

> > > at INFN-Napoli for Darkside experiment...

> > >

> > > I don't know if you receive a notification about this PR and in doubt

> > > I wrote this message...

> >

> > Hi, Gennaro, thank you for the very useful contribution. I saw the previous version

> > of your pull request and everything looked quite good. But that pull request was

> > for an older version of midas and it would not have applied cleanly to the current

> > version. I will take a look at your updated pull request. In theory it should only

> > add the Postgres class and modify a few other places in history_schema.cxx and have

> > no changes to anything else. (if you need those changes, it should be a separate

> > pull request).

> >

> > Also I am curious what benefits and drawbacks of Postgres vs mysql/mariadb you have

> > observed for storing and using midas history data.

> >

> > K.O.

13 Jun 2023, Thomas Senger, Forum, Include subroutine through relative path in sequencer

Hi, I would like to restructure our sequencer scripts and their paths. Until now, many things are not generic at all. I would like to ask whether it is possible to include files through a relative path, for example something like:

INCLUDE ../chip/global_basic_functions

Maybe I just did not find how to do it.

13 Jun 2023, Stefan Ritt, Forum, Include subroutine through relative path in sequencer

> Hi, I would like to restructure our sequencer scripts and the paths. Until now many things are not generic at all. I would like to ask if it is possible to include files through a relative path for example something like

> INCLUDE ../chip/global_basic_functions

> Maybe I just did not found how to do it.

It was not there. I implemented it in the last commit.

Stefan

13 Jun 2023, Marco Francesconi, Forum, Include subroutine through relative path in sequencer

> > Hi, I would like to restructure our sequencer scripts and the paths. Until now many things are not generic at all. I would like to ask if it is possible to include files through a relative path for example something like

> > INCLUDE ../chip/global_basic_functions

> > Maybe I just did not found how to do it.

>

> It was not there. I implemented it in the last commit.

>

> Stefan

Hi Stefan,

When I did this job for MEG II, we decided not to allow relative paths and the ".." folder, to avoid an exploit called "XML Entity Injection".

In short, the goal is to avoid leaking files outside the sequencer folders, such as /etc/passwd or private SSH keys.

I do not remember at the moment why we pushed for absolute paths instead, but let's keep this in mind.

Marco

13 Jun 2023, Stefan Ritt, Forum, Include subroutine through relative path in sequencer

> when I did this job for MEG II we decided not to include relative paths and the ".." folder to avoid an exploit called "XML Entity Injection".

> In short is to avoid leaking files outside the sequencer folders like /etc/password or private SSH keys.

> I do not remember in this moment why we pushed for absolute paths instead but let's keep this in mind.

I thought about that. But before, we already had absolute paths in the sequencer INCLUDE statement. So having "../../../etc/passwd" is as bad as the absolute path "/etc/passwd", and nothing really changed. What we really should prevent is the ability to LOAD files into the sequencer from outside the sequence subdirectory, and this is prevented by the file loader. Actually, we will soon replace the file loader with a modern JS dialog, and the code restricts all operations to within the experiment directory and below.
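The idea of confining file operations to one directory tree and below can be sketched with C++17 std::filesystem. This illustrates the general technique only and is not the MIDAS file loader; `is_within` is a hypothetical helper. It resolves "." and ".." lexically, so "../../../etc/passwd" and "/etc/passwd" are rejected alike, but it does not follow symlinks.

```cpp
#include <algorithm>
#include <filesystem>

namespace fs = std::filesystem;

// Hypothetical helper: true if 'requested' (absolute, or relative to 'base')
// still lies inside 'base' after "." and ".." are resolved lexically.
// Symlinks are NOT followed.
bool is_within(const fs::path& base, const fs::path& requested) {
    fs::path root = base.lexically_normal();
    if (!root.has_filename() && root.has_relative_path())
        root = root.parent_path();  // drop a trailing '/' so it is not an extra component
    // operator/ discards 'root' when 'requested' is absolute, so "/etc/passwd"
    // is checked as-is, just like "../../../etc/passwd".
    const fs::path target = (root / requested).lexically_normal();
    // Compare whole path components, so "/exp/seq2" is not mistaken for
    // being inside "/exp/seq" the way a plain string-prefix test would.
    auto mm = std::mismatch(root.begin(), root.end(), target.begin(), target.end());
    return mm.first == root.end();
}
```

A real loader would probably also resolve symlinks (for example with std::filesystem::weakly_canonical) before the comparison, since a link inside the allowed directory can point anywhere.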

Stefan

11 Jul 2023, Anubhav Prakash, Forum, Possible ODB corruption! Webpages https://midptf01.triumf.ca/?cmd=Programs not loading!

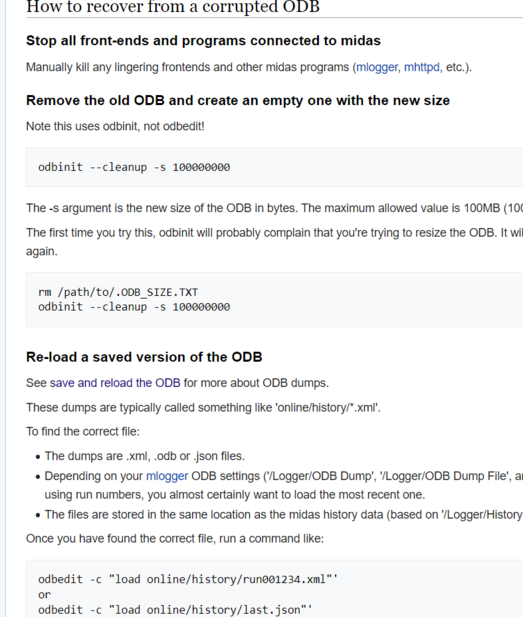

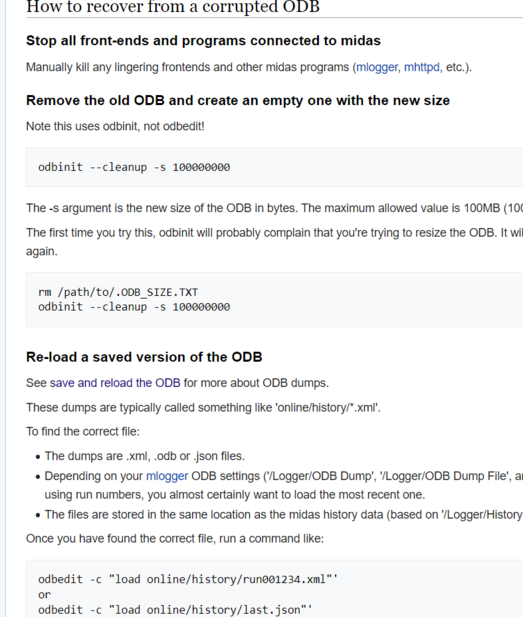

The ODB seems to have crashed or become corrupted. I tried reloading the previous working version of the ODB (using the commands in the following image), but it didn't work.

I have also attached a screenshot of the page https://midptf01.triumf.ca/?cmd=Programs. Any help to resolve this would be appreciated! Normally Prof. Thomas Lindner would solve such issues, but he is busy working at CERN until 17 July, and we cannot afford to wait until then.

I get the following errors when I run bash /home/midptf/online/bin/start_daq.sh:

[ODBEdit1,INFO] Fixing ODB "/Programs/ODBEdit" struct size mismatch (expected 316, odb size 92)

[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256), called from db_set_link_data

[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate "/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online database full

[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"

[ODBEdit1,ERROR] [odb.cxx:11293:db_get_record,ERROR] struct size mismatch for "/Programs/ODBEdit" (expected size: 316, size in ODB: 92)

[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256), called from db_set_link_data

[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate "/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online database full

[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"

[ODBEdit1,ERROR] [odb.cxx:11381:db_get_record1,ERROR] after db_check_record() still struct size mismatch (expected 316, odb size 92) of "/Programs/ODBEdit", calling db_create_record()

[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256), called from db_set_link_data

[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate "/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online database full

[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"

[ODBEdit1,ERROR] [odb.cxx:11387:db_get_record1,ERROR] repaired struct size mismatch of "/Programs/ODBEdit"

[ODBEdit1,ERROR] [odb.cxx:11293:db_get_record,ERROR] struct size mismatch for "/Programs/ODBEdit" (expected size: 316, size in ODB: 92)

[ODBEdit1,ERROR] [alarm.cxx:702:al_check,ERROR] Cannot get program info record for program "ODBEdit", db_get_record1() status 319

[ODBEdit1,INFO] Fixing ODB "/Programs/mhttpd" struct size mismatch (expected 316, odb size 60)

[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256), called from db_set_link_data

[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate "/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online database full

[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"

[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"

[ODBEdit1,ERROR] [odb.cxx:11293:db_get_record,ERROR] struct size mismatch for "/Programs/mhttpd" (expected size: 316, size in ODB: 92)

[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256), called from db_set_link_data

[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate "/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online database full

[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"

[ODBEdit1,ERROR] [odb.cxx:11381:db_get_record1,ERROR] after db_check_record() still struct size mismatch (expected 316, odb size 92) of "/Programs/mhttpd", calling db_create_record()

[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256), called from db_set_link_data

[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate "/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online database full

[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"

[ODBEdit1,ERROR] [odb.cxx:11387:db_get_record1,ERROR] repaired struct size mismatch of "/Programs/mhttpd"

[ODBEdit1,ERROR] [odb.cxx:11293:db_get_record,ERROR] struct size mismatch for "/Programs/mhttpd" (expected size: 316, size in ODB: 92)

[ODBEdit1,ERROR] [alarm.cxx:702:al_check,ERROR] Cannot get program info record for program "mhttpd", db_get_record1() status 319

[ODBEdit1,INFO] Fixing ODB "/Programs/Logger" struct size mismatch (expected 316, odb size 60)

[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256), called from db_set_link_data

[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate "/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online database full

[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"

[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"

[ODBEdit1,ERROR] [odb.cxx:11293:db_get_record,ERROR] struct size mismatch for "/Programs/Logger" (expected size: 316, size in ODB: 92)

[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256), called from db_set_link_data

[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate "/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online database full

[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"

[ODBEdit1,ERROR] [odb.cxx:11381:db_get_record1,ERROR] after db_check_record() still struct size mismatch (expected 316, odb size 92) of "/Programs/Logger", calling db_create_record()

[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256), called from db_set_link_data

[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate "/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online database full

[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"

[ODBEdit1,ERROR] [odb.cxx:11387:db_get_record1,ERROR] repaired struct size mismatch of "/Programs/Logger"

[ODBEdit1,ERROR] [odb.cxx:11293:db_get_record,ERROR] struct size mismatch for "/Programs/Logger" (expected size: 316, size in ODB: 92)

[ODBEdit1,ERROR] [alarm.cxx:702:al_check,ERROR] Cannot get program info record for program "Logger", db_get_record1() status 319

14:54:29 [ODBEdit,ERROR] [odb.cxx:1763:db_validate_db,ERROR] Warning: database data area is 100% full

14:54:29 [ODBEdit,ERROR] [odb.cxx:1283:db_validate_key,ERROR] hkey 643368, path "/Alarms/Classes/<NULL>/Display BGColor", string value is not valid UTF-8

14:54:29 [ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256), called from db_set_link_data

14:54:29 [ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate "/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online database full

14:54:29 [ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"

14:54:29 [ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"

11 Jul 2023, Thomas Lindner, Forum, Possible ODB corruption! Webpages https://midptf01.triumf.ca/?cmd=Programs not loading!

Hi Anubhav,

I have fixed the ODB corruption problem.

Cheers,

Thomas

| Anubhav Prakash wrote: | The ODB server seems to have crashed/corrupted. I tried reloading the previous

working version of ODB(using the commands in folliwng image) but it didn't work.

I have also attached the screenshot of the site https://midptf01.triumf.ca/?cmd=Programs. Any help to resolve this would be appreciated! Normally Prof. Thomas Lindner would solve such issues, but he is busy working at CERN till 17th of July, and we cannot afford to wait until then.

The following is the error: when I run bash /home/midptf/online/bin/start_daq.sh

[ODBEdit1,INFO] Fixing ODB "/Programs/ODBEdit" struct size mismatch (expected 316, odb size 92)
[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256), called from db_set_link_data
[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate "/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online database full
[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"
[ODBEdit1,ERROR] [odb.cxx:11293:db_get_record,ERROR] struct size mismatch for "/Programs/ODBEdit" (expected size: 316, size in ODB: 92)
[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256), called from db_set_link_data
[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate "/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online database full
[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"
[ODBEdit1,ERROR] [odb.cxx:11381:db_get_record1,ERROR] after db_check_record() still struct size mismatch (expected 316, odb size 92) of "/Programs/ODBEdit", calling db_create_record()
[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256), called from db_set_link_data
[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate "/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online database full
[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"
[ODBEdit1,ERROR] [odb.cxx:11387:db_get_record1,ERROR] repaired struct size mismatch of "/Programs/ODBEdit"
[ODBEdit1,ERROR] [odb.cxx:11293:db_get_record,ERROR] struct size mismatch for "/Programs/ODBEdit" (expected size: 316, size in ODB: 92)
[ODBEdit1,ERROR] [alarm.cxx:702:al_check,ERROR] Cannot get program info record for program "ODBEdit", db_get_record1() status 319

[ODBEdit1,INFO] Fixing ODB "/Programs/mhttpd" struct size mismatch (expected 316, odb size 60)
[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256), called from db_set_link_data
[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate "/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online database full
[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"
[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"
[ODBEdit1,ERROR] [odb.cxx:11293:db_get_record,ERROR] struct size mismatch for "/Programs/mhttpd" (expected size: 316, size in ODB: 92)
[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256), called from db_set_link_data
[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate "/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online database full
[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"
[ODBEdit1,ERROR] [odb.cxx:11381:db_get_record1,ERROR] after db_check_record() still struct size mismatch (expected 316, odb size 92) of "/Programs/mhttpd", calling db_create_record()
[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256), called from db_set_link_data
[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate "/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online database full
[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"
[ODBEdit1,ERROR] [odb.cxx:11387:db_get_record1,ERROR] repaired struct size mismatch of "/Programs/mhttpd"
[ODBEdit1,ERROR] [odb.cxx:11293:db_get_record,ERROR] struct size mismatch for "/Programs/mhttpd" (expected size: 316, size in ODB: 92)
[ODBEdit1,ERROR] [alarm.cxx:702:al_check,ERROR] Cannot get program info record for program "mhttpd", db_get_record1() status 319

[ODBEdit1,INFO] Fixing ODB "/Programs/Logger" struct size mismatch (expected 316, odb size 60)
[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256), called from db_set_link_data
[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate "/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online database full
[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"
[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"
[ODBEdit1,ERROR] [odb.cxx:11293:db_get_record,ERROR] struct size mismatch for "/Programs/Logger" (expected size: 316, size in ODB: 92)
[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256), called from db_set_link_data
[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate "/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online database full
[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"
[ODBEdit1,ERROR] [odb.cxx:11381:db_get_record1,ERROR] after db_check_record() still struct size mismatch (expected 316, odb size 92) of "/Programs/Logger", calling db_create_record()
[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256), called from db_set_link_data
[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate "/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online database full
[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"
[ODBEdit1,ERROR] [odb.cxx:11387:db_get_record1,ERROR] repaired struct size mismatch of "/Programs/Logger"
[ODBEdit1,ERROR] [odb.cxx:11293:db_get_record,ERROR] struct size mismatch for "/Programs/Logger" (expected size: 316, size in ODB: 92)
[ODBEdit1,ERROR] [alarm.cxx:702:al_check,ERROR] Cannot get program info record for program "Logger", db_get_record1() status 319

14:54:29 [ODBEdit,ERROR] [odb.cxx:1763:db_validate_db,ERROR] Warning: database data area is 100% full
14:54:29 [ODBEdit,ERROR] [odb.cxx:1283:db_validate_key,ERROR] hkey 643368, path "/Alarms/Classes/<NULL>/Display BGColor", string value is not valid UTF-8
14:54:29 [ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256), called from db_set_link_data
14:54:29 [ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate "/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online database full
14:54:29 [ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"
14:54:29 [ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"
