12 May 2023, Stefan Ritt, Info, New environment variable MIDAS_EXPNAME

|

A new environment variable MIDAS_EXPNAME has been introduced. It must be used in cases where people use MIDAS_DIR; together the two are equivalent to the experiment name and directory usually given in the exptab file. This fixes an issue with creating and deleting shared memory in midas as described in

https://bitbucket.org/tmidas/midas/issues/363/deletion-of-shared-memory-fails

The documentation has been updated at

https://daq00.triumf.ca/MidasWiki/index.php/MIDAS_environment_variables#MIDAS_EXPNAME

/Stefan |

12 May 2023, Konstantin Olchanski, Info, New environment variable MIDAS_EXPNAME

|

> A new environment variable MIDAS_EXPNAME has been introduced [to be used together with MIDAS_DIR]

This fixes an important buglet. If an experiment uses MIDAS_DIR instead of exptab, then at the time of connecting to ODB we do not know the experiment name and use the name "Default" to create the ODB shared memory, instead of the actual experiment name.

This creates an inconsistency: if some MIDAS programs in the same experiment use MIDAS_DIR while others use exptab (this would be unusual, but not impossible), they would connect to two different ODB shared memories, the former using the name "Default", the latter using the actual experiment name.

As an indication that something is not right, when stopping MIDAS programs there is an error message about failure to delete the ODB shared memory (because it was created using the name "Default", and the delete using the correct experiment name fails).

It can also cause co-mingling between two different experiments, depending on the type of shared memory used by MIDAS (see $MIDAS_DIR/.SHM_TYPE.TXT):

- POSIX - (usually not used) not affected (experiment name is not used)
- POSIXv2 - (usually not used) affected (shm name is "$EXP_NAME_ODB")
- POSIXv3 - used on MacOS - affected (shm name is "$UID_$EXP_NAME_ODB" so "$UID_Default_ODB" will collide)
- POSIXv4 - used on Linux - not affected (shm name includes $MIDAS_DIR which is different for different experiments)

K.O. |
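The collision described above can be illustrated with a small sketch. It is not MIDAS code: the function `odb_shm_name` and the example values are hypothetical, and only the two name patterns quoted in the post (POSIXv2 and POSIXv3) are modelled, since the exact patterns for the other types are not given here.

```python
def odb_shm_name(shm_type: str, exp_name: str, uid: int) -> str:
    """Build an ODB shared memory name following the patterns quoted in the post.

    Hypothetical helper for illustration only; it is not part of MIDAS.
    """
    if shm_type == "POSIXv2":
        return f"{exp_name}_ODB"
    if shm_type == "POSIXv3":
        return f"{uid}_{exp_name}_ODB"
    raise ValueError(f"name pattern for {shm_type!r} is not given in the post")


uid = 1000  # hypothetical user id, same user runs both experiments

# Without an experiment name, both experiments fall back to "Default".
before_a = odb_shm_name("POSIXv3", "Default", uid)
before_b = odb_shm_name("POSIXv3", "Default", uid)

# With the experiment name known, the names differ.
after_a = odb_shm_name("POSIXv3", "exp_a", uid)
after_b = odb_shm_name("POSIXv3", "exp_b", uid)

print("collide without name:", before_a == before_b)
print("collide with name:   ", after_a == after_b)
```

With the fallback name, both experiments of the same user end up on the same shared memory segment; once each experiment supplies its own name, the segments are distinct.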

20 Jun 2023, Stefan Ritt, Info, New environment variable MIDAS_EXPNAME

|

I just realized that we already had MIDAS_EXPT_NAME, and now people get confused between

MIDAS_EXPT_NAME

and

MIDAS_EXPNAME

To fix this confusion, I changed the name of the second variable to MIDAS_EXPT_NAME as well, so we only have one variable now. If this causes any problems, please report here.

Stefan |

28 Jul 2023, Stefan Ritt, Info, New environment variable MIDAS_EXPNAME

|

Concerning the naming of shared memories, I went one step further due to a requirement of a local experiment. The experiment needs to change the experiment name shown on the web status page depending on the exact configuration, but we do not want to change the whole midas experiment each time.

So I simply removed the check that compared the experiment name coming from the environment (and used for the shared memory) against the experiment name in the ODB. The only remaining connection is that the ODB name gets set to the environment name if it does not exist or is empty, just to have some default value. So for most people nothing should change. If one changes the name in the ODB (under /Experiment/Name), however, nothing changes internally; only the web display via mhttpd changes its title.

I hope this has no bad side-effects, so please have a look if you see any issue in your experiment.

Stefan |
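The relaxed rule described above can be written down as a short sketch. This is an illustration under assumptions, not the MIDAS implementation: `resolve_names` and the example names are hypothetical.

```python
def resolve_names(env_name, odb_name):
    """Return (name used for shared memory, name shown as web title).

    Hypothetical helper modelling the rule from the post; not MIDAS code.
    """
    # Shared memory naming follows the environment and ignores the ODB entry.
    shm_exp_name = env_name
    # The ODB entry only inherits the environment name when missing or empty.
    if not odb_name:
        odb_name = env_name
    return shm_exp_name, odb_name


print(resolve_names("myexp", None))
print(resolve_names("myexp", "Beam test"))
```

In the second call the title differs from the environment name, while the name used for the shared memory stays the same.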

24 Jul 2023, Nick Hastings, Bug Report, Incompatible data types with mysql odbc interface

|

Hello,

I have recently set up a midas-2022-05-c instance and have been trying to configure

it to use the mysql odbc interface. Tables are being created for it but

the logger is issuing errors that some of the column types are incorrect. For example

in the log I see:

14:22:12.689 2023/07/25 [Logger,ERROR] [history_odbc.cxx:1531:hs_define_event,ERROR] Error: History event 'Run transitions': Incompatible data type for tag 'State' type 'UINT32', SQL column 'state' type 'INT UNSIGNED'

14:22:12.689 2023/07/25 [Logger,ERROR] [history_odbc.cxx:1531:hs_define_event,ERROR] Error: History event 'Run transitions': Incompatible data type for tag 'Run number' type 'UINT32', SQL column 'run_number' type 'INT UNSIGNED'

Checking the table in the database I see:

MariaDB [t2kgscND280]> describe run_transitions;
+------------+------------------+------+-----+---------------------+-------------------------------+
| Field      | Type             | Null | Key | Default             | Extra                         |
+------------+------------------+------+-----+---------------------+-------------------------------+
| _t_time    | timestamp        | NO   | MUL | current_timestamp() | on update current_timestamp() |
| _i_time    | int(11)          | NO   | MUL | NULL                |                               |
| state      | int(10) unsigned | YES  |     | NULL                |                               |
| run_number | int(10) unsigned | YES  |     | NULL                |                               |
+------------+------------------+------+-----+---------------------+-------------------------------+
4 rows in set (0.000 sec)

Please note that this is not the only history variable that has this problem. There are multiple variables for which the logger reports:

Incompatible data type for tag 'Foo Bar' type 'UINT32', SQL column 'foo_bar' type 'INT UNSIGNED'

Checking history_odbc.cxx, I see:

static const char *sql_type_mysql[] = {
   "xxxINVALIDxxxNULL", // TID_NULL
   "tinyint unsigned",  // TID_UINT8
   "tinyint",           // TID_INT8
   "char",              // TID_CHAR
   "smallint unsigned", // TID_UINT16
   "smallint",          // TID_INT16
   "integer unsigned",  // TID_UINT32
   "integer",           // TID_INT32
   "tinyint",           // TID_BOOL
   "float",             // TID_FLOAT
   "double",            // TID_DOUBLE
   "tinyint unsigned",  // TID_BITFIELD
   "VARCHAR",           // TID_STRING
   "xxxINVALIDxxxARRAY",
   "xxxINVALIDxxxSTRUCT",
   "xxxINVALIDxxxKEY",
   "xxxINVALIDxxxLINK"
};

So it seems that unsigned int should map to UINT32.

The database is:

Server version: 10.5.16-MariaDB MariaDB Server

Please let me know if more information is needed.

Note that the choice of using the odbc interface is because we

plan to import an old database that was created using the odbc interface

with a previous version of midas (yes this is your old friend T2K/ND280).

Regards,

Nick. |
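One plausible reading of the report is that the type name reported by the driver ('INT UNSIGNED') is spelled differently from the name in the table above ("integer unsigned"), although both denote the same column type. The sketch below shows a spelling-tolerant comparison under that assumption. It is not the logic of history_odbc.cxx, and the helpers `normalize_sql_type` and `same_sql_type` and the alias table are hypothetical.

```python
import re

# Assumed alias: "int" and "integer" name the same SQL type.
_ALIASES = {"int": "integer"}


def normalize_sql_type(name: str) -> str:
    """Lower-case, drop display widths such as "(10)", and apply aliases."""
    s = name.strip().lower()
    s = re.sub(r"\(\s*\d+\s*\)", "", s)
    return " ".join(_ALIASES.get(word, word) for word in s.split())


def same_sql_type(a: str, b: str) -> bool:
    """Compare two SQL type names after normalization."""
    return normalize_sql_type(a) == normalize_sql_type(b)


print(same_sql_type("INT UNSIGNED", "integer unsigned"))
print(same_sql_type("int(10) unsigned", "integer unsigned"))
print(same_sql_type("smallint", "integer"))
```

The first two comparisons treat the driver spelling, the `describe` spelling and the table spelling as one type, while genuinely different types still compare unequal.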

25 Jul 2023, Nick Hastings, Bug Report, Incompatible data types with mysql odbc interface

|

Hello,

I wanted to add a few things:

1. I see the same problem for INT32

2. For now I've worked around these problems with https://bitbucket.org/nickhastings/midas/commits/e4776f7511de0647077c8c80d43c17bbfe2184fd

3. I'm using mariadb-connector-odbc-3.1.12-3.el9.x86_64 (System is AlmaLinux 9)

Regards,

Nick. |

18 Jul 2023, Gennaro Tortone, Bug Report, access to filesystem through mhttpd

|

Hi,

after some network security scans I received warnings because mhttpd exposes the server filesystem through HTTP(S)...

In detail, a MIDAS user can access /etc/passwd or download other files from the filesystem using a web browser:

(e.g. http://midas.host:8080/etc/passwd)

I know that /etc/passwd does not contain user passwords and that mhttpd runs as an unprivileged user, but in principle this should be avoided in order to minimize security risks: if I authorize a user to use the MIDAS interface to handle acquisition tasks, this should not authorize the user to access the server filesystem. Access should be restricted to MIDAS web pages, custom pages etc.

What do you think about this ?

Cheers,

Gennaro |
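The restriction asked for above, serving only files below a set of allowed directories, can be sketched as follows. This is a generic illustration, not the check used by mhttpd: the function `is_path_allowed` and the directory `/home/midas/online/resources` are hypothetical.

```python
import os
from typing import Iterable


def is_path_allowed(requested: str, allowed_roots: Iterable[str]) -> bool:
    """Return True only if `requested` resolves to a location under an allowed root."""
    # Resolve symlinks and ".." components before comparing.
    real = os.path.realpath(requested)
    for root in allowed_roots:
        root_real = os.path.realpath(root)
        if os.path.commonpath([real, root_real]) == root_real:
            return True
    return False


roots = ["/home/midas/online/resources"]  # hypothetical resource directory
print(is_path_allowed("/home/midas/online/resources/midas.css", roots))
print(is_path_allowed("/etc/passwd", roots))
print(is_path_allowed("/home/midas/online/resources/../../.ssh/id_rsa", roots))
```

Resolving the path first matters: a request containing `..` starts with an allowed prefix as a string, yet points outside the allowed directory once resolved.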

18 Jul 2023, Konstantin Olchanski, Bug Report, access to filesystem through mhttpd

|

> (e.g. http://midas.host:8080/etc/passwd)

Not again! I complained about this before, and I added a fix, but it must be broken again.

Getting a copy of /etc/passwd is reasonably benign, but getting a copy of /home/$USER/.ssh/id_rsa, id_rsa.pub, known_hosts and authorized_keys is a disaster.

(Running mhttpd behind a web proxy does not solve the problem; the number of attackers is only reduced to the people who know the proxy password and to local users.)

K.O. |

19 Jul 2023, Zaher Salman, Bug Report, access to filesystem through mhttpd

|

Have you actually been able to read /etc/passwd this way? I tested this on a few of our servers and it does not work. As far as I know, there is access only to files in resources, custom pages etc.

Another possible way to access the file system is via mjsonrpc calls, but again these are restricted to certain folders.

Can you please give us more details about this?

Zaher

> > (e.g. http://midas.host:8080/etc/passwd)

>

> not again! I complained about this before, and I added a fix, but it must be broken again.

>

> getting a copy of /etc/passwd is reasonably benign, but getting a copy of

> /home/$USER/.ssh/id_rsa, id_rsa.pub, knownhosts and authorized_keys is a disaster.

>

> (running mhttpd behind a web proxy does not solve the problem, number of attackers is

> reduced to only the people who know the proxy password and to local users).

>

> K.O. |

11 Jul 2023, Anubhav Prakash, Forum, Possible ODB corruption! Webpages https://midptf01.triumf.ca/?cmd=Programs not loading!

|

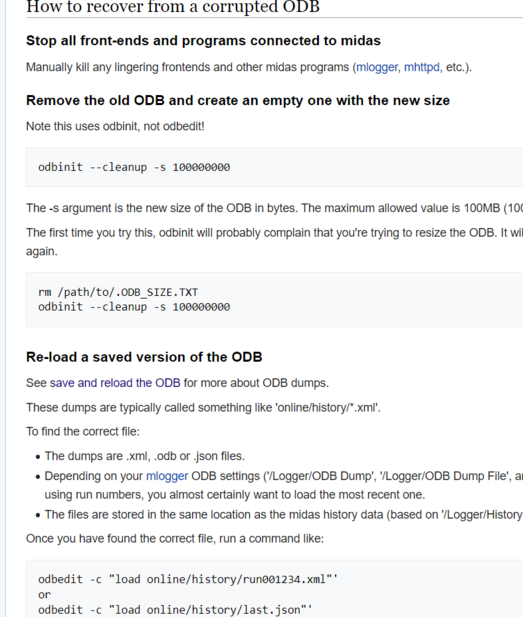

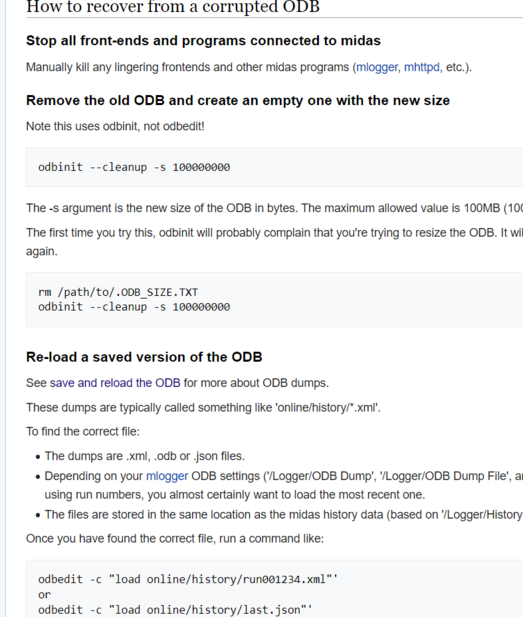

The ODB server seems to have crashed or become corrupted. I tried reloading the previous working version of the ODB (using the commands in the following image), but it didn't work.

I have also attached a screenshot of the site https://midptf01.triumf.ca/?cmd=Programs. Any help to resolve this would be appreciated! Normally Prof. Thomas Lindner would solve such issues, but he is busy working at CERN until the 17th of July, and we cannot afford to wait until then.

The following is the error I get when I run bash /home/midptf/online/bin/start_daq.sh:

[ODBEdit1,INFO] Fixing ODB "/Programs/ODBEdit" struct size mismatch (expected

316, odb size 92)

[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256),

called from db_set_link_data

[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate

"/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online

database full

[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set

to 32, odb path "Start command"

[ODBEdit1,ERROR] [odb.cxx:11293:db_get_record,ERROR] struct size mismatch for

"/Programs/ODBEdit" (expected size: 316, size in ODB: 92)

[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256),

called from db_set_link_data

[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate

"/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online

database full

[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set

to 32, odb path "Start command"

[ODBEdit1,ERROR] [odb.cxx:11381:db_get_record1,ERROR] after db_check_record()

still struct size mismatch (expected 316, odb size 92) of "/Programs/ODBEdit",

calling db_create_record()

[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256),

called from db_set_link_data

[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate

"/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online

database full

[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set

to 32, odb path "Start command"

[ODBEdit1,ERROR] [odb.cxx:11387:db_get_record1,ERROR] repaired struct size

mismatch of "/Programs/ODBEdit"

[ODBEdit1,ERROR] [odb.cxx:11293:db_get_record,ERROR] struct size mismatch for

"/Programs/ODBEdit" (expected size: 316, size in ODB: 92)

[ODBEdit1,ERROR] [alarm.cxx:702:al_check,ERROR] Cannot get program info record

for program "ODBEdit", db_get_record1() status 319

[ODBEdit1,INFO] Fixing ODB "/Programs/mhttpd" struct size mismatch (expected

316, odb size 60)

[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256),

called from db_set_link_data

[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate

"/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online

database full

[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set

to 32, odb path "Start command"

[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set

to 32, odb path "Start command"

[ODBEdit1,ERROR] [odb.cxx:11293:db_get_record,ERROR] struct size mismatch for

"/Programs/mhttpd" (expected size: 316, size in ODB: 92)

[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256),

called from db_set_link_data

[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate

"/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online

database full

[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set

to 32, odb path "Start command"

[ODBEdit1,ERROR] [odb.cxx:11381:db_get_record1,ERROR] after db_check_record()

still struct size mismatch (expected 316, odb size 92) of "/Programs/mhttpd",

calling db_create_record()

[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256),

called from db_set_link_data

[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate

"/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online

database full

[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set

to 32, odb path "Start command"

[ODBEdit1,ERROR] [odb.cxx:11387:db_get_record1,ERROR] repaired struct size

mismatch of "/Programs/mhttpd"

[ODBEdit1,ERROR] [odb.cxx:11293:db_get_record,ERROR] struct size mismatch for

"/Programs/mhttpd" (expected size: 316, size in ODB: 92)

[ODBEdit1,ERROR] [alarm.cxx:702:al_check,ERROR] Cannot get program info record

for program "mhttpd", db_get_record1() status 319

[ODBEdit1,INFO] Fixing ODB "/Programs/Logger" struct size mismatch (expected

316, odb size 60)

[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256),

called from db_set_link_data

[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate

"/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online

database full

[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set

to 32, odb path "Start command"

[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set

to 32, odb path "Start command"

[ODBEdit1,ERROR] [odb.cxx:11293:db_get_record,ERROR] struct size mismatch for

"/Programs/Logger" (expected size: 316, size in ODB: 92)

[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256),

called from db_set_link_data

[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate

"/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online

database full

[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set

to 32, odb path "Start command"

[ODBEdit1,ERROR] [odb.cxx:11381:db_get_record1,ERROR] after db_check_record()

still struct size mismatch (expected 316, odb size 92) of "/Programs/Logger",

calling db_create_record()

[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256),

called from db_set_link_data

[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate

"/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online

database full

[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set

to 32, odb path "Start command"

[ODBEdit1,ERROR] [odb.cxx:11387:db_get_record1,ERROR] repaired struct size

mismatch of "/Programs/Logger"

[ODBEdit1,ERROR] [odb.cxx:11293:db_get_record,ERROR] struct size mismatch for

"/Programs/Logger" (expected size: 316, size in ODB: 92)

[ODBEdit1,ERROR] [alarm.cxx:702:al_check,ERROR] Cannot get program info record

for program "Logger", db_get_record1() status 319

14:54:29 [ODBEdit,ERROR] [odb.cxx:1763:db_validate_db,ERROR] Warning: database

data area is 100% full

14:54:29 [ODBEdit,ERROR] [odb.cxx:1283:db_validate_key,ERROR] hkey 643368, path

"/Alarms/Classes/<NULL>/Display BGColor", string value is not valid UTF-8

14:54:29 [ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot

malloc_data(256), called from db_set_link_data

14:54:29 [ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot

reallocate "/System/Tmp/140305391605888I/Start command" with new size 256 bytes,

online database full

14:54:29 [ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of

zero, set to 32, odb path "Start command"

14:54:29 [ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of

zero, set to 32, odb path "Start command" |

11 Jul 2023, Thomas Lindner, Forum, Possible ODB corruption! Webpages https://midptf01.triumf.ca/?cmd=Programs not loading!

|

Hi Anubhav,

I have fixed the ODB corruption problem.

Cheers,

Thomas

|

21 Jun 2023, Gennaro Tortone, Bug Report, mserver and script execution

|

Hi,

I have the following setup:

- MIDAS release: release/midas-2022-05-c

- host with MIDAS frontend (mclient)

- host with MIDAS server (mhttpd / mserver)

On mclient I run a frontend with:

./feodt5751 -h mserver -e develop -i 0

On mserver I see frontend ready and ODB variables in place;

I noticed a strange behavior with "/Programs/Execute on start run" and "/Programs/Execute on stop run". In detail, the script to execute at start of run is executed on the "mserver" host, but the script to execute at stop of run is executed on the "mclient" host (!)

Is this a bug, or am I missing some documentation?

Thanks in advance,

Gennaro |

26 Jun 2023, Stefan Ritt, Bug Report, mserver and script execution

|

Indeed that could well be (and is certainly not intended like that). I checked the code

and found that "execute on start run" and "execute on stop run" are called inside

cm_transition(). That means they are executed on the computer which calls cm_transition().

If you use mhttpd and start a run through the web interface, then mhttpd runs on your

server and "execute on start run" gets executed on your server. If you stop the run

by your frontend running on the client machine (like if a certain number of events

is reached), then "execute on stop run" gets executed on your client.

An easy workaround would be not to use "/Equipment/Trigger/Common/Event limit", which gets checked by your frontend and therefore on the client computer, but to use "/Logger/Channels/0/Settings/Event limit", which gets checked by the logger and therefore on the server computer.

Getting a consistent behaviour (like always executing scripts on the server) would

require a major rework of the run transition framework with probably many undesired

side-effects, so lots of debugging work.

Stefan |

27 Jun 2023, Gennaro Tortone, Bug Report, mserver and script execution

|

Hi Stefan,

> Indeed that could well be (and is certainly not intended like that). I checked the code

> and found that "execute on start run" and "execute on stop run" are called inside

> cm_transition(). That means they are executed on the computer which calls cm_transition().

> If you use mhttpd and start a run through the web interface, then mhttpd runs on your

> server and "execute on start run" gets executed on your server. If you stop the run

> by your frontend running on the client machine (like if a certain number of events

> is reached), then "execute on stop run" gets executed on your client.

ok, this is clear to me...

> An easy way around would not to use "/Equipment/Trigger/Common/Event limit" which

> gets check by your frontend and therefore on the client computer, but use

> "/Logger/Channels/0/Settings/Event limit" which gets checked by the logger and

> therefore executed on the server computer.

we never used "/Equipment/Trigger/Common/Event limit" but we always used

"/Logger/Channels/0/Settings/Event limit"...

btw I did some tests and I understand that this issue is related to 'deferred transition'

on frontend. Indeed I disabled deferred transition on frontend side and now script

execution is carried out always on MIDAS server;

Cheers,

Gennaro |

27 Jun 2023, Stefan Ritt, Bug Report, mserver and script execution

|

> btw I did some tests and I understand that this issue is related to 'deferred transition'

> on frontend. Indeed I disabled deferred transition on frontend side and now script

> execution is carried out always on MIDAS server;

Ah, that's clear now. In a deferred transition, the frontend finally stops the run (after the

condition is given to finish). Since the client calls cm_transition(), the script gets executed on

the client. Changing that would be a rather large rework of the code. So maybe better call a

script which executes another script via ssh on the server.

Stefan |
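The suggested workaround, a local script that runs the real script on the server via ssh, could look roughly like the sketch below. The host name `mserver` is taken from the original report; the script path, the run number argument and the helper names are hypothetical, and passwordless ssh from the client to the server is assumed.

```python
import shlex
import subprocess


def build_remote_command(host, script, args=()):
    """Build the argv for running `script` with `args` on `host` via ssh."""
    # Quote each part so the remote shell sees it as a single word.
    remote = " ".join(shlex.quote(part) for part in (script, *args))
    return ["ssh", host, remote]


def run_on_server(host, script, args=()):
    """Run the script on the server and return its exit code."""
    return subprocess.run(build_remote_command(host, script, args), check=False).returncode


# Hypothetical stop-of-run script living on the server.
print(build_remote_command("mserver", "/home/midas/bin/on_stop.sh", ["332"]))
```

A wrapper like this would be the command configured as "Execute on stop run", so the actual work happens on the server regardless of which machine calls cm_transition().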

23 Jun 2023, Gennaro Tortone, Bug Report, deferred stop transition

|

Hi,

I'm facing some issues with the deferred 'stop' transition and I suspect a MIDAS bug here...

To reproduce the issue I use the 'deferredfe' MIDAS example (develop branch), changing only the equipment name from 'Deferred' to 'Deferred%02d' in order to be able to run multiple 'deferredfe' instances.

I run *three* 'deferredfe' frontends using:

./deferredfe -i 0

./deferredfe -i 2

./deferredfe -i 3

Everything looks fine on the MIDAS web page and the 'deferredfe' frontends are initialized and ready to run; issues occur after 'start' when I stop the frontends: sometimes at the first attempt and sometimes at the next 'start'/'stop', the deferred 'stop' transition seems to be handled the wrong way... and often one frontend crashes with a segmentation fault.

The odd thing is that when I run *two* instances, no issues are reported...

Thanks in advance,

Gennaro |

23 Jun 2023, Stefan Ritt, Bug Report, deferred stop transition

|

Deferred transitions were only implemented with a single instance of a program deferring the

transition. To have several instances, MIDAS probably needs to be extended. Certainly this

was never tested, so it's not a surprise that we get a segmentation fault.

Stefan |

23 Jun 2023, Gennaro Tortone, Bug Report, deferred stop transition

|

Hi Stefan,

so if I have two different frontends (feov1725 and feodt5751) connected on the same 'mserver'

I'm in the same situation ?

Cheers,

Gennaro

> Deferred transitions were only implemented with a single instance of a program deferring the

> transition. To have several instances, MIDAS probably needs to be extended. Certainly this

> was never tested, so it's not a surprise that we get a segmentation fault.

>

> Stefan |

23 Jun 2023, Stefan Ritt, Bug Report, deferred stop transition

|

> I'm in the same situation ?

Yepp. |

26 Jun 2023, Gennaro Tortone, Bug Report, deferred stop transition

|

> Deferred transitions were only implemented with a single instance of a program deferring the

> transition. To have several instances, MIDAS probably needs to be extended. Certainly this

> was never tested, so it's not a surprise that we get a segmentation fault.

>

> Stefan

Hi Stefan,

I copied deferredfe.cxx to mydeferredfe.cxx and changed mydeferredfe.cxx to be a different frontend:

const char *frontend_name = "mydeferredfe";

If I start two "different" frontends:

./deferredfe

./mydeferredfe

and try to start/stop a run... the result is the same: the frontend status gets messed up on the next 'start':

---

deferredfe:

Started run 332

Event ID:4 - Event#: 0

Event ID:4 - Event#: 1

Event ID:4 - Event#: 2

Event ID:4 - Event#: 3

Event ID:4 - Event#: 4

Event ID:4 - Event#: 5

Event ID:4 - Event#: 6

mydeferredfe:

Started run 332

Transition ignored, Event ID:2 - Event#: 0

Transition ignored, Event ID:2 - Event#: 1

Transition ignored, Event ID:2 - Event#: 2

Transition ignored, Event ID:2 - Event#: 3

Transition ignored, Event ID:2 - Event#: 4

End of cycle... perform transition

Event ID:2 - Event#: 5

End of cycle... perform transition

Event ID:2 - Event#: 6

---

so, it seems that the issue is not related to different 'instances' of the same frontend but

that *at most* one frontend on the whole MIDAS server can handle deferred transitions...

is this the case ?

Cheers,

Gennaro |

26 Jun 2023, Stefan Ritt, Bug Report, deferred stop transition

|

> so, it seems that the issue is not related to different 'instances' of same frontend but

> that *at most* one frontend on whole MIDAS server can handle deferred transitions...

>

> is this the case ?

Correct.

- Stefan |

13 Jun 2023, Thomas Senger, Forum, Include subroutine through relative path in sequencer

|

Hi, I would like to restructure our sequencer scripts and the paths. Until now many things are not generic at all. I would like to ask if it is possible to include files through a relative path for example something like

INCLUDE ../chip/global_basic_functions

Maybe I just did not find out how to do it. |

13 Jun 2023, Stefan Ritt, Forum, Include subroutine through relative path in sequencer

|

> Hi, I would like to restructure our sequencer scripts and the paths. Until now many things are not generic at all. I would like to ask if it is possible to include files through a relative path for example something like

> INCLUDE ../chip/global_basic_functions

> Maybe I just did not found how to do it.

It was not there. I implemented it in the last commit.

Stefan |

13 Jun 2023, Marco Francesconi, Forum, Include subroutine through relative path in sequencer

|

> > Hi, I would like to restructure our sequencer scripts and the paths. Until now many things are not generic at all. I would like to ask if it is possible to include files through a relative path for example something like

> > INCLUDE ../chip/global_basic_functions

> > Maybe I just did not found how to do it.

>

> It was not there. I implemented it in the last commit.

>

> Stefan

Hi Stefan,

when I did this job for MEG II we decided not to allow relative paths and the ".." folder, to avoid an exploit called "XML Entity Injection".

In short, the aim is to avoid leaking files from outside the sequencer folders, like /etc/passwd or private SSH keys.

I do not remember at the moment why we pushed for absolute paths instead, but let's keep this in mind.

Marco |

13 Jun 2023, Stefan Ritt, Forum, Include subroutine through relative path in sequencer

|

> when I did this job for MEG II we decided not to include relative paths and the ".." folder to avoid an exploit called "XML Entity Injection".

> In short is to avoid leaking files outside the sequencer folders like /etc/password or private SSH keys.

> I do not remember in this moment why we pushed for absolute paths instead but let's keep this in mind.

I thought about that. But before we had absolute paths in the sequencer INCLUDE statement. So having "../../../etc/passwd" is as bad as the

absolute path "/etc/passwd". So nothing really changed. What we really should prevent is to LOAD files into the sequencer from outside the

sequence subdirectory. And this is prevented by the file loader. Actually we will soon replace the file loader with a modern JS dialog, and

the code restricts all operations to within the experiment directory and below.
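
The containment idea described here (resolve the path first, then check that it still lies inside the allowed directory) can be sketched in a few lines of Python. This is only an illustration, not the MIDAS file loader: the function name resolve_include, the directory layout and the root "/tmp/exp/sequencer" are hypothetical.

```python
from pathlib import Path


def resolve_include(root: str, current_dir: str, include: str) -> Path:
    """Resolve an INCLUDE target relative to the including script's directory
    and refuse anything that ends up outside `root` (hypothetical helper)."""
    root_path = Path(root).resolve()
    # Joining with an absolute `include` discards the prefix, so "/etc/passwd"
    # is checked exactly like "../../../etc/passwd".
    target = (root_path / current_dir / include).resolve()
    try:
        target.relative_to(root_path)
    except ValueError:
        raise ValueError(f"INCLUDE target escapes {root_path}: {include}") from None
    return target


if __name__ == "__main__":
    root = "/tmp/exp/sequencer"  # hypothetical sequencer directory
    print(resolve_include(root, "runs", "../chip/global_basic_functions"))
    for bad in ("../../../etc/passwd", "/etc/passwd"):
        try:
            resolve_include(root, "runs", bad)
        except ValueError as err:
            print("rejected:", err)
```

With a check of this kind, relative and absolute paths are treated the same way, which matches the point that allowing ".." does not by itself change the exposure.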

Stefan |

09 Jun 2023, Konstantin Olchanski, Info, added IPv6 support for mserver and MIDAS RPC

|

as of commit 71fb5e82f3e9a1501b4dc2430f9193ee5d176c82, MIDAS RPC and the mserver

listen for connections both on IPv4 and IPv6. mserver clients and MIDAS RPC

clients can connect to MIDAS using both IPv4 and IPv6. In the default

configuration ("/Expt/Security/Enable non-localhost RPC" set to "n"), IPv4

localhost is used, as before. Support for IPv6 is a by-product of switching

from the obsolete, non-thread-safe gethostbyname() and gethostbyaddr() to the modern

getaddrinfo() and getnameinfo(). This fixes bug 357, observed crash of mhttpd

inside gethostbyname(). K.O. |
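
As a small illustration of why getaddrinfo() brings IPv6 along for free: the same call returns IPv4 or IPv6 socket addresses depending on the input, so the caller does not need separate code paths. The sketch below uses Python's socket.getaddrinfo(), which wraps the C function; the helper name resolve and the port number 1175 are placeholders chosen for the example, not taken from the post.

```python
import socket


def resolve(host: str, port: int):
    """Return (address family, address string) pairs for a numeric host.

    AI_NUMERICHOST keeps the example offline: no DNS lookup is performed.
    """
    infos = socket.getaddrinfo(
        host, port, type=socket.SOCK_STREAM, flags=socket.AI_NUMERICHOST
    )
    return [(family, sockaddr[0]) for family, _type, _proto, _canon, sockaddr in infos]


if __name__ == "__main__":
    for host in ("127.0.0.1", "::1"):
        for family, address in resolve(host, 1175):  # 1175 is a placeholder port
            print(host, "->", family.name, address)
```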

23 May 2023, Kou Oishi, Bug Report, Event builder fails at every 10 runs

|

Dear MIDAS experts,

Greetings!

I am currently utilizing MIDAS for our experiment and I have encountered an issue with our event builder, which was developed based on the example code 'eventbuilder/mevb.cxx'. I'm uncertain whether this is a genuine bug or an inherent feature of MIDAS.

The event builder fails to initiate the 10th run since its startup, requiring us to relaunch it. Upon investigating the code, I have identified that this issue stems from line 8404 of mfe.cxx (the version's hash is db94df6fa79772c49888da9374e143067a1fff3a). According to the code, the 10-run limit is imposed by the variable MAX_EVENT_REQUESTS in midas.h. While I can increase this value as the code suggests, it does not provide a complete solution, as the same problem will inevitably resurface. This complication unnecessarily hampers our data collection during long observation periods.

Despite the code indicating 'BM_NO_MEMORY: too many requests,' this explanation does not seem logical to me. In fact, other standard frontends do not encounter this problem and can start new runs as required without requiring a frontend relaunch.

I apologize for not yet fully grasping the intricate implementation of midas.cxx and mfe.cxx. However, I would greatly appreciate any suggestions or insights you can offer to help resolve this issue.

Thank you in advance for your kind assistance. |

31 May 2023, Ben Smith, Bug Report, Event builder fails at every 10 runs

|

> The event builder fails to initiate the 10th run since its startup,

> 'BM_NO_MEMORY: too many requests,'

Hi Kou,

It sounds like you might be calling bm_request_event() when starting a run, but not calling bm_delete_request() when the run stops. So you end up "leaking" event requests and eventually reach the limit of 10 open requests.

In examples/eventbuilder/mevb.c the request deletion happens in source_unbooking(), which is called as part of the "run stopping" logic. I've just updated the midas repository so the example compiles correctly, and was able to start/stop 15 runs without crashing.

Can you check the end-of-run logic in your version to ensure you're calling bm_delete_request()? |
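
The leak described above can be reproduced with a toy model of a fixed-size request table. This is a Python sketch for illustration only and does not use the MIDAS API: the class RequestTable and the function runs_until_failure are hypothetical, and only the limit of 10 is taken from the thread. In a real system the exact run at which the limit is hit also depends on how many requests are already open.

```python
MAX_EVENT_REQUESTS = 10  # limit quoted in the thread


class RequestTable:
    """Toy stand-in for a fixed-size table of open event requests."""

    def __init__(self, size: int = MAX_EVENT_REQUESTS):
        self.slots = [None] * size

    def request(self, label: str) -> int:
        # Take the first free slot, as a begin-of-run handler would.
        for index, slot in enumerate(self.slots):
            if slot is None:
                self.slots[index] = label
                return index
        raise RuntimeError("too many requests")

    def delete(self, request_id: int) -> None:
        # Free the slot again, as an end-of-run handler should.
        self.slots[request_id] = None


def runs_until_failure(cleanup: bool, max_runs: int = 15):
    """Return the run number at which a request fails, or None if all runs succeed."""
    table = RequestTable()
    for run in range(1, max_runs + 1):
        try:
            request_id = table.request(f"run {run}")
        except RuntimeError:
            return run
        if cleanup:
            table.delete(request_id)
    return None


if __name__ == "__main__":
    print("without cleanup, first failing run:", runs_until_failure(cleanup=False))
    print("with cleanup, first failing run:", runs_until_failure(cleanup=True))
```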

02 Jun 2023, Kou Oishi, Bug Report, Event builder fails at every 10 runs

|

Dear Ben,

Hello. Thank you for your attention to this problem!

> It sounds like you might be calling bm_request_event() when starting a run, but not calling bm_delete_request() when the run stops. So you end up "leaking" event requests and eventually reach the limit of 10 open requests.

I understand. Thanks for the description.

> In examples/eventbuilder/mevb.c the request deletion happens in source_unbooking(), which is called as part of the "run stopping" logic. I've just updated the midas repository so the example compiles correctly, and was able to start/stop 15 runs without crashing.

>

> Can you check the end-of-run logic in your version to ensure you're calling bm_delete_request()?

I really appreciate your update.

Although I am away at the moment from the DAQ development, I will test it and report the result here as soon as possible.

Best regards,

Kou |

10 May 2023, Lukas Gerritzen, Suggestion, Desktop notifications for messages

|

It would be nice to have MIDAS notifications pop up outside of the browser window.

To enable this myself, I hijacked the speech synthesis and added the following to mhttpd_speak_now(text) inside mhttpd.js:

let notification = new Notification('MIDAS Message', {

body: text,

});

I couldn't ask for the permission for notifications here, as Firefox threw the error "The Notification permission may only be requested from inside a short running user-generated event handler". Therefore, I added a button to config.html:

<button class="mbutton" onclick="Notification.requestPermission()">Request notification permission</button>

There might be a more elegant solution to request the permission. |

10 May 2023, Stefan Ritt, Suggestion, Desktop notifications for messages

|

| Lukas Gerritzen wrote: | | It would be nice to have MIDAS notifications pop up outside of the browser window. |

There are certainly dozens of people who say "I don't like pop-up windows all the time". So this has to come with a switch in the config page to turn it off. If there is a switch "allow pop-up windows", then we have the other fraction of people using Edge/Chrome/Safari/Opera saying "it's not working on my specific browser on version x.y.z". So I'm only willing to add that feature if we are sure it's a standard thing that works in most environments.

Best,

Stefan |

10 May 2023, Lukas Gerritzen, Suggestion, Desktop notifications for messages

|

| Stefan Ritt wrote: |

people using Edge/Chrome/Safari/Opera saying "it's not working on my specific browser on version x.y.z". So I'm only willing to add that feature if we are sure it's a standard things working in most environments.

|

The API looks pretty standard to me. Firefox, Chrome, Opera have been supporting it for about 9 years, Safari for almost 6. I didn't find out when Edge 14 was released, but they're at version 112 now.

Since browsers don't want to annoy their users, many don't allow websites to ask for permissions without user interaction. So the workflow would be something like: The user has to press a button "please ask for permission", then the browser opens a dialog "do you want to grant this website permission to show notifications?" and only then it works. So I don't think it's an annoying popup-mess, especially since system notifications don't capture the focus and typically vanish after a few seconds. If that feature is hidden behind a button on the config page, it shouldn't lead to surprises. Especially since users can always revoke that permission. |

11 May 2023, Stefan Ritt, Suggestion, Desktop notifications for messages

|

Ok, I implemented desktop notifications. In the MIDAS config page, you can now enable browser notifications for the different types of messages. I am not sure this works perfectly, but it is a starting point. So please let me know if there is any issue.

Stefan |

10 May 2023, Lukas Gerritzen, Suggestion, Make sequencer more compatible with mobile devices

|

When trying to select a run script on an iPad or other mobile device, you cannot enter subdirectories. This is caused by the following part:

if (script.substring(0, 1) === "[") {

// refuse to load script if the selected a subdirectory

return;

}

and the fact that the <option> elements are listening for double click events, which seem to be impossible on a mobile device.

The following modification allows browsing the directories without changing the double click behaviour on a desktop:

diff --git a/resources/load_script.html b/resources/load_script.html

index 41bfdccd..36caa57f 100644

--- a/resources/load_script.html

+++ b/resources/load_script.html

@@ -59,6 +59,28 @@

</div>

<script>

+ document.getElementById("msg_sel").onchange = function() {

+ script = this.value;

+ button = document.getElementById("load_button");

+ if (script.substring(0, 4) === "[..]") {

+ // Change button to go back

+ enable_button_by_id("load_button");

+ button.innerHTML = "Back";

+ button.onclick = up_subdir;

+ } else if (script.substring(0, 1) === "[") {

+ // Change button to load subdirectory

+ enable_button_by_id("load_button");

+ button.innerHTML = "Enter subdirectory";

+ button.onclick = load_subdir;

+ } else {

+ // Change button to load script

+ enable_button_by_id("load_button");

+ button = document.getElementById("load_button");

+ button.innerHTML = "Load script";

+ button.onclick = load_script;

+ }

+ }

+

function set_if_changed(id, value)

{

var e = document.getElementById(id);

This makes the code quoted above redundant, so the check can actually be omitted. |

10 May 2023, Stefan Ritt, Suggestion, Make sequencer more compatible with mobile devices

|

| Lukas Gerritzen wrote: | When trying to select a run script on an iPad or other mobile device, you cannot enter subdirectories. This is caused by the following part:

|

We are working right now on a general file picker, which will also replace the file picker for the sequencer. So please wait until the new thing is out and then test it there.

Stefan |

08 May 2023, Alexey Kalinin, Forum, Scrript in sequencer

|

Hello,

I tried different ways to pass parameters to a bash script, but they seem to

be empty. What could be the problem?

We have a sequencer script like

ODBGET "/Runinfo/runnumber", firstrun

LOOP n,10

#changing HV

TRANSITION start

WAIT seconds,300

TRANSITION stop

ENDLOOP

ODBGET "/Runinfo/runnumber", lastrun

SCRIPT /.../script.sh ,$firstrun ,$lastrun

and script.sh like

firstrun=$1

lastrun=$2

Thanks. Alexey. |

08 May 2023, Stefan Ritt, Forum, Scrript in sequencer

|

> I tried different ways to pass parameters to bash script, but there are seems to

> be empty, what could be the problem?

Indeed there was a bug in the sequencer with parameter passing to scripts. I fixed it

and committed the changes to the develop branch.

Stefan |

09 May 2023, Alexey Kalinin, Forum, Scrript in sequencer

|

Thanks. It works perfectly.

Another question is:

Is it possible to run an .msl sequencer script from the bash command line?

Maybe there is an easier way than

1 odbedit -c 'set "/sequencer/load filename" filename.msl'

2 odbedit -c 'set "/sequencer/load new file" TRUE'

3 odbedit -c 'set "/sequencer/start script" TRUE'

What is the best way to have a button starting sequencer

from /script (or /alias )?

Alexey.

> > I tried different ways to pass parameters to bash script, but there are seems to

> > be empty, what could be the problem?

>

> Indeed there was a bug in the sequencer with parameter passing to scripts. I fixed it

> and committed the changes to the develop branch.

>

> Stefan |

10 May 2023, Stefan Ritt, Forum, Scrript in sequencer

|

> Thanks. It works perfect.

> Another question is:

> Is it possible to run .msl seqscript from bash cmd?

> Maybe it's easier then

> 1 odbedit -c 'set "/sequencer/load filename" filename.msl'

> 2 odbedit -c 'set "/sequencer/load new file" TRUE'

> 3 odbedit -c 'set "/sequencer/start script" TRUE'

That will work.
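
For anyone who wants to trigger these three steps from a script rather than by hand, a minimal Python wrapper might look like the sketch below. It only assembles and runs the odbedit commands quoted above; the helper names are hypothetical, and dry_run defaults to True so nothing is executed unless requested.

```python
import shlex
import subprocess


def sequencer_start_commands(msl_file: str):
    """Build the three odbedit invocations quoted in this thread."""
    return [
        ["odbedit", "-c", f'set "/sequencer/load filename" {msl_file}'],
        ["odbedit", "-c", 'set "/sequencer/load new file" TRUE'],
        ["odbedit", "-c", 'set "/sequencer/start script" TRUE'],
    ]


def start_sequencer(msl_file: str, dry_run: bool = True) -> None:
    """Print the commands, or run them in order when dry_run is False."""
    for command in sequencer_start_commands(msl_file):
        if dry_run:
            print(shlex.join(command))
        else:
            # check=True stops at the first command that fails
            subprocess.run(command, check=True)


if __name__ == "__main__":
    start_sequencer("filename.msl")  # dry run: only prints the commands
```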

> What is the best way to have a button starting sequencer

> from /script (or /alias )?

Have a look at

https://daq00.triumf.ca/MidasWiki/index.php/Sequencer#Controlling_the_sequencer_from_custom_pages

where I put the necessary information.

Stefan |

26 Apr 2023, Martin Mueller, Forum, Problem with running midas odbxx frontends on a remote machine using the -h option

|

Hi

We have a problem with running midas frontends when they should connect to an experiment on a different machine using the -h option. Starting them locally works fine. Firewall is off on both systems, Enable non-localhost RPC and Disable RPC hosts check are set to 'yes', mserver is running on the machine that we want to connect to.

Error message looks like this:

...

Connect to experiment Mu3e on host 10.32.113.210...

OK

Init hardware...

terminate called after throwing an instance of 'mexception'

what():

/home/mu3e/midas/include/odbxx.h:1102: Wrong key type in XML file

Stack trace:

1 0x00000000000042D828 (null) + 4380712

2 0x00000000000048ED4D midas::odb::odb_from_xml(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&) + 605

3 0x0000000000004999BD midas::odb::odb(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&) + 317

4 0x000000000000495383 midas::odb::read_key(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&) + 1459

5 0x0000000000004971E3 midas::odb::connect(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, bool, bool) + 259

6 0x000000000000497636 midas::odb::connect(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, bool, bool) + 502

7 0x00000000000049883B midas::odb::connect_and_fix_structure(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >) + 171

8 0x0000000000004385EF setup_odb() + 8351

9 0x00000000000043B2E6 frontend_init() + 22

10 0x000000000000433304 main + 1540

11 0x0000007F8C6FE3724D __libc_start_main + 239

12 0x000000000000433F7A _start + 42

Aborted (core dumped)

We have the same problem for all our frontends. When we want to start them locally they work. Starting them locally with ./frontend -h localhost also reproduces the error above.

The error can also be reproduced with the odbxx_test.cxx example in the midas repo by replacing line 22 in midas/examples/odbxx/odbxx_test.cxx (cm_connect_experiment(NULL, NULL, "test", NULL);) with cm_connect_experiment("localhost", "Mu3e", "test", NULL); (Put the name of the experiment instead of "Mu3e")

running odbxx_test locally gives us then the same error as our other frontend.

Thanks in advance,

Martin |

27 Apr 2023, Konstantin Olchanski, Forum, Problem with running midas odbxx frontends on a remote machine using the -h option

|

Looks like your MIDAS is built without debug information (-O2 -g), the stack trace does not have file names and line numbers. Please rebuild with debug information and report the stack trace. Thanks. K.O.

> Connect to experiment Mu3e on host 10.32.113.210...

> OK

> Init hardware...

> terminate called after throwing an instance of 'mexception'

> what():

> /home/mu3e/midas/include/odbxx.h:1102: Wrong key type in XML file

> Stack trace:

> 1 0x00000000000042D828 (null) + 4380712

> 2 0x00000000000048ED4D midas::odb::odb_from_xml(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&) + 605

> 3 0x0000000000004999BD midas::odb::odb(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&) + 317

> 4 0x000000000000495383 midas::odb::read_key(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&) + 1459

> 5 0x0000000000004971E3 midas::odb::connect(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, bool, bool) + 259

> 6 0x000000000000497636 midas::odb::connect(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, bool, bool) + 502

> 7 0x00000000000049883B midas::odb::connect_and_fix_structure(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >) + 171

> 8 0x0000000000004385EF setup_odb() + 8351

> 9 0x00000000000043B2E6 frontend_init() + 22

> 10 0x000000000000433304 main + 1540

> 11 0x0000007F8C6FE3724D __libc_start_main + 239

> 12 0x000000000000433F7A _start + 42

>

> Aborted (core dumped)

K.O. |

28 Apr 2023, Martin Mueller, Forum, Problem with running midas odbxx frontends on a remote machine using the -h option

|

> Looks like your MIDAS is built without debug information (-O2 -g), the stack trace does not have file names and line numbers. Please rebuild with debug information and report the stack trace. Thanks. K.O.

>

> > Connect to experiment Mu3e on host 10.32.113.210...

> > OK

> > Init hardware...

> > terminate called after throwing an instance of 'mexception'

> > what():

> > /home/mu3e/midas/include/odbxx.h:1102: Wrong key type in XML file

> > Stack trace:

> > 1 0x00000000000042D828 (null) + 4380712

> > 2 0x00000000000048ED4D midas::odb::odb_from_xml(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&) + 605

> > 3 0x0000000000004999BD midas::odb::odb(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&) + 317

> > 4 0x000000000000495383 midas::odb::read_key(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&) + 1459

> > 5 0x0000000000004971E3 midas::odb::connect(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, bool, bool) + 259

> > 6 0x000000000000497636 midas::odb::connect(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, bool, bool) + 502

> > 7 0x00000000000049883B midas::odb::connect_and_fix_structure(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >) + 171

> > 8 0x0000000000004385EF setup_odb() + 8351

> > 9 0x00000000000043B2E6 frontend_init() + 22

> > 10 0x000000000000433304 main + 1540

> > 11 0x0000007F8C6FE3724D __libc_start_main + 239

> > 12 0x000000000000433F7A _start + 42

> >

> > Aborted (core dumped)

>

> K.O.

As I said, we can easily reproduce this with midas/examples/odbxx/odbxx_test.cxx (with cm_connect_experiment changed to "localhost")

Stack trace of odbxx_test with line numbers:

Set ODB key "/Test/Settings/String Array 10[0...9]" = ["","","","","","","","","",""]

Created ODB key "/Test/Settings/Large String Array 10"

Set ODB key "/Test/Settings/Large String Array 10[0...9]" = ["","","","","","","","","",""]

[test,ERROR] [system.cxx:5104:recv_tcp2,ERROR] unexpected connection closure

[test,ERROR] [system.cxx:5158:ss_recv_net_command,ERROR] error receiving network command header, see messages

[test,ERROR] [midas.cxx:13900:rpc_call,ERROR] routine "db_copy_xml": error, ss_recv_net_command() status 411, program abort

Program received signal SIGABRT, Aborted.

0x00007ffff6665cdb in raise () from /lib64/libc.so.6

Missing separate debuginfos, use: zypper install libgcc_s1-debuginfo-11.3.0+git1637-150000.1.9.1.x86_64 libstdc++6-debuginfo-11.2.1+git610-1.3.9.x86_64 libz1-debuginfo-1.2.11-3.24.1.x86_64

(gdb) bt

#0 0x00007ffff6665cdb in raise () from /lib64/libc.so.6

#1 0x00007ffff6667375 in abort () from /lib64/libc.so.6

#2 0x0000000000431bba in rpc_call (routine_id=11249) at /home/labor/midas/src/midas.cxx:13904

#3 0x0000000000460c4e in db_copy_xml (hDB=1, hKey=1009608, buffer=0x7ffff7e9c010 "", buffer_size=0x7fffffffadbc, header=false) at /home/labor/midas/src/odb.cxx:8994

#4 0x000000000046fc4c in midas::odb::odb_from_xml (this=0x7fffffffb3f0, str=...) at /home/labor/midas/src/odbxx.cxx:133

#5 0x000000000040b3d9 in midas::odb::odb (this=0x7fffffffb3f0, str=...) at /home/labor/midas/include/odbxx.h:605

#6 0x000000000040b655 in midas::odb::odb (this=0x7fffffffb3f0, s=0x4a465a "/Test/Settings") at /home/labor/midas/include/odbxx.h:629

#7 0x0000000000407bba in main () at /home/labor/midas/examples/odbxx/odbxx_test.cxx:56

(gdb) |

28 Apr 2023, Konstantin Olchanski, Forum, Problem with running midas odbxx frontends on a remote machine using the -h option

|

> As i said we can easily reproduce this with midas/examples/odbxx/odbxx_test.cpp (with cm_connect_experiment changed to "localhost")

> [test,ERROR] [system.cxx:5104:recv_tcp2,ERROR] unexpected connection closure

> [test,ERROR] [system.cxx:5158:ss_recv_net_command,ERROR] error receiving network command header, see messages

> [test,ERROR] [midas.cxx:13900:rpc_call,ERROR] routine "db_copy_xml": error, ss_recv_net_command() status 411, program abort

ok, cool. looks like we crashed the mserver. either run mserver attached to gdb or enable mserver core dump, we need its stack trace,

the correct stack trace should be rooted in the handler for db_copy_xml.

but most likely odbxx is asking for more data than can be returned through the MIDAS RPC.

what is the ODB key passed to db_copy_xml() and how much data is in ODB at that key? (odbedit "du", right?).

K.O. |

28 Apr 2023, Martin Mueller, Forum, Problem with running midas odbxx frontends on a remote machine using the -h option

|

> > As i said we can easily reproduce this with midas/examples/odbxx/odbxx_test.cpp (with cm_connect_experiment changed to "localhost")

> > [test,ERROR] [system.cxx:5104:recv_tcp2,ERROR] unexpected connection closure

> > [test,ERROR] [system.cxx:5158:ss_recv_net_command,ERROR] error receiving network command header, see messages

> > [test,ERROR] [midas.cxx:13900:rpc_call,ERROR] routine "db_copy_xml": error, ss_recv_net_command() status 411, program abort

>

> ok, cool. looks like we crashed the mserver. either run mserver attached to gdb or enable mserver core dump, we need it's stack trace,

> the correct stack trace should be rooted in the handler for db_copy_xml.

>

> but most likely odbxx is asking for more data than can be returned through the MIDAS RPC.

>

> what is the ODB key passed to db_copy_xml() and how much data is in ODB at that key? (odbedit "du", right?).

>

> K.O.

Ok. Maybe I have to make this clearer. ANY odbxx access of a remote odb reproduces this error for us on multiple machines.

It does not matter how much data odbxx is asking for.

Something as simple as this reproduces the error, asking for a single integer:

int main() {

cm_connect_experiment("localhost", "Mu3e", "test", NULL);

midas::odb o = {

{"Int32 Key", 42}

};

o.connect("/Test/Settings");

cm_disconnect_experiment();

return 1;

}

at the same time this runs fine:

int main() {

cm_connect_experiment(NULL, NULL, "test", NULL);

midas::odb o = {

{"Int32 Key", 42}

};

o.connect("/Test/Settings");

cm_disconnect_experiment();

return 1;

}

in both cases mserver does not crash. I do not have a stack trace. There is also no error produced by mserver.

Last year we did not have these problems with the same midas frontends (For example in midas commit 9d2ef471 the code from above runs

fine). I am trying to pinpoint the exact commit where this stopped working now. |

28 Apr 2023, Konstantin Olchanski, Forum, Problem with running midas odbxx frontends on a remote machine using the -h option

|

> > > As i said we can easily reproduce this with midas/examples/odbxx/odbxx_test.cpp

> > ok, cool. looks like we crashed the mserver.

> Ok. Maybe i have to make this more clear. ANY odbxx access of a remote odb reproduces this error for us on multiple machines.

> It does not matter how much data odbxx is asking for.

> midas commit 9d2ef471 the code from above runs fine

so, a regression. ouch.

if core dumps are turned off, you will not "see" the mserver crash, because the main mserver is still running. it's the mserver forked to

serve your RPC connection that crashes.

> int main() {

> cm_connect_experiment("localhost", "Mu3e", "test", NULL);

> midas::odb o = {

> {"Int32 Key", 42}

> };

> o.connect("/Test/Settings");

> cm_disconnect_experiment();

> return 1;

> }

to debug this, after cm_connect_experiment() one has to put ::sleep(1000000000); (not that big, obviously),

then while it is sleeping do "ps -efw | grep mserver", this will show the mserver for the test program,

connect to it with gdb, wait for ::sleep() to finish and o.connect() to crash, with luck gdb will show

the crash stack trace in the mserver.

so easy to debug? this is why back in the 1970-ies clever people invented core dumps, only to have

even more clever people in the 2020-ies turn them off and generally make debugging more difficult (attaching

gdb to a running program is also disabled-by-default in some recent linuxes).

rant-off.

to check if core dumps work, do "killall -7 mserver". to enable core dumps on ubuntu, see here:

https://daq00.triumf.ca/DaqWiki/index.php/Ubuntu

last known-working point is:

commit 9d2ef471c4e4a5a325413e972862424549fa1ed5

Author: Ben Smith <bsmith@triumf.ca>

Date: Wed Jul 13 14:45:28 2022 -0700

Allow odbxx to handle connecting to "/" (avoid trying to read subkeys as "//Equipment" etc.

K.O. |

02 May 2023, Niklaus Berger, Forum, Problem with running midas odbxx frontends on a remote machine using the -h option

|

Thanks for all the helpful hints. When finally managing to evade all timeouts and attach the debugger in just the right moment, we find that we get a segfault in mserver at L827:

case RPC_DB_COPY_XML:

status = db_copy_xml(CHNDLE(0), CHNDLE(1), CSTRING(2), CPINT(3), CBOOL(4));

Some printf debugging then pointed us to the fact that the culprit is the pointer de-referencing in CBOOL(4). This in turn can be traced back to mrpc.cxx L282 ff, where the line with the arrow was missing:

{RPC_DB_COPY_XML, "db_copy_xml",

{{TID_INT32, RPC_IN},

{TID_INT32, RPC_IN},

{TID_ARRAY, RPC_OUT | RPC_VARARRAY},

{TID_INT32, RPC_IN | RPC_OUT},

-> {TID_BOOL, RPC_IN},

{0}}},

If we put that in, the mserver process completes peacefully and we get a segfault in the client ("Wrong key type in XML file") which we will attempt to debug next. Shall I create a pull request for the additional RPC argument or will you just fix this on the fly? |

02 May 2023, Niklaus Berger, Forum, Problem with running midas odbxx frontends on a remote machine using the -h option

|

And now we also fixed the client segfault, odb.cxx L8992 also needs to know about the header:

if (rpc_is_remote())

return rpc_call(RPC_DB_COPY_XML, hDB, hKey, buffer, buffer_size, header);

(last argument was missing before). |
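
The failure mode found here (a handler that reads one more argument than the parameter table declares or the caller sends) can be shown with a toy model. The Python sketch below is purely illustrative and is not the MIDAS RPC layer: the names PARAMS_OLD, PARAMS_FIXED, marshal and dispatch are hypothetical. Where the C code dereferenced an invalid pointer and crashed, the Python model raises IndexError.

```python
# Parameter tables before and after the fix (names mirror db_copy_xml's arguments)
PARAMS_OLD = ["hDB", "hKey", "buffer", "buffer_size"]
PARAMS_FIXED = PARAMS_OLD + ["header"]


def marshal(table, *args):
    """Caller side: only as many arguments are packed as the table declares."""
    return list(args[: len(table)])


def dispatch(packed):
    """Server side: the handler always expects five arguments."""
    hDB, hKey, buffer, buffer_size = packed[:4]
    header = packed[4]  # fails when the table declared only four entries
    return header


if __name__ == "__main__":
    call_args = (1, 1009608, b"", 100000, False)
    print("fixed table:", dispatch(marshal(PARAMS_FIXED, *call_args)))
    try:
        dispatch(marshal(PARAMS_OLD, *call_args))
    except IndexError as err:
        print("old table:", type(err).__name__, err)
```

Both ends have to agree: the table entry lets the server unpack the fifth argument, and the extra argument in the rpc_call() invocation makes the client actually send it.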

02 May 2023, Stefan Ritt, Forum, Problem with running midas odbxx frontends on a remote machine using the -h option

|

> Shall I create a pull request for the additional RPC argument or will you just fix this on the fly?

Just fix it on the fly yourself. It's an obvious bug, so please commit to develop.

Stefan |

01 May 2023, Giovanni Mazzitelli, Bug Report, python issue with mathplot lib vs odb query

|

Ciao,

we have a very strange issue with the Python library function client.odb_get("/") when the script runs as a MIDAS process and matplotlib is used.

We are developing a remote console that uses a Kafka producer to send the ODB, camera images and PMT waveforms to the INFN cloud, where Grafana makes the data available to non-expert shifters. It also sends MIDAS events for online reconstruction to the HTCondor queue on the cloud. The process works perfectly and allows us to run the online monitoring and data processing, where we have computing resources, in parallel with the standard MIDAS pipeline for file production etc. (our DAQ is underground at LNGS). Part of the work will be presented next week at CHEP.

The full code is available at https://github.com/CYGNUS-RD/middleware/blob/master/dev/event_producer_s3.py but to show the strange behaviour I report a test script here:

----

import os
import sys

def main(verbose=False):
    from matplotlib import pyplot as plt
    import time
    import midas
    import midas.client

    client = midas.client.MidasClient("middleware")
    buffer_handle = client.open_event_buffer("SYSTEM", None, 1000000000)
    request_id = client.register_event_request(buffer_handle, sampling_type=2)

    fpath = os.path.dirname(os.path.realpath(sys.argv[0]))

    while True:
        # Read the whole ODB; this is the call that fails
        odb = client.odb_get("/")
        if verbose:
            print(odb)
        start1 = time.time()

        client.communicate(10)
        time.sleep(1)

    client.deregister_event_request(buffer_handle, request_id)
    client.disconnect()

----

If I run it interactively from the command line, with or without matplotlib, everything is OK.

When I run it as a MIDAS "program" I get:

-----

Traceback (most recent call last):
  File "/home/standard/daq/middleware/dev/test_midas_error.py", line 48, in <module>
    main(verbose=options.verbose)
  File "/home/standard/daq/middleware/dev/test_midas_error.py", line 29, in main
    odb = client.odb_get("/")
  File "/home/standard/packages/midas/python/midas/client.py", line 354, in odb_get
    retval = midas.safe_to_json(buf.value, use_ordered_dict=True)
  File "/home/standard/packages/midas/python/midas/__init__.py", line 552, in safe_to_json
    return json.loads(decoded, strict=False, object_pairs_hook=collections.OrderedDict)
  File "/usr/lib/python3.8/json/__init__.py", line 370, in loads
    return cls(**kw).decode(s)
  File "/usr/lib/python3.8/json/decoder.py", line 337, in decode
    obj, end = self.raw_decode(s, idx=_w(s, 0).end())
  File "/usr/lib/python3.8/json/decoder.py", line 353, in raw_decode
    obj, end = self.scan_once(s, idx)
json.decoder.JSONDecodeError: Expecting property name enclosed in double quotes: line 300 column 26 (char 17535)

----

If I comment out the import of matplotlib, everything works perfectly again, also as a MIDAS program.

It seems that there is a difference between the two ways of running the code, and that the import of matplotlib alone is sufficient to corrupt the ODB in some way. Any ideas? |

01 May 2023, Ben Smith, Bug Report, python issue with mathplot lib vs odb query

|

> It seems that there is a difference between the two ways of running the code, and that

> the import of matplotlib alone is sufficient to corrupt the ODB in some way. Any ideas?

I can't reproduce this on my machines, so this is going to be fun to debug!

Can you try running the program below please? It takes the important bits from odb_get() but prints out the string before we try to parse it as JSON. Feel free to send me the output via email (bsmith@triumf.ca) if you don't want to post your entire ODB dump in the elog.

import sys
import os
import time
import midas
import midas.client
import ctypes

def debug_get(client):
    c_path = ctypes.create_string_buffer(b"/")
    hKey = ctypes.c_int()
    client.lib.c_db_find_key(client.hDB, 0, c_path, ctypes.byref(hKey))

    buf = ctypes.c_char_p()
    bufsize = ctypes.c_int()
    bufend = ctypes.c_int()
    client.lib.c_db_copy_json_save(client.hDB, hKey, ctypes.byref(buf), ctypes.byref(bufsize), ctypes.byref(bufend))

    print("-" * 80)
    print("FULL DUMP")
    print("-" * 80)
    print(buf.value)
    print("-" * 80)
    print("Chars 17000-18000")
    print("-" * 80)
    print(buf.value[17000:18000])
    print("-" * 80)

    as_dict = midas.safe_to_json(buf.value, use_ordered_dict=True)
    client.lib.c_free(buf)
    return as_dict

def main(verbose=False):
    client = midas.client.MidasClient("middleware")
    buffer_handle = client.open_event_buffer("SYSTEM", None, 1000000000)
    request_id = client.register_event_request(buffer_handle, sampling_type=2)

    fpath = os.path.dirname(os.path.realpath(sys.argv[0]))

    while True:
        # odb = client.odb_get("/")
        odb = debug_get(client)
        if verbose:
            print(odb)
        start1 = time.time()

        client.communicate(10)
        time.sleep(1)

    client.deregister_event_request(buffer_handle, request_id)
    client.disconnect()

if __name__ == "__main__":
    main()

|

01 May 2023, Giovanni Mazzitelli, Bug Report, python issue with mathplot lib vs odb query

|

> > It seems that there is a difference between the two ways of running the code, and that

> > the import of matplotlib alone is sufficient to corrupt the ODB in some way. Any ideas?

>

> I can't reproduce this on my machines, so this is going to be fun to debug!

>

> Can you try running the program below please? It takes the important bits from odb_get() but prints out the string before we try to parse it as JSON. Feel free to send me the output via email (bsmith@triumf.ca) if you don't want to post your entire ODB dump in the elog.

Thank you!

I added the matplotlib import as follows:

#!/usr/bin/env python3

import sys
import os
import time
import midas
import midas.client
import ctypes
from matplotlib import pyplot as plt

def debug_get(client):
    c_path = ctypes.create_string_buffer(b"/")
    hKey = ctypes.c_int()
    client.lib.c_db_find_key(client.hDB, 0, c_path, ctypes.byref(hKey))

    buf = ctypes.c_char_p()
    bufsize = ctypes.c_int()
    bufend = ctypes.c_int()
    client.lib.c_db_copy_json_save(client.hDB, hKey, ctypes.byref(buf), ctypes.byref(bufsize), ctypes.byref(bufend))

    print("-" * 80)
    print("FULL DUMP")
    print("-" * 80)
    print(buf.value)
    print("-" * 80)
    print("Chars 17000-18000")
    print("-" * 80)
    print(buf.value[17000:18000])
    print("-" * 80)

    as_dict = midas.safe_to_json(buf.value, use_ordered_dict=True)
    client.lib.c_free(buf)
    return as_dict

def main(verbose=False):
    client = midas.client.MidasClient("middleware")
    buffer_handle = client.open_event_buffer("SYSTEM", None, 1000000000)
    request_id = client.register_event_request(buffer_handle, sampling_type=2)

    fpath = os.path.dirname(os.path.realpath(sys.argv[0]))

    while True:
        # odb = client.odb_get("/")
        odb = debug_get(client)
        if verbose:
            print(odb)
        start1 = time.time()

        client.communicate(10)
        time.sleep(1)

    client.deregister_event_request(buffer_handle, request_id)
    client.disconnect()

if __name__ == "__main__":
    from optparse import OptionParser
    parser = OptionParser(usage='usage: %prog\t ')
    parser.add_option('-v', '--verbose', dest='verbose', action="store_true", default=False, help='verbose output;')
    (options, args) = parser.parse_args()
    main(verbose=options.verbose)

I then tested the code in interactive mode without any error. As soon as I submit it as a MIDAS "Program" I get the attached output.

thank you again, Giovanni |

01 May 2023, Ben Smith, Bug Report, python issue with mathplot lib vs odb query

|

Looks like a localisation issue. Your floats are formatted as "6,6584e+01", whereas the JSON decoder expects "6.6584e+01".

Can you run the following few lines please? Then I'll be able to write a test using the same setup as you:

import locale

print(locale.getlocale())

from matplotlib import pyplot as plt

print(locale.getlocale())
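A complementary check, as a minimal sketch: the decimal separator used by C-level number formatting can be read from `locale.localeconv()`, and the numeric category can be forced back to the "C" locale. The helper name `decimal_point` is made up for this example. Whether such a reset is enough as a workaround for an unpatched MIDAS is an assumption and is not confirmed in this thread.

```python
import locale


def decimal_point():
    """Return the decimal separator of the current numeric locale."""
    return locale.localeconv()["decimal_point"]


print("at start:", repr(decimal_point()))

# Import matplotlib (or any other suspect library) at this point and
# print decimal_point() again to see whether the import changed it.

# Force the numeric category back to the "C" locale, which uses a dot.
locale.setlocale(locale.LC_NUMERIC, "C")
print("after reset:", repr(decimal_point()))
```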

|

01 May 2023, Ben Smith, Bug Report, python issue with mathplot lib vs odb query

|

> Looks like a localisation issue. Your floats are formatted as "6,6584e+01", whereas the JSON decoder expects "6.6584e+01".

This should be fixed in the latest commit to the midas develop branch. The JSON specification requires a dot for the decimal separator, so we must ignore the user's locale when formatting floats/doubles for JSON.
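To illustrate why a comma breaks the parser, here is a small standalone sketch that does not use MIDAS at all. The JSON text is made up, and the comma is inserted by string replacement to imitate what a comma-decimal locale would produce. After reading `6` the decoder treats the comma as the separator before the next key, so it fails when it finds a digit instead of a quoted property name.

```python
import json

# Made-up JSON text with a dot as decimal separator.
good = '{"value": 6.6584e+01, "other": 1}'

# Imitate output formatted under a comma-decimal locale.
bad = good.replace("6.6584", "6,6584")

print("dot separator parses to:", json.loads(good)["value"])

try:
    json.loads(bad)
except json.JSONDecodeError as exc:
    print("comma separator fails:", exc.msg)
```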

I've tested the fix on my machine by manually changing the locale, and also added an automated test in the python directory. |

01 May 2023, Giovanni Mazzitelli, Bug Report, python issue with mathplot lib vs odb query

|

> > Looks like a localisation issue. Your floats are formatted as "6,6584e+01", whereas the JSON decoder expects "6.6584e+01".

>

> This should be fixed in the latest commit to the midas develop branch. The JSON specification requires a dot for the decimal separator, so we must ignore the user's locale when formatting floats/doubles for JSON.

>

> I've tested the fix on my machine by manually changing the locale, and also added an automated test in the python directory.

Thanks very much Ben,

so if I understand correctly, we have to update MIDAS to the latest available develop branch? Can you send me the link, to be sure I install the right update?