| ID |

Date |

Author |

Topic |

Subject |

|

2232

|

25 Jun 2021 |

Stefan Ritt | Bug Report | my html modbvalue thing is not working? | Can you post your complete page here so that I can have a look?

Stefan |

|

2249

|

29 Jun 2021 |

Lukas Gerritzen | Bug Report | modbcheckbox behaves erroneous with UINT32 variables | For boolean and INT32 variables, modbcheckbox works as expected. You click, it

sets the variable to true or 1, the checkbox stays checked until you click again

and it's being set back to 0.

For UINT32 variables, you can turn the variable "on", but the checkbox visually

becomes unchecked immediately. Clicking again does not set the variable to

0/false and the tick visually appears for a fraction of a second, but vanishes

again. |

|

2250

|

30 Jun 2021 |

Stefan Ritt | Bug Report | modbcheckbox behaves erroneous with UINT32 variables | > For boolean and INT32 variables, modbcheckbox works as expected. You click, it

> sets the variable to true or 1, the checkbox stays checked until you click again

> and it's being set back to 0.

>

> For UINT32 variables, you can turn the variable "on", but the checkbox visually

> becomes unchecked immediately. Clicking again does not set the variable to

> 0/false and the tick visually appears for a fraction of a second, but vanishes

> again.

Thanks for reporting that bug. Fixed in

https://bitbucket.org/tmidas/midas/commits/4ef26bdc5a32716efe8e8f0e9ce328bafad6a7bf

Stefan |

|

2252

|

30 Jun 2021 |

Lukas Gerritzen | Bug Report | modbcheckbox behaves erroneous with UINT32 variables | Thanks for the quick fix. |

|

2256

|

09 Jul 2021 |

Konstantin Olchanski | Bug Report | cmake question | cmake check and mate in 1 move. please help.

the midas cmake file has a typo in the ROOT_CXX_FLAGS, I fixed it and now I am dead in the

water, need help from cmake experts and pushers.

On Ubuntu:

ROOT_CXX_FLAGS has -std=c++14

midas cmake defines -std=gnu++11 (never mind that I asked for c++11, not "c++11 with GNU

extensions")

the two compiler flags collide and the build explodes, the best I can tell c++11 prevails

and ROOT header files blow up because they expect c++14.

if I remove the midas cmake request for c++11, -std=gnu++11 is gone, there is no conflict

with ROOT C++14 request and the build works just fine.

but now it explodes on CentOS-7 because by default, c++11 is not enabled. (include <mutex>

blows up).

what a mess.

K.O. |

|

2260

|

11 Jul 2021 |

Konstantin Olchanski | Bug Report | cmake with CMAKE_INSTALL_PREFIX fails | big thanks to Andreas S. for getting most of this figured out. I now understand

much better how cmake installs things and how it generates config files, both

find_package(midas) style and install(export) style.

with the latest updates, CMAKE_INSTALL_PREFIX should work correctly. I now understand how it works,

how to use it and how to test it, it should not break again.

for posterity, my comments on Andreas's pull request:

thank you for providing this code, it was very helpful. at the end I implemented things slightly differently. It took me a while to understand that I have to provide 2 “install” modes, for your case, I need to

“install” the header files and everything works “the cmake way”, for our normal case, we use include files in-place and have to include all the git submodules to the include path. I am quite happy with the

result. K.O.

K.O. |

|

2263

|

13 Jul 2021 |

Konstantin Olchanski | Bug Report | cmake question | > cmake check and mate in 1 move. please help.

> -std=c++11 and -std=c++14 collision...

I have a solution implemented for this, I am not happy with it, Stefan is not happy with it. See

discussion: https://bitbucket.org/tmidas/midas/commits/50a15aa70a4fe3927764605e8964b55a3bb1732b

K.O. |

|

2264

|

14 Jul 2021 |

Konstantin Olchanski | Bug Report | cmake question | > > cmake check and mate in 1 move. please help.

> > -std=c++11 and -std=c++14 collision...

>

> I have a solution implemented for this, I am not happy with it, Stefan is not happy with it. See

> discussion: https://bitbucket.org/tmidas/midas/commits/50a15aa70a4fe3927764605e8964b55a3bb1732b

>

I figured it out, solution is to use:

target_compile_features(midas PUBLIC cxx_std_11)

this is how it works:

- centos-7 (g++ has c++11 off by default): -std=gnu++11 is added automatically (not -std=c++11, but

probably correct, as some c++11 functions were available as gnu extensions)

- ubuntu-20.04 LTS without ROOT: nothing added (I guess correct, g++ has c++11 enabled by default)

- ubuntu-20.04 LTS with -std=c++14 from ROOT: nothing added, c++14 as requested by ROOT is in effect.

- macos without ROOT: -std=gnu++11 is added automatically

- macos with -std=c++11 from ROOT: ditto, so both -std=c++11 and -std=gnu++11 are present in this order,

wrong-ish, but works.

and good luck figuring this out just from cmake documentation:

https://cmake.org/cmake/help/latest/command/target_compile_features.html

K.O. |
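Editor's note: since the flag that ends up in effect differs per platform, it can help to check from inside the code which standard the compiler actually uses. The following is a minimal sketch, not part of the midas sources; the function name `cxx_standard_in_effect` is hypothetical, and it assumes g++ or clang, which set the standard `__cplusplus` macro according to the active `-std=` flag.

```cpp
#include <string>

// Report the C++ standard the compiler is actually using, based on the
// standard __cplusplus macro (hypothetical helper, for illustration only).
inline std::string cxx_standard_in_effect() {
#if __cplusplus >= 202002L
    return "c++20 or newer";
#elif __cplusplus >= 201703L
    return "c++17";
#elif __cplusplus >= 201402L
    return "c++14";
#elif __cplusplus >= 201103L
    return "c++11";
#else
    return "pre-c++11";
#endif
}

// Stop the build early if the minimum requested standard (C++11) is not met.
static_assert(__cplusplus >= 201103L, "this code needs at least C++11");
```

Printing the returned string from a small test program on each platform shows whether the c++11 or the c++14 request prevailed.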

|

2267

|

31 Jul 2021 |

Peter Kunz | Bug Report | ss_shm_name: unsupported shared memory type, bye! | I ran into a problem trying to compile the latest MIDAS version on a Fedora

system.

mhttpd and odbedit return:

ss_shm_name: unsupported shared memory type, bye!

check_shm_type: preferred POSIXv4_SHM got SYSV_SHM

The check returns SYSV_SHM which doesn't seem to be supported in ss_shm_name.

Is there an easy solution for this?

Thanks. |

|

2268

|

02 Aug 2021 |

Andreas Suter | Bug Report | cmake with CMAKE_INSTALL_PREFIX fails | Dear Konstantin,

I have tried your adapted version. You have already done quite a job, and the result is more consistent than what I was suggesting.

Yet, I still have a problem (git sha2 2d3872dfd31) when starting on a clean system (i.e. no midas present yet):

Without CMAKE_INSTALL_PREFIX set, everything is fine.

However, when setting CMAKE_INSTALL_PREFIX, I get the following error message on the build level (cmake --build ./ -- VERBOSE=1) from the manalyzer:

[ 32%] Building CXX object manalyzer/CMakeFiles/manalyzer.dir/manalyzer.cxx.o

cd /home/l_musr_tst/Tmp/midas/build/manalyzer && /usr/bin/c++ -DHAVE_FTPLIB -DHAVE_MIDAS -DHAVE_ROOT_HTTP -DHAVE_THTTP_SERVER -DHAVE_TMFE -DHAVE_ZLIB -D_LARGEFILE64_SOURCE -I/home/l_musr_tst/Tmp/midas/manalyzer -I/usr/local/root/include -O2 -g -Wall -Wformat=2 -Wno-format-nonliteral -Wno-strict-aliasing -Wuninitialized -Wno-unused-function -std=c++11 -pipe -fsigned-char -pthread -DHAVE_ROOT -std=gnu++11 -o CMakeFiles/manalyzer.dir/manalyzer.cxx.o -c /home/l_musr_tst/Tmp/midas/manalyzer/manalyzer.cxx

In file included from /home/l_musr_tst/Tmp/midas/manalyzer/manalyzer.cxx:14:0:

/home/l_musr_tst/Tmp/midas/manalyzer/manalyzer.h:13:21: fatal error: midasio.h: No such file or directory

#include "midasio.h"

^

compilation terminated.

Obviously, still some include paths are missing. I tried quickly to see if an easy fix is possible, but I failed.

Question: is it possible to use manalyzer without midas? I am asking since the MIDAS_FOUND flag is confusing me.

> big thanks to Andreas S. for getting most of this figured out. I now understand

> much better how cmake installs things and how it generates config files, both

> find_package(midas) style and install(export) style.

>

> with the latest updates, CMAKE_INSTALL_PREFIX should work correctly. I now understand how it works,

> how to use it and how to test it, it should not break again.

>

> for posterity, my comments on Andreas's pull request:

>

> thank you for providing this code, it was very helpful. at the end I implemented things slightly differently. It took me a while to understand that I have to provide 2 “install” modes, for your case, I need to

> “install” the header files and everything works “the cmake way”, for our normal case, we use include files in-place and have to include all the git submodules to the include path. I am quite happy with the

> result. K.O.

>

> K.O. |

|

2269

|

05 Aug 2021 |

Stefan Ritt | Bug Report | mhttpd WebServer ODBTree initialization | Well, we all see it here at PSI, so this is enough reason to turn this off by default. Shall

I do it? |

|

2270

|

19 Aug 2021 |

Konstantin Olchanski | Bug Report | select() FD_SETSIZE overrun | I am looking at the mlogger in the ALPHA anti-hydrogen experiment at CERN. It is

mysteriously misbehaving during run start and stop.

The problem turns out to be with the select() system call.

The corresponding FD_SET(), FD_ISSET() & co operate on an array of fixed size

FD_SETSIZE, value 1024, in my case. But the socket number is 1409, so we overrun

the FD_SET() array. Ouch.

I see that all uses of select() in midas have no protection against this.

(we should probably move away from select() to newer poll() or whatever it is)

Why does mlogger open so many file descriptors? The usual, scaling problems in the

history. The old midas history does not reuse file descriptors, so opens the same

3 history files (.hst, .idx, etc) for each history event. The new FILE history

opens just one file per history event. But if the number of events is bigger than

1024, we run into the same trouble.

(BTW, the system limit on file descriptors is 4096 on the affected machine, 1024

on some other machines, see "limit" or "ulimit -a").

K.O. |
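Editor's note: the two options mentioned above (protecting FD_SET() against large descriptors, or moving to poll()) can be sketched as follows. This is a minimal illustration for POSIX systems, not the midas implementation; the helper names `fd_fits_select`, `wait_readable` and `demo_pipe_is_readable` are hypothetical.

```cpp
#include <poll.h>
#include <sys/select.h>
#include <unistd.h>

// True if fd can be passed to FD_SET()/FD_ISSET() without overrunning
// the fixed-size fd_set (hypothetical guard, for illustration).
inline bool fd_fits_select(int fd) {
    return fd >= 0 && fd < FD_SETSIZE;
}

// Wait until fd is readable using poll(), which takes an array of
// descriptors and therefore has no FD_SETSIZE limit.
// Returns true if readable, false on timeout or error.
inline bool wait_readable(int fd, int timeout_ms) {
    struct pollfd pfd;
    pfd.fd = fd;
    pfd.events = POLLIN;
    pfd.revents = 0;
    int n = poll(&pfd, 1, timeout_ms);
    return n > 0 && (pfd.revents & POLLIN) != 0;
}

// Small self-test: a pipe with one byte written must report readable.
inline bool demo_pipe_is_readable() {
    int fds[2];
    if (pipe(fds) != 0)
        return false;
    const char byte = 'x';
    bool ok = write(fds[1], &byte, 1) == 1 && wait_readable(fds[0], 100);
    close(fds[0]);
    close(fds[1]);
    return ok;
}
```

With a guard like `fd_fits_select`, a socket number such as 1409 would be rejected before FD_SET() is called instead of silently writing past the end of the array.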

|

2271

|

20 Aug 2021 |

Stefan Ritt | Bug Report | select() FD_SETSIZE overrun | > I am looking at the mlogger in the ALPHA anti-hydrogen experiment at CERN. It is

> mysteriously misbehaving during run start and stop.

>

> The problem turns out to be with the select() system call.

>

> The corresponding FD_SET(), FD_ISSET() & co operate on an array of fixed size

> FD_SETSIZE, value 1024, in my case. But the socket number is 1409, so we overrun

> the FD_SET() array. Ouch.

>

> I see that all uses of select() in midas have no protection against this.

>

> (we should probably move away from select() to newer poll() or whatever it is)

>

> Why does mlogger open so many file descriptors? The usual, scaling problems in the

> history. The old midas history does not reuse file descriptors, so opens the same

> 3 history files (.hst, .idx, etc) for each history event. The new FILE history

> opens just one file per history event. But if the number of events is bigger than

> 1024, we run into the same trouble.

>

> (BTW, the system limit on file descriptors is 4096 on the affected machine, 1024

> on some other machines, see "limit" or "ulimit -a").

>

> K.O.

I cannot imagine that you have more than 1024 different events in ALPHA. That wouldn't

fit on your status page.

I have some other suspicion: The logger opens a history file on access, then closes it

again after writing to it. In the old days we had a case where we had a return from the

write function BEFORE the file has been closed. This is kind of a memory leak, but with

file descriptors. After some time of course you run out of file descriptors and crash.

That bug was fixed many years ago, but it sounds to me like there is another

"fd leak" somewhere. You should add some debugging in the history code to print the

file descriptors when you open a file and when you leave that routine. The leak could

however also be somewhere else, like writing to the message file, ODB dump, ...

The right thing of course would be to rewrite everything with std::ofstream which

automatically closes the file when the object goes out of scope.

Stefan |
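Editor's note: the std::ofstream approach suggested above can be sketched as follows. This is a minimal illustration, not the midas history code; the function name `append_line` is hypothetical. The point is that the stream's destructor closes the file on every return path, so an early return cannot leak a descriptor.

```cpp
#include <fstream>
#include <string>

// Append one line to a file (hypothetical helper, for illustration).
// The std::ofstream destructor closes the file on every return path.
inline bool append_line(const std::string& path, const std::string& line) {
    std::ofstream out(path, std::ios::app);
    if (!out.is_open())
        return false;   // open failed, so there is nothing to close
    if (line.empty())
        return false;   // early return: the destructor still closes the file
    out << line << '\n';
    out.flush();        // push the data out so write errors show up here
    return out.good();
}
```

The same function written with open()/close() or fopen()/fclose() would need an explicit close before each return, which is where a leak of the kind described above can creep in.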

|

2278

|

28 Sep 2021 |

Richard Longland | Bug Report | Install clash between MIDAS 2020-08 and mscb | All,

I am performing a fresh install of MIDAS on an Ubuntu linux box. I follow the

usual installation procedure:

1) git clone https://bitbucket.org/tmidas/midas --recursive

2) cd midas

3) git checkout release/midas-2020-08

4) mkdir build

5) cd build

6) cmake ..

7) make

Step 3 warns me that

"warning: unable to rmdir 'manalyzer': Directory not empty" and

"warning: unable to rmdir 'midasio': Directory not empty"

Step 7 fails.

Compilation fails with an mhttpd error related to mscb:

mhttpd.cxx:8224:59: error: too few arguments to function 'int mscb_ping(int,

short unsigned int, int, int)'

8224 | status = mscb_ping(fd, (unsigned short) ind, 1);

I was able to get around this by rolling mscb back to some old version (commit

74468dd), but am extremely nervous about mix-and-matching the code this way.

Any advice would be greatly appreciated.

Cheers,

Richard |

|

2279

|

28 Sep 2021 |

Stefan Ritt | Bug Report | Install clash between MIDAS 2020-08 and mscb | > 1) git clone https://bitbucket.org/tmidas/midas --recursive

> 2) cd midas

> 3) git checkout release/midas-2020-08

> 4) mkdir build

> 5) cd build

> 6) cmake ..

> 7) make

When you do step 3), you get

~/tmp/midas$ git checkout release/midas-2020-08

warning: unable to rmdir 'manalyzer': Directory not empty

warning: unable to rmdir 'midasio': Directory not empty

M mjson

M mscb

M mvodb

M mxml

The 'M' in front of submodules like mscb tells you that you

have an older version of midas (namely midas-2020-08), but the

*current* submodules, which won't match. So you have to roll back

also the submodules with:

3.5) git submodule update --recursive

This fetches those versions of the submodules which match the

midas version 2020-08. See here for details:

https://git-scm.com/book/en/v2/Git-Tools-Submodules

From where did you get the command

git checkout release/xxxx ???

If you tell me the location of that documentation, I will take

care that it will be amended with the command

git submodule update --recursive

Best,

Stefan |

|

2280

|

29 Sep 2021 |

Richard Longland | Bug Report | Install clash between MIDAS 2020-08 and mscb | Thank you, Stefan.

I found these instructions under

1) The changelog: https://midas.triumf.ca/MidasWiki/index.php/Changelog#2020-12

2) Konstantin's elog announcements (e.g. https://midas.triumf.ca/elog/Midas/2089)

I do see reference to updating the submodules under the TRIUMF install

instructions

(https://midas.triumf.ca/MidasWiki/index.php/Setup_MIDAS_experiment_at_TRIUMF#Install_MIDAS)

although perhaps it can be clarified.

Cheers,

Richard |

|

2281

|

29 Sep 2021 |

Stefan Ritt | Bug Report | Install clash between MIDAS 2020-08 and mscb | > Thank you, Stefan.

>

> I found these instructions under

> 1) The changelog: https://midas.triumf.ca/MidasWiki/index.php/Changelog#2020-12

> 2) Konstantin's elog announcements (e.g. https://midas.triumf.ca/elog/Midas/2089)

>

> I do see reference to updating the submodules under the TRIUMF install

> instructions

> (https://midas.triumf.ca/MidasWiki/index.php/Setup_MIDAS_experiment_at_TRIUMF#Install_MIDAS)

> although perhaps it can be clarified.

>

> Cheers,

> Richard

Hi Richard,

I updated the documentation at

https://midas.triumf.ca/MidasWiki/index.php/Changelog#Updating_midas

by putting the submodule update command everywhere.

Best,

Stefan |

|

2296

|

29 Oct 2021 |

Frederik Wauters | Bug Report | midas::odb::iterator + operator | I have an ODB key that is a 16-element array:

{"FIR Energy", {

{"Energy Gap Value", std::array<uint32_t,16>(10) },

I can get the maximum of this array like

uint32_t max_value = *std::max_element(values.begin(),values.end());

but when I need the maximum of a sub range

uint32_t max_value = *std::max_element(values.begin(),values.begin()+4);

I get

/home/labor/new_daq/frontends/SIS3316Module.cpp:584:62: error: no match for ‘operator+’ (operand types are ‘midas::odb::iterator’ and ‘int’)

584 | max_value = *std::max_element(values.begin(),values.begin()+4);

| ~~~~~~~~~~~~~~^~

| | |

| | int

|

As the + operator is overloaded for midas::odb::iterator, I expected this to work.

(and yes, I can find the max element by accessing the elements one by one) |

|

2297

|

29 Oct 2021 |

Frederik Wauters | Bug Report | midas::odb::iterator + operator | work around | ok, so retrieving as a std::array (as it was defined) does not work

std::array<uint32_t,16> avalues = settings["FIR Energy"]["Energy Gap Value"];

but retrieving as an std::vector does, and then I have a standard c++ iterator which I can use in std stuff

std::vector<uint32_t> values = settings["FIR Energy"]["Energy Gap Value"];

> I have 16 array odb key

>

> {"FIR Energy", {

> {"Energy Gap Value", std::array<uint32_t,16>(10) },

>

> I can get the maximum of this array like

>

>

> uint32_t max_value = *std::max_element(values.begin(),values.end());

>

> but when I need the maximum of a sub range

>

> uint32_t max_value = *std::max_element(values.begin(),values.begin()+4);

>

> I get

>

> /home/labor/new_daq/frontends/SIS3316Module.cpp:584:62: error: no match for ‘operator+’ (operand types are ‘midas::odb::iterator’ and ‘int’)

> 584 | max_value = *std::max_element(values.begin(),values.begin()+4);

> | ~~~~~~~~~~~~~~^~

> | | |

> | | int

> |

>

> As the + operator is overloaded for midas::odb::iterator, I was expected this to work.

>

> (and yes, I can find the max element by accessing the elements on by one) |
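Editor's note: the workaround described above (copy the ODB array into a std::vector, then use ordinary random-access iterators) can be sketched as follows. This is a minimal illustration using only the standard library; a plain std::vector stands in for the values read from the ODB, and the helper name `max_of_first` is hypothetical.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// Maximum of the first n elements of values (hypothetical helper).
// std::vector iterators are random-access, so begin() + n is valid.
inline uint32_t max_of_first(const std::vector<uint32_t>& values, std::size_t n) {
    // Clamp n so the end iterator never passes values.end().
    n = std::min(n, values.size());
    if (n == 0)
        return 0;   // assumption: report 0 for an empty range
    return *std::max_element(values.begin(),
                             values.begin() + static_cast<std::ptrdiff_t>(n));
}
```

For example, `max_of_first(values, 4)` covers the same sub-range as `values.begin()` to `values.begin()+4` in the original post.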

|

2298

|

29 Oct 2021 |

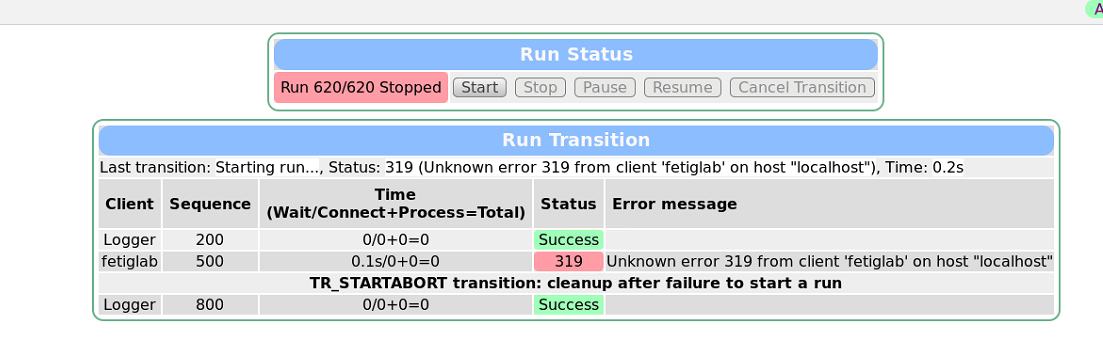

Kushal Kapoor | Bug Report | Unknown Error 319 from client | I’m trying to run MIDAS using a frontend code/client named “fetiglab”. The run stops

after 2-3 seconds with an error saying “Unknown error 319 from client ‘fetiglab’ on

localhost”.

The frontend code compiled without any errors and MIDAS reads the frontend

successfully. The error only appears when I start a new run in MIDAS. Here are a few

more details from the terminal:

11:46:32 [fetiglab,ERROR] [odb.cxx:11268:db_get_record,ERROR] struct size

mismatch for "/" (expected size: 1, size in ODB: 41920)

11:46:32 [Logger,INFO] Deleting previous file

"/home/rcmp/online3/run00621_000.root"

11:46:32 [ODBEdit,ERROR] [midas.cxx:5073:cm_transition,ERROR] transition START

aborted: client "fetiglab" returned status 319

11:46:32 [ODBEdit,ERROR] [midas.cxx:5246:cm_transition,ERROR] Could not start a

run: cm_transition() status 319, message 'Unknown error 319 from client

'fetiglab' on host "localhost"'

TR_STARTABORT transition: cleanup after failure to start a run

I’ve also enclosed a screenshot of this. Any suggestions would be highly

appreciated. Thanks. |

| Attachment 1: Screenshot_2021-10-26_114015.png

|

|

|