| ID | Date | Author | Topic | Subject |

| 2216 | 15 Jun 2021 | Konstantin Olchanski | Info | 1000 Mbytes/sec through midas achieved! |

I am sure everybody else has 10gige and 40gige networks and is sending terabytes of data before breakfast.

Myself, I only have one computer with a 10gige network link and a sufficient number of daq boards to fill it with data. Here is my success story of getting all this data through MIDAS.

This is the anti-matter experiment ALPHA-g, now under final assembly at CERN. The main particle detector is a long but thin cylindrical TPC. It surrounds the magnetic bottle (particle trap) where we make and study anti-hydrogen. There are 64 daq boards to read the TPC cathode pads and 8 daq boards to read the anode wires and to form the trigger. Each daq board can produce data at 80-90 Mbytes/sec (1gige links). Data is sent as UDP packets (no jumbo frames). The Altera FPGA firmware was done here at TRIUMF by Bryerton Shaw, Chris Pearson, Yair Lynn and myself.

The network interconnect is a 96-port Juniper switch with a 10gige uplink to the main daq computer (quad-core Intel(R) Xeon(R) CPU E3-1245 v6 @ 3.70GHz, 64 GBytes of DDR4 memory).

The MIDAS data path is: UDP packet receiver frontend -> event builder -> mlogger -> disk -> lazylogger -> CERN EOS cloud storage.

The first chore was to get all the UDP packets into the main computer. The "U" in UDP stands for "unreliable", and at first, UDP packets were disappearing pretty much anywhere they could. To fix this, in order:

- reading from the udp socket must be done in a dedicated thread (in the midas context, pauses to write statistics or check alarms result in lost udp packets)
- the udp socket buffer has to be very big
- maximum queue sizes must be enabled in the 10gige NIC
- ethernet flow control must be enabled on the 10gige link
- ethernet flow control must be enabled in the switch (to my surprise, many switches do not have working end-to-end ethernet flow control and lose UDP packets; ask me about this. Our big Juniper switch balked at first, but I got it working eventually)
- ethernet flow control must be enabled on the 1gige links to each daq module
- ethernet flow control must be enabled in the FPGA firmware (it's a checkbox in qsys)
- the FPGA firmware internally must have working back pressure and flow control (avalon and axi buses)
- ideally, this back pressure should feed back to the trigger. ALPHA-g does not have this (it does not need it).
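A minimal sketch of the "very big socket buffer" item, assuming Linux and plain BSD sockets. The helper name `open_udp_socket_with_big_buffer` is hypothetical (not part of MIDAS). Because the kernel may grant less than requested, the sketch reads the granted size back:

```cpp
#include <cassert>
#include <sys/socket.h>
#include <unistd.h>

// Hypothetical helper: open a UDP socket and request a large receive buffer.
// On Linux the kernel doubles the requested value (bookkeeping overhead) and
// caps it at net.core.rmem_max, so the granted size is read back.
// Returns the socket fd (granted size in *granted_bytes), or -1 on error.
int open_udp_socket_with_big_buffer(int requested_bytes, int* granted_bytes) {
    int fd = socket(AF_INET, SOCK_DGRAM, 0);
    if (fd < 0) return -1;
    if (setsockopt(fd, SOL_SOCKET, SO_RCVBUF, &requested_bytes, sizeof(requested_bytes)) < 0) {
        close(fd);
        return -1;
    }
    int granted = 0;
    socklen_t len = sizeof(granted);
    if (getsockopt(fd, SOL_SOCKET, SO_RCVBUF, &granted, &len) < 0) {
        close(fd);
        return -1;
    }
    *granted_bytes = granted;
    return fd;
}
```

On Linux, multi-megabyte requests are silently capped unless the `net.core.rmem_max` sysctl is raised first, so it is worth logging the granted value at startup.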

The next chore was to multithread the UDP receiver frontend and to multithread the event builder. Stock single-threaded programs quickly max out at 100% CPU use and reach nowhere near 10gige data speeds.

Naive multithreading with two threads, a reader (read UDP packet, lock a mutex, put it into a deque, unlock, repeat) and a sender (lock the mutex, get a packet from the deque, unlock, bm_send_event(), repeat), spends all its time locking and unlocking the mutex and goes nowhere fast (with 1500-byte packets, that is about 600 kHz of lock/unlock at 10gige speed).

So one has to do everything in batches. Reader thread: accumulate 1000 udp packets in an std::vector, lock the mutex, dump this batch into a deque, unlock, repeat. Sender thread: lock the mutex, get 1000 packets from the deque, unlock, stuff the 1000 packets into 1 midas event, bm_send_event(), repeat.
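The batching scheme can be sketched with plain C++11 threads. This is an illustration under assumptions, not the actual ALPHA-g frontend: `BatchQueue` and `run_batched_pipeline` are hypothetical names, packets are fake 1500-byte vectors, and the sender only counts packets where a real one would pack each batch into one midas event and call bm_send_event(). At roughly 600 kHz of packets, 1000-packet batches cut the mutex traffic to about 600 lock/unlock per second on each side.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <mutex>
#include <thread>
#include <utility>
#include <vector>

using Packet = std::vector<char>;   // one UDP payload (stand-in)
using Batch = std::vector<Packet>;  // e.g. 1000 packets

// Deque of batches behind one mutex: each lock/unlock moves a whole batch.
class BatchQueue {
public:
    void push(Batch&& batch) {
        std::lock_guard<std::mutex> lock(mutex_);
        queue_.push_back(std::move(batch));
    }
    bool pop(Batch& out) {  // returns false if nothing is queued
        std::lock_guard<std::mutex> lock(mutex_);
        if (queue_.empty()) return false;
        out = std::move(queue_.front());
        queue_.pop_front();
        return true;
    }
private:
    std::mutex mutex_;
    std::deque<Batch> queue_;
};

struct PipelineStats {
    size_t batches_pushed = 0;  // number of mutex-protected pushes
    size_t packets_sent = 0;    // packets seen by the sender thread
};

PipelineStats run_batched_pipeline(size_t total_packets, size_t batch_size) {
    BatchQueue queue;
    PipelineStats stats;
    std::thread reader([&] {
        Batch batch;
        for (size_t i = 0; i < total_packets; ++i) {
            batch.emplace_back(1500, 'x');  // stand-in for one received packet
            if (batch.size() == batch_size || i + 1 == total_packets) {
                queue.push(std::move(batch));
                ++stats.batches_pushed;  // written only by the reader thread
                batch = Batch();
            }
        }
    });
    std::thread sender([&] {
        size_t received = 0;
        Batch batch;
        while (received < total_packets) {
            if (queue.pop(batch)) received += batch.size();  // here: 1 batch -> 1 midas event
            else std::this_thread::yield();
        }
        stats.packets_sent = received;  // written only by the sender thread
    });
    reader.join();
    sender.join();
    return stats;
}
```

For example, `run_batched_pipeline(10000, 1000)` moves 10000 packets with only 10 pushes. The sender simply yields when the queue is empty; a condition variable would avoid that spinning at the cost of a little more code.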

It takes me 5 of these multithreaded udp reader frontends to keep up with a 10gige link without dropping any UDP packets. My first implementation chewed up 500% CPU, that's all of it (there are only 4 CPU cores available), leaving nothing for the event builder (and mlogger, and ...).

I had to:

a) switch from plain socket read() to socket recvmmsg() - 100000 udp packets per syscall vs 1 packet per syscall, and

b) switch from plain bm_send_event() to bm_send_event_sg() - using a scatter-gather list to avoid a memcpy() of each udp packet into one big midas event.
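A sketch of step (a), assuming Linux/glibc, where recvmmsg() is available: one syscall fills an array of buffers, one per datagram. `receive_batch` is a hypothetical helper; a real reader thread would call it in a loop and hand the filled buffers to the batching queue.

```cpp
#ifndef _GNU_SOURCE
#define _GNU_SOURCE  // recvmmsg() is a Linux/glibc extension
#endif
#include <arpa/inet.h>
#include <cassert>
#include <cstddef>
#include <netinet/in.h>
#include <sys/socket.h>
#include <sys/uio.h>
#include <unistd.h>
#include <vector>

// Receive up to buffers.size() datagrams from fd with ONE recvmmsg() syscall.
// MSG_WAITFORONE blocks until the first datagram arrives, then returns
// whatever else is already queued. Datagram sizes are stored in `lengths`.
// Returns the number of datagrams received, or -1 on error.
int receive_batch(int fd, std::vector<std::vector<char>>& buffers, std::vector<size_t>& lengths) {
    const size_t n = buffers.size();
    std::vector<mmsghdr> msgs(n);  // value-initialized, i.e. zeroed
    std::vector<iovec> iovs(n);
    for (size_t i = 0; i < n; ++i) {
        iovs[i].iov_base = buffers[i].data();
        iovs[i].iov_len = buffers[i].size();
        msgs[i].msg_hdr.msg_iov = &iovs[i];
        msgs[i].msg_hdr.msg_iovlen = 1;
    }
    int got = recvmmsg(fd, msgs.data(), static_cast<unsigned int>(n), MSG_WAITFORONE, nullptr);
    if (got < 0) return -1;
    lengths.resize(static_cast<size_t>(got));
    for (int i = 0; i < got; ++i) lengths[i] = msgs[i].msg_len;
    return got;
}
```

The buffers are allocated once and reused for every call, which also follows the "avoid memory allocations" lesson below.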

Next is the event builder.

The event builder needs to read data from the 5 midas event buffers (one buffer per udp reader frontend; each midas event contains 1000 udp packets as individual data banks), examine the trigger timestamps inside each udp packet, collect udp packets with matching timestamps into a physics event, and bm_send_event() it to the SYSTEM buffer. Rinse and repeat.
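A simplified sketch of the timestamp matching step, under loud assumptions: `PacketInfo`, `build_events` and the `window` tolerance are hypothetical, timestamps are assumed to be already extracted into 64-bit integers with no wraparound, and all packets are available at once (the real event builder works on a continuous stream).

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical per-packet info extracted by the buffer reader threads.
struct PacketInfo {
    uint64_t timestamp;  // trigger timestamp found inside the udp packet
    int board;           // daq board that sent it
};

// Sort packets by timestamp, then start a new physics event whenever a packet
// is more than `window` ticks later than the first packet of the current event.
std::vector<std::vector<PacketInfo>> build_events(std::vector<PacketInfo> packets, uint64_t window) {
    std::sort(packets.begin(), packets.end(),
              [](const PacketInfo& a, const PacketInfo& b) { return a.timestamp < b.timestamp; });
    std::vector<std::vector<PacketInfo>> events;
    for (const PacketInfo& p : packets) {
        // sorted input, so p.timestamp >= events.back().front().timestamp
        if (events.empty() || p.timestamp - events.back().front().timestamp > window)
            events.emplace_back();
        events.back().push_back(p);
    }
    return events;
}
```

Packets from different boards for the same trigger carry nearly equal timestamps, so a small window groups them while separating consecutive triggers.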

The initial single-threaded implementation maxed out at about 100-200 Mbytes/sec with 100% busy CPU.

After trying several threading schemes, the final implementation has these threads:

- 5 threads to read the 5 event buffers; these threads also examine the udp packets, extract timestamps, etc
- 1 thread to sort udp packets by timestamp and to collect them into physics events
- 1 thread to bm_send_event() physics events to the SYSTEM buffer
- the main thread and the rpc handler thread (tmfe frontend)

(Again, to reduce lock contention, all data is passed between threads in large batches.)

This got me up to about 800 Mbytes/sec. To get more, I had to switch the event builder from the old plain bm_send_event() to the scatter-gather bm_send_event_sg(), *and* I had to reduce CPU use by other programs, see steps (a) and (b) above.

So, in the end, success: the full 10gige data rate from the daq boards to the MIDAS SYSTEM buffer.

(But wait, what about the mlogger? In this experiment, we do not have a disk storage array to sink this much data. But it is an already-solved problem. On the data storage machines I built for GRIFFIN (8 SATA NAS HDDs using raidz2 ZFS), the stock MIDAS mlogger can easily sink 1000 Mbytes/sec from the SYSTEM buffer to disk.)

Lessons learned:

- do not use UDP. Dealing with packet loss will cost you a fortune in headache medicines and hair restorations.
- use jumbo frames. The difference in per-packet overhead between 1500-byte and 9000-byte packets is almost a factor of 10.
- everything has to be done in bulk to reduce per-packet overheads: recvmmsg(), batched queue push/pop, etc
- avoid memory allocations (I had a per-packet std::string, replaced it with char[5])
- avoid memcpy(), use writev(), bm_send_event_sg() & co
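The "avoid memcpy()" lesson in plain POSIX terms: writev() takes a list of (pointer, length) pieces and writes them in one syscall without first copying them into one contiguous buffer. bm_send_event_sg() applies the same scatter-gather idea to MIDAS event buffers; its API is not shown here. `write_gathered` is a hypothetical helper.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <sys/uio.h>
#include <unistd.h>
#include <vector>

// Write several separate buffers (e.g. an event header plus N packet payloads)
// with one writev() call instead of memcpy()-ing them into one big buffer.
// Returns the number of bytes written, or -1 on error. Note: writev() may
// write fewer bytes than requested, and at most IOV_MAX pieces per call.
ssize_t write_gathered(int fd, const std::vector<std::string>& pieces) {
    std::vector<iovec> iov(pieces.size());
    for (size_t i = 0; i < pieces.size(); ++i) {
        iov[i].iov_base = const_cast<char*>(pieces[i].data());
        iov[i].iov_len = pieces[i].size();
    }
    return writev(fd, iov.data(), static_cast<int>(iov.size()));
}
```

IOV_MAX is 1024 on Linux, so a production version would split large packet lists and loop on partial writes.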

K.O.

P.S. Let's count the number of data copies in this system:

x udp reader frontend:

- ethernet NIC DMA into linux network buffers
- recvmmsg() memcpy() from linux network buffer to my memory
- bm_send_event_sg() memcpy() from my memory to the MIDAS shared memory event buffer

x event builder:

- bm_receive_event() memcpy() from MIDAS shared memory event buffer to my event buffer
- my memcpy() from my event buffer to my per-udp-packet buffers
- bm_send_event_sg() memcpy() from my per-udp-packet buffers to the MIDAS shared memory event buffer (SYSTEM)

x mlogger:

- bm_receive_event() memcpy() from MIDAS SYSTEM buffer
- memcpy() in the LZ4 data compressor
- write() syscall memcpy() to linux system disk buffer
- SATA interface DMA from linux system disk buffer to disk.

Would a monolithic, massively multithreaded daq application ("udp receiver + event builder + logger") be more efficient? Yes, about 4 memcpy() out of about 10 would go away.

Would I be able to write such a monolithic daq application? I think not. Already, at 10gige data rates, for all practical purposes, it is impossible to debug most problems, especially subtle trouble in multithreading (race conditions) and in memory allocations. At best, I can sprinkle assert()s and look at core dumps.

So the good old divide-and-conquer approach is still required; MIDAS still rules.

K.O.

| 2217 | 15 Jun 2021 | Stefan Ritt | Info | 1000 Mbytes/sec through midas achieved! |

In MEG II we also kind of achieved this rate. Marco F. will post an entry soon to describe the details. There is only one thing I want to mention, which is our network switch. Instead of an expensive high-grade switch, we chose a cheap "Chinese" high-grade switch. We have "rack switches", which are collector switches for each rack receiving up to 10 x 1 GBit inputs and outputting 1 x 10 GBit to an "aggregation switch", which collects all 10 GBit lines from the rack switches and forwards them over (currently a single) 10 GBit line. For the rack switch we use a

MikroTik CRS354-48G-4S+2Q+RM 54 port

and for the aggregation switch

MikroTik CRS326-24S-2Q+RM 26 port

both cost on the order of 500 US$. We were astonished that they don't lose UDP packets when all inputs send a packet at the same time and they have to pipe them to the single output one after the other, but apparently the switches have enough buffers (which is usually NOT written in the data sheets).

To avoid UDP packet loss for several events, we do traffic shaping by arming the trigger only when the previous event has been completely received by the frontend. This eliminates the need for flow control and other complicated methods. Marco can tell you the details.

Another interesting aspect: while we get the data into the frontend, we have problems getting it through midas. Your bm_send_event_sg() may be a good approach which we should try. To benchmark out-of-the-box midas, I ran the attached dummy frontend on my MacBook Pro (2.4 GHz, 4 cores, 16 GB RAM, 1 TB SSD). I got:

Event size: 7 MB

| Mode | Rate | Throughput |
|---|---|---|
| No logging | 900 events/s | 6.7 GBytes/s |
| Logging with LZ4 compression | 155 events/s | 1.2 GBytes/s |
| Logging without compression | 170 events/s | 1.3 GBytes/s |

So with this simple approach I already got more than 1 GByte/s of "dummy data" through midas, indicating that the buffer management is not so bad. I used the plain mfe.c frontend framework, no bm_send_event_sg() (but mfe.c uses rpc_send_event(), which is an optimized version of bm_send_event()).

Best,

Stefan

Attachment 1: frontend.cxx

```cpp
#include <stdio.h>
#include <stdlib.h>
#include <math.h>
#include <assert.h> // assert()
#include "midas.h"
#include "experim.h"
#include "mfe.h"

/*-- Globals -------------------------------------------------------*/

/* The frontend name (client name) as seen by other MIDAS clients */
const char *frontend_name = "Sample Frontend";

/* The frontend file name, don't change it */
const char *frontend_file_name = __FILE__;

/* frontend_loop is called periodically if this variable is TRUE */
BOOL frontend_call_loop = FALSE;

/* a frontend status page is displayed with this frequency in ms */
INT display_period = 3000;

/* maximum event size produced by this frontend */
INT max_event_size = 8 * 1024 * 1024;

/* maximum event size for fragmented events (EQ_FRAGMENTED) */
INT max_event_size_frag = 5 * 1024 * 1024;

/* buffer size to hold events */
INT event_buffer_size = 20 * 1024 * 1024;

/*-- Function declarations -----------------------------------------*/

INT frontend_init(void);
INT frontend_exit(void);
INT begin_of_run(INT run_number, char *error);
INT end_of_run(INT run_number, char *error);
INT pause_run(INT run_number, char *error);
INT resume_run(INT run_number, char *error);
INT frontend_loop(void);
INT read_trigger_event(char *pevent, INT off);
INT read_periodic_event(char *pevent, INT off);
INT poll_event(INT source, INT count, BOOL test);
INT interrupt_configure(INT cmd, INT source, POINTER_T adr);

/*-- Equipment list ------------------------------------------------*/

BOOL equipment_common_overwrite = TRUE;

EQUIPMENT equipment[] = {

   {"Trigger",               /* equipment name */
    {1, 0,                   /* event ID, trigger mask */
     "SYSTEM",               /* event buffer */
     EQ_POLLED,              /* equipment type */
     0,                      /* event source */
     "MIDAS",                /* format */
     TRUE,                   /* enabled */
     RO_RUNNING,             /* read only when running */
     100,                    /* poll for 100ms */
     0,                      /* stop run after this event limit */
     0,                      /* number of sub events */
     0,                      /* don't log history */
     "", "", "",},
    read_trigger_event,      /* readout routine */
   },

   {""}
};

INT frontend_init() { return SUCCESS; }
INT frontend_exit() { return SUCCESS; }
INT begin_of_run(INT run_number, char *error) { return SUCCESS; }
INT end_of_run(INT run_number, char *error) { return SUCCESS; }
INT pause_run(INT run_number, char *error) { return SUCCESS; }
INT resume_run(INT run_number, char *error) { return SUCCESS; }
INT frontend_loop() { return SUCCESS; }
INT interrupt_configure(INT cmd, INT source, POINTER_T adr) { return SUCCESS; }

/*------------------------------------------------------------------*/

INT poll_event(INT source, INT count, BOOL test)
{
   int i;
   DWORD flag;

   for (i = 0; i < count; i++) {
      /* poll hardware and set flag to TRUE if new event is available */
      flag = TRUE;

      if (flag)
         if (!test)
            return TRUE;
   }

   return 0;
}

/*-- Event readout -------------------------------------------------*/

INT read_trigger_event(char *pevent, INT off)
{
   UINT8 *pdata;

   bk_init32(pevent);

   bk_create(pevent, "ADC0", TID_UINT32, (void **)&pdata);

   // reserve 7 MB of dummy data (the bank contents are left uninitialized)
   pdata += (7 * 1024 * 1024);

   bk_close(pevent, pdata);

   return bk_size(pevent);
}
```

| 2218 | 16 Jun 2021 | Marco Francesconi | Info | 1000 Mbytes/sec through midas achieved! |

As reported by Stefan, in MEG II we have very similar ethernet throughputs.

In total, we have 34 crates each with 32 DRS4 digitiser chips and a single 1 Gbps readout link through a Xilinx Zynq SoC.

The data arrives in push mode without any external intervention, the only throttling being an optional prescaling on the trigger rate.

We discovered the hard way that 1 Gbps throughput on Zynq is not trivial at all: the embedded ethernet MAC does not support jumbo frames (always read the fine print in the manuals!) and the embedded Linux ethernet stack seems to struggle when we go beyond 250 Mbps of UDP traffic.

Anyhow, even with the reduced speed, the maximum throughput at network input is around 8.5 Gbps which passes through the Mikrotik switches mentioned by Stefan.

We had very bad experiences in the past with similar price-point switches, observing huge packet drops when the instantaneous switching capacity cannot cope with the traffic, but so far we are happy with the Mikrotik ones.

On the receiver side, we have the DAQ server with an Intel E5-2630 v4 CPU and a 10 Gbit connection to the network using an Intel X710 Network card.

In the past, we used also a "cheap" 10 Gbit card from Tehuti but the driver performance was so bad that it could not digest more than 5 Gbps of data.

The current frontend is based on the mfe.c scheme for historical reasons (the very first version dates back to 2015).

We opted for a monolithic multithreaded solution so we can reuse the underlying DAQ code for other experiments which may not have the complete Midas backend.

Just to mention them: one is the FOOT experiment (which afaik uses an adapted version of the ATLAS DAQ) and the other is the LOLX experiment (for which we are soon going to ship a small 32-channel system using Midas to Canada).

A major modification to Konstantin's scheme is that we need to calibrate all WFMs (waveforms) online so that a software zero suppression can be applied to reduce the final data size (that part is still to be implemented).

This requirement results in additional resource usage to parse the UDP content into floats and calibrate them.

Currently, we have 7 packet collector threads to digest the full packet flow (using recvmmsg), followed by an event building stage that uses 4 threads and 3 other threads for WFM calibration.

We have progressive packet numbers on each packet generated by the hardware and a set of flags marking the start and end of the event; by comparing the packet number difference between the start and end of the event with the total number of packets received for that event, it is really easy to tell whether packet drops are happening.
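A sketch of that completeness check, assuming 32-bit packet counters and that both the start-of-event and end-of-event packets arrived (if either one is lost, the event has to be flagged by other means, e.g. a timeout). `EventPacketCount` and the helper functions are hypothetical names:

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical per-event bookkeeping on the receiver side.
struct EventPacketCount {
    uint32_t first_packet_number;  // from the packet flagged "start of event"
    uint32_t last_packet_number;   // from the packet flagged "end of event"
    uint32_t packets_received;     // packets actually received for this event
};

// Packets the hardware sent for this event; unsigned arithmetic keeps this
// correct across a 32-bit counter wraparound.
uint32_t expected_packets(const EventPacketCount& e) {
    return e.last_packet_number - e.first_packet_number + 1u;
}

// Packets lost in the network or receiver (0 if the event is complete).
uint32_t lost_packets(const EventPacketCount& e) {
    uint32_t expected = expected_packets(e);
    return e.packets_received >= expected ? 0u : expected - e.packets_received;
}
```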

All the thread infrastructure was tested and we could digest the complete throughput; we still have to finalise the full 10 Gbit connection to Midas because the final system was installed only recently (April).

We are using the EQ_USER flag to push events into the mfe.c buffers with up to 4 threads, but I observed that above ~1.5 Gbps rb_get_wp() almost always returns DB_TIMEOUT and I'm forced to drop the event.

This conflicts with the measurements reported by Stefan (we were discussing this yesterday), so we are still investigating the possible cause.

It is difficult to report three years of development in a single Elog; I hope I put all the relevant points here.

It looks to me that we opted for very complementary approaches for high throughput ethernet with Midas, and I think there are still a lot of details that could be worth reporting.

In case someone organises some kind of "virtual workshop" on this, I'm willing to participate.

Best,

Marco


|

2220

|

17 Jun 2021 |

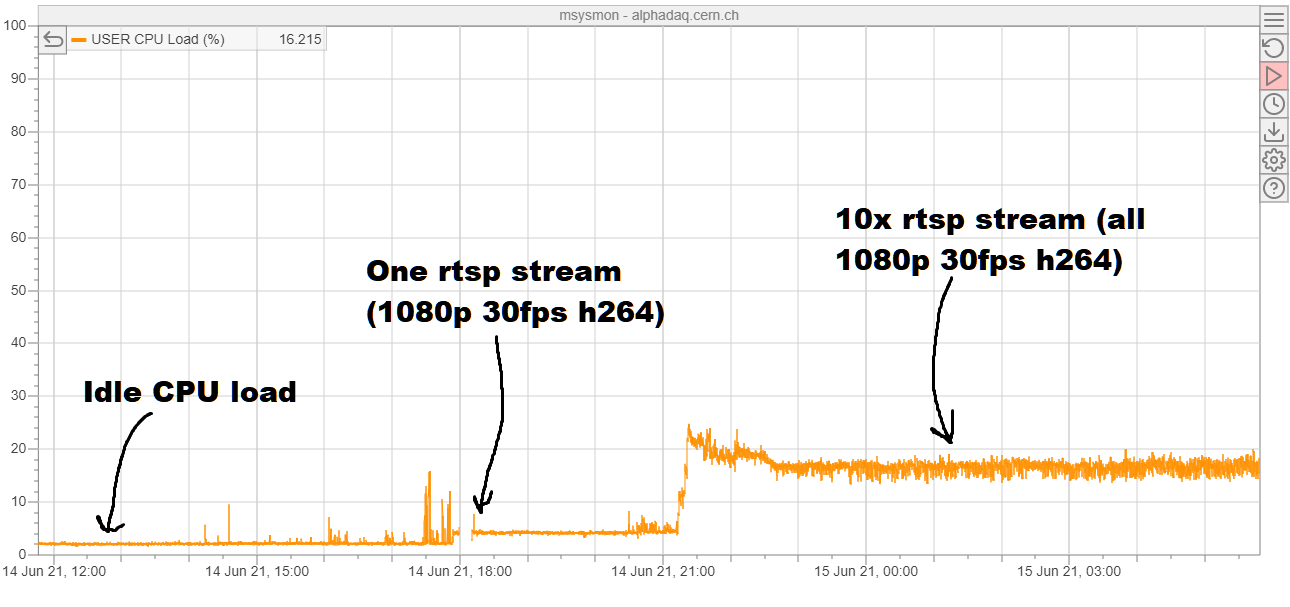

Joseph McKenna | Info | Add support for rtsp camera streams in mlogger (history_image.cxx) | mlogger (history_image) now supports rtsp cameras, in ALPHA we have

acquisitioned several new network connected cameras. Unfortunately they dont

have a way of just capturing a single frame using libcurl

========================================

Motivation to link to OpenCV libraries

========================================

After looking at the ffmpeg libraries, it seemed non-trivial to use them to listen to an rtsp stream and write a series of jpgs.

OpenCV became an obvious choice (it is itself linked to ffmpeg and gstreamer): it's a popular, multiplatform, open source library that's easy to use. It is available in the default package managers in CentOS 7 and Ubuntu (and is installed by default on lxplus).

========================================

How it works:

========================================

The framework laid out in history_image.cxx is great. A separate thread is dedicated to each camera. This is continued with the rtsp support, using the same periodicity:

```cpp
if (ss_time() >= o["Last fetch"] + o["Period"]) {
```

An rtsp camera is detected by its URL: if the URL starts with ‘rtsp://’, it is using the rtsp protocol, and the cv::VideoCapture object is created (line 147).

If the connection fails, it will continue to retry, but only send an error message on the first 10 attempts (line 150). This counter is reset on a successful connection.

If MIDAS has been built without OpenCV, mlogger will send an error message saying that OpenCV is required if an rtsp URL is given (line 166).

The VideoCapture ‘stays live’ and will grab frames from the camera based on the sleep, saving to file based on the Period set in the ODB.

If the VideoCapture object is unable to grab a frame, it will release() the camera, send an error message to MIDAS, then destroy itself and create a new instance (this destroy-and-create fully resets the connection to a camera, which is required if it's on flaky wifi).

If the VideoCapture gets an empty frame, it follows the same reset steps.

If the VideoCapture fills a cv::Mat frame successfully, the image is saved to disk in the same way as with the curl tools.
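The URL detection and the retry/error-throttling behaviour described above can be modelled without OpenCV. This is a hypothetical sketch, not the history_image.cxx code: `ReconnectPolicy` and `is_rtsp_url` are illustrative names, and `try_connect` stands in for creating a cv::VideoCapture and checking that it opened.

```cpp
#include <cassert>
#include <functional>
#include <string>

// True if the camera URL selects the rtsp path ("starts with rtsp://").
bool is_rtsp_url(const std::string& url) {
    return url.rfind("rtsp://", 0) == 0;
}

class ReconnectPolicy {
public:
    explicit ReconnectPolicy(int max_reported) : max_reported_(max_reported) {}

    // One connection attempt. Success resets the failure counter; on failure,
    // report(n) is called only for the first max_reported consecutive failures.
    bool attempt(const std::function<bool()>& try_connect,
                 const std::function<void(int)>& report) {
        if (try_connect()) {
            failures_ = 0;
            return true;
        }
        ++failures_;
        if (failures_ <= max_reported_) report(failures_);
        return false;
    }

private:
    int max_reported_;
    int failures_ = 0;
};
```

With `ReconnectPolicy policy(10);`, a camera that stays offline produces 10 error messages and then goes quiet until it reconnects once.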

========================================

Concerns for the future:

========================================

VideoCapture decodes the video stream in the background, allowing us to grab frames at will. This is nice, as we can be fairly agnostic to the video format in the stream (I tested with h264 from a TP-LINK TAPO C100), but the CPU usage is not negligible.

I noticed that this used ~2% of the CPU time on an Intel i7-4770 CPU; given enough cameras, this is considerable. In ALPHA, I have been testing with 10 cameras:

elog:2220/1

My suggestion / request would be to move the camera management out of mlogger and into a new program (mcamera?), so that users can choose to offload the CPU load to another system (I understand that OpenCV will also use GPU decoders if available, which can also lighten the CPU load).

Attachment 1: unnamed.png

| 2222 | 18 Jun 2021 | Konstantin Olchanski | Info | 1000 Mbytes/sec through midas achieved! |

> In MEG II we also kind of achieved this rate.

>

> Instead of an expensive high-grade switch, we chose a cheap "Chinese" high-grade switch.

Right. We built this DAQ system about 3 years ago, and the cheap Chinese switches arrived on the market about 1 year after we purchased the big 96-port Juniper switch. Bad timing/good timing.

Actually, I have a very nice 24-port 1gige switch ($2000 about 3 years ago); I could have used 4 of them in parallel, but they were discontinued and replaced with a $5000 switch (+$3000 for a 10gige uplink; I think I got the very last of the cheap ones).

But not all Chinese switches are equal. We have a Ubiquiti 10gige switch, and it does not have working end-to-end ethernet flow control (yikes!).

BTW, for this project we could not use just any cheap switch: we must have 64 fiber SFP ports for connecting the on-TPC electronics. This narrows the market significantly, and it does not match the industry-standard port counts 8-16-24-48-96.

> MikroTik CRS354-48G-4S+2Q+RM 54 port

> MikroTik CRS326-24S-2Q+RM 26 Port

We have a hard time buying this stuff in Vancouver BC, Canada. Most of our regular suppliers are US-based, and there is a technology trade war still going on between the US and China. I guess we could buy directly on alibaba, if not for the risk of scammers, scalpers and iffy shipping.

> both cost in the order of 500 US$

That tells one how much we overpay for US-based stuff. Not surprising, given how Cisco & co can afford to buy sports arenas, etc.

> We were astonished that they don't loose UDP packets when all inputs send a packet at the

> same time, and they have to pipe them to the single output one after the other,

> but apparently the switch have enough buffers.

You are probably seeing ethernet flow control in action. Look at the counters for ethernet pause frames in your daq boards and in your main computer.

> (which is usually NOT written in the data sheets).

True. When I looked into this, I found a paper by somebody in Berkeley describing a special technique to measure the size of such buffers.

(The big Juniper switch has only 8 Mbytes of buffer. The current wisdom for backbone networks is to have as little buffering as possible.)

> To avoid UDP packet loss for several events, we do traffic shaping by arming the trigger only when the previous event is

> completely received by the frontend. This eliminates all flow control and other complicated methods. Marco can tell you the

> details.

We do not do this (very bad!). When each trigger arrives, all 64+8 DAQ boards send a train of UDP packets at maximum line speed (64+8 at 1 gige), all funneled into one 10 gige link ((64+8)/10 = 7.2x oversubscription). Before we got ethernet flow control to work properly, we had to throttle all the 1gige links by about 60% to get any complete events at all. This would not have been acceptable for physics data taking.

> Another interesting aspect: While we get the data into the frontend, we have problems in getting it through midas. Your

> bm_send_event_sg() is maybe a good approach which we should try. To benchmark the out-of-the-box midas, I run the dummy frontend

> attached on my MacBook Pro 2.4 GHz, 4 cores, 16 GB RAM, 1 TB SSD disk.

The dummy frontend is not very representative, because the limitation is memory bandwidth and CPU load, and a real ethernet receiver uses quite a bit of both (interrupt processing, DMA into memory, implicit memcpy() inside the socket read()).

For example, typical memcpy() speeds are between 10 and 22 Gbytes/sec for current-generation CPUs and DRAM. This translates into a total budget of 10 to 22 memcpy() at 10gige speeds (roughly 1 Gbyte/sec). Subtract from this 1 memcpy() to DMA data from ethernet into memory and 1 memcpy() to DMA data from memory to storage. Subtract 2 implicit memcpy() for read() in the frontend and write() in mlogger (the Linux sendfile() syscall was invented to cut these out). Subtract 1 memcpy() for instruction and incidental data fetch (no interesting program fits into cache). Subtract the memory bandwidth for running the rest of linux (systemd, ssh, cron jobs, NFS, etc). Hardly anything is left when all is said and done. (Found it: the alphagdaq memcpy() runs at 14 Gbytes/sec, so a total budget of 14 memcpy() at 10gige speeds.)
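A rough way to measure the memcpy() speed of a given machine and turn it into the "memcpy() budget" above. This is a sketch with assumptions: single-threaded copies, 1 Gbyte = 1e9 bytes, and roughly 1 Gbyte/sec for a saturated 10gige link. The function names are hypothetical.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <cstring>
#include <vector>

// Rough memcpy() bandwidth in Gbytes/sec (1e9 bytes/sec): copy a buffer much
// larger than the CPU caches `repeats` times and time it. The result depends
// on CPU, DRAM configuration and whatever else the machine is doing.
double memcpy_gbytes_per_sec(size_t bytes, int repeats) {
    std::vector<char> src(bytes, 1), dst(bytes, 0);
    std::memcpy(dst.data(), src.data(), bytes);  // warm-up: touch all pages
    volatile char sink = 0;  // read back from dst so copies are not optimized away
    auto t0 = std::chrono::steady_clock::now();
    for (int i = 0; i < repeats; ++i) {
        src[static_cast<size_t>(i) % bytes] = static_cast<char>(i);  // make each copy differ
        std::memcpy(dst.data(), src.data(), bytes);
        sink = dst[static_cast<size_t>(i) % bytes];
    }
    auto t1 = std::chrono::steady_clock::now();
    (void)sink;
    double seconds = std::chrono::duration<double>(t1 - t0).count();
    return static_cast<double>(bytes) * repeats / seconds / 1e9;
}

// Number of full copies of a data stream that the memory system can afford.
double memcpy_budget(double memcpy_gbytes, double stream_gbytes_per_sec) {
    return memcpy_gbytes / stream_gbytes_per_sec;
}
```

For example, `memcpy_gbytes_per_sec(size_t(256) << 20, 10)` copies 256 MiB ten times; plugging in the alphagdaq figure, `memcpy_budget(14.0, 1.0)` gives the budget of 14 copies quoted above.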

And the event builder eats up 2 CPU cores to process the UDP packets at the 10gige rate; there is quite a bit of CPU-expensive data unpacking, inspection and processing going on that cannot be cut out (alphagdaq has 4 cores, "8 threads").

K.O.

P.S. Waiting for rack-mounted machines with AMD "X" series processors... K.O.

| 2223 | 18 Jun 2021 | Konstantin Olchanski | Info | 1000 Mbytes/sec through midas achieved! |

> ... MEG II ... 34 crates each with 32 DRS4 digitiser chips and a single 1 Gbps readout link through a Xilinx Zynq SoC.

>

> Zynq ... embedded ethernet MAC does not support jumbo frames (always read the fine prints in the manuals!)

> and the embedded Linux ethernet stack seems to struggle when we go beyond 250 Mbps of UDP traffic.

That's an ouch. We use the Altera ethernet MAC, and jumbo frames are supported, but the firmware data path was originally written assuming 1500-byte packets, and it is too much work to rewrite it for jumbo frames. We send the data directly from the FPGA fabric to the ethernet; there is an avalon/axi bus multiplexer to split off ethernet packets for the NIOS slow control CPU. Not sure if such a scheme is possible for SoC FPGAs with embedded ARM CPUs.

And yes, a 1 GHz ARM CPU will not do 10gige. You can see it yourself: measure your memcpy() speed. Where a typical PC will have dual-channel 128-bit wide memory (and the Intel memory controller, famous for its low latency), an ARM SoC will have at best 64-bit wide memory (some boards are only 32-bit wide!), with DDR3 (not DDR4) severely under-clocked (e.g. DDR3-900). This is why the new Apple ARM chips are so interesting: can the Apple ARM memory controller beat the Intel x86 memory controller?

> On the receiver side, we have the DAQ server with an Intel E5-2630 v4 CPU

That's the right gear for the job: quad-channel memory with nominal "Max Memory Bandwidth 68.3 GB/s" and 10 CPU cores. My memcpy() benchmark for the much older quad-channel-memory i7-4820 with DDR3-1600 DIMMs is 20 Gbytes/sec. Waiting for an ARM CPU with similar specs.

> and a 10 Gbit connection to the network using an Intel X710 Network card.

> In the past, we used also a "cheap" 10 Gbit card from Tehuti but the driver performance was so bad that it could not digest more than 5 Gbps of data.

Yup, same here. We use Intel ethernet exclusively, even for 1gige links.

> A major modification to Konstantin scheme is that we need to calibrate all WFMs online so that a software zero suppression

I implemented hardware zero suppression in the FPGA code. I think a 1 GHz ARM CPU does not have the oomph for this.

> rb_get_wp() returns almost always DB_TIMEOUT

Replace rb_xxx() with std::deque<std::vector<char>> (protected by a mutex, of course). Lots of stuff in the mfe.c frontend is obsolete in the same way. Check out the newer tmfe frontends (tmfe.md, tmfe.h and the tmfe examples).
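A minimal version of that suggestion, assuming C++11: a std::deque<std::vector<char>> behind a mutex, plus a condition variable so a consumer can wait with a timeout instead of polling. `EventQueue` is a hypothetical name; this is not the tmfe API.

```cpp
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <cstddef>
#include <deque>
#include <mutex>
#include <utility>
#include <vector>

// FIFO of variable-size events shared between readout threads and the thread
// that sends events to MIDAS. Unbounded: memory grows if the consumer falls behind.
class EventQueue {
public:
    void push(std::vector<char>&& event) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            queue_.push_back(std::move(event));
        }
        cv_.notify_one();
    }

    // Wait up to `timeout` for an event; returns false on timeout.
    bool pop(std::vector<char>& out, std::chrono::milliseconds timeout) {
        std::unique_lock<std::mutex> lock(mutex_);
        if (!cv_.wait_for(lock, timeout, [this] { return !queue_.empty(); }))
            return false;
        out = std::move(queue_.front());
        queue_.pop_front();
        return true;
    }

    size_t size() {
        std::lock_guard<std::mutex> lock(mutex_);
        return queue_.size();
    }

private:
    std::mutex mutex_;
    std::condition_variable cv_;
    std::deque<std::vector<char>> queue_;
};
```

Unlike a fixed-size ring buffer, the producer never sees a timeout here; the trade-off is unbounded memory use, so a size limit with an explicit drop counter may be worth adding.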

> It is difficult to report three years of development in a single Elog

But you were quite successful at it. Big thanks for your write-up. I think our info will be quite useful for the next people.

K.O.

| 2224 | 18 Jun 2021 | Konstantin Olchanski | Info | Add support for rtsp camera streams in mlogger (history_image.cxx) |

> mlogger (history_image) now supports rtsp cameras

My goodness, we will drive the video surveillance industry out of business.

> My suggestion / request would be to move the camera management out of

> mlogger and into a new program (mcamera?), so that users can choose to off

> load the CPU load to another system (I understand the OpenCV will use GPU

> decoders if available also, which can also lighten the CPU load).

Every 2 years I itch to separate mlogger into two parts: data logger and history logger.

But then I remember that the "I" in MIDAS stands for "integrated", and the "M" stands for "maximum", and I say, "nah..."

(I guess we are not maximum integrated enough to have mhttpd, mserver and mlogger be one monolithic executable.)

There is also a line of thinking that mlogger should remain single-threaded for maximum reliability and ease of debugging. So if we keep adding multithreaded stuff to it, perhaps it should be split apart after all. (Anything that makes mlogger.cxx smaller is a good thing, imo.)

K.O.

| 2257 | 09 Jul 2021 | Konstantin Olchanski | Info | cannot push to bitbucket |

The day has arrived when I cannot git push to bitbucket. Cloud computing rules!

I have never seen this error before and I do not think we have any hooks installed, so it must be some bitbucket stuff. Their status page says some kind of maintenance is happening, but the promised error message is "repository is read only" or something similar.

I hope this clears up automatically. I am updating all the cmake crud, and I have no idea which changes I already pushed and which I did not, so no idea if anything will work for people who pull from midas until this problem is cleared up.

```
daq00:mvodb$ git push
X11 forwarding request failed on channel 0
Enumerating objects: 3, done.
Counting objects: 100% (3/3), done.
Delta compression using up to 12 threads
Compressing objects: 100% (2/2), done.
Writing objects: 100% (2/2), 247 bytes | 247.00 KiB/s, done.
Total 2 (delta 1), reused 0 (delta 0)
remote: null value in column "attempts" violates not-null constraint
remote: DETAIL: Failing row contains (13586899, 2021-07-10 01:13:28.812076+00, 1970-01-01 00:00:00+00, 1970-01-01 00:00:00+00, 65975727, null).
To bitbucket.org:tmidas/mvodb.git
 ! [remote rejected] master -> master (pre-receive hook declined)
error: failed to push some refs to 'git@bitbucket.org:tmidas/mvodb.git'
daq00:mvodb$
```

K.O.

| 2258 | 11 Jul 2021 | Konstantin Olchanski | Info | midas cmake update |

I reworked the midas cmake files:

- install via CMAKE_INSTALL_PREFIX should work correctly now:
  - installed are bin, lib and include: everything needed to build against the midas library
  - if built without CMAKE_INSTALL_PREFIX, a special mode "MIDAS_NO_INSTALL_INCLUDE_FILES" is activated, and the include path contains all the subdirectories needed for compilation
  - -I$MIDASSYS/include and -L$MIDASSYS/lib -lmidas work in both cases
- to "use" midas, I recommend: include($ENV{MIDASSYS}/lib/midas-targets.cmake)
- config files generated for find_package(midas) now have correct information (a manually constructed subset of the information automatically exported by cmake's install(export))
- people who want to use "find_package(midas)" will have to contribute documentation on how to use it (explain the magic used to find the "right midas" in /usr/local/midas or in /midas or in ~/packages/midas or in ~/packages/new-midas) and contribute an example superproject that shows how to use it and that can be run from the bitbucket automatic build (features that are not part of the automatic build we cannot insure against breakage).

On my side, here is an example of using include($ENV{MIDASSYS}/lib/midas-targets.cmake). I posted this before; it is used in midas/examples/experiment and I will ask Ben to include it in the midas wiki documentation.

Below is the complete cmake file for building the alpha-g event builder and main control frontend. When presented like this, I have to agree that cmake does provide positive value to the user. (The jury is still out on whether it balances out the negative value of the extra work to "just support find_package(midas) already!")

#
# CMakeLists.txt for alpha-g frontends
#

cmake_minimum_required(VERSION 3.12)
project(agdaq_frontends)

include($ENV{MIDASSYS}/lib/midas-targets.cmake)

add_compile_options("-O2")
add_compile_options("-g")
#add_compile_options("-std=c++11")
add_compile_options(-Wall -Wformat=2 -Wno-format-nonliteral -Wno-strict-aliasing -Wuninitialized -Wno-unused-function)
add_compile_options("-DTMFE_REV0")
add_compile_options("-DOS_LINUX")

add_executable(feevb feevb.cxx TsSync.cxx)
target_link_libraries(feevb midas)

add_executable(fectrl fectrl.cxx GrifComm.cxx EsperComm.cxx JsonTo.cxx KOtcp.cxx $ENV{MIDASSYS}/src/tmfe_rev0.cxx)
target_link_libraries(fectrl midas)

#end |

|

2261

|

13 Jul 2021 |

Stefan Ritt | Info | MidasConfig.cmake usage | Thanks for the contribution of MidasConfig.cmake. May I kindly ask for one extension:

Many of our frontends require inclusion of some midas-supplied drivers and libraries residing under

$MIDASSYS/drivers/class/
$MIDASSYS/drivers/device
$MIDASSYS/mscb/src/
$MIDASSYS/src/mfe.cxx

I guess this can be easily added by defining a MIDAS_SOURCES in MidasConfig.cmake, so that I can do things like:

add_executable(my_fe
  myfe.cxx
  ${MIDAS_SOURCES}/src/mfe.cxx
  ${MIDAS_SOURCES}/drivers/class/hv.cxx
  ...)

Does this make sense, or is there a more elegant way to do that?

Stefan |

|

2262

|

13 Jul 2021 |

Konstantin Olchanski | Info | MidasConfig.cmake usage | > $MIDASSYS/drivers/class/
> $MIDASSYS/drivers/device
> $MIDASSYS/mscb/src/
> $MIDASSYS/src/mfe.cxx
>
> I guess this can be easily added by defining a MIDAS_SOURCES in MidasConfig.cmake, so
> that I can do things like:
>
> add_executable(my_fe
> myfe.cxx
> ${MIDAS_SOURCES}/src/mfe.cxx
> ${MIDAS_SOURCES}/drivers/class/hv.cxx
> ...)

1) remove ${MIDAS_SOURCES}/src/mfe.cxx from "add_executable" and add "mfe" to target_link_libraries() as in examples/experiment/frontend:

add_executable(frontend frontend.cxx)
target_link_libraries(frontend mfe midas)

2) ${MIDAS_SOURCES}/drivers/class/hv.cxx surely is ${MIDASSYS}/drivers/...

If MIDAS is built with a non-default CMAKE_INSTALL_PREFIX, "drivers" and co. are not available, as we do not "install" them. Where MIDASSYS should point in this case is anybody's guess. To run MIDAS, $MIDASSYS/resources is needed, but we do not install it either, so it is not available under CMAKE_INSTALL_PREFIX, and setting MIDASSYS to the same place as CMAKE_INSTALL_PREFIX would not work.

I still think this whole business of installing into a non-default CMAKE_INSTALL_PREFIX location has not been thought through well enough: too much thinking about how cmake works and not enough thinking about how MIDAS works and how MIDAS is used. A good example of "my tool is a hammer, everything else must have the shape of a nail".

K.O. |

|

2283

|

11 Oct 2021 |

Stefan Ritt | Info | Modification in the history logging system | A requested change in the history logging system has been made today. Previously, history values were logged with a maximum frequency (usually once per second) but also with a minimum frequency, meaning that values were logged, for example, every 60 seconds even if they did not change. This causes a problem: if a frontend that produces variables to be logged is inactive or has crashed, one cannot distinguish between a crashed or inactive frontend program and a history value which simply did not change much over time.

The history system was designed from the beginning so that values are only logged when they actually change. This design pattern has been broken since about spring 2021, see for example this issue:

https://bitbucket.org/tmidas/midas/issues/305/log_history_periodic-doesnt-account-for

Today I modified the history code to fix this issue. History logging is now controlled by the value of Common/Log history in the following way:

* Common/Log history = 0 means no history logging
* Common/Log history = 1 means log whenever the value changes in the ODB
* Common/Log history = N means log whenever the value changes in the ODB and the previous write was more than N seconds ago
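A minimal C++ sketch of the rule above, assuming the decision is made for each ODB change with times in seconds. The function name should_log_history and its parameters are hypothetical and do not correspond to the actual mlogger code.

```cpp
#include <cassert>

// Hypothetical sketch of the "Common/Log history" rule (not mlogger code).
// Times are in seconds.
//   log_history == 0 : history logging disabled
//   log_history == 1 : log every change
//   log_history == N : log a change only if the previous write was
//                      more than N seconds ago
bool should_log_history(int log_history, bool value_changed,
                        double now, double last_write_time) {
  if (log_history <= 0) return false;   // no history logging at all
  if (!value_changed) return false;     // unchanged values are never logged
  if (log_history == 1) return true;    // log whenever the value changes
  return (now - last_write_time) > log_history;
}
```

For example, with Common/Log history = 60, a change arriving 70 seconds after the previous write is logged, while a change arriving 50 seconds after it is not.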

So most experiments should be happy with 0 or 1. Only experiments which have fluctuating values due to noisy sensors might benefit from a value larger than 1 to limit the history logging. However, this is not the preferred way to limit history logging. That should be done by the front-end limiting its updates to the ODB. Most of the midas slow control drivers have a “threshold” value: only if the input changes by more than the threshold is the new value written to the ODB. This gives a per-channel “dead band” rather than the per-event limit on history logging that ‘Log history’ imposes. In addition, the threshold reduces write accesses to the ODB, although that is only important for very large experiments.
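The per-channel dead band can be sketched as follows. This is a hypothetical illustration, not the midas class-driver code: the DeadBand type and its members are invented for the example, and the first reading of each channel is assumed to always be written.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical per-channel dead band: a reading is written to the ODB only
// if it differs from the last written value by more than that channel's
// threshold. Names are illustrative, not the midas driver API.
struct DeadBand {
  std::vector<double> threshold;     // per-channel threshold
  std::vector<double> last_written;  // value last written to the ODB
  std::vector<bool> has_value;       // false until the first write

  explicit DeadBand(const std::vector<double>& thr)
      : threshold(thr),
        last_written(thr.size(), 0.0),
        has_value(thr.size(), false) {}

  // Returns true (and remembers the value) if channel i should be written.
  bool update(std::size_t i, double reading) {
    if (has_value[i] && std::fabs(reading - last_written[i]) <= threshold[i])
      return false;  // change is inside the dead band: skip the ODB write
    last_written[i] = reading;
    has_value[i] = true;
    return true;
  }
};
```

Because the comparison is against the last *written* value rather than the last reading, slow drifts still get written once they accumulate past the threshold.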

Stefan |

|

2316

|

26 Jan 2022 |

Konstantin Olchanski | Info | MityCAMAC Login | For those curious about CAMAC controllers, this one was built around 2014 to replace the aging CAMAC A1/A2 controllers (parallel and serial) in the TRIUMF cyclotron controls system (around 50 CAMAC crates). It implements both the main and the auxiliary controller modes (single width and double width modules).

The design predates the Altera Cyclone-5 SoC and has a separate ARM processor (TI 335x) and a Cyclone-4 FPGA connected by the GPMC bus. The ARM processor boots the Linux kernel and CentOS-7 userland from an SD card; the FPGA boots from its own EPCS flash.

A user program running on the ARM processor (i.e. a MIDAS frontend) initiates CAMAC operations, and the FPGA executes them. Quite simple.

K.O. |

|

2348

|

23 Feb 2022 |

Stefan Ritt | Info | Midas slow control event generation switched to 32-bit banks | The midas slow control system class drivers automatically read their equipment and generate events containing midas banks. So far these have been 16-bit banks created with bk_init(). But more and more experiments now use large numbers of channels, so the size limit of 16-bit banks can be exceeded. Until last week, there was not even a check for this, leading to unpredictable crashes.

Therefore I switched the bank generation in the class drivers generic.cxx, hv.cxx and multi.cxx to 32-bit banks via bk_init32(). In principle this should be transparent, since the midas bank functions automatically detect the bank type when reading. But I thought I would let everybody know just in case.
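To see why large channel counts overflow a 16-bit bank, consider the size fields of the bank headers. The sketch below assumes the layout of BANK and BANK32 in midas.h (a 4-character name followed by type and data size as 16-bit or 32-bit words); the structs here are simplified stand-ins, and fits_in_16bit_bank is a hypothetical helper.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <limits>

// Simplified stand-ins for the midas bank headers (assumed layout; see
// midas.h for the real definitions).
struct Bank16Header { char name[4]; std::uint16_t type; std::uint16_t data_size; };
struct Bank32Header { char name[4]; std::uint32_t type; std::uint32_t data_size; };

// Largest payload in bytes that a 16-bit data_size field can describe.
constexpr std::size_t kMax16 = std::numeric_limits<std::uint16_t>::max();  // 65535

// Hypothetical helper: do n_channels values of element_size bytes each
// fit into one 16-bit bank? (Division avoids overflow in n * size.)
bool fits_in_16bit_bank(std::size_t n_channels, std::size_t element_size) {
  if (element_size == 0) return true;
  return n_channels <= kMax16 / element_size;
}
```

With 4-byte float values, 16383 channels (65532 bytes) still fit, while 16384 channels (65536 bytes) already exceed the 16-bit size field; a 32-bit data_size has no such practical limit.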

Stefan |

|

2350

|

03 Mar 2022 |

Konstantin Olchanski | Info | zlib required, lz4 internal | As of commit 8eb18e4ae9c57a8a802219b90d4dc218eb8fdefb, the gzip compression library (zlib) is required, not optional.

This fixes the midas and manalyzer mis-build if the system gzip library is accidentally not installed. (Is there any situation where the gzip library is not installed on purpose?)

The midas-internal lz4 compression library was renamed to mlz4 to avoid a collision with the system lz4 library (where present). lz4 files from midasio are now used; the lz4 files in midas/include and midas/src are removed.

I see that on recent versions of Ubuntu we could switch to the system version of the lz4 library. However, on CentOS-7 systems it is usually not present, and CentOS-7 is still a supported and widely used platform, so we stay with the midas-internal library for now.

K.O. |

|

2351

|

03 Mar 2022 |

Konstantin Olchanski | Info | manalyzer updated | manalyzer was updated to the latest version, with mostly multi-threading improvements from Joseph and myself. K.O. |

|

2355

|

16 Mar 2022 |

Stefan Ritt | Info | New midas sequencer version | A new version of the midas sequencer has been developed and is now available in the develop/seq_eval branch. Many thanks to Lewis Van Winkle and his TinyExpr library (https://codeplea.com/tinyexpr), which has now been integrated into the sequencer and allows arbitrary math expressions. Here is a complete list of new features:

* Math is now possible in all expressions, such as "x = $i*3 + sin($y*pi)^2", or in "ODBSET /Path/value[$i*2+1], 10"
* "SET <var>,<value>" can be written as "<var>=<value>", but the old syntax is still possible.
* There are new functions ODBCREATE and ODBDELETE to create and delete ODB keys, including arrays
* Variable arrays are now possible, like "a[5] = 0" and "MESSAGE $a[5]"

If the branch works for us in the next few days and I don't get complaints from others, I will merge the branch into develop next week.

Stefan |

|

2358

|

22 Mar 2022 |

Stefan Ritt | Info | New midas sequencer version | After several days of testing in various experiments, the new sequencer has been merged into the develop branch. One more feature was added: the path to the ODB can now contain variables, which are substituted with their values. Instead of writing

ODBSET /Equipment/XYZ/Setting/1/Switch, 1
ODBSET /Equipment/XYZ/Setting/2/Switch, 1
ODBSET /Equipment/XYZ/Setting/3/Switch, 1

one can now write

LOOP i, 3
ODBSET /Equipment/XYZ/Setting/$i/Switch, 1
ENDLOOP
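A rough C++ sketch of the substitution step, assuming variables are referenced as $name with the name made of letters, digits and underscores, and that unknown variables are left untouched. substitute_vars is a hypothetical helper, not the sequencer's implementation, which may treat cases such as array indices differently.

```cpp
#include <cassert>
#include <cctype>
#include <cstddef>
#include <map>
#include <string>

// Hypothetical sketch: replace each "$name" in an ODB path with the value
// of sequencer variable "name". Unknown variables are copied unchanged.
std::string substitute_vars(const std::string& path,
                            const std::map<std::string, std::string>& vars) {
  std::string out;
  std::size_t i = 0;
  while (i < path.size()) {
    if (path[i] != '$') { out += path[i++]; continue; }
    // Scan the variable name following '$'.
    std::size_t j = i + 1;
    while (j < path.size() &&
           (std::isalnum(static_cast<unsigned char>(path[j])) || path[j] == '_'))
      ++j;
    const std::string name = path.substr(i + 1, j - i - 1);
    auto it = vars.find(name);
    out += (it != vars.end()) ? it->second : path.substr(i, j - i);
    i = j;
  }
  return out;
}
```

Inside the LOOP, the sequencer would update the loop variable on each pass (i = 1, 2, 3) and substitute it before executing ODBSET, producing the three explicit paths from the first version of the script.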

Of course it is not possible for me to test every possible script. So if you have issues with the new sequencer, please don't hesitate to report them back to me.

Best,

Stefan |

|

2382

|

12 Apr 2022 |

Konstantin Olchanski | Info | ODB JSON support | > > > > odbedit can now save ODB in JSON-formatted files.
> > encode NaN, Inf and -Inf as JSON string values "NaN", "Infinity" and "-Infinity". (Corresponding to the respective Javascript values).
> http://docs.oasis-open.org/odata/odata-json-format/v4.0/os/odata-json-format-v4.0-os.html
> > Values of types [...] Edm.Single, Edm.Double, and Edm.Decimal are represented as JSON numbers,
> except for NaN, INF, and –INF which are represented as strings "NaN", "INF" and "-INF".
> https://xkcd.com/927/

Per xkcd, there is a new json standard, "json5". Among other things, the numeric values NaN, +Infinity and -Infinity are encoded as the literals NaN, Infinity and -Infinity (without quotes):

https://spec.json5.org/#numbers

Good discussion of this mess here:

https://stackoverflow.com/questions/1423081/json-left-out-infinity-and-nan-json-status-in-ecmascript
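For comparison, a small C++ sketch of the two encodings discussed in this thread: non-finite values as the quoted strings "NaN", "Infinity" and "-Infinity", versus the unquoted JSON5 literals. encode_double and the NonFinite enum are hypothetical names, not the midas json library API; finite values are printed with %.17g so that they round-trip.

```cpp
#include <cassert>
#include <cmath>
#include <cstdio>
#include <limits>
#include <string>

// Two conventions for non-finite doubles (hypothetical names):
//   kQuotedStrings : "NaN", "Infinity", "-Infinity" as JSON strings
//   kJson5Literals : NaN, Infinity, -Infinity as bare JSON5 literals
//                    (not valid in standard JSON)
enum class NonFinite { kQuotedStrings, kJson5Literals };

std::string encode_double(double x, NonFinite mode) {
  const bool quote = (mode == NonFinite::kQuotedStrings);
  auto wrap = [quote](const char* s) {
    return quote ? "\"" + std::string(s) + "\"" : std::string(s);
  };
  if (std::isnan(x)) return wrap("NaN");
  if (std::isinf(x)) return wrap(x > 0 ? "Infinity" : "-Infinity");
  char buf[32];
  std::snprintf(buf, sizeof(buf), "%.17g", x);  // enough digits to round-trip
  return buf;
}
```

The JSON5 form is rejected by strict JSON parsers, while the quoted-string form parses everywhere but reads back as a string rather than a number, so the reader must convert it explicitly.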

K.O. |

|

2383

|

13 Apr 2022 |

Stefan Ritt | Info | ODB JSON support | > Per xkcd, there is a new json standard "json5". In addition to other things, numeric
> values NaN, +Infinity and -Infinity are encoded as literals NaN, Infinity and -Infinity (without quotes):
> https://spec.json5.org/#numbers

Just out of curiosity: is this implemented by the midas json library now? |

|