| ID |

Date |

Author |

Topic |

Subject |

|

1843

|

21 Feb 2020 |

Konstantin Olchanski | Forum | Writting Midas Events via FPGAs | Hi, Stefan, is this our famous 64-bit misalignment, where each alternating bank is aligned and misaligned at 64 bits? Without changing the data format, one can always store data in 64-bit aligned banks by inserting dummy banks between the real banks:

event header

bank header

bank1 --- 64-bit aligned --- with data

bank2 --- misaligned, no data

bank3 --- 64-bit aligned --- with data

bank4 --- misaligned, no data

...

For sure, this wastes space for bank2, bank4, etc., but at 12 bytes per bank this is maybe negligible overhead compared to the total event size.
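The dummy-bank trick can be sketched as a small layout planner. This is a minimal sketch, assuming the sizes quoted in this thread (an 8-byte BANK_HEADER, a 12-byte BANK32 header, and bk_close() advancing by sizeof(BANK32) + ALIGN8(data_size)); `plan_banks` and `PlannedBank` are hypothetical names. Offsets are counted from the start of BANK_HEADER; since EVENT_HEADER is 16 bytes, the remainder modulo 8 is the same relative to the start of the event. The planner inserts a zero-length bank wherever the next payload would not start on an 8-byte boundary, so exactly where the dummies land depends on the starting offset.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Sizes assumed from this thread: BANK_HEADER is {data_size, flags} (8 bytes),
// a BANK32 header is {name[4], type, data_size} (12 bytes), and bk_close()
// advances by sizeof(BANK32) + ALIGN8(data_size).
constexpr uint32_t kBankHeaderSize = 8;
constexpr uint32_t kBank32Size = 12;

constexpr uint32_t align8(uint32_t x) { return (x + 7u) & ~7u; }

struct PlannedBank {
  uint32_t header_offset;  // BANK32 header, counted from the start of BANK_HEADER
  uint32_t data_offset;    // first data byte of this bank
  bool is_dummy;           // true for an empty filler bank
};

// Hypothetical planner: place each real bank so its data starts on an 8-byte
// boundary, inserting a zero-length dummy bank whenever it otherwise would not.
std::vector<PlannedBank> plan_banks(const std::vector<uint32_t>& data_sizes) {
  std::vector<PlannedBank> plan;
  uint32_t offset = kBankHeaderSize;  // first BANK32 follows the BANK_HEADER
  for (uint32_t size : data_sizes) {
    if ((offset + kBank32Size) % 8 != 0) {
      plan.push_back({offset, offset + kBank32Size, true});
      offset += kBank32Size;  // dummy bank: header only, ALIGN8(0) == 0
    }
    plan.push_back({offset, offset + kBank32Size, false});
    offset += kBank32Size + align8(size);
  }
  return plan;
}
```

For two real banks of 12 bytes each, the plan alternates dummy, real, dummy, real, and both real payloads start at offsets that are multiples of 8.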

BTW, aligned-to-64-bit is old news. In the PWB FPGA, I have 128-bit data paths to DDR RAM; the data has to be aligned to 128 bits, or else!

K.O.

> Actually, the cause of all of this is a real bug in the midas functions. We want each bank to be 8-byte aligned, so there is code in bk_close like:

>

> midas.cxx:14788:

> ((BANK_HEADER *) event)->data_size += sizeof(BANK32) + ALIGN8(pbk32->data_size);

>

> While the old sizeof(BANK) is 8, the extended sizeof(BANK32) is 12, which is not a multiple of 8. This code should rather be:

>

> ((BANK_HEADER *) event)->data_size += ALIGN8(sizeof(BANK32) +pbk32->data_size);

>

> But if we change that, it would break every midas data file on this planet!

>

> The only chance I see is to use the "flags" in the BANK_HEADER to distinguish a current bank from a "correct" bank.

> So we could introduce a flag BANK_FORMAT_ALIGNED which distinguishes between the two pieces of code above.

> Then bk_iterate32 would look at that flag and do the right thing.

>

> Any thoughts?

>

> Best,

> Stefan |

|

1842

|

20 Feb 2020 |

Stefan Ritt | Forum | Writting Midas Events via FPGAs | Actually, the cause of all of this is a real bug in the midas functions. We want each bank to be 8-byte aligned, so there is code in bk_close like:

midas.cxx:14788:

((BANK_HEADER *) event)->data_size += sizeof(BANK32) + ALIGN8(pbk32->data_size);

While the old sizeof(BANK) is 8, the extended sizeof(BANK32) is 12, which is not a multiple of 8. This code should rather be:

((BANK_HEADER *) event)->data_size += ALIGN8(sizeof(BANK32) +pbk32->data_size);
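To see how the two expressions differ, here is a small sketch comparing them, assuming ALIGN8(x) rounds x up to the next multiple of 8 (written here as `align8`) and sizeof(BANK32) == 12 as stated above. The helper names `step_current`, `step_proposed` and `nth_offset` are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

constexpr uint32_t kBank32Size = 12;  // sizeof(BANK32), as stated in the post
constexpr uint32_t align8(uint32_t x) { return (x + 7u) & ~7u; }

// Current bk_close() increment: only the data is rounded up.
constexpr uint32_t step_current(uint32_t data_size) {
  return kBank32Size + align8(data_size);
}

// Proposed increment: header and data are rounded up together.
constexpr uint32_t step_proposed(uint32_t data_size) {
  return align8(kBank32Size + data_size);
}

// Offset of bank header number n (counting from 0) when every bank carries
// data_size bytes, starting at `start`, under either rule (C++14 constexpr).
constexpr uint32_t nth_offset(uint32_t start, uint32_t data_size, int n, bool proposed) {
  uint32_t off = start;
  for (int i = 0; i < n; ++i)
    off += proposed ? step_proposed(data_size) : step_current(data_size);
  return off;
}
```

With 12 bytes of data, the current rule advances by 28 bytes (4 mod 8), so successive bank headers alternate between aligned and misaligned offsets; the proposed rule advances by 24 bytes and keeps every header on an 8-byte boundary.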

But if we change that, it would break every midas data file on this planet!

The only chance I see is to use the "flags" in the BANK_HEADER to distinguish a current bank from a "correct" bank.

So we could introduce a flag BANK_FORMAT_ALIGNED which distinguishes between the two pieces of code above.

Then bk_iterate32 would look at that flag and do the right thing.
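One way such a flag could steer the bank iteration is sketched below. This is a hedged sketch, not MIDAS code: `kHypotheticalAlignedFlag` is a placeholder bit (the post only proposes the name BANK_FORMAT_ALIGNED and gives no value), and `iterate_banks32` / `build_banks32` are hypothetical helpers. Unflagged data keeps the current stride sizeof(BANK32) + ALIGN8(data_size); flagged data uses ALIGN8(sizeof(BANK32) + data_size).

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <string>
#include <vector>

// Placeholder bit for the proposed BANK_FORMAT_ALIGNED flag (value assumed).
constexpr uint32_t kHypotheticalAlignedFlag = 1u << 5;
constexpr uint32_t align8(uint32_t x) { return (x + 7u) & ~7u; }

struct BankInfo {
  std::string name;
  uint32_t type;
  uint32_t data_size;
  uint32_t data_offset;  // from the start of the BANK_HEADER
};

static uint32_t read_u32(const uint8_t* p) {
  uint32_t v;
  std::memcpy(&v, p, sizeof v);  // safe for unaligned input
  return v;
}

// Walk 32-bit banks that follow an 8-byte BANK_HEADER {all bank size, flags};
// the stride between banks depends on the alignment flag.
std::vector<BankInfo> iterate_banks32(const uint8_t* buf) {
  const uint32_t all_bank_size = read_u32(buf);
  const bool aligned = (read_u32(buf + 4) & kHypotheticalAlignedFlag) != 0;
  std::vector<BankInfo> banks;
  uint32_t off = 0;  // relative to the end of the BANK_HEADER
  while (off + 12 <= all_bank_size) {
    const uint8_t* bank = buf + 8 + off;
    const uint32_t data_size = read_u32(bank + 8);
    if (off + 12 + data_size > all_bank_size) break;  // truncated bank
    banks.push_back({std::string(reinterpret_cast<const char*>(bank), 4),
                     read_u32(bank + 4), data_size, 8 + off + 12});
    off += aligned ? align8(12 + data_size) : 12 + align8(data_size);
  }
  return banks;
}

// Test helper: build a BANK_HEADER plus empty banks named BK00, BK01, ...
std::vector<uint8_t> build_banks32(uint32_t flags, const std::vector<uint32_t>& sizes) {
  const bool aligned = (flags & kHypotheticalAlignedFlag) != 0;
  std::vector<uint8_t> out(8, 0);  // BANK_HEADER, filled in at the end
  for (std::size_t i = 0; i < sizes.size(); ++i) {
    const std::size_t start = out.size() - 8;
    const uint32_t step = aligned ? align8(12 + sizes[i]) : 12 + align8(sizes[i]);
    out.resize(out.size() + step, 0);
    uint8_t* bank = out.data() + 8 + start;
    const char name[4] = {'B', 'K', '0', static_cast<char>('0' + i)};
    const uint32_t type = 6, size = sizes[i];  // 6 = TID_DWORD, as in the thread
    std::memcpy(bank, name, 4);
    std::memcpy(bank + 4, &type, 4);
    std::memcpy(bank + 8, &size, 4);
  }
  const uint32_t all_bank_size = static_cast<uint32_t>(out.size() - 8);
  std::memcpy(out.data(), &all_bank_size, 4);
  std::memcpy(out.data() + 4, &flags, 4);
  return out;
}
```

Because the reader picks the stride from the flag, existing files (flag clear) are still walked exactly as before.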

Any thoughts?

Best,

Stefan |

|

1841

|

20 Feb 2020 |

Marius Koeppel | Forum | Writting Midas Events via FPGAs |

We also agree and have now found the problem. Since we build everything (MIDAS Event Header, Bank Header, Banks etc.) in the FPGA, we struggled a bit with the MIDAS data format (http://lmu.web.psi.ch/docu/manuals/bulk_manuals/software/midas195/html/AppendixA.html). We thought that only the MIDAS Event needs to be aligned to 64 bit, but as it turned out, the bank data also needs to be aligned (Stefan has already updated the wiki page). Since we are using BANK32, this was a bit unclear to us, because the bank header is not 64-bit aligned. We have now handled this by adding empty data, and the system is running.
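A minimal sketch of building one event as 32-bit words with the padding described above: each bank's data is padded to a multiple of 8 bytes, matching the bk_close() stride sizeof(BANK32) + ALIGN8(data_size) quoted elsewhere in this thread. `build_event` and `Bank` are hypothetical names; word 0 packs trigger mask and event ID assuming a little-endian host, and the flags value 0x11 is copied from the original post.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

struct Bank {
  uint32_t name;               // four ASCII characters packed into one word
  uint32_t type;               // e.g. 6 = TID_DWORD, as in the original post
  std::vector<uint32_t> data;  // payload words
};

// Build EVENT_HEADER (4 words) + BANK_HEADER (2 words) + 32-bit banks,
// padding every bank's data to a multiple of 8 bytes.
std::vector<uint32_t> build_event(uint16_t event_id, uint16_t trigger_mask,
                                  uint32_t serial, uint32_t time,
                                  const std::vector<Bank>& banks) {
  std::vector<uint32_t> ev = {
      static_cast<uint32_t>(trigger_mask) << 16 | event_id,  // little-endian host
      serial, time,
      0,      // event data size, filled in below
      0,      // all bank size, filled in below
      0x11};  // flags, copied from the original post
  for (const Bank& b : banks) {
    ev.push_back(b.name);
    ev.push_back(b.type);
    ev.push_back(static_cast<uint32_t>(b.data.size() * 4));  // unpadded size
    ev.insert(ev.end(), b.data.begin(), b.data.end());
    if (b.data.size() % 2 != 0) ev.push_back(0);  // pad data to 8 bytes
  }
  const uint32_t all_bank_bytes = static_cast<uint32_t>((ev.size() - 6) * 4);
  ev[4] = all_bank_bytes;      // banks incl. their headers, without BANK_HEADER
  ev[3] = all_bank_bytes + 8;  // plus the 8-byte BANK_HEADER
  return ev;
}
```

For the two 3-word banks of the original example, each bank now occupies 7 words instead of 6, so the sizes become 64 and 56 bytes instead of 56 and 48.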

Our setup looks like this:

Software:

- mfe.cxx multithread equipment

- mfe readout thread grabs pointer from dma ring buffer

- since the DMA buffer is volatile, we use copy_n to transfer the data to MIDAS

- the data is already in the MIDAS format so done from our side :)

- mfe readout thread increments the ring buffer

- mfe main thread grabs events from ring buffer, sends them to the mserver
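The readout steps above copy out of a volatile DMA buffer. A minimal sketch of that copy, assuming the event layout posted earlier in this thread (a 4-word EVENT_HEADER whose word 3 holds the event data size in bytes); `copy_from_dma` and `event_words` are hypothetical names. The loop reads each word through the volatile pointer instead of casting the qualifier away for memcpy.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Number of 32-bit words in one event: 4 header words plus the event data
// size (bytes, word 3 of the header) rounded up to whole words.
std::size_t event_words(const volatile uint32_t* ev) {
  return 4 + (static_cast<std::size_t>(ev[3]) + 3) / 4;
}

// Copy n words out of DMA memory. Every read goes through the volatile
// pointer, so the compiler may not cache or drop it.
void copy_from_dma(const volatile uint32_t* src, std::size_t n, uint32_t* dst) {
  for (std::size_t i = 0; i < n; ++i) dst[i] = src[i];
}
```

Note that volatile only constrains the compiler; it does not deal with CPU caches, which remains the DMA driver's job.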

Firmware:

- Arria 10 development board

- Altera PCIe block

- Own DMA engine since we are doing burst writing DMA with PCIe 3.0.

- Own device driver

- no interrupts

If you have more questions, feel free to ask. |


|

1839

|

20 Feb 2020 |

Konstantin Olchanski | Forum | Difference between "Event Data Size" and "All Bank Size" | > Thanks for pointing out this error. The "All Bank Size" contains the size of all banks including their bank headers, but NOT the global bank header itself. I modified the documentation accordingly.

>

> If you want to study the C code which tells you how to fill these headers, look at midas.cxx line 14788.

Also take a look at the midas event parser in ROOTANA midasio.cxx; the code is pretty clean C++:

https://bitbucket.org/tmidas/rootana/src/master/libMidasInterface/midasio.cxx

But Stefan's code in midas.cxx and in the documentation is the authoritative information.
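The size relation from the quoted answer can be checked directly on a raw event. A minimal sketch, assuming the 32-bit-word layout posted in the "Writting Midas Events via FPGAs" thread (Event Data Size in word 3, All Bank Size in word 4, then the flags word); `sizes_consistent` is a hypothetical helper, not part of midasio.cxx or midas.cxx.

```cpp
#include <cassert>
#include <cstdint>

constexpr int kEventDataSizeWord = 3;     // bytes following the EVENT_HEADER
constexpr int kAllBankSizeWord = 4;       // bytes following the BANK_HEADER
constexpr uint32_t kBankHeaderBytes = 8;  // BANK_HEADER {data_size, flags}

// "All Bank Size" covers every bank including its own header, but not the
// global BANK_HEADER, so the two sizes should differ by exactly 8 bytes.
bool sizes_consistent(const uint32_t* ev) {
  return ev[kEventDataSizeWord] == ev[kAllBankSizeWord] + kBankHeaderBytes;
}
```

With the values from that thread (Event Data Size 56, All Bank Size 48), the check passes.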

K.O. |

|

1838

|

20 Feb 2020 |

Konstantin Olchanski | Forum | Writting Midas Events via FPGAs | > rb_xxx functions are midas event agnostic. The receiving side in mfe.cxx (line 1418, in receive_trigger_event) however pulls one event at a time. If you have some inconsistency, I would put some debugging code there.

I agree with Stefan; I do not think there are any bugs in the ring buffer code.

But I do not think we ever DMAed the data directly into the ring buffer. Hmm...

I just checked; this is what we do (and this has worked in the ALPHA Si-strip DAQ system for 10 years now):

- mfe.cxx multithread equipment

- mfe readout thread grabs pointer from ring buffer

- mfe creates event headers, etc

- calls our read_event() function

- creates data bank

- DMA data into the data bank (this is the DMA from VME block reads, using DMA controller inside the UniverseII and tsi148 VME-to-PCI bridges)

- close data bank

- return to mfe

- mfe readout thread increments the ring buffer

- mfe main thread grabs events from ring buffer, sends them to the mserver

So there could be trouble:

a) the ring buffer code does not have the required "volatile" (ahem, "atomic") annotations, so DMA may interact badly with compiler optimizations (values kept in registers instead of in memory, etc.)

b) the DMA driver must doctor the memory settings to (1) mark the DMA target memory uncachable or (1b) invalidate the cache after the DMA completes, and (2) mark the DMA target memory unswappable.

So I see possibilities for the ring buffer to malfunction.
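For item (a), a minimal sketch of what a "pure C++" single-producer/single-consumer ring buffer with atomic indices could look like. `SpscRing` is a hypothetical class, not the MIDAS rb_xxx API or anything in tmfe.h, and it needs C++17 for std::optional. The release/acquire pairs order memory between CPU threads only; they do not make a device's DMA writes visible, which is the driver issue in item (b).

```cpp
#include <array>
#include <atomic>
#include <cassert>
#include <cstddef>
#include <optional>

// One producer thread calls push(), one consumer thread calls pop().
// One slot is always left empty to tell "full" from "empty", so the
// capacity is N - 1 items.
template <typename T, std::size_t N>
class SpscRing {
 public:
  bool push(const T& value) {
    const std::size_t head = head_.load(std::memory_order_relaxed);
    const std::size_t next = (head + 1) % N;
    if (next == tail_.load(std::memory_order_acquire)) return false;  // full
    slots_[head] = value;
    head_.store(next, std::memory_order_release);  // publish the filled slot
    return true;
  }

  std::optional<T> pop() {
    const std::size_t tail = tail_.load(std::memory_order_relaxed);
    if (tail == head_.load(std::memory_order_acquire)) return std::nullopt;  // empty
    T value = slots_[tail];
    tail_.store((tail + 1) % N, std::memory_order_release);  // hand the slot back
    return value;
  }

 private:
  std::array<T, N> slots_{};
  std::atomic<std::size_t> head_{0};  // next slot the producer writes
  std::atomic<std::size_t> tail_{0};  // next slot the consumer reads
};
```

The producer only ever writes `head_` and the consumer only `tail_`, which is what lets this design avoid locks.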

But now I am curious: which DMA controller do you use? The Altera or Xilinx PCIe block with the vendor-supplied DMA driver? Or do you do DMA on an ARM SoC FPGA (no PCI/PCIe, different DMA controller, different DMA driver)?

I am curious because we will be implementing pretty much what you do on ARM SoC FPGAs pretty soon, so it is good to know if there is trouble to expect.

But I will probably use the tmfe.h c++ frontend and a "pure c++" ring buffer instead of mfe.cxx and the midas "rb" ring buffer.

(I did not look at your code at all; there could be a bug right there, this ring buffer stuff is tricky. With luck, there is no bug in your DMA driver. The DMA drivers for our VME bridges did have bugs.)

K.O. |

|

1837

|

20 Feb 2020 |

Konstantin Olchanski | Bug Report | RPC Error: ACK or other control chars from "db_get_values" | > The unexpected token is \0x6

> RPC Error json parser exception: SyntaxError: JSON.parse: bad control character in string literal at line 80 column 30 of the JSON data, method: "db_get_valus", params: [object Object], id: 1582020074098.

Yes, there is a problem.

Traditionally, midas strings in ODB have no restriction on the content (I think even the '\0x0' char is permitted).

But web browser javascript strings are supposed to be valid unicode (UTF-16, if I read this right: https://tc39.es/ecma262/#sec-ecmascript-language-types-string-type).

The collision between the two happens when ODB values are json-encoded by midas, then json-decoded by the web browser.

The midas json encoder (mjson.h, mjson.cxx) encodes ODB strings according to JSON rules, but does not ensure that the result is valid UTF-8 (valid UTF-8 is not required, if I read the specs correctly: http://www.ecma-international.org/publications/files/ECMA-ST/ECMA-404.pdf and https://www.json.org/json-en.html).

The web browser json decoder requires valid UTF-8 and throws exceptions if it does not like something. Different browsers handle this slightly differently, so we have an error handler for this in the mjsonrpc results processor.

What does this mean in practice?

Now that MIDAS is very web oriented, MIDAS strings must be web browser friendly, too:

a) all ODB key names (subdirectory names, link names, etc) must be UTF-8 unicode, and this has been enforced by ODB for some time now.

b) all ODB string values must be valid UTF-8 unicode. This is not enforced right now.

Historically, it was okay to use ODB TID_STRING to store arbitrary binary data, but now, I think, we must deprecate this, at least for any ODB entries that could be returned to a web browser (which means all of them, after we implement a fully html+javascript odb editor). For storing binary data, arrays of TID_CHAR, TID_DWORD & co are probably a better match.

The MIDAS and ROOTANA json decoders (the same mjson.h, mjson.cxx) do not care about UTF-8, so ODB dumps in JSON format are not affected by any of this. (But I am not sure about the JSON decoder in ROOT.)

Bottom line:

I think db_validate() should check for invalid UTF-8 in ODB key names and in TID_STRING values and at least warn the user. (I am not sure if invalid UTF-8 can be fixed automatically.) db_create() should reject key names that are not valid UTF-8 (it already does this, I think). db_set_value(TID_STRING) should probably reject invalid UTF-8 strings; this needs to be discussed some more.

https://bitbucket.org/tmidas/midas/issues/215/everything-in-odb-must-be-valid-utf-8
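A minimal sketch of the UTF-8 check suggested for db_validate() and db_set_value(TID_STRING), written as a standalone function; `is_valid_utf8` is a hypothetical helper, not an existing MIDAS call. It follows the UTF-8 definition in RFC 3629, rejecting truncated sequences, overlong encodings, UTF-16 surrogates and code points above U+10FFFF.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Returns true if `s` is well-formed UTF-8 (RFC 3629).
bool is_valid_utf8(const std::string& s) {
  const auto* p = reinterpret_cast<const unsigned char*>(s.data());
  const std::size_t n = s.size();
  static const char32_t kMin[5] = {0, 0, 0x80, 0x800, 0x10000};  // smallest cp per length
  std::size_t i = 0;
  while (i < n) {
    const unsigned char c = p[i];
    std::size_t len = 0;
    char32_t cp = 0;
    if (c < 0x80) { ++i; continue; }  // plain ASCII
    if ((c & 0xE0) == 0xC0) { len = 2; cp = c & 0x1F; }
    else if ((c & 0xF0) == 0xE0) { len = 3; cp = c & 0x0F; }
    else if ((c & 0xF8) == 0xF0) { len = 4; cp = c & 0x07; }
    else return false;               // stray continuation byte or 0xF8..0xFF
    if (i + len > n) return false;   // truncated sequence
    for (std::size_t k = 1; k < len; ++k) {
      if ((p[i + k] & 0xC0) != 0x80) return false;  // not a continuation byte
      cp = (cp << 6) | (p[i + k] & 0x3F);
    }
    if (cp < kMin[len]) return false;                // overlong encoding
    if (cp >= 0xD800 && cp <= 0xDFFF) return false;  // UTF-16 surrogate
    if (cp > 0x10FFFF) return false;                 // beyond Unicode
    i += len;
  }
  return true;
}
```

ASCII control characters such as ACK (0x06) are valid UTF-8, so a check like this would not by itself flag them.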

K.O. |

|

1836

|

18 Feb 2020 |

Stefan Ritt | Bug Report | RPC Error: ACK or other control chars from "db_get_values" | You are the first one reporting this error, so it must be due to your values in the ODB. Can you track it down to specific ODB contents? If so, can you post it so that I can reproduce your error?

Stefan |

|

1835

|

18 Feb 2020 |

Lukas Gerritzen | Bug Report | RPC Error: ACK or other control chars from "db_get_values" | Hi,

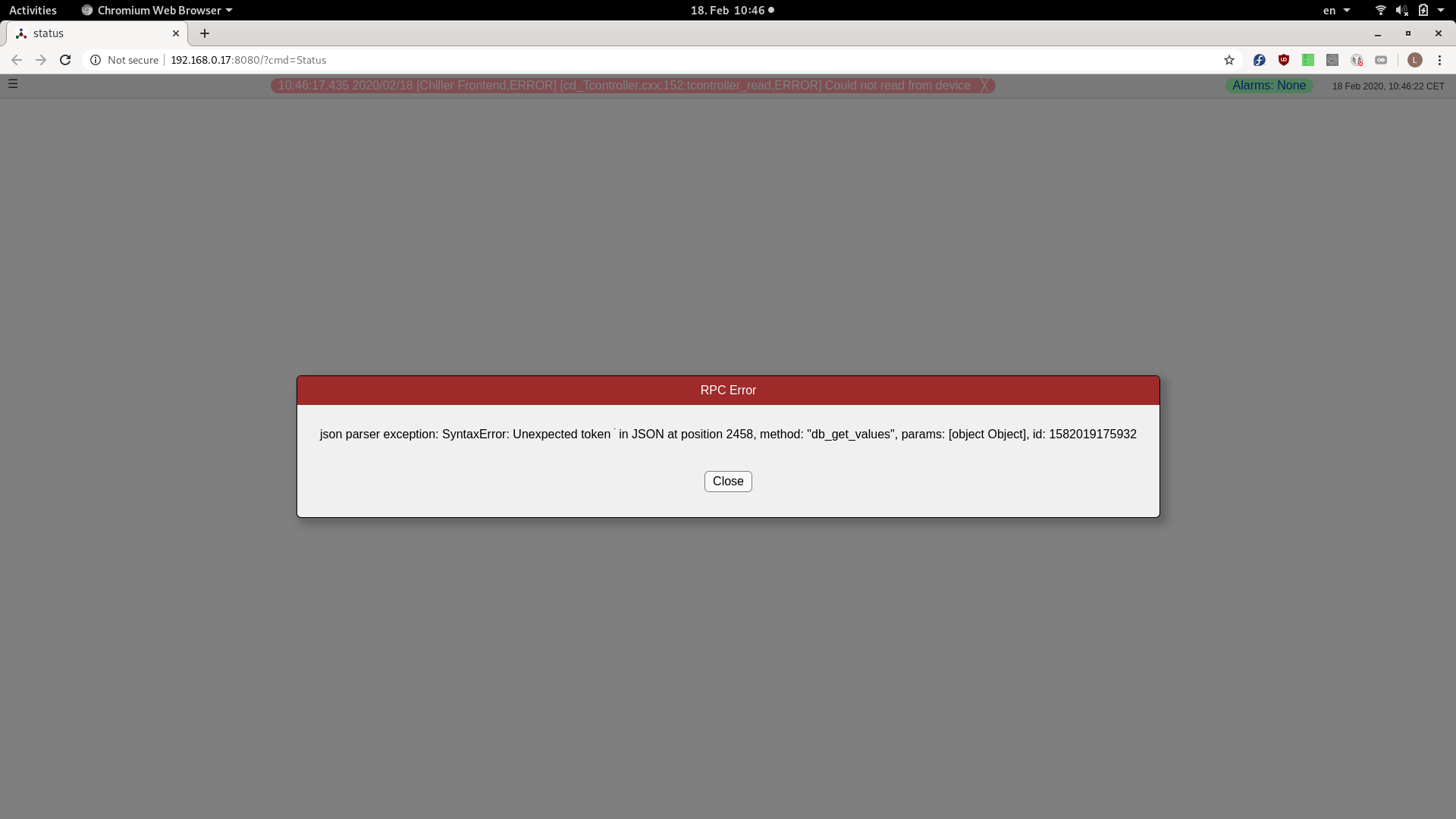

for some reason we occasionally get JSON errors in the browser when accessing MIDAS. It is then not possible to open a new window or tab, see attachment. The unexpected token is \0x6, i.e. the ACK (acknowledge) control character.

When this happens, all "alive sessions" remain usable despite the errors, but show similar error messages:

>RPC Error

>json parser exception: SyntaxError: JSON.parse: bad control character in string literal at line 80 column 30 of the JSON data, method: "db_get_valus", params: [object Object], id: 1582020074098.

Do you have any idea why db_get_values yields ACK or other control characters?

Thanks |

| Attachment 1: Screenshot_from_2020-02-18_10-46-22.png

|

|

|

1834

|

14 Feb 2020 |

Stefan Ritt | Forum | Writting Midas Events via FPGAs | rb_xxx functions are midas event agnostic. The receiving side in mfe.cxx (line 1418, in receive_trigger_event) however pulls one event at a time. If you have some inconsistency, I would put some debugging code there.

Stefan

> Hello Stefan,

> is there a difference for the later data processing (after writing the ring buffer blocks)

> if we write single events or multiple events in one rb_get_wp - memcopy - rb_increment_wp cycle?

> Both Marius and I have seen some inconsistencies in the number of produced events reported on the status page when writing multiple events in one go,

> so I was wondering if this is due to us treating the buffer badly or the way midas handles the events after that.

>

> Given that we produce the full event in our (FPGA) domain, an option would be to always copy one event from the dma to the midas-system buffer in a loop.

> The question is if there is a difference (for midas) between

> [pseudo code, much simplified]

>

> while(dma_read_index < last_dma_write_index){

> if(rb_get_wp(pdata)!=SUCCESS){

> dma_read_index+=event_size;

> continue;

> }

> copy_n(dma_buffer, event_size, pdata);

> rb_increment_wp(event_size);

> dma_read_index+=event_size;

> }

>

> and

>

> while(dma_read_index < last_dma_write_index){

> if(rb_get_wp(pdata)!=SUCCESS){

> ...

> }

> total_size=max_n_events_that_fit_in_rb_block();

> copy_n(dma_buffer, total_size, pdata);

> rb_increment_wp(total_size);

> dma_read_index+=total_size;

> }

>

> Cheers,

> Konrad

>

> > The rb_xxx functions are (thoroughly tested!) robust against high data rates, given that you use them as intended:

> >

> > 1) When you create the ring buffer via rb_create(), specify the maximum event size (overall event size, not bank size!). Later there is no protection any more, so if you obtain pdata from rb_get_wp, you can of course write 4GB to pdata, overwriting everything in your memory, causing a total crash. It's your responsibility not to write more bytes into pdata than what you specified as max event size in rb_create()

> >

> > 2) When you obtain a write pointer to the ring buffer via rb_get_wp, this function might fail when the receiving side reads data slower than the producing side, simply because the buffer is full. In that case the producing side has to wait until space is freed up in the buffer by the receiving side. If your call to rb_get_wp returns DB_TIMEOUT, it means that the function did not obtain enough free space for the next event. In that case you have to wait (like ss_sleep(10)) and try again, until you succeed. Only when rb_get_wp() returns DB_SUCCESS are you allowed to write into pdata, up to the maximum event size specified in rb_create of course. I don't see this behaviour in your code. You would need something like

> >

> > do {

> > status = rb_get_wp(rbh, (void **)&pdata, 10);

> > if (status == DB_TIMEOUT)

> > ss_sleep(10);

> > } while (status == DB_TIMEOUT);

> >

> > Best,

> > Stefan

> >

> >

> > > Dear all,

> > >

> > > we are creating Midas events directly inside an FPGA and sending them off via DMA into the PC RAM. To read out this RAM via Midas, the FPGA sends us a pointer to where it has written the last 4kB of data. We use this pointer to tell the midas ring buffer where the new events are. The buffer looks something like:

> > >

> > > // event 1

> > > dma_buf[0] = 0x00000001; // Trigger and Event ID

> > > dma_buf[1] = 0x00000001; // Serial number

> > > dma_buf[2] = TIME; // time

> > > dma_buf[3] = 18*4-4*4; // event size

> > > dma_buf[4] = 18*4-6*4; // all bank size

> > > dma_buf[5] = 0x11; // flags

> > > // bank 0

> > > dma_buf[6] = 0x46454230; // bank name

> > > dma_buf[7] = 0x6; // bank type TID_DWORD

> > > dma_buf[8] = 0x3*4; // data size

> > > dma_buf[9] = 0xAFFEAFFE; // data

> > > dma_buf[10] = 0xAFFEAFFE; // data

> > > dma_buf[11] = 0xAFFEAFFE; // data

> > > // bank 1

> > > dma_buf[12] = 0x1; // bank name

> > > dma_buf[12] = 0x46454231; // bank name

> > > dma_buf[13] = 0x6; // bank type TID_DWORD

> > > dma_buf[14] = 0x3*4; // data size

> > > dma_buf[15] = 0xAFFEAFFE; // data

> > > dma_buf[16] = 0xAFFEAFFE; // data

> > > dma_buf[17] = 0xAFFEAFFE; // data

> > >

> > > // event 2

> > > .....

> > >

> > > dma_buf[fpga_pointer] = 0xXXXXXXXX;

> > >

> > >

> > > And we do something like:

> > >

> > > while (true) {

> > > // obtain buffer space

> > > status = rb_get_wp(rbh, (void **)&pdata, 10);

> > > fpga_pointer = fpga.read_last_data_add();

> > >

> > > wlen = fpga_pointer - last_fpga_pointer; // in 32-bit words

> > > copy_n(&dma_buf[last_fpga_pointer], wlen, pdata);

> > > rb_status = rb_increment_wp(rbh, wlen * 4); // in bytes

> > >

> > > last_fpga_pointer = fpga_pointer;

> > > }

> > >

> > > Leaving aside the case where dma_buf wraps around, this works fine for a small data rate. But if we increase the rate, the fpga_pointer also increases really fast and wlen gets quite big. Actually, it gets bigger than max_event_size, which is checked in rb_increment_wp, leading to an error.

> > >

> > > The problem is that the event size itself is not too big; rather, we have multiple events in the buffer which are read by midas in one step. So we think that in this case the function rb_increment_wp is actually comparing the wrong thing. Also, increasing the max_event_size does not help.

> > >

> > > Remark: dma_buf is volatile so memcpy is not possible here.

> > >

> > > Cheers,

> > > Marius |

|

1833

|

14 Feb 2020 |

Konrad Briggl | Forum | Writting Midas Events via FPGAs | Hello Stefan,

is there a difference for the later data processing (after writing the ring buffer blocks)

if we write single events or multiple events in one rb_get_wp - memcopy - rb_increment_wp cycle?

Both Marius and I have seen some inconsistencies in the number of produced events reported on the status page when writing multiple events in one go,

so I was wondering if this is due to us treating the buffer badly or the way midas handles the events after that.

Given that we produce the full event in our (FPGA) domain, an option would be to always copy one event from the dma to the midas-system buffer in a loop.

The question is if there is a difference (for midas) between

[pseudo code, much simplified]

while(dma_read_index < last_dma_write_index){

if(rb_get_wp(pdata)!=SUCCESS){

dma_read_index+=event_size;

continue;

}

copy_n(dma_buffer, event_size, pdata);

rb_increment_wp(event_size);

dma_read_index+=event_size;

}

and

while(dma_read_index < last_dma_write_index){

if(rb_get_wp(pdata)!=SUCCESS){

...

}

total_size=max_n_events_that_fit_in_rb_block();

copy_n(dma_buffer, total_size, pdata);

rb_increment_wp(total_size);

dma_read_index+=total_size;

}
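A minimal sketch of the one-event-per-cycle variant: instead of copying a whole block, walk the DMA buffer event by event using each EVENT_HEADER's data size (word 3 in the layout from the first post of this thread). `split_events` and `EventSpan` are hypothetical names. It also assumes each event starts on an 8-byte boundary, since the thread notes that MIDAS events must be 64-bit aligned.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct EventSpan {
  std::size_t first_word;  // index of the EVENT_HEADER in the DMA buffer
  std::size_t n_bytes;     // 16-byte EVENT_HEADER + event data size
};

// Split a run of back-to-back events into individual events. An event whose
// end lies beyond n_words is left for the next pass (more DMA data pending).
std::vector<EventSpan> split_events(const uint32_t* buf, std::size_t n_words) {
  constexpr std::size_t kHeaderWords = 4;
  std::vector<EventSpan> events;
  std::size_t w = 0;
  while (w + kHeaderWords <= n_words) {
    const std::size_t bytes = 16 + static_cast<std::size_t>(buf[w + 3]);
    const std::size_t stride = ((bytes + 7) & ~static_cast<std::size_t>(7)) / 4;  // 8-byte aligned, in words
    if (w + stride > n_words) break;  // incomplete event
    events.push_back({w, bytes});
    w += stride;
  }
  return events;
}
```

Each span could then go through its own rb_get_wp() / copy / rb_increment_wp() cycle, so no single increment is larger than one event.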

Cheers,

Konrad

> The rb_xxx functions are (thoroughly tested!) robust against high data rates, given that you use them as intended:

>

> 1) When you create the ring buffer via rb_create(), specify the maximum event size (overall event size, not bank size!). Later there is no protection any more, so if you obtain pdata from rb_get_wp, you can of course write 4GB to pdata, overwriting everything in your memory, causing a total crash. It's your responsibility not to write more bytes into pdata than what you specified as max event size in rb_create()

>

> 2) When you obtain a write pointer to the ring buffer via rb_get_wp, this function might fail when the receiving side reads data slower than the producing side, simply because the buffer is full. In that case the producing side has to wait until space is freed up in the buffer by the receiving side. If your call to rb_get_wp returns DB_TIMEOUT, it means that the function did not obtain enough free space for the next event. In that case you have to wait (like ss_sleep(10)) and try again, until you succeed. Only when rb_get_wp() returns DB_SUCCESS are you allowed to write into pdata, up to the maximum event size specified in rb_create of course. I don't see this behaviour in your code. You would need something like

>

> do {

> status = rb_get_wp(rbh, (void **)&pdata, 10);

> if (status == DB_TIMEOUT)

> ss_sleep(10);

> } while (status == DB_TIMEOUT);

>

> Best,

> Stefan

>

>

> > Dear all,

> >

> > we are creating Midas events directly inside an FPGA and sending them off via DMA into the PC RAM. To read out this RAM via Midas, the FPGA sends us a pointer to where it has written the last 4kB of data. We use this pointer to tell the midas ring buffer where the new events are. The buffer looks something like:

> >

> > // event 1

> > dma_buf[0] = 0x00000001; // Trigger and Event ID

> > dma_buf[1] = 0x00000001; // Serial number

> > dma_buf[2] = TIME; // time

> > dma_buf[3] = 18*4-4*4; // event size

> > dma_buf[4] = 18*4-6*4; // all bank size

> > dma_buf[5] = 0x11; // flags

> > // bank 0

> > dma_buf[6] = 0x46454230; // bank name

> > dma_buf[7] = 0x6; // bank type TID_DWORD

> > dma_buf[8] = 0x3*4; // data size

> > dma_buf[9] = 0xAFFEAFFE; // data

> > dma_buf[10] = 0xAFFEAFFE; // data

> > dma_buf[11] = 0xAFFEAFFE; // data

> > // bank 1

> > dma_buf[12] = 0x1; // bank name

> > dma_buf[12] = 0x46454231; // bank name

> > dma_buf[13] = 0x6; // bank type TID_DWORD

> > dma_buf[14] = 0x3*4; // data size

> > dma_buf[15] = 0xAFFEAFFE; // data

> > dma_buf[16] = 0xAFFEAFFE; // data

> > dma_buf[17] = 0xAFFEAFFE; // data

> >

> > // event 2

> > .....

> >

> > dma_buf[fpga_pointer] = 0xXXXXXXXX;

> >

> >

> > And we do something like:

> >

> > while (true) {

> > // obtain buffer space

> > status = rb_get_wp(rbh, (void **)&pdata, 10);

> > fpga_pointer = fpga.read_last_data_add();

> >

> > wlen = fpga_pointer - last_fpga_pointer; // in 32-bit words

> > copy_n(&dma_buf[last_fpga_pointer], wlen, pdata);

> > rb_status = rb_increment_wp(rbh, wlen * 4); // in bytes

> >

> > last_fpga_pointer = fpga_pointer;

> > }

> >

> > Leaving aside the case where dma_buf wraps around, this works fine for a small data rate. But if we increase the rate, the fpga_pointer also increases really fast and wlen gets quite big. Actually, it gets bigger than max_event_size, which is checked in rb_increment_wp, leading to an error.

> >

> > The problem is that the event size itself is not too big; rather, we have multiple events in the buffer which are read by midas in one step. So we think that in this case the function rb_increment_wp is actually comparing the wrong thing. Also, increasing the max_event_size does not help.

> >

> > Remark: dma_buf is volatile so memcpy is not possible here.

> >

> > Cheers,

> > Marius |

|

1832

|

13 Feb 2020 |

Berta Beltran | Bug Report | Compiling Midas in OS 10.15 Catalina | > Now you are stuck with openssl, which is optional for mhttpd. If you only use mhttpd locally, you may not need SSL support. In that case you can just do

>

> [midas/build] $ cmake -D NO_SSL=1 ..

>

> to disable it. If you do need SSL, maybe you can try to install openssl11 via MacPorts.

>

> Stefan

Thanks Stefan.

If I run the compilation with the NO_SSL flag, it works just fine. Still, I think that mhttpd is going to be important for us in the future, as we may want to control the experiment remotely, so I will keep trying. But at least I can get started.

Thanks

Berta |

|

1831

|

13 Feb 2020 |

Stefan Ritt | Forum | Writting Midas Events via FPGAs | The rb_xxx functions are (thoroughly tested!) robust against high data rates, given that you use them as intended:

1) When you create the ring buffer via rb_create(), specify the maximum event size (overall event size, not bank size!). Later there is no protection any more, so if you obtain pdata from rb_get_wp, you can of course write 4GB to pdata, overwriting everything in your memory, causing a total crash. It's your responsibility not to write more bytes into pdata than what you specified as max event size in rb_create()

2) When you obtain a write pointer to the ring buffer via rb_get_wp, this function might fail when the receiving side reads data slower than the producing side, simply because the buffer is full. In that case the producing side has to wait until space is freed up in the buffer by the receiving side. If your call to rb_get_wp returns DB_TIMEOUT, it means that the function did not obtain enough free space for the next event. In that case you have to wait (like ss_sleep(10)) and try again, until you succeed. Only when rb_get_wp() returns DB_SUCCESS are you allowed to write into pdata, up to the maximum event size specified in rb_create of course. I don't see this behaviour in your code. You would need something like

do {

status = rb_get_wp(rbh, (void **)&pdata, 10);

if (status == DB_TIMEOUT)

ss_sleep(10);

} while (status == DB_TIMEOUT);
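The same retry pattern as a self-contained sketch that can be exercised without a running MIDAS system: `kSuccess`/`kTimeout` are placeholders for DB_SUCCESS/DB_TIMEOUT (the real values live in midas.h), std::this_thread::sleep_for stands in for ss_sleep(), and `wait_for_write_pointer` is a hypothetical helper. In a frontend, `get_wp` would wrap the call `rb_get_wp(rbh, (void **)&pdata, 10)`.

```cpp
#include <cassert>
#include <chrono>
#include <thread>

// Placeholder status codes standing in for DB_SUCCESS / DB_TIMEOUT.
constexpr int kSuccess = 1;
constexpr int kTimeout = 2;

// Keep asking for ring-buffer space until the call stops timing out,
// sleeping between attempts. max_tries == 0 means wait forever.
template <typename GetWp>
int wait_for_write_pointer(GetWp get_wp, int max_tries,
                           std::chrono::milliseconds pause = std::chrono::milliseconds(10)) {
  int status = kTimeout;
  for (int attempt = 0; max_tries == 0 || attempt < max_tries; ++attempt) {
    status = get_wp();
    if (status != kTimeout) break;  // success, or an error the caller must handle
    std::this_thread::sleep_for(pause);
  }
  return status;
}
```

Only when the returned status is the success code may the caller write into pdata, and then at most the maximum event size passed to rb_create().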

Best,

Stefan

> Dear all,

>

> we are creating Midas events directly inside an FPGA and sending them off via DMA into the PC RAM. To read out this RAM via Midas, the FPGA sends us a pointer to where it has written the last 4kB of data. We use this pointer to tell the midas ring buffer where the new events are. The buffer looks something like:

>

> // event 1

> dma_buf[0] = 0x00000001; // Trigger and Event ID

> dma_buf[1] = 0x00000001; // Serial number

> dma_buf[2] = TIME; // time

> dma_buf[3] = 18*4-4*4; // event size

> dma_buf[4] = 18*4-6*4; // all bank size

> dma_buf[5] = 0x11; // flags

> // bank 0

> dma_buf[6] = 0x46454230; // bank name

> dma_buf[7] = 0x6; // bank type TID_DWORD

> dma_buf[8] = 0x3*4; // data size

> dma_buf[9] = 0xAFFEAFFE; // data

> dma_buf[10] = 0xAFFEAFFE; // data

> dma_buf[11] = 0xAFFEAFFE; // data

> // bank 1

> dma_buf[12] = 0x1; // bank name

> dma_buf[12] = 0x46454231; // bank name

> dma_buf[13] = 0x6; // bank type TID_DWORD

> dma_buf[14] = 0x3*4; // data size

> dma_buf[15] = 0xAFFEAFFE; // data

> dma_buf[16] = 0xAFFEAFFE; // data

> dma_buf[17] = 0xAFFEAFFE; // data

>

> // event 2

> .....

>

> dma_buf[fpga_pointer] = 0xXXXXXXXX;

>

>

> And we do something like:

>

> while (true) {

> // obtain buffer space

> status = rb_get_wp(rbh, (void **)&pdata, 10);

> fpga_pointer = fpga.read_last_data_add();

>

> wlen = fpga_pointer - last_fpga_pointer; // in 32-bit words

> copy_n(&dma_buf[last_fpga_pointer], wlen, pdata);

> rb_status = rb_increment_wp(rbh, wlen * 4); // in bytes

>

> last_fpga_pointer = fpga_pointer;

> }

>

> Leaving aside the case where dma_buf wraps around, this works fine for a small data rate. But if we increase the rate, the fpga_pointer also increases really fast and wlen gets quite big. Actually, it gets bigger than max_event_size, which is checked in rb_increment_wp, leading to an error.

>

> The problem is that the event size itself is not too big; rather, we have multiple events in the buffer which are read by midas in one step. So we think that in this case the function rb_increment_wp is actually comparing the wrong thing. Also, increasing the max_event_size does not help.

>

> Remark: dma_buf is volatile so memcpy is not possible here.

>

> Cheers,

> Marius |


|

1829

|

13 Feb 2020 |

Marius Koeppel | Forum | Writting Midas Events via FPGAs | Dear all,

we are creating Midas events directly inside an FPGA and sending them off via DMA into the PC RAM. To read out this RAM via Midas, the FPGA sends us a pointer to where it has written the last 4kB of data. We use this pointer to tell the midas ring buffer where the new events are. The buffer looks something like:

// event 1

dma_buf[0] = 0x00000001; // Trigger and Event ID

dma_buf[1] = 0x00000001; // Serial number

dma_buf[2] = TIME; // time

dma_buf[3] = 18*4-4*4; // event size

dma_buf[4] = 18*4-6*4; // all bank size

dma_buf[5] = 0x11; // flags

// bank 0

dma_buf[6] = 0x46454230; // bank name

dma_buf[7] = 0x6; // bank type TID_DWORD

dma_buf[8] = 0x3*4; // data size

dma_buf[9] = 0xAFFEAFFE; // data

dma_buf[10] = 0xAFFEAFFE; // data

dma_buf[11] = 0xAFFEAFFE; // data

// bank 1

dma_buf[12] = 0x46454231; // bank name

dma_buf[13] = 0x6; // bank type TID_DWORD

dma_buf[14] = 0x3*4; // data size

dma_buf[15] = 0xAFFEAFFE; // data

dma_buf[16] = 0xAFFEAFFE; // data

dma_buf[17] = 0xAFFEAFFE; // data

// event 2

.....

dma_buf[fpga_pointer] = 0xXXXXXXXX;
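The size fields in the listing above (18*4-4*4 and 18*4-6*4) follow directly from the header layouts. A minimal C++ sketch, assuming the 16-byte event header, 8-byte bank header and 12-byte 32-bit bank header that these values imply, builds the same event and derives both sizes instead of hard-coding them. `EventHeader`, `BankHeader`, `Bank32` and `make_event` are hypothetical local names, not MIDAS API.

```cpp
#include <cstdint>
#include <vector>

// Hypothetical local mirrors of the header layouts implied by the listing
// (assumed to correspond to MIDAS EVENT_HEADER, BANK_HEADER and BANK32).
struct EventHeader {
  uint16_t event_id, trigger_mask;
  uint32_t serial_number, time_stamp, data_size;
};
struct BankHeader { uint32_t data_size, flags; };
struct Bank32 { uint32_t name, type, data_size; };

static_assert(sizeof(EventHeader) == 16, "event header is 4 words");
static_assert(sizeof(BankHeader) == 8, "bank header is 2 words");
static_assert(sizeof(Bank32) == 12, "32-bit bank header is 3 words");

constexpr uint32_t kTidDword = 0x6;    // bank type value used in the listing (TID_DWORD)
constexpr uint32_t kBankFlags = 0x11;  // bank header flags value used in the listing

// Build one event with one bank per entry of `names`, each holding `nwords`
// copies of `fill`, and derive both size fields from the layout.
// Banks are packed back-to-back without padding, exactly as in the listing.
std::vector<uint32_t> make_event(uint32_t serial, uint32_t time,
                                 const std::vector<uint32_t>& names,
                                 uint32_t nwords, uint32_t fill) {
  // word 0: event ID 1 / trigger mask 0; word 3 and 4 are patched below
  std::vector<uint32_t> ev = {0x00000001, serial, time, 0, 0, kBankFlags};
  for (uint32_t name : names) {
    ev.push_back(name);
    ev.push_back(kTidDword);
    ev.push_back(nwords * 4);  // bank data size in bytes
    for (uint32_t i = 0; i < nwords; ++i) {
      ev.push_back(fill);
    }
  }
  const uint32_t total = static_cast<uint32_t>(ev.size() * 4);
  // event data size: everything after the event header
  ev[3] = total - static_cast<uint32_t>(sizeof(EventHeader));
  // all-bank size: everything after the event header and the bank header
  ev[4] = total - static_cast<uint32_t>(sizeof(EventHeader) + sizeof(BankHeader));
  return ev;
}
```

Calling `make_event(1, 0, {0x46454230, 0x46454231}, 3, 0xAFFEAFFE)` reproduces the 18-word event above. Note that this packs banks back-to-back like the listing; MIDAS's own bk_close adds `sizeof(BANK32) + ALIGN8(data_size)` per bank, so a 12-byte payload would occupy 16 bytes there, and this layout may not match what the MIDAS bank iterator expects.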

And we do something like:

while (true) {

// obtain buffer space

status = rb_get_wp(rbh, (void **)&pdata, 10);

fpga_pointer = fpga.read_last_data_add();

wlen = fpga_pointer - last_fpga_pointer; // in 32-bit words

copy_n(&dma_buf[last_fpga_pointer], wlen, pdata);

rb_status = rb_increment_wp(rbh, wlen * 4); // in bytes

last_fpga_pointer = fpga_pointer;

}

Leaving aside the case where dma_buf wraps around, this works fine at a low data rate. But if we increase the rate, fpga_pointer advances very quickly and wlen gets quite big; in fact it becomes bigger than max_event_size, which is checked in rb_increment_wp, leading to an error.

The problem is that the individual events are not actually too big; we simply have multiple events in the buffer, which MIDAS reads in one step. So we think that in this case rb_increment_wp is actually comparing the wrong thing. Increasing max_event_size does not help either.
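The wrap-around case set aside here can be handled with modular arithmetic on word indices. A minimal sketch, assuming `fpga_pointer` and `last_fpga_pointer` are word indices into a circular `dma_buf` of known length; `words_written` and `copy_from_ring` are hypothetical helpers, not part of MIDAS.

```cpp
#include <cstdint>

// Words written by the FPGA since the reader's last position, in a circular
// DMA buffer of `buf_words` 32-bit words. Assumes both indices lie in
// [0, buf_words) and that the FPGA never gets a full buffer ahead
// (equal indices are treated as "nothing new").
uint32_t words_written(uint32_t last, uint32_t current, uint32_t buf_words) {
  return (current + buf_words - last) % buf_words;
}

// Copy `n` words starting at index `start` from the circular buffer into
// `dst`, wrapping at the end. Element-wise, because the source is volatile.
void copy_from_ring(const volatile uint32_t* ring, uint32_t buf_words,
                    uint32_t start, uint32_t n, uint32_t* dst) {
  for (uint32_t i = 0; i < n; ++i) {
    dst[i] = ring[(start + i) % buf_words];
  }
}
```

The modulo keeps the count positive across the wrap, and the per-element copy avoids memcpy on the volatile buffer, in the same spirit as the copy_n call in the loop.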

Remark: dma_buf is volatile so memcpy is not possible here.
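Since rb_increment_wp checks each increment against max_event_size, one possible workaround (an assumption, not a confirmed fix) is to cut the DMA chunk at event boundaries and commit one event, or a group of events below the limit, at a time. The sketch reads each event's size from the data_size word (index 3) of its header, as in the listing; `event_sizes` is a hypothetical helper.

```cpp
#include <cstdint>
#include <vector>

// Split a contiguous chunk of `nwords` DMA words into complete events.
// Each event is a 4-word header whose word 3 holds the payload size in bytes,
// followed by that payload. Returns the total size in bytes of each complete
// event; a trailing, partially written event is left for the next pass.
std::vector<uint32_t> event_sizes(const volatile uint32_t* words, uint32_t nwords) {
  std::vector<uint32_t> sizes;
  uint32_t pos = 0;
  while (nwords - pos >= 4) {                     // a full header is available
    const uint32_t len = 4 + words[pos + 3] / 4;  // header + payload, in words
    if (len > nwords - pos) {
      break;                                      // payload not fully written yet
    }
    sizes.push_back(len * 4);
    pos += len;
  }
  return sizes;
}
```

Each returned size could then drive one rb_get_wp / copy / rb_increment_wp round, so that no single increment exceeds max_event_size.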

Cheers,

Marius |

|

1828

|

13 Feb 2020 |

Stefan Ritt | Bug Report | Compiling Midas in OS 10.15 Catalina | Now you are stuck with OpenSSL, which is optional for mhttpd. If you only use mhttpd locally, you may not need SSL support. In that case you can just do

[midas/build] $ cmake -D NO_SSL=1 ..

to disable it. If you do need SSL, maybe you can try to install openssl11 via MacPorts.

Stefan |

|

1827

|

12 Feb 2020 |

Berta Beltran | Bug Report | Compiling Midas in OS 10.15 Catalina | > Another thought: Can you delete the midas build directory and run cmake again? Like

>

> $ cd midas/build

> $ rm -rf *

> $ cmake ..

> $ make VERBOSE=1

>

> If you have the old build cache and upgraded your OS in the meantime, the cache needs to be rebuilt. The VERBOSE flag tells you the compiler options, and you can see where the compiler looks for the SDK directory.

>

> Cheers,

> Stefan

Hi Stefan,

Thanks again for your reply. Yes! The suggestion of rebuilding the cache was the right one. This is the output of "cmake ..":

Darrens-Mac-mini:~ betacage$ cd packages/midas/build/

Darrens-Mac-mini:build betacage$ rm -rf *

Darrens-Mac-mini:build betacage$ cmake ..

-- MIDAS: cmake version: 3.16.3

-- The C compiler identification is AppleClang 11.0.0.11000033

-- The CXX compiler identification is AppleClang 11.0.0.11000033

-- Check for working C compiler: /Applications/Xcode.app/Contents/Developer/Toolchains/XcodeDefault.xctoolchain/usr/bin/cc

-- Check for working C compiler: /Applications/Xcode.app/Contents/Developer/Toolchains/XcodeDefault.xctoolchain/usr/bin/cc -- works

-- Detecting C compiler ABI info

-- Detecting C compiler ABI info - done

-- Detecting C compile features

-- Detecting C compile features - done

-- Check for working CXX compiler: /Applications/Xcode.app/Contents/Developer/Toolchains/XcodeDefault.xctoolchain/usr/bin/c++

-- Check for working CXX compiler: /Applications/Xcode.app/Contents/Developer/Toolchains/XcodeDefault.xctoolchain/usr/bin/c++ -- works

-- Detecting CXX compiler ABI info

-- Detecting CXX compiler ABI info - done

-- Detecting CXX compile features

-- Detecting CXX compile features - done

-- MIDAS: CMAKE_INSTALL_PREFIX: /Users/betacage/packages/midas

-- MIDAS: Found ROOT version 6.18/04

-- Found ZLIB: /usr/lib/libz.dylib (found version "1.2.11")

-- MIDAS: Found ZLIB version 1.2.11

-- Found OpenSSL: /usr/lib/libcrypto.dylib (found version "1.1.1d")

-- MIDAS: Found OpenSSL version 1.1.1d

-- MIDAS: MySQL not found

-- MIDAS: ODBC not found

-- MIDAS: SQLITE not found

-- MIDAS: nvidia-smi not found

-- Setting default build type to "RelWithDebInfo"

-- Found Git: /usr/bin/git (found version "2.21.1 (Apple Git-122.3)")

-- MIDAS example/experiment: MIDAS in-tree-build

-- MIDAS: Found ZLIB version 1.2.11

-- MIDAS example/experiment: Found ROOT version 6.18/04

-- Configuring done

-- Generating done

-- Build files have been written to: /Users/betacage/packages/midas/build

Unfortunately, when running make VERBOSE=1, the compilation fails with the following error:

[ 39%] Linking CXX executable mhttpd

cd /Users/betacage/packages/midas/build/progs && /Applications/CMake.app/Contents/bin/cmake -E cmake_link_script CMakeFiles/mhttpd.dir/link.txt --verbose=1

/Applications/Xcode.app/Contents/Developer/Toolchains/XcodeDefault.xctoolchain/usr/bin/c++ -O2 -g -DNDEBUG -isysroot /Applications/Xcode.app/Contents/Developer/Platforms/MacOSX.platform/Developer/SDKs/MacOSX10.15.sdk -Wl,-search_paths_first -Wl,-headerpad_max_install_names CMakeFiles/mhttpd.dir/mhttpd.cxx.o CMakeFiles/mhttpd.dir/mongoose6.cxx.o CMakeFiles/mhttpd.dir/mgd.cxx.o CMakeFiles/mhttpd.dir/__/mscb/src/mscb.cxx.o -o mhttpd ../libmidas.a /usr/lib/libssl.dylib /usr/lib/libcrypto.dylib -lz

ld: cannot link directly with dylib/framework, your binary is not an allowed client of /usr/lib/libcrypto.dylib for architecture x86_64

clang: error: linker command failed with exit code 1 (use -v to see invocation)

make[2]: *** [progs/mhttpd] Error 1

make[1]: *** [progs/CMakeFiles/mhttpd.dir/all] Error 2

make: *** [all] Error 2

libcrypto seems to be part of OpenSSL, so I am back to the original problem. I did install OpenSSL via MacPorts.

I have found this thread regarding the problem, but I have to go and pick up my kids from school, so I don't have time to investigate today.

https://stackoverflow.com/questions/58446253/xcode-11-ld-error-your-binary-is-not-an-allowed-client-of-usr-lib-libcrypto-dy

Thanks again for staying with me and for responding so promptly.

Berta |

|

1826

|

12 Feb 2020 |

Stefan Ritt | Bug Report | Compiling Midas in OS 10.15 Catalina | Another thought: Can you delete the midas build directory and run cmake again? Like

$ cd midas/build

$ rm -rf *

$ cmake ..

$ make VERBOSE=1

If you have the old build cache and upgraded your OS in the meantime, the cache needs to be rebuilt. The VERBOSE flag tells you the compiler options, and you can see where the compiler looks for the SDK directory.

Cheers,

Stefan |

|

1824

|

12 Feb 2020 |

Berta Beltran | Bug Report | Compiling Midas in OS 10.15 Catalina | > For your reference, here on my MacOSX 10.14.6 with XCode 11.3.1 the pthread.h file is present in locations listed below.

>

> Did you execute "xcode-select --install" ?

>

>

> ~$ locate pthread.h

> /Applications/Xcode.app/Contents/Developer/Platforms/AppleTVOS.platform/Developer/SDKs/AppleTVOS.sdk/usr/include/pthread/pthread.h

> /Applications/Xcode.app/Contents/Developer/Platforms/AppleTVOS.platform/Developer/SDKs/AppleTVOS.sdk/usr/include/pthread.h

> /Applications/Xcode.app/Contents/Developer/Platforms/AppleTVSimulator.platform/Developer/SDKs/AppleTVSimulator.sdk/usr/include/pthread/pthread.h

> /Applications/Xcode.app/Contents/Developer/Platforms/AppleTVSimulator.platform/Developer/SDKs/AppleTVSimulator.sdk/usr/include/pthread.h

> /Applications/Xcode.app/Contents/Developer/Platforms/MacOSX.platform/Developer/SDKs/MacOSX.sdk/usr/include/pthread/pthread.h

> /Applications/Xcode.app/Contents/Developer/Platforms/MacOSX.platform/Developer/SDKs/MacOSX.sdk/usr/include/pthread.h

> /Applications/Xcode.app/Contents/Developer/Platforms/WatchOS.platform/Developer/SDKs/WatchOS.sdk/usr/include/pthread/pthread.h

> /Applications/Xcode.app/Contents/Developer/Platforms/WatchOS.platform/Developer/SDKs/WatchOS.sdk/usr/include/pthread.h

> /Applications/Xcode.app/Contents/Developer/Platforms/WatchSimulator.platform/Developer/SDKs/WatchSimulator.sdk/usr/include/pthread/pthread.h

> /Applications/Xcode.app/Contents/Developer/Platforms/WatchSimulator.platform/Developer/SDKs/WatchSimulator.sdk/usr/include/pthread.h

> /Applications/Xcode.app/Contents/Developer/Platforms/iPhoneOS.platform/Developer/SDKs/iPhoneOS.sdk/usr/include/pthread/pthread.h

> /Applications/Xcode.app/Contents/Developer/Platforms/iPhoneOS.platform/Developer/SDKs/iPhoneOS.sdk/usr/include/pthread.h

> /Applications/Xcode.app/Contents/Developer/Platforms/iPhoneSimulator.platform/Developer/SDKs/iPhoneSimulator.sdk/usr/include/pthread/pthread.h

> /Applications/Xcode.app/Contents/Developer/Platforms/iPhoneSimulator.platform/Developer/SDKs/iPhoneSimulator.sdk/usr/include/pthread.h

> /Library/Developer/CommandLineTools/SDKs/MacOSX.sdk/usr/include/pthread/pthread.h

> /Library/Developer/CommandLineTools/SDKs/MacOSX.sdk/usr/include/pthread.h

Hi Stefan,

Thanks for looking into this. Yes, I have run "xcode-select --install".

This is my output when I try to locate pthread.h:

Darrens-Mac-mini:~ betacage$ locate pthread.h

/Applications/Xcode.app/Contents/Developer/Platforms/AppleTVOS.platform/Developer/SDKs/AppleTVOS.sdk/usr/include/pthread/pthread.h

/Applications/Xcode.app/Contents/Developer/Platforms/AppleTVOS.platform/Developer/SDKs/AppleTVOS.sdk/usr/include/pthread.h

/Applications/Xcode.app/Contents/Developer/Platforms/AppleTVSimulator.platform/Developer/SDKs/AppleTVSimulator.sdk/usr/include/pthread/pthread.h

/Applications/Xcode.app/Contents/Developer/Platforms/AppleTVSimulator.platform/Developer/SDKs/AppleTVSimulator.sdk/usr/include/pthread.h

/Applications/Xcode.app/Contents/Developer/Platforms/MacOSX.platform/Developer/SDKs/MacOSX.sdk/usr/include/pthread/pthread.h

/Applications/Xcode.app/Contents/Developer/Platforms/MacOSX.platform/Developer/SDKs/MacOSX.sdk/usr/include/pthread.h

/Applications/Xcode.app/Contents/Developer/Platforms/WatchOS.platform/Developer/SDKs/WatchOS.sdk/usr/include/pthread/pthread.h

/Applications/Xcode.app/Contents/Developer/Platforms/WatchOS.platform/Developer/SDKs/WatchOS.sdk/usr/include/pthread.h

/Applications/Xcode.app/Contents/Developer/Platforms/WatchSimulator.platform/Developer/SDKs/WatchSimulator.sdk/usr/include/pthread/pthread.h

/Applications/Xcode.app/Contents/Developer/Platforms/WatchSimulator.platform/Developer/SDKs/WatchSimulator.sdk/usr/include/pthread.h

/Applications/Xcode.app/Contents/Developer/Platforms/iPhoneOS.platform/Developer/SDKs/iPhoneOS.sdk/usr/include/pthread/pthread.h

/Applications/Xcode.app/Contents/Developer/Platforms/iPhoneOS.platform/Developer/SDKs/iPhoneOS.sdk/usr/include/pthread.h

/Applications/Xcode.app/Contents/Developer/Platforms/iPhoneSimulator.platform/Developer/SDKs/iPhoneSimulator.sdk/usr/include/pthread/pthread.h

/Applications/Xcode.app/Contents/Developer/Platforms/iPhoneSimulator.platform/Developer/SDKs/iPhoneSimulator.sdk/usr/include/pthread.h

/Library/Developer/CommandLineTools/SDKs/MacOSX10.14.sdk/usr/include/pthread/pthread.h

/Library/Developer/CommandLineTools/SDKs/MacOSX10.14.sdk/usr/include/pthread.h

/Library/Developer/CommandLineTools/SDKs/MacOSX10.15.sdk/usr/include/pthread/pthread.h

/Library/Developer/CommandLineTools/SDKs/MacOSX10.15.sdk/usr/include/pthread.h

And this is what I see in my SDKs folder:

Darrens-Mac-mini:SDKs betacage$ pwd

/Library/Developer/CommandLineTools/SDKs

Darrens-Mac-mini:SDKs betacage$ ls

MacOSX.sdk MacOSX10.14.sdk MacOSX10.15.sdk

So it looks like on this computer one needs to use the versioned SDK directory, as pthread.h is not in MacOSX.sdk but in MacOSX10.15.sdk.

Thanks

Berta |

|