| ID |

Date |

Author |

Topic |

Subject |

|

1992

|

02 Sep 2020 |

Ruslan Podviianiuk | Forum | Transition status message | > The information you want is in the ODB:

> * "/System/Transition/status" is the overall integer status code.

> * "/System/Transition/error" is the overall error message string.

>

> There is also per-client status information in the ODB:

> * "/System/Transition/Clients/<client_name>/status"

> * "/System/Transition/Clients/<client_name>/error"

Thank you so much, Ben! |

|

1995

|

08 Sep 2020 |

Konstantin Olchanski | Forum | Transition status message | > > The information you want is in the ODB:

> > * "/System/Transition/status" is the overall integer status code.

> > * "/System/Transition/error" is the overall error message string.

> >

> > There is also per-client status information in the ODB:

> > * "/System/Transition/Clients/<client_name>/status"

> > * "/System/Transition/Clients/<client_name>/error"

You can also use web page .../resources/transition.html as an example of how

to read transition (and other) data from ODB into your own web page. example.html

may also be helpful.

K.O. |

|

1996

|

08 Sep 2020 |

Ruslan Podviianiuk | Forum | Transition status message | > > > The information you want is in the ODB:

> > > * "/System/Transition/status" is the overall integer status code.

> > > * "/System/Transition/error" is the overall error message string.

> > >

> > > There is also per-client status information in the ODB:

> > > * "/System/Transition/Clients/<client_name>/status"

> > > * "/System/Transition/Clients/<client_name>/error"

>

> You can also use web page .../resources/transition.html as an example of how

> to read transition (and other) data from ODB into your own web page. example.html

> may also be helpful.

>

> K.O.

Thank you Konstantin!

Ruslan |

|

2937

|

05 Feb 2025 |

Andreas Suter | Forum | Transition from mana -> manalyzer | Hi,

we are planning to migrate from mana to manalyzer. I started to have a look into it and realized that I have some loose ends.

Is there a clear migration docu somewhere?

Currently I understand it the following way (which might be wrong):

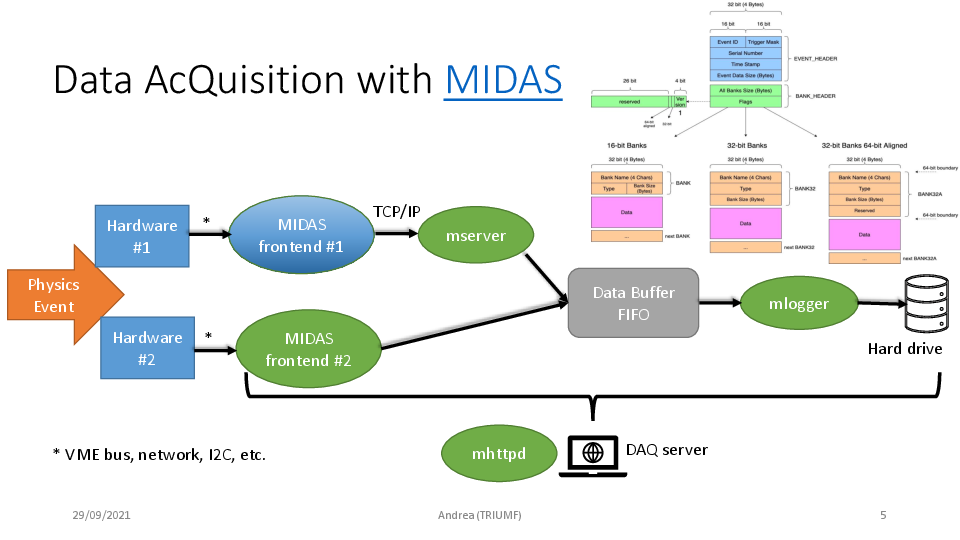

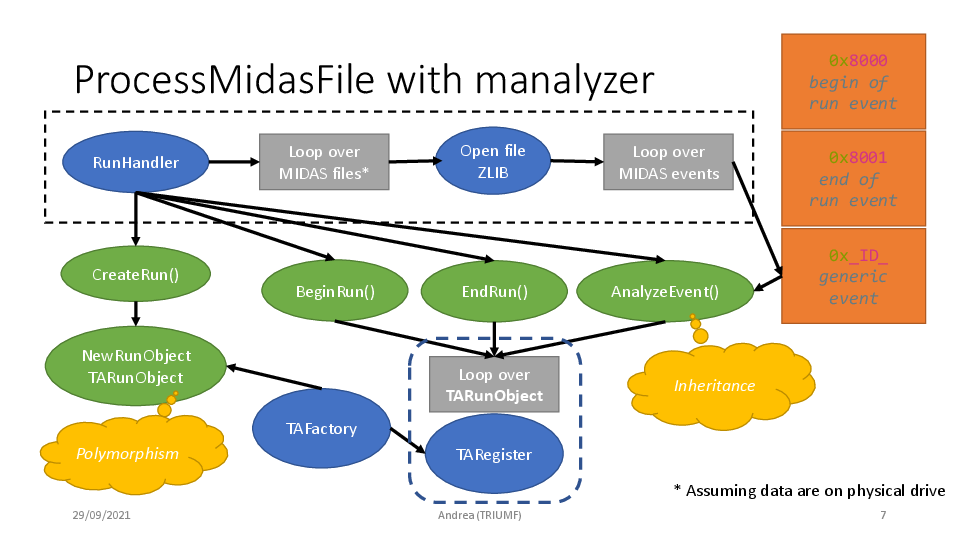

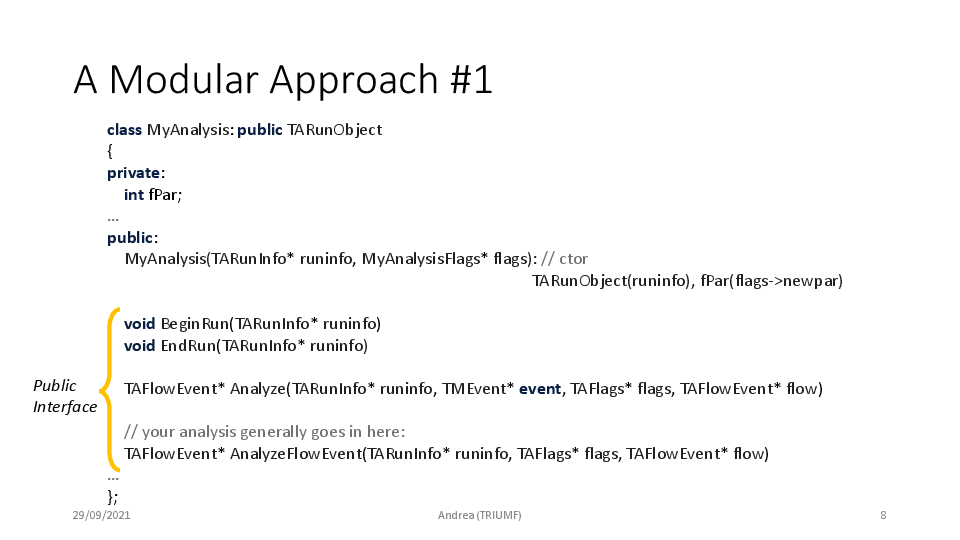

The class TARunObject is used to write analyzer modules which are registered by TAFactory. I hope this is right?

However, in mana there is an analyzer implemented by the user which binds the modules and has additional routines:

analyzer_init(), analyzer_exit(), analyzer_loop()

ana_begin_of_run(), ana_end_of_run(), ana_pause_run(), ana_resume_run()

which we are using.

This part I somehow miss in manalyzer, most probably due to lack of understanding, and missing documentation.

Could somebody please give me a boost? |

|

2938

|

05 Feb 2025 |

Andrea Capra | Forum | Transition from mana -> manalyzer | Hi Andreas,

please find in elog:2938/1 a short introduction that I wrote sometime ago.

I'm glad to offer additional support, if needed.

> Hi,

>

> we are planning to migrate from mana to manalyzer. I started to have a look into it and realized that I have some loose ends.

> Is there a clear migration docu somewhere?

>

> Currently I understand it the following way (which might be wrong):

> The class TARunObject is used to write analyzer modules which are registered by TAFactory. I hope this is right?

>

> However, in mana there is an analyzer implemented by the user which binds the modules and has additional routines:

> analyzer_init(), analyzer_exit(), analyzer_loop()

> ana_begin_of_run(), ana_end_of_run(), ana_pause_run(), ana_resume_run()

> which we are using.

>

> This part I somehow miss in manalyzer, most probably due to lack of understanding, and missing documentation.

>

> Could somebody please give me a boost? |

| Attachment 1: dsadc_analyzer_midas_elog.pdf

|

|

|

2939

|

06 Feb 2025 |

Konstantin Olchanski | Forum | Transition from mana -> manalyzer | > Could somebody please give me a boost?

no need to shout into the void, it is pretty easy to identify the author of manalyzer and ask me directly.

> we are planning to migrate from mana to manalyzer. I started to have a look into it and realized that I have some loose ends.

> Is there a clear migration docu somewhere?

README.md and examples in the manalyzer git repository.

If something is missing, or unclear, please ask.

> Currently I understand it the following way (which might be wrong):

> The class TARunObject is used to write analyzer modules which are registered by TAFactory. I hope this is right?

Please read the README file. It explains what is going on there.

Design of manalyzer had 2 main goals:

a) lifetime of all c++ objects (and ROOT objects) is well defined (to fix a design defect in rootana)

b) event flow and data flow are documentable (problem in mfe.c frontends, etc)

> However, in mana there is an analyzer implemented by the user which binds the modules and has additional routines:

> analyzer_init(), analyzer_exit(), analyzer_loop()

> ana_begin_of_run(), ana_end_of_run(), ana_pause_run(), ana_resume_run()

> which we are using.

I have never used the mana analyzer, I wrote the c++ rootana analyzer very early on (first commit in 2006).

But the basic steps should all be there for you:

- initialization (create histograms, open files) can be done in the module constructor or in BeginRun()

- finalization (fit histograms, close files) should be done in EndRun()

- event processing (obviously) in Analyze()

- pause run, resume run and switch to next subrun file have corresponding methods

- all the "flow" and multithreading stuff you can ignore to first order.
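
As a rough illustration of the steps listed above, here is a self-contained sketch of the order in which a module's methods would be called during one run. It is not taken from the manalyzer sources: `RunInfo`, `Event`, `ExampleModule` and `run_once()` are stand-in names invented for this sketch, and the real `TARunObject` methods take manalyzer's own argument types. The mana routine names in the comments are only an assumed correspondence.

```cpp
#include <string>
#include <vector>

// Stand-in types for illustration only; the real framework has its own
// run-info and event classes with richer contents.
struct RunInfo { int run_number = 0; };
struct Event   { int serial = 0; };

// Hypothetical module using the method names mentioned in the list above.
class ExampleModule {
public:
   std::vector<std::string> log; // records the order of the calls

   explicit ExampleModule(const RunInfo&) { log.push_back("ctor"); } // create histograms, open files
   void BeginRun(const RunInfo&)  { log.push_back("BeginRun"); }   // roughly ana_begin_of_run()
   void PauseRun(const RunInfo&)  { log.push_back("PauseRun"); }   // roughly ana_pause_run()
   void ResumeRun(const RunInfo&) { log.push_back("ResumeRun"); }  // roughly ana_resume_run()
   void Analyze(const RunInfo&, const Event&) { log.push_back("Analyze"); } // per-event work
   void EndRun(const RunInfo&)    { log.push_back("EndRun"); }     // roughly ana_end_of_run()
};

// Drives one run the way a framework would: construct, begin, events, end.
std::vector<std::string> run_once(int n_events)
{
   RunInfo ri;
   ri.run_number = 1;
   ExampleModule m(ri);
   m.BeginRun(ri);
   for (int i = 0; i < n_events; i++) {
      Event e;
      e.serial = i;
      m.Analyze(ri, e);
   }
   m.EndRun(ri);
   return m.log;
}
```

Because the module object is created per run and discarded after `EndRun()`, anything allocated in the constructor or in `BeginRun()` has a well-defined lifetime, which is the first design goal mentioned above.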

To start the migration, I recommend you take manalyzer_example_root.cxx and start stuffing it with your code.

If you run into any problems, I am happy to help with them. Ask here or contact me directly.

> This part I somehow miss in manalyzer, most probably due to lack of understanding, and missing documentation.

True, I wrote a migration guide for the frontend mfe.c to c++ tmfe, because we do this migration

quite often. But I never wrote a migration guide from the mana.c analyzer, because we never did such a

migration. Most experiments at TRIUMF are post-2006 and use rootana in its different incarnations.

P.S. I designed the C++ TMFe frontend after manalyzer and I think it came out quite better, I especially

value the design input from Stefan, Thomas, Pierre, Joseph and Ben.

P.P.S. Feel free to ignore all this manalyzer business and write your own analyzer based

on the midasio library:

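    #include "midasio.h"

    int main()
    {
       TMReaderInterface* f = TMNewReader("file.mid.gz");
       while (1) {
          TMEvent* e = TMReadEvent(f);
          if (!e)
             break; // no more events (end of file or read error)
          dwim(e); // your own event handler, to be supplied by you
          delete e;
       }
       delete f;
       return 0;
    }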

For online processing I use the TMFe class, it has enough bits to be a frontend and an analyzer,

or you can use the older TMidasOnline from rootana.

Access to ODB is via the mvodb library, which is new in midas, but has been part of rootana

and my frontend toolkit since at least 2011 or earlier, inspired by Peter Green's even

older "myload" ODB access library.

K.O. |

|

2940

|

06 Feb 2025 |

Konstantin Olchanski | Forum | Transition from mana -> manalyzer | > > Is there a clear migration docu somewhere?

I can also give you links to the alpha-g analyzer (very complex) and the DarkLight trigger TDC analyzer (very simple),

there are also analyzer examples of in-between complexity.

K.O. |

|

939

|

20 Nov 2013 |

Konstantin Olchanski | Bug Report | Too many bm_flush_cache() in mfe.c | I was looking at something in the mserver and noticed that for remote frontends, for every periodic event,

there are about 3 RPC calls to bm_flush_cache().

Sure enough, in mfe.c::send_event(), for every event sent, there are 2 calls to bm_flush_cache() (once for

the buffer we used, second for all buffers). Then, for a good measure, the mfe idle loop calls

bm_flush_cache() for all buffers about once per second (even if no events were generated).

So what is going on here? To allow good performance when processing many small events,

the MIDAS event buffer code (bm_send_event()) buffers small events internally, and only after this internal

buffer is full, the accumulated events are flushed into the shared memory event buffer,

where they become visible to the mlogger, mdump and other consumers.

Because of this internal buffering, infrequent small size periodic events can become

stuck for quite a long time, confusing the user: "my frontend is sending events, how come I do not

see them in mdump?"

To avoid this, mfe.c manually flushes these internal event buffers by calling bm_flush_cache().

And I think that works just fine for frontends directly connected to the shared memory, one call to

bm_flush_cache() should be sufficient.

But for remote frontends connected through the mserver, it turns out there is a race condition between

sending the event data on one tcp connection and sending the bm_flush_cache() rpc request on another

tcp connection.

I see that the mserver always reads the rpc connection before the event connection, so bm_flush_cache()

is done *before* the event is written into the buffer by bm_send_event(). So the newly

sent event is stuck in the buffer until bm_flush_cache() for the *next* event shows up:

mfe.c: send_event1 -> flush -> ... wait until next event ... -> send_event2 -> flush

mserver: flush -> receive_event1 -> ... wait ... -> flush -> receive_event2 -> ... wait ...

mdump: ... nothing ... -> ... nothing ... -> event1 -> ... nothing ...

Enter the 2nd call to bm_flush_cache in mfe.c (flush all buffers) - now because mserver seems to be

alternating between reading the rpc connection and the event connection, the race condition looks like

this:

mfe.c: send_event -> flush -> flush

mserver: flush -> receive_event -> flush

mdump: ... -> event -> ...

So in this configuration, everything works correctly, the data is not stuck anywhere - but by accident, and

at the price of an extra rpc call.
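
The two orderings above can be replayed with a toy model. This is only a sketch under simplifying assumptions, not MIDAS code: "recv" stands for the mserver writing a received event into the internal cache, "flush" stands for a bm_flush_cache() call, and the function name `visible_after_each_step` is made up for this illustration.

```cpp
#include <string>
#include <vector>

// Toy model of the mserver side: "recv" puts one event into the internal
// cache, "flush" moves everything cached into the shared buffer where
// mdump can see it. Returns the number of visible events after each step.
std::vector<int> visible_after_each_step(const std::vector<std::string>& steps)
{
   int cached = 0;
   int visible = 0;
   std::vector<int> out;
   for (const auto& s : steps) {
      if (s == "recv") {
         cached++;
      } else if (s == "flush") {
         visible += cached;
         cached = 0;
      }
      out.push_back(visible);
   }
   return out;
}
```

With one flush per event, the sequence `flush, recv, flush, recv` leaves the first event invisible until the flush belonging to the second event. With two flushes, `flush, recv, flush` makes the event visible right after it is received.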

But what about the periodic 1/second bm_flush_cache() on all buffers? I think it does not quite work

either because the race condition is still there: we send an event, and the first flush may race it and only

the 2nd flush gets the job done, so the delay between sending the event and seeing it in mdump would be

around 1-2 seconds. (no more than 2 seconds, I think). Since users expect their events to show up "right

away", a 2 second delay is probably not very good.

Because periodic events are usually not high rate, the current situation (4 network transactions to send 1

event - 1x send event, 3x flush buffer) is probably acceptable. But this definitely limits the

maximum event rate to about one event per 3x (2x?) the mserver rpc latency - without the rpc calls to bm_flush_cache() there

would be no limit - the events themselves are sent through a pipelined tcp connection without

handshaking.

One solution to this would be to implement periodic bm_flush_cache() in the mserver, making all calls to

bm_flush_cache() in mfe.c unnecessary (unless it's a direct connection to shared memory).

Another solution could be to send events with a special flag telling the mserver to "flush the buffer right

away".

P.S. Look ma!!! A race condition with no threads!!!

K.O. |

|

940

|

21 Nov 2013 |

Stefan Ritt | Bug Report | Too many bm_flush_cache() in mfe.c | > And I think that works just fine for frontends directly connected to the shared memory, one call to

> bm_flush_cache() should be sufficient.

That's correct. What you want is once per second or so for polled events, and once per periodic event (which anyhow will typically come only every 10 seconds or so). If there are 3 calls

per event, this is certainly too much.

> But for remote frontends connected through the mserver, it turns out there is a race condition between

> sending the event data on one tcp connection and sending the bm_flush_cache() rpc request on another

> tcp connection.

>

> ...

>

> One solution to this would be to implement periodic bm_flush_cache() in the mserver, making all calls to

> bm_flush_cache() in mfe.c unnecessary (unless it's a direct connection to shared memory).

>

> Another solution could be to send events with a special flag telling the mserver to "flush the buffer right

> away".

That's a very good and useful observation. I never really thought about that.

Looking at your proposed solutions, I prefer the second one. mserver is just an interface for RPC calls, it should not do anything "by itself". This was a strategic decision at the beginning.

So sending a flag to punch through the cache on mserver seems to me to have fewer side effects. It will just break binary compatibility :-)

/Stefan |

|

624

|

01 Sep 2009 |

Jimmy Ngai | Forum | Timeout during run transition | Dear All,

I'm using SL5 and MIDAS rev 4528. Occasionally, when I stop a run in odbedit,

a timeout would occur:

[midas.c:9496:rpc_client_call,ERROR] rpc timeout after 121 sec, routine

= "rc_transition", host = "computerB", connection closed

Error: Unknown error 504 from client 'Frontend' on host computerB

This error seems to be random without any reason or pattern. After this error

occurs, I cannot start or stop any run. Sometimes restarting MIDAS gets

the system working again, but sometimes not.

Another transition timeout occurs after I change any ODB value using the web

interface:

[midas.c:8291:rpc_client_connect,ERROR] timeout on receive remote computer

info:

[midas.c:3642:cm_transition,ERROR] cannot connect to client "Frontend" on host

computerB, port 36255, status 503

Error: Cannot connect to client 'Frontend'

This error is reproducible: start run -> change ODB value within webpage ->

stop run -> timeout!

Any idea?

Thanks,

Jimmy |

|

625

|

03 Sep 2009 |

Stefan Ritt | Forum | Timeout during run transition | > Dear All,

>

> I'm using SL5 and MIDAS rev 4528. Occasionally, when I stop a run in odbedit,

> a timeout would occur:

> [midas.c:9496:rpc_client_call,ERROR] rpc timeout after 121 sec, routine

> = "rc_transition", host = "computerB", connection closed

> Error: Unknown error 504 from client 'Frontend' on host computerB

>

> This error seems to be random without any reason or pattern. After this error

> occurs, I cannot start or stop any run. Sometimes restarting MIDAS gets

> the system working again, but sometimes not.

>

> Another transition timeout occurs after I change any ODB value using the web

> interface:

> [midas.c:8291:rpc_client_connect,ERROR] timeout on receive remote computer

> info:

> [midas.c:3642:cm_transition,ERROR] cannot connect to client "Frontend" on host

> computerB, port 36255, status 503

> Error: Cannot connect to client 'Frontend'

>

> This error is reproducible: start run -> change ODB value within webpage ->

> stop run -> timeout!

A few hints for debugging:

- do the run stop via odbedit and the "-v" flag, like

[local:Online:R]/> stop -v

then you see which computer is contacted when.

- Then put some debugging code into your front-end end_of_run() routine at the

beginning and the end of that routine, so you see when it's executed and how long

this takes. If you do lots of things in your EOR routine, this could maybe cause a

timeout.

- Then make sure that cm_yield() in mfe.c is called periodically by putting some

debugging code there. This function checks for any network message, such as the

stop command from odbedit. If your trigger event readout has an endless loop for

example, cm_yield() will never be called and any transition will timeout.

- Make sure that not 100% CPU is used on your frontend. Some OSes have problems

handling incoming network connections if the CPU is completely used or if

input/output operations are too heavy.
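
To illustrate the cm_yield() point, here is a minimal sketch of a readout loop that cannot starve the network handling. It is not the actual mfe.c loop: `readout_loop` and its callback parameters are names invented for this example, and the `yield` callback merely stands in for the place where cm_yield() would be called.

```cpp
#include <functional>

// Reads at most max_events_per_pass events, then calls yield() so that
// network messages (such as a run stop) get a chance to be handled.
// yield() returns false when the loop should end.
// Returns how many times yield() was called.
int readout_loop(const std::function<bool()>& event_ready,
                 const std::function<void()>& read_event,
                 const std::function<bool()>& yield,
                 int max_events_per_pass)
{
   int yields = 0;
   for (;;) {
      int n = 0;
      // Bounded inner loop: even if events are always ready we fall out
      // after max_events_per_pass and service the network.
      while (n < max_events_per_pass && event_ready()) {
         read_event();
         n++;
      }
      yields++;
      if (!yield())
         break;
   }
   return yields;
}
```

The important property is the bound on the inner loop: an unbounded `while (event_ready())` with a source that is always ready would never reach the yield call, which is exactly the endless-loop situation described above.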

- Stefan |

|

2144

|

09 Apr 2021 |

Lars Martin | Suggestion | Time zone selection for web page | The new history as well as the clock in the web page header show the local time

of the user's computer running the browser.

Would it be possible to make it either always use the time zone of the Midas

server, or make it selectable from the config page?

It's not ideal trying to relate error messages from the midas.log to history

plots if the time stamps don't match. |

|

2152

|

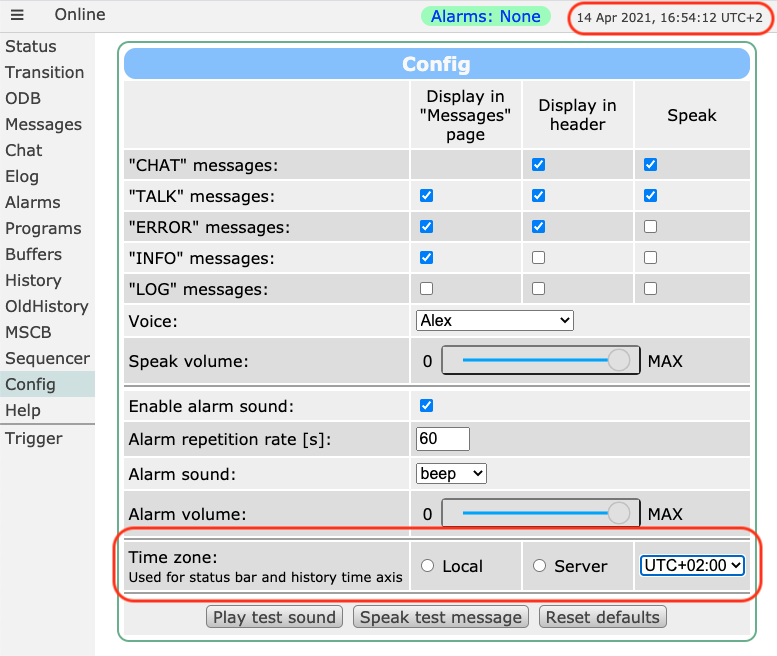

14 Apr 2021 |

Stefan Ritt | Suggestion | Time zone selection for web page | > The new history as well as the clock in the web page header show the local time

> of the user's computer running the browser.

> Would it be possible to make it either always use the time zone of the Midas

> server, or make it selectable from the config page?

> It's not ideal trying to relate error messages from the midas.log to history

> plots if the time stamps don't match.

I implemented a new row in the config page to select the time zone.

"Local": Time zone where the browser runs

"Server": Time zone where the midas server runs (you have to update mhttpd for that)

"UTC+X": Any other time zone

The setting affects both the status header and the history display.

I spent quite some time with "named" time zones like "PST" "EST" "CEST", but the

support for that is not that great in JavaScript, so I decided to go with simple

UTC+X. Hope that's ok.
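
For illustration only, the fixed-offset idea can be sketched as follows. This is not the mhttpd implementation (which lives in the JavaScript of the web pages); the function name `format_utc_offset` and the output format are assumptions made for this example.

```cpp
#include <cstdio>
#include <ctime>
#include <string>

// Formats a Unix time stamp as wall-clock time in a fixed "UTC+X" zone.
// The offset is simply added to the time stamp and the result is broken
// down as UTC, so no time zone database or named zone is needed.
std::string format_utc_offset(std::time_t unix_time, int offset_hours)
{
   const std::time_t shifted = unix_time + static_cast<std::time_t>(offset_hours) * 3600;
   const std::tm* utc = std::gmtime(&shifted); // not thread-safe; fine for a sketch
   if (!utc)
      return "";
   char stamp[32];
   std::strftime(stamp, sizeof(stamp), "%Y-%m-%d %H:%M:%S", utc);
   char out[64];
   std::snprintf(out, sizeof(out), "%s UTC%+d", stamp, offset_hours);
   return out;
}
```

A fixed offset like this knows nothing about daylight saving time, which is the trade-off against named zones such as "CEST".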

Please give it a try and let me know if it's working for you.

Best,

Stefan |

| Attachment 1: Screenshot_2021-04-14_at_16.54.12_.png

|

|

|

2157

|

29 Apr 2021 |

Pierre-Andre Amaudruz | Suggestion | Time zone selection for web page | > > The new history as well as the clock in the web page header show the local time

> > of the user's computer running the browser.

> > Would it be possible to make it either always use the time zone of the Midas

> > server, or make it selectable from the config page?

> > It's not ideal trying to relate error messages from the midas.log to history

> > plots if the time stamps don't match.

>

> I implemented a new row in the config page to select the time zone.

>

> "Local": Time zone where the browser runs

> "Server": Time zone where the midas server runs (you have to update mhttpd for that)

> "UTC+X": Any other time zone

>

> The setting affects both the status header and the history display.

>

> I spent quite some time with "named" time zones like "PST" "EST" "CEST", but the

> support for that is not that great in JavaScript, so I decided to go with simple

> UTC+X. Hope that's ok.

>

> Please give it a try and let me know if it's working for you.

>

> Best,

> Stefan

Hi Stefan,

This is great, the UTC+x is perfect, thank you.

PAA |

|

2132

|

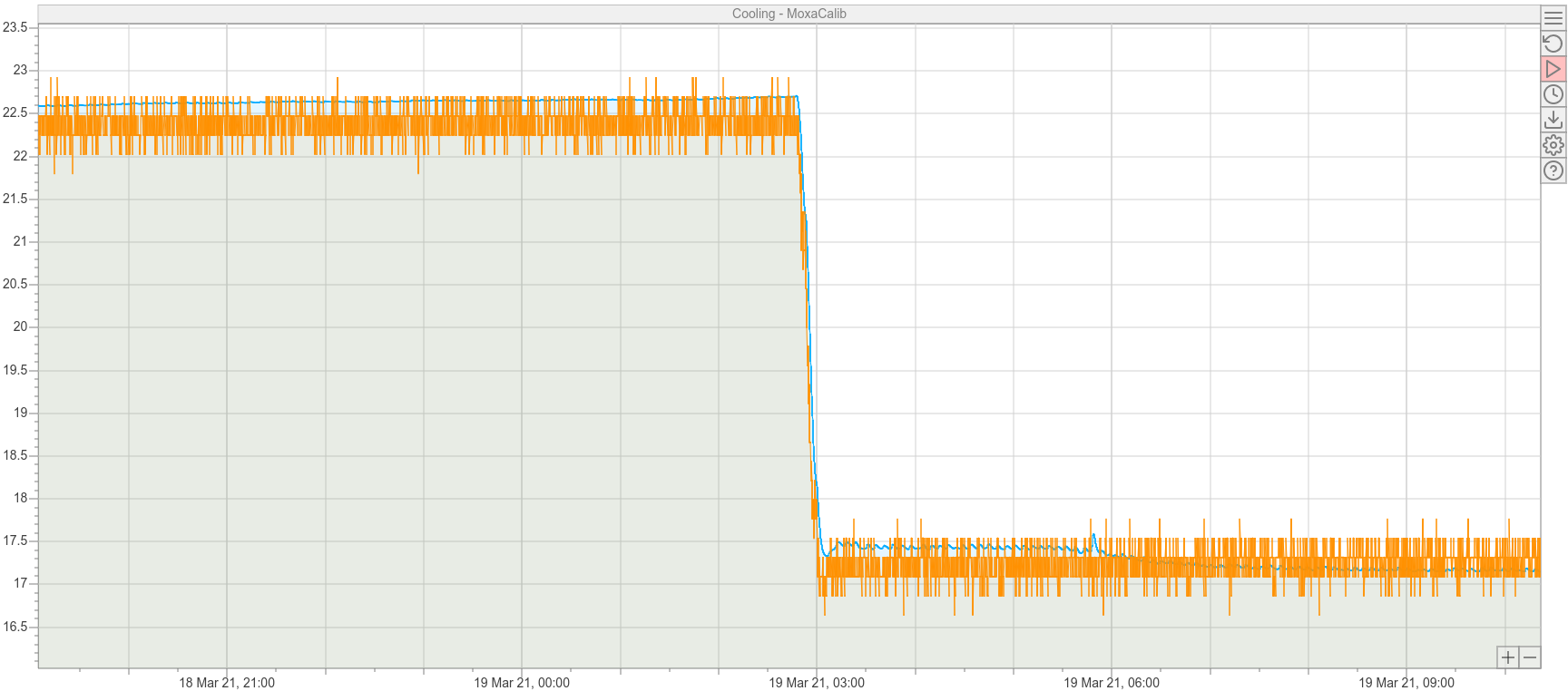

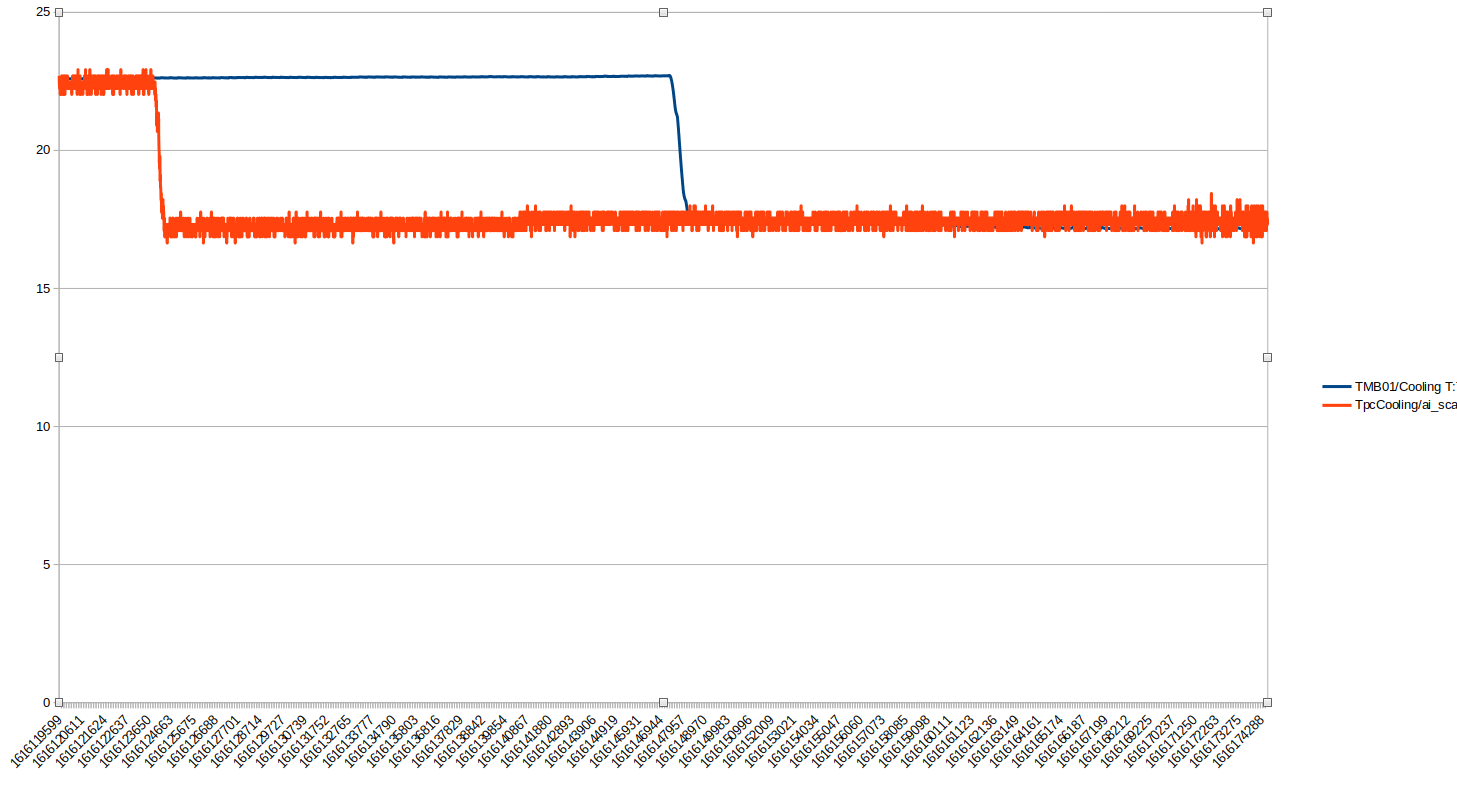

23 Mar 2021 |

Lars Martin | Bug Report | Time shift in history CSV export | Version: release/midas-2020-12

I'm exporting the history data shown in elog:2132/1 to CSV, but when I look at the

CSV data, the step no longer occurs at the same time in both data sets (elog:2132/2) |

| Attachment 1: Cooling-MoxaCalib-20212118-190450-20212119-102151.png

|

|

| Attachment 2: Screenshot_from_2021-03-23_12-29-21.png

|

|

|

2133

|

23 Mar 2021 |

Lars Martin | Bug Report | Time shift in history CSV export | History is from two separate equipments/frontends, but both have "Log history" set to 1. |

|

2134

|

23 Mar 2021 |

Lars Martin | Bug Report | Time shift in history CSV export | Tried with export of two different time ranges, and the shift appears to remain the same,

about 4040 rows. |

|

2135

|

24 Mar 2021 |

Stefan Ritt | Bug Report | Time shift in history CSV export | I confirm there is a problem. If variables are from the same equipment, they have the same

time stamps, like

t1 v1(t1) v2(t1)

t2 v1(t2) v2(t2)

t3 v1(t3) v2(t3)

When they are from different equipments, however, they have different time stamps

t1 v1(t1)

t2 v2(t2)

t3 v1(t3)

t4 v2(t4)

The bug in the current code is that all variables use the time stamps of the first variable,

which is wrong in the case of different equipments, like

t1 v1(t1) v2(*t2*)

t3 v1(t3) v2(*t4*)

So I can change the code, but I'm not sure what would be the best way. The easiest would be to

export one array per variable, like

t1 v1(t1)

t2 v1(t2)

...

t3 v2(t3)

t4 v2(t4)

...

Putting that into a single array would leave gaps, like

t1 v1(t1) [gap]

t2 [gap] v2(t2)

t3 v1(t3) [gap]

t4 [gap] v2(t4)

plus this is programmatically more complicated, since I have to merge two arrays. So which

export format would you prefer?
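
To make the "single array with gaps" variant concrete, here is a minimal sketch of the merge. It is not the mhttpd export code; the type alias `Series`, the function `merge_with_gaps` and the CSV layout "time,v1,v2" with empty fields for gaps are assumptions made for this illustration.

```cpp
#include <map>
#include <string>
#include <utility>
#include <vector>

using Series = std::vector<std::pair<long, double>>; // (time stamp, value)

// Merges two series with independent time stamps into rows "t,v1,v2".
// A variable that has no sample at a given time stamp gets an empty field.
std::vector<std::string> merge_with_gaps(const Series& a, const Series& b)
{
   // std::map keeps the rows sorted by time stamp.
   std::map<long, std::pair<std::string, std::string>> rows;
   for (const auto& p : a)
      rows[p.first].first = std::to_string(p.second);
   for (const auto& p : b)
      rows[p.first].second = std::to_string(p.second);

   std::vector<std::string> out;
   for (const auto& r : rows)
      out.push_back(std::to_string(r.first) + "," + r.second.first + "," + r.second.second);
   return out;
}
```

Each variable keeps its own time stamps here, so the shift caused by reusing the time stamps of the first variable cannot occur; the price is the gaps in every row where only one equipment reported.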

Stefan |

|

2136

|

24 Mar 2021 |

Lars Martin | Bug Report | Time shift in history CSV export | I think from my perspective the separate files are fine. I personally don't really like the format

with the gaps, so don't see an advantage in putting in the extra work.

I'm surprised the shift is this big, though: it was more than a whole hour in my case. Is it the

time difference between when the frontends were started? |

|

2154

|

14 Apr 2021 |

Stefan Ritt | Bug Report | Time shift in history CSV export | I finally found some time to fix this issue in the latest commit. Please update and check if it's

working for you.

Stefan |

|