06 Aug 2019, Thomas Lindner, Info, Precedence of equipment/common structure

Hi Stefan,

This change does not sound like a good idea to me. I think it will cause just as much confusion as before, probably more, since you are changing established behaviour.

MIDAS frontends usually have a Settings directory in the ODB where details of the frontend behaviour are set. The Settings directory might be initialized from strings in the frontend code, but after initialization the Settings in the ODB take precedence and define how the frontend will behave. Indeed, most of my custom webpages are designed to control my frontend programs through their Settings ODB tree.

So you have created a situation where Frontend/Settings in the ODB takes precedence and is the main place for changing frontend behaviour, while Frontend/Common in the ODB is essentially meaningless and will be overwritten the next time the frontend restarts. That seems likely to confuse people.

If you really want to make this change, I suggest that you either delete the Frontend/Common directory entirely or make it read-only, so that people aren't fooled into changing it.

Thomas

> Today I fixed a long-standing, annoying problem. We have in each front-end an equipment structure which defines the event ID, event type, readout frequency etc. This is mapped to the ODB subtree
>
> `/Equipment/<name>/Common`
>
> In the past, the ODB setting took precedence over the frontend structure. We defined this about 25 years ago and I forgot what the exact reason was. However, it causes many people (including myself) to fall into this trap: you change something in the front-end EQUIPMENT structure, you restart the front-end, but the new setting does not take effect since the (old) ODB value takes precedence. After some debugging you find out that you have to change both the EQUIPMENT structure (which defines the default value for a fresh ODB) and the ODB value itself.
>
> So I changed the current develop tree so that the front-end structure takes precedence. You still have a hot-link, so if you want to change anything while the front-end is running (like the readout period), you can do that in the ODB and it takes effect immediately. But when you start the front-end the next time, the value from the EQUIPMENT structure is taken again. So please be aware of this new feature.
>
> Happy BC day,
>
> Stefan

06 Aug 2019, Stefan Ritt, Info, Precedence of equipment/common structure

Hi Thomas,

The change only affects `Equipment/<name>/Common`, not `Equipment/<name>/Settings`.

The Common subtree is still hot-linked into the frontend, so values can still be changed while it is running. This mainly concerns the readout period of periodic events.

Sometimes you want to change this quickly without restarting the frontend. Changing the other settings is rather dangerous: if you change the ID of an event on the fly, you won't be able to analyze your data. So making this subtree read-only in the ODB might be a good idea (you still need it in the ODB for the status page), except for the values you want to change (like the readout period).
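The "read-only except for the period" idea could look roughly like the sketch below. It is plain C, not the MIDAS hot-link API: the struct, the handler name, and the rule that a non-positive period is rejected are hypothetical choices made for illustration.

```c
#include <stdio.h>

/* Hypothetical subset of the values in /Equipment/<name>/Common. */
typedef struct {
  int event_id;  /* must stay fixed while data are being taken */
  int period_ms; /* readout period of periodic events, safe to change */
} common_settings;

/*
 * Hypothetical hot-link handler: 'odb' holds the values just edited in the
 * ODB, 'active' holds the values the frontend is currently using. A positive
 * period is taken over; every other edit is reverted in 'odb'.
 * Returns the number of reverted fields.
 */
int on_common_changed(common_settings *odb, common_settings *active)
{
  int reverted = 0;

  if (odb->period_ms > 0) {
    active->period_ms = odb->period_ms;  /* accept the new readout period */
  } else {
    odb->period_ms = active->period_ms;  /* reject a non-positive period */
    reverted++;
  }

  if (odb->event_id != active->event_id) {
    fprintf(stderr, "event ID is read-only, restoring %d\n", active->event_id);
    odb->event_id = active->event_id;    /* put the original ID back */
    reverted++;
  }
  return reverted;
}
```

Reverting in the ODB copy (rather than silently ignoring the edit) keeps the status page consistent with what the frontend actually uses.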

Let's see what other people have to say.

Stefan


06 Aug 2019, Stefan Ritt, Info, Precedence of equipment/common structure

After some internal discussion, I decided to undo my previous change, in order not to break existing habits. Instead, I created a new function, `set_odb_equipment_common(equipment, name)`, which should be called from frontend_init() and explicitly copies all data from the equipment structure in the front-end into the ODB.
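To make the difference between the default behaviour and the explicit copy concrete, here is a minimal sketch in plain C that models the ODB with an in-memory struct. The real set_odb_equipment_common() works on the MIDAS EQUIPMENT structure and the ODB; the types and function names below are hypothetical stand-ins, not MIDAS API.

```c
#include <stdbool.h>

/* Hypothetical mirror of a few Common entries (illustration only). */
typedef struct {
  int  event_id;
  int  period_ms;
  char buffer[32];
} common_block;

/* Hypothetical in-memory stand-in for /Equipment/<name>/Common in the ODB. */
typedef struct {
  bool         exists;
  common_block data;
} odb_common;

/* Long-standing default as described in this thread: the compiled-in values
 * only seed a fresh ODB; if the record already exists, the ODB wins and its
 * values are copied back into the frontend structure. */
void init_common_odb_wins(odb_common *odb, common_block *eq)
{
  if (!odb->exists) {
    odb->data = *eq;   /* fresh ODB: defaults come from the code */
    odb->exists = true;
  } else {
    *eq = odb->data;   /* existing ODB: ODB values take precedence */
  }
}

/* Opt-in explicit copy in the spirit of set_odb_equipment_common():
 * the frontend structure overwrites whatever is currently in the ODB. */
void copy_common_to_odb(odb_common *odb, const common_block *eq)
{
  odb->data = *eq;
  odb->exists = true;
}
```

In this model, editing the compiled-in period and restarting (calling init_common_odb_wins() again) leaves the old ODB value in place, which is exactly the trap from the original post; an explicit copy from frontend_init() is the opt-in way around it.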

Stefan

09 Aug 2019, Konstantin Olchanski, Info, Precedence of equipment/common structure

> Today I fixed a long-annoying problem. ...

> `/Equipment/<name>/Common`

> In the past, the ODB setting took precedence over the frontend structure...

> We defined this like 25 years ago and I forgot what the exact reason was.

> It causes however many people (including myself) to fall into this trap: ...

There is a good deal of confusion regarding the entries in /eq/xxx/common:

- for some of them, the frontend code settings take precedence and overwrite settings in odb ("frontend file name")

- for some of them, ODB takes precedence and frontend code values are ignored ("read on" and "period")

- for some of them, changes in ODB take effect immediately (via db_watch) ("period")

- for some of them, frontend restart is required for changes to take effect (output event buffer name "buffer")

- some of them continuously update the odb values ("status", "status color")
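This classification can be written down as a lookup table, for example as input to the documentation review suggested further down. A minimal C sketch; the flag names and helper functions are hypothetical, and the table contains only the entries named in the list above, transcribed as described there.

```c
#include <stddef.h>
#include <string.h>

/* Behaviour flags for entries in /Equipment/<name>/Common. */
enum {
  CODE_WINS        = 1 << 0,  /* frontend code overwrites the ODB value */
  ODB_WINS         = 1 << 1,  /* ODB value overrides the code */
  LIVE_UPDATE      = 1 << 2,  /* change takes effect immediately (db_watch) */
  NEEDS_RESTART    = 1 << 3,  /* change needs a frontend restart */
  FRONTEND_UPDATES = 1 << 4   /* frontend keeps writing this value */
};

typedef struct {
  const char *name;
  unsigned    flags;
} common_entry;

/* Only the entries named in the list above. */
static const common_entry common_entries[] = {
  {"Frontend file name", CODE_WINS},
  {"Read on",            ODB_WINS},
  {"Period",             ODB_WINS | LIVE_UPDATE},
  {"Buffer",             NEEDS_RESTART},
  {"Status",             FRONTEND_UPDATES},
  {"Status color",       FRONTEND_UPDATES},
};

/* Returns the flags for an entry, or 0 if it is not in the table. */
unsigned common_entry_flags(const char *name)
{
  for (size_t i = 0; i < sizeof(common_entries) / sizeof(common_entries[0]); i++)
    if (strcmp(common_entries[i].name, name) == 0)
      return common_entries[i].flags;
  return 0;
}

/* An entry is safe for users to edit in the ODB if the ODB value is used
 * and neither the code nor the running frontend overwrites it. */
int user_may_edit(const char *name)
{
  unsigned f = common_entry_flags(name);
  return (f & ODB_WINS) && !(f & (CODE_WINS | FRONTEND_UPDATES));
}
```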

I do not think there is a simple way to improve on this.

(One solution would be to replace the single "common" with several subdirectories, "per function": one would have items where the code takes precedence, one would have items where the ODB takes precedence (in effect, "standard settings"), and one would have items that the frontend always updates and that should not be changed via the ODB ("frontend name", etc.). I am not sure this solution is necessarily an "improvement".)

Lacking any ideas for improvements, I vote for the status quo (plus a review of the documentation to ensure we have clearly written up what each entry in "common" does and whether the user is permitted to edit it in the ODB).

K.O.

13 Aug 2019, Stefan Ritt, Info, Precedence of equipment/common structure

> Lacking any ideas for improvements, I vote for the status quo. (plus a review of the documentation to ensure we have clearly written up what each entry in "common" does and whether the user is permitted to edit it in odb).

I agree with that.

Stefan

18 Sep 2008, Stefan Ritt, Info, Potential problems in multi-threaded slow control front-end

We recently had some problems at our experiment which I would like to share with the community. However, this only affects experiments which have a slow control front-end in multi-threaded mode.

The problem is related to the fact that the midas API is not thread safe, so a device driver or bus driver from the slow control system may not call any ODB function. We found several drivers (mainly psi_separator.c, psi_beamline.c etc.) which use the midas API function cm_msg() inside their read/write functions to report errors. While this is OK for the init section (which is executed in the main frontend thread), it is not OK for the read/write functions inside the driver.

If this is done anyhow, it can happen that the main thread locks the ODB (via db_lock_database()) and the driver thread interrupts that call and locks the ODB again. In rare cases this can cause a stale lock on the ODB. This prevents all other programs from accessing the ODB, and the experiment will die loudly. It is hard to identify, since error messages can no longer be produced, and remote programs (not affected by the lock) just show an RPC timeout.

I have now fixed all drivers in our experiment, which solved the problem for us, but I urge other people to double check their device drivers as well.
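One way to keep reporting errors from a driver's read/write functions without calling cm_msg() in the driver thread is to queue the text and let the main frontend thread, where the MIDAS API may be used, forward it. The sketch below assumes POSIX threads; the queue, its size, and all function names are hypothetical, and print_message() merely stands in for a call to cm_msg().

```c
#include <pthread.h>
#include <stdio.h>
#include <string.h>

#define MSG_QUEUE_LEN 16
#define MSG_LEN       256

/* Hypothetical message queue shared by driver threads and the main thread.
 * Only this private mutex is taken in the driver thread; no ODB lock. */
static char            msg_queue[MSG_QUEUE_LEN][MSG_LEN];
static int             msg_count = 0;
static pthread_mutex_t msg_mutex = PTHREAD_MUTEX_INITIALIZER;

/* Called from a driver read/write function instead of cm_msg().
 * Returns 0 on success, -1 if the queue is full (message dropped). */
int driver_report(const char *text)
{
  int status = -1;
  pthread_mutex_lock(&msg_mutex);
  if (msg_count < MSG_QUEUE_LEN) {
    snprintf(msg_queue[msg_count], MSG_LEN, "%s", text);
    msg_count++;
    status = 0;
  }
  pthread_mutex_unlock(&msg_mutex);
  return status;
}

/* Stand-in for forwarding the text to cm_msg() in a real frontend. */
void print_message(const char *text)
{
  printf("driver: %s\n", text);
}

/* Called periodically from the main frontend thread. Returns the number
 * of messages forwarded to 'emit'. */
int drain_driver_messages(void (*emit)(const char *text))
{
  char local[MSG_QUEUE_LEN][MSG_LEN];
  int  n;

  /* copy out under the lock, then emit without holding it */
  pthread_mutex_lock(&msg_mutex);
  n = msg_count;
  memcpy(local, msg_queue, sizeof(local[0]) * (size_t)n);
  msg_count = 0;
  pthread_mutex_unlock(&msg_mutex);

  for (int i = 0; i < n; i++)
    emit(local[i]);
  return n;
}
```

Emitting outside the mutex means a slow cm_msg() call in the main thread never blocks a driver thread.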

In case of problems, there is a thread ID check in db_lock_database()/db_unlock_database() which can be activated by supplying

-DCHECK_THREAD_ID

on the compile command line. If these functions are then called from different threads, the program aborts with an assertion failure, which can then be debugged.
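The idea behind such a check can be sketched as a lock wrapper that records its owning thread and asserts when another thread uses it. This is not the MIDAS implementation; the struct and function names are hypothetical, and only the CHECK_THREAD_ID macro name is taken from the post. Compiling with -DCHECK_THREAD_ID enables the assertions.

```c
#include <assert.h>
#include <pthread.h>
#include <stdbool.h>

/* Hypothetical lock wrapper that remembers which thread may use it. */
typedef struct {
  pthread_t owner;
  bool      owner_set;
  int       depth;      /* stand-in for the real lock state */
} checked_lock;

/* True if the calling thread is the recorded owner; the first caller
 * becomes the owner. */
bool lock_thread_ok(checked_lock *l)
{
  if (!l->owner_set) {
    l->owner = pthread_self();
    l->owner_set = true;
    return true;
  }
  return pthread_equal(l->owner, pthread_self()) != 0;
}

void checked_lock_acquire(checked_lock *l)
{
#ifdef CHECK_THREAD_ID
  bool ok = lock_thread_ok(l);  /* kept outside assert(): has a side effect */
  assert(ok);                   /* wrong thread: abort so a debugger shows the caller */
  (void)ok;
#endif
  l->depth++;                   /* the real locking would happen here */
}

void checked_lock_release(checked_lock *l)
{
#ifdef CHECK_THREAD_ID
  bool ok = lock_thread_ok(l);
  assert(ok);
  (void)ok;
#endif
  l->depth--;                   /* the real unlocking would happen here */
}
```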

There is also a stack history system implemented with new functions ss_stack_xxxx. Using this system, one can check which functions called db_lock_database() *before* an error occurs. This is how I identified the faulty drivers. Maybe this system can also be used in other debugging scenarios.
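The ss_stack_xxxx functions themselves are not shown in the post, so the following only illustrates the general idea with hypothetical names: a small ring buffer that remembers the last few call sites before a lock is taken, so they can be dumped after a hang. It is deliberately not thread-safe and serves only as a sketch.

```c
#include <stdio.h>

#define HISTORY_LEN 8

/* Hypothetical ring buffer of recent call sites (not the ss_stack API).
 * Not thread-safe: illustration only. */
static const char *call_history[HISTORY_LEN];
static unsigned    call_history_next = 0;

/* Record a call site; the oldest entry is overwritten when full. */
void history_push(const char *where)
{
  call_history[call_history_next % HISTORY_LEN] = where;
  call_history_next++;
}

/* Return the n-th most recent entry (0 = latest), or NULL if none. */
const char *history_recent(unsigned n)
{
  if (n >= HISTORY_LEN || n >= call_history_next)
    return NULL;
  return call_history[(call_history_next - 1 - n) % HISTORY_LEN];
}

/* Print the history, newest first, e.g. from an error handler. */
void history_dump(FILE *out)
{
  for (unsigned i = 0; history_recent(i) != NULL; i++)
    fprintf(out, "#%u %s\n", i, history_recent(i));
}

/* Place at the start of any code that is about to lock the database. */
#define HISTORY_HERE() history_push(__func__)
```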

03 May 2024, Thomas Senger, Suggestion, Possible addition to IF Statements

Hello there,

In our setup we use many variables with many different exceptions. Would it be possible to implement IF statements that combine several conditions with AND/OR? I believe this is currently not possible.

Best regards,

Thomas Senger

03 May 2024, Stefan Ritt, Suggestion, Possible addition to IF Statements

The tinyexpr library I use to evaluate expressions does not support boolean operations. I would have to switch to the newer tinyexpr-plusplus version, which also has much richer functionality:

https://github.com/Blake-Madden/tinyexpr-plusplus/blob/tinyexpr%2B%2B/TinyExprChanges.md

Unfortunately it requires C++17, and at the moment we limit MIDAS to C++11, so switching would break this requirement. I believe there are still some experiments (mainly at TRIUMF) that are stuck with older operating systems and therefore cannot switch to C++17, but hopefully this will change over time.

Stefan

11 Jul 2023, Anubhav Prakash, Forum, Possible ODB corruption! Webpages https://midptf01.triumf.ca/?cmd=Programs not loading!

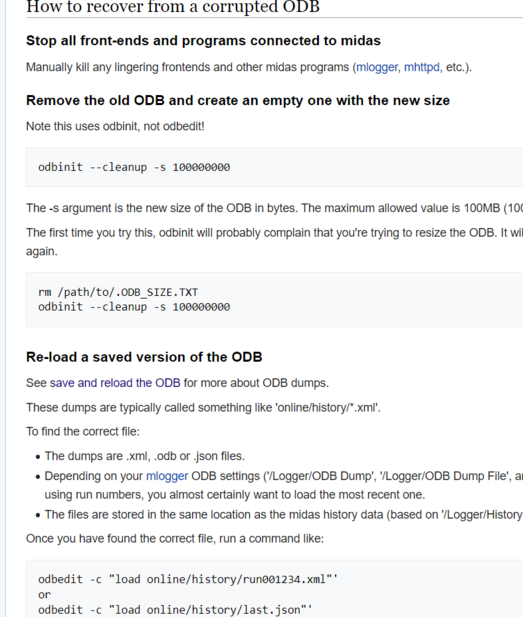

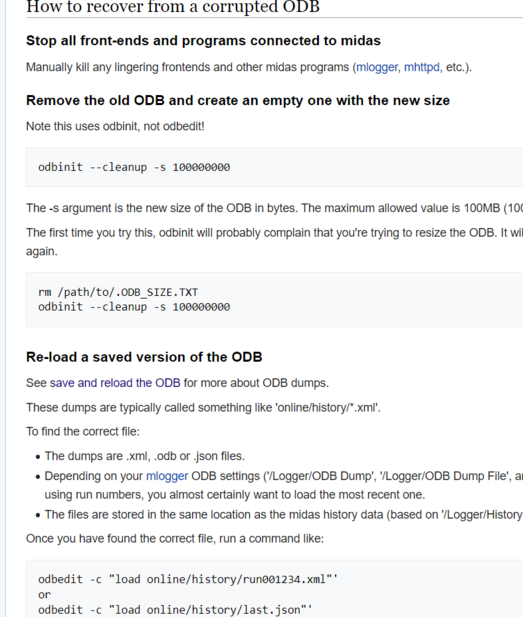

The ODB server seems to have crashed or been corrupted. I tried reloading the previous working version of the ODB (using the commands in the attached image), but it didn't work.

I have also attached a screenshot of the site https://midptf01.triumf.ca/?cmd=Programs. Any help to resolve this would be appreciated! Normally Prof. Thomas Lindner would solve such issues, but he is busy working at CERN until the 17th of July, and we cannot afford to wait until then.

The following errors appear when I run bash /home/midptf/online/bin/start_daq.sh:

```
[ODBEdit1,INFO] Fixing ODB "/Programs/ODBEdit" struct size mismatch (expected 316, odb size 92)
[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256), called from db_set_link_data
[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate "/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online database full
[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"
[ODBEdit1,ERROR] [odb.cxx:11293:db_get_record,ERROR] struct size mismatch for "/Programs/ODBEdit" (expected size: 316, size in ODB: 92)
[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256), called from db_set_link_data
[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate "/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online database full
[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"
[ODBEdit1,ERROR] [odb.cxx:11381:db_get_record1,ERROR] after db_check_record() still struct size mismatch (expected 316, odb size 92) of "/Programs/ODBEdit", calling db_create_record()
[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256), called from db_set_link_data
[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate "/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online database full
[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"
[ODBEdit1,ERROR] [odb.cxx:11387:db_get_record1,ERROR] repaired struct size mismatch of "/Programs/ODBEdit"
[ODBEdit1,ERROR] [odb.cxx:11293:db_get_record,ERROR] struct size mismatch for "/Programs/ODBEdit" (expected size: 316, size in ODB: 92)
[ODBEdit1,ERROR] [alarm.cxx:702:al_check,ERROR] Cannot get program info record for program "ODBEdit", db_get_record1() status 319
[ODBEdit1,INFO] Fixing ODB "/Programs/mhttpd" struct size mismatch (expected 316, odb size 60)
[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256), called from db_set_link_data
[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate "/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online database full
[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"
[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"
[ODBEdit1,ERROR] [odb.cxx:11293:db_get_record,ERROR] struct size mismatch for "/Programs/mhttpd" (expected size: 316, size in ODB: 92)
[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256), called from db_set_link_data
[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate "/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online database full
[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"
[ODBEdit1,ERROR] [odb.cxx:11381:db_get_record1,ERROR] after db_check_record() still struct size mismatch (expected 316, odb size 92) of "/Programs/mhttpd", calling db_create_record()
[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256), called from db_set_link_data
[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate "/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online database full
[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"
[ODBEdit1,ERROR] [odb.cxx:11387:db_get_record1,ERROR] repaired struct size mismatch of "/Programs/mhttpd"
[ODBEdit1,ERROR] [odb.cxx:11293:db_get_record,ERROR] struct size mismatch for "/Programs/mhttpd" (expected size: 316, size in ODB: 92)
[ODBEdit1,ERROR] [alarm.cxx:702:al_check,ERROR] Cannot get program info record for program "mhttpd", db_get_record1() status 319
[ODBEdit1,INFO] Fixing ODB "/Programs/Logger" struct size mismatch (expected 316, odb size 60)
[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256), called from db_set_link_data
[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate "/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online database full
[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"
[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"
[ODBEdit1,ERROR] [odb.cxx:11293:db_get_record,ERROR] struct size mismatch for "/Programs/Logger" (expected size: 316, size in ODB: 92)
[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256), called from db_set_link_data
[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate "/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online database full
[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"
[ODBEdit1,ERROR] [odb.cxx:11381:db_get_record1,ERROR] after db_check_record() still struct size mismatch (expected 316, odb size 92) of "/Programs/Logger", calling db_create_record()
[ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256), called from db_set_link_data
[ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate "/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online database full
[ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"
[ODBEdit1,ERROR] [odb.cxx:11387:db_get_record1,ERROR] repaired struct size mismatch of "/Programs/Logger"
[ODBEdit1,ERROR] [odb.cxx:11293:db_get_record,ERROR] struct size mismatch for "/Programs/Logger" (expected size: 316, size in ODB: 92)
[ODBEdit1,ERROR] [alarm.cxx:702:al_check,ERROR] Cannot get program info record for program "Logger", db_get_record1() status 319
14:54:29 [ODBEdit,ERROR] [odb.cxx:1763:db_validate_db,ERROR] Warning: database data area is 100% full
14:54:29 [ODBEdit,ERROR] [odb.cxx:1283:db_validate_key,ERROR] hkey 643368, path "/Alarms/Classes/<NULL>/Display BGColor", string value is not valid UTF-8
14:54:29 [ODBEdit1,ERROR] [odb.cxx:556:realloc_data,ERROR] cannot malloc_data(256), called from db_set_link_data
14:54:29 [ODBEdit1,ERROR] [odb.cxx:6923:db_set_link_data,ERROR] Cannot reallocate "/System/Tmp/140305391605888I/Start command" with new size 256 bytes, online database full
14:54:29 [ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"
14:54:29 [ODBEdit1,ERROR] [odb.cxx:8531:db_paste,ERROR] found string length of zero, set to 32, odb path "Start command"
```

11 Jul 2023, Thomas Lindner, Forum, Possible ODB corruption! Webpages https://midptf01.triumf.ca/?cmd=Programs not loading!

Hi Anubhav,

I have fixed the ODB corruption problem.

Cheers,

Thomas


17 Mar 2015, Wes Gohn, Forum, PosgresQL

For our MIDAS installation at Fermilab, we need to be able to write to a PostgreSQL database (MySQL is not supported here). This will be required by both mlogger and mscb.

Has anyone done this before? Do you know of a relatively simple way to implement it, or do we need to replicate the MySQL functions that already exist in the mlogger/mscb code with functions that perform the equivalent PostgreSQL commands?

Thanks!

Wes

17 Mar 2015, Lee Pool, Forum, PosgresQL


Hi Wes,

I did this a few years ago by replicating the MySQL functions within mlogger.

Lee

21 Nov 2023, Ivo Schulthess, Forum, Polled frontend writes data to ODB without RO_ODB

Good morning,

In our setup, we have a neutron detector that creates up to 16 MB of polled (EQ_POLLED) data in one event (event limit = 1), which we do not want to have saved into the ODB. Nevertheless, I cannot disable this. The equipment has only the read-on flag RO_RUNNING, and the "Read on" value in the ODB is 1. The data are also not saved to the history. I also tried a minimal example frontend with the same settings, but there, too, the "data" get written to the ODB. For now, I increased the size of the ODB to 40 MB (the 50/50 split between keys and data is automatic), but in principle I do not want the data to be saved to the ODB at all. Is there something I am missing?

Thanks in advance for your advice.

Cheers,

Ivo

22 Nov 2023, Stefan Ritt, Forum, Polled frontend writes data to ODB without RO_ODB

I cannot confirm that. I just tried it myself with examples/experiment/frontend.cxx, removed RO_ODB, and the trigger events did NOT get copied to the ODB.

Actually you can debug the code yourself. The relevant line is in mfe.cxx:2075:

```c
/* send event to ODB */
if (pevent->data_size && (eq_info->read_on & RO_ODB)) {
   if (actual_millitime - eq->last_called > ODB_UPDATE_TIME) {
      eq->last_called = actual_millitime;
      update_odb(pevent, eq->hkey_variables, eq->format);
      eq->odb_out++;
   }
}
```

So if read_on is equal to 1, the function update_odb() should never be called.

So the problem must be on your side.
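The condition can be checked in isolation. In the sketch below the flag values are local assumptions (RO_RUNNING as bit 0 and RO_ODB as bit 8, as we understand midas.h; check your version), the SKETCH_ names are hypothetical, and ODB_UPDATE_TIME becomes a parameter. With "Read on" equal to 1, only the RO_RUNNING bit is set, so the RO_ODB test fails regardless of the event size.

```c
#include <stdbool.h>

/* Assumed read-on bits; verify against midas.h in your installation. */
#define SKETCH_RO_RUNNING (1 << 0)
#define SKETCH_RO_ODB     (1 << 8)

/* Mirrors the condition quoted from mfe.cxx: an event is copied to the ODB
 * only if it carries data, RO_ODB is set in "Read on", and the last update
 * is older than the update interval. */
bool would_update_odb(unsigned data_size, int read_on,
                      unsigned now_ms, unsigned last_ms, unsigned interval_ms)
{
  return data_size != 0 && (read_on & SKETCH_RO_ODB) != 0 &&
         now_ms - last_ms > interval_ms;
}
```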

Best,

Stefan

15 Dec 2003, Stefan Ritt, , Poll about default indent style

Dear all,

There are continuing requests about the C indent style we use in midas. As you know, the current style does not comply with any standard. It is even a mixture of styles, since code comes from different people. To fix this once and for all, I am considering using the "indent" program which comes with every Linux installation. Running indent regularly on all our code ensures a consistent look. So I propose (actually the idea came from Paul Knowles) to add a new section to the midas makefile:

```
indent:
	find . -name "*.[hc]" -exec indent <flags> {} \;
```

so one can easily do a "make indent". The question now is what the `<flags>` should look like. The standard is GNU style, but this deviates from the original K&R style in that the opening "{" is put on a new line, which I use but most of you do not. The "-kr" option gives the standard K&R style, but uses tabs (which is not good) and a 4-column indentation, which I think is too much. So I would propose the following flags:

```
indent -kr -nut -i2 -di8 -bad <filename.c>
```

Please take some of your source code, format it this way, and let me know if these flags are a good combination or if you would like anything changed. It should also be checked (->PAA) that this style complies with the DOC++ system. Once we all agree, I can put it into the makefile, execute it, and commit the newly formatted code for the whole source tree.

18 Dec 2003, Paul Knowles, , Poll about default indent style

Hi Stefan,

> once and forever, I am considering using the "indent" program which comes with every linux installation. Running indent regularly on all our code ensures a consistent look.

I think this can be called a Good Thing.

> The "-kr" style does the standard K&R style,

> but used tabs (which is not good), and does a 4-column

> indention which is I think too much. So I would propose

> following flags:

> indent -kr -nut -i2 -di8 -bad <filename.c>

(Some of this is a repeat from an earlier mail to SR):

You might also want -l90 for a longer line length than 75 characters. K&R style with an indentation of 5 to 8 spaces is a good indicator of complexity: as soon as 40 characters of code wind up unreadably squashed against the right edge of the screen, you have to refactor to use fewer indentation levels. This means you wind up rolling up the inner parts of deeply nested conditionals or loops into separate functions, making the whole code easier to understand.

I think that setting -i2 is ``going around the problem'' of deep nesting. If you really need to keep the indentation below 4 columns (8 is ideal) because your code is falling off the right edge of the screen, you are indenting too deeply. Why do I say that? There is the famous ``7+-1'' idea that you can hold only 7 ideas (give or take one) in your head at any time. I'm not that smart and I top out at about 5. For example, a conditional in a loop in a conditional in a switch is about as deep a level of nesting as I can easily understand (remember that I also have to hold the line I'm working on): that's 4 levels, plus one for the function itself, so with -i8 we are 40 characters in from the left edge of the screen and have some 40 characters available for writing code (how often is a line of code really longer than about 40 characters?). On top of that, the indentation is easily seen, so you know immediately whether you are at the outer conditional or the inner one. A -i2 just doesn't make the difference big enough. -i5 is a happy balance: it gives enough visual clue as to the indentation level, but leaves you 50 to 60 characters for the code line itself.
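Paul's column arithmetic can be checked with a few lines of C. This is a small sketch that assumes an 80-column screen (the post does not state the width) and counts the function body as one nesting level, as the post does; code_columns is a hypothetical helper name.

```c
#include <assert.h>

/* Columns left for code on one line, given the screen width,
 * the indent width per level (the -iN setting) and the nesting depth.
 * Returns 0 if the indentation alone fills the screen. */
int code_columns(int screen_width, int indent_width, int levels)
{
   int used;

   assert(screen_width > 0 && indent_width >= 0 && levels >= 0);
   used = indent_width * levels;
   return used >= screen_width ? 0 : screen_width - used;
}
```

With 5 levels on an 80-column screen, code_columns(80, 8, 5) gives 40, and code_columns(80, 5, 5) gives 55, inside the "50 to 60 characters" quoted for -i5; code_columns(80, 2, 5) leaves 70.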

However, if you are indenting very deeply, then the poor reader can't hold on to the context: there are more than 6 or 7 things to keep in mind. In those cases, roll up the inner levels into a separate function and call it. The inner complexity of the nested statements gets nicely abstracted, and then dumb people like me can understand what you are doing.
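As an illustration of rolling up inner levels, here is a hypothetical example (not midas code): counting the bytes above a threshold across a batch of events. The nested version keeps a condition inside a loop inside a condition inside a loop; the refactored version moves the inner loop into a named helper, so no function nests more than two levels deep. All names are made up for the sketch.

```c
#include <assert.h>
#include <stddef.h>

/* Nested version: all the logic lives in one function, 4 levels deep. */
int count_over_nested(const unsigned char *const *events, const size_t *sizes,
                      size_t n_events, unsigned char threshold)
{
   int count = 0;
   size_t i, j;

   for (i = 0; i < n_events; i++) {
      if (events[i] != NULL) {
         for (j = 0; j < sizes[i]; j++) {
            if (events[i][j] > threshold)
               count++;
         }
      }
   }
   return count;
}

/* Refactored: the inner loop becomes a helper with a descriptive name. */
static int count_over_in_event(const unsigned char *data, size_t size,
                               unsigned char threshold)
{
   int count = 0;
   size_t j;

   for (j = 0; j < size; j++)
      if (data[j] > threshold)
         count++;
   return count;
}

/* Same result as count_over_nested, but each function stays shallow. */
int count_over(const unsigned char *const *events, const size_t *sizes,
               size_t n_events, unsigned char threshold)
{
   int count = 0;
   size_t i;

   assert(n_events == 0 || (events != NULL && sizes != NULL));
   for (i = 0; i < n_events; i++)
      if (events[i] != NULL)
         count += count_over_in_event(events[i], sizes[i], threshold);
   return count;
}
```

Both functions return the same count; the difference is only how much context a reader has to hold at the innermost line.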

So, in brief: indent is a good idea, and -in with n>=4 will be best. I don't think -i2 will make the code much easier to read.

thanks for listening.

.p.

18 Dec 2003, Stefan Ritt, , Poll about default indent style

Hi Paul,

I agree with you that a nesting level of more than 4-5 is a bad thing, but I believe that throughout the midas code this level is not exceeded (my poor mind also does not hold more than 5 things (;-) ). An indent level of 8 columns alone does not stop you from extending the nesting level. I have seen code which does that, with nesting levels of 8 and more, which ends up with the code smashed against the right side of the screen, where each statement is broken into many lines since each line only holds 10 or 20 characters. All the nice real estate on the left side of the screen is lost.

So having said that, I don't feel a strong need to give up "-i2", since the midas code does not contain deep nesting levels and hopefully never will.

In my opinion, a small indent level makes better use of your screen space, since you do not have a large white area at the left. A typical nesting level is 3-4, which with 8 columns per level already causes 32 blank characters at the left, or about 1/3 of your screen, just for nothing. It will lead to more lines (even with -l90), so people have to scroll more.

What do others think (Pierre, Konstantin, Renee)?

01 Jan 2004, Konstantin Olchanski, , Poll about default indent style

> I don't feel a strong need of giving up a "-i2"...

I am comfortable with the current MIDAS styling convention and I would rather not have yet another private religious war over the right location for the curly braces.

If we are to consider changing the MIDAS coding convention, I urge all and sundry to read the ROOT coding convention, as written by Rene Brun and Fons Rademakers at http://root.cern.ch/root/Conventions.html. The ROOT people did their homework: they read the literature and produced a well-considered and well-argued style.

Also, while there, do read the Taligent documentation: by far one of the most coherent manuals on C++ programming style.

K.O.

06 Jan 2004, Stefan Ritt, , Poll about default indent style

Ok, taking all comments so far into account, I conclude that adopting the ROOT coding style would be best for us. So I put

indent:
	find . -name "*.[hc]" -exec indent -kr -nut -i3 {} \;

into the makefile. Hope everybody is happy now (;-)))
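For readers who want a feel for the adopted flags, the following is a hand-written approximation (not actual indent output, and not midas code) of C laid out roughly as -kr -nut -i3 would leave it: 3-space indentation using spaces instead of tabs, the opening brace of if/for on the same line, a cuddled "} else {", and the function's opening brace on its own line as in K&R. The function mean_of is hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Average of n values; returns 0.0 for an empty array.
 * Layout approximates -kr -nut -i3: 3-space indent, no tabs,
 * braces of if/for on the same line, cuddled "} else {". */
double mean_of(const int *values, size_t n)
{
   long sum = 0;
   size_t i;

   assert(values != NULL || n == 0);
   if (n == 0) {
      return 0.0;
   } else {
      for (i = 0; i < n; i++) {
         sum += values[i];
      }
   }
   return (double) sum / n;
}
```

Running "make indent" over a file like this should change little; running it over differently styled code is what produces the diff that gets committed.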

24 Jul 2015, Konstantin Olchanski, Info, Plans for improving midas network security

There are a number of problems with network security in midas (as distinct from web/http/https security).

1) too many network sockets are unnecessarily bound to the external network interface instead of localhost (UDP ports are already bound to localhost on MacOS).

2) by default the RPC ports of each midas program accept connections and RPC commands from anywhere in the world (an access control list is already implemented via /Experiment/Security/Rpc Hosts, but it is not active by default).

3) mserver also has an access control list but it is not integrated with the access control list for the RPC ports.

4) it is difficult to run midas in the presence of firewalls (midas programs listen on random network ports, which cannot easily be added to firewall rules).
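To make the access control list idea concrete, here is a minimal sketch of a host check with the semantics described in this post: an empty list permits everybody (the current default), and the proposed default list is just "localhost". This is not the midas implementation; rpc_host_allowed and host_equal are hypothetical names, how the list is read from the ODB is not shown, and the sketch compares host names only, whereas a real check would first have to resolve the peer's address to a name.

```c
#include <assert.h>
#include <ctype.h>
#include <stddef.h>

/* Case-insensitive comparison of two host names. */
static int host_equal(const char *a, const char *b)
{
   while (*a != '\0' && *b != '\0') {
      if (tolower((unsigned char) *a) != tolower((unsigned char) *b))
         return 0;
      a++;
      b++;
   }
   return *a == *b;             /* equal only if both strings ended */
}

/* Hypothetical RPC host check: returns 1 if host may connect.
 * allowed: host names, e.g. the entries of an "Rpc Hosts" list.
 * An empty list permits every host. */
int rpc_host_allowed(const char *host, const char *const *allowed,
                     size_t n_allowed)
{
   size_t i;

   assert(host != NULL);
   if (n_allowed == 0)
      return 1;                 /* empty list: everybody is permitted */

   for (i = 0; i < n_allowed; i++) {
      if (host_equal(host, allowed[i]))
         return 1;
   }
   return 0;
}
```

With the list set to only "localhost", every external machine is rejected until its name is added, which is the behaviour proposed in point 3) of the plan.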

There is a new git branch "feature/rpcsecurity" where I am addressing some of these problems:

1) UDP sockets are only used for internal communications (hotlinks, etc.) within one machine, so they should be bound to the localhost address and become invisible to external machines. This change breaks binary compatibility with old clients: they have to be relinked with the new midas library, or hotlinks, etc. will stop working. If some clients cannot be rebuilt (I have one like this), I am preserving the old way by checking for a special file in the experiment directory (same place as ODB.SHM). (done)

2) if one runs on a single machine, does not use the mserver and does not have clients running on other machines, then all the RPC ports can be bound to localhost (this also kills the MacOS popups about "odbedit wants to connect to the Internet"). (partially done)

This (2) will become the new default: out of the box, midas will not listen to any external network connections, making it very secure.

To use the mserver, one will have to change the ODB setting "/Experiment/Security/Enable external RPC connections" and restart all midas programs (I am looking for a better name for this ODB setting).

3) the out-of-the-box default access control list for RPC connections will be set to "localhost", which will reject all external connections, even when they are enabled by (2). One will be required to enter the names of all machines that will run midas clients in "/Experiment/Security/Rpc hosts". (This is already implemented in the main midas, but the default access control list is empty, meaning everybody is permitted.)

4) the mserver will be required to attach to some experiment and will use this same access control list to restrict access to the main mserver listener port. Right now the mserver listens on this port without attaching to any experiment and accepts the access control list via command line arguments. I think after this change a single mserver will still be able to service multiple experiments (TBD).

5) I am adding an option to fix TCP port numbers for MIDAS programs via "/Experiment/Security/Rpc ports/fename = (int)5555". Once a remote frontend is bound to a fixed port, appropriate openings can be made in the firewall, etc. The default port number will be 0, meaning "use a random port", same as now.
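The socket choices in points 1), 2) and 5) come down to two bind() parameters: the address (loopback versus all interfaces) and the port (a fixed number versus 0, "use a random port"). Below is a minimal POSIX sketch of both. It is not the midas implementation; open_socket and its parameters are hypothetical, and reading the port from "/Experiment/Security/Rpc ports" is not shown.

```c
#include <assert.h>
#include <arpa/inet.h>
#include <netinet/in.h>
#include <string.h>
#include <sys/socket.h>
#include <unistd.h>

/* Open a socket of the given type: SOCK_STREAM for an RPC listener,
 * SOCK_DGRAM for machine-internal UDP traffic.
 * localhost_only != 0 binds to 127.0.0.1, invisible to other machines;
 * otherwise the socket is bound to all interfaces (INADDR_ANY).
 * port == 0 lets the kernel pick a random free port; a fixed port
 * makes it possible to open that port in a firewall.
 * Returns the socket, or -1 on error; *bound_port receives the port. */
int open_socket(int type, int localhost_only, unsigned short port,
                unsigned short *bound_port)
{
   struct sockaddr_in addr;
   socklen_t len = sizeof(addr);
   int fd;

   assert(bound_port != NULL);
   fd = socket(AF_INET, type, 0);
   if (fd < 0)
      return -1;

   memset(&addr, 0, sizeof(addr));
   addr.sin_family = AF_INET;
   addr.sin_port = htons(port);
   addr.sin_addr.s_addr = htonl(localhost_only ? INADDR_LOOPBACK : INADDR_ANY);

   if (bind(fd, (struct sockaddr *) &addr, sizeof(addr)) < 0
       || (type == SOCK_STREAM && listen(fd, SOMAXCONN) < 0)
       || getsockname(fd, (struct sockaddr *) &addr, &len) < 0) {
      close(fd);
      return -1;
   }
   *bound_port = ntohs(addr.sin_port);  /* actual port, even if 0 was requested */
   return fd;
}
```

With port 0 the kernel picks a different port on each run, which is why firewall rules cannot be written in advance; passing a fixed port instead gives the frontend a stable, firewallable address.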

One problem remains with the initial connection to the mserver. The client connects to the main mserver listener port (easy to firewall), but then the mserver connects back to the client: this reverse connection is difficult to firewall, and this handshaking is difficult to change in the midas sources. It will probably remain unresolved for now.

K.O.