| ID | Date | Author | Topic | Subject |

1906

|

12 May 2020 |

Stefan Ritt | Info | New ODB++ API | Since the beginning of the lockdown I have been working hard on a new object-oriented interface to the online database ODB. The code is now in an initial state where it is ready for testing and comments. The basic idea is that there is an object midas::odb, which represents a value or a sub-tree in the ODB. Reading, writing and watching are all done through this object. To get started, include the new API with

#include <odbxx.hxx>

To create ODB values under a certain sub-directory, you can either create one key at a time like:

midas::odb o;

o.connect("/Test/Settings", true); // this creates /Test/Settings

o.set_auto_create(true); // this turns on auto-creation

o["Int32 Key"] = 1; // create all these keys with different types

o["Double Key"] = 1.23;

o["String Key"] = "Hello";

or you can create a whole sub-tree at once like:

midas::odb o = {

{"Int32 Key", 1},

{"Double Key", 1.23},

{"String Key", "Hello"},

{"Subdir", {

{"Another value", 1.2f}

}}

};

o.connect("/Test/Settings");

To read and write to the ODB, just read and write to the odb object:

int i = o["Int32 Key"];

o["Int32 Key"] = 42;

std::cout << o << std::endl;

This works with basic types, strings, std::array and std::vector. Each read access to this object triggers an underlying read from the ODB, and each write access triggers a write to the ODB. To watch a value for changes in the ODB (the old db_watch() function), you can now use C++ lambdas like:

o.watch([](midas::odb &o) {

std::cout << "Value of key \"" + o.get_full_path() + "\" changed to " << o << std::endl;

});
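The callback style can be tried out without a MIDAS installation. The class below is a hypothetical stand-in named `toy_key`, not part of odbxx: it only mimics the shape of `watch()`, which takes a lambda receiving a reference to the changed object. In MIDAS the notification comes from the ODB itself (presumably delivered while the program sits in a `cm_yield()` loop, as in the attached example), whereas the toy simply calls the lambda on every assignment.

```cpp
#include <functional>
#include <string>
#include <utility>

// Hypothetical stand-in for an ODB key (NOT midas::odb): it holds one int
// and calls the registered callback synchronously on every assignment.
class toy_key {
public:
   explicit toy_key(std::string path) : path_(std::move(path)) {}

   // Register a callback, mimicking the shape of midas::odb::watch()
   void watch(std::function<void(toy_key &)> f) { callback_ = std::move(f); }

   // Store the new value, then notify the watcher (if any)
   toy_key &operator=(int v) {
      value_ = v;
      if (callback_)
         callback_(*this);
      return *this;
   }

   int value() const { return value_; }
   const std::string &get_full_path() const { return path_; }

private:
   std::string path_;
   int value_ = 0;
   std::function<void(toy_key &)> callback_;
};
```

For example, after registering a lambda with `k.watch([](toy_key &o) { /* print o.get_full_path() and o.value() */ });`, the assignment `k = 42;` runs the lambda once with the new value.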

Attached is a full running example, which is now also part of the midas repository. I have tested most things, but would not yet use it in a production environment. I am not 100% sure that there are no memory leaks. If someone could run the test program under valgrind, I would appreciate it (valgrind currently does not work on my Mac).

Have fun!

Stefan

|

| Attachment 1: odbxx_test.cxx

|

/********************************************************************\

Name: odbxx_test.cxx

Created by: Stefan Ritt

Contents: Test and Demo of Object oriented interface to ODB

\********************************************************************/

#include <string>

#include <iostream>

#include <array>

#include <functional>

#include "odbxx.hxx"

#include "midas.h"

/*------------------------------------------------------------------*/

int main() {

cm_connect_experiment(NULL, NULL, "test", NULL);

midas::odb::set_debug(true);

// create ODB structure...

midas::odb o = {

{"Int32 Key", 42},

{"Bool Key", true},

{"Subdir", {

{"Int32 key", 123 },

{"Double Key", 1.2},

{"Subsub", {

{"Float key", 1.2f}, // floats must be explicitly specified

{"String Key", "Hello"},

}}

}},

{"Int Array", {1, 2, 3}},

{"Double Array", {1.2, 2.3, 3.4}},

{"String Array", {"Hello1", "Hello2", "Hello3"}},

{"Large Array", std::array<int, 10>{} }, // array with explicit size

{"Large String", std::string(63, '\0') }, // string with explicit size

};

// ...and push it to ODB. If keys are present in the

// ODB, their value is kept. If not, the default values

// from above are copied to the ODB

o.connect("/Test/Settings", true);

// alternatively, a structure can be created from an existing ODB subtree

midas::odb o2("/Test/Settings/Subdir");

std::cout << o2 << std::endl;

// retrieve, set, and change ODB value

int i = o["Int32 Key"];

o["Int32 Key"] = i+1;

o["Int32 Key"]++;

o["Int32 Key"] *= 1.3;

std::cout << "Should be 57: " << o["Int32 Key"] << std::endl;

// test with bool

o["Bool Key"] = !o["Bool Key"];

// test with std::string

std::string s = o["Subdir"]["Subsub"]["String Key"];

s += " world!";

o["Subdir"]["Subsub"]["String Key"] = s;

// test with a vector

std::vector<int> v = o["Int Array"];

v[1] = 10;

o["Int Array"] = v; // assign vector to ODB object

o["Int Array"][1] = 2; // modify ODB object directly

i = o["Int Array"][1]; // read from ODB object

o["Int Array"].resize(5); // resize array

o["Int Array"]++; // increment all values of array

// test with a string vector

std::vector<std::string> sv;

sv = o["String Array"];

sv[1] = "New String";

o["String Array"] = sv;

o["String Array"][2] = "Another String";

// iterate over array

int sum = 0;

for (int e : o["Int Array"])

sum += e;

std::cout << "Sum should be 11: " << sum << std::endl;

// create key from other key

midas::odb oi(o["Int32 Key"]);

oi = 123;

// test auto refresh

std::cout << oi << std::endl; // each read access reads value from ODB

oi.set_auto_refresh_read(false); // turn off auto refresh

std::cout << oi << std::endl; // this does not read value from ODB

oi.read(); // this does manual read

std::cout << oi << std::endl;

midas::odb ox("/Test/Settings/OTF");

ox.delete_key();

// create ODB entries on-the-fly

midas::odb ot;

ot.connect("/Test/Settings/OTF", true); // this forces /Test/Settings/OTF to be created if not already there

ot.set_auto_create(true); // this turns on auto-creation

ot["Int32 Key"] = 1; // create all these keys with different types

ot["Double Key"] = 1.23;

ot["String Key"] = "Hello";

ot["Int Array"] = std::array<int, 10>{};

ot["Subdir"]["Int32 Key"] = 42;

ot["String Array"] = std::vector<std::string>{"S1", "S2", "S3"};

std::cout << ot << std::endl;

o.read(); // re-read the underlying ODB tree which got changed by above OTF code

std::cout << o.print() << std::endl;

// iterate over sub-keys

for (auto& oit : o)

std::cout << oit.get_odb()->get_name() << std::endl;

// print whole sub-tree

std::cout << o.print() << std::endl;

// dump whole subtree

std::cout << o.dump() << std::endl;

// delete test key from ODB

o.delete_key();

// watch ODB key for any change with lambda function

midas::odb ow("/Experiment");

ow.watch([](midas::odb &o) {

std::cout << "Value of key \"" + o.get_full_path() + "\" changed to " << o << std::endl;

});

do {

int status = cm_yield(100);

if (status == SS_ABORT || status == RPC_SHUTDOWN)

break;

} while (!ss_kbhit());

cm_disconnect_experiment();

return 0;

}
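The expected values printed by the test program ("Should be 57", "Sum should be 11") can be checked by hand. The sketch below replays the same sequence with a plain `int` and a `std::vector<int>` standing in for the ODB keys, assuming a fresh ODB (so the initializer-list defaults are used) and assuming, as the comments in the test program state, that `resize(5)` pads the array with zeros and that `++` on an array increments every element. The helper names are hypothetical.

```cpp
#include <numeric>
#include <vector>

// "Int32 Key" starts at 42 in the initializer list
int replay_int32_key() {
   int key = 42;
   int i = key;                        // int i = o["Int32 Key"];
   key = i + 1;                        // o["Int32 Key"] = i+1;   -> 43
   key++;                              // o["Int32 Key"]++;       -> 44
   key = static_cast<int>(key * 1.3);  // o["Int32 Key"] *= 1.3;  -> 57.2, truncated to 57
   return key;
}

// "Int Array" starts as {1, 2, 3}
int replay_int_array_sum() {
   std::vector<int> a = {1, 2, 3};
   std::vector<int> v = a;   // std::vector<int> v = o["Int Array"];
   v[1] = 10;
   a = v;                    // o["Int Array"] = v;      -> {1, 10, 3}
   a[1] = 2;                 // o["Int Array"][1] = 2;   -> {1, 2, 3}
   a.resize(5);              // new elements are 0       -> {1, 2, 3, 0, 0}
   for (int &e : a)          // o["Int Array"]++;        -> {2, 3, 4, 1, 1}
      e++;
   return std::accumulate(a.begin(), a.end(), 0);
}
```

Both helpers reproduce the numbers quoted in the program's output lines: 57 and 11.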

|

|

1913

|

20 May 2020 |

Konstantin Olchanski | Info | New ODB++ API | > midas::odb o;

> o["foo"] = 1;

This is an excellent development.

ODB is a tree-structured database, JSON is a tree-structured data format,

and they seem to fit together like a hand in a glove. For programming

web pages, Javascript and JSON-style access to ODB seems to work really well.

And now with modern C++ we can have a similar API for working with ODB tree data,

as if it were Javascript JSON tree data.

Let's see how well it works in practice!

K.O. |

|

1914

|

20 May 2020 |

Stefan Ritt | Info | New ODB++ API | In the meantime, there have been minor changes and improvements to the API:

Previously, we had:

> midas::odb o;

> o.connect("/Test/Settings", true); // this creates /Test/Settings

> o.set_auto_create(true); // this turns on auto-creation

> o["Int32 Key"] = 1; // create all these keys with different types

> o["Double Key"] = 1.23;

> o["String Key"] = "Hello";

Now, we only need:

o.connect("/Test/Settings");

o["Int32 Key"] = 1; // create all these keys with different types

...

No "true" is needed any more: if the ODB tree does not exist, it gets created. Similarly, set_auto_create() can be dropped; auto-creation is now on by default (I thought this makes more sense). Also, the iteration over sub-keys has changed slightly.

The full example attached has been updated accordingly.

Best,

Stefan |

| Attachment 1: odbxx_test.cxx

|

/********************************************************************\

Name: odbxx_test.cxx

Created by: Stefan Ritt

Contents: Test and Demo of Object oriented interface to ODB

\********************************************************************/

#include <string>

#include <iostream>

#include <array>

#include <functional>

#include "midas.h"

#include "odbxx.hxx"

/*------------------------------------------------------------------*/

int main() {

cm_connect_experiment(NULL, NULL, "test", NULL);

midas::odb::set_debug(true);

// create ODB structure...

midas::odb o = {

{"Int32 Key", 42},

{"Bool Key", true},

{"Subdir", {

{"Int32 key", 123 },

{"Double Key", 1.2},

{"Subsub", {

{"Float key", 1.2f}, // floats must be explicitly specified

{"String Key", "Hello"},

}}

}},

{"Int Array", {1, 2, 3}},

{"Double Array", {1.2, 2.3, 3.4}},

{"String Array", {"Hello1", "Hello2", "Hello3"}},

{"Large Array", std::array<int, 10>{} }, // array with explicit size

{"Large String", std::string(63, '\0') }, // string with explicit size

};

// ...and push it to ODB. If keys are present in the

// ODB, their value is kept. If not, the default values

// from above are copied to the ODB

o.connect("/Test/Settings");

// alternatively, a structure can be created from an existing ODB subtree

midas::odb o2("/Test/Settings/Subdir");

std::cout << o2 << std::endl;

// set, retrieve, and change ODB value

o["Int32 Key"] = 42;

int i = o["Int32 Key"];

o["Int32 Key"] = i+1;

o["Int32 Key"]++;

o["Int32 Key"] *= 1.3;

std::cout << "Should be 57: " << o["Int32 Key"] << std::endl;

// test with bool

o["Bool Key"] = false;

o["Bool Key"] = !o["Bool Key"];

// test with std::string

o["Subdir"]["Subsub"]["String Key"] = "Hello";

std::string s = o["Subdir"]["Subsub"]["String Key"];

s += " world!";

o["Subdir"]["Subsub"]["String Key"] = s;

// test with a vector

std::vector<int> v = o["Int Array"]; // read vector

std::fill(v.begin(), v.end(), 10);

o["Int Array"] = v; // assign vector to ODB array

o["Int Array"][1] = 2; // modify array element

i = o["Int Array"][1]; // read from array element

o["Int Array"].resize(5); // resize array

o["Int Array"]++; // increment all values of array

// test with a string vector

std::vector<std::string> sv;

sv = o["String Array"];

sv[1] = "New String";

o["String Array"] = sv;

o["String Array"][2] = "Another String";

// iterate over array

int sum = 0;

for (int e : o["Int Array"])

sum += e;

std::cout << "Sum should be 27: " << sum << std::endl;

// create key from other key

midas::odb oi(o["Int32 Key"]);

oi = 123;

// test auto refresh

std::cout << oi << std::endl; // each read access reads value from ODB

oi.set_auto_refresh_read(false); // turn off auto refresh

std::cout << oi << std::endl; // this does not read value from ODB

oi.read(); // this forces a manual read

std::cout << oi << std::endl;

// create ODB entries on-the-fly

midas::odb ot;

ot.connect("/Test/Settings/OTF"); // this forces /Test/Settings/OTF to be created if not already there

ot["Int32 Key"] = 1; // create all these keys with different types

ot["Double Key"] = 1.23;

ot["String Key"] = "Hello";

ot["Int Array"] = std::array<int, 10>{};

ot["Subdir"]["Int32 Key"] = 42;

ot["String Array"] = std::vector<std::string>{"S1", "S2", "S3"};

std::cout << ot << std::endl;

o.read(); // re-read the underlying ODB tree which got changed by above OTF code

std::cout << o.print() << std::endl;

// iterate over sub-keys

for (midas::odb& oit : o)

std::cout << oit.get_name() << std::endl;

// print whole sub-tree

std::cout << o.print() << std::endl;

// dump whole subtree

std::cout << o.dump() << std::endl;

// delete test key from ODB

o.delete_key();

// watch ODB key for any change with lambda function

midas::odb ow("/Experiment");

ow.watch([](midas::odb &o) {

std::cout << "Value of key \"" + o.get_full_path() + "\" changed to " << o << std::endl;

});

do {

int status = cm_yield(100);

if (status == SS_ABORT || status == RPC_SHUTDOWN)

break;

} while (!ss_kbhit());

cm_disconnect_experiment();

return 0;

}
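In the revised program the vector is filled with `std::fill` before the array is resized, so the final sum depends on how many elements "Int Array" has when it is read. The sketch below (hypothetical helper `replay_filled_sum`, with the same assumed resize and increment semantics as in the program's comments) replays that sequence for a given initial size: a freshly created 3-element array gives 27, while 47 is only reached if the array already holds five elements, e.g. because the ODB still contains it from an earlier run.

```cpp
#include <algorithm>
#include <cstddef>
#include <numeric>
#include <vector>

// Replays the vector section of the revised test program for an
// "Int Array" with `initial_size` elements (must be at least 2).
int replay_filled_sum(std::size_t initial_size) {
   std::vector<int> a(initial_size, 1);   // initial contents are overwritten below
   std::vector<int> v = a;                // read vector
   std::fill(v.begin(), v.end(), 10);     // every element becomes 10
   a = v;                                 // assign vector to ODB array
   a[1] = 2;                              // modify array element
   a.resize(5);                           // pad with zeros up to 5 elements
   for (int &e : a)                       // increment all values of array
      e++;
   return std::accumulate(a.begin(), a.end(), 0);
}
```

For a 3-element array the steps give {11, 3, 11, 1, 1}; for a 5-element array they give {11, 3, 11, 11, 11}.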

|

|

1915

|

20 May 2020 |

Pintaudi Giorgio | Info | New ODB++ API | All this is very good news. I really wish this were available some months ago: it would have helped me immensely. The old C API was clunky at best.

I really like the idea and am looking forward to using it (even if at the moment I do not need to) ... |

|

1916

|

20 May 2020 |

Konstantin Olchanski | Info | New ODB++ API | > All this is very good news. I really wish this were available some months ago: it would have helped me immensely. The old C API was clunky at best.

> I really like the idea and looking forward to using it (even if at the moment I do not have the need to) ...

Yes, I have designed new C-style MIDAS ODB APIs twice now (VirtualOdb in ROOTANA and MVOdb in ROOTANA and MIDAS),

and I was never happy with the results. There are too many corner cases and too much odd behaviour. Let's see how

this C++ interface shakes out.

For use in analyzers, Stefan's C++ interface still needs to be virtualized - right now it has only one implementation

with the MIDAS ODB backend. In analyzers, we need XML, JSON (and a NULL ODB) backends. The API looks

to be clean enough to add this, but I have not looked at the implementation yet. So "watch this space" as they say.
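A minimal sketch of what "virtualizing" could mean: an abstract interface with interchangeable backends, so analyzer code does not care whether values come from the MIDAS ODB, an XML file, a JSON file or nowhere at all. All names here (`odb_backend`, `null_odb`, `read_int`, `run_number`) are hypothetical and do not correspond to the odbxx, VirtualOdb or MVOdb APIs; the NULL backend simply returns the caller's default value.

```cpp
#include <string>

// Hypothetical abstract interface; real backends would read from
// the MIDAS ODB, an XML file or a JSON file.
class odb_backend {
public:
   virtual ~odb_backend() = default;
   // Return the integer at `path`, or `default_value` if it is not available
   virtual int read_int(const std::string &path, int default_value) const = 0;
};

// NULL backend: holds no data, so every read yields the default value
class null_odb : public odb_backend {
public:
   int read_int(const std::string &, int default_value) const override {
      return default_value;
   }
};

// Analyzer code depends only on the interface, not on a concrete backend
int run_number(const odb_backend &odb) {
   return odb.read_int("/Runinfo/Run number", 0);
}
```

Adding an XML or JSON backend would then mean one more subclass, without touching code such as `run_number()`.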

K.O. |

|

1941

|

09 Jun 2020 |

Isaac Labrie Boulay | Info | Preparing the VME hardware - VME address jumpers. | Hey folks,

I'm currently working on setting up a MIDAS experiment and I am following the "Setup MIDAS experiment at TRIUMF" page on MidasWiki (https://midas.triumf.ca/MidasWiki/index.php/Setup_MIDAS_experiment_at_TRIUMF).

The 3rd line of the hardware checklist under the "Prepare VME hardware" section has a link to a page that doesn't exist anymore. I'm trying to figure out how to set up the VME address jumpers on the VME modules.

Does anyone know how to set up the VME modules? Or can anyone send me a link to instructions?

Thanks a lot for your time.

Isaac |

| Attachment 1: VME_address_jumpers_broken_link.PNG

|

|

|

1943

|

10 Jun 2020 |

Konstantin Olchanski | Info | Preparing the VME hardware - VME address jumpers. | Hi, if you are not using any VME hardware, then you have no VME address jumpers to

set. https://en.wikipedia.org/wiki/VMEbus

K.O. |

|

1947

|

12 Jun 2020 |

Isaac Labrie Boulay | Info | Preparing the VME hardware - VME address jumpers. | > Hi, if you are not using any VME hardware, then you have no VME address jumpers to

> set. https://en.wikipedia.org/wiki/VMEbus

>

> K.O.

Hi, thanks for taking the time to help me out. I am using a VME-MWS in this experiment.

Let me know what you think.

Isaac |

|

1955

|

19 Jun 2020 |

Isaac Labrie-Boulay | Info | Building/running a Frontend Task | To build a frontend task, the user code and system code are compiled and linked

together with the required libraries, by running a Makefile (e.g.

../midas/examples/experiment/Makefile in the MIDAS package).

I tried building the CAMAC example frontend and I get this error:

g++: error: /home/rcmp/packages/midas/drivers/camac/ces8210.c: No such file or directory

g++: error: /home/rcmp/packages/midas/linux/lib/libmidas.a: No such file or directory

make: *** [camac_init.exe] Error 1

Obviously, I'm running the "make all" command from the camac directory. Why

would I get this "no such file" error? Do I need to download the MIDAS packages

inside my experiment directory?

Thanks for helping me out.

Isaac |

|

1958

|

24 Jun 2020 |

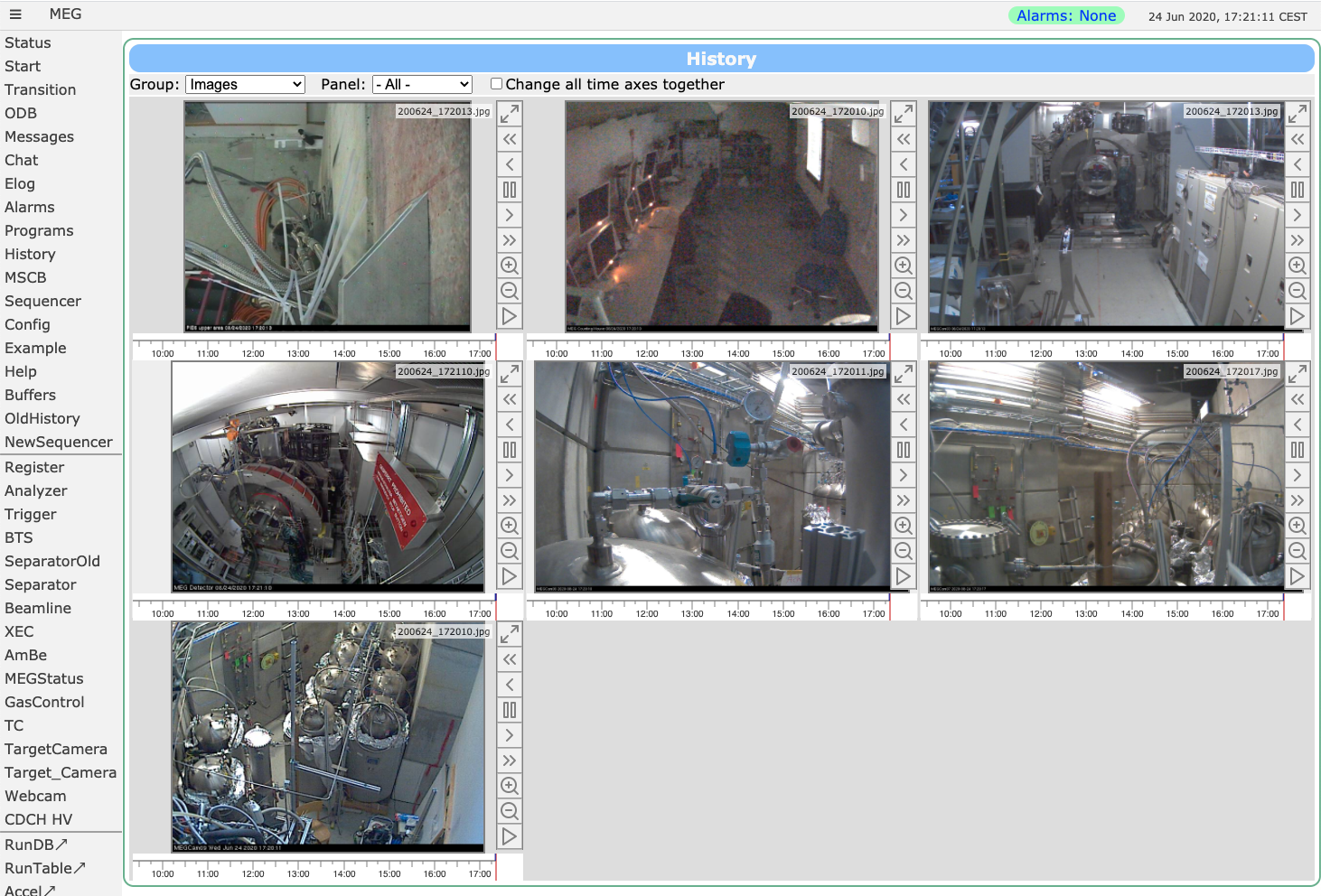

Stefan Ritt | Info | New image history system available | I'm happy to report that the Corona lockdown in Europe also had some positive side effects: I finally found time to implement an image history system in midas, something I had wanted to do for many years but never found time for.

The idea is that you can incorporate any network-connected webcam into the midas history system. You specify an update interval (like one minute) and the logger regularly fetches images from that webcam. The images are stored as raw files in the midas history directory and can be retrieved via the web browser, similarly to the "normal" history. Attached is an image from the MEG Experiment at PSI to give you some idea.

The cool thing now is that you can go "backwards" in time and browse all stored images. The buttons at each image allow you to step backward and forward, and to play a movie of images, forward or backward. You can query for a certain date/time and download a specific image to your local disk. You can even synchronize all time axes and drag left and right on any image to see your experiment from different cameras at the same time stamps. A blue ribbon below each image shows the time stamps for which an image is available.

Initially, only the most recent image is loaded to speed up loading. As soon as you click on the image or one of the arrow buttons, previous images are loaded progressively, which you can see as the ribbon bar turns blue. For slow internet connections this can take some time. For typical webcams and a one-minute update period, you typically get a few GB per week.
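As a rough cross-check of "a few GB per week": at one image per minute a camera produces 7 × 24 × 60 = 10080 images per week. The sketch below multiplies this by an average image size; the 300 kB used in the example is an assumption for a typical JPEG snapshot, not a number given in this post, and the helper names are hypothetical.

```cpp
#include <cstdint>

// Number of images fetched in `seconds` with one image every `period_s` seconds
constexpr std::int64_t images_in(std::int64_t seconds, std::int64_t period_s) {
   return seconds / period_s;
}

// Bytes stored per week for a given period and (assumed) average image size
constexpr std::int64_t bytes_per_week(std::int64_t period_s, std::int64_t image_bytes) {
   return images_in(7 * 24 * 3600, period_s) * image_bytes;
}

static_assert(images_in(7 * 24 * 3600, 60) == 10080, "one image per minute for a week");
// With an assumed 300 kB per image: 10080 * 300000 bytes, about 3 GB
static_assert(bytes_per_week(60, 300000) == 3024000000LL, "about 3 GB per week");
```

The same helper estimates how many images are kept for a non-zero "Storage hours" setting: `images_in(24 * 3600, 60)` is 1440 for one day at a one-minute period.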

To make this happen, you define a new ODB subtree

/History/Images/<name>/

Name: Name of the camera

Enabled: Boolean to enable readout of the camera

URL: URL to fetch an image from the camera

Period: Time period in seconds to fetch a new image

Storage hours: Number of hours to store the images (0 for infinite)

Extension: Image file extension, usually ".jpg" or ".png"

Timescale: Initial horizontal time scale (like 8h)

The tricky part is to obtain the URL from your camera. For some cameras you can get it from the manual; for others you have to "hack" it: display an image in your browser using the camera's internal web interface, inspect the source code of the web page, and you get the URL. For the AXIS cameras I use, the URL is typically

http://<name>/axis-cgi/jpg/image.cgi
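To summarize the keys of a camera entry in one place, here is a plain C++ struct mirroring a `/History/Images/<name>` subtree, plus a helper that builds the AXIS snapshot URL pattern quoted above. The value types, the default values, the assumption that the subtree name equals the camera name, and the names `image_camera`, `odb_path` and `axis_url` are all illustrative assumptions, not definitions taken from MIDAS.

```cpp
#include <string>

// Mirrors the keys of one /History/Images/<name> entry (types and defaults assumed)
struct image_camera {
   std::string name;                // Name: camera name, assumed to match the subtree name
   bool enabled = true;             // Enabled: read out this camera
   std::string url;                 // URL: where to fetch an image
   int period = 60;                 // Period: seconds between images
   int storage_hours = 0;           // Storage hours: 0 means keep forever
   std::string extension = ".jpg";  // Extension: ".jpg" or ".png"
   std::string timescale = "8h";    // Timescale: initial horizontal time scale
};

// ODB location of a camera entry
std::string odb_path(const image_camera &cam) {
   return "/History/Images/" + cam.name;
}

// Snapshot URL for an AXIS camera reachable under `host`
std::string axis_url(const std::string &host) {
   return "http://" + host + "/axis-cgi/jpg/image.cgi";
}
```

For a hypothetical camera host `cam1.example.org`, `axis_url("cam1.example.org")` yields `http://cam1.example.org/axis-cgi/jpg/image.cgi`.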

For the Netatmo cameras I have at home (which I used during development in my home office), the procedure is more complicated, but you can google it. The logger is now linked against the CURL library to fetch images, so it also supports https://. If libcurl is not installed on your system, the image history functionality will be disabled.

I have tested the system for a few days now and it seems stable, which however does not mean that it is bug-free. So please report back any issues. The change is committed to the current develop branch.

I hope this extension helps all those people who are forced to do more remote monitoring of experiments during these times.

Best,

Stefan |

| Attachment 1: Screenshot_2020-06-24_at_17.21.11_.png

|

|

|

1959

|

28 Jun 2020 |

Konstantin Olchanski | Info | mhttpd https support openssl -> mbedtls | For password protection of midas web pages, https is required: good old http with passwords transmitted in the clear is no longer considered secure. The latest recommendation is to run mhttpd behind an industry-standard https proxy, for example apache httpd. These proxies provide built-in password protection and integrate with certbot for automatic renewal of https certificates.

That said, for a long time now mhttpd provides native https support through the

mongoose web server library and the openssl cryptography library.

Unfortunately, for years now, we have been running into trouble with the midas

build process bombing out due to inconsistent versions and locations of system-

provided and user-installed openssl libraries. Despite our best efforts (and

through the switch to cmake!) these problems keep coming back and coming back.

Luckily, latest versions of mongoose support the mbedtls cryptography library. I

have tested it and it works well enough for me to switch the MIDAS default build

from "openssl if found" to "mbedtls if-asked-for-by-user".

Starting with commit e7b02f9, cmake builds do not look for and do not try to use

openssl. mhttpd is built without support for https. This is consistent with the

recommendation to run it behind an apache httpd password protected https proxy.

To enable https support using mbedtls, run "make mbedtls". This will "git clone"

the mbedtls library and add it to the midas build. mhttpd will be built with

https support enabled.

To disable mbedtls support, use "make cmake NO_MBEDTLS=1" or run "make

clean_mbedtls" (this will remove the mbedtls sources from the midas build).

To restore previous use of openssl, set the cmake variable "USE_OPENSSL".

In my test, mhttpd with https through mbedtls and a letsencrypt certificate gained a score of "A" from SSLlabs (very good).

(You have to use progs/mtcpproxy to run this test: SSLlabs only probes port 443, and mtcpproxy will forward it to mhttpd port 8443. To build it, run "make mtcpproxy".)

References:

https://github.com/cesanta/mongoose

https://github.com/ARMmbed/mbedtls

K.O. |

|

1960

|

28 Jun 2020 |

Konstantin Olchanski | Info | mhttpd https support openssl -> mbedtls | To add: using https with either openssl or mbedtls requires obtaining an https certificate. This can be self-signed, signed by a higher authority, or issued by the "let's encrypt" project.

mhttpd looks for this certificate in the file ssl_cert.pem.

If this file does not exist, mhttpd will print the instructions for creating it using openssl (self-signed) or

using certbot (instantaneously and automatically issued let's encrypt certificate).

The certbot route is recommended:

1) (as root) set up certbot (e.g. see my CentOS and Ubuntu instructions on DAQWiki)

2) (as root) copy fullchain.pem and privkey.pem from /etc/letsencrypt/live/<your domain name>/ to $MIDASSYS

3) cat fullchain.pem privkey.pem > ssl_cert.pem

4) start mhttpd, watch the first few lines it prints to confirm it found the right certificate file.
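Step 3 is what a renewal script would have to repeat after every certbot renewal. A minimal C++ equivalent of `cat fullchain.pem privkey.pem > ssl_cert.pem` is sketched below; the function name `make_ssl_cert` is hypothetical, and the file locations are whatever the caller passes in. Note that the resulting file contains the private key, so its permissions should stay restrictive.

```cpp
#include <fstream>
#include <string>

// Concatenate the certificate chain and the private key into one PEM file,
// like "cat fullchain.pem privkey.pem > ssl_cert.pem".
// Returns false if an input cannot be opened, is empty, or the write fails.
bool make_ssl_cert(const std::string &fullchain, const std::string &privkey,
                   const std::string &out) {
   std::ifstream chain(fullchain, std::ios::binary);
   std::ifstream key(privkey, std::ios::binary);
   if (!chain || !key)
      return false;
   std::ofstream dst(out, std::ios::binary | std::ios::trunc);
   if (!dst)
      return false;
   dst << chain.rdbuf() << key.rdbuf();  // copy chain first, then key
   dst.close();                          // flush, so write errors are detected
   return !dst.fail();
}
```

Reloading the certificate in a running mhttpd is a separate problem that this sketch does not address.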

The only missing piece for using this in production is lack of integration

with certbot automatic certificate renewal:

- a script has to run for steps (2) and (3) above

- mhttpd has to tell openssl/mbedtls to reload the certificate file (alternative is to automatically restart

mhttpd, bad!).

As an alternative, we can wait for the mongoose web server library and for the mbedtls crypto library to "grow"

certbot-style automatic certificate renewal features. (unavoidable, in my view).

K.O. |

|

1961

|

28 Jun 2020 |

Konstantin Olchanski | Info | Makefile update | I reworked the MIDAS Makefile to simplify things and to remove redundancy with functions

provided by cmake.

When you say "make", the list of options is printed.

The first and main options are "make cmake" and "make cclean" to run the cmake build.

This is my recommended way to build midas: the output of "make cmake" was tuned to provide the information needed to debug build problems (all compiler commands, command line switches and file paths are reported). (Normal "cmake VERBOSE=1" is tuned for debugging of cmake and for maximum obfuscation of problems building the actual project.)

Build options are implemented through cmake variables:

options that can be added to "make cmake":

NO_LOCAL_ROUTINES=1 NO_CURL=1

NO_ROOT=1 NO_ODBC=1 NO_SQLITE=1 NO_MYSQL=1 NO_SSL=1 NO_MBEDTLS=1

NO_EXPORT_COMPILE_COMMANDS=1

for example "make cmake NO_ROOT=1" to disable auto-detection of ROOT.

Two more make targets create reduced builds of midas:

"make mini" builds a subset of midas suitable for building frontend programs. Big programs

like mlogger and mhttpd are excluded, optional components like CURL or SQLITE are not needed.

"make remoteonly" builds a subset of midas suitable for building remotely connected

frontends. Big parts of midas are excluded, many system-dependent functions are excluded,

etc. This is intended for embedded applications, such as fpga, uclinux, etc.

But wait, there is more. Here is the full list:

daqubuntu:midas$ make

Usage:

make cmake --- full build of midas

make cclean --- remove everything build by make cmake

options that can be added to "make cmake":

NO_LOCAL_ROUTINES=1 NO_CURL=1

NO_ROOT=1 NO_ODBC=1 NO_SQLITE=1 NO_MYSQL=1 NO_SSL=1 NO_MBEDTLS=1

NO_EXPORT_COMPILE_COMMANDS=1

make dox --- run doxygen, results are in ./html/index.html

make cleandox --- remove doxygen output

make htmllint --- run html check on resources/*.html

make test --- run midas self test

make mbedtls --- enable mhttpd support for https via the mbedtls https library

make update_mbedtls --- update mbedtls to latest version

make clean_mbedtls --- remove mbedtls from this midas build

make mtcpproxy --- build the https proxy to forward root-only port 443 to mhttpd https port 8443

make mini --- minimal build, results are in linux/{bin,lib}

make cleanmini --- remove everything build by make mini

make remoteonly --- minimal build, remote connetion only, results are in linux-remoteonly/{bin,lib}

make cleanremoteonly --- remove everything build by make remoteonly

make linux32 --- minimal x86 -m32 build, results are in linux-m32/{bin,lib}

make clean32 --- remove everything built by make linux32

make linux64 --- minimal x86 -m64 build, results are in linux-m64/{bin,lib}

make clean64 --- remove everything built by make linux64

make linuxarm --- minimal ARM cross-build, results are in linux-arm/{bin,lib}

make cleanarm --- remove everything built by make linuxarm

make clean --- run all 'clean' commands

daqubuntu:midas$

K.O. |

|

1963

|

15 Jul 2020 |

Stefan Ritt | Info | Makefile update | Please note that you can also compile midas in the standard cmake way with

$ mkdir build

$ cd build

$ cmake ..

$ make install

in the root midas directory. You might have to use "cmake3" on some systems.

Stefan |

|

1964

|

15 Jul 2020 |

Stefan Ritt | Info | Minimal CMakeLists.txt for your midas front-end | Since a few people asked me, here is a "minimal" CMakeLists.txt file for a user-written front-end

program "myfe":

---------------------------

cmake_minimum_required(VERSION 3.0)

project(myfe)

# Check for MIDASSYS environment variable

if (NOT DEFINED ENV{MIDASSYS})

message(SEND_ERROR "MIDASSYS environment variable not defined.")

endif()

set(CMAKE_CXX_STANDARD 11)

set(MIDASSYS $ENV{MIDASSYS})

if (${CMAKE_SYSTEM_NAME} MATCHES Linux)

set(LIBS -lpthread -lutil -lrt)

endif()

add_executable(myfe myfe.cxx)

target_include_directories(myfe PRIVATE ${MIDASSYS}/include)

target_link_libraries(myfe ${MIDASSYS}/lib/libmfe.a ${MIDASSYS}/lib/libmidas.a ${LIBS}) |

|

1965

|

07 Aug 2020 |

Konstantin Olchanski | Info | update of MYSQL history documentation | I updated the documentation for setting up a MYSQL (MariaDB) database for

recording MIDAS history: https://midas.triumf.ca/MidasWiki/index.php/History_System#Write_MYSQL-history_events

One thing to note: the "writer" user must have the "INDEX" permission, otherwise

many things will not work correctly.

Included are the instructions for importing existing *.hst history files into the SQL database: mh2sql --mysql mysql_writer.txt *.hst

Let me know if there is interest in adding support for writing into a PostgreSQL database. We used to support both MySQL and Postgres through the ODBC library, but in the new code, each database has to be supported through its native API. There is code for SQLITE and MYSQL, but no code for Postgres, although it is not too hard to add.

K.O. |

|

1974

|

10 Aug 2020 |

Mathieu Guigue | Info | MidasConfig.cmake usage | As the Midas software is installed using CMake, it can be easily integrated into

other CMake projects using the MidasConfig.cmake file produced during the Midas

installation.

This file points to the location of the Midas include files and libraries using three

variables:

- MIDAS_INCLUDE_DIRS

- MIDAS_LIBRARY_DIRS

- MIDAS_LIBRARIES

Then the CMakeLists file of the new project can use the CMake find_package

functionalities like:

```

find_package (Midas REQUIRED)

if (MIDAS_FOUND)

MESSAGE(STATUS "Found midas: libraries ${MIDAS_LIBRARIES}")

pbuilder_add_ext_libraries (${MIDAS_LIBRARIES})

else (MIDAS_FOUND)

message(FATAL_ERROR "Unable to find midas")

endif (MIDAS_FOUND)

include_directories (${MIDAS_INCLUDE_DIRS})

```

pbuilder_add_ext_libraries is a CMake macro that automatically adds the libraries to the project; it can be found here:

https://github.com/project8/scarab/blob/master/cmake/PackageBuilder.cmake

If this macro is not available, the libraries can be linked to each executable/library similarly to https://midas.triumf.ca/elog/Midas/1964 using:

```

target_link_libraries(myfe ${MIDAS_LIBRARIES} ${LIBS})

```

The current version of MidasConfig.cmake is minimal and could, for example, include a version number: this would allow defining, e.g., a minimal version of Midas needed by the new project. |

|

2004

|

13 Oct 2020 |

Soichiro Kuribayashi | Info | About remote control of front end part of MIDAS on chip | Hello!

My name is Soichiro Kuribayashi and I am a Ph.D. student at Kyoto University.

I'm a T2K collaborator working on Super FGD, which is a new detector in ND280.

I'm a beginner with MIDAS and I've just started to develop the DAQ software for Super FGD with MIDAS.

For the DAQ of Super FGD, we will run the front-end part of MIDAS remotely on a ZYNQ, which is a system on chip.

For this remote control of the front-end part with mserver, we have to mount the home directory of the DAQ PC (CentOS 8) on the Linux system of the ZYNQ.

So I wonder if we should use NFS (Network File System) + NIS (Network Information Service) + autofs for the mounting. Is that correct?

If you have any information or suggestions for the remote control on chip, please let me know.

Best regards,

Soichiro |

|

2005

|

13 Oct 2020 |

Konstantin Olchanski | Info | About remote control of front end part of MIDAS on chip | > My name is Soichiro Kuribayashi and I am a Ph.D. student at Kyoto University.

> I'm a T2K collaborator and working for Super FGD which is new detector in ND280.

Hi! I did much of the DAQ software for the original FGD. I hope I can help.

> For the DAQ of Super FGD, we will run remotely front end part of MIDAS on ZYNQ

> which is system on chip.

This would be the same as the existing FGD. Inside the FGD DCC is a Virtex4 FPGA

with a 300MHz PPC CPU running Linux from a CompactFlash card (Kentaro-san did this

part). On this linux system runs the FGD DCC midas frontend. It connects

to the FGD midas instance using the mserver. This frontend executable is

copied to the DCC using "scp", there is no common nfs mounted home directory.

> For this remote control of front end part with mserver, we have to mount home

> directory of DAQ PC(Cent OS8) on that of Linux on ZYNQ.

> So I wonder if we should use NFS(Network file system) + NIS(Network information

> service) + autofs for the mounting. Is it correct?

Since you have a bigger SOC and you can run pretty much a complete linux,

I do recommend that you go this route. During development it is very convenient

to have common home directories on the main machine and on the frontend fpga

machines.

But this is not necessary. The midas mserver connection does not require a common (nfs-mounted) home directory; you can copy the files to the frontend fpga using scp and rsync, and you can use the gdb "remote debugger" function.

I can also suggest that on your frontend SOC/FPGA machine, you boot linux

using the "nfs-root" method. This way, the local flash memory only

contains a boot loader (and maybe the linux kernel image, depending on

bootloader limitations). The rest of the linux rootfs can be on your

central development machine. This way management of flash cards,

confusion with different contents of local flash and need to make backups

of frontend machines is much reduced.

If you use a fast SSD and ZFS with deduplication, you will also get a good performance gain (NFS over a 1 GigE network to a server with a fast SSD works much better than the very slow SD/MMC/NAND flash).

I can point you to some of my documentation how we do this.

>

> If you have any information or any suggestion for the remote control on chip,

> please let me know.

>

I would say you are on a good track. For early development on just one board,

pretty much any way you do it will work, but once you start scaling up

beyond 3-4-5 frontends, you will start seeing benefits from common NFS-mounted

home directories, NFS-root booted linux, etc.

And of course you may want to study the existing ND280/FGD DAQ. I hope you

have access to the running system at Jparc. If not, I have a copy of

pretty much everything (except for running hardware, it is stored in the basement,

dead) and I can give you access.

P.S. This reminds me that the cascade software from ND280 (the key part

for connecting the FGD, the TPC, the slow controls & etc into one experiment)

was never merged into the midas repository. I opened a ticket for this,

now we will not forget again:

https://bitbucket.org/tmidas/midas/issues/291/import-cascase-frontend-from-t2k-nd280-fgd

K.O. |

|

2006

|

13 Oct 2020 |

Soichiro Kuribayashi | Info | About remote control of front end part of MIDAS on chip | Dear Konstantin,

Thank you very much for your reply and detailed information.

I would appreciate it if you could help us.

> I can also suggest that on your frontend SOC/FPGA machine, you boot linux

> using the "nfs-root" method. This way, the local flash memory only

> contains a boot loader (and maybe the linux kernel image, depending on

> bootloader limitations). The rest of the linux rootfs can be on your

> central development machine. This way management of flash cards,

> confusion with different contents of local flash and need to make backups

> of frontend machines is much reduced.

As you said, we can run a complete Linux (Ubuntu 16) on the ZYNQ, and I'm using a common NFS system now. However, I didn't know the "nfs-root" method you mentioned, and it seems to be a reasonable way to share just the Linux rootfs.

First of all, I will try this method on a simpler system.

> If you use a fast SSD and ZFS with deduplication, you will also have good

> performance gain (NFS over 1gige network to server with fast SSD works

> so much better compared to the very slow SD/MMC/NAND flash).

>

> I can point you to some of my documentation how we do this.

I'm concerned about such performance, and I have roughly checked the performance with common NFS over a gigabit Ethernet network and my DAQ PC (data transfer rate ~ O(10) MByte/sec). However, I didn't know about ZFS, nor how we can gain performance with a fast SSD and ZFS.

If possible, please point me to your documentation on how to do this.

> I would say you are on a good track. For early development on just one board,

> pretty much any way you do it will work, but once you start scaling up

> beyound 3-4-5 frontends, you will start seeing benefits from common NFS-mounted

> home directories, NFS-root booted linux, etc.

I'm developing with just one board and a common NFS mount now. I'm looking forward to seeing such benefits when I use multiple frontends.

> And of course you may want to study the existing ND280/FGD DAQ. I hope you

> have access to the running system at Jparc. If not, I have a copy of

> pretty much everything (except for running hardware, it is stored in the basement,

> dead) and I can give you access.

I don't have access to the system at Jparc, but Nick has told us where the FGD DAQ code is.

Is the URL below the complete code of the FGD DAQ?

https://git.t2k.org/hastings/fgddaq/-/tree/master

Best regards,

Soichiro |

|