11 Feb 2014, Randolf Pohl, Forum, Huge events (>10MB) every second or so

|

I'm looking into using MIDAS for an experiment that creates one large event

(20MB or more) every second.

Q1: It looks like I should use EQ_FRAGMENTED. Has this feature been in use

recently? Is it known to work/not work?

More specifically, the computer should initiate a 1 second data taking, start to

suck the data out of the electronics (which may take a while), change some

experimental parameters, and start over.

Q2: What's the best way to do this? EQ_PERIODIC?

I cannot guarantee that the time required to read the hardware has an upper bound.

In a standalone-prog I would simply use a big loop and let the machine execute

it as fast as it can: 1.1s, 1.5s, 1.1s, 1.3s, 2.5s, ..... depending on the HW

deadtimes.

Will this work with EQ_PERIODIC?

(Sorry for these maybe stupid questions, but I have so far only used MIDAS for

externally generated events, with <32kB event size).

Thanks a lot,

Randolf |

11 Feb 2014, Stefan Ritt, Forum, Huge events (>10MB) every second or so

|

> I'm looking into using MIDAS for an experiment that creates one large event

> (20MB or more) every second.

>

> Q1: It looks like I should use EQ_FRAGMENTED. Has this feature been in use

> recently? Is it known to work/not work?

>

> More specifically, the computer should initiate a 1 second data taking, start to

> such the data out of the electronics (which may take a while), change some

> experimental parameters, and start over.

>

> Q2: What's the best way to do this? EQ_PERIODIC?

> I cannot guarantee that the time required to read the hardware has an upper bound.

> In a standalone-prog I would simply use a big loop and let the machine execute

> it as fast as it can: 1.1s, 1.5s, 1.1s, 1.3s, 2.5s, ..... depending on the HW

> deadtimes.

> Will this work with EQ_PERIODIC?

>

> (Sorry for these maybe stupid questions, but I have so far only used MIDAS for

> externally generated events, with <32kB event size).

>

>

> Thanks a lot,

>

> Randolf

Hi Randolf,

EQ_FRAGMENTED is mostly historical, from the days when computers had a few MB of memory and you had to play special tricks to get large data buffers through. Today I

would just use EQ_PERIODIC and increase the MIDAS maximum event size to your needs. For details look here:

https://midas.triumf.ca/MidasWiki/index.php/Event_Buffer

The front-end scheduler is asynchronous, which means that your readout is called when the given period (1 second) has elapsed. If the readout takes longer

than 1 s, the scheduler will (hopefully) call your readout again immediately after the event has been sent. So you automatically get your maximum data rate. At MEG, we

use 2 MB events at 10 Hz, so a 20 MB/sec data rate should not be a problem on decent computers.
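
The scheduling behaviour described above can be modelled in a few lines. This is a stand-alone sketch, not the MIDAS scheduler: the function name and its parameters are made up for illustration, and it simply assumes that a readout starts at the later of "period elapsed" and "previous event sent".

```c
#include <assert.h>

/* Hypothetical model, not MIDAS code: given when the previous readout
   started, how long it took, and the configured period (all in ms),
   return the time at which the next readout can start. */
long next_readout_start(long prev_start_ms, long readout_ms, long period_ms)
{
    assert(readout_ms >= 0 && period_ms > 0);
    long period_due = prev_start_ms + period_ms;   /* the period has elapsed */
    long hw_free    = prev_start_ms + readout_ms;  /* previous event was sent */
    return (hw_free > period_due) ? hw_free : period_due;
}
```

With a 1000 ms period, a readout that takes 2500 ms pushes the next start to 2500 ms after the previous one, so under this model the cycle time follows the hardware dead time, much like the free-running loop from the original question.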

Best,

Stefan |

18 Feb 2014, Konstantin Olchanski, Forum, Huge events (>10MB) every second or so

|

> I'm looking into using MIDAS for an experiment that creates one large event

> (20MB or more) every second.

Hi, there - 20 Mbyte event at 1/sec is not so large these days. (Well, depending on your hardware).

Using typical 1-2 year old PC hardware, 20 M/sec to local disk should work right away. Sending data from a

remote front end (through the mserver), or writing to a remote disk (NFS, etc) - will of course require a GigE

network connection.

By default, MIDAS is configured for using about 1-2 Mbyte events, so for your case, you will need to:

- increase the event size limits in your frontend,

- increase /Experiment/MAX_EVENT_SIZE in ODB

- increase the size of the SYSTEM event buffer (/Experiment/Buffer sizes/SYSTEM in ODB)

I generally recommend sizing the SYSTEM event buffer to hold a few seconds worth of data (to

accommodate any delays in writing to local disk - competing reads, internal delays of the disks, etc).

So for 20 M/s, the SYSTEM buffer size should be about 40-60 Mbytes.

For your case, you also want to buffer 3-5-10 events, so the SYSTEM buffer size would be between 100 and

200 MBytes.

Assuming you have between 8-16-32 GBytes of RAM, this should not be a problem.
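
The two rules of thumb above (a few seconds of data, and a handful of whole events) can be combined in a small helper. This is only a sketch of the arithmetic; the function name and parameters are illustrative and not part of MIDAS.

```c
#include <assert.h>
#include <stddef.h>

/* Illustrative sizing helper: returns the larger of
   "seconds_buffered worth of data" and "events_buffered whole events". */
size_t system_buffer_bytes(size_t event_bytes, double events_per_sec,
                           double seconds_buffered, size_t events_buffered)
{
    assert(event_bytes > 0 && events_per_sec > 0.0);
    size_t by_time  = (size_t)(event_bytes * events_per_sec * seconds_buffered);
    size_t by_count = event_bytes * events_buffered;
    return by_time > by_count ? by_time : by_count;
}
```

For 20 MiB events at 1 Hz, three seconds of data is 60 MiB and five events is 100 MiB, so the helper returns 100 MiB, the lower end of the range quoted above.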

On the other hand, if you are running on a low-power ("green") ARM system with 1 Gbyte of RAM and a

1GHz CPU, you should be able to handle the data rate of 20 Mbytes/sec, as long as your network and

storage can handle it - I see GigE ethernet running at about 30-40 Mbytes/sec, so you should be okay,

but local storage to SD flash is only about 10 Mbytes/sec - too slow. You can try USB-attached HDD or SSD,

this should run at up to 30-40 Mbytes/sec. I would expect no problems with this rate from MIDAS, as long

as you can fit into your 1 GByte of RAM - obviously your SYSTEM buffer will have to be a little smaller than

on a full-featured PC.

More information on MIDAS event size limits is here (as already reported by Stefan)

https://midas.triumf.ca/MidasWiki/index.php/Event_Buffer

Let us know how it works out for you.

K.O. |

01 Mar 2014, Randolf Pohl, Forum, Huge events (>10MB) every second or so

|

Works, and here is how I did it. The attached example is based on the standard MIDAS

example in "src/midas/examples/experiment".

My somewhat unsorted notes, haven't really tweaked the numbers. But it WORKS.

(1) mlogger writes "last.xml" (hard-coded!) which takes an awful amount of time

as it writes the complete ODB containing the 10MB bank!

just comment out

// odb_save("last.xml");

in mlogger.cxx, function

INT tr_start(INT run_number, char *error)

(line ~3870 in mlogger rev. 5377, .cxx-file included)

(2) frontend.c:

* the most important declarations are

/* BIG_DATA_BYTES is the data in 1 bank

BIG_EVENT_SIZE is the event size. It's a bit larger than the bank size

because MIDAS needs to add some header bytes, I think

*/

#define BIG_DATA_BYTES (10*1024*1024) // 10 MB

#define BIG_EVENT_SIZE (BIG_DATA_BYTES + 100)

/* maximum event size produced by this frontend */

INT max_event_size = BIG_EVENT_SIZE;

/* maximum event size for fragmented events (EQ_FRAGMENTED) */

INT max_event_size_frag = 5 * BIG_EVENT_SIZE;

/* buffer size to hold 10 events */

INT event_buffer_size = 10 * BIG_EVENT_SIZE;

* bk_init() can hold at most 32kByte size events! Use bk_init32() instead.

* complete frontend.c is attached

(3) in an xterm do

# . setup.sh

# odbedit -s 41943040

(first invocation of odbedit must create large enough odb,

otherwise you'll get "odb full" errors)

(4) odbedit> load big.odb

(attached). Essentials are:

/Experiment/MAX_EVENT_SIZE = 20971520

/Experiment/Buffer sizes/SYSTEM = 41943040 <- at least 2 events!

To avoid excessive latencies when starting/stopping a run, do

/Logger/ODB Dump = no

/Logger/Channels/0/Settings/ODB Dump = no

and create an Equipment Tree to make the mlogger happy

(5) a few more xterms, always ". setup.sh":

# mlogger_patched (see (1))

# ./frontend (attached)

(6) in your odbedit (4) say "start". You should fill your disk rather quickly. |
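
The size relations these notes rely on can be written down as a small check. This is a sketch, not MIDAS code: the helper is hypothetical, and the "at least 2 events" rule is read here as twice /Experiment/MAX_EVENT_SIZE, which matches the numbers in step (4).

```c
#include <assert.h>
#include <stdbool.h>

#define BIG_DATA_BYTES (10 * 1024 * 1024)     /* payload of one bank, from the notes */
#define BIG_EVENT_SIZE (BIG_DATA_BYTES + 100) /* plus header allowance, from the notes */

/* Hypothetical helper:
   frontend_max  - max_event_size compiled into the frontend
   odb_max_event - /Experiment/MAX_EVENT_SIZE
   odb_system    - /Experiment/Buffer sizes/SYSTEM */
bool sizes_consistent(long frontend_max, long odb_max_event, long odb_system)
{
    assert(frontend_max > 0);
    if (frontend_max > odb_max_event)    /* frontend event must fit the ODB limit */
        return false;
    if (odb_system < 2 * odb_max_event)  /* buffer must hold at least 2 events */
        return false;
    return true;
}
```

With the values from big.odb, `sizes_consistent(BIG_EVENT_SIZE, 20971520, 41943040)` holds; halving the SYSTEM buffer makes it fail.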

01 Mar 2014, Stefan Ritt, Forum, Huge events (>10MB) every second or so

|

> Works, and here is how I did it. The attached example is based on the standard MIDAS

> example in "src/midas/examples/experiment".

If you have such huge events, it does not make sense to put them into the ODB. The size needs to be increased (as

you realized correctly) and the run stop takes long if you write last.xml. So just remove the RO_ODB flag in the

frontend program and you won't have these problems.
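
In the frontend's equipment definition, the "read on" field is a bit mask, so removing RO_ODB amounts to clearing one bit. The sketch below uses made-up DEMO_ constants to show the idea; the real RO_* values must come from midas.h, and in practice one would simply delete `| RO_ODB` from the equipment entry in frontend.c.

```c
/* Illustrative flag values only; the real RO_* definitions live in midas.h. */
#define DEMO_RO_RUNNING (1u << 0)
#define DEMO_RO_ODB     (1u << 8)

/* Return the read-on mask with the "copy event to ODB" bit cleared. */
unsigned drop_odb_copy(unsigned read_on)
{
    return read_on & ~DEMO_RO_ODB;
}
```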

/Stefan |

23 Feb 2014, Andre Frankenthal, Bug Report, Installation failing on Mac OS X 10.9 -- related to strlcat and strlcpy

|

Hi,

I don't know if this actually fits the Bug Report category. I've been trying to install Midas on my Mac OS

Mavericks and I keep getting errors like "conflicting types for '___builtin____strlcpy_chk' ..." and similarly for

strlcat. I googled a bit and I think the problem might be that in Mavericks strlcat and strlcpy are already

defined in string.h, and so there might be a redundant definition somewhere. I'm not sure what the best

way to fix this would be though. Any help would be appreciated.

Thanks,

Andre |

27 Feb 2014, Konstantin Olchanski, Bug Report, Installation failing on Mac OS X 10.9 -- related to strlcat and strlcpy

|

>

> I don't know if this actually fits the Bug Report category. I've been trying to install Midas on my Mac OS

> Mavericks and I keep getting errors like "conflicting types for '___builtin____strlcpy_chk' ..." and similarly for

> strlcat. I googled a bit and I think the problem might be that in Mavericks strlcat and strlcpy are already

> defined in string.h, and so there might be a redundant definition somewhere. I'm not sure what the best

> way to fix this would be though. Any help would be appreciated.

>

We have run into this problem - MacOS 10.9 plays funny games with definitions of strlcpy() & co - and it has been fixed since last Summer.

For the record, current MIDAS builds just fine on MacOS 10.9.2.

For a pure test, try the instructions posted at midas.triumf.ca:

cd $HOME

mkdir packages

cd packages

git clone https://bitbucket.org/tmidas/midas

git clone https://bitbucket.org/tmidas/mscb

git clone https://bitbucket.org/tmidas/mxml

cd midas

make

K.O. |

27 Feb 2014, Andre Frankenthal, Bug Report, Installation failing on Mac OS X 10.9 -- related to strlcat and strlcpy

|

> >

> > I don't know if this actually fits the Bug Report category. I've been trying to install Midas on my Mac OS

> > Mavericks and I keep getting errors like "conflicting types for '___builtin____strlcpy_chk' ..." and similarly for

> > strlcat. I googled a bit and I think the problem might be that in Mavericks strlcat and strlcpy are already

> > defined in string.h, and so there might be a redundant definition somewhere. I'm not sure what the best

> > way to fix this would be though. Any help would be appreciated.

> >

>

> We have run into this problem - MacOS 10.9 plays funny games with definitions of strlcpy() & co - and it has been fixed since last Summer.

>

> For the record, current MIDAS builds just fine on MacOS 10.9.2.

>

> For a pure test, try the instructions posted at midas.triumf.ca:

>

> cd $HOME

> mkdir packages

> cd packages

> git clone https://bitbucket.org/tmidas/midas

> git clone https://bitbucket.org/tmidas/mscb

> git clone https://bitbucket.org/tmidas/mxml

> cd midas

> make

>

> K.O.

Thanks, it works like a charm now! I must have obtained an outdated version of Midas.

Andre |

23 Feb 2014, William Page, Forum, db_check_record() for verifying structure of ODB subtree

|

Hi,

I have been trying to use db_check_record() in order to verify that a subtree in the ODB has the correct

variables, variable order, and overall size. I'm going off the documentation

(https://midas.psi.ch/htmldoc/group__odbfunctionc.html) and use a string to compare against the ODB

structure. Since the string format is not specified for db_check_record(), I'm formatting my string

according to the db_create_record() example.

Instead of db_check_record() checking the entire ODB subtree against all the variables represented in the

string, I'm finding that only the first variable is checked. The later variables in the string can be

misspelled, out of order, or inexistent, and db_check_record() will still return 1.

Am I using db_check_record incorrectly?

Thank you for any help with this issue.

I also believe that some of the documentation for db_check_record is outdated. For example, init_string

is referenced in the documentation but isn't part of the function definition. |

21 Feb 2014, Konstantin Olchanski, Info, Javascript ODBMLs(), modified ODBMCopy() JSON encoding

|

I made a few minor modifications to the ODB JSON encoder and implemented a javascript "ls" function to

report full ODB directory information as available from odbedit "ls -l" and the mhttpd odb editor page.

Using the new ODBMLs(), the existing ODBMCreate(), ODBMDelete() & etc a complete ODB editor can be

written in Javascript (or in any other AJAX-capable language).

While implementing this function, I found some problems in the ODB JSON encoder when handling

symlinks, also some problems with handling symlinks in odbedit and in the mhttpd ODB editor - these are

now fixed.

Changes to the ODB JSON encoder:

- added the missing information to the ODB KEY (access_mode, notify_count)

- added symlink target information ("link")

- changed encoding of simple variable (i.e. jcopy of /experiment/name) - when possible (i.e. ODB KEY

information is omitted), they are encoded as bare values (before, they were always encoded as structures

with variable names, etc). This change makes it possible to implement ODBGet() and ODBMGet() using the

AJAX jcopy method with JSON data encoding. Bare value encoding in ODBMCopy()/AJAX jcopy is enabled by

using the "json-nokeys-nolastwritten" encoding option.

All these changes are supposed to be backward compatible (encoding used by ODBMCopy() for simple

values and "-nokeys-nolastwritten" was previously not documented).

Documentation was updated:

https://midas.triumf.ca/MidasWiki/index.php/Mhttpd.js

K.O. |

23 Sep 2013, Stefan Ritt, Info, Custom page header implemented

|

Due to popular request, I implemented a custom header for mhttpd. This allows one to inject some HTML code

to be shown on top of the menu bar on all mhttpd pages. One possible application is to bring back the old

status line with the name of the current experiment, the actual time and the refresh interval.

To use this feature, one can put a new entry into the ODB under

/Custom/Header

which can be either a string (to show some short HTML code directly) or the name of a file containing some

HTML code. If /Custom/Path is present, that path is used to locate the header file. A simple header file to

recreate the GOT look (good-old-times) is here:

<div id="footerDiv" class="footerDiv">

<div style="display:inline; float:left;">MIDAS experiment "Test"</div>

<div id="refr" style="display:inline; float:right;"></div>

</div>

<script type="text/javascript">

var r = document.getElementById('refr');

var now = new Date();

var c = document.cookie.split('midas_refr=');

r.innerHTML = now.toString() + ' ' + 'Refr:' + c.pop().split(';').shift();

</script>

The JavaScript code is used to retrieve the midas_refr cookie which stores the refresh interval and displays

it together with the current time.

Another application of this feature might be to check certain values in the ODB (via the ODBGet function)

and show some important status or error condition.

/Stefan |

12 Feb 2014, Stefan Ritt, Info, Custom page header implemented

|

As reported in the bug tracker, the proposed header does not work if no specific (= different from the default 60 sec.) update period is specified,

since then no cookie is present. Here is the updated code which works for all cases:

<div id="footerDiv" class="footerDiv">

<div style="display:inline; float:left;">MIDAS experiment "Test"</div>

<div id="refr" style="display:inline; float:right;"></div>

</div>

<script type="text/javascript">

var r = document.getElementById('refr');

var now = new Date();

var refr;

if (document.cookie.search('midas_refr') == -1)

refr = 60;

else {

var c = document.cookie.split('midas_refr=');

refr = c.pop().split(';').shift();

}

r.innerHTML = now.toString() + ' ' + 'Refr:' + refr;

</script>

/Stefan |

18 Feb 2014, Konstantin Olchanski, Info, Custom page header implemented

|

I am not sure what to do with the javascript snippet - I understand it should be somehow connected to /Custom/Header, but if I create the /Custom/Header string, I cannot put this snippet

into this string using odbedit - if I try to cut&paste it into odbedit, it is truncated to the first line - nor using the mhttpd odb editor - when I cut&paste it into the odb editor text entry box, it

is truncated to the first 519 bytes (must be a hard limit somewhere). K.O.

> As reported in the bug tracker, the proposed header does not work if no specific (= different from the default 60 sec.) update period is specified,

> since then no cookie is present. Here is the updated code which works for all cases:

>

>

>

> <div id="footerDiv" class="footerDiv">

> <div style="display:inline; float:left;">MIDAS experiment "Test"</div>

> <div id="refr" style="display:inline; float:right;"></div>

> </div>

> <script type="text/javascript">

> var r = document.getElementById('refr');

> var now = new Date();

> var refr;

> if (document.cookie.search('midas_refr') == -1)

> refr = 60;

> else {

> var c = document.cookie.split('midas_refr=');

> refr = c.pop().split(';').shift();

> }

> r.innerHTML = now.toString() + ' ' + 'Refr:' + refr;

> </script>

>

>

>

> /Stefan |

19 Feb 2014, Stefan Ritt, Info, Custom page header implemented

|

> I am not sure what to do with the javascript snippet

Just read elog:908, it tells you to put this into a file, name it header.html for example, and put into the ODB:

/Custom/Header [string32] = header.html

make sure that you put the file into the directory indicated by /Custom/Path.

Cheers,

Stefan |

29 Jan 2014, Konstantin Olchanski, Bug Fix, make dox

|

The capability to generate doxygen documentation of MIDAS was restored.

Use "make dox" and "make cleandox",

find generated documentation in ./html,

look at it via "firefox html/index.html".

The documentation is not generated by default - it takes quite a long time to build all the call graphs.

And the call graphs are what make this documentation useful - without some visual graphical

representation it is quite difficult to understand some parts of MIDAS. Both caller and callee graphs are

generated.

Note that doxygen documentation for the javascript functions in mhttpd.js is also generated, making a

handy reference in addition to the full documentation on the MIDAS Wiki.

K.O. |

30 Jan 2014, Stefan Ritt, Bug Fix, make dox

|

> The capability to generate doxygen documentation of MIDAS was restored.

>

> Use "make dox" and "make cleandox",

> find generated documentation in ./html,

> look at it via "firefox html/index.html".

>

> The documentation is not generated by default - it takes quite a long time to build all the call graphs.

>

> And the call graphs is what makes this documentation useful - without some visual graphical

> representation it is quite difficult to understand some parts of MIDAS. Both caller and callee graphs are

> generated.

>

> Note that doxygen documentation for the javascript functions in mhttpd.js is also generated, making a

> handy reference in addition to the full documentation on the MIDAS Wiki.

>

> K.O.

To generate the files, you need doxygen installed which not everybody has. Is there a web site where one can see the generated graphs?

/Stefan |

18 Feb 2014, Konstantin Olchanski, Bug Fix, make dox

|

> > The capability to generate doxygen documentation of MIDAS was restored.

> >

> > Use "make dox" and "make cleandox",

> > find generated documentation in ./html,

> > look at it via "firefox html/index.html".

> >

>

> To generate the files, you need doxygen installed which not everybody has.

On most Linux systems, doxygen is easy to install. Red Hat instructions are here:

http://www.triumf.info/wiki/DAQwiki/index.php/SLinstall#Install_packages_needed_for_QUARTUS.2C_ROOT.2C_EPICS_and_MIDAS_DAQ

On MacOS, doxygen is easy to install via macports: sudo port install doxygen

> Is there a web site where one can see the generated graphs?

http://ladd00.triumf.ca/~olchansk/midas/index.html

there is no cron job to update this, but I may update it infrequently.

K.O. |

19 Feb 2014, Stefan Ritt, Bug Fix, make dox

|

> On most Linux systems, doxygen is easy to install. Red Hat instructions are here:

> http://www.triumf.info/wiki/DAQwiki/index.php/SLinstall#Install_packages_needed_for_QUARTUS.2C_ROOT.2C_EPICS_and_MIDAS_DAQ

>

> On MacOS, doxygen is easy to install via macports: sudo port install doxygen

>

> > Is there a web site where one can see the generated graphs?

>

> http://ladd00.triumf.ca/~olchansk/midas/index.html

>

> there is no cron job to update this, but I may update it infrequently.

>

> K.O.

Great, thanks a lot!

-Stefan |

31 Jan 2014, Stefan Ritt, Info, Separation of MSCB subtree

|

Since several projects at PSI need MSCB but not MIDAS, I decided to separate the two repositories. So if you

need MIDAS with MSCB support inside mhttpd, you have to clone MIDAS, MXML and MSCB from bitbucket

(or the local clone at TRIUMF) as described in

https://midas.triumf.ca/MidasWiki/index.php/Main_Page#Download

I tried to fix all Makefiles to link to the new locations, but I'm not sure if I got all. So if something does not

compile please let me know.

-Stefan |

18 Feb 2014, Konstantin Olchanski, Info, Separation of MSCB subtree

|

> Since several projects at PSI need MSCB but not MIDAS, I decided to separate the two repositories. So if you

> need MIDAS with MSCB support inside mhttpd, you have to clone MIDAS, MXML and MSCB from bitbucket

> (or the local clone at TRIUMF) as described in

>

> https://midas.triumf.ca/MidasWiki/index.php/Main_Page#Download

>

> I tried to fix all Makefiles to link to the new locations, but I'm not sure if I got all. So if something does not

> compile please let me know.

>

> -Stefan

After this split, Makefiles used to build experiment frontends need to be modified for the new location of the mscb tree:

replace

$(MIDASSYS)/mscb

with

$(MIDASSYS)/../mscb

K.O. |

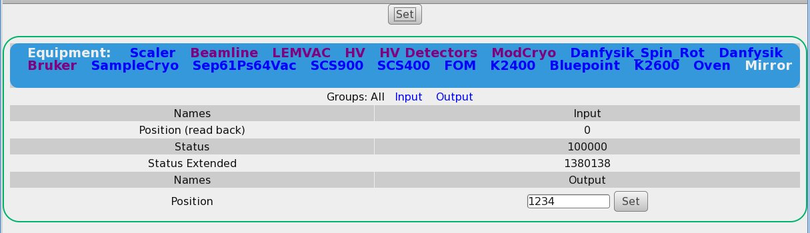

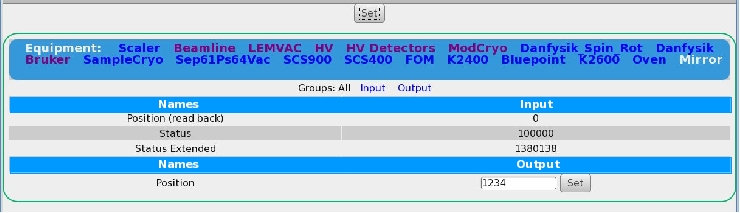

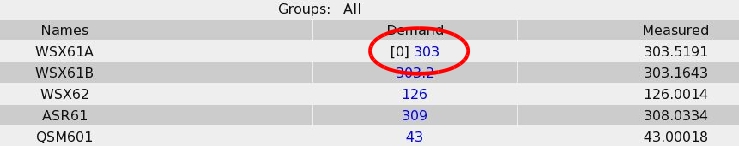

11 Feb 2014, Andreas Suter, Bug Report, mhttpd, etc.

|

I found a couple of bugs in the current mhttpd, midas version: "93fa5ed"

This concerns all browsers I checked (firefox, chrome, internet explorer, opera)

1) When trying to change a value of a frontend using a multi class driver (we

have a lot of them), the field for changing appears, but I cannot get it set!

Neither via the two set buttons (why 2?) nor via return.

It also would be nice, if the css could be changed such that input/output for

multi-driver would be better separated; something along as suggested in

2) If I change a value (generic/hv class driver), the index of the array

remains when changing a value until the next update of the page

3) We are using a web-password. In the current version the password is plain visible when entering.

4) I just copied the header as described here: https://midas.triumf.ca/elog/Midas/908, but I get another result:

It looks as if the wrong cookie is filtered? |

11 Feb 2014, Stefan Ritt, Bug Report, mhttpd, etc.

|

| Andreas Suter wrote: | | I found a couple of bugs in the current mhttpd, midas version: "93fa5ed" |

See my reply on the issue tracker:

https://bitbucket.org/tmidas/midas/issue/18/mhttpd-bugs |

15 Jan 2014, Konstantin Olchanski, Bug Report, MIDAS password protection is broken

|

If you follow the MIDAS documentation for setting up password protection, you will get strange messages:

ladd00:midas$ ./linux/bin/odbedit

[local:testexpt:S]/>passwd <---- setup a password

Password:

Retype password:

[local:testexpt:S]/> exit

ladd00:midas$ odbedit

Password: <---- enter correct password here

ss_semaphore_wait_for: semop/semtimedop(21135376) returned -1, errno 22 (Invalid argument)

ss_semaphore_release: semop/semtimedop(21135376) returned -1, errno 22 (Invalid argument)

[local:testexpt:S]/>ss_semaphore_wait_for: semop/semtimedop(21037069) returned -1, errno 43 (Identifier removed)

The same messages will appear from all other programs - mhttpd, etc. They will be printed about every 1 second.

So what do they mean? They mean what they say - the semaphore is not there, it is easy to check using "ipcs" that semaphores with

those ids do not exist. In fact all the semaphores are missing (the ODB semaphore is eventually recreated, so at least ODB works

correctly).

In this situation, MIDAS will not work correctly.

What is happening?

- cm_connect_experiment1() creates all the semaphores and remembers them in cm_set_experiment_semaphore()

- calls cm_set_client_info()

- cm_set_client_info() finds ODB /expt/sec/password, and returns CM_WRONG_PASSWORD

- before returning, it calls db_close_all_databases() and bm_close_all_buffers(), which delete all semaphores (put a print statement in

ss_semaphore_delete() to see this).

- (values saved by cm_set_experiment_semaphore() are stale now).

- (if by luck you have other midas programs still running, the semaphores will not be deleted)

- we are back to cm_connect_experiment1() which will ask for the password, call cm_set_client_info() again and continue as usual

- it will reopen ODB, recreating the ODB semaphore

- (but all the other semaphores are still deleted and values saved by cm_set_experiment_semaphore() are stale)
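
The "stale semaphore id" effect in this sequence can be reproduced outside MIDAS with plain System V calls. The sketch below is not MIDAS code and the function name is made up; it creates a private semaphore, deletes it, and then tries to lock it with the old id. On Linux the failing semop() is expected to report EINVAL, which is the errno 22 seen in the log above.

```c
#include <errno.h>
#include <sys/types.h>
#include <sys/ipc.h>
#include <sys/sem.h>

/* Returns the errno from the failing semop(), 0 if semop() unexpectedly
   succeeded, or -1 if the semaphore could not be created or removed. */
int stale_semaphore_errno(void)
{
    int semid = semget(IPC_PRIVATE, 1, IPC_CREAT | 0600);
    if (semid < 0)
        return -1;

    /* Plays the role of the cleanup that deletes the semaphores. */
    if (semctl(semid, 0, IPC_RMID) < 0)
        return -1;

    /* A caller that remembered the old id now tries to lock with it. */
    struct sembuf lock = { .sem_num = 0, .sem_op = -1, .sem_flg = IPC_NOWAIT };
    if (semop(semid, &lock, 1) == 0)
        return 0;
    return errno;
}
```

Any code that cached the id before the deletion is in the same position as the caller here, which is why the values saved by cm_set_experiment_semaphore() stop working.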

I thought to improve this by fixing a bug in cm_msg_log() (where the messages are coming from) - it tries to lock the "MSG"

semaphore, but even if it could not lock it, it continues as usual and even calls an unlock at the end. (very bad). For catastrophic

locking failures like this (semaphore is deleted), we usually abort. But if I abort here, I get completely locked out from odb - odbedit

crashes right away and there is no way to do any corrective action other than delete odb and reload it from an xml file.

I know that some experiments use this password protection - why/how does it work there?

I think they are okay because they put critical programs like odbedit, mserver, mlogger and mhttpd into "/expt/sec/allowed

programs". In this case they pass the password check in cm_set_client_info() and the semaphores are not deleted. If any subsequent

program asks for the password, the semaphores survive because mlogger or mhttpd is already running and keeps semaphores from

being deleted.

What a mess.

K.O. |

15 Jan 2014, Konstantin Olchanski, Bug Report, MIDAS password protection is broken

|

> I through to improve this by fixing a bug in cm_msg_log() (where the messages are coming from)

The periodic messages about broken semaphore actually come from al_check(). I put some whining there, too.

K.O. |

05 Feb 2014, Stefan Ritt, Bug Report, MIDAS password protection is broken

|

> If you follow the MIDAS documentation for setting up password protection, you will get strange messages:

This is interesting. When I used it last time (some years ago...) it worked fine. I did not touch this, and now it's broken. Must be related to some modifications of the semaphore system.

Well, anyhow, the problem seems to me to be the db_close_all_databases() call and the re-opening of the ODB. Apparently the db_close_database() call does not clean up the semaphores properly.

Actually there is absolutely no need to close and re-open the ODB upon a wrong password, so I just removed that code and now it works again.

/Stefan |

15 Jan 2014, Konstantin Olchanski, Bug Report, MIDAS Web password broken

|

The MIDAS Web password function is broken - with the web password enabled, I am not prompted for a

password when editing ODB. The password still partially works - I am prompted for the web password

when starting a run. K.O.

P.S. https://midas.triumf.ca/MidasWiki/index.php/Security says "web password" needed for "write access",

but does not specify if this includes editing odb. (I would think so, and I think I remember that it used to). |

05 Feb 2014, Stefan Ritt, Bug Report, MIDAS Web password broken

|

> The MIDAS Web password function is broken - with the web password enabled, I am not prompted for a

> password when editing ODB. The password still partially works - I am prompted for the web password

> when starting a run. K.O.

>

> P.S. https://midas.triumf.ca/MidasWiki/index.php/Security says "web password" needed for "write access",

> but does not specify if this includes editing odb. (I would think so, and I think I remember that it used to).

Didn't we agree to put those issues into the bitbucket issue tracker?

This functionality got broken when implementing the new inline edit functionality. Actually one has to "manually" check for the password. The old way

was that there was a web page asking for the web password, but if we do ODBSet via Ajax there is nobody who could fill out that form. So I added a

"manual" checking into ODBCheckWebPassword(). This will not work for custom pages, but they have their own way to define passwords.

/Stefan |

16 Jan 2014, Konstantin Olchanski, Info, MIDAS and "international characters", UTF-8 and Unicode.

|

I made some tests of MIDAS support for "international characters" and we seem to be in a reasonable

shape.

The standard standard is UTF-8 encoding of Unicode and the MIDAS core is believed to be UTF-8 clean -

one can use "international characters" in ODB names, in ODB values, in filenames, etc.

The web interface had some problems with percent-encoding of ODB URLs, but as of current git version,

everything seems to work okay, as long as the web browser is in the UTF-8 encoding mode. The default

mode is "Western ISO-8859-1" and javascript encodeURIComponent() is mangling some stuff making the

ODB editor not work. Switching to UTF-8 mode seems to fix that.
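
For reference, percent-encoding a UTF-8 string works byte by byte: each byte outside the "unreserved" set becomes %XX. The sketch below is a stand-alone illustration, not code from mhttpd; the function name is made up, and it follows the RFC 3986 unreserved set rather than reproducing encodeURIComponent() exactly.

```c
#include <assert.h>
#include <stddef.h>

/* Percent-encode every byte that is not an RFC 3986 "unreserved" character.
   Returns 0 on success, -1 if dst is too small for the result plus NUL. */
int percent_encode(const char *src, char *dst, size_t dst_size)
{
    static const char hex[] = "0123456789ABCDEF";
    size_t n = 0;
    assert(src != NULL && dst != NULL);
    for (; *src; src++) {
        unsigned char c = (unsigned char)*src;
        int unreserved = (c >= 'A' && c <= 'Z') || (c >= 'a' && c <= 'z') ||
                         (c >= '0' && c <= '9') ||
                         c == '-' || c == '.' || c == '_' || c == '~';
        size_t need = unreserved ? 1 : 3;
        if (n + need + 1 > dst_size)   /* keep room for the trailing NUL */
            return -1;
        if (unreserved) {
            dst[n++] = (char)c;
        } else {
            dst[n++] = '%';
            dst[n++] = hex[c >> 4];
            dst[n++] = hex[c & 0x0F];
        }
    }
    if (n + 1 > dst_size)
        return -1;
    dst[n] = '\0';
    return 0;
}
```

The letter "ä" is the two bytes C3 A4 in UTF-8, so it encodes as %C3%A4. In ISO-8859-1 the same letter is the single byte E4, which is one way a page in the wrong encoding mode can end up producing URLs that do not match the UTF-8 ODB names.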

Perhaps we should make the UTF-8 encoding the default for mhttpd-generated web pages. This should be

okay for TRIUMF - we use English language almost exclusively, but need to check with other labs before

making such a change. I especially worry about PSI because I am not sure if and how they use any of the special

German-language characters.

On the minus side, odbedit does not seem to accept non-English characters at all. Maybe it is easy to fix.

K.O. |

15 Jan 2014, Konstantin Olchanski, Bug Fix, Fixed spurious symlinks to midas.log

|

In some experiments (i.e. DEAP), we see spurious symlinks to midas.log scattered just about everywhere. I

now traced this to an uninitialized variable in cm_msg_log() and it should be fixed now. K.O. |

17 Dec 2013, Stefan Ritt, Info, IEEE Real Time 2014 Call for Abstracts

|

Hello,

I'm co-organizing the upcoming Real Time Conference, which covers also the field of data acquisition, so it might be interesting for people working

with MIDAS. If you have something to report, you could also consider sending an abstract to this conference. It will be located in Nara, Japan. The conference

site is now open at http://rt2014.rcnp.osaka-u.ac.jp/

Best regards,

Stefan Ritt |

16 Dec 2013, Konstantin Olchanski, Bug Fix, Abolished SYNC and ASYNC defines

|

A few months ago, the definitions of SYNC and ASYNC in midas.h were changed away from "0" and "1",

and this caused problems with some event buffer management functions bm_xxx().

For example, when event buffers are getting full, bm_send_event(SYNC) unexpectedly started returning

BM_ASYNC_RETURN instead of waiting for free space, causing unexpected crashes of frontend programs.

Part of the problem was confusion between SYNC/ASYNC used by buffer management (bm_xxx) and by run

transition (cm_transition()) functions. Adding to confusion, documentation of bm_send_event() & co used

FALSE/TRUE while most actual calls used SYNC/ASYNC.

To sort this out, an executive decision was made to abolish the SYNC/ASYNC defines:

For buffer management calls bm_send_event(), bm_receive_event(), etc, please use:

SYNC -> BM_WAIT

ASYNC -> BM_NO_WAIT

For run transitions, please use:

SYNC -> TR_SYNC

ASYNC -> TR_ASYNC

MTHREAD -> TR_MTHREAD

DETACH -> TR_DETACH

K.O. |

16 Dec 2013, Konstantin Olchanski, Info, MIDAS on ARM

|

I added MIDAS Makefile rules for building ARM binaries: "make linuxarm" and "make cleanarm" will create

(and clean) object files, libraries and executables under "linux-arm" using the TI Sitara ARM SDK or the

Yocto SDK ARM cross-compilers (GCC 4.7.x and 4.8.x respectively). (Makefile rules for building PPC

binaries have existed for years).

The hardware we have at TRIUMF are "ARMv7" machines - TI Sitara 335x CPUs (google mityarm) and Altera

Cyclone 5 FPGA ARM (google sockit). (as opposed to the ARMv6 CPU on the RaspberryPi). The software

binary API standard settled by Fedora Linux is "hard float" (as opposed to "soft float" used by older SDKs).

So "ARMv7 hard float" is what we intend to use at TRIUMF, but ARMv5 and soft-float should also work ok,

so please report successes and/or problems to this forum.

K.O. |

28 Nov 2013, Konstantin Olchanski, Info, Audit of fixed size arrays

|

In one of the experiments, we hit a long-standing bug in mdump - there was an array of 32 equipments and if

there were more than 32 entries under /equipment, it would overrun and corrupt memory. Somehow this

only showed up after mdump was switched to c++. The solution was to use std::vector instead of fixed

size array.
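A minimal sketch of the std::vector approach, assuming a simplified entry type. EquipmentEntry and collect_equipment() are hypothetical names for illustration and are not taken from mdump:

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical stand-in for one entry under /equipment; not a MIDAS type.
struct EquipmentEntry {
    std::string name;
    int event_id;
};

// Collect every entry, however many there are. With a fixed
// "EquipmentEntry list[32]" the 33rd entry would be written past the end
// of the array; push_back() grows the vector instead.
std::vector<EquipmentEntry> collect_equipment(const std::vector<std::string>& names)
{
    std::vector<EquipmentEntry> list;
    list.reserve(names.size());
    for (std::size_t i = 0; i < names.size(); i++) {
        EquipmentEntry e;
        e.name = names[i];
        e.event_id = static_cast<int>(i) + 1;  // illustrative numbering only
        list.push_back(e);
    }
    return list;
}
```

Calling collect_equipment() with 40 names yields a vector of 40 entries; no compile-time limit has to be chosen.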

Just in case, I checked other midas programs for fixed size arrays (other than fixed size strings) and found

none. (in midas.c, there is a fixed size array of TR_FIFO[10], but code inspection shows that it cannot

overrun).

I used this script. It can be modified to also identify any strange sized string arrays.

K.O.

#!/usr/bin/perl -w

while (1) {

my $in = <STDIN>;

last unless $in;

#print $in;

$in =~ s/^\s+//;

next if $in =~ /^char/;

next if $in =~ /^static char/;

my $a = $in =~ /(.*)\[(\d+)\]/;

next unless $a;

my $a1 = $1;

my $a2 = $2;

next if $a2 == 0;

next if $a2 == 1;

next if $a2 == 2;

next if $a2 == 3;

#print "[$a] [$a1] [$a2]\n";

print "-> $a1\[$a2\]\n";

}

# end |

20 Nov 2013, Konstantin Olchanski, Bug Report, Too many bm_flush_cache() in mfe.c

|

I was looking at something in the mserver and noticed that for remote frontends, for every periodic event,

there are about 3 RPC calls to bm_flush_cache().

Sure enough, in mfe.c::send_event(), for every event sent, there are 2 calls to bm_flush_cache() (once for

the buffer we used, second for all buffers). Then, for a good measure, the mfe idle loop calls

bm_flush_cache() for all buffers about once per second (even if no events were generated).

So what is going on here? To allow good performance when processing many small events,

the MIDAS event buffer code (bm_send_event()) buffers small events internally, and only after this internal

buffer is full, the accumulated events are flushed into the shared memory event buffer,

where they become visible to the mlogger, mdump and other consumers.

Because of this internal buffering, infrequent small size periodic events can become

stuck for quite a long time, confusing the user: "my frontend is sending events, how come I do not

see them in mdump?"

To avoid this, mfe.c manually flushes these internal event buffers by calling bm_flush_cache().

And I think that works just fine for frontends directly connected to the shared memory, one call to

bm_flush_cache() should be sufficient.
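The buffering behaviour described above can be sketched as a toy model. EventCache is a hypothetical class written for this illustration; it does not mirror the actual MIDAS data structures or sizes:

```cpp
#include <cstddef>
#include <vector>

// Hypothetical model of a sender-side event cache; names and sizes are
// illustrative and do not mirror the MIDAS internals.
class EventCache {
public:
    explicit EventCache(std::size_t capacity) : capacity_(capacity) {}

    // Queue one small event; push everything out only when the cache is full.
    void send(int event) {
        pending_.push_back(event);
        if (pending_.size() >= capacity_)
            flush();
    }

    // Move all pending events to the shared buffer, where consumers see them.
    void flush() {
        shared_.insert(shared_.end(), pending_.begin(), pending_.end());
        pending_.clear();
    }

    std::size_t visible() const { return shared_.size(); }
    std::size_t pending() const { return pending_.size(); }

private:
    std::size_t capacity_;
    std::vector<int> pending_;  // internal cache, invisible to consumers
    std::vector<int> shared_;   // stands in for the shared memory event buffer
};
```

In this model a single infrequent event stays in pending_ until either the cache fills up or somebody calls flush() explicitly, which is the role the manual flush in mfe.c plays.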

But for remote frontends connected through the mserver, it turns out there is a race condition between

sending the event data on one tcp connection and sending the bm_flush_cache() rpc request on another

tcp connection.

I see that the mserver always reads the rpc connection before the event connection, so bm_flush_cache()

is done *before* the event is written into the buffer by bm_send_event(). So the newly

sent event is stuck in the buffer until bm_flush_cache() for the *next* event shows up:

mfe.c: send_event1 -> flush -> ... wait until next event ... -> send_event2 -> flush

mserver: flush -> receive_event1 -> ... wait ... -> flush -> receive_event2 -> ... wait ...

mdump: ... nothing ... -> ... nothing ... -> event1 -> ... nothing ...

Enter the 2nd call to bm_flush_cache in mfe.c (flush all buffers) - now because mserver seems to be

alternating between reading the rpc connection and the event connection, the race condition looks like

this:

mfe.c: send_event -> flush -> flush

mserver: flush -> receive_event -> flush

mdump: ... -> event -> ...

So in this configuration, everything works correctly, the data is not stuck anywhere - but by accident, and

at the price of an extra rpc call.
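The two orderings above can be reproduced with a small single-threaded simulation. This is only a sketch: ToyServer and send_event() are hypothetical, and the only assumption taken from the text is that the server alternates between the rpc connection and the event connection, rpc first:

```cpp
#include <deque>
#include <vector>

// Toy model of the ordering described above; all names are hypothetical.
struct ToyServer {
    std::deque<int> rpc;       // pending flush requests (value unused)
    std::deque<int> events;    // pending event data
    std::vector<int> cache;    // events written but not yet flushed
    std::vector<int> visible;  // events a consumer such as mdump would see

    // Alternate between the two connections, rpc first, until both are empty.
    void poll() {
        while (!rpc.empty() || !events.empty()) {
            if (!rpc.empty()) {        // handle one flush request
                rpc.pop_front();
                visible.insert(visible.end(), cache.begin(), cache.end());
                cache.clear();
            }
            if (!events.empty()) {     // then receive one event
                cache.push_back(events.front());
                events.pop_front();
            }
        }
    }
};

// Frontend side: send one event followed by n_flush flush requests.
void send_event(ToyServer& s, int event, int n_flush) {
    s.events.push_back(event);
    for (int i = 0; i < n_flush; i++)
        s.rpc.push_back(0);
    s.poll();
}
```

With n_flush = 1 the first event only becomes visible when the second event is sent; with n_flush = 2 each event becomes visible within the same call, at the cost of the extra request.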

But what about the periodic 1/second bm_flush_cache() on all buffers? I think it does not quite work

either because the race condition is still there: we send an event, and the first flush may race it and only

the 2nd flush gets the job done, so the delay between sending the event and seeing it in mdump would be

around 1-2 seconds. (no more than 2 seconds, I think). Since users expect their events to show up "right

away", a 2 second delay is probably not very good.

Because periodic events are usually not high rate, the current situation (4 network transactions to send 1

event - 1x send event, 3x flush buffer) is probably acceptable. But this definitely sets a limit on the

maximum rate to 3x (2x?) the mserver rpc latency - without the rpc calls to bm_flush_cache() there

would be no limit - the events themselves are sent through a pipelined tcp connection without

handshaking.

One solution to this would be to implement periodic bm_flush_cache() in the mserver, making all calls to

bm_flush_cache() in mfe.c unnecessary (unless it's a direct connection to shared memory).

Another solution could be to send events with a special flag telling the mserver to "flush the buffer right

away".

P.S. Look ma!!! A race condition with no threads!!!

K.O. |

21 Nov 2013, Stefan Ritt, Bug Report, Too many bm_flush_cache() in mfe.c

|

> And I think that works just fine for frontends directly connected to the shared memory, one call to

> bm_flush_buffer() should be sufficient.

That's correct. What you want is once per second or so for polled events, and once per periodic event (which anyhow will typically come only every 10 seconds or so). If there are 3 calls

per event, this is certainly too much.

> But for remote fronends connected through the mserver, it turns out there is a race condition between

> sending the event data on one tcp connection and sending the bm_flush_cache() rpc request on another

> tcp connection.

>

> ...

>

> One solution to this would be to implement periodic bm_flush_buffer() in the mserver, making all calls to

> bm_flush_buffer() in mfe.c unnecessary (unless it's a direct connection to shared memory).

>

> Another solution could be to send events with a special flag telling the mserver to "flush the buffer right

> away".

That's a very good and useful observation. I never really thought about that.

Looking at your proposed solutions, I prefer the second one. mserver is just an interface for RPC calls, it should not do anything "by itself". This was a strategic decision at the beginning.

So sending a flag to punch through the cache on mserver seems to me to have fewer side effects. It will just break binary compatibility :-)

/Stefan |

15 Nov 2013, Konstantin Olchanski, Bug Report, stuck data buffers

|

We have seen several times a problem with stuck data buffers. The symptoms are very confusing -

frontends cannot start, instead hang forever in a state very hard to kill. Also "mdump -s -d -z

BUF03" for the affected data buffers is stuck.

We have identified the source of this problem - the semaphore for the buffer is locked and nobody

will ever unlock it - MIDAS relies on a feature of SYSV semaphores where they are automatically

unlocked by the OS and can never remain stuck (see man semop, SEM_UNDO flag).

I think this SEM_UNDO function is broken in recent Linux kernels and sometimes the semaphore

remains locked after the process that locked it has died. MIDAS is not programmed to deal with this

situation and the stuck semaphore has to be cleared manually.

Here, "BUF03" is used as an example, but we have seen "SYSTEM" and ODB with stuck semaphores, too.

Steps:

a) confirm that we are using SYSV semaphores: "ipcs" should show many semaphores

b) identify the stuck semaphore: "strace mdump -s -d -z BUF03".

c) there will be a large printout, but ultimately you will see repeated entries of

"semtimedop(9633800, {{0, -1, SEM_UNDO}}, 1, {1, 0}^C <unfinished ...>"

d) erase the stuck semaphore "ipcrm -s 9633800", where the number comes from semtimedop() in

the strace output.

e) try again: "mdump -s -d -z BUF03" should work now.

Ultimately, I think we should switch to POSIX semaphores - they are easier to manage (the strace

and ipcrm dance becomes "rm /dev/shm/deap_BUF03.sem") - but they do not have the SEM_UNDO

function, so detection of locked and stuck semaphores will have to be done by MIDAS. (Unless we

can find some library of semaphore functions that already provides such advanced functionality).

K.O. |

14 Nov 2013, Konstantin Olchanski, Bug Report, MacOS10.9 strlcpy() problem

|

On MacOS 10.9 MIDAS crashes in strlcpy() somewhere inside odb.c. We think this is because strlcpy()

in MacOS 10.9 was changed to abort() if input and output strings overlap. For overlapping memory one is

supposed to use memmove(). This is fixed in current midas, for older versions, you can try this patch:

konstantin-olchanskis-macbook:midas olchansk$ git diff

diff --git a/src/odb.c b/src/odb.c

index 1589dfa..762e2ed 100755

--- a/src/odb.c

+++ b/src/odb.c

@@ -6122,7 +6122,10 @@ INT db_paste(HNDLE hDB, HNDLE hKeyRoot, const char *buffer)

pc++;

while ((*pc == ' ' || *pc == ':') && *pc)

pc++;

- strlcpy(data_str, pc, sizeof(data_str));

+

+ //strlcpy(data_str, pc, sizeof(data_str)); // MacOS 10.9 does not permit strlcpy() of overlapping strings

+ assert(strlen(pc) < sizeof(data_str)); // "pc" points at a substring inside "data_str"

+ memmove(data_str, pc, strlen(pc)+1);

if (n_data > 1) {

data_str[0] = 0;

konstantin-olchanskis-macbook:midas olchansk$
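The idea behind the patch can be shown in isolation. The following is a sketch only: strip_leading_separators() is a hypothetical helper, not a function from odb.c, but it uses the same memmove() call for a source that points into the destination buffer:

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>

// Shift the text that follows any leading ' ' or ':' characters to the start
// of the same buffer. Source and destination overlap, so memmove() is used;
// strcpy(), memcpy() and strlcpy() are not specified for overlapping arguments.
void strip_leading_separators(char* buf, std::size_t size)
{
    const char* pc = buf;
    while (*pc == ' ' || *pc == ':')
        pc++;
    assert(std::strlen(pc) < size);              // result must fit, as in the patch
    std::memmove(buf, pc, std::strlen(pc) + 1);  // +1 copies the terminating NUL
}
```

The loop stops at the terminating NUL on its own, because NUL is neither a space nor a colon.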

As historical reference:

a) MacOS documentation says "behavior is undefined", which is no longer true, the behaviour is KABOOM!

https://developer.apple.com/library/mac/documentation/Darwin/Reference/ManPages/man3/strlcpy.3.html

b) the original strlcpy paper from OpenBSD does not contain the word "overlap"

http://www.courtesan.com/todd/papers/strlcpy.html

c) the OpenBSD man page says the same as Apple man page (behaviour undefined)

http://www.openbsd.org/cgi-bin/man.cgi?query=strlcpy

d) the linux kernel strlcpy() uses memcpy() and is probably unsafe for overlapping strings

http://lxr.free-electrons.com/source/lib/string.c#L149

e) midas strlcpy() looks to be safe for overlapping strings.

K.O. |

09 Nov 2013, Razvan Stefan Gornea, Forum, Installation problem

|

Hi,

I run into problems while trying to install Midas on Slackware 14.0. In the past

I have easily installed Midas on many other versions of Slackware. I have a new

computer set up with Slackware 14.0 and I just got the Midas latest version from

https://bitbucket.org/tmidas/midas

Apparently there is a problem with a shared library which should be on the

system, I think make checks for /usr/include/mysql and then supposes that

libodbc.so should be on disk. I don't know why on my system it is not.

But I was wondering if I have some other problems (configuration problem?)

because I get a very large number of warnings. My last installation of Midas is

like from two years ago but I don't remember getting many warnings. Do I do

something obviously wrong? Here is uname -a output and I attached a file with

the output from make in midas folder (GNU Make 3.82 Built for

x86_64-slackware-linux-gnu). Thanks a lot!

Linux lheppc83 3.2.29 #2 SMP Mon Sep 17 14:19:22 CDT 2012 x86_64 Intel(R)

Xeon(R) CPU E5520 @ 2.27GHz GenuineIntel GNU/Linux |

10 Nov 2013, Stefan Ritt, Forum, Installation problem

|

Seems to me a problem with the ODBC library, so maybe Konstantin can comment.

/Stefan |

11 Nov 2013, Konstantin Olchanski, Forum, Installation problem

|

> I run into problems while trying to install Midas on Slackware 14.0.

Thank you for reporting this. We do not have any Slackware computers, so we do not usually see these messages.

We use SL/RHEL 5/6 and MacOS for most development, plus we now have an Ubuntu test machine, where I see a

whole different spew of compiler messages.

Most of the messages are:

a) useless compiler whining:

src/midas.c: In function 'cm_transition2':

src/midas.c:3769:74: warning: variable 'error' set but not used [-Wunused-but-set-variable]

b) an actual error in fal.c:

src/fal.c:131:0: warning: "EQUIPMENT_COMMON_STR" redefined [enabled by default]

c) actual error in fal.c: assignment into string constant is not permitted: char*x="aaa"; x[0]='c'; // core dump

src/fal.c:383:1: warning: deprecated conversion from string constant to 'char*' [-Wwrite-strings]

these are fixed by making sure all such pointers are "const char*" and the corresponding midas functions are

also "const char*".

d) maybe an error (gcc sometimes gets this one wrong)

./mscb/mscb.c: In function 'int mscb_info(int, short unsigned int, MSCB_INFO*)':

./mscb/mscb.c:1682:8: warning: 'size' may be used uninitialized in this function [-Wuninitialized]
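For item c) above, a minimal sketch of the "const char*" fix. The function names are hypothetical and only illustrate the pattern: keep pointers to string literals const, and copy the text before modifying it:

```cpp
#include <string>

// String literals are read-only, so point at them with "const char*".
const char* default_name() { return "aaa"; }

// To modify the text, copy it into writable storage first instead of
// writing through a pointer to the literal.
std::string with_first_char(const char* text, char c)
{
    std::string copy(text);
    if (!copy.empty())
        copy[0] = c;
    return copy;
}
```

With this signature the compiler rejects an accidental write through the pointer instead of letting it crash at run time.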

> Apparently there is a problem with a shared library which should be on the

> system, I think make checks for /usr/include/mysql and then supposes that

> libodbc.so should be on disk. I don't know why on my system it is not.

g++ -g -O2 -Wall -Wno-strict-aliasing -Wuninitialized -Iinclude -Idrivers -I../mxml -I./mscb -Llinux/lib

-DHAVE_FTPLIB -D_LARGEFILE64_SOURCE -DHAVE_MYSQL -I/usr/include/mysql -DHAVE_ODBC -DHAVE_SQLITE

-DHAVE_ROOT -pthread -m64 -I/home/exodaq/root_5.35.10/include -DHAVE_ZLIB -DHAVE_MSCB -DOS_LINUX

-fPIC -Wno-unused-function -o linux/bin/mhttpd linux/lib/mhttpd.o linux/lib/mgd.o linux/lib/mscb.o

linux/lib/sequencer.o linux/lib/libmidas.a linux/lib/libmidas.a -lodbc -lsqlite3 -lutil -lpthread -lrt -lz -lm

/usr/lib64/gcc/x86_64-slackware-linux/4.7.1/../../../../x86_64-slackware-linux/bin/ld: cannot find -lodbc

The ODBC library is not found (shared .so or static .a).

The Makefile check is for /usr/include/sql.h (usually part of the ODBC package). On the command line above,

HAVE_ODBC is set, and the rest of MIDAS compiled okay, so the ODBC header files at least are present. But why

the library is not found?

I do not know why Slackware packages this stuff the way they do, and I do not have a Slackware system to check

what it should look like, so I cannot suggest anything other than commenting out "HAVE_ODBC := ..." in the

Makefile.

> But I was wondering if I have some other problems (configuration problem?)

> because I get a very large number of warnings. My last installation of Midas is

> like from two years ago but I don't remember getting many warnings.

There are no "many warnings". Mostly it's just one same warning repeated many times that complains about

perfectly valid code:

src/midas.c: In function 'cm_transition':

src/midas.c:4388:19: warning: variable 'tr_main' set but not used [-Wunused-but-set-variable]

They complain about code:

{ int i=foo(); ... } // yes, "i" is not used; yes, you have to keep it if you want to be able to see the return value

of foo() in gdb.

> Do I do something obviously wrong?

Not you. GCC people turned on one more noisy junk warning.

> Thanks a lot!

No idea about your missing ODBC library, I do not even know how to get a package listing on slackware (and

proud of it).

But if you do know how to get a package listing for your odbc package, please send it here. On RHEL/SL, I would

do:

rpm -qf /usr/include/sql.h ### find out the name of the package that owns this file

rpm -ql xxx ### list all files in this package

K.O. |

11 Nov 2013, Konstantin Olchanski, Forum, Installation problem

|

> > I run into problems while trying to install Midas on Slackware 14.0.

>

> b) an actual error in fal.c:

>

> src/fal.c:131:0: warning: "EQUIPMENT_COMMON_STR" redefined [enabled by default]

>

> c) actual error in fal.c: assignment into string constant is not permitted: char*x="aaa"; x[0]='c'; // core dump

>

> src/fal.c:383:1: warning: deprecated conversion from string constant to 'char*' [-Wwrite-strings]

>

> these are fixed by making sure all such pointers are "const char*" and the corresponding midas functions are

the warnings in fal.c are now fixed.

K.O. |

13 Nov 2013, Konstantin Olchanski, Forum, Installation problem

|

> > I run into problems while trying to install Midas on Slackware 14.0.

>

> Thank you for reporting this. We do not have any slackware computers so we cannot see these message usually.

>

>

> src/midas.c: In function 'cm_transition2':

> src/midas.c:3769:74: warning: variable 'error' set but not used [-Wunused-but-set-variable]

>

Got around to looking at the compile messages on Ubuntu: in addition to "variable 'error' set but not used" we have these:

warning: ignoring return value of 'ssize_t write(int, const void*, size_t)'

warning: ignoring return value of 'ssize_t read(int, void*, size_t)'

warning: ignoring return value of 'int setuid(__uid_t)'

and a few more of similar

K.O. |

13 Nov 2013, Stefan Ritt, Forum, Installation problem

|

> got around to look at compile messages on ubuntu: in addition to "variable 'error' set but not used" we have these:

>

> warning: ignoring return value of 'ssize_t write(int, const void*, size_t)'

> warning: ignoring return value of 'ssize_t read(int, void*, size_t)'

> warning: ignoring return value of 'int setuid(__uid_t)'

> and a few more of similar

Arghh, now it is getting even more picky. I can understand the "variable xyz set but not used" and I'm willing to remove all the variables. But checking the

return value from every function? Well, if the disk gets full, our code will silently ignore this for write(), so maybe it's not a bad idea to add a few checks. Also

for the read(), there could be some problem, where an explicit cm_msg() in case of an error would help. |
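A possible shape for such a check, as a sketch only. write_all() is a hypothetical helper, and fprintf() stands in for the cm_msg() call that MIDAS code would use:

```cpp
#include <cerrno>
#include <cstddef>
#include <cstdio>
#include <cstring>
#include <unistd.h>

// Write the whole buffer, retrying on short writes and EINTR.
// Returns false and reports the reason if write() fails (for example ENOSPC
// when the disk is full). fprintf() stands in for a cm_msg() call here.
bool write_all(int fd, const void* data, std::size_t size)
{
    const char* p = static_cast<const char*>(data);
    while (size > 0) {
        ssize_t n = write(fd, p, size);
        if (n < 0) {
            if (errno == EINTR)
                continue;  // interrupted by a signal, try again
            std::fprintf(stderr, "write() failed: %s\n", std::strerror(errno));
            return false;
        }
        p += n;
        size -= static_cast<std::size_t>(n);
    }
    return true;
}
```

Callers can then decide what to do on failure (stop the run, raise an alarm) instead of silently losing data.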

14 Nov 2013, Razvan Stefan Gornea, Forum, Installation problem

|

Hi, Thanks a lot for the response! Yes to search packages and list their content in Slackware it is pretty similar to your illustration. Slackware seems to use iODBC in which case it would link with -liodbc I guess.

root@lheppc83:~# slackpkg file-search sql.h

Looking for sql.h in package list. Please wait... DONE

The list below shows the packages that contains "sql\.h" file.

[ installed ] - libiodbc-3.52.7-x86_64-2

You can search specific packages using "slackpkg search package".

root@lheppc83:~# cat /var/log/packages/libiodbc-3.52.7-x86_64-2

PACKAGE NAME: libiodbc-3.52.7-x86_64-2

COMPRESSED PACKAGE SIZE: 255.0K

UNCOMPRESSED PACKAGE SIZE: 1.0M

PACKAGE LOCATION: /var/log/mount/slackware64/l/libiodbc-3.52.7-x86_64-2.txz

PACKAGE DESCRIPTION:

libiodbc: libiodbc (Independent Open DataBase Connectivity)

libiodbc:

libiodbc: iODBC is the acronym for Independent Open DataBase Connectivity,

libiodbc: an Open Source platform independent implementation of both the ODBC

libiodbc: and X/Open specifications. It allows for developing solutions

libiodbc: that are language, platform and database independent.

libiodbc:

libiodbc:

libiodbc:

libiodbc: Homepage: http://iodbc.org/

libiodbc:

FILE LIST:

./

usr/

usr/share/

usr/share/libiodbc/

usr/share/libiodbc/samples/

usr/share/libiodbc/samples/iodbctest.c

usr/share/libiodbc/samples/Makefile

usr/man/

usr/man/man1/

usr/man/man1/iodbc-config.1.gz

usr/man/man1/iodbctestw.1.gz

usr/man/man1/iodbctest.1.gz

usr/man/man1/iodbcadm-gtk.1.gz

usr/bin/

usr/bin/iodbctest

usr/bin/iodbcadm-gtk

usr/bin/iodbctestw

usr/bin/iodbc-config

usr/include/

usr/include/iodbcinst.h

usr/include/sqlext.h

usr/include/iodbcunix.h

usr/include/isqltypes.h

usr/include/sql.h

usr/include/iodbcext.h

usr/include/isql.h

usr/include/odbcinst.h

usr/include/isqlext.h

usr/include/sqlucode.h

usr/include/sqltypes.h

usr/lib64/

usr/lib64/libiodbc.la

usr/lib64/libdrvproxy.so.2.1.19

usr/lib64/libiodbcinst.la

usr/lib64/libiodbcadm.so.2.1.19

usr/lib64/libiodbcinst.so.2.1.19

usr/lib64/libiodbcadm.la

usr/lib64/pkgconfig/

usr/lib64/pkgconfig/libiodbc.pc

usr/lib64/libiodbc.so.2.1.19

usr/lib64/libdrvproxy.la

usr/doc/

usr/doc/libiodbc-3.52.7/

usr/doc/libiodbc-3.52.7/ChangeLog

usr/doc/libiodbc-3.52.7/README

usr/doc/libiodbc-3.52.7/COPYING

usr/doc/libiodbc-3.52.7/AUTHORS

usr/doc/libiodbc-3.52.7/INSTALL

install/

install/doinst.sh

install/slack-desc |

14 Nov 2013, Konstantin Olchanski, Forum, Installation problem

|

# slackpkg file-search sql.h

[ installed ] - libiodbc-3.52.7-x86_64-2

...

# slackpkg search package

...

# cat /var/log/packages/libiodbc-3.52.7-x86_64-2

usr/include/sql.h

...

usr/lib64/libiodbc.so.2.1.19

...

Thanks, I am saving the slackpkg commands for future reference. Looks like the immediate problem is

with the library name: libiodbc instead of libodbc. But the header file sql.h is the same.

I am not sure if it is worth making a generic solution for this: on MacOS, all ODBC functions are now

obsoleted, to be removed, and since we are standardized on MySQL anyway, I think I will rewrite the SQL

history driver to use the MySQL interface directly. Then all this ODBC extra layering will go away.

K.O. |

12 Nov 2013, Stefan Ritt, Forum, Installation problem

|

The warnings with the set but unused variables are real. While John O'Donnell proposed:

==========

somewhere along the way I found an include file to help remove this kind of message. try something like:

#include "use.h"

int foo () { return 3; }

int main () {

{ USED int i=foo(); }

return 0;

}

with -Wall, and you will see the unused messages are gone.

==========

I would rather go and remove the unused variables to clean up the code a bit. Unfortunately my gcc version does

not yet bark on that. So once I get a new version and I got plenty of spare time (....) I will consider removing all

these variables.

/Stefan |

14 Nov 2013, Konstantin Olchanski, Forum, Installation problem

|

> #include "use.h"

> { USED int i=foo(); }

Sounds nifty, but google does not find use.h.
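Since the original use.h could not be located, the following is only a guess at what such a helper might look like. It assumes the GCC/Clang "unused" variable attribute; the USED macro below is a hypothetical reconstruction, not the contents of the real file:

```cpp
// Hypothetical reconstruction of a "USED" helper; the original use.h was not
// found, so this is only a guess at the idea, using a GCC/Clang attribute.
#if defined(__GNUC__)
#define USED __attribute__((unused))
#else
#define USED
#endif

int foo() { return 3; }

int call_foo()
{
    USED int i = foo();  // kept so the return value can be inspected in gdb
    return 0;
}
```

The attribute tells the compiler that the variable is intentionally unused, which should silence the warning for that declaration while leaving it in place for the debugger.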

As for unused variables, some can be removed, others not so much, there is some code in there:

int i = blah...

#if 0

if (i==42) printf("wow, we got a 42!\n");

#endif

and

if (0) printf("debug: i=%d\n", i);

(difference is if you remove "i" or otherwise break the disabled debug code, "#if 0" will complain the next time you need that debugging code, "if (0)" will

complain right away).
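The two styles side by side, as a self-contained sketch; compute() and with_disabled_debug() are hypothetical names used only for this illustration:

```cpp
#include <cstdio>

int compute() { return 42; }

int with_disabled_debug()
{
    int i = compute();

#if 0
    // Removed by the preprocessor: never compiled, so a rename of "i"
    // goes unnoticed until this block is enabled again.
    if (i == 42) std::printf("wow, we got a 42!\n");
#endif

    // Still compiled and type-checked, but never executed: a rename of "i"
    // breaks the build immediately.
    if (0) std::printf("debug: i=%d\n", i);

    return i;
}
```

Because the "if (0)" line still reads "i", the variable also counts as used there, whereas the "#if 0" block contributes nothing the compiler can see.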

Some of this disabled debug code I would rather not remove - so much debug scaffolding I have added, removed, added again, removed again, all in the same

places that I cannot be bothered with removing it anymore. I "#if 0" it and it stays there until I need it next time. But of course now gcc complains about it.

K.O. |

|