| ID |

Date |

Author |

Topic |

Subject |

|

1096

|

19 Aug 2015 |

Konstantin Olchanski | Bug Report | Sequencer limits | >

> See LOOP doc

> LOOP cnt, 10

> ODBGET /foo/bflag, bb

> IF $bb==1 THEN

> SET cnt, 10

> ELSE

> ...

>

Looks like we have PE |

|

1097

|

20 Aug 2015 |

Stefan Ritt | Bug Report | Sequencer limits | > > - ODBGet (and ODBSet?) does seem to be able to take a variable as a path... I

> > was trying to use an array whose index would be incremented.

>

> To be checked.

It does not take a variable as a path, but as an index. So you can do

LOOP i, 5

WAIT seconds, 3

ODBSET /System/Tmp/Test[$i], $i

ENDLOOP

And you will get

/System/Tmp/Test

[1] 1

[2] 2

[3] 3

[4] 4

[5] 5

/Stefan |

|

2303

|

19 Nov 2021 |

Jacob Thorne | Forum | Sequencer error with ODB Inc | Hi,

I am having problems with the midas sequencer, here is my code:

1 COMMENT "Example to move a Standa stage"

2 RUNDESCRIPTION "Example movement sequence - each run is one position of a single stage

3

4 PARAM numRuns

5 PARAM sequenceNumber

6 PARAM RunNum

7

8 PARAM positionT2

9 PARAM deltapositionT2

10

11 ODBSet "/Runinfo/Run number", $RunNum

12 ODBSet "/Runinfo/Sequence number", $sequenceNumber

13

14 ODBSet "/Equipment/Neutron Detector/Settings/Detector/Type of Measurement", 2

15 ODBSet "/Equipment/Neutron Detector/Settings/Detector/Number of Time Bins", 10

16 ODBSet "/Equipment/Neutron Detector/Settings/Detector/Number of Sweeps", 1

17 ODBSet "/Equipment/Neutron Detector/Settings/Detector/Dwell Time", 100000

18

19 ODBSet "/Equipment/MTSC/Settings/Devices/Stage 2 Translation/Device Driver/Set Position", $positionT2

20

21 LOOP $numRuns

22 WAIT ODBvalue, "/Equipment/MTSC/Settings/Devices/Stage 2 Translation/Ready", ==, 1

23 TRANSITION START

24 WAIT ODBvalue, "/Equipment/Neutron Detector/Statistics/Events sent", >=, 1

25 WAIT ODBvalue, "/Runinfo/State", ==, 1

26 WAIT ODBvalue, "/Runinfo/Transition in progress", ==, 0

27 TRANSITION STOP

28 ODBInc "/Equipment/MTSC/Settings/Devices/Stage 2 Translation/Device Driver/Set Position", $deltapositionT2

29

30 ENDLOOP

31

32 ODBSet "/Runinfo/Sequence number", 0

The issue comes with line 28, the ODBInc does not work, regardless of what number I put I get the following error:

[Sequencer,ERROR] [odb.cxx:7046:db_set_data_index1,ERROR] "/Equipment/MTSC/Settings/Devices/Stage 2 Translation/Device Driver/Set Position" invalid element data size 32, expected 4

I don't see why this should happen, the format is correct and the number that I input is an int.

Sorry if this is a basic question.

Jacob |

|

2306

|

02 Dec 2021 |

Stefan Ritt | Forum | Sequencer error with ODB Inc | Thanks for reporting that bug. Indeed there was a problem in the sequencer code which I fixed now. Please try the updated develop branch.

Stefan |

|

2732

|

02 Apr 2024 |

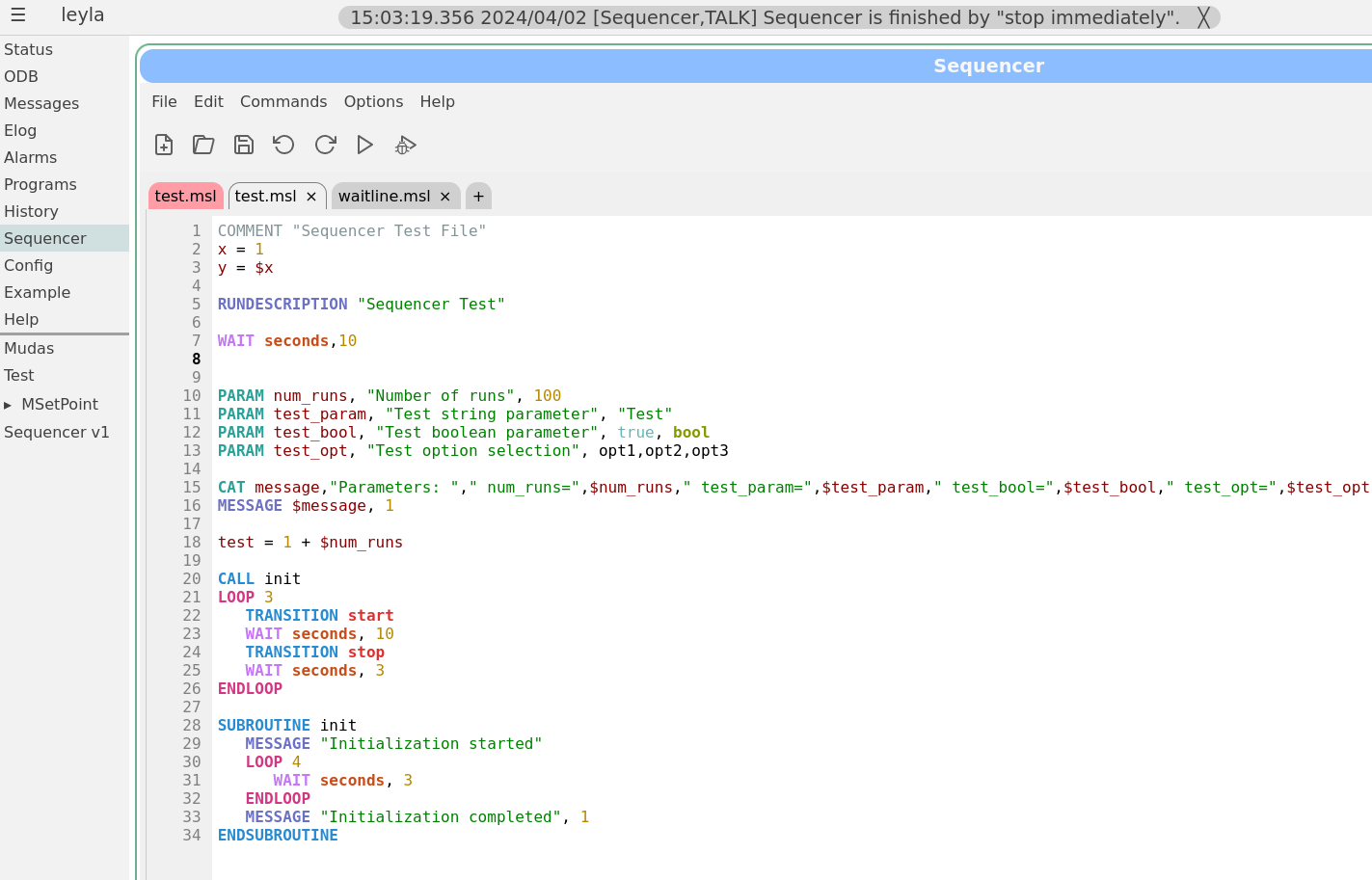

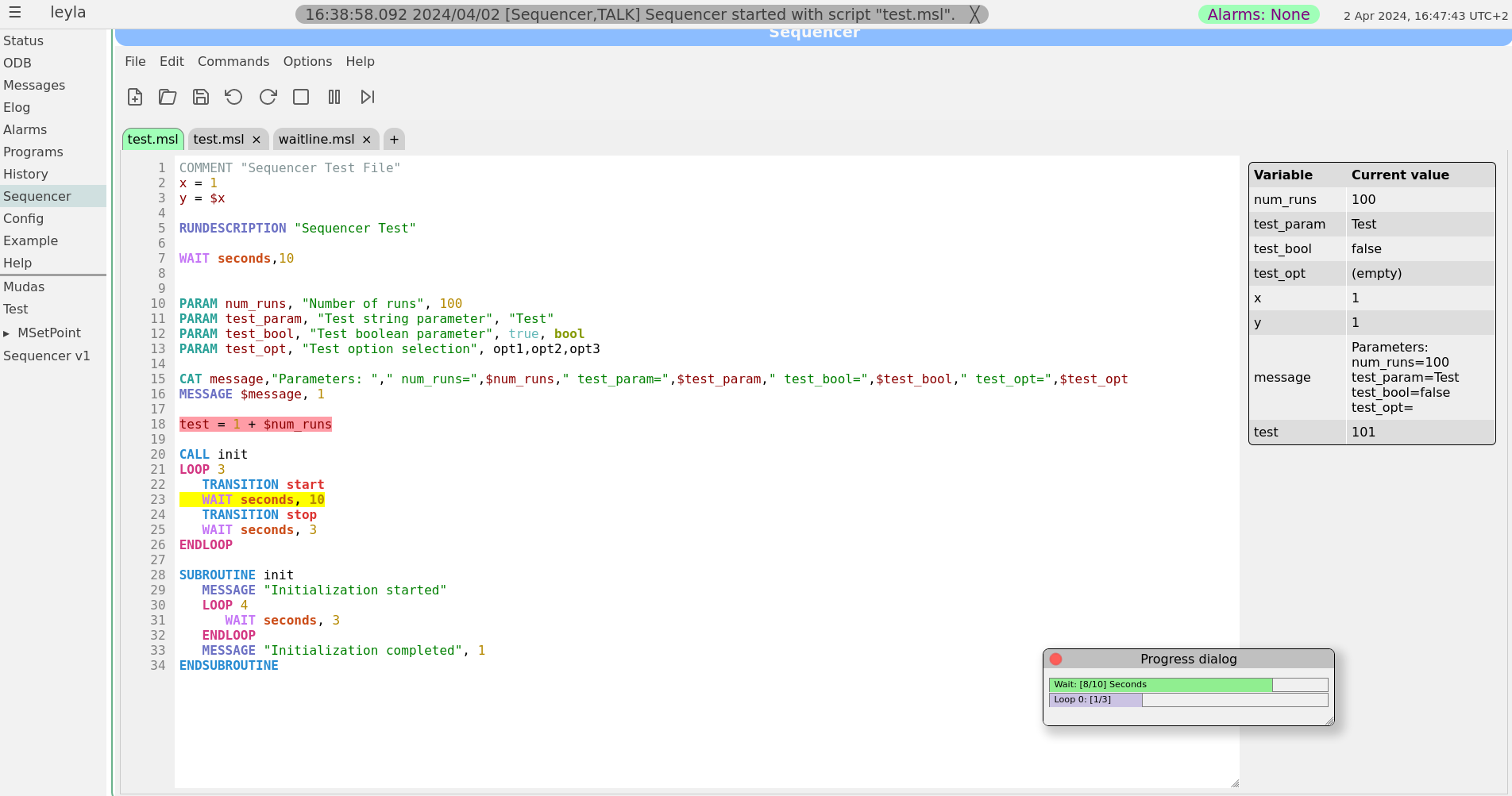

Zaher Salman | Info | Sequencer editor | Dear all,

Stefan and I have been working on improving the sequencer editor to make it look and feel more like a standard editor. This sequencer v2 has been finally merged into the develop branch earlier today.

The sequencer page has now a main tab which is used as a "console" to show the loaded sequence and it's progress when running. All other tabs are used only for editing scripts. To edit a currently loaded sequence simply double click on the editing area of the main tab or load the file in a new tab. A couple of screen shots of the new editor are attached.

For those who would like to stay with the older sequencer version a bit longer, you may simply copy resources/sequencer_v1.html to resources/sequencer.html. However, this version is not being actively maintained and may become obsolete at some point. Please help us improve the new version instead by reporting bugs and feature requests on bitbucket or here.

Best regards,

Zaher

|

|

2733

|

02 Apr 2024 |

Konstantin Olchanski | Info | Sequencer editor | > Stefan and I have been working on improving the sequencer editor ...

Looks grand! Congratulations with getting it completed. The previous version was

my rewrite of the old generated-C pages into html+javascript, nothing to write

home about, I even kept the 1990-ies-style html formatting and styling as much as

possible.

K.O. |

|

3013

|

01 Apr 2025 |

Lukas Gerritzen | Suggestion | Sequencer ODBSET feature requests | I would like to request the following sequencer features if you find the ideas as sensible as I do:

- A "Reload File" button

- Support for patterns in ODBSET, e.g.:

-

ODBSET "/Path/value[1,3,5]", 1 -

ODBSET "/Path/value[1-5,7-9]", 1 - Arbitrary combinations of the above

- Support for variable substitution:

-

SET GOODCHANNELS, "1-5,7,9"; ODBSET "/Path/value[$GOODCHANNELS]", 1 -

SET BADCHANNELS, "6,8"; ODBSET "/Path/value[!$BADCHANNELS]", 1 -

ODBSET "/Path/value[0-100, except $BADCHANNELS]", 1

To add some context: I am using the sequencer for a voltage scan of several thousand channels. However, a few dozen of them have shorts, so I cannot simply set all demands to the voltage step. Currently, this is solved with a manually-created ODB file for each individual voltage step, but as you can imagine, this is quite difficult to maintain.

I also encountered a small annoyance in the current workflow of editing sequencer files in the browser:

- Load a file

- Double-click it to edit it, acknowledge the "To edit the sequence it must be opened in an editor tab" dialog

- A new tab opens

- Edit something, click "Start", acknowledge the "Save and start?" dialog (which pops up even if no changes are made)

- Run the script

- Double-click to make more changes -> another tab opens

After a while, many tabs with the same file are open. I understand this may be considered "user error", but perhaps the sequencer could avoid opening redundant tabs for the same file, or prompt before doing so?

Thanks for considering these suggestions! |

|

3014

|

01 Apr 2025 |

Lukas Gerritzen | Suggestion | Sequencer ODBSET feature requests | While trying to simplify the existing spaghetti code, I encountered problems with type safety. Compare the following:SET v, "54"

SET file, "MPPCHV_$v.odb"

ODBLOAD $file -> successfully loads MPPCHV_54.odb

SET v, "54.2"

SET file, "MPPCHV_$v.odb"

ODBLOAD $file -> Error reading file "[...]/MPPCHV_54.200000.odb"

The "54.2" appears to be stored as a float rather than a string. Maybe "54" was stored as an integer? I don't know how to verify this in odbedit.

Actually, I would be fine with setting the value as a float, as it allows arithmetic. In that case, I would appreciate something like a SPRINTF function in MSL:SET v, 54.2

SPRINTF file, "MPPCHV_%f.odb", $v

ODBLOAD $file Or, maybe a bit more modern, something akin to Python's f-stringsODBLOAD f"MPPCHV_{v:.1f}.odb" |

|

3015

|

01 Apr 2025 |

Stefan Ritt | Suggestion | Sequencer ODBSET feature requests | A new sequencer which understands Python is in the works. There you can use all features from that language.

Stefan |

|

3016

|

01 Apr 2025 |

Stefan Ritt | Suggestion | Sequencer ODBSET feature requests | The extended ODBSET[x,y1-y2,z] could make sense to be implemented, since it then will match the alarm system which uses the same syntax.

The $GOODCHANNELS/$BADCHANNELS is however a very strange syntax which I haven't seen in any other computer language. It would take me probably several days to properly implement this, while it would take you much less time to explicitly use a few ODBSET statements to set the bad channels to zero.

For the file edit workflow, the author of the editor will have a look.

Stefan

| Lukas Gerritzen wrote: | I would like to request the following sequencer features if you find the ideas as sensible as I do:

- A "Reload File" button

- Support for patterns in ODBSET, e.g.:

-

ODBSET "/Path/value[1,3,5]", 1 -

ODBSET "/Path/value[1-5,7-9]", 1 - Arbitrary combinations of the above

- Support for variable substitution:

-

SET GOODCHANNELS, "1-5,7,9"; ODBSET "/Path/value[$GOODCHANNELS]", 1 -

SET BADCHANNELS, "6,8"; ODBSET "/Path/value[!$BADCHANNELS]", 1 -

ODBSET "/Path/value[0-100, except $BADCHANNELS]", 1

|

|

|

3019

|

01 Apr 2025 |

Konstantin Olchanski | Suggestion | Sequencer ODBSET feature requests | > ODBSET "/Path/value[1,3,5]"

> ODBSET "/Path/value[1-5,7-9]"

we support this array index syntax in several places,

specifically, in javascript odb get and set mjsonrpc RPCs.

> SET GOODCHANNELS, "1-5,7,9"; ODBSET "/Path/value[$GOODCHANNELS]"

> SET BADCHANNELS, "6,8"; ODBSET "/Path/value[!$BADCHANNELS]"

> ODBSET "/Path/value[0-100, except $BADCHANNELS]"

this is very clever syntax, but I have not seen any programming

language actually implement it (not even perl).

there must be a good reason why nobody does this. probably we should not do it either.

but as Stefan said (and my opinion), the route of extending MIDAS sequencer

language until it becomes a superset of python, perl, tcl, bash, javascript

and algol is not a sustainable approach. I once looked at using LUA for this,

but I think basing off an full featured programming language like python

is better.

K.O. |

|

3021

|

01 Apr 2025 |

Pavel Murat | Suggestion | Sequencer ODBSET feature requests | I once looked at using LUA for this,

> but I think basing off an full featured programming language like python

> is better.

if it came to a vote, my vote would go to Lua: it would allow to do everything needed,

with much less external dependencies and with much less motivation to over-use the interpreter.

The CMS experience was very teaching in this respect...

-- my 2c, regards, Pasha |

|

3024

|

02 Apr 2025 |

Konstantin Olchanski | Suggestion | Sequencer ODBSET feature requests | > I once looked at using LUA for this

>

> > but I think basing off an full featured programming language like python

> > is better.

>

> if it came to a vote, my vote would go to Lua: it would allow to do everything needed,

> with much less external dependencies and with much less motivation to over-use the interpreter.

> The CMS experience was very teaching in this respect...

Unfortunately I am only slightly aware of Lua to say how nicve or how bad it is. And we are

not sure how well it supports the single-line-stepping that permits the nice graphical

visualization of Stefan's sequencer.

It looks like python has the single-line-stepping built-in as a standard feature

and python is a more popular and more versatile machine, so to me python looks

like a better choice compared to lua (obscure), perl ("nobody uses it anymore")

or bash (ugly syntax).

K.O. |

|

3025

|

02 Apr 2025 |

Stefan Ritt | Suggestion | Sequencer ODBSET feature requests | And there is one more argument:

We have a Python expert in our development team who wrote already the Python-to-C bindings. That means when running a Python

script, we can already start/stop runs, write/read to the ODB etc. We only have to get the single stepping going which seems feasible to

me, since there are some libraries like inspect.currentframe() and traceback.extract_stack(). For single-stepping there are debug APIs

like debugpy. With Lua we really would have to start from scratch.

Stefan |

|

3026

|

07 Apr 2025 |

Zaher Salman | Suggestion | Sequencer ODBSET feature requests |

| Lukas Gerritzen wrote: |

I also encountered a small annoyance in the current workflow of editing sequencer files in the browser:

- Load a file

- Double-click it to edit it, acknowledge the "To edit the sequence it must be opened in an editor tab" dialog

- A new tab opens

- Edit something, click "Start", acknowledge the "Save and start?" dialog (which pops up even if no changes are made)

- Run the script

- Double-click to make more changes -> another tab opens

After a while, many tabs with the same file are open. I understand this may be considered "user error", but perhaps the sequencer could avoid opening redundant tabs for the same file, or prompt before doing so?

Thanks for considering these suggestions! |

The original reason the restricting edits in the first tab is that it is used to reflect the state of the sequencer, i.e. the file that is currently loaded in the ODB.

Imagine two users are working in parallel on the same file, each preparing their own sequence. One finishes editing and starts the sequencer. How would the second person know that by now the file was changed and is running?

I am open to suggestions to minimize the number of clicks and/or other options to make the first tab editable while making it safe and visible to all other users. Maybe a lock mechanism in the ODB can help here.

Zaher |

|

3027

|

07 Apr 2025 |

Stefan Ritt | Suggestion | Sequencer ODBSET feature requests | If people are simultaneously editing scripts this is indeed an issue, which probably never can be resolved by technical means. It need communication between the users.

For the main script some ODB locking might look like:

- First person clicks on "Edit", system checks that file is not locked and sequencer is not running, then goes into edit mode

- When entering edit mode, the editor puts a lock in to the ODB, like "Scrip lock = pc1234".

- When another person clicks on "Edit", the system replies "File current being edited on pc1234"

- When the first person saves the file or closes the web browser, the lock gets removed.

- Since a browser can crash without removing a lock, we need some automatic lock recovery, like if the lock is there, the next users gets a message "file currently locked. Click "override" to "steal" the lock and edit the file".

All that is not 100% perfect, but will probably cover 99% of the cases.

There is still the problem on all other scripts. In principle we would need a lock for each file which is not so simple to implement (would need arrays of files and host names).

Another issue will arise if a user opens a file twice for editing. The second attempt will fail, but I believe this is what we want.

A hostname for the lock is the easiest we can get. Would be better to also have a user name, but since the midas API does not require a log in, we won't have a user name handy. At it would be too tedious to ask "if you want to edit this file, enter your username".

Just some thoughts.

Stefan |

|

952

|

31 Jan 2014 |

Stefan Ritt | Info | Separation of MSCB subtree | Since several projects at PSI need MSCB but not MIDAS, I decided to separate the two repositories. So if you

need MIDAS with MSCB support inside mhttpd, you have to clone MIDAS, MXML and MSCB from bitbucket

(or the local clone at TRIUMF) as described in

https://midas.triumf.ca/MidasWiki/index.php/Main_Page#Download

I tried to fix all Makefiles to link to the new locations, but I'm not sure if I got all. So if something does not

compile please let me know.

-Stefan |

|

960

|

18 Feb 2014 |

Konstantin Olchanski | Info | Separation of MSCB subtree | > Since several projects at PSI need MSCB but not MIDAS, I decided to separate the two repositories. So if you

> need MIDAS with MSCB support inside mhttpd, you have to clone MIDAS, MXML and MSCB from bitbucket

> (or the local clone at TRIUMF) as described in

>

> https://midas.triumf.ca/MidasWiki/index.php/Main_Page#Download

>

> I tried to fix all Makefiles to link to the new locations, but I'm not sure if I got all. So if something does not

> compile please let me know.

>

> -Stefan

After this split, Makefiles used to build experiment frontends need to be modified for the new location of the mscb tree:

replace

$(MIDASSYS)/mscb

with

$(MIDASSYS)/../mscb

K.O. |

|

530

|

26 Nov 2008 |

Jimmy Ngai | Info | Send email alert in alarm system | Dear All,

We have a temperature/humidity sensor in MIDAS now and will add a liquid level

sensor to MIDAS soon. We want the operators to get alerted ASAP when the

laboratory environment or the liquid level reached some critical levels. Can

MIDAS send email alerts or SMS alerts to cell phones when the alarms are

triggered? If yes, how can I config it?

Many thanks!

Best Regards,

Jimmy |

|

531

|

26 Nov 2008 |

Stefan Ritt | Info | Send email alert in alarm system | > We have a temperature/humidity sensor in MIDAS now and will add a liquid level

> sensor to MIDAS soon. We want the operators to get alerted ASAP when the

> laboratory environment or the liquid level reached some critical levels. Can

> MIDAS send email alerts or SMS alerts to cell phones when the alarms are

> triggered? If yes, how can I config it?

Sure that's possible, that's why MIDAS contains an alarm system. To use it, define

an ODB alarm on your liquid level, like

/Alarms/Alarms/Liquid Level

Active y

Triggered 0 (0x0)

Type 3 (0x3)

Check interval 60 (0x3C)

Checked last 1227690148 (0x492D10A4)

Time triggered first (empty)

Time triggered last (empty)

Condition /Equipment/Environment/Variables/Input[0] < 10

Alarm Class Level Alarm

Alarm Message Liquid Level is only %s

The Condition if course might be different in your case, just select the correct

variable from your equipment. In this case, the alarm triggers an alarm of class

"Level Alarm". Now you define this alarm class:

/Alarms/Classes/Level Alarm

Write system message y

Write Elog message n

System message interval 600 (0x258)

System message last 0 (0x0)

Execute command /home/midas/level_alarm '%s'

Execute interval 1800 (0x708)

Execute last 0 (0x0)

Stop run n

Display BGColor red

Display FGColor black

The key here is to call a script "level_alarm", which can send emails. Use

something like:

#/bin/csh

echo $1 | mail -s \"Level Alarm\" your.name@domain.edu

odbedit -c 'msg 2 level_alarm \"Alarm was sent to your.name@domain.edu\"'

The second command just generates a midas system message for confirmation. Most

cell phones (depends on the provider) have an email address. If you send an email

there, it gets translated into a SMS message.

The script file above can of course be more complicated. We use a perl script

which parses an address list, so everyone can register by adding his/her email

address to that list. The script collects also some other slow control variables

(like pressure, temperature) and combines this into the SMS message.

For very sensitive systems, having an alarm via SMS is not everything, since the

alarm system could be down (computer crash or whatever). In this case we use

'negative alarms' or however you might call it. The system sends every 30 minutes

an SMS with the current levels etc. If the SMS is missing for some time, it might

be an indication that something in the midas system is wrong and one can go there

and investigate. |

|