| ID |

Date |

Author |

Topic |

Subject |

|

836

|

11 Sep 2012 |

Stefan Ritt | Info | MIDAS button to display image | > Hi,

>

> I've written a python script that reads some data from a file and generates a

> .png image. I want to have a button on my MIDAS status page that:

>

> - executes the script and waits for it to finish,

> - then displays the image

>

> How can I do that? I tried using the sequencer to just execute the script every

> 30 seconds, but I can't get it to work, and it would be better to only execute

> the script on demand anyway.

>

> I also am having trouble getting image display to work. I have the ODB keys set:

>

> [local:oven1:S]/Custom>ls

> Temperature Map& /home/deap/ovendaq/online/index.html

> Images

>

> [local:oven1:S]/Custom>ls Images/temps.png/

> Background /home/deap/ovendaq/online/temps.png

>

> And the HTML file is just this:

> <img src="temps.png">

>

> But the image won't display. It shows a "broken" picture, and when I try to view

> it directly it says: Invalid custom page: Page not found in ODB.

>

> Any help would be appreciated...

>

> Thanks

> Shaun

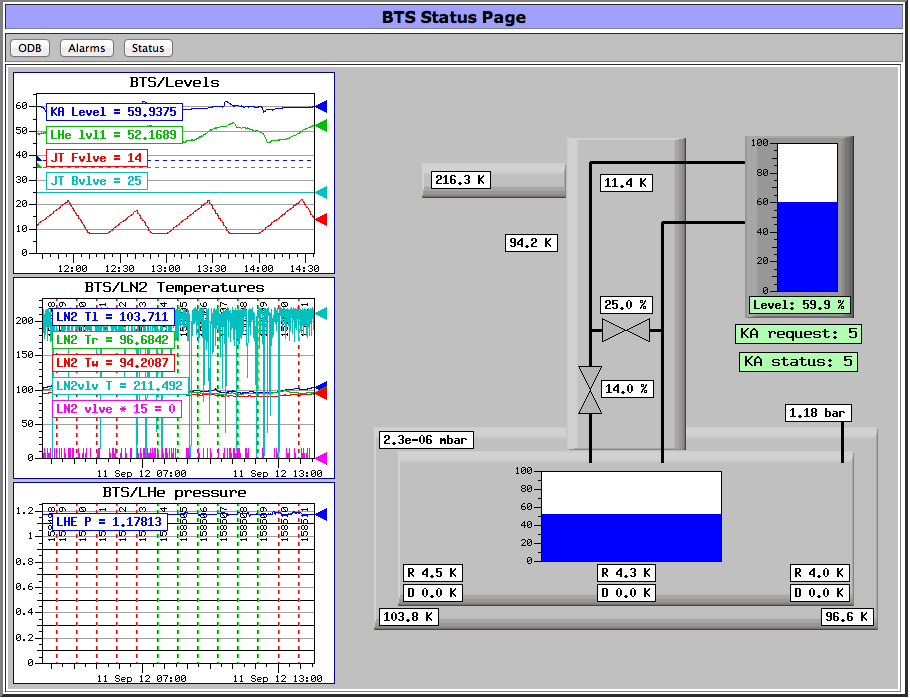

If you use the "custom" image system, you need to use GIF images. mhttpd can dynamically create GIF images, with a background image and overlaid labels, bar graphs etc. mhttpd contains a GIF library to do that in memory, but no PNG library.

Actually I would recommend that you not use a script to create an image, but use the custom image system to display temperatures. In the attachment you see a page from our experiment which contains a background image (the greyish boxes), labels (white temperature boxes), bar graphs (blue level boxes) and history pages (left side). This is all dynamically created inside mhttpd using the custom page system without any external script. All you have to do is to get the temperatures and levels into the ODB via the slow control system. If you want, I can send you the full code for that page.

Cheers,

Stefan |

| Attachment 1: Screen_Shot_2012-09-11_at_14.36.56_.png

|

|

|

854

|

24 Jan 2013 |

Konstantin Olchanski | Info | Compression benchmarks | In the DEAP experiment, the normal MIDAS mlogger gzip compression is not fast enough for some data taking modes, so I am doing tests of other compression programs. Here are the results.

Executive summary:

fastest compression is no compression (cat at 1800 Mbytes/sec - memcpy speed), next best are:

"lzf" at 300 Mbytes/sec and "lzop" at 250 Mbytes/sec with 50% compression

"gzip -1" at around 70 Mbytes/sec with around 70% compression

"bzip2" at around 12 Mbytes/sec with around 80% compression

"pbzip2", as advertised, scales bzip2 compression linearly with the number of CPUs to 46 Mbytes/sec (4

real CPUs), then slower to a maximum 60 Mbytes/sec (8 hyper-threaded CPUs).

This confirms that our original choice of "gzip -1" method for compression using zlib inside mlogger is

still a good choice. bzip2 can gain an additional 10% compression at the cost of 6 times more CPU

utilization. lzo/lzf can do 50% compression at GigE network speed and at "normal" disk speed.

I think these numbers make a good case for adding lzo/lzf compression to mlogger.

Comments about the data:

- time measured is the "elapsed" time of the compression program. it excludes the time spent flushing

the compressed output file to disk.

- the relevant number is the first rate number (input data rate)

- test machine has 32GB of RAM, so all I/O is cached, disk speed does not affect these results

- "cat" gives a measure of overall machine "speed" (but test file is too small to give precise measurement)

- "gzip -1" is the recommended MIDAS mlogger compression setting

- "pbzip2 -p8" uses 8 "hyper-threaded" CPUs, but machine only has 4 "real" CPU cores

<pre>

cat : time 0.2s, size 431379371 431379371, comp 0%, rate 1797M/s 1797M/s

cat : time 0.6s, size 1013573981 1013573981, comp 0%, rate 1809M/s 1809M/s

cat : time 1.1s, size 2027241617 2027241617, comp 0%, rate 1826M/s 1826M/s

gzip -1 : time 6.4s, size 431379371 141008293, comp 67%, rate 67M/s 22M/s

gzip : time 30.3s, size 431379371 131017324, comp 70%, rate 14M/s 4M/s

gzip -9 : time 94.2s, size 431379371 133071189, comp 69%, rate 4M/s 1M/s

gzip -1 : time 15.2s, size 1013573981 347820209, comp 66%, rate 66M/s 22M/s

gzip -1 : time 29.4s, size 2027241617 638495283, comp 69%, rate 68M/s 21M/s

bzip2 -1 : time 34.4s, size 431379371 91905771, comp 79%, rate 12M/s 2M/s

bzip2 : time 33.9s, size 431379371 86144682, comp 80%, rate 12M/s 2M/s

bzip2 -9 : time 34.2s, size 431379371 86144682, comp 80%, rate 12M/s 2M/s

pbzip2 -p1 : time 34.9s, size 431379371 86152857, comp 80%, rate 12M/s 2M/s (1 CPU)

pbzip2 -p1 -1 : time 34.6s, size 431379371 91935441, comp 79%, rate 12M/s 2M/s

pbzip2 -p1 -9 : time 34.8s, size 431379371 86152857, comp 80%, rate 12M/s 2M/s

pbzip2 -p2 : time 17.6s, size 431379371 86152857, comp 80%, rate 24M/s 4M/s (2 CPU)

pbzip2 -p3 : time 11.9s, size 431379371 86152857, comp 80%, rate 36M/s 7M/s (3 CPU)

pbzip2 -p4 : time 9.3s, size 431379371 86152857, comp 80%, rate 46M/s 9M/s (4 CPU)

pbzip2 -p4 : time 45.3s, size 2027241617 384406870, comp 81%, rate 44M/s 8M/s

pbzip2 -p8 : time 33.3s, size 2027241617 384406870, comp 81%, rate 60M/s 11M/s

lzop -1 : time 1.6s, size 431379371 213416336, comp 51%, rate 261M/s 129M/s

lzop : time 1.7s, size 431379371 213328371, comp 51%, rate 249M/s 123M/s

lzop : time 4.3s, size 1013573981 515317099, comp 49%, rate 234M/s 119M/s

lzop : time 7.3s, size 2027241617 978374154, comp 52%, rate 277M/s 133M/s

lzop -9 : time 176.6s, size 431379371 157985635, comp 63%, rate 2M/s 0M/s

lzf : time 1.4s, size 431379371 210789363, comp 51%, rate 299M/s 146M/s

lzf : time 3.6s, size 1013573981 523007102, comp 48%, rate 282M/s 145M/s

lzf : time 6.7s, size 2027241617 972953255, comp 52%, rate 303M/s 145M/s

lzma -0 : time 27s, size 431379371 112406964, comp 74%, rate 15M/s 4M/s

lzma -1 : time 35s, size 431379371 111235594, comp 74%, rate 12M/s 3M/s

lzma: > 5 min, killed

xz -0 : time 28s, size 431379371 112424452, comp 74%, rate 15M/s 4M/s

xz -1 : time 35s, size 431379371 111252916, comp 74%, rate 12M/s 3M/s

xz: > 5 min, killed

</pre>

Columns are:

compression program

time: elapsed time of the compression program (excludes the time to flush output file to disk)

size: size of input file, size of output file

comp: compression ratio (0%=no compression, 100%=file compresses into nothing)

rate: input data rate (size of input file divided by elapsed time), output data rate (size of output file

divided by elapsed time)
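The column definitions above can be written out as a short calculation. This is a minimal Python sketch, not part of the original test script; the function name `summarize` is made up for illustration, and "M" is taken to mean 1e6 bytes, as in the Perl script further down.

```python
def summarize(elapsed_s, size_in, size_out):
    """Return (compression %, input rate, output rate) as defined in the column list."""
    comp = 100.0 * (size_in - size_out) / size_in   # 0% = no compression
    rate_in = size_in / elapsed_s / 1e6             # input Mbytes/sec
    rate_out = size_out / elapsed_s / 1e6           # output Mbytes/sec
    return comp, rate_in, rate_out


# Numbers taken from the "lzf" run shown in the typical output (elapsed 1.44 s).
comp, rate_in, rate_out = summarize(1.44, 431379371, 210789363)
print(f"lzf: comp {comp:.0f}%, rate {int(rate_in)}M/s {int(rate_out)}M/s")
```

The rates are truncated with int() to mimic the "%3d" format of the Perl script; the compression ratio is rounded like "%3.0f".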

Machine used for testing (from /proc/cpuinfo):

Intel(R) Core(TM) i7-3820 CPU @ 3.60GHz

quad core cpu with hyper-threading (8 CPU total)

32 GB quad-channel DDR3-1600.

Script used for testing:

#!/usr/bin/perl -w

my $x = join(" ", @ARGV);

my $in = "test.mid";

my $out = "test.mid.out";

my $tout = "test.time";

my $cmd = "/usr/bin/time -o $tout -f \"%e\" /usr/bin/time $x < test.mid > test.mid.out";

print $cmd,"\n";

my $t0 = time();

system $cmd;

my $t1 = time();

my $c = `cat $tout`;

print "Elapsed time: $c";

my $t = $c;

#system "/bin/ls -l $in $out";

my $sin = -s $in;

my $sout = -s $out;

my $xt = $t1-$t0;

$xt = 1 if $xt<1;

print "Total time: $xt\n";

print sprintf("%-20s: time %5.1fs, size %12d %12d, comp %3.0f%%, rate %3dM/s %3dM/s", $x, $t, $sin,

$sout, 100*($sin-$sout)/$sin, ($sin/$t)/1e6, ($sout/$t)/1e6), "\n";

exit 0;

# end

Typical output:

[deap@deap00 pet]$ ./r.perl lzf

/usr/bin/time -o test.time -f "%e" /usr/bin/time lzf < test.mid > test.mid.out

1.27user 0.15system 0:01.44elapsed 99%CPU (0avgtext+0avgdata 2800maxresident)k

0inputs+411704outputs (0major+268minor)pagefaults 0swaps

Elapsed time: 1.44

Total time: 3

lzf : time 1.4s, size 431379371 210789363, comp 51%, rate 299M/s 146M/s
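For a quick look at the same trade-off without external programs, the measurement can be approximated in-process. This is only a sketch under stated assumptions: it uses the Python standard library bindings (zlib, bz2, lzma) instead of the command line tools benchmarked above, lzop and lzf are not covered because they are not in the standard library, and the synthetic test data is made up, so the numbers will not match the table.

```python
import bz2
import lzma
import time
import zlib

# Hypothetical set of compressors; the labels only loosely mirror the table above.
COMPRESSORS = {
    "zlib -1": lambda d: zlib.compress(d, 1),
    "zlib -9": lambda d: zlib.compress(d, 9),
    "bz2 -9": lambda d: bz2.compress(d, 9),
    "xz -0": lambda d: lzma.compress(d, preset=0),
}


def benchmark(data, compressors):
    """Time each compressor on data and report size, compression % and input rate."""
    results = {}
    for name, compress in compressors.items():
        t0 = time.perf_counter()
        out = compress(data)
        elapsed = max(time.perf_counter() - t0, 1e-9)  # avoid division by zero
        results[name] = {
            "time_s": elapsed,
            "size_out": len(out),
            "comp_pct": 100.0 * (len(data) - len(out)) / len(data),
            "rate_in_MBps": len(data) / elapsed / 1e6,
        }
    return results


# Synthetic, fairly compressible data (an assumption, not real MIDAS events).
sample = bytes((i * i) % 251 for i in range(200_000))
for name, r in benchmark(sample, COMPRESSORS).items():
    print(f"{name:<8}: time {r['time_s']:.3f}s, size {len(sample)} {r['size_out']}, "
          f"comp {r['comp_pct']:.0f}%, rate {r['rate_in_MBps']:.0f}M/s")
```

To get numbers comparable to the table, the test should be run on a real .mid file that fits in the page cache.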

K.O. |

|

858

|

06 Feb 2013 |

Stefan Ritt | Info | Compression benchmarks | I redid the tests from Konstantin for our MEG experiment at PSI. The event structure is different, so it

is interesting how the two different experiments compare. We have an event size of 2.4 MB and a trigger

rate of ~10 Hz, so we produce a raw data rate of 24 MB/sec. A typical run contains 2000 events, so has a

size of 5 GB. Here are the results:

cat : time 7.8s, size 4960156030 4960156030, comp 0%, rate 639M/s 639M/s

gzip -1 : time 147.2s, size 4960156030 2468073901, comp 50%, rate 33M/s 16M/s

pbzip2 -p1 : time 679.6s, size 4960156030 1738127829, comp 65%, rate 7M/s 2M/s (1 CPU)

pbzip2 -p8 : time 96.1s, size 4960156030 1738127829, comp 65%, rate 51M/s 18M/s (8 CPU)

As one can see, our compression ratio is poorer (due to the quasi-random noise in our waveforms), but the difference between gzip -1 and pbzip2 is larger (15% instead of 10% for DEAP). The single-CPU version of pbzip2 cannot sustain our DAQ rate of 24 MB/sec, but the parallel version can. Actually we have a somewhat old dual-core dual-CPU 2.5 GHz Xeon box, and make 8 hyper-threading CPUs out of the total 4 cores. Interestingly the compression rate scales by a factor of 7.3 for 8 virtual cores, so hyper-threading does its job.

So we take all our data with the pbzip2 compression. The additional 15% as compared with gzip does not sound like much, but we produce 250 TB/year of raw data. So gzip gives us 132 TB/year and pbzip2 gives us 98 TB/year, and we save quite some disks.
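The "can it sustain the DAQ rate" argument can be checked directly from the table. This is a small Python sketch using only the elapsed times and input size quoted above; the helper names are made up for illustration. Computed from the elapsed times, the pbzip2 speedup comes out near 7.1, while dividing the rounded rates (51/7) gives the 7.3 mentioned in the text.

```python
DAQ_RATE_MBPS = 24.0  # 2.4 MB events at ~10 Hz, as stated in the post

# (elapsed seconds, input size in bytes), copied from the MEG results.
RUNS = {
    "gzip -1": (147.2, 4960156030),
    "pbzip2 -p1": (679.6, 4960156030),
    "pbzip2 -p8": (96.1, 4960156030),
}


def input_rate_mbps(elapsed_s, size_in):
    """Input data rate in units of 1e6 bytes per second."""
    return size_in / elapsed_s / 1e6


def sustains(elapsed_s, size_in, daq_rate=DAQ_RATE_MBPS):
    """True if the compressor consumes data at least as fast as the DAQ produces it."""
    return input_rate_mbps(elapsed_s, size_in) >= daq_rate


for name, (elapsed, size) in RUNS.items():
    rate = input_rate_mbps(elapsed, size)
    print(f"{name:<10}: {rate:5.1f} MB/s, sustains DAQ rate: {sustains(elapsed, size)}")

speedup = RUNS["pbzip2 -p1"][0] / RUNS["pbzip2 -p8"][0]
print(f"pbzip2 speedup from 1 to 8 threads: {speedup:.2f}")
```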

Note that you can run bzip2 (as all the other methods) already now with the current logger, if you specify

an external compression program in the ODB using the pipe functionality:

<pre>

[local:MEG:S]/>cd Logger/Channels/0/Settings/

[local:MEG:S]Settings>ls

Active y

Type Disk

Filename |pbzip2>/megdata/run%06d.mid.bz2

Format MIDAS

Compression 0

ODB dump y

Log messages 0

Buffer SYSTEM

Event ID -1

Trigger mask -1

Event limit 0

Byte limit 0

Subrun Byte limit 0

Tape capacity 0

Subdir format

Current filename /megdata/run197090.mid.bz2

</pre> |

|

863

|

13 Feb 2013 |

Konstantin Olchanski | Info | Review of github and bitbucket | I have done a review of github and bitbucket as candidates for hosting GIT repositories for collaborative

DAQ-type projects. Here are my impressions.

1. GIT as a software management tool seems to be a reasonable choice for DAQ-type projects. "master"

repositories can be hosted at places like github or self-hosted (in the simplest case, only

http://host/~user web access is required to host a git repository), for each "daq project" aka "experiment"

one would "clone" the master repository, perform any local modifications as required, with full local

version control, and when desired feed the changes back to the master repository as direct commits (git

push), as patches posted to github ("pull requests") or patches emailed to the maintainers (git format-patch).

2. Modern requirements for hosting a DAQ-type project include:

a) code repository (GIT, etc) with reasonably easy user access control (i.e. commit privileges should be

assigned by the project administrators directly, regardless of who is on the payroll at which lab or who is

a registered user of CERN or who is in some LDAP database managed by some IT department

somewhere).

b) a wiki for documentation, with similar user access control requirements.

c) a mailing list, forum or bug tracking system for communication and "community building"

d) an ability to web host large static files (schematics, datasheets, firmware files, etc)

e) reasonable web-based tools for browsing the files, looking at diffs, "cvs annotate/git blame", etc.

3. Both github and bitbucket satisfy most of these requirements in similar ways:

a) GIT repositories:

aa) access using git, ssh and https with password protection. ssh keys can be uploaded to the server,

permitting automatic commits from scripts and cron jobs.

bb) anonymous checkout possible (cannot be disabled)

cc) user management is simple: participants have to self-register, confirm their email address, the project

administrator then gives them commit access to specific git repositories (and wikis).

dd) for the case of multiple project administrators, one creates "teams" of participants. In this

configuration the repositories are owned by the "team" and all designated "team administrators" have

equal administrative access to the project.

b) Wiki:

aa) both github and bitbucket provide rudimentary wikis, with wiki pages stored in secondary git

repositories (*NOT* as a branch or subdirectory of the main repo).

bb) github supports "markdown" and "mediawiki" syntax

cc) bitbucket supports "markdown" and "creole" syntax (all documentation and examples use the "creole"

syntax).

dd) there does not seem to be any way to set the "project standard" syntax - both wikis have the "new

page" editor default to the "markdown" syntax.

ee) compared to mediawiki (wikipedia, triumf daq wiki) and even plone, both github and bitbucket wikis

lack important features:

1) cannot edit individual sections of a page, only the whole page at once, bad if you have long pages.

2) cannot upload images (and other documents) directly through the web editor/interface. Both wikis

require that you clone the wiki git repository, commit image and other files locally and push the wiki git

repo into the server (hopefully without any collisions), only then you can use the images and documents

in the wiki.

3) there is no "preview" function for images - in mediawiki I can have small size automatically generated

"preview" images on the wiki page, when I click on them I get the full size image. (Even "elog" can do this!)

ff) to be extra helpful, the wiki git repository is invisible to the normal git repository graphical tools for

looking at revisions, branches, diffs, etc. While github has a special web page listing all existing wiki

pages, bitbucket does not have such a page, so you better write down the filenames on a piece of paper.

c) mailing list/forum/bug tracking:

aa) both github and bitbucket implement reasonable bug tracking systems (but in both systems I do not

see any button to export the bug database - all data is stuck inside the hosting provider. Perhaps there is

a "hidden button" somewhere).

bb) bitbucket sends quite reasonable email notifications

cc) github is silent, I do not see any email notifications at all about anything. Maybe github thinks I do not

want to see notices about my own activities, good of it to make such decisions for me.

d) hosting of large files: both git and wiki functions can host arbitrary files (compared to mediawiki only

accepting some file types, i.e. Quartus pof files are rejected).

e) web based tools: thumbs up to both! web interfaces are slick and responsive, easy to use.

Conclusions:

Both github and bitbucket provide similar full-featured git repository hosting, user management and bug

tracking.

Both provide very rudimentary wiki systems. Compared to full featured wikis (i.e. mediawiki), this is like

going back to SCCS for code management (from before RCS, before CVS, before SVN). Disappointing. A

deal breaker if my vote counts.

K.O. |

|

864

|

14 Feb 2013 |

Stefan Ritt | Info | Review of github and bitbucket | Let me add my five cents:

We have been using bitbucket for two months now at PSI, and are very happy with it.

Pros:

- We like the GIT flow model (http://nvie.com/posts/a-successful-git-branching-model/). You can at the same time do hot fixes, have a "distribution

version", and keep a development branch, where you can try new things without compromising the distribution.

- Nice and fast Web interface, especially the "blame" is lightning fast compared to SVN/CVS

- GIT is non-centralized, so your local clone of a repository contains everything. If bitbucket is down/asks for money, you can continue with your local

repository and clone it to some other hosting service, or host it yourself

- SourceTree (http://www.sourcetreeapp.com/) is a nice GUI for Mac lovers.

- Easy user management

- Free for academic use

Con:

- Wiki is limited as KO wrote, so it should not be used as a "full" wiki to replace Plone for example, just to annotate your project

- SVN revision number is gone. This is on purpose since it does not make sense any more if you keep several parallel branches (merging becomes a

nightmare), so one has to use either the (random) commit-ID or start tagging again.

So in conclusion, I would say that it's time to switch MIDAS to GIT. We'll probably do that in July when I will be at TRIUMF.

/Stefan |

|

866

|

08 Mar 2013 |

Konstantin Olchanski | Info | ODB /Experiment/MAX_EVENT_SIZE | Somebody pointed out an error in the MIDAS documentation regarding maximum event size

supported by MIDAS and the MAX_EVENT_SIZE #define in midas.h.

Since MIDAS svn rev 4801 (August 2010), one can create events with size bigger than

MAX_EVENT_SIZE in midas.h (without having to recompile MIDAS):

To do so, one must increase:

- the value of ODB /Experiment/MAX_EVENT_SIZE

- the size of the SYSTEM shared memory event buffer (and any buffers used by the event builder,

etc)

- max_event_size & co in your frontend.

Actual limits on the bank size and event size are written up here:

https://ladd00.triumf.ca/elog/Midas/757

The bottom line is that the maximum event size is limited by the size of the SYSTEM buffer which is

limited by the physical memory of your computer. No recompilation of MIDAS necessary.

K.O. |

|

867

|

01 Apr 2013 |

Randolf Pohl | Info | Review of github and bitbucket | And my 2ct:

Go for git!

I've been using git since 2007 or so, after cvs and svn. Git has some killer features which I can't miss any more:

* No central repo. Have all the history with you on the train.

* Branching and merging, with stable branches and feature branches.

Happy hacking while my students do analysis on a stable version.

Or multiple development branches for several features.

And merging really works, including fixing up merge conflicts.

* "git bisect" for finding which commit introduced a (reproducible) bug.

* "gitk --all"

I use git for everything: Software, tex, even (Ooffice) Word documents.

Go for git. :-)

Randolf |

|

868

|

02 Apr 2013 |

Konstantin Olchanski | Info | Review of github and bitbucket | Hi, thanks for your positive feedback. I have been using git for small private projects for a few years now

and I like it. It is similar to the old SCCS days - good version control without having to setup servers,

accounts, doodads, etc.

> * No central repo. Have all the history with you on the train.

> * Branching and merging, with stable branches and feature branches.

> Happy hacking while my students do analysis on a stable version.

> Or multiple development branches for several features.

This is the part that worries me the most. Without a "central" "authoritative" repository,

in just a few quick days, everybody will have their own incompatible version of midas.

I guess I am okey with your private midas diverging from mainstream, but when *I* end up

with 10 different incompatible versions just in *my* repository, can that be good?

> And merging really works, including fixing up merge conflicts.

But somebody still has to do it. With a central repository, the problem takes care of

itself - each developer has to do their own merging - with svn, you cannot commit

to the head without merging the head into your code first. But with git, I can just throw

my changes into some branch out there hoping that somebody else would do the merging.

But guess what, there ain't anybody home but us chickens. We do not have a mad finn here

to enforce discipline and keep us in shape...

As an example, look at the HADOOP/HDFS code development, they have at least 3 "mainstream"

branches going, neither has all the features combined together and each branch has bugs with

the fixes in a different branch. What a way to run a railroad.

> * "git bisect" for finding which commit introduced a (reproducible) bug.

> * "gitk --all"

>

> Go for git. :-)

Absolutely. For me, as soon as I can wrap my head around this business of "who does all the merging".

K.O. |

|

869

|

02 Apr 2013 |

Randolf Pohl | Info | Review of github and bitbucket | Hi Konstantin,

> > * No central repo. Have all the history with you on the train.

> > * Branching and merging, with stable branches and feature branches.

> > Happy hacking while my students do analysis on a stable version.

> > Or multiple development branches for several features.

>

> This is the part that worries me the most. Without a "central" "authoritative" repository,

> in just a few quick days, everybody will have their own incompatible version of midas.

No! This is probably one of the biggest misunderstandings of the git workflow.

You can of course _define_ one central repo: This is the one that you and Stefan decide to be "the source" (as

Linus does for the kernel). It's like the central svn repo: Only Stefan and you can push to it, and everybody

else will pull from it. Why should I pull MIDAS from some obscure source, when your "public" repo is available.

Look at the Linux Kernel: Linus' version is authoritative, even though everybody and his best friend has his

own kernel repo.

So, the main workflow does not change a lot: You collect patches, commit them, and "push" them to the central

repo. All users "pull" from this central repo. This is very much what svn offers.

>

> I guess I am okey with your private midas diverging from mainstream, but when *I* end up

> with 10 different incompatible versions just in *my* repository, can that be good?

See above: _You_ define what the central repo is.

But: I _bet_ you will very soon have 10 versions in your personal repo, because _you choose_ to do so. It's

just SO much easier. The non-linear history with many branches is a _feature_. I can't live without it any more:

Looking at my MIDAS analyzer:

I have a "public" repo in /pub/git/lamb.git. This is where I publish my analyzer versions. All my collaborators

pull from this.

Then I have my personal repo in ~/src/lamb.

This is where I develop. When I think something is ready for the public, I merge this branch into the public repo.

Whenever I start to work on a new feature, I create a branch in my _local_ repo (~/src/lamb). I can fiddle and

play, not affecting anybody else, because it never sees the public repo.

OK, collaborator A finds a bug. I switch to my local copy of the public version, fix the bug, and push the fix

to the public repo. Then I go back to my (local) feature branch, merge the bug fix, and continue hacking.

Only when the feature is ready, I push it to the public repo.

Things get more interesting as you work on several features simultaneously. You have e.g. 3 topic branches:

(a) is nearly ready, and you want a bunch of people to test it.

push branch "feature (a)" to the public repo and tell the people which branch to pull.

(b) is WIP, you hack on it without affecting (a).

(c) is bug fixes which may or may not affect (a) or (b).

And so on.

You will soon discover the beauty of several parallel branches.

Plus, git merges are SO simple that you never think about "how to merge"

>

> > And merging really works, including fixing up merge conflicts.

>

> But somebody still has to do it. With a central repository, the problem takes care of

> itself - each developer has to do their own merging - with svn, you cannot commit

> to the head without merging the head into your code first. But with git, I can just throw

> my changes into some branch out there hoping that somebody else would do the merging.

> But guess what, there ain't anybody home but us chickens. We do not have a mad finn here

> to enforce discipline and keep us in shape...

See above: You will have the exact same workflow in git, if you like.

> As an example, look at the HADOOP/HDFS code development, they have at least 3 "mainstream"

> branches going, neither has all the features combined together and each branch has bugs with

> the fixes in a different branch. What a way to run a railroad.

I haven't looked at this. All I can say: Branches are one of the best features.

>

> > * "git bisect" for finding which commit introduced a (reproducible) bug.

> > * "gitk --all"

> >

> > Go for git. :-)

>

> Absolutely. For me, as soon as I can wrap my head around this business of "who does all the merging".

Easy: YOU do it.

Keep going as in svn: Collect patches, and send them out.

And then, try "git checkout -b my_first_branch", hack, hack, hack,

"git merge master".

Best,

Randolf

>

> K.O. |

|

870

|

03 Apr 2013 |

Stefan Ritt | Info | Review of github and bitbucket | > * "git bisect" for finding which commit introduced a (reproducible) bug.

I did not know this command, so I read about it. This IS WONDERFUL! I had once (actually with MSCB) the case that a bug was introduced in the last 100

revisions, but I did not know in which. So I checked out -1, -2, -3 revisions, then thought a bit, then tried -99, -98, then had the bright idea to try -50, then

slowly converged. Later I realised that I should have done a binary search, like -50, if ok try -25, if bad try -37, and so on to iteratively find the offending

commit. Finding that there is a command in git which does this automatically is great news.
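The binary search described here can be sketched in a few lines of Python. This is an illustration of the idea only, not how "git bisect" is implemented: it assumes a linear list of revisions, a known-good oldest entry, a known-bad newest entry, and a hypothetical `is_bad` test function supplied by the user.

```python
def first_bad(revisions, is_bad):
    """Find the first bad revision by binary search.

    revisions is ordered oldest to newest; revisions[0] is assumed good
    and revisions[-1] is assumed bad. Returns (revision, number of tests run).
    """
    lo, hi = 0, len(revisions) - 1  # invariant: lo is good, hi is bad
    tested = 0
    while hi - lo > 1:
        mid = (lo + hi) // 2
        tested += 1
        if is_bad(revisions[mid]):
            hi = mid  # bug was introduced at mid or earlier
        else:
            lo = mid  # bug was introduced after mid
    return revisions[hi], tested


# Hypothetical example: 100 revisions after a known-good one, bug introduced in revision 63.
revs = list(range(0, 101))
culprit, tests = first_bad(revs, lambda r: r >= 63)
print(f"first bad revision: {culprit}, found after {tests} tests")
```

For 100 candidate revisions this needs at most 7 tests instead of up to 100 sequential checkouts.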

Stefan |

|

871

|

03 Apr 2013 |

Randolf Pohl | Info | Review of github and bitbucket | > > * "git bisect" for finding which commit introduced a (reproducible) bug.

>

> I did not know this command, so I read about it. This IS WONDERFUL! I had once (actually with MSCB) the case that a bug was introduced in the last 100

> revisions, but I did not know in which. So I checked out -1, -2, -3 revisions, then thought a bit, then tried -99, -98, then had the bright idea to try -50, then

> slowly converged. Later I realised that I should have done a binary search, like -50, if ok try -25, if bad try -37, and so on to iteratively find the offending

> commit. Finding that there is a command in git which does this automatically is great news.

even more so considering the nonlinear history (due to branching) in a regular git repo. |

|

872

|

05 Apr 2013 |

Konstantin Olchanski | Info | ODB JSON support | odbedit can now save ODB in JSON-formatted files. (JSON is a popular data encoding standard associated

with Javascript). The intent is to eventually use the ODB JSON encoder in mhttpd to simplify passing of

ODB data to custom web pages. In mhttpd I also intend to support the JSON-P variation of JSON (via the

jQuery "callback=?" notation).

JSON encoding implementation follows specifications at:

http://json.org/

http://www.json-p.org/

http://api.jquery.com/jQuery.getJSON/ (seek to JSONP)

The result passes validation by:

http://jsonlint.com/

Added functions:

INT EXPRT db_save_json(HNDLE hDB, HNDLE hKey, const char *file_name);

INT EXPRT db_copy_json(HNDLE hDB, HNDLE hKey, char **buffer, int *buffer_size, int *buffer_end, int

save_keys, int follow_links);

For example of using this code, see odbedit.c and odb.c::db_save_json().

Example json file:

Notes:

1) hex numbers are quoted "0x1234" - JSON does not permit "hex numbers", but Javascript will

automatically convert strings containing hex numbers into proper integers.

2) "double" is encoded with full 15 digit precision, "float" with full 7 digit precision. If floating point values

are actually integers, they are encoded as integers (10.0 -> "10" if (value == (int)value)).

3) in this example I deleted all the "name/key" entries except for "stringvalue" and "sbyte2". I use the

"/key" notation for ODB KEY data because the "/" character cannot appear inside valid ODB entry names.

Normally, depending on the setting of "save_keys" argument, KEY data is present or absent for all entries.

ladd03:midas$ odbedit

[local:testexpt:S]/>cd /test

[local:testexpt:S]/test>save test.js

[local:testexpt:S]/test>exit

ladd03:midas$ more test.js

# MIDAS ODB JSON

# FILE test.js

# PATH /test

{

"test" : {

"intarr" : [ 15, 0, 0, 3, 0, 0, 0, 0, 0, 9 ],

"dblvalue" : 2.2199999999999999e+01,

"fltvalue" : 1.1100000e+01,

"dwordvalue" : "0x0000007d",

"wordvalue" : "0x0141",

"boolvalue" : true,

"stringvalue" : [ "aaa123bbb", "", "", "", "", "", "", "", "", "" ],

"stringvalue/key" : {

"type" : 12,

"num_values" : 10,

"item_size" : 1024,

"last_written" : 1288592982

},

"byte1" : 10,

"byte2" : 241,

"char1" : "1",

"char2" : "-",

"sbyte1" : 10,

"sbyte2" : -15,

"sbyte2/key" : {

"type" : 2,

"last_written" : 1365101364

}

}

}
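A file like the one above can be read back with a generic JSON parser once the "#" header lines are removed. This is a minimal Python sketch, not part of MIDAS; the helper names are made up, and the rule "any string starting with 0x is a hex number" is an assumption that would misfire on an ODB string value that happens to begin with "0x".

```python
import json

# Excerpt of the example file shown above.
SAMPLE = """# MIDAS ODB JSON
# FILE test.js
# PATH /test
{
  "test" : {
    "dwordvalue" : "0x0000007d",
    "wordvalue" : "0x0141",
    "boolvalue" : true,
    "sbyte2" : -15,
    "sbyte2/key" : { "type" : 2, "last_written" : 1365101364 }
  }
}
"""


def load_odb_json(text):
    """Drop the '#' header lines and parse the rest as plain JSON."""
    body = "\n".join(line for line in text.splitlines()
                     if not line.lstrip().startswith("#"))
    return json.loads(body)


def decode_hex(value):
    """Convert quoted hex numbers ("0x1234") to integers, pass everything else through."""
    if isinstance(value, str) and value.lower().startswith("0x"):
        return int(value, 16)
    return value


tree = load_odb_json(SAMPLE)
for name, value in tree["test"].items():
    if not name.endswith("/key"):  # skip the KEY metadata entries
        print(name, "=", decode_hex(value))
```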

svn rev 5356

K.O. |

|

881

|

30 Apr 2013 |

Konstantin Olchanski | Info | ROOT switched to GIT | Latest news - the ROOT project switched from SVN to GIT.

Announcement:

http://root.cern.ch/drupal/content/root-has-moved-git

Fons's presentation with details on the conversion process, repository size and performance

improvements:

https://indico.cern.ch/getFile.py/access?contribId=0&resId=0&materialId=slides&confId=246803

"no switch yard" work flow:

http://root.cern.ch/drupal/content/suggested-work-flow-distributed-projects-nosy

GIT cheat sheet:

http://root.cern.ch/drupal/content/git-tips-and-tricks

K.O. |

|

882

|

06 May 2013 |

Konstantin Olchanski | Info | Recent-ish SVN changes at PSI | A little while ago, PSI made some changes to the SVN hosting. The main SVN URL seems to remain the

same, but SVN viewer moved to a new URL (it seems a bit faster compared to the old viewer):

https://savannah.psi.ch/viewvc/meg_midas/trunk/

Also the SSH host key has changed to:

savannah.psi.ch,192.33.120.96 ssh-rsa

AAAAB3NzaC1yc2EAAAABIwAAAQEAwVWEoaOmF9uggkUEV2/HhZo2ncH0zUfd0ExzzgW1m0HZQ5df1OYIb

pyBH6WD7ySU7fWkihbt2+SpyClMkWEJMvb5W82SrXtmzd9PFb3G7ouL++64geVKHdIKAVoqm8yGaIKIS0684

dyNO79ZacbOYC9l9YehuMHPHDUPPdNCFW2Gr5mkf/uReMIoYz81XmgAIHXPSgErv2Nv/BAA1PCWt6THMMX

E2O2jGTzJCXuZsJ2RoyVVR4Q0Cow1ekloXn/rdGkbUPMt/m3kNuVFhSzYGdprv+g3l7l1PWwEcz7V1BW9LNPp

eIJhxy9/DNUsF1+funzBOc/UsPFyNyJEo0p0Xw==

Fingerprint: a3:18:18:c4:14:f9:3e:79:2c:9c:fa:90:9a:d6:d2:fc

The change of host key is annoying because it makes "svn update" fail with an unhelpful message (some

mumble about ssh -q). To fix this fault, run "ssh svn@savannah.psi.ch", then fixup the ssh host key as

usual.

K.O. |

|

883

|

06 May 2013 |

Konstantin Olchanski | Info | TRIUMF MIDAS page moved to DAQWiki | The MIDAS web page at TRIUMF (http://midas.triumf.ca) moved from the daq-plone site to the DAQWiki

(MediaWiki) site. Links were updated, checked and corrected:

https://www.triumf.info/wiki/DAQwiki/index.php/MIDAS

Included is the link to our MIDAS installation instructions. These are more complete compared to the

instructions in the MIDAS documentation:

https://www.triumf.info/wiki/DAQwiki/index.php/Setup_MIDAS_experiment

K.O. |

|

884

|

07 May 2013 |

Konstantin Olchanski | Info | Updated: javascript custom page examples | I updated the MIDAS javascript examples in examples/javascript1. All existing mhttpd.js functions are

now exampled. (yes).

Here is the full list of functions, with notes:

ODBSet(path, value, pwdname);

ODBGet(path, format, defval, len, type);

ODBMGet(paths, callback, formats); --- doc incomplete - no example of callback() use

ODBGetRecord(path);

ODBExtractRecord(record, key);

new ODBKey(path); --- doc incomplete, wrong - one has to use "new ODBKey" - last_used was added.

ODBCopy(path, format); -- no doc

ODBRpc_rev0(name, rpc, args); --- doc refer to example

ODBRpc_rev1(name, rpc, max_reply_length, args); --- same

ODBGetMsg(n);

ODBGenerateMsg(m);

ODBGetAlarms(); --- no doc

ODBEdit(path); --- undoc - forces page reload

As annotated, the main documentation is partially incomplete and partially wrong (i.e. ODBKey() has to be

invoked as "new ODBKey()"). I hope this will be corrected soon. In the meantime, I recommend that

everybody uses this example as the best documentation available.

http://ladd00.triumf.ca/~daqweb/doc/midas/html/RC_mhttpd_custom_js_lib.html

svn rev 5360

K.O. |

|

886

|

10 May 2013 |

Konstantin Olchanski | Info | mhttpd JSON support | > odbedit can now save ODB in JSON-formatted files.

> Added functions:

> INT EXPRT db_save_json(HNDLE hDB, HNDLE hKey, const char *file_name);

> INT EXPRT db_copy_json(HNDLE hDB, HNDLE hKey, char **buffer, int *buffer_size, int *buffer_end, int save_keys, int follow_links);

>

Added JSON encoding format to Javascript ODBCopy() ("jcopy"). Use format="json", Javascript example updated with an example example.

Also updated db_copy_json():

- always return NUL-terminated string

- "save_keys" values: 0 - do not save any KEY data, 1 - save all KEY data, 2 - save only KEY.last_written

odb.c, mhttpd.cxx, example.html

svn rev 5362

K.O. |

|

887

|

10 May 2013 |

Konstantin Olchanski | Info | Updated: javascript custom page examples | > ODBCopy(path, format); -- no doc

Updated example of ODBCopy:

format="" returns data in traditional ODB save format

format="xml" returns data in XML encoding

format="json" returns data in JSON encoding.

K.O. |

|

888

|

17 May 2013 |

Konstantin Olchanski | Info | mhttpd JSON-P support | >

> Added JSON encoding format to Javascript ODBCopy(path,format) ("jcopy"). Use format="json", Javascript example updated with an example example.

>

More ODBCopy() expansion: format="json-p" returns data suitable for JSON-P ("script tag") messaging.

Also implemented multiple-paths for "jcopy" (similar to "jget"/ODBMGet()). An example ODBMCopy(paths,callback,format) is present in example.html (will move to mhttpd.js).

Added JSON encoding options:

- format="json-nokeys" will omit all KEY information except for "last_written"

- "json-nokeys-nolastwritten" will also omit "last_written"

- "json-nofollowlinks" will return ODB symlink KEYs instead of following them (ODBGet/ODBMGet always follows symlinks)

- "json-p" adds JSON-P encapsulation

All these JSON format options can be used at the same time, i.e. format="json-p-nofollowlinks"
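For clients that are not a browser, the JSON-P encapsulation has to be removed before the payload can be parsed. This is a sketch assuming the usual JSON-P shape of a function call wrapping the JSON text, e.g. callback({...}); the exact wrapper produced by mhttpd is not shown here, so both the helper name and the sample payload are hypothetical.

```python
import json
import re

# callbackName( <json> ) with an optional trailing semicolon.
_JSONP = re.compile(r"^\s*([A-Za-z_$][\w$.]*)\s*\((.*)\)\s*;?\s*$", re.DOTALL)


def unwrap_jsonp(text):
    """Split a JSON-P payload into (callback name, decoded JSON data)."""
    match = _JSONP.match(text)
    if match is None:
        raise ValueError("not a JSON-P payload")
    return match.group(1), json.loads(match.group(2))


# Hypothetical payload, only to show the mechanics.
name, data = unwrap_jsonp('callback({"Run number" : 123, "State" : 1});')
print(name, data)
```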

To see how it all works, please look at examples/javascript1/example.html.

The new code seems to be functional enough, but it is still work in progress and there are a few problems:

- ODBMCopy() using the "xml" format returns gibberish (the MIDAS XML encoder has to be told to omit the <?xml> header)

- example.html does not actually parse any of the XML data, so we do not know if XML encoding is okey

- JSON encoding has an extra layer of objects (variables.Variables.foo instead of variables.foo)

- ODBRpc() with JSON/JSON-P encoding not done yet.

mhttpd.cxx, example.html

svn rev 5364

K.O. |

|

889

|

31 May 2013 |

Konstantin Olchanski | Info | mhttpd JSON-P support | > To see how it all works, please look at examples/javascript1/example.html.

>

> - JSON encoding has an extra layer of objects (variables.Variables.foo instead of variables.foo)

>

This is now fixed. See updated example.html. Current encoding looks like this:

{

"System" : {

"Clients" : {

"24885" : {

"Name/key" : { "type" : 12, "item_size" : 32, "last_written" : 1370024816 },

"Name" : "ODBEdit",

"Host/key" : { "type" : 12, "item_size" : 256, "last_written" : 1370024816 },

"Host" : "ladd03.triumf.ca",

"Hardware type/key" : { "type" : 7, "last_written" : 1370024816 },

"Hardware type" : 44,

"Server Port/key" : { "type" : 7, "last_written" : 1370024816 },

"Server Port" : 52539

}

},

"Tmp" : {

...
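On the receiving side, the "name/key" entries can be separated from the values with a short recursive walk. This is a Python sketch, not MIDAS code; the function name is made up, and it relies on the property mentioned earlier in this thread that "/" cannot appear inside valid ODB entry names.

```python
def split_keys(node, path=""):
    """Separate ODB values from their "/key" metadata.

    Returns (values, meta): values mirrors the tree without "/key" entries,
    meta maps the full ODB path of each entry to its KEY data.
    """
    values, meta = {}, {}
    for name, child in node.items():
        if name.endswith("/key"):
            meta[f"{path}/{name[:-len('/key')]}"] = child
        elif isinstance(child, dict):
            sub_values, sub_meta = split_keys(child, f"{path}/{name}")
            values[name] = sub_values
            meta.update(sub_meta)
        else:
            values[name] = child
    return values, meta


# Part of the encoding shown above, already decoded from JSON.
odb = {
    "System": {
        "Clients": {
            "24885": {
                "Name/key": {"type": 12, "item_size": 32, "last_written": 1370024816},
                "Name": "ODBEdit",
                "Server Port/key": {"type": 7, "last_written": 1370024816},
                "Server Port": 52539,
            }
        }
    }
}

values, meta = split_keys(odb)
print(values["System"]["Clients"]["24885"])
print(meta["/System/Clients/24885/Name"]["last_written"])
```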

odb.c, example.html

svn rev 5368

K.O. |

|