
Midas DAQ System


05 Feb 2026, Konstantin Olchanski, Bug Report, omnibus bugs from running DarkLight

We finished running the DarkLight experiment and I am reporting accumulated bugs that we have run into.

1) history plots on 12 hrs, 24 hrs tend to hang with "page not responsive". most plots have 16-20 variables, which are recorded at 1/sec interval. (yes, we must see all the variables at the same time and yes, we want to record them with fine granularity).

2) starting runs gets into a funny mode if a GEM frontend aborts (hardware problems), transition page reports "wwrrr, timeout 0", and stays stuck forever, "cancel transition" does nothing. observe it goes from "w" (waiting) to "r" (RPC running) without a "c" (connecting...) and timeout should never be zero (120 sec in ODB).

3) ODB editor clicking on hex number versus decimal number no longer allows editing in hex, Stefan implemented this useful feature and it worked for a while, but now seems broken.

4) ODB editor "right click" to "delete" or "rename" key does not work, the right-click menu disappears immediately before I can use it (dl-server-2), click on item (it is now blue), right-click menu disappears before I can use it (daq17). it looks like a timing or race condition.

5) ODB editor "create link" link target name is limited to 32 bytes, links cannot be created (dl-server-2), ok on daq17 with current MIDAS.

6) MIDAS on dl-server-2 is "installed" in such a way that there is no connection to the git repository, no way to tell what git checkout it corresponds to. Help page just says "branch master", git-revision.h is empty. We should discourage such use of MIDAS and promote our "normal way" where for all MIDAS binary programs we know what source code and what git commit was used to build them.

6a) MIDAS on dl-server-2 had a pretty much non-functional history display, I reported it here, Stefan provided a fix, I manually retrofitted it into dl-server-2 MIDAS and we were able to run the experiment. (good)

6b) bug (5) suggests that there are more bugs being introduced and fixed without any notice to other midas users (via this forum or via the bitbucket bug tracker).

K.O.

06 Feb 2026, Stefan Ritt, Bug Report, omnibus bugs from running DarkLight

Thanks for the detailed report. Let me reply one-by-one.

> 1) history plots on 12 hrs, 24 hrs tend to hang with "page not responsive". most plots have 16-20 variables,

> which are recorded at 1/sec interval. (yes, we must see all the variables at the same time and yes, we want to

> record them with fine granularity).

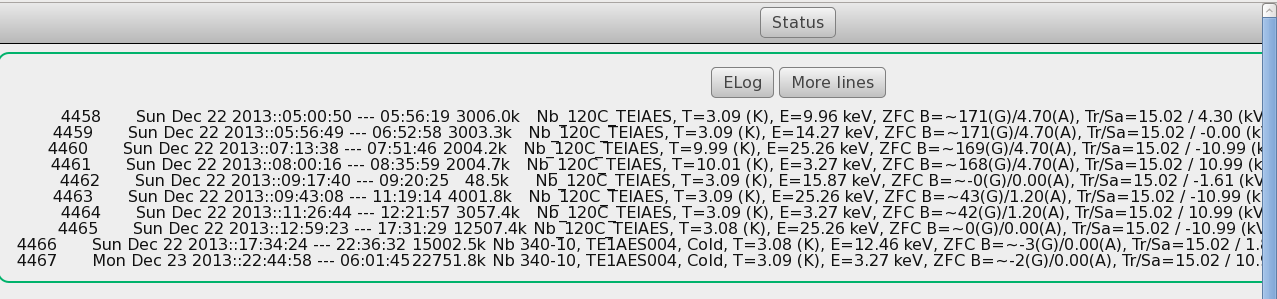

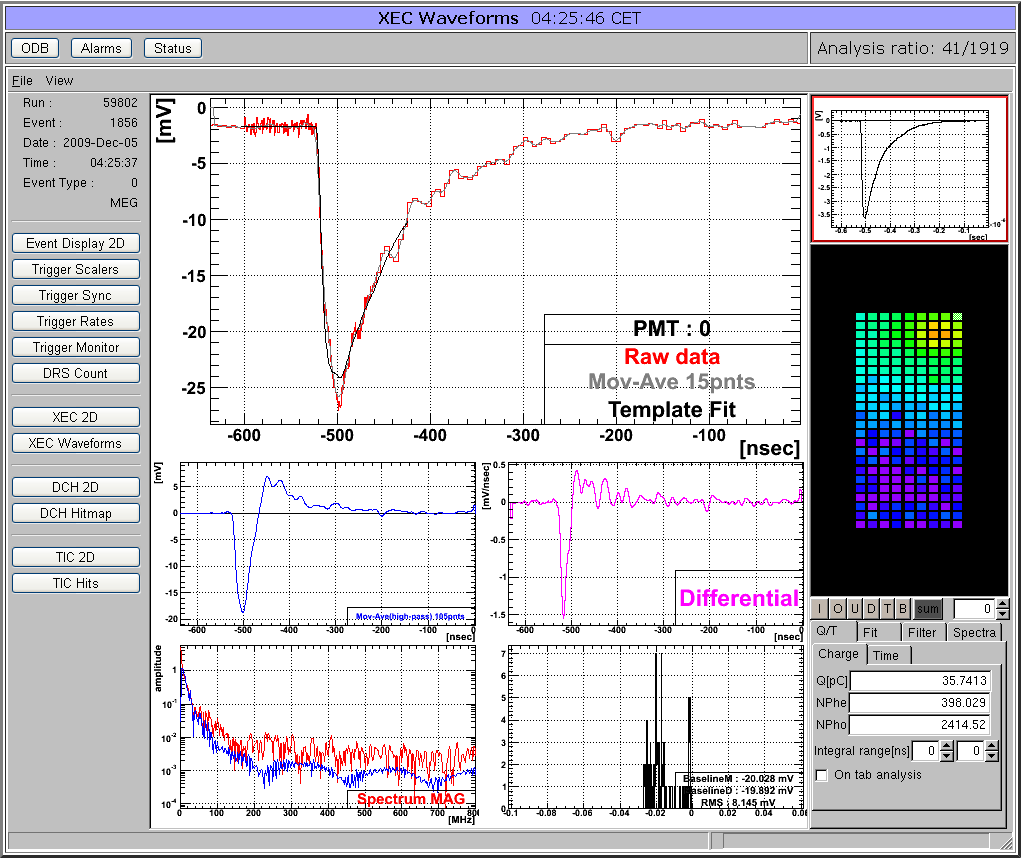

Attached is a similar plot: 8 values recorded every second, displayed for 24h. The backend is actually a Raspberry Pi! I see no issues there. Do you have the current history version which does the re-binning? Actually the plot below is still without rebinning (see the "1" at the top right), and it contains ~72000 points x 8. The browser does not have any issue with it.

Stefan

12 Feb 2026, Stefan Ritt, Bug Report, omnibus bugs from running DarkLight

Now I had a similar case where the browser froze when showing 24h of data. Turned out that 80k points are a bit much. I changed the code so that it starts binning when showing 8h or more. This is not a perfect solution. The code should check at which interval data is written, then automatically start binning when approaching 4000 points or more. That would however require more complicated code, so I leave it as it is right now. Feedback welcome.

Stefan
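The interval-based rule described in the post above (derive the bin size from the recording interval and start binning once a plot would exceed roughly 4000 points) could be sketched as follows. This is only an illustration in Python, not the mhttpd history code: the function names `bin_size` and `rebin`, their parameters, and the choice to keep min/mean/max per bin are assumptions made for the example.

```python
import math


def bin_size(window_s, interval_s, max_points=4000):
    """Number of raw samples to merge per bin so that a plot covering
    window_s seconds of data written every interval_s seconds shows at
    most max_points points. A result of 1 means "no binning needed".

    All names here are hypothetical; 4000 is the threshold from the post.
    """
    if window_s <= 0 or interval_s <= 0 or max_points <= 0:
        raise ValueError("window, interval and max_points must be positive")
    n_points = window_s / interval_s
    return max(1, math.ceil(n_points / max_points))


def rebin(times, values, size):
    """Merge consecutive samples into bins of `size` samples.

    Each bin is returned as (start_time, minimum, mean, maximum), so that
    short spikes stay visible after binning (an assumption of this sketch).
    """
    binned = []
    for start in range(0, len(values), size):
        chunk = values[start:start + size]
        binned.append((times[start], min(chunk), sum(chunk) / len(chunk), max(chunk)))
    return binned
```

With this rule, 24 h of data recorded once per second is merged 22 samples per bin, while a 1 h window at the same rate is left unbinned.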

06 Feb 2026, Stefan Ritt, Bug Report, omnibus bugs from running DarkLight

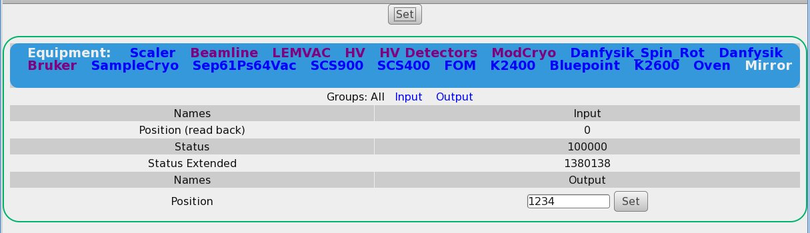

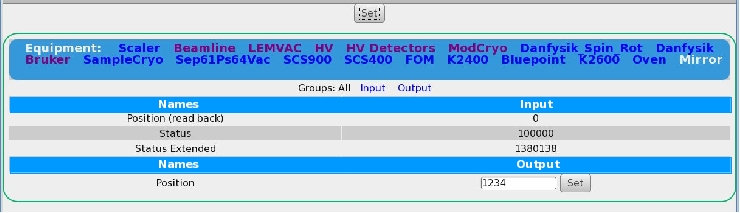

> 3) ODB editor clicking on hex number versus decimal number no longer allows editing in hex, Stefan implemented

> this useful feature and it worked for a while, but now seems broken.

I cannot confirm. See below. There was some issue some time ago, but that has been fixed for a while. Please pull on develop and try again.

Here is the change: https://bitbucket.org/tmidas/midas/commits/882974260876529c43811c63a16b4a32395d416a

Stefan

06 Feb 2026, Stefan Ritt, Bug Report, omnibus bugs from running DarkLight

> 4) ODB editor "right click" to "delete" or "rename" key does not work, the right-click menu disappears

> immediately before I can use it (dl-server-2), click on item (it is now blue), right-click menu disappears

> before I can use it (daq17). it looks like a timing or race condition.

Confirmed and fixed: https://bitbucket.org/tmidas/midas/commits/4ba30761683ac9aa558471d2d2d35ce05e72096a

/Stefan

06 Feb 2026, Stefan Ritt, Bug Report, omnibus bugs from running DarkLight

> 5) ODB editor "create link" link target name is limited to 32 bytes, links cannot be created (dl-server-2), ok

> on daq17 with current MIDAS.

Works for me with the current version.

> 6) MIDAS on dl-server-2 is "installed" in such a way that there is no connection to the git repository, no way

> to tell what git checkout it corresponds to. Help page just says "branch master", git-revision.h is empty. We

> should discourage such use of MIDAS and promote our "normal way" where for all MIDAS binary programs we know

> what source code and what git commit was used to build them.

Not sure if you have seen it. I made an "install" script to clone, compile and install midas. Some people use this already. Maybe give it a shot. Might need adjustment for different systems, I certainly haven't covered all corner cases. But on a RaspberryPi it's then just one command to install midas, modify the environment, install mhttpd as a service and load the ODB defaults. I know that some people want it "their way" and that's ok, but for the novice user that might be a good starting point. It's documented here: https://daq00.triumf.ca/MidasWiki/index.php/Install_Script

The install script is plain shell, so it should be easy to understand.

> 6a) MIDAS on dl-server-2 had a pretty much non-functional history display, I reported it here, Stefan provided

> a fix, I manually retrofitted it into dl-server-2 MIDAS and we were able to run the experiment. (good)

>

> 6b) bug (5) suggests that there is more bugs being introduced and fixed without any notice to other midas

> users (via this forum or via the bitbucket bug tracker).

If I would notify everybody about a new bug I introduced, I would know that it's a bug and I would not introduce it ;-)

For all the fixes I encourage people to check the commit log. Doing an elog entry for every bug fix would be considered spam by many people because

that can be many emails per week. The commit log is here: https://bitbucket.org/tmidas/midas/commits/branch/develop

If somebody volunteers to consolidate all commits and make a monthly digest to be posted here, I'm all in favor, but I'm not that individual.

Stefan

23 Jan 2026, Mathieu Guigue, Info, Homebrew support for midas

Dear all,

For my personal convenience, I started to add a Homebrew formula for midas (*):

https://github.com/guiguem/homebrew-tap/blob/main/Formula/midas.rb

It is convenient in particular to deploy as it automatically gets all the right dependencies; for MacOS (**), there are bottles already available.

The installation would then be

brew tap guiguem/tap
brew install midas

I thought I would share it here, if this is helpful to someone else (***).

This was tested rather extensively, including the development of manalyzer modules using this bottled version as backend.

A possible upgrade (if people are interested) would be to develop/deploy a "mainstream" midas version (and I would rename mine "midas-mod").

Cheers
Mathieu

-----

Notes:

(*) The version installed by this formula is a very slightly modified version of midas, designed to support more than 100 front-ends (needed for HK). See commits here:

https://gitlab.in2p3.fr/hk/clocks/midas/-/commit/060b77afb38e38f9a3155d2606860f12d680f4de
https://gitlab.in2p3.fr/hk/clocks/midas/-/commit/1da438ad1946de7ba697e849de6a6675ac45ebb8

I have the recollection this version might not be compatible with the main midas one.

(**) I also have some stuff for Ubuntu, but Ubuntu seems to do additional linkage to curl which needs to be handled (easy). That being said the installation from sources works fine!

(***) Some oddities were unraveled, such as the fact that the build_interface paths pointing to the source include directory still appear in the midasConfig.cmake files (leading to issues in brew). This was fixed by replacing the faulty path with the final installation location. Maybe this should be fixed?

23 Jan 2026, Stefan Ritt, Info, Homebrew support for midas

Hi Mathieu,

thanks for your contribution. Have you looked at the install.sh script I developed last week:

https://daq00.triumf.ca/MidasWiki/index.php/Install_Script

which basically does the same, plus it modifies the environment and installs mhttpd as a service.

Actually I modeled the installation after the way Homebrew is installed in the first place (using curl).

I wonder if the two things can kind of be integrated. Would be great to get with brew always the newest midas version, and it would also check and modify the environment.

If you tell me exactly what is wrong with MidasConfig.cmake.in I'm happy to fix it.

Best,

Stefan

23 Jan 2026, Mathieu Guigue, Info, Homebrew support for midas

Thanks Stefan!

Actually, these two approaches are slightly different I guess:

- the installation script you are linking manages the installation and the subsequent steps, but doesn't manage the dependencies: for instance on my machine, it didn't find root and so manalyzer is built without root support. Maybe this is just something to adapt? Brew on the other hand manages root and so knows how to link these two together.

- The nice thing I like about brew is that one can "ship bottles" aka compiled version of the code; it is great and fast for deployment and avoids compilation issues.

- I like that your setup does deploy and launch all the necessary executables! I know brew can do this too via brew services (see an example here: https://github.com/Homebrew/homebrew-core/blob/HEAD/Formula/r/rabbitmq.rb#L83 ), maybe worth investigating...?

- Brew relies on code tagging to better manage the bottles, so that it uses the tag to get a well-defined version of the code and give a name to the version. I had to implement my own tags e.g. midas-mod-2025-12-a to get a release. I am not sure how to do in the case of midas where the tags are not that frequent...

Thank you for the feedback, I will make the modifications (aka naming my formula ``midas-mod'') so that it doesn't collide with a future official midas one.

Concerning the MidasConfig.cmake issue, this is what I need (note that the INTERFACE_INCLUDE_DIRECTORIES is pointing to /opt/homebrew/Cellar/midas/midas-mod-2025-12-a/)

set_target_properties(midas::midas PROPERTIES
  INTERFACE_COMPILE_DEFINITIONS "HAVE_CURL;HAVE_MYSQL;HAVE_SQLITE;HAVE_FTPLIB"
  INTERFACE_COMPILE_OPTIONS "-I/opt/homebrew/Cellar/mariadb/12.1.2/include/mysql;-I/opt/homebrew/Cellar/mariadb/12.1.2/include/mysql/mysql"
  INTERFACE_INCLUDE_DIRECTORIES "/opt/homebrew/Cellar/midas/midas-mod-2025-12-a/;${_IMPORT_PREFIX}/include"
  INTERFACE_LINK_LIBRARIES "/opt/homebrew/opt/zlib/lib/libz.dylib;-lcurl;-L/opt/homebrew/Cellar/mariadb/12.1.2/lib/ -lmariadb;/opt/homebrew/opt/sqlite/lib/libsqlite3.dylib"
)

whereas by default INTERFACE_INCLUDE_DIRECTORIES points to the source code location (in the case of brew, something like /private/<some-hash> ).

Brew deletes the source code at the end of the installation, whereas midas seems to rely on the fact that the source code is still present...

Does it help?

A way to fix is to search for this ``/private'' path and replace it, but this isn't ideal I guess...

This is what I did in the midas formula:

--------
# Fix broken CMake export paths if they exist
cmake_files = Dir["#{lib}/**/*manalyzer*.cmake"]
cmake_files.each do |file|
  if File.read(file).match?(%r{/private/tmp/midas-[^/"]+})
    inreplace file, %r{/private/tmp/midas-[^/"]+}, prefix.to_s
  end
  inreplace file, %r{/tmp/midas-[^/"]+}, prefix.to_s if File.read(file).match?(%r{/tmp/midas-[^/"]+})
end
cmake_files = Dir["#{lib}/**/*midas*.cmake"]
cmake_files.each do |file|
  if File.read(file).match?(%r{/private/tmp/midas-[^/"]+})
    inreplace file, %r{/private/tmp/midas-[^/"]+}, prefix.to_s
  end
  inreplace file, %r{/tmp/midas-[^/"]+}, prefix.to_s if File.read(file).match?(%r{/tmp/midas-[^/"]+})
end
-----

I guess this code could be changed into some bash commands and

added to your script?

Thank you very much again!

Mathieu
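The path replacement done in the formula above can also be expressed outside of Homebrew. The following is a hedged Python sketch of the same idea, not part of the formula or of install.sh: the function names `rewrite_build_paths` and `fix_cmake_exports` are invented for this illustration, and the regular expression simply mirrors the two patterns used in the Ruby code (a temporary `/tmp/midas-...` build directory, with or without the macOS `/private` prefix).

```python
import re
from pathlib import Path

# Temporary build directory left behind in the exported CMake files, with or
# without the "/private" prefix (same patterns as in the Ruby formula code).
BUILD_DIR = re.compile(r'(?:/private)?/tmp/midas-[^/"]+')


def rewrite_build_paths(text, prefix):
    """Return text with every temporary build directory replaced by prefix."""
    # A function is used as replacement so that backslashes in prefix are
    # taken literally instead of being read as regex escapes.
    return BUILD_DIR.sub(lambda _match: str(prefix), text)


def fix_cmake_exports(lib_dir, prefix):
    """Rewrite *midas*.cmake and *manalyzer*.cmake files below lib_dir in place.

    Returns the list of files that were actually changed.
    """
    changed = []
    for path in sorted(Path(lib_dir).rglob("*.cmake")):
        if "midas" not in path.name and "manalyzer" not in path.name:
            continue
        original = path.read_text()
        fixed = rewrite_build_paths(original, prefix)
        if fixed != original:
            path.write_text(fixed)
            changed.append(path)
    return changed
```

Like the Ruby version, this only patches the generated files after installation; it does not address why the build directory ends up in the exported targets in the first place.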

> Hi Mathieu,
>
> thanks for your contribution. Have you looked at the install.sh script I developed last week:
>
> https://daq00.triumf.ca/MidasWiki/index.php/Install_Script
>
> which basically does the same, plus it modifies the environment and installs mhttpd as a service.
>
> Actually I modeled the installation after the way Homebrew is installed in the first place (using curl).
>
> I wonder if the two things can kind of be integrated. Would be great to get with brew always the newest midas version, and it would also
> check and modify the environment.
>
> If you tell me exactly what is wrong with MidasConfig.cmake.in I'm happy to fix it.
>
> Best,
> Stefan

26 Jan 2026, Stefan Ritt, Info, Homebrew support for midas

> Actually, these two approaches are slightly different I guess:

> - the installation script you are linking manages the

> installation and the subsequent steps, but doesn't manage the dependencies: for instance on my machine, it didn't find root and so manalyzer

> is built without root support.

> Maybe this is just something to adapt?

Yes indeed. From your perspective, you probably always want ROOT with MIDAS. But at PSI here we have several installations where we do not need ROOT. These are mainly beamline control PCs which just connect to EPICS or pump station controls replacing Labview installations. All graphics there are handled with the new mplot graphs, which is better in some cases.

I therefore added a check into install.sh which tells you explicitly if ROOT is found and included or not. Then it's up to the user to choose to install ROOT or not.

> Brew on the other hand manages root and so knows how to link these two

> together.

If you really need it, yes.

> - The nice thing I like about brew is that one can "ship bottles" aka compiled version of the code; it is great and fast for

> deployment and avoid compilation issues.

> - I like that your setup does deploy and launch all the necessary executables ! I know brew can do

> this too via brew services (see an example here: https://github.com/Homebrew/homebrew-core/blob/HEAD/Formula/r/rabbitmq.rb#L83 ), maybe worth

> investigating...?

Indeed this is an advantage of brew, and I wholeheartedly support it therefore. If you decide to support this for the midas

community, I would like you to document it at

https://daq00.triumf.ca/MidasWiki/index.php/Installation

Please talk to Ben <bsmith@triumf.ca> who manages the documentation and can give you write access there. The downside is that you will

then become the supporter for the brew and all user requests will be forwarded to you as long as you are willing to maintain the package ;-)

> - Brew relies on code tagging to better manage the bottles, so that it uses the tag to get a well-defined version of the

> code and give a name to the version.

> I had to implement my own tags e.g. midas-mod-2025-12-a to get a release.

> I am not sure how to do in the

> case of midas where the tags are not that frequent...

Yes we always struggle with the tagging (what is a "release", when should we release, ...). Maybe it's the simplest if we tag once per month

blindly with midas-2026-02a or so. In the past KO took care of the tagging, he should reply here with his thoughts.

> Thank you for the feedback, I will make the modifications (aka naming my formula

> ``midas-mod'') so that it doesn't collide with a future official midas one.

Nope. The idea is that YOU do the future official midas release from now on ;-)

> Concerning the MidasConfig.cmake issue, this is what I need ...

Let's take this offline not to spam others.

Best,

Stefan

20 Jan 2026, Stefan Ritt, Info, New tabbed custom pages

Tabbed custom pages have been implemented in MIDAS. Below you see an example. The documentation is here:

https://daq00.triumf.ca/MidasWiki/index.php/Custom_Page#Tabbed_Pages

Stefan

14 Jan 2026, Derek Fujimoto, Bug Report, DEBUG messages not showing and related

I have an application where I want to (optionally) send my own debugging messages to the midas.log file, but am having some problems with this:

* Messages with type MT_DEBUG don't show up in midas.log or on the messages page (calling cm_msg from python)

* messages.html is missing the DEBUG filter option

* Messages sent to other log files (not midas.log) don't get message banners on the web page. Is this intentional?

So I think either there is a bug, or I need to start MIDAS with some flag to enable debugging. Looking at the source, I don't see why these messages wouldn't get logged.

Any insight would be appreciated!

14 Jan 2026, Stefan Ritt, Bug Report, DEBUG messages not showing and related

MT_DEBUG messages are there for debugging, not logging. They only go into the SYSMSG buffer and NOT to the log file. If you want anything logged, just use MT_INFO.

Not sure if that's missing in the documentation. Anyhow, here are my original ideas (from 1995 ;-) )

MT_ERROR
Error message, to be displayed in red

MT_INFO
Info or status message

MT_DEBUG
Only sent to SYSMSG buffer, not to midas.log file. Handy if you produce lots of messages and don't want to flood the message file. Plus it does not change the timing of your app, since the SYSMSG buffer is much faster than writing to a file.

MT_USER
Message generated interactively by a user, like in the chat window or via the odbedit "msg" command

MT_LOG
Messages which are only logged but not put into the SYSMSG buffer

MT_TALK
Messages which should go through the speech synthesis in the browser and are "spoken"

MT_CALL
Message which would be forwarded to the user via a messaging app (historically this was an actual analog telephone call via a modem ;-) )

If that is missing in the documentation, please feel free to copy/paste it to the appropriate place.

Stefan
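The routing described in the post above can be summarized as a small lookup. The sketch below is purely illustrative and does not use the MIDAS Python bindings: the `MsgType` enum, the destination labels and the `destinations` function are hypothetical names. Only two rules come directly from the post (MT_DEBUG goes to the SYSMSG buffer only, MT_LOG goes to the log file only); treating every other type as going to both places is an assumption of this sketch.

```python
from enum import Enum


class MsgType(Enum):
    """Message types listed in the post (hypothetical stand-ins, not the
    constants from the MIDAS headers or Python bindings)."""
    ERROR = "MT_ERROR"
    INFO = "MT_INFO"
    DEBUG = "MT_DEBUG"
    USER = "MT_USER"
    LOG = "MT_LOG"
    TALK = "MT_TALK"
    CALL = "MT_CALL"


SYSMSG = "SYSMSG buffer"
LOGFILE = "midas.log"


def destinations(msg_type):
    """Return the set of places a message of the given type ends up in."""
    if msg_type is MsgType.DEBUG:
        # From the post: only sent to the SYSMSG buffer, not to midas.log.
        return {SYSMSG}
    if msg_type is MsgType.LOG:
        # From the post: only logged, not put into the SYSMSG buffer.
        return {LOGFILE}
    # Assumption: all remaining types go to both the buffer and the log file.
    return {SYSMSG, LOGFILE}
```

Read this way, a message that must appear in midas.log should be sent as MT_INFO rather than MT_DEBUG, as suggested in the reply.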

14 Jan 2026, Derek Fujimoto, Bug Report, DEBUG messages not showing and related

Ok thanks for the quick and clear response!

Derek

> MT_DEBUG messages are there for debugging, not logging. They only go into the SYSMSG buffer and NOT to the log file. If you want anything logged, just use MT_INFO.

>

> Not sure if that's missing in the documentation. Anyhow, there are my original ideas (from 1995 ;-) )

>

> MT_ERROR

> Error message, to be displayed in red

>

> MT_INFO

> Info or status message

>

> MT_DEBUG

> Only sent to SYSMSG buffer, not to midas.log file. Handy if you produce lots of message and don't want to flood the message file. Plus it does not change the timing of your app, since the SYSMSG buffer is much faster than writing

> to a file.

>

> MT_USER

> Message generated interactively by a user, like in the chat window or via the odbedit "msg" command

>

> MT_LOG

> Messages with are only logged but not put into the SYSMSG buffer

>

> MT_TALK

> Messages which should go through the speech synthesis in the browser and are "spoken"

>

> MT_CALL

> Message which would be forwarded to the user via a messaging app (historically this was an actual analog telephone call via a modem ;-) )

>

> If that is missing in the documentation, please feel free to copy/paste it to the appropriate place.

>

>

> Stefan

09 Jan 2026, Stefan Ritt, Forum, MIDAS installation

Since we now have many RaspberryPi based control systems running at our lab with midas, I want to streamline the midas installation, such that a non-expert can install it on these devices.

First, midas has to be cloned under "midas" in the user's home directory with

git clone https://bitbucket.org/tmidas/midas.git --recurse-submodules

For simplicity, this puts midas right into /home/<user>/midas, and not into any "packages" subdirectory which I believe is not necessary.

Then I wrote a setup script midas/midas_setup.sh which does the following:

- Add midas environment variables to .bashrc / .zshenv depending on the shell being used
- Compile and install midas to midas/bin
- Load an initial ODB which allows insecure http access to port 8081
- Install mhttpd as a system service and start it via systemctl

Since I'm not a linux system expert, the current file might be a bit clumsy. I know that automatic shell detection can be made much more elaborate, but I wanted a script which can easily be understood even by non-experts and adapted slightly if needed.

If you know about shell scripts and linux administration, please have a quick look at the attached script

and give me any feedback.

Stefan

10 Jan 2026, Marius Koeppel, Forum, MIDAS installation

Dear Stefan,

That’s a great idea. For a private home automation project using a Raspberry Pi Zero, I used the following setup:

https://github.com/makoeppel/midasHome/

This server has been running for about a year now and reports the temperature in my home. Looking at your script, I think we are conceptually doing the same thing.

I see three parts I would do slightly differently:

1. I would create an .env file to hold the variables:

export PATH="$HOME/midas/bin:$PATH"
export MIDASSYS="$HOME/midas"
export MIDAS_DIR="$HOME/online"
export MIDAS_EXPT_NAME="Online"

2. For odbedit -c "load midas_setup.odb" > /dev/null I would consider making this a bit more explicit (using odbedit) so users can change the configuration if needed, possibly by introducing a .conf file.

3. In my project, I used the MIDAS Python bindings, which are currently missing in your script:

export PYTHONPATH=$PYTHONPATH:$MIDASSYS/python

I also have one additional comment regarding Docker. I think it would make sense to support a Docker image for MIDAS. This would give non-expert users an even simpler setup. I created a related project some time ago:

https://github.com/makoeppel/midasDocker

I'd be happy to help with this part as well.

Best regards,

Marius

13 Jan 2026, Stefan Ritt, Forum, MIDAS installation

Thanks for your feedback. I reworked the installation script, and now also called it "install.sh" since it also includes the git clone. I modeled it a bit after homebrew (https://brew.sh). This means you can now run the script on a pristine linux system with:

/bin/bash -c "$(curl -sS https://bitbucket.org/tmidas/midas/raw/HEAD/install.sh)"

It contains three defaults for MIDASSYS, MIDAS_DIR and MIDAS_EXPT_NAME, but when you run it, you can overwrite these defaults interactively. The script creates all directories, clones midas, compiles and installs it, installs and runs mhttpd as a system service, then starts the logger and the example frontend. I also added your PYTHONPATH variable. The RC file is now automatically detected.

Yes one could add more config files, but I want to have this basic install as simple as possible. If more things are needed, they should be added as separate scripts or .ODB files.

Please have a look and let me know what you think about it. I tested it on a RaspberryPi, but not yet on other systems.

Stefan

13 Jan 2026, Stefan Ritt, Forum, MIDAS installation

I put the documentation under

https://daq00.triumf.ca/MidasWiki/index.php/Install_Script

Would be good if anybody could check that.

Stefan |

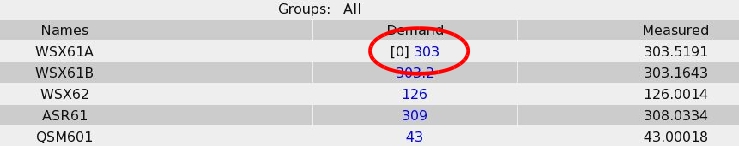

04 Jan 2026, Stefan Ritt, Info, Ad-hoc history plots of slow control equipment 04 Jan 2026, Stefan Ritt, Info, Ad-hoc history plots of slow control equipment

|

After popular demand and during some quiet holidays I implemented ad-hoc history plots. To enable this for a certain equipment, put

/Equipment/<name>/Settings/History buttons = true

into the ODB. You will then see a graph button after each variable. Pressing this button reveals the history for this variable, see attachment.

Stefan

17 Dec 2025, Derek Fujimoto, Info, mplot updates

Hello everyone,

Stefan and I have made a few updates to mplot to clean up some of the code and make it more usable directly from Javascript. With one exception this does not change the html interface. The below describes changes up to commit cd9f85c.

Breaking Changes:

- The idea is to have a "graph" be the overarching figure object, whereas the "plot" is the line or points associated with a single dataset.
- Some internal variable names have been changed to reflect this while minimizing breaking changes
  - defaultParam renamed defaultGraphParam.
  - There is no longer an initialized defaultParam.plot[0], these defaults are now defaultPlotParam which is a separate global variable
  - MPlotGraph constructor signature MPlotGraph(divElement, param) changed to MPlotGraph(divElement, graphParam)
- HTML key data-bgcolor changed to data-zero-color as the former was misleading

New Features:

- New addPlot() function.
  - While the functionality of setData is preserved you can now use addPlot(plotParam) to add a new plot to the graph with minimal copying of the old defaultParam.plot[0]
  - Minimal example, from plot_example.html: given some div container with id "P6":

    let d = document.getElementById("P6"); // get div
    d.mpg = new MPlotGraph(d, { title: { text: "Generated" }}); // make graph
    d.mpg.addPlot( { xData: [0, 1, 2, 3, 4], yData: [10, 12, 12, 14, 11] } ); // add plot to the graph

  - modifyPlot() and deletePlot() still to come
- New line styles: none, solid, dashed, dotted
- Barplot-style category plots

08 Dec 2025, Konstantin Olchanski, Bug Report, odbxx memory leak with JSON ODB dump

I was testing odbxx with manalyzer, decided to print an odb value in every event, and it worked fine in online mode, but bombed out when running from a data file (JSON ODB dump). The following code has a memory leak. No idea if XML ODB dump has the same problem.

int memory_leak()
{
   midas::odb::set_odb_source(midas::odb::STRING, std::string(run.fRunInfo->fBorOdbDump.data(), run.fRunInfo->fBorOdbDump.size()));

   while (1) {
      int time = midas::odb("/Runinfo/Start time binary");
      printf("time %d\n", time);
   }
}

K.O.

09 Dec 2025, Stefan Ritt, Bug Report, odbxx memory leak with JSON ODB dump

Thanks for reporting this. It was caused by a

MJsonNode* node = MJsonNode::Parse(str.c_str());

not followed by a

delete node;

I added that now in odb::odb_from_json_string(). Can you try again?

Stefan

09 Dec 2025, Konstantin Olchanski, Bug Report, odbxx memory leak with JSON ODB dump

> Thanks for reporting this. It was caused by a

>

> MJsonNode* node = MJsonNode::Parse(str.c_str());

>

> not followed by a

>

> delete node;

>

> I added that now in odb::odb_from_json_string(). Can you try again?

>

> Stefan

Close, but no cigar, node you delete is not the node you got from Parse(), see "node = subnode;".

If I delete the node returned by Parse(), I confirm the memory leak is gone.

BTW, it looks like we are parsing the whole JSON ODB dump (200k+) on every odbxx access. Can you parse it just once?

K.O.

10 Dec 2025, Stefan Ritt, Bug Report, odbxx memory leak with JSON ODB dump

> BTW, it looks like we are parsing the whole JSON ODB dump (200k+) on every odbxx access. Can you parse it just once?

You are right. I changed the code so that the dump is only parsed once. Please give it a try.

Stefan
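The "parse once" idea from this exchange can be illustrated independently of odbxx. The Python sketch below is not the odbxx implementation: the class name `OdbDumpCache` and its `parse_count` attribute are invented for the example, and the dump is assumed to be plain nested JSON objects, which is a simplification of a real MIDAS JSON ODB dump.

```python
import json


class OdbDumpCache:
    """Keep the parsed form of a JSON dump so repeated lookups do not
    re-parse the whole text (hypothetical helper for illustration only)."""

    def __init__(self, dump_text):
        self._text = dump_text
        self._root = None          # parsed tree, filled in on first access
        self.parse_count = 0       # how often the text was actually parsed

    def _tree(self):
        # Parse lazily, and only the first time a value is requested.
        if self._root is None:
            self._root = json.loads(self._text)
            self.parse_count += 1
        return self._root

    def get(self, path):
        """Look up a value by an ODB-style path such as "/Runinfo/Start time binary"."""
        node = self._tree()
        for key in path.strip("/").split("/"):
            node = node[key]
        return node
```

However many values are read in an event loop, the dump text is parsed a single time; the per-event cost is then only the walk down the already parsed tree.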

11 Dec 2025, Konstantin Olchanski, Bug Report, odbxx memory leak with JSON ODB dump

> > BTW, it looks like we are parsing the whole JSON ODB dump (200k+) on every odbxx access. Can you parse it just once?

> You are right. I changed the code so that the dump is only parsed once. Please give it a try.

Confirmed fixed, thanks! There are 2 small changes I made in odbxx.h, please pull.

K.O.

11 Dec 2025, Stefan Ritt, Bug Report, odbxx memory leak with JSON ODB dump

> Confirmed fixed, thanks! There are 2 small changes I made in odbxx.h, please pull.

There was one missing enable_jsroot in manalyzer, please pull yourself.

Stefan

12 Dec 2025, Konstantin Olchanski, Bug Report, odbxx memory leak with JSON ODB dump

> > Confirmed fixed, thanks! There are 2 small changes I made in odbxx.h, please pull.

> There was one missing enable_jsroot in manalyzer, please pull yourself.

pulled, pushed to rootana, thanks for fixing it!

K.O.

09 Dec 2025, Mark Grimes, Bug Report, manalyzer fails to compile on some systems because of missing #include <cmath>

Hi,

We're getting errors in our build system like:

/code/midas/manalyzer/manalyzer.cxx: In member function ‘void Profiler::Begin(TARunInfo*, std::vector<TARunObject*>)’:
/code/midas/manalyzer/manalyzer.cxx:799:27: error: ‘pow’ was not declared in this scope
799 | bins[i] = TimeRange*pow(1.1,i)/pow(1.1,Nbins);

The solution is to add "#include <cmath>" at the top of manalyzer.cxx; I guess on a lot of systems the

include is implicit from some other include so doesn't cause errors. I don't have the permissions to push

branches, could this be added please?

Thanks,

Mark.

09 Dec 2025, Konstantin Olchanski, Bug Report, manalyzer fails to compile on some systems because of missing #include <cmath>

> /code/midas/manalyzer/manalyzer.cxx:799:27: error: ‘pow’ was not declared in this scope

> 799 | bins[i] = TimeRange*pow(1.1,i)/pow(1.1,Nbins);

math.h added, pushed. nice catch.

implicit include of math.h came through TFile.h (ROOT v6.34.02), perhaps you have a newer ROOT

and they jiggled the include files somehow.

TFile.h -> TDirectoryFile.h -> TDirectory.h -> TNamed.h -> TString.h -> TMathBase.h -> cmath -> math.h
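
For illustration, a minimal sketch of the same kind of bin calculation with the math header included explicitly, so it does not depend on whatever ROOT happens to pull in. The helper name make_log_bins and its arguments are hypothetical; this is not the manalyzer code.

```cpp
#include <cmath>    // declares std::pow explicitly, no reliance on transitive includes
#include <vector>

// Hypothetical helper (not from manalyzer): returns nbins+1 bin edges that
// grow geometrically by a factor 1.1 and end exactly at time_range.
std::vector<double> make_log_bins(double time_range, int nbins)
{
   std::vector<double> bins(nbins + 1);
   for (int i = 0; i <= nbins; i++)
      bins[i] = time_range * std::pow(1.1, i) / std::pow(1.1, nbins);
   return bins;
}
```

With the explicit include, the code compiles the same way whether or not TString.h still includes TMathBase.h.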

K.O. |

11 Dec 2025, Mark Grimes, Bug Report, manalyzer fails to compile on some systems because of missing #include <cmath>

|

Thanks. Are you happy for me to update the submodule commit in Midas to use this fix? I should have sufficient permission if you agree.

> > /code/midas/manalyzer/manalyzer.cxx:799:27: error: ‘pow’ was not declared in this scope

> > 799 | bins[i] = TimeRange*pow(1.1,i)/pow(1.1,Nbins);

>

> math.h added, pushed. nice catch.

>

> implicit include of math.h came through TFile.h (ROOT v6.34.02), perhaps you have a newer ROOT

> and they jiggled the include files somehow.

>

> TFile.h -> TDirectoryFile.h -> TDirectory.h -> TNamed.h -> TString.h -> TMathBase.h -> cmath -> math.h

>

> K.O. |

11 Dec 2025, Konstantin Olchanski, Bug Report, manalyzer fails to compile on some systems because of missing #include <cmath>

|

> TFile.h -> TDirectoryFile.h -> TDirectory.h -> TNamed.h -> TString.h -> TMathBase.h -> cmath -> math.h

reading ROOT release notes, 6.38 removed TMathBase.h from TString.h, with a warning "This change may cause errors during compilation of

ROOT-based code". Upright citizens, nice guys!

> Thanks. Are you happy for me to update the submodule commit in Midas to use this fix? I should have sufficient permission if you agree.

I am doing some last minute tests, will pull it into midas and rootana later today.

K.O. |

19 May 2025, Jonas A. Krieger, Suggestion, manalyzer root output file with custom filename including run number

|

Hi all,

Would it be possible to extend manalyzer to support custom .root file names that include the run number?

As far as I understand, the current behavior is as follows:

The default filename is ./root_output_files/output%05d.root , which can be customized by the following two command line arguments.

-Doutputdirectory: Specify output root file directory

-Ooutputfile.root: Specify output root file filename

If an output file name is specified with -O, -D is ignored, so the full path should be provided to -O.

I am aiming to write files where the filename contains sufficient information to be unique (e.g., experiment, year, and run number). However, if I specify it with -O, this would require restarting manalyzer after every run; a scenario that I would like to avoid if possible.

Please find a suggestion of how manalyzer could be extended to introduce this functionality through an additional command line argument at

https://bitbucket.org/krieger_j/manalyzer/commits/24f25bc8fe3f066ac1dc576349eabf04d174deec

Above code would allow the following call syntax: ' ./manalyzer.exe -O/data/experiment1_%06d.root --OutputNumbered '

But note that as is, it would fail if a user specifies an incompatible format such as -Ooutput%s.root .

So a safer, but less flexible option might be to instead have the user provide only a prefix, and then attach %05d.root in the code.
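
A minimal sketch of this safer prefix-only variant, assuming a hypothetical helper make_output_name() that is not part of manalyzer. The run number format is fixed in the code, so a user-supplied string is never interpreted as a printf format.

```cpp
#include <cstdio>
#include <string>

// Hypothetical helper (not part of manalyzer): the user supplies only a prefix,
// the code appends the zero-padded run number and the ".root" extension.
std::string make_output_name(const std::string& prefix, int runno)
{
   char num[32];
   std::snprintf(num, sizeof(num), "%05d", runno); // fixed format, not user controlled
   return prefix + num + ".root";
}
```

A prefix such as "/data/experiment1_" with run 42 would give "/data/experiment1_00042.root", and a prefix containing "%s" is simply copied through unchanged.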

Thank you for considering these suggestions! |

12 Nov 2025, Jonas A. Krieger, Suggestion, manalyzer root output file with custom filename including run number

|

Hi all,

Could you please get back to me about whether something like my earlier suggestion might be considered, or if I should set up some workaround to rename files at EOR for our experiments?

https://daq00.triumf.ca/elog-midas/Midas/3042 :

-----------------------------------------------

> Hi all,

>

> Would it be possible to extend manalyzer to support custom .root file names that include the run number?

>

> As far as I understand, the current behavior is as follows:

> The default filename is ./root_output_files/output%05d.root , which can be customized by the following two command line arguments.

>

> -Doutputdirectory: Specify output root file directory

> -Ooutputfile.root: Specify output root file filename

>

> If an output file name is specified with -O, -D is ignored, so the full path should be provided to -O.

>

> I am aiming to write files where the filename contains sufficient information to be unique (e.g., experiment, year, and run number). However, if I specify it with -O, this would require restarting manalyzer after every run; a scenario that I would like to avoid if possible.

>

> Please find a suggestion of how manalyzer could be extended to introduce this functionality through an additional command line argument at

> https://bitbucket.org/krieger_j/manalyzer/commits/24f25bc8fe3f066ac1dc576349eabf04d174deec

>

> Above code would allow the following call syntax: ' ./manalyzer.exe -O/data/experiment1_%06d.root --OutputNumbered '

> But note that as is, it would fail if a user specifies an incompatible format such as -Ooutput%s.root .

>

> So a safer, but less flexible option might be to instead have the user provide only a prefix, and then attach %05d.root in the code.

>

> Thank you for considering these suggestions! |

25 Nov 2025, Konstantin Olchanski, Suggestion, manalyzer root output file with custom filename including run number

|

Hi, Jonas, thank you for reminding me about this. I hope to work on manalyzer in the next few weeks and I will review the ROOT output file name scheme.

K.O.

> Hi all,

>

> Could you please get back to me about whether something like my earlier suggestion might be considered, or if I should set up some workaround to rename files at EOR for our experiments?

>

> https://daq00.triumf.ca/elog-midas/Midas/3042 :

> -----------------------------------------------

> > Hi all,

> >

> > Would it be possible to extend manalyzer to support custom .root file names that include the run number?

> >

> > As far as I understand, the current behavior is as follows:

> > The default filename is ./root_output_files/output%05d.root , which can be customized by the following two command line arguments.

> >

> > -Doutputdirectory: Specify output root file directory

> > -Ooutputfile.root: Specify output root file filename

> >

> > If an output file name is specified with -O, -D is ignored, so the full path should be provided to -O.

> >

> > I am aiming to write files where the filename contains sufficient information to be unique (e.g., experiment, year, and run number). However, if I specify it with -O, this would require restarting manalyzer after every run; a scenario that I would like to avoid if possible.

> >

> > Please find a suggestion of how manalyzer could be extended to introduce this functionality through an additional command line argument at

> > https://bitbucket.org/krieger_j/manalyzer/commits/24f25bc8fe3f066ac1dc576349eabf04d174deec

> >

> > Above code would allow the following call syntax: ' ./manalyzer.exe -O/data/experiment1_%06d.root --OutputNumbered '

> > But note that as is, it would fail if a user specifies an incompatible format such as -Ooutput%s.root .

> >

> > So a safer, but less flexible option might be to instead have the user provide only a prefix, and then attach %05d.root in the code.

> >

> > Thank you for considering these suggestions! |

08 Dec 2025, Konstantin Olchanski, Suggestion, manalyzer root output file with custom filename including run number

|

I updated the root helper constructor to give the user more control over ROOT output file names.

You can now change it to anything you want in the module run constructor, see manalyzer_example_esoteric.cxx

Is this good enough?

struct ExampleE1: public TARunObject
{
   ExampleE1(TARunInfo* runinfo)
      : TARunObject(runinfo)
   {
#ifdef HAVE_ROOT
      // override the default ROOT output file name for this run
      if (runinfo->fRoot)
         runinfo->fRoot->fOutputFileName = "my_custom_file_name.root";
#endif
   }
};

K.O. |

17 Sep 2025, Mark Grimes, Suggestion, Get manalyzer to configure midas::odb when running offline

|

Hi,

Lots of users like the midas::odb interface for reading from the ODB in manalyzers. It currently doesn't

work offline however without a few manual lines to tell midas::odb to read from the ODB copy in the run

header. The code also gets a bit messy to work out the current filename and get midas::odb to reopen the

file currently being processed. This would be much cleaner if manalyzer set this up automatically, and then

user code could be written that is completely ignorant of whether it is running online or offline.

The change I suggest is in the `set_offline_odb` branch, commit 4ffbda6, which is simply:

diff --git a/manalyzer.cxx b/manalyzer.cxx
index 371f135..725e1d2 100644
--- a/manalyzer.cxx
+++ b/manalyzer.cxx
@@ -15,6 +15,7 @@
 #include "manalyzer.h"
 #include "midasio.h"
+#include "odbxx.h"
 //////////////////////////////////////////////////////////
@@ -2075,6 +2076,8 @@ static int ProcessMidasFiles(const std::vector<std::string>& files, const std::v
          if (!run.fRunInfo) {
             run.CreateRun(runno, filename.c_str());
             run.fRunInfo->fOdb = MakeFileDumpOdb(event->GetEventData(), event->data_size);
+            // Also set the source for midas::odb in case people prefer that interface
+            midas::odb::set_odb_source(midas::odb::STRING, std::string(event->GetEventData(), event->data_size));
             run.BeginRun();
          }

It happens at the point where the ODB record is already available and requires no effort from the user to

be able to read the ODB offline.

Thanks,

Mark. |

17 Sep 2025, Konstantin Olchanski, Suggestion, Get manalyzer to configure midas::odb when running offline

|

> Lots of users like the midas::odb interface for reading from the ODB in manalyzers.

> +#include "odbxx.h"

This is a useful improvement. Before commit of this patch, can you confirm the RunInfo destructor

deletes this ODB stuff from odbxx? manalyzer takes object life times very seriously.

There is also the issue that two different RunInfo objects would load two different ODB dumps

into odbxx. (inability to access more than 1 ODB dump is a design feature of odbxx).

This is not an actual problem in manalyzer because it only processes one run at a time

and only 1 or 0 RunInfo objects exists at any given time.

Of course with this patch extending manalyzer to process two or more runs at the same time becomes impossible.

K.O. |

18 Sep 2025, Mark Grimes, Suggestion, Get manalyzer to configure midas::odb when running offline

|

> ....Before commit of this patch, can you confirm the RunInfo destructor

> deletes this ODB stuff from odbxx? manalyzer takes object life times very seriously.

The call stores the ODB string in static members of the midas::odb class. So these will have a lifetime of the process or until they're replaced by another

call. When a midas::odb is instantiated it reads from these static members and then that data has the lifetime of that instance.

> Of course with this patch extending manalyzer to process two or more runs at the same time becomes impossible.

Yes, I hadn't realised that was an option. For that to work I guess the aforementioned static members could be made thread local storage, and

processing of each run kept to a specific thread. Although I could imagine user code making assumptions and breaking, like storing a midas::odb as a

class member or something.

Note that I missed doing the same for the end of run event, which should probably also be added.

Thanks,

Mark. |

18 Sep 2025, Stefan Ritt, Suggestion, Get manalyzer to configure midas::odb when running offline

|

> > Of course with this patch extending manalyzer to process two or more runs at the same time becomes impossible.

>

> Yes, I hadn't realised that was an option. For that to work I guess the aforementioned static members could be made thread local storage, and

> processing of each run kept to a specific thread. Although I could imagine user code making assumptions and breaking, like storing a midas::odb as a

> class member or something.

If we want to analyze several runs, I can easily add code to make this possible. In a new call to set_odb_source(), the previously allocated memory in that function can be freed. We can also make the memory handling

thread-specific, allowing several threads to analyze different runs at the same time. But I will only invest work there once it's really needed by someone.

Stefan |

22 Sep 2025, Stefan Ritt, Suggestion, Get manalyzer to configure midas::odb when running offline

|

I will work today on the odbxx API to make sure there are no memory leaks when you switch from one file to another. I talked to KO and he agreed that you then commit your proposed change of manalyzer.

Best,

Stefan |

22 Sep 2025, Konstantin Olchanski, Suggestion, Get manalyzer to configure midas::odb when running offline

|

> I will work today on the odbxx API to make sure there are no memory leaks when you switch form one file to another. I talked to KO so he agreed that yo then commit your proposed change of manalyzer

That, and add a "clear()" method that resets odbxx state to "empty". I will call odbxx.clear() everywhere where I call "delete fOdb;" (TARunInfo::dtor and other places).

K.O. |

22 Sep 2025, Stefan Ritt, Suggestion, Get manalyzer to configure midas::odb when running offline

|

> > I will work today on the odbxx API to make sure there are no memory leaks when you switch form one file to another. I talked to KO so he agreed that yo then commit your proposed change of manalyzer

>

> That, and add a "clear()" method that resets odbxx state to "empty". I will call odbxx.clear() everywhere where I call "delete fOdb;" (TARunInfo::dtor and other places).

No need for clear(), since no memory gets allocated by midas::odb::set_odb_source(). All it does is to remember the file name. When you instantiate a midas::odb object, the file gets loaded, and the midas::odb object gets initialized from the file contents. Then the buffer

gets deleted (actually it's a simple local variable). Of course this causes some overhead (each midas::odb() constructor reads the whole file), but since the OS will cache the file, it's probably not so bad.

Stefan |

26 Sep 2025, Mark Grimes, Suggestion, Get manalyzer to configure midas::odb when running offline

|

> ...I talked to KO so he agreed that yo then commit your proposed change of manalyzer

Merged and pushed.

Thanks,

Mark. |

22 Sep 2025, Konstantin Olchanski, Suggestion, Get manalyzer to configure midas::odb when running offline

|

> > ....Before commit of this patch, can you confirm the RunInfo destructor

> > deletes this ODB stuff from odbxx? manalyzer takes object life times very seriously.

>

> The call stores the ODB string in static members of the midas::odb class. So these will have a lifetime of the process or until they're replaced by another

> call. When a midas::odb is instantiated it reads from these static members and then that data has the lifetime of that instance.

this is the behaviour we need to modify.

> > Of course with this patch extending manalyzer to process two or more runs at the same time becomes impossible.

> Yes, I hadn't realised that was an option.

It is an option I would like to keep open. Not too many use cases, but imagine a "split brain" experiment

that has two MIDAS instances record data into two separate midas files. (if LIGO were to use MIDAS,

consider LIGO Hanford and LIGO Livingston).

Assuming data in these two data sets have common precision timestamps,

our task is to assemble data from two input files into single physics events. The analyzer will need

to read two input files, each file with its own run number, its own ODB dump, etc, process the midas

events (unpack, calibrate, filter, etc), look at the timestamps, assemble the data into physics events.

This trivially generalizes into reading 2, 3, or more input files.

> For that to work I guess the aforementioned static members could be made thread local storage, and

> processing of each run kept to a specific thread. Although I could imagine user code making assumptions and breaking, like storing a midas::odb as a

> class member or something.

manalyzer is already multithreaded; if you need to keep track of which thread should see which odbxx global object,

it seems like an abuse of the thread-local storage idea and intent.

> Note that I missed doing the same for the end of run event, which should probably also be added.

Ideally, the memory sanitizer will flag this for us, complain about anything that odbxx.clear() fails to free.

K.O. |

22 Sep 2025, Stefan Ritt, Suggestion, Get manalyzer to configure midas::odb when running offline

|

> > > Of course with this patch extending manalyzer to process two or more runs at the same time becomes impossible.

> > Yes, I hadn't realised that was an option.

>

> It is an option I would like to keep open. Not too many use cases, but imagine a "split brain" experiment

> that has two MIDAS instances record data into two separate midas files. (if LIGO were to use MIDAS,

> consider LIGO Hanford and LIGO Livingston).

>

> Assuming data in these two data sets have common precision timestamps,

> our task is to assemble data from two input files into single physics events. The analyzer will need

> to read two input files, each file with it's run number, it's own ODB dump, etc, process the midas

> events (unpack, calibrate, filter, etc), look at the timestamps, assemble the data into physics events.

>

> This trivially generalizes into reading 2, 3, or more input files.

>

> > For that to work I guess the aforementioned static members could be made thread local storage, and

> > processing of each run kept to a specific thread. Although I could imagine user code making assumptions and breaking, like storing a midas::odb as a

> > class member or something.

>

> manalyzer is already multithreaded, if you will need to keep track of which thread should see which odbxx global object,

> seems like abuse of the thread-local storage idea and intent.

I made the global variables storing the file name of type "thread_local", so each thread gets its own copy. This means however that each thread must then call midas::odb::set_odb_source() individually before

creating any midas::odb objects. Interestingly enough I just learned that thread_local (at least under linux) is almost zero overhead, since these variables are placed by the linker into a separate memory space which is

separate for each thread, so accessing them only means to add a memory offset.
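
To illustrate the consequence for user code, here is a small standalone sketch of how a thread_local variable behaves. The names g_source, set_source() and get_source() are hypothetical stand-ins, not the odbxx implementation: a value set in one thread is not visible in another, so each thread has to set its own source first.

```cpp
#include <string>
#include <thread>

// Hypothetical stand-in for the per-thread file name kept by odbxx
thread_local std::string g_source; // every thread gets its own, initially empty, copy

void set_source(const std::string& name) { g_source = name; }
const std::string& get_source() { return g_source; }

// Start a new thread, optionally set the source there, return what that thread sees
std::string source_seen_by_new_thread(const std::string& name, bool call_set)
{
   std::string seen;
   std::thread t([&] {
      if (call_set)
         set_source(name);   // affects only this thread's copy
      seen = get_source();
   });
   t.join();
   return seen;
}
```

A thread that skips set_source() sees an empty string even if the main thread has already set a file name, and setting it in the new thread leaves the main thread's value untouched.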

Let's see how far we get with this...

Stefan |

07 Dec 2025, Konstantin Olchanski, Suggestion, Get manalyzer to configure midas::odb when running offline

|

> #include "manalyzer.h"

> #include "midasio.h"

> +#include "odbxx.h"

This commit broke the standalone ("no MIDAS") build of manalyzer. Either odbxx has to be an independent package

(like mvodb) or it has to be conditioned on HAVE_MIDAS.

(this was flagged by failed bitbucket build of rootana)

K.O. |

08 Dec 2025, Konstantin Olchanski, Suggestion, Get manalyzer to configure midas::odb when running offline

|

> > #include "manalyzer.h"

> > #include "midasio.h"

> > +#include "odbxx.h"

>

> This commit broke the standalone ("no MIDAS") build of manalyzer. Either odbxx has to be an independant package

> (like mvodb) or it has to be conditioned on HAVE_MIDAS.

>

> (this was flagged by failed bitbucket build of rootana)

Corrected. You can only use odbxx if manalyzer is built with HAVE_MIDAS. (mvodb is an independent package and is

always available, no need to pull and build the full MIDAS).

Also notice how I now initialize odbxx from fBorOdbDump and fEorOdbDump. Also tested against multithreaded access, it

works (as Stefan promised).

K.O. |

26 Nov 2025, Lars Martin, Bug Report, Error(?) in custom page documentation

|

https://daq00.triumf.ca/MidasWiki/index.php/Custom_Page#modb

says that

If the ODB path does not point to an individual value but to a subdirectory, the

whole subdirectory is mapped to this.value as a JavaScript object such as

<div class="modb" data-odb-path="/Runinfo" onchange="func(this.value)">

<script>function func(value) { console.log(value["run number"]); }</script>

In fact, it seems to return the JSON string of said object, so you'd have to write

console.log(JSON.parse(value)["run number"]) |

27 Nov 2025, Stefan Ritt, Bug Report, Error(?) in custom page documentation

|

Indeed a bug. Fixed in commit

https://bitbucket.org/tmidas/midas/commits/5c1133df073f493d74d1fc4c03fbcfe80a3edae4

Stefan |

27 Nov 2025, Zaher Salman, Bug Report, Error(?) in custom page documentation

|

This commit breaks the sequencer pages...

> Indeed a bug. Fixed in commit

>

> https://bitbucket.org/tmidas/midas/commits/5c1133df073f493d74d1fc4c03fbcfe80a3edae4

>

> Stefan |

27 Nov 2025, Konstantin Olchanski, Bug Report, Error(?) in custom page documentation

|

the double-decode bug strikes again!

> This commit breaks the sequencer pages...

>

> > Indeed a bug. Fixed in commit

> >

> > https://bitbucket.org/tmidas/midas/commits/5c1133df073f493d74d1fc4c03fbcfe80a3edae4

> >

> > Stefan |

08 Dec 2025, Zaher Salman, Bug Report, Error(?) in custom page documentation

|

The sequencer pages were adjusted to work with this bug fix.

> This commit breaks the sequencer pages...

>

> > Indeed a bug. Fixed in commit

> >

> > https://bitbucket.org/tmidas/midas/commits/5c1133df073f493d74d1fc4c03fbcfe80a3edae4

> >

> > Stefan |

05 Dec 2025, Konstantin Olchanski, Info, address and thread sanitizers

|

I added cmake support for the thread sanitizer (address sanitizer was already

there). Use:

make cmake -j YES_THREAD_SANITIZER=1 # (or YES_ADDRESS_SANITIZER=1)

However, thread sanitizer is broken on U-24, programs refuse to start ("FATAL:

ThreadSanitizer: unexpected memory mapping") and report what looks like bogus

complaints about mutexes ("unlock of an unlocked mutex (or by a wrong thread)").

On macos, thread sanitizer does not report any errors or warnings or ...

P.S.

The Undefined Behaviour Sanitizer (UBSAN) complained about a few places where

functions could have been called with NULL pointer arguments, I added some

assert()s to make it happy.

K.O. |

05 Dec 2025, Konstantin Olchanski, Bug Fix, update of JRPC and BRPC

|

With the merge of RPC_CXX code, MIDAS RPC can now return data of arbitrarily large size and I am

proceeding to update the corresponding mjsonrpc interface.

If you use JRPC and BRPC in the tmfe framework, you need to do nothing, the updated RPC handlers

are already tested and merged, the only effect is that large data returned by HandleRpc() and

HandleBinaryRpc() will no longer be truncated.

If you use your own handlers for JRPC and BRPC, please add the RPC handlers as shown at the end

of this message. There is no need to delete/remove the old RPC handlers.

To avoid unexpected breakage, the new code is not yet enabled by default, but you can start

using it immediately by replacing the mjsonrpc call:

mjsonrpc_call("jrpc", ...

with

mjsonrpc_call("jrpc_cxx", ...

ditto for "brpc", see resources/example.html for complete code.

After migration is completed, if you have some old frontends where you cannot add the new RPC

handlers, you can still call them using the "jrpc_old" and "brpc_old" mjsonrpc calls.

I will cut-over the default "jrpc" and "brpc" calls to the new RPC_CXX in about a month or so.

If you need more time, please let me know.

K.O.

Register the new RPCs:

cm_register_function(RPC_JRPC_CXX, rpc_cxx_callback);

cm_register_function(RPC_BRPC_CXX, binary_rpc_cxx_callback);

and add the handler functions: (see tmfe.cxx for full example)

static INT rpc_cxx_callback(INT index, void *prpc_param[])
{
   const char* cmd  = CSTRING(0);
   const char* args = CSTRING(1);
   std::string* pstr = CPSTDSTRING(2);

   // returned string can be of any length, it is no longer truncated
   *pstr = "my return data";

   return RPC_SUCCESS;
}

static INT binary_rpc_cxx_callback(INT index, void *prpc_param[])
{
   const char* cmd  = CSTRING(0);
   const char* args = CSTRING(1);
   std::vector<char>* pbuf = CPSTDVECTOR(2);

   // placeholder payload, replace with your own binary data
   const char data[] = "my return data";

   pbuf->clear();
   pbuf->insert(pbuf->end(), data, data + sizeof(data) - 1);

   return RPC_SUCCESS;
}

K.O. |

24 Apr 2024, Konstantin Olchanski, Info, MIDAS RPC add support for std::string and std::vector<char>

|

I now fully understand the MIDAS RPC code, had to add some debugging printfs,

write some test code (odbedit test_rpc), catch and fix a few bugs.

Fixes for the bugs are now committed.

Small refactor of rpc_execute() should be committed soon, this removes the

"goto" in the memory allocation of output buffer. Stefan's original code used a

fixed size buffer, I later added allocation "as-needed" but did not fully

understand everything and implemented it as "if buffer too small, make it

bigger, goto start over again".

After that, I can implement support for std::string and std::vector<char>.

The way it looks right now, the on-the-wire data format is flexible enough to

make this change backward-compatible and allow MIDAS programs built with old

MIDAS to continue connecting to the new MIDAS and vice-versa.

MIDAS RPC support for std::string should let us improve security by removing

even more uses of fixed-size string buffers.

Support for std::vector<char> will allow removal of last places where

MAX_EVENT_SIZE is used and simplify memory allocation in other "give me data"

RPC calls, like RPC_JRPC and RPC_BRPC.

K.O. |

29 May 2024, Konstantin Olchanski, Info, MIDAS RPC add support for std::string and std::vector<char>

|

This is moving slowly. I now have RPC caller side support for std::string and

std::vector<char>. RPC server side is next. K.O. |

01 Dec 2025, Konstantin Olchanski, Info, MIDAS RPC add support for std::string and std::vector<char>

|

> This is moving slowly. I now have RPC caller side support for std::string and

> std::vector<char>. RPC server side is next. K.O.

The RPC_CXX code is now merged into MIDAS branch feature/rpc_call_cxx.

This code fully supports passing std::string and std::vector<char> through the MIDAS RPC in both directions.

For data passed from client to mserver, memory for string and vector data is allocated automatically as needed.

For data returned from mserver to client, memory to hold returned string and vector data is allocated automatically as

needed.

This means that RPC calls can return data of arbitrary size, the rpc caller does not need to know maximum data size.

Removing this limitation was the main motivation for this development.

I completed this code in June 2024, but could not merge it because I broke my git repository (oops). Now I am doing

the merge manually. Changes are isolated to rpc_call_encode(), rpc_call_decode() and rpc_execute(). My intent right

now is to use the new RPC code only for RPCs that pass std::string and std::vector<char>, existing RPCs will use the

old code without any changes. This seems to be the safest way to move forward.

Included is test_rpc() which tests and probes most normal use cases and some corner cases. When writing the test

code, I found a few bugs in the old MIDAS RPC code. If I remember right, I committed fixes for those bugs to main

MIDAS right then and there.

K.O. |

05 Dec 2025, Konstantin Olchanski, Info, MIDAS RPC add support for std::string and std::vector<char>

|

> > This is moving slowly. I now have RPC caller side support for std::string and

> > std::vector<char>. RPC server side is next. K.O.

> The RPC_CXX code is now merged into MIDAS branch feature/rpc_call_cxx.

> This code fully supports passing std::string and std::vector<char> through the MIDAS RPC is both directions.

The RPC_CXX code is now merged into MIDAS develop, commit 34cd969fbbfecc82c290e6c2dfc7c6d53b6e0121.

There is a new RPC parameter encoder and decoder. To avoid unexpected breakage, it is only used for newly added RPC_CXX

calls, but I expect to eventually switch all RPC calls to use the new encoder and decoder.

As examples of new code, see RPC_JRPC_CXX and RPC_BRPC_CXX, they return RPC data in an std::string and std::vector<char>

respectively, amount of returned data is unlimited, mjsonrpc parameter "max_reply_length" is no longer needed/used.

Also included is RPC_BM_RECEIVE_EVENT_CXX, it receives event data as an std::vector<char> and maximum event size is no

longer limited, ODB /Experiment/MAX_EVENT_SIZE is no longer needed/used. To avoid unexpected breakage, this new code is not

enabled yet.

K.O. |

24 Sep 2025, Thomas Lindner, Suggestion, Improve process for adding new variables that can be shown in history plots

|

Documenting a discussion I had with Konstantin a while ago.

One aspect of the MIDAS history plotting I find frustrating is the sequence for adding a new history

variable and then plotting it. At least for me, the sequence for a new variable is:

1) Add a new named variable to a MIDAS bank; compile the frontend and restart it; check that the new

variable is being displayed correctly in MIDAS equipment pages.

2) Stop and restart programs; usually I do

- stop and restart mlogger

- stop and restart mhttpd

- stop and restart mlogger

- stop and restart mhttpd

3) Start adding the new variable to the history plots.

My frustration is with step 2, where the logger and web server need to be restarted multiple times.

I think that only one of those programs actually needs to be restarted twice, but I can never remember

which one, so I restart both programs twice just to be sure.

I don't entirely understand the sequence of what happens with these restarts so that the web server

becomes aware of what new variables are in the history system; so I can't make a well-motivated

suggestion.

Ideally it would be nice if step 2 only required restarting the mlogger and mhttpd automatically

became aware of what new variables were in the system.

But even just having a single restart of mlogger, then mhttpd would be an improvement on the current

situation and easier to explain to users.

Hopefully this would not be a huge amount of work. |

25 Nov 2025, Konstantin Olchanski, Suggestion, Improve process for adding new variables that can be shown in history plots

|

> One aspect of the MIDAS history plotting I find frustrating is the sequence for adding a new history

> variable and then plotting them. ...

this has been a problem in MIDAS for a very long time, we have tried to fix/streamline/improve

it many times and obviously failed. many times.

this is what must happen when adding a new history variable:

1) new /eq/xxx/variables/vvv entry must show up in ODB

1a) add the code for the new data to the frontend

1b) start the frontend

1c) if new variable is added in the frontend init() method, it will be created in ODB, done.

1d) if new variable is added by the event readout code (i.e. via MIDAS event data bank automatically

written to ODB by RO_ODB flags), then we need to start a run.

1e) if this is not periodic event, but beam event or laser event or some other triggered event, we must

also turn on the beam, turn on the laser, etc.

1z) observe that ODB entry exists

3) mlogger must discover this new ODB entry:

3a) mlogger used to rescan ODB each time something in ODB changes, this code was removed

3b) mlogger used to rescan ODB each time a new run is started, this code was removed

3c) mlogger rescans ODB each time it is restarted, this still works.

so sequence is like this: modify, restart frontend, start a run, stop the run, observe odb entry is

created, restart mlogger, observe new mhf files are created in the history directory.

4) mhttpd must discover that a new mhf file now exists, read its header to discover history event and

variable names and make them available to the history panel editor.

it is not clear to me that this part currently works:

4a) mhttpd caches the history event list and will not see new variables unless this cache is updated.

4b) when web history panel editor is opened, it is supposed to tell mhttpd to update the cache. I am

pretty sure it worked when I wrote this code...

4c) but obviously it does not work now.

restarting mhttpd obviously makes it load the history data anew, but there is no button to make it happen

on the MIDAS web pages.
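
As a rough sketch of the behaviour described in (4a) and (4b), the cache could serve normal lookups from memory and reload only when the history panel editor asks for it. This is not the mhttpd code: HistoryEventCache, EventList and the loader callback (standing in for "read the mhf file headers") are hypothetical names for this example.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <string>
#include <utility>
#include <vector>

// history event name -> variable names (hypothetical representation)
using EventList = std::map<std::string, std::vector<std::string>>;

class HistoryEventCache {
public:
   // "loader" stands in for reading the history file headers from disk
   explicit HistoryEventCache(std::function<EventList()> loader)
      : fLoader(std::move(loader))
   {
      assert(fLoader != nullptr);
   }

   // normal lookups are served from the cache, loaded once on first use
   const EventList& get()
   {
      if (!fLoaded)
         refresh();
      return fEvents;
   }

   // to be called when the history panel editor is opened;
   // returns true if the event list changed (or was loaded for the first time)
   bool refresh()
   {
      EventList fresh = fLoader();
      const bool changed = !fLoaded || fresh != fEvents;
      fEvents = std::move(fresh);
      fLoaded = true;
      return changed;
   }

private:
   std::function<EventList()> fLoader;
   EventList fEvents;
   bool fLoaded = false;
};
```

With this shape, a new variable stays invisible until refresh() is called, which matches the symptom in (4c) if the editor never triggers the refresh.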

so it sounds like I have to sit down and at least retest this whole scheme to see that it works at least

in some way.

then try to improve it:

a) the frontend dance in (1) is unavoidable

b) mlogger must be restarted, I think Stefan and myself agree on this. In theory we could add a web page

button to call an mlogger RPC and have it reload the history. but this button already exists, it's called

"restart mlogger".

c) newly created history event should automatically show up in the history panel editor without any

additional user action

d) document the two intermediate debugging steps:

d1) check that the new variable was created in ODB

d2) check that mlogger created (and writes to) the new history file

this is how I see it and I am open to suggestions, changes, improvements, etc.

K.O. |

26 Nov 2025, Thomas Lindner, Suggestion, Improve process for adding new variables that can be shown in history plots

|

> 3) mlogger must discover this new ODB entry:

>

> 3a) mlogger used to rescan ODB each time something in ODB changes, this code was removed

> 3b) mlogger used to rescan ODB each time a new run is started, this code was removed

> 3c) mlogger rescans ODB each time it is restarted, this still works.

>

> so sequence is like this: modify, restart frontend, starts a run, stop the run, observe odb entry is

> created, restart mlogger, observe new mhf files are created in the history directory.

I assume that mlogger rescanning ODB is a somewhat intensive process; and that's why we don't want rescanning to

happen every time the ODB is changed?

Stopping/restarting mlogger is okay. But would it be better to have some alternate way to force mlogger to

rescan the ODB? Like an odbedit command like 'mlogger_rescan'; or some magic ODB key to force the rescan. I

guess neither of these options is really any easier for the developer. It just seems awkward to need to restart

mlogger for this.

It would be great if mhttpd can be fixed so that it updates the cache when history editor is opened. |

27 Nov 2025, Stefan Ritt, Suggestion, Improve process for adding new variables that can be shown in history plots

|

> I assume that mlogger rescanning ODB is somewhat intensive process; and that's why we don't want rescanning to

> happen every time the ODB is changed?

A rescan maybe takes some tens of milliseconds. Something you can do on every run, but not on every ODB change (like writing to the slow control values).

We would need somewhat more clever code which keeps a copy of the variable names for each equipment. If the names change or the array size changes,

the scan can be triggered.
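
A minimal sketch of that idea, assuming the variable names and array sizes of an equipment have already been read from ODB into a map. HistoryChangeDetector and VarSizes are hypothetical names, nothing like this exists in mlogger as far as this thread says.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>

// variable name -> number of array elements, for one equipment
using VarSizes = std::map<std::string, std::size_t>;

class HistoryChangeDetector {
public:
   // Compare the current layout of one equipment against the stored copy.
   // Returns true if a name or an array size changed (a rescan is needed).
   // The first sighting of an equipment also counts as a change.
   // Plain value updates (slow control writes) leave the layout untouched
   // and therefore return false.
   bool update(const std::string& equipment, const VarSizes& current)
   {
      assert(!equipment.empty());
      auto it = fSeen.find(equipment);
      if (it != fSeen.end() && it->second == current)
         return false;
      fSeen[equipment] = current;
      return true;
   }

private:
   std::map<std::string, VarSizes> fSeen; // equipment name -> last seen layout
};
```

The comparison only looks at names and sizes, so it stays cheap even if it runs on every ODB change notification.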

> Stopping/restarting mlogger is okay. But would it be better to have some alternate way to force mlogger to

> rescan the ODB? Like an odbedit command like 'mlogger_rescan'; or some magic ODB key to force the rescan. I

> guess neither of these options is really any easier for the developer. It just seems awkward to need to restart

> mlogger for this.

Indeed. But whatever "new" we design for the scan, users will complain "last week it was enough to restart the logger, now what do I have to do". So nothing

is perfect. But having a button in the ODB editor like "Rebuild history database" might look more elegant. One issue is that it needs special treatment, since

the logger (in the Mu3e experiment) needs >10s for the scan, so a simple rpc call will timeout.

Let's see what KO has to say on this.

Best,

Stefan |

27 Nov 2025, Konstantin Olchanski, Suggestion, Improve process for adding new variables that can be shown in history plots

|

> > I assume that mlogger rescanning ODB is somewhat intensive process; and that's why we don't want rescanning to

> > happen every time the ODB is changed?

>

> A rescan maybe takes some tens of milliseconds. Something you can do on every run, but not on every ODB change (like writing to the slow control values).

> We would need a somehow more clever code which keeps a copy of the variable names for each equipment. If the names change or the array size changes,

> the scan can be triggered.

>

That's right, scanning ODB for history changes is essentially free.

Question is what do we do if something was added or removed.

I see two ways to think about it:

1) history is independent from "runs", we see a change, we apply it (even if it takes 10 sec or 2 minutes).

2) "nothing should change during a run", we must process all changes before we start a run (starting a run takes forever),

and we must ignore changes during a run (i.e. updated frontend starts to write new data to history). (this is why

the trick to "start a new run twice" used to work).

>

> > Stopping/restarting mlogger is okay. But would it be better to have some alternate way to force mlogger to

> > rescan the ODB?

>

It is "free" to rescan ODB every 10 seconds or so. Then we can output a midas message "please restart the logger",

and set an ODB flag, then when user opens the history panel editor, it will see this flag

and tell the user "please restart the logger to see the latest changes in history". It can even list

the specific changes, if we want to be verbose about it.

>

> Indeed. But whatever "new" we design for the scan will users complain "last week it was enough to restart the logger, now what do I have to do". So nothing

> is perfect. But having a button in the ODB editor like "Rebuild history database" might look more elegant. One issue is that it needs special treatment, since

> the logger (in the Mu3e experiment) needs >10s for the scan, so a simple rpc call will timeout.

>

I like the elegance of "just restart the logger".

Having a web page button to tell logger to rescan the history is cumbersome technically,

(web page calls mjsonrpc to mhttpd, mhttpd calls a midas rpc to mlogger "please set a flag to rescan the history",

then web page polls mhttpd to poll mlogger for "are you done yet?". or instead of polling,

deal with double timeouts, in midas rpc to mlogger and mjsonrpc timeout in javascript).

And to avoid violating (2) above, we must tell user "you cannot push this button during a run!".
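
For reference, the "set a flag, then poll" handshake could look roughly like the sketch below. It only models the state machine on the logger side: RescanControl, request(), poll() and service() are hypothetical names, not existing MIDAS RPCs, and a real implementation would have to protect the state if the RPC handler and the main loop run in different threads.

```cpp
#include <cassert>
#include <functional>

enum class RescanState { Idle, Requested, Running, Done };

class RescanControl {
public:
   // RPC handler for "please rescan": only sets a flag and returns at once,
   // so the RPC cannot time out on a scan that takes >10 s.
   // Returns false if a rescan is already pending or running.
   bool request()
   {
      if (fState == RescanState::Requested || fState == RescanState::Running)
         return false;
      fState = RescanState::Requested;
      return true;
   }

   // RPC handler for "are you done yet?"
   RescanState poll() const { return fState; }

   // Called periodically from the logger main loop; runs the scan if requested.
   void service(const std::function<void()>& scan)
   {
      assert(scan != nullptr);
      if (fState != RescanState::Requested)
         return;
      fState = RescanState::Running;
      scan();
      fState = RescanState::Done;
   }

private:
   RescanState fState = RescanState::Idle;
};
```

Even in this reduced form there are four states and two RPCs to keep consistent, which is the technical overhead compared to "just restart the logger".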

I say, let's take the low road for now and see if it's good enough:

a) have the history system report any changes in midas.log - "history event added", "new history variable added" (or "renamed"),

this will let user see that their changes to the equipment frontend "took" and flag any accidental/unwanted changes.

b) have mlogger periodically scan ODB and set a "please restart me" flag. observe this flag in the history editor

and tell the user "please restart the logger to see latest changes in the history".
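
A sketch of how (a) and (b) could fit together, assuming the periodic scan yields the set of variables per history event as currently seen in ODB and mlogger keeps the set it started with. The names (EventVars, ScanResult, compare_history) and the exact message texts are placeholders. In this simple form a renamed variable shows up as one "removed" plus one "added" message.

```cpp
#include <cassert>
#include <map>
#include <set>
#include <string>
#include <vector>

// history event name -> variable names
using EventVars = std::map<std::string, std::set<std::string>>;

struct ScanResult {
   std::vector<std::string> messages; // lines intended for midas.log
   bool restart_needed = false;       // the "please restart me" flag
};

// Compare what the running logger is writing ("active") with what the
// periodic scan currently finds in ODB ("odb").
ScanResult compare_history(const EventVars& active, const EventVars& odb)
{
   ScanResult r;
   for (const auto& e : odb) {
      auto it = active.find(e.first);
      if (it == active.end()) {
         r.messages.push_back("history event added: " + e.first);
         continue;
      }
      for (const auto& v : e.second)
         if (it->second.count(v) == 0)
            r.messages.push_back("new history variable added: " + e.first + "/" + v);
      for (const auto& v : it->second)
         if (e.second.count(v) == 0)
            r.messages.push_back("history variable removed: " + e.first + "/" + v);
   }
   for (const auto& e : active)
      if (odb.count(e.first) == 0)
         r.messages.push_back("history event removed: " + e.first);
   r.restart_needed = !r.messages.empty();
   return r;
}
```

The history editor would then only need to read the flag (and optionally the message list) to tell the user to restart the logger.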

K.O. |